Node.js event loop and multi-process (5): the cluster multi-process model, how worker processes created with fork() communicate with the master process, and the thundering herd problem (multiple threads/processes waiting for the same socket event)

cluster

Node multi-process module

properties and methods

- isMaster attribute: whether the current process is the master process (since Node.js 16 this is a deprecated alias of isPrimary)

- isWorker attribute: whether the current process is a worker process

- fork([env]) method: can only be called from the master process; it spawns a new worker process and returns a worker object. Unlike child_process.fork(), there is no need to write a separate child.js: by default each worker re-runs the master's own script.

- setupMaster([settings]) method: changes the default behavior of fork() (since Node.js 16 it is a deprecated alias of setupPrimary()). Once it is called, the settings are reflected in cluster.settings; they only affect workers forked afterwards.

- settings attribute: the configuration used by fork(). Its fields include exec (path of the worker file), args (string arguments passed to the worker), and execArgv (list of arguments passed to the Node.js executable).
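A minimal sketch of setupMaster() and cluster.settings, assuming a hypothetical worker file ./worker.js and made-up argument values. Nothing is forked here; the point is what ends up in cluster.settings. The 'setup' listener is registered before the call so it cannot miss the event.

```javascript
const cluster = require('cluster');

if (cluster.isMaster) {
  // Register first: 'setup' fires after setupMaster() runs, carrying the settings.
  cluster.on('setup', (settings) => {
    console.log('setup done, exec =', settings.exec);
  });

  // Node.js 16+ calls this cluster.setupPrimary(); setupMaster() remains as a deprecated alias.
  cluster.setupMaster({
    exec: './worker.js',                    // hypothetical worker file path
    args: ['--port', '3000'],               // string args; they follow the script path in each worker's process.argv
    execArgv: ['--max-old-space-size=512'], // flags for the Node.js executable (example value)
  });

  // cluster.settings now holds the merged configuration that later cluster.fork() calls will use.
  console.log(cluster.settings.exec, cluster.settings.args);
}
```

Any cluster.fork() issued after this point would start ./worker.js instead of re-running the current script.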

event

- fork event: emitted when a new worker is forked; can be used to log worker activity

- listening event: emitted after a worker calls listen(). The handler receives two arguments: worker (the worker object) and address (an object with the address, port, and addressType properties)

- message event: this one is different, because it can be listened to on each individual worker (worker.on('message')). cluster itself also emits 'message' in the master for messages from any worker, and there the handler receives (worker, message, handle)

- online event: after a worker is forked, the worker actively sends an online message to the master; the master emits 'online' when it receives that message. The callback receives the worker object

- disconnect event: emitted after the IPC channel between the master and a worker is disconnected

- exit event: emitted when a worker exits. The callback receives worker (the worker object), code (the exit code), and signal (the signal name, if the process was killed by a signal)

- setup event: emitted every time cluster.setupMaster() is called; the callback receives the settings object
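The events above can be wired up in one place. This is a minimal sketch: attachClusterLogging is a hypothetical helper and its log format is made up. It accepts anything that emits cluster's events, so in a real master you would pass require('cluster'); the handler signatures follow the Node.js cluster documentation.

```javascript
// Hypothetical helper: attach one logger to every cluster event listed above.
function attachClusterLogging(clusterLike, log = console.log) {
  clusterLike.on('fork', (worker) => log(`fork: worker ${worker.id}`));
  clusterLike.on('online', (worker) => log(`online: worker ${worker.id}`));
  clusterLike.on('listening', (worker, address) =>
    log(`listening: worker ${worker.id} on ${address.address}:${address.port}`));
  // On cluster itself, 'message' passes the sending worker first, then the message.
  clusterLike.on('message', (worker, message) =>
    log(`message: worker ${worker.id} sent ${JSON.stringify(message)}`));
  clusterLike.on('disconnect', (worker) => log(`disconnect: worker ${worker.id}`));
  clusterLike.on('exit', (worker, code, signal) =>
    log(`exit: worker ${worker.id}, code ${code}, signal ${signal}`));
  clusterLike.on('setup', (settings) => log(`setup: exec ${settings.exec}`));
}

// Usage in a master process (not executed here):
// const cluster = require('cluster');
// attachClusterLogging(cluster);
// cluster.fork();
```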

Documentation:

https://nodejs.org/api/child_process.html (read the documentation for more details)

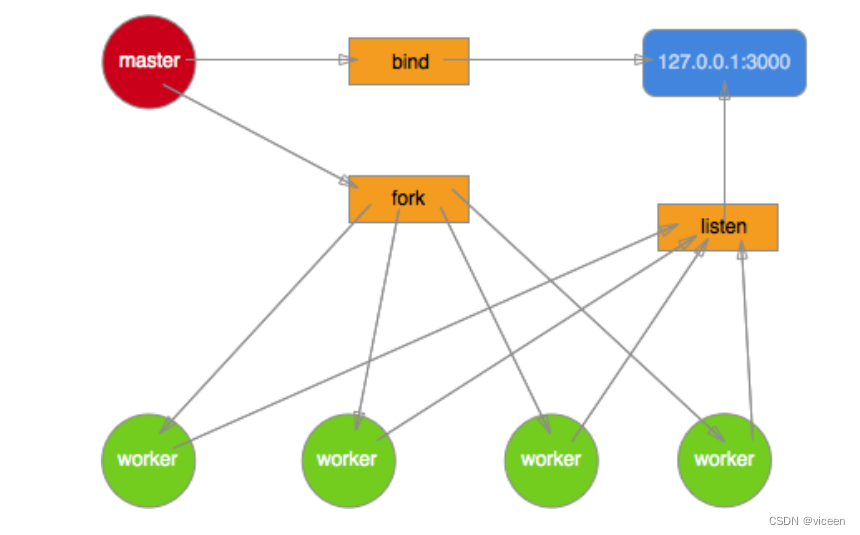

cluster multi-process model

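Each worker process is created with child_process.fork() and communicates with the master process over IPC (Inter-Process Communication).

So why not just call child_process.fork() directly and implement it ourselves? Why do we need cluster?

Doing it that way only gives you multiple processes. Running multiple processes also involves communication between parent and child processes, child process management, load balancing, and more, and cluster implements these features for you.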
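As an illustration of one of those features, here is a minimal sketch of round-robin dispatch, the kind of load balancing you would otherwise have to build yourself on top of child_process.fork(). createRoundRobin is a hypothetical helper, not a cluster API; cluster's own round-robin scheduler runs inside the master and distributes incoming connections rather than arbitrary messages.

```javascript
// Hypothetical helper: returns a function that yields the workers in turn.
function createRoundRobin(workers) {
  if (workers.length === 0) {
    throw new Error('need at least one worker');
  }
  let next = 0;
  return function pick() {
    const worker = workers[next];
    next = (next + 1) % workers.length; // wrap around to the first worker
    return worker;
  };
}

// Usage with real children (not executed here):
// const { fork } = require('child_process');
// const children = [fork('./child.js'), fork('./child.js')];
// const pick = createRoundRobin(children);
// pick().send({ job: 1 }); // goes to children[0]
// pick().send({ job: 2 }); // goes to children[1]
```

On top of this you would still need restart-on-exit, message routing and shared listening sockets, which is why cluster exists.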

example

File 1

cluster\child.js

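```javascript
// Only runs if selected with cluster.setupMaster({ exec: './child.js' });
// cluster.fork() on its own re-runs main.js instead.
console.log('I am a child process')
```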

File 2

cluster\main.js

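```javascript
var cluster = require('cluster');
var cpuNums = require('os').cpus().length;
var http = require('http');

if (cluster.isMaster) {
  for (var i = 0; i < cpuNums; i++) {
    // cluster.fork([env]) takes an optional env object, not a script path.
    // Each worker re-runs this file (main.js) and takes the else branch below.
    cluster.fork();
  }

  // When a new worker is forked, the cluster module emits a 'fork' event.
  // It can be used to log worker activity or to create a custom timeout.
  // cluster.on('fork', (worker) => {
  //   console.log('master forked a worker, pid:', worker.process.pid);
  // });

  // After a worker calls listen(), the server in the worker emits 'listening',
  // and cluster in the master emits 'listening' as well.
  // The handler is called with two arguments: worker is the worker object, and address
  // has the connection properties address, port, and addressType.
  // This is very useful when a worker listens on several addresses.
  // cluster.on('listening', (worker, address) => {
  //   console.log('master forked a worker to serve HTTP, pid:', worker.process.pid);
  // });

  // Emitted when the cluster master receives a message from any worker.
  // The first argument is the sending worker, the second is the message.
  // cluster.on('message', (worker, data) => {
  //   console.log('received data', data);
  // });

  // Alternatively, listen on each worker object individually:
  // Object.keys(cluster.workers).forEach((id) => {
  //   cluster.workers[id].on('message', function (data) {
  //     console.log(data);
  //   });
  // });

  cluster.on('disconnect', (worker) => {
    console.log('a worker disconnected', worker.process.pid);
    cluster.fork(); // fork a replacement so the pool always stays at cpuNums workers
  });
} else {
  // http.createServer((req, res) => {
  //   try {
  //     res.end(aasdasd); // throws (aasdasd is undefined); left uncaught, the whole process would crash and stop serving
  //   } catch (err) {
  //     process.disconnect(); // disconnect from the master; its 'disconnect' handler forks a new worker, which is what the "daemon" restart really is
  //   }
  // }).listen(8001, () => {
  //   console.log('server is listening: ' + 8001);
  // });

  // console.log('xxx')

  process.send(process.pid); // send this worker's pid to the master over IPC

  // process.disconnect();
}
```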

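Open a terminal in the cluster directory and run the command

node .\main.js

Messages logged by main.js (from the disconnect handler, or from the commented-out handlers once you uncomment them) appear in the console.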

The original multi-process model

The original Node.js multi-process model worked like this: the master process creates a socket and binds it to an address and port, but does not call listen() or accept connections itself. Instead, it passes the socket's file descriptor (fd) to the forked worker processes; each worker, after receiving the fd, calls listen() and accepts new connections. In the end, however, each incoming connection can be accepted by only one worker, and which one gets it cannot be predicted or controlled by the developer. So whenever a new connection arrives, the workers compete for it, and the winner gets the connection.

This multi-process model has two obvious problems:

- Multiple processes compete to accept each connection, which causes the thundering herd problem and lowers efficiency.

- Because nobody controls which process handles a new connection, the load across worker processes can become very unbalanced.

The first problem is the well-known "thundering herd" phenomenon.

Simply put, a thundering herd occurs when multiple threads/processes wait for the same socket event and are all woken at once when it occurs. The kernel wakes and reschedules every one of them to respond to the event, yet only one can handle it successfully; the others fail and go back to sleep (or take some other action). That wasted work is the thundering herd, and it is clearly inefficient.

It typically happens on servers: the parent process binds a port and listens on the socket, then forks several child processes that each loop over the socket (for example, calling accept). Whenever a user opens a TCP connection, several children are woken at the same time; one of them accepts the new connection, and the rest fail and go back to sleep.
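To make the cost concrete, here is a toy, single-process model of the behavior just described. It does not reproduce what the kernel does internally; simulateHerd and its pickWinner parameter are hypothetical names. For each connection every worker counts as woken but only the winner accepts, so the wasted wakeups come to connections × (workers − 1); a biased winner also shows how the load can skew.

```javascript
// Toy model only: counts wakeups, it does not touch real sockets.
// pickWinner decides which worker wins each race (random by default).
function simulateHerd(workerCount, connections, pickWinner = (n) => Math.floor(Math.random() * n)) {
  const accepted = new Array(workerCount).fill(0);
  let wakeups = 0;
  for (let c = 0; c < connections; c++) {
    wakeups += workerCount;              // every sleeping worker is woken for this one event
    accepted[pickWinner(workerCount)]++; // only the winner gets the connection
  }
  return { wakeups, wasted: wakeups - connections, accepted };
}

console.log(simulateHerd(4, 10));
// wakeups is always 40 and wasted 30 for 4 workers and 10 connections;
// how the 10 accepts split across workers depends on the random winner.
```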

http.Server inherits from net.Server, and HTTP clients and servers communicate over sockets (net.Socket). That is why the thundering herd can be reproduced at the TCP level, as in the following two files.
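The inheritance can be checked directly with public APIs. This small sketch creates, but never starts, an HTTP server; the request handler, which would report whether req.socket is a net.Socket, is only there to show where the socket appears.

```javascript
const http = require('http');
const net = require('net');

const server = http.createServer((req, res) => {
  // req.socket is the underlying net.Socket for this connection
  res.end(String(req.socket instanceof net.Socket));
});

console.log(server instanceof net.Server); // true: http.Server extends net.Server
```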

cluster\master.js

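```javascript
const net = require('net');
const path = require('path');
const fork = require('child_process').fork;

// net._createServerHandle is an undocumented internal API: it creates a raw TCP handle
// bound to 0.0.0.0:3000 without calling listen() on it.
var handle = net._createServerHandle('0.0.0.0', 3000);
if (typeof handle === 'number') {
  // On failure the internal API returns a negative errno instead of a handle
  throw new Error('failed to bind 0.0.0.0:3000, errno ' + handle);
}

for (var i = 0; i < 4; i++) {
  console.log('forking worker', i);
  // Send the same bound handle to every worker over IPC
  fork(path.join(__dirname, 'worker.js')).send({}, handle);
}
```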

cluster\worker.js

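```javascript
const net = require('net');

process.on('message', function (m, handle) {
  // The master passed us the bound (not yet listening) handle;
  // this worker listens on it, accepts connections and responds itself.
  start(handle);
});

var buf = 'hello nodejs';
var res = ['HTTP/1.1 200 OK', 'content-length:' + buf.length].join('\r\n') + '\r\n\r\n' + buf;

var data = {};

function start(server) {
  // Response logic: watch the thundering-herd effect and the per-worker counts
  var err = server.listen(511); // raw handle: pass a backlog explicitly (511 is net.Server's default)
  if (err) throw new Error('listen failed, errno ' + err);

  server.onconnection = function (err, handle) {
    if (err) return; // accept failed
    var pid = process.pid;
    if (!data[pid]) {
      data[pid] = 0;
    }
    data[pid]++; // +1 for every connection this worker serves
    console.log('got a connection on worker, pid = %d', process.pid, data[pid]);

    // Wrap the accepted client handle the same way net.Server does internally
    var socket = new net.Socket({
      handle: handle,
      readable: true,
      writable: true
    });
    socket.end(res);
  };
}
```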

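Open a terminal in the cluster directory and run the command

node .\master.js

Then send some requests to http://localhost:3000 (for example from a browser or with curl). Each connection is logged by whichever worker.js process won it, together with that worker's pid and running count, so you can see which worker accepted each connection and how unevenly they are spread.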
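For contrast, the current cluster module avoids this shared-accept model by default on every platform except Windows: with the round-robin policy (cluster.SCHED_RR) the master accepts each connection and hands it to a worker in turn, while cluster.SCHED_NONE leaves accepting to the workers and the OS, which is essentially the original model above. A minimal sketch of choosing the policy explicitly:

```javascript
const cluster = require('cluster');

if (cluster.isMaster) {
  // SCHED_RR: the master accepts every connection and distributes them round-robin.
  // SCHED_NONE: workers accept on the shared socket themselves and the OS picks the winner.
  // The policy is frozen by the first fork() or setupMaster() call, so set it before either.
  // It can also be set with the environment variable NODE_CLUSTER_SCHED_POLICY ('rr' or 'none').
  cluster.schedulingPolicy = cluster.SCHED_RR;
  console.log('round-robin enabled:', cluster.schedulingPolicy === cluster.SCHED_RR);
  // ...then cluster.fork() workers as in main.js
}
```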