1. UDP

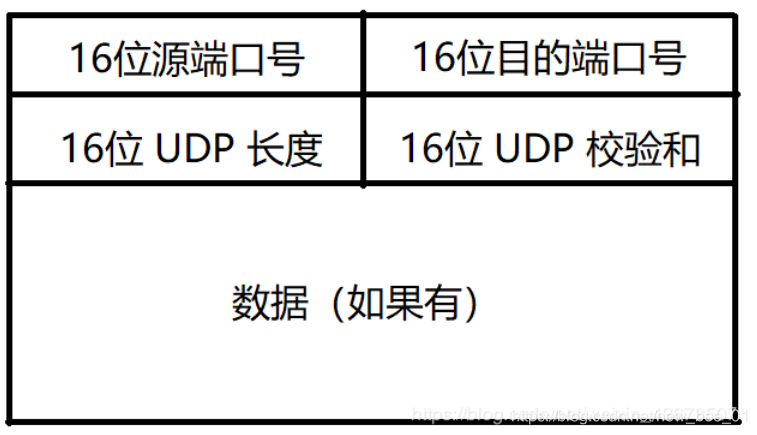

1.1 UDP protocol segment format

- 16-bit UDP length: the length of the entire datagram (UDP header + UDP data). Because the field is 16 bits wide, a UDP datagram can be at most 2^16 - 1 = 65535 bytes (about 64 KB), header included

- 16-bit checksum: if the checksum does not verify, the datagram is discarded directly
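To make the header fields concrete, here is a minimal Python sketch that packs the 8-byte UDP header and computes the 16-bit one's-complement checksum over the IPv4 pseudo-header, following RFC 768. The helper names `build_udp` and `verify_udp` are hypothetical, and IPv4 addresses are passed as 4 raw bytes; it illustrates the arithmetic, not how a kernel implements it.

```python
import struct

UDP_HEADER = struct.Struct("!HHHH")  # src port, dst port, length, checksum (network byte order)

def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement sum of 16-bit words, then complemented."""
    if len(data) % 2:
        data += b"\x00"                              # pad odd-length data with a zero byte
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
        total = (total & 0xFFFF) + (total >> 16)     # fold the carry back in
    return ~total & 0xFFFF

def pseudo_header(src_ip: bytes, dst_ip: bytes, udp_length: int) -> bytes:
    # IPv4 pseudo-header: source IP, destination IP, zero byte, protocol 17 (UDP), UDP length
    return src_ip + dst_ip + struct.pack("!BBH", 0, 17, udp_length)

def build_udp(src_ip: bytes, dst_ip: bytes, src_port: int, dst_port: int, payload: bytes) -> bytes:
    length = UDP_HEADER.size + len(payload)          # header (8 bytes) + data
    if length > 0xFFFF:
        raise ValueError("a UDP datagram cannot exceed 65535 bytes")
    header = UDP_HEADER.pack(src_port, dst_port, length, 0)   # checksum field zero while summing
    csum = internet_checksum(pseudo_header(src_ip, dst_ip, length) + header + payload)
    if csum == 0:
        csum = 0xFFFF        # over IPv4, 0 means "no checksum", so all ones is sent instead
    return UDP_HEADER.pack(src_port, dst_port, length, csum) + payload

def verify_udp(src_ip: bytes, dst_ip: bytes, datagram: bytes) -> bool:
    """A correct datagram (checksum included) sums to all ones, so the complement is 0."""
    return internet_checksum(pseudo_header(src_ip, dst_ip, len(datagram)) + datagram) == 0
```

A receiver that gets `False` from a check like `verify_udp` simply drops the datagram; nothing is reported back to the sender.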

1.2 Features of UDP

1.2.1 Connectionless and unreliable

- Connectionless: knowing the peer's IP address and port number is enough; data is sent directly, without establishing a connection.

- Unreliable: there is no acknowledgment mechanism and no retransmission mechanism. If a segment cannot reach the other party because of a network failure, the UDP layer does not return any error information to the application layer.

- Unordered: UDP guarantees neither reliable delivery nor in-order arrival, so datagrams may arrive out of order; if order matters, the application layer has to manage the packet sequence itself.

1.2.2 Datagram Oriented

- Whatever message the application layer hands to UDP is sent as is, neither split nor merged: one sendto produces one datagram, and the receiver gets it back whole from one recvfrom. The maximum length is about 64 KB

- If the data to be transmitted exceeds 64 KB, the application layer must split it into pieces, send them separately, and reassemble them at the receiving end
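The manual splitting described above could look like the following sketch. It assumes a hypothetical 8-byte application header (message id, fragment index, fragment count) in front of each piece, and the IPv4 limit of 65535 - 20 - 8 = 65507 payload bytes per datagram. Because UDP may reorder datagrams, the receiver keys pieces by index; recovering lost pieces (timeouts, retransmission) is deliberately left out.

```python
import struct

FRAG_HEADER = struct.Struct("!IHH")      # hypothetical: message id, fragment index, fragment count
MAX_UDP_PAYLOAD = 65535 - 20 - 8         # IPv4 16-bit total length minus IP and UDP headers
CHUNK = MAX_UDP_PAYLOAD - FRAG_HEADER.size

def split_message(msg_id, data):
    """Cut data into datagram-sized pieces, each prefixed with the fragment header."""
    chunks = [data[i:i + CHUNK] for i in range(0, len(data), CHUNK)] or [b""]
    if len(chunks) > 0xFFFF:
        raise ValueError("message too large for a 16-bit fragment count")
    return [FRAG_HEADER.pack(msg_id, idx, len(chunks)) + chunk
            for idx, chunk in enumerate(chunks)]

def reassemble(datagrams):
    """Collect fragments arriving in any order; return {msg_id: data} for complete messages."""
    parts = {}
    done = {}
    for d in datagrams:
        msg_id, idx, count = FRAG_HEADER.unpack_from(d)
        parts.setdefault(msg_id, {})[idx] = d[FRAG_HEADER.size:]   # a duplicate just overwrites
        if len(parts[msg_id]) == count:
            done[msg_id] = b"".join(parts[msg_id][i] for i in range(count))
    return done
```

Each element returned by `split_message` would be passed to its own `sendto` call.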

1.3 UDP buffer

- UDP has no real send buffer. Data passed to sendto is handed directly to the kernel, which passes it on to the network-layer protocol for transmission;

- UDP does have a receive buffer, but it cannot guarantee that datagrams are received in the order they were sent; if the buffer is full, newly arriving UDP datagrams are discarded;

- A UDP socket can both read and write. This is called full-duplex
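Message boundaries and full-duplex use can be observed on the loopback interface with Python's standard `socket` module. This is a sketch: `udp_roundtrip` is a hypothetical helper, and on loopback datagrams are very unlikely to be lost or reordered, which is not true of a real network.

```python
import socket

def udp_roundtrip(messages):
    """Send each message as its own datagram and return what each recvfrom sees, plus a reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as a, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as b:
        a.bind(("127.0.0.1", 0))          # port 0: let the OS pick a free port
        b.bind(("127.0.0.1", 0))
        a.settimeout(1.0)
        b.settimeout(1.0)
        for m in messages:
            a.sendto(m, b.getsockname())  # no connection: just name the peer's address
        # one recvfrom returns exactly one datagram; they are never merged
        received = [b.recvfrom(65535)[0] for _ in messages]
        # full-duplex: the same socket b can also send back to a
        b.sendto(b"ack", a.getsockname())
        reply, _ = a.recvfrom(65535)
    return received, reply
```

Calling `udp_roundtrip([b"hello", b"world"])` returns the two messages as separate items, never as `b"helloworld"`.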

1.4 Well-known protocols based on UDP

- DHCP: Dynamic Host Configuration Protocol

- DNS: Domain Name System

2. TCP

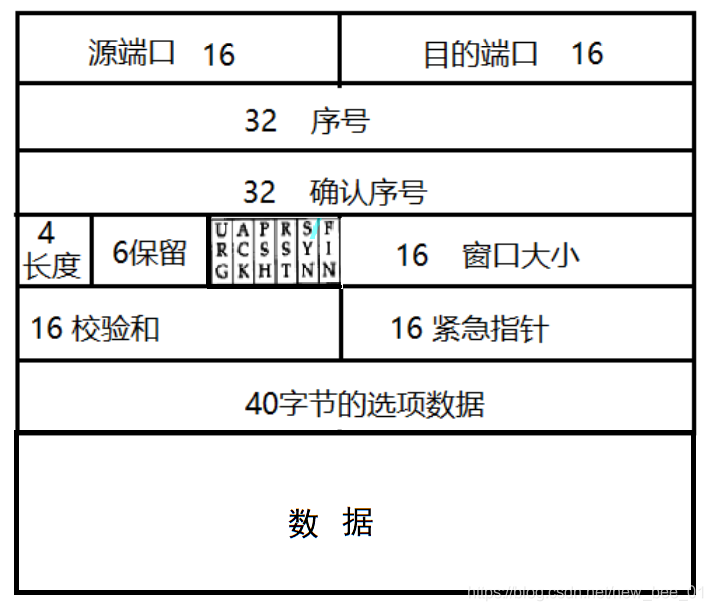

2.1 TCP protocol segment format

- Source/destination port numbers: indicate which process the data comes from and which process it goes to;

- 32-bit sequence number / 32-bit acknowledgment number: explained in detail later;

- 4-bit TCP header length: the length of the TCP header in 32-bit words (units of 4 bytes), so the maximum TCP header length is 15 * 4 = 60 bytes

- 6 flag bits:

- URG: whether the urgent pointer is valid

- ACK: whether the acknowledgment number is valid

- PSH: asks the receiving application to read the data from the TCP buffer immediately

- RST: the peer demands that the connection be reset and re-established; a segment carrying the RST flag is called a reset segment

- SYN: a request to establish a connection; a segment carrying the SYN flag is called a synchronization segment

- FIN: notifies the peer that this end is about to close; a segment carrying the FIN flag is called a finish segment

- 16-bit window size: the number of bytes, counting from the acknowledgment number, that the sender of this segment is still able to receive (the free space in its receive buffer). Because buffer space is limited, this keeps the other party from sending so fast that data is lost. The header options can also carry a window scale factor M, in which case the actual window size is the window field shifted left by M bits;

- 16-bit checksum: filled in by the sender and verified by the receiver. It is a 16-bit one's-complement sum, not a CRC. If verification fails at the receiving end, the data is considered corrupted. The checksum covers not only the TCP header but also the TCP data (plus a pseudo-header taken from the IP header);

- 16-bit urgent pointer: marks which part of the data is urgent data (as an offset from the sequence number);

- Options (up to 40 bytes): ignored for now;
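A parser for the fixed 20-byte part of the header shows how these fields are laid out on the wire. This is an illustrative Python sketch using `struct` (the function name `parse_tcp_header` is hypothetical); it reads the six classic flags from the low bits of byte 13 and ignores the reserved and ECN bits.

```python
import struct

TCP_FIXED = struct.Struct("!HHIIBBHHH")                 # the 20-byte fixed header
FLAG_NAMES = ["FIN", "SYN", "RST", "PSH", "ACK", "URG"]  # bit 0 .. bit 5 of byte 13

def parse_tcp_header(segment: bytes) -> dict:
    (src, dst, seq, ack, off_byte, flag_byte,
     window, checksum, urg_ptr) = TCP_FIXED.unpack_from(segment)
    header_len = (off_byte >> 4) * 4        # 4-bit header length counts 32-bit words
    if header_len < TCP_FIXED.size or len(segment) < header_len:
        raise ValueError("malformed TCP header length")
    flags = {name for bit, name in enumerate(FLAG_NAMES) if flag_byte & (1 << bit)}
    return {
        "src_port": src, "dst_port": dst, "seq": seq, "ack": ack,
        "header_len": header_len, "flags": flags, "window": window,
        "checksum": checksum, "urgent_ptr": urg_ptr,
        "options": segment[TCP_FIXED.size:header_len],   # up to 40 bytes
        "payload": segment[header_len:],
    }
```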

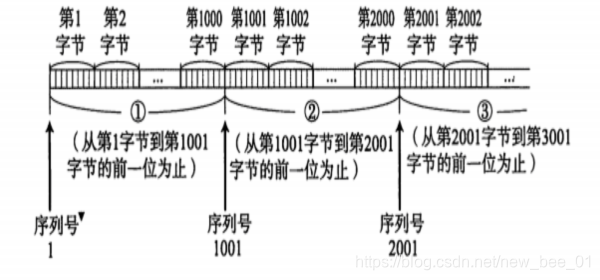

2.2 TCP data transmission format

- TCP sends data as a byte stream and does not care what kind of data it carries. Data correctness, retransmission control, duplicate detection and similar functions are all implemented with sequence numbers. The initial sequence number is not 0; each side chooses it randomly when the connection is established

- The data length is not written in the TCP header. In actual communication, the payload length of a TCP segment is computed as: total length from the IP header - IP header length - TCP header length

- TCP numbers every byte of data; this number is the sequence number. Each ACK carries an acknowledgment number, which tells the sender which data has been received and where to start sending next time
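The length formula and the sequence/acknowledgment relationship can be written as two small functions. This is a sketch with hypothetical helper names; it also encodes one fact not spelled out above: SYN and FIN each consume one sequence number, and sequence arithmetic wraps around at 2^32.

```python
def tcp_payload_len(ip_total_len: int, ip_header_len: int, tcp_header_len: int) -> int:
    """TCP has no length field: derive the payload size from the IP header."""
    return ip_total_len - ip_header_len - tcp_header_len

def next_ack(seq: int, payload_len: int, syn: bool = False, fin: bool = False) -> int:
    """Acknowledgment number the receiver sends back: the next byte it expects.
    SYN and FIN each occupy one sequence number; the space wraps at 2**32."""
    return (seq + payload_len + int(syn) + int(fin)) % 2**32
```

For a full Ethernet frame, `tcp_payload_len(1500, 20, 20)` gives 1460 bytes, and a segment with sequence number 1 carrying 1000 bytes is answered with ACK 1001.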

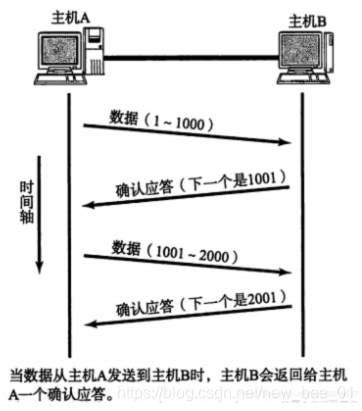

2.3 Acknowledgment response mechanism

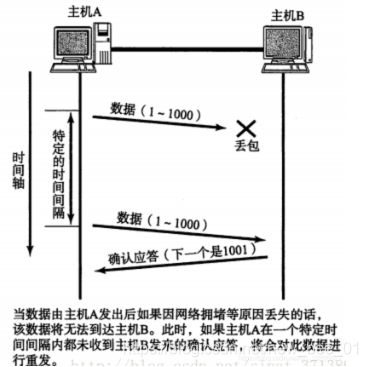

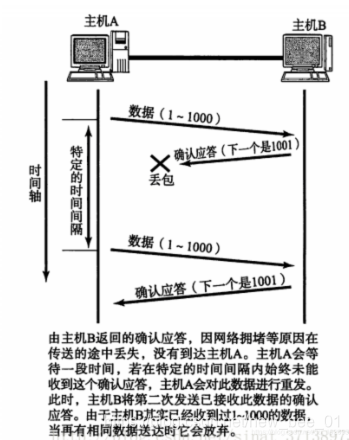

2.4 Timeout retransmission mechanism

So how long should the timeout be?

- Ideally, we would find the minimum time within which "the acknowledgment is guaranteed to come back".

- However, this time varies with the network environment.

- If the timeout is set too long, lost data waits longer than necessary before it is retransmitted, which hurts overall efficiency;

- If the timeout is set too short, duplicate packets may be sent frequently;

To communicate efficiently in any environment, TCP computes the retransmission timeout dynamically.

- In the classic BSD-derived implementation, the timeout is managed in units of 500 ms, and each retransmission timeout is an integer multiple of 500 ms (modern stacks such as Linux use finer-grained timers, but the doubling pattern below is the same).

- If there is still no response after a retransmission, TCP waits 2 * 500 ms before retransmitting again.

- If there is still no response, it waits 4 * 500 ms, and so on: the timeout grows exponentially.

- After a certain number of retransmissions, TCP concludes that the network or the peer host has failed and forcibly closes the connection
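The doubling rule can be sketched as a schedule of waits. The 500 ms base follows the text; the retry count of 6 and the 120 s cap are assumed values for illustration, not any particular operating system's settings.

```python
def retransmission_schedule(base_ms=500, max_retries=6, cap_ms=120_000):
    """Wait (in ms) before each successive retransmission: base, 2*base, 4*base, ...
    capped at cap_ms. After max_retries attempts the connection would be abandoned.
    max_retries and cap_ms are assumptions, not values taken from a real stack."""
    return [min(base_ms * 2**i, cap_ms) for i in range(max_retries)]
```

With the defaults this gives 500, 1000, 2000, 4000, 8000 and 16000 ms; summing the list gives how long the sender keeps trying before giving up.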

2.5 Connection management mechanism

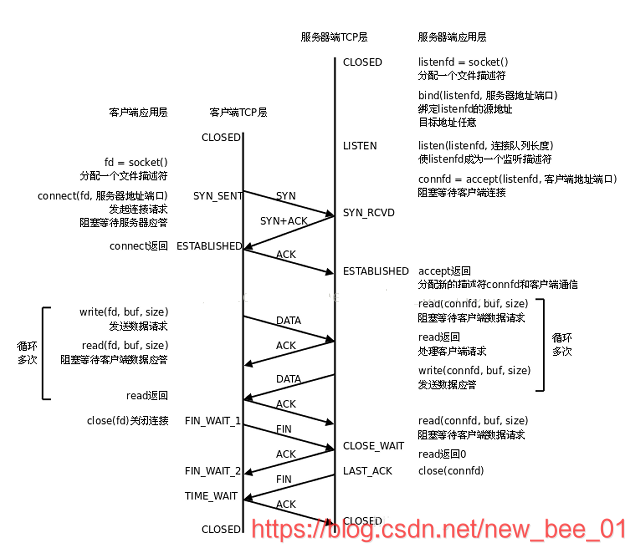

Server state transition:

- [CLOSED -> LISTEN] The server enters the LISTEN state after calling listen, waiting for the client to connect;

- [LISTEN -> SYN_RCVD] Once a connection request (synchronization segment) arrives, the connection is placed in the kernel's wait queue and a SYN+ACK segment is sent back to the client.

- [SYN_RCVD -> ESTABLISHED] Once the server receives the confirmation message from the client, it enters the ESTABLISHED state and can read and write data.

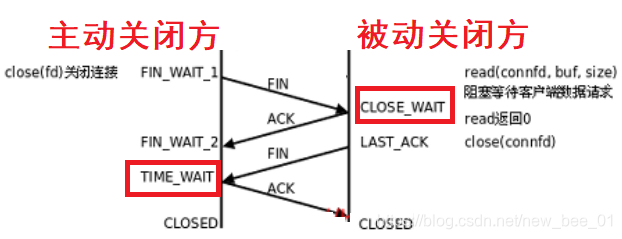

- [ESTABLISHED -> CLOSE_WAIT] When the client actively closes the connection (calls close), the server receives the finish segment, returns an acknowledgment segment and enters CLOSE_WAIT;

- [CLOSE_WAIT -> LAST_ACK] CLOSE_WAIT means the server is preparing to close the connection (it must first finish processing any remaining data). When the server actually calls close, it sends a FIN to the client and enters LAST_ACK, waiting for the last ACK to arrive (the client's acknowledgment of that FIN)

- [LAST_ACK -> CLOSED] The server has received the ACK for FIN and completely closes the connection

Client state transition:

- [CLOSED -> SYN_SENT] The client calls connect and sends a synchronization segment;

- [SYN_SENT -> ESTABLISHED] If the connect call is successful, it will enter the ESTABLISHED state and start reading and writing data;

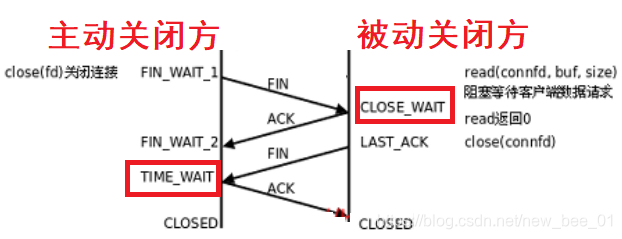

- [ESTABLISHED -> FIN_WAIT_1] When the client actively calls close, it sends an end segment to the server and enters FIN_WAIT_1 at the same time;

- [FIN_WAIT_1 -> FIN_WAIT_2] The client receives the server's confirmation of the end segment, then enters FIN_WAIT_2, and begins to wait for the server's end segment;

- [FIN_WAIT_2 -> TIME_WAIT] The client receives the server's finish segment, enters TIME_WAIT, and sends the final ACK;

- [TIME_WAIT -> CLOSED] The client waits for 2MSL (MSL: Maximum Segment Lifetime) before entering the CLOSED state
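The transitions above can be collected into a lookup table and replayed. This Python sketch covers only the normal open and close paths listed in this section; simultaneous open/close, RST handling and timeouts other than 2MSL are deliberately left out, and the event names are made up for readability.

```python
# (state, event) -> next state, following the server and client transitions above
TRANSITIONS = {
    # server side
    ("CLOSED", "listen"): "LISTEN",
    ("LISTEN", "recv SYN"): "SYN_RCVD",            # replies with SYN+ACK
    ("SYN_RCVD", "recv ACK"): "ESTABLISHED",
    ("ESTABLISHED", "recv FIN"): "CLOSE_WAIT",     # replies with ACK
    ("CLOSE_WAIT", "close"): "LAST_ACK",           # sends FIN
    ("LAST_ACK", "recv ACK"): "CLOSED",
    # client side
    ("CLOSED", "connect"): "SYN_SENT",             # sends SYN
    ("SYN_SENT", "recv SYN+ACK"): "ESTABLISHED",   # replies with ACK
    ("ESTABLISHED", "close"): "FIN_WAIT_1",        # sends FIN
    ("FIN_WAIT_1", "recv ACK"): "FIN_WAIT_2",
    ("FIN_WAIT_2", "recv FIN"): "TIME_WAIT",       # replies with the final ACK
    ("TIME_WAIT", "2MSL timeout"): "CLOSED",
}

def run(events, state="CLOSED"):
    """Apply events in order and return the list of states visited."""
    path = [state]
    for ev in events:
        if (state, ev) not in TRANSITIONS:
            raise ValueError(f"no transition from {state} on {ev!r}")
        state = TRANSITIONS[(state, ev)]
        path.append(state)
    return path
```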

Why a three-way handshake?

- Suppose a connection request sent by the client is delayed in the network and reaches the server only after that client's connection has already been released. This request has expired. With only a two-way handshake, the server would treat it as a new connection request and consider the connection established; but the client never asked for it and will not send any data, so the server would keep wasting resources on this connection. With the third step, the client simply does not acknowledge the stale request, so the server never enters ESTABLISHED.
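The handshake and the stale-request argument can be sketched in a few lines. Each side picks its own random initial sequence number (ISN), and the SYN+ACK acknowledges the client's ISN + 1. The client accepts only a SYN+ACK that matches the SYN it actually sent and otherwise answers with RST, which is standard TCP behaviour for an unexpected SYN+ACK. The helper names are hypothetical.

```python
import random

MOD = 2**32   # sequence numbers are 32 bits and wrap around

def three_way_handshake(rng=None):
    """Return the three handshake segments as (flags, seq, ack) tuples."""
    if rng is None:
        rng = random.Random()
    client_isn = rng.randrange(MOD)            # each side chooses its own random ISN
    server_isn = rng.randrange(MOD)
    return [
        ("SYN", client_isn, None),
        ("SYN+ACK", server_isn, (client_isn + 1) % MOD),    # a SYN consumes one number
        ("ACK", (client_isn + 1) % MOD, (server_isn + 1) % MOD),
    ]

def client_reply(current_isn, syn_ack_ack):
    """The client only confirms a SYN+ACK that answers its *current* SYN. A SYN+ACK
    triggered by an old, delayed SYN fails this check, so the client sends RST and
    the server never reaches ESTABLISHED."""
    return "ACK" if syn_ack_ack == (current_isn + 1) % MOD else "RST"
```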

Why is the connection closed with four waves?

Each direction of the connection is closed separately: each side sends its own FIN and the other side acknowledges it, so both parties have to agree to close the connection.

The side that closes actively (the client here) then waits 2MSL in TIME_WAIT, for two reasons:

(1) To make sure the last ACK segment sent by the client reaches the server. This ACK may be lost; if so, the server times out and retransmits its FIN, and the client answers with another ACK. That round trip takes at most two maximum segment lifetimes.

If the client released the connection immediately after sending the acknowledgment, it could not answer a retransmitted FIN, so the server would never receive the acknowledgment and could not enter CLOSED in the normal way: the server only closes once the acknowledgment arrives.

(2) To keep stale segments from appearing in a later connection. Within 2MSL, every segment produced during this connection disappears from the network.

2.6 TIME_WAIT

- The TCP protocol stipulates that the party that actively closes the connection must stay in the TIME_WAIT state for two MSL (maximum segment lifetime) before returning to the CLOSED state;

- MSL is the maximum lifetime of a TCP segment, so staying in TIME_WAIT for 2MSL ensures that unreceived or late segments in both directions have disappeared (otherwise, if the server restarted immediately, it might receive late data belonging to the previous process, and that data is likely to be wrong);

- It also, in theory, ensures that the last segment is delivered reliably: if the final ACK is lost, the server resends its FIN. Even though the client process may already be gone, the TCP connection still exists, so the final ACK can still be resent;

- If we terminate the server with Ctrl-C, the server is the party that actively closes the connection, so during TIME_WAIT it still cannot listen on the same server port again (bind fails with "Address already in use");

- MSL is specified as two minutes in RFC1122, but each operating system implements it differently. On Linux (CentOS 7 included) the TIME_WAIT duration is a kernel constant of 60 s. The often-cited /proc/sys/net/ipv4/tcp_fin_timeout (default 60) is not MSL: it controls how long an orphaned connection may stay in FIN_WAIT_2;

2.6.1 TIME_WAIT improvements

Not being allowed to listen on the port again until the server's old TCP connections are completely gone can be unreasonable in some cases:

- The server has to handle a very large number of client connections (each connection may be short-lived, but many clients connect every second).

- If the server actively closes connections in this situation (for example, to clean up inactive clients), a large number of TIME_WAIT connections are produced.

- Each of these connections occupies a communication five-tuple (source IP, source port, destination IP, destination port, protocol). The server's IP, port and protocol are fixed, so if a new client connection has the same IP and port as a connection still held in TIME_WAIT, the new connection runs into problems

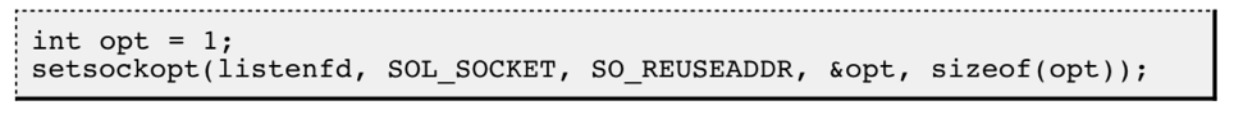

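- Use setsockopt() to set the socket option SO_REUSEADDR to 1 before bind. Its main effect here is that the port can be bound again even while old connections on it are still in TIME_WAIT; it also relaxes the restriction on creating multiple sockets with the same port number but different local IP addresses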
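In Python's `socket` module the same option is set with `setsockopt` before `bind`. A minimal sketch follows; `make_listener` is a hypothetical helper, and port 0 (let the OS pick) is the default only so the example runs anywhere, where a real server would pass its fixed port. On Windows, SO_REUSEADDR has different, more permissive semantics.

```python
import socket

def make_listener(host="127.0.0.1", port=0, backlog=16):
    """Create a listening TCP socket that can be rebound while old connections sit in TIME_WAIT."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # must be set before bind(), otherwise it has no effect on this bind
    srv.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    srv.bind((host, port))
    srv.listen(backlog)
    return srv
```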

2.7 CLOSE_WAIT

If we remove the line new_sock.Close(); from the server program, compile and run the server, and then start a client connection, checking the TCP state shows both client and server in ESTABLISHED, which is fine. Then we close the client program and observe the TCP state again.

At this point the server has entered the CLOSE_WAIT state. Comparing this with the four-wave flow chart above, we can see that the four waves were not completed correctly.

- A large number of CLOSE_WAIT connections on the server means the server did not close its sockets correctly, so the four waves never complete. This is a bug: adding the missing close solves the problem
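In Python the missing close is easiest to guarantee with a `with` block, which closes the connected socket on every exit path, including exceptions. A minimal sketch of such a handler follows; the name `handle_client` is hypothetical and it simply collects what the client sent. A `recv` that returns `b""` is how the application learns that the peer's FIN has arrived.

```python
import socket

def handle_client(conn: socket.socket) -> bytes:
    """Read until the peer closes, then close our side too.
    The `with` block calls conn.close() on every exit path, so our FIN is sent
    and the connection leaves CLOSE_WAIT instead of lingering there."""
    received = b""
    with conn:
        while True:
            chunk = conn.recv(4096)
            if not chunk:        # b"": the peer sent FIN, our side is now in CLOSE_WAIT
                break
            received += chunk
    return received              # conn is closed here: FIN sent, then LAST_ACK -> CLOSED
```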

2.7 Sliding windows

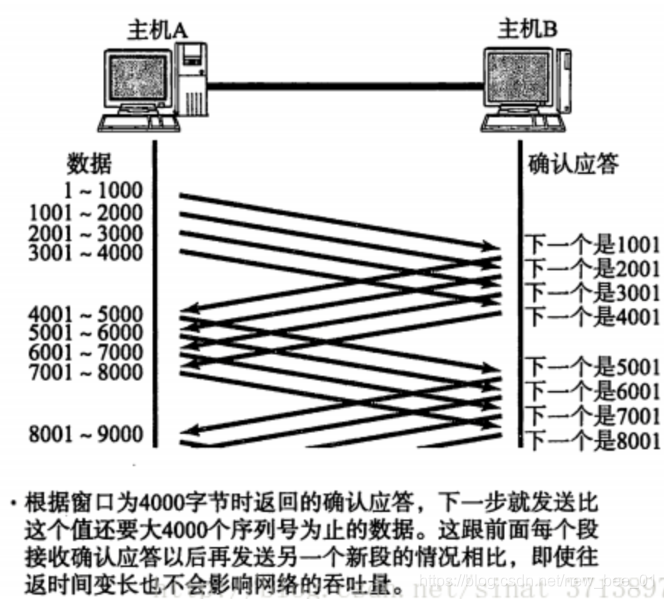

With the acknowledgment mechanism described so far, every data segment sent must be acknowledged with an ACK, and the next segment is sent only after that ACK arrives.

The big drawback of this approach is poor performance, especially when the round-trip time is long. We can greatly improve performance by sending multiple segments at a time (in effect, the waiting times of those segments overlap)

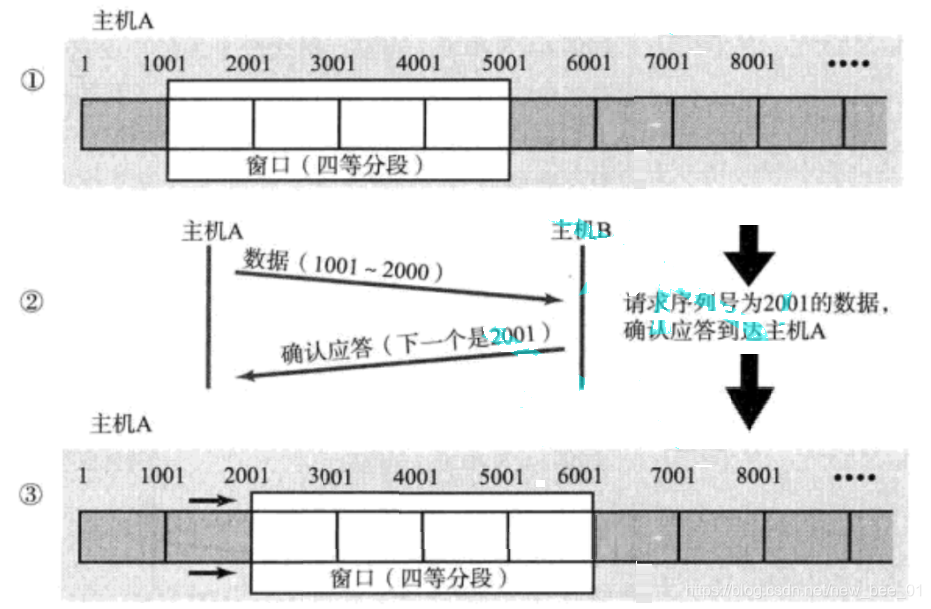

- The window size is the maximum amount of data that can be sent without waiting for an acknowledgment. The window size in the figure above is 4000 bytes (four segments).

- The first four segments are sent directly, without waiting for any ACK

- After the first ACK arrives, the sliding window moves forward and the fifth segment is sent; and so on;

- To maintain this sliding window, the operating system kernel keeps a send buffer that records which data has not yet been acknowledged; only acknowledged data can be deleted from the buffer;

- The larger the window, the higher the throughput of the network;
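The window mechanics can be simulated without any networking. In this sketch (hypothetical `sliding_window_send`), every segment is assumed to arrive and be acknowledged in order, one ACK at a time, and the window is assumed to be a multiple of the segment size as in the figure. It shows that up to `window` bytes are in flight and that each ACK slides the window forward and releases acknowledged data.

```python
def sliding_window_send(total_bytes, segment=1000, window=4000):
    """Return a log of ("send", first, last) and ("ack", n) events for a lossless transfer."""
    log = []
    base = 1                  # oldest unacknowledged byte: left edge of the window
    next_seq = 1              # next byte to send
    end = total_bytes + 1
    while base < end:
        # send everything the window allows, without waiting for an ACK
        while next_seq < end and next_seq < base + window:
            length = min(segment, end - next_seq, base + window - next_seq)
            log.append(("send", next_seq, next_seq + length - 1))
            next_seq += length
        # the ACK for the oldest outstanding segment arrives: slide the window
        ack = min(base + segment, next_seq)
        log.append(("ack", ack))
        base = ack            # bytes before `ack` can now leave the send buffer
    return log
```

For 6000 bytes the log starts with four sends (bytes 1-4000), then ACK 1001, then the send of bytes 4001-5000, matching the figure.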

So how is data retransmitted when a packet is lost? There are two cases to discuss.

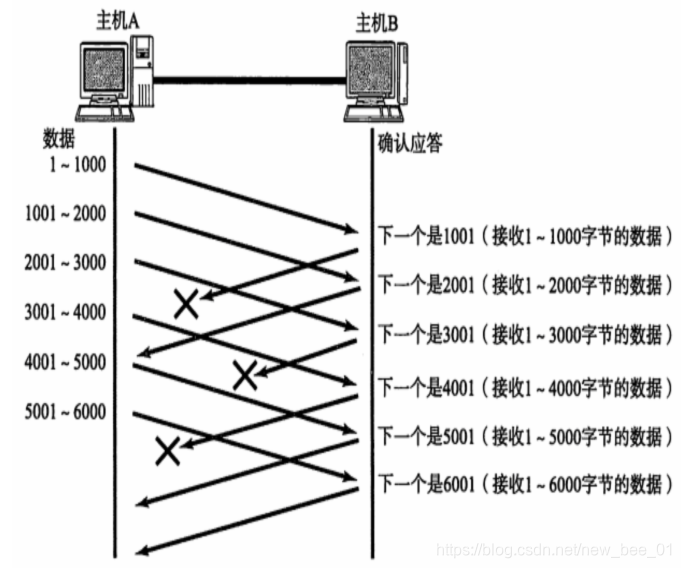

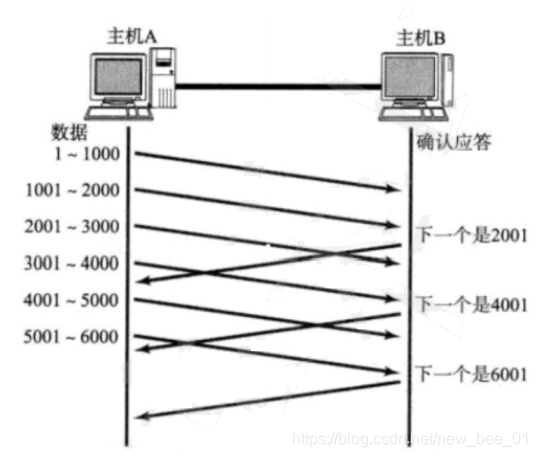

Case 1: the data arrives but the ACK is lost (the sliding window takes care of it)

In this case it does not matter if some ACKs are lost, because later ACKs confirm the same data:

Bytes 1-1000 have reached host B, but the ACK for them is lost. When the sender receives ACK 2001, it can delete bytes 1-1000 from its buffer as well, because acknowledgment number 2001 from host B means that every byte up to 2000, including bytes 1-1000, has arrived.
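Case 1 works because acknowledgments are cumulative. This sketch (hypothetical helpers) computes the ACK number a receiver would send for a set of received byte ranges, and shows a sender releasing every buffered segment below that number.

```python
def cumulative_ack(received_ranges, isn=1):
    """ACK number = first byte not yet received contiguously.
    received_ranges holds (first_byte, last_byte) pairs in any order."""
    expected = isn
    for first, last in sorted(received_ranges):
        if first > expected:          # a gap: nothing after it can be acknowledged yet
            break
        expected = max(expected, last + 1)
    return expected

def sender_release(unacked_segments, ack):
    """Keep only segments that still contain bytes at or above `ack`."""
    return [(first, last) for first, last in unacked_segments if last >= ack]
```

Even if the ACK for bytes 1-1000 never arrives, `sender_release` with ACK 2001 drops both the 1-1000 and the 1001-2000 segments from the send buffer.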

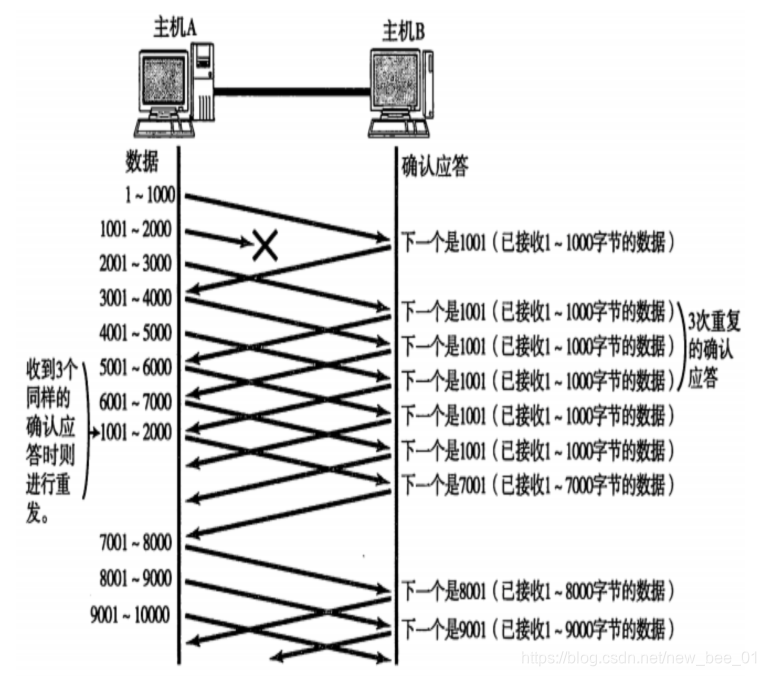

Case 2: the data packet itself is lost (fast retransmit)

- When a segment is lost, the sender keeps receiving ACK 1001, as if the receiver were reminding it "I want 1001";

- If the sending host receives three duplicate "1001" ACKs in a row, it resends the corresponding data, bytes 1001-2000, without waiting for the timeout;

- When the receiving end then gets bytes 1001-2000, the ACK it returns is 7001, because it had already received bytes 2001-7000 earlier and was holding them in the receive buffer of its operating system kernel;
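The duplicate-ACK counting can be sketched as a scan over the ACK numbers the sender receives. The threshold of three duplicates is the standard fast-retransmit rule (RFC 5681); the helper name is hypothetical, and the sketch only records what would be retransmitted.

```python
DUP_ACK_THRESHOLD = 3

def fast_retransmit(acks):
    """Return the sequence numbers retransmitted after 3 duplicate ACKs for the same number."""
    retransmits = []
    last_ack, dups = None, 0
    for ack in acks:
        if ack == last_ack:
            dups += 1
            if dups == DUP_ACK_THRESHOLD:
                retransmits.append(ack)   # resend the segment starting at `ack`
        else:
            last_ack, dups = ack, 0       # new data acknowledged: reset the counter
    return retransmits
```

In the scenario above the sender sees ACK 1001 for bytes 1-1000, then five duplicate 1001s triggered by segments 2001-7000, and finally 7001; bytes from 1001 are resent once, on the third duplicate.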

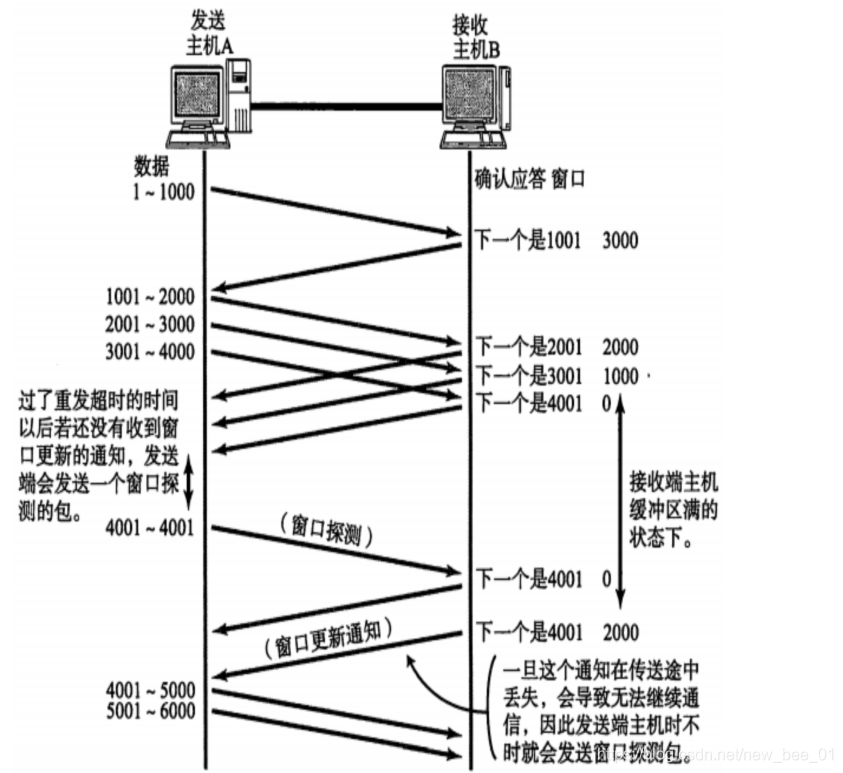

2.8 Flow Control

The receiving end can only process data at a limited speed. If the sending end sends too fast, the receiver's buffer fills up; if the sender keeps sending after that, packets are dropped, which in turn causes retransmissions and a chain of further problems.

Therefore, TCP lets the processing capacity of the receiving end determine how fast the sending end sends. This mechanism is called flow control (Flow Control);

- The receiving end puts the amount of buffer space it can still accept into the "window size" field of the TCP header and notifies the sending end through its ACK segments;

- The larger the window size field, the higher the throughput of the network;

- Once the receiving end finds that its buffer is almost full, it sets the window size to a smaller value and notifies the sending end;

- After the sender receives this window, it slows down its sending speed;

- If the buffer at the receiving end is full, the window is set to 0. The sender then stops sending data, but it periodically sends a window probe segment so that the receiving end can tell it the current window size

How does the receiving end tell the sender its window size? Recall that the TCP header has a 16-bit window field, which stores the window size information;

Then the question arises: the largest 16-bit number is 65535, so is the maximum TCP window 65535 bytes?

Not necessarily. The TCP header options (up to 40 bytes) can include a window scale factor M, and the actual window size is the value of the window field shifted left by M bits;
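The window arithmetic can be written out directly. This is a sketch with hypothetical helper names; the upper limit of 14 for the shift count comes from the window scale option's specification (RFC 7323), which caps the window at roughly 1 GiB.

```python
def advertised_window(buffer_size, buffered_unread):
    """Free space in the receive buffer: what the receiver wants to advertise (0 when full)."""
    return max(buffer_size - buffered_unread, 0)

def scaled_window(window_field, shift):
    """Actual window = 16-bit window field shifted left by the scale factor M."""
    if not (0 <= window_field <= 0xFFFF and 0 <= shift <= 14):
        raise ValueError("window field is 16 bits and the shift must be 0..14")
    return window_field << shift

def encode_window(free_bytes, shift):
    """Value the receiver writes into the header for a given free space (rounded down)."""
    return min(free_bytes >> shift, 0xFFFF)
```

Without scaling the limit is `scaled_window(0xFFFF, 0)`, i.e. 65535 bytes; with M = 14 it becomes 65535 * 16384 bytes.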

2.9 Congestion Control

- Thanks to its big weapon, the sliding window, TCP can send large amounts of data efficiently and reliably. However, sending a large amount of data right at the start can still cause problems.

- There are many computers on the network, so the network may already be fairly congested. Sending a large amount of data rashly, without knowing the current network state, is likely to make things worse.

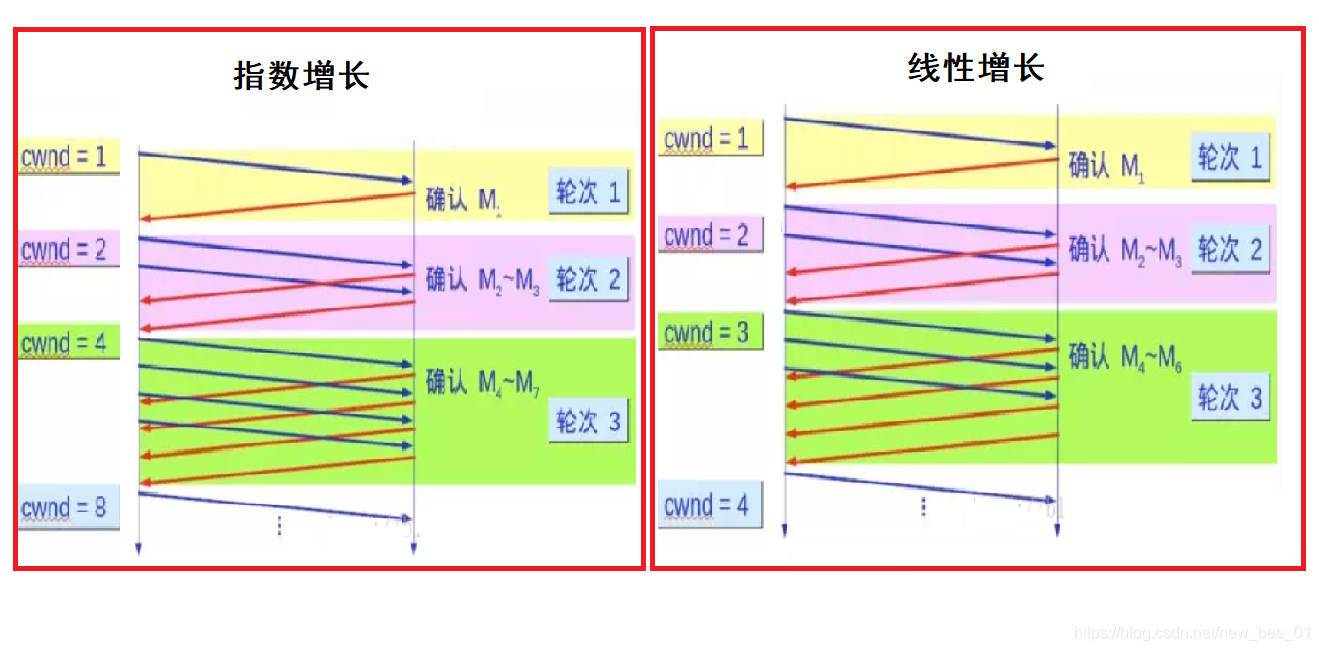

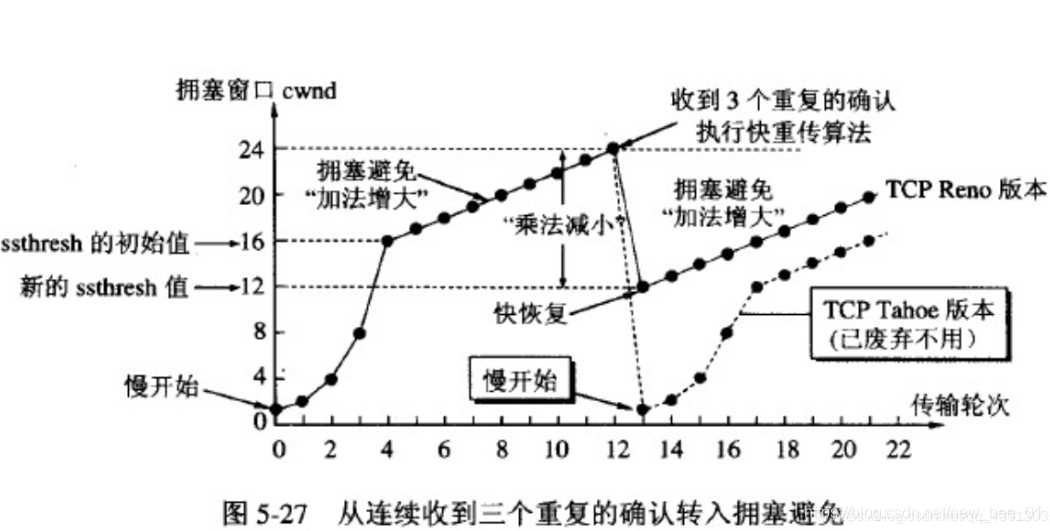

- TCP introduces a slow start mechanism: it first sends a small amount of data to probe the path and find out how congested the network currently is, and then decides how fast to transmit data.

- As the figure at the upper left shows, the congestion window grows exponentially. "Slow start" only means it is slow at the beginning; the growth itself is very fast.

- To keep it from growing too fast, the congestion window is not simply doubled forever: a threshold called the slow start threshold (ssthresh) is introduced here.

- When the congestion window exceeds this threshold, it no longer grows exponentially but linearly.

A small amount of packet loss only triggers timeout retransmission; a large amount of packet loss is taken to mean that the network is congested;

Once TCP communication starts, network throughput gradually increases; when the network becomes congested, throughput drops immediately;

In the final analysis, congestion control is a compromise: TCP wants to transmit data to the other party as quickly as possible, but it must also avoid putting too much pressure on the network.
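A toy simulation of the congestion window, counted in segments per round trip, shows the exponential-then-linear growth described above. It follows the classic Tahoe-style rule (on a timeout, ssthresh becomes half the current window and cwnd restarts at 1); modern variants such as Reno or CUBIC react differently, and the initial ssthresh of 16 is an arbitrary assumption.

```python
def simulate_cwnd(rounds, ssthresh=16, loss_rounds=()):
    """Congestion window at the start of each round trip, in segments.
    Slow start doubles cwnd (capped at ssthresh); above ssthresh it grows by 1 per RTT.
    A timeout in a round listed in loss_rounds halves ssthresh and resets cwnd to 1."""
    cwnd, history = 1, []
    for r in range(rounds):
        history.append(cwnd)
        if r in loss_rounds:                  # timeout: treat it as congestion
            ssthresh = max(cwnd // 2, 2)
            cwnd = 1
        elif cwnd < ssthresh:
            cwnd = min(cwnd * 2, ssthresh)    # slow start: exponential growth
        else:
            cwnd += 1                         # congestion avoidance: linear growth
    return history
```

Without losses, eight rounds give 1, 2, 4, 8, 16, 17, 18, 19; a timeout in round 6 restarts slow start with a threshold of 9.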

2.10 Delayed response

If the host receiving the data returns an ACK immediately, the window it advertises may be relatively small.

- Assume the receive buffer is 1M and 500K of data arrives at once; if the ACK is sent immediately, the advertised window is 500K;

- But in fact the receiving end may process data very quickly and consume the 500K from the buffer within 10 ms;

- In this case the receiving end is far from its limit; even with a larger window it could still keep up;

- If the receiving end waits a little before answering, for example 200 ms, the window it returns is 1M;

Remember: the larger the window, the greater the network throughput and the higher the transmission efficiency. Our goal is to make transmission as efficient as possible while keeping the network from becoming congested. Can every packet's acknowledgment be delayed? Certainly not;

- Quantity limit: acknowledge every N packets;

- Time limit: acknowledge once the maximum delay time is exceeded;

The exact number and timeout vary by operating system; generally N is 2 and the timeout is 200 ms
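The two limits can be combined into a small simulation that, given segment arrival times, returns when ACKs go out. N = 2 and 200 ms are the typical values quoted above; real stacks add further rules (for example, acknowledging immediately when data arrives out of order), which this sketch ignores. The helper name is hypothetical.

```python
def delayed_ack_times(arrivals_ms, every_n=2, max_delay_ms=200):
    """Times (ms) at which ACKs are sent: after every `every_n` segments, or when the
    oldest unacknowledged segment has waited `max_delay_ms`, whichever comes first."""
    acks = []
    pending = 0            # segments received but not yet acknowledged
    pending_since = None   # arrival time of the oldest unacknowledged segment
    for t in sorted(arrivals_ms):
        if pending and t >= pending_since + max_delay_ms:
            acks.append(pending_since + max_delay_ms)   # the delay timer fired first
            pending, pending_since = 0, None
        if pending == 0:
            pending_since = t
        pending += 1
        if pending == every_n:
            acks.append(t)                               # N-th segment: acknowledge now
            pending, pending_since = 0, None
    if pending:
        acks.append(pending_since + max_delay_ms)        # timer covers the leftovers
    return acks
```

Segments at 0, 10 and 50 ms produce ACKs at 10 ms (quantity limit) and 250 ms (time limit).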

2.11 Piggybacking

Building on delayed acknowledgment, we notice that in many cases the client and server also "send one, answer one" at the application layer. For example, the client says "How are you" to the server, and the server replies "Fine, thank you";

At this point the ACK can hitch a ride: it goes back to the client in the same segment as the server's "Fine, thank you" reply

2.12 Byte Stream Oriented (MSS)

When a TCP socket is created, the kernel also creates a send buffer and a receive buffer for it;

- When write is called, the data is first written into the send buffer;

- If the data written is too long, it is split into multiple TCP segments and sent;

- If it is too short, it may wait in the buffer until enough data has accumulated, or be sent at some other suitable moment;

- When data is received, it arrives from the network card driver into the kernel's receive buffer;

- The application can then call read to get data from the receive buffer;

- Since a TCP connection has both a send buffer and a receive buffer, data can be both read and written on it. This is called full-duplex

Because of these buffers, the reads and writes of a TCP program do not need to match one to one, for example:

- When writing 100 bytes of data, you can call write once with 100 bytes, or call write 100 times with one byte each time;

- When reading 100 bytes of data, you do not need to care how they were written: you can read 100 bytes at once, or read one byte at a time 100 times;
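The mismatch between writes and reads can be observed over a real TCP connection on the loopback interface. In this sketch (hypothetical helper `write_in_pieces_read_in_bulk`), the client performs 100 one-byte writes and the server reads with a 4096-byte buffer; how many `recv` calls it takes depends on the stack's timing, so only the total is predictable.

```python
import socket

def write_in_pieces_read_in_bulk(n=100):
    """Write n bytes one at a time, read them back in large chunks; return (data, recv_calls)."""
    with socket.socket() as srv:                      # AF_INET, SOCK_STREAM by default
        srv.bind(("127.0.0.1", 0))
        srv.listen(1)
        with socket.create_connection(srv.getsockname()) as cli:
            conn, _ = srv.accept()
            with conn:
                for i in range(n):
                    cli.sendall(bytes([i % 256]))     # n separate 1-byte writes
                cli.shutdown(socket.SHUT_WR)          # send FIN: no more data coming
                data, reads = b"", 0
                while chunk := conn.recv(4096):       # grouping is decided by the stack
                    data += chunk
                    reads += 1
    return data, reads
```

The data always arrives intact and in order, but `reads` is usually far smaller than 100: write boundaries are not preserved.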

The TCP header has a "window size" field that occupies 16 bits (2 bytes). It is used mainly for TCP's sliding-window flow control. MSS and the "window size" field are often confused, so here is the distinction:

MSS (Maximum Segment Size) is the maximum length of the data part of a TCP segment. If the data handed down by the upper layer exceeds the MSS, TCP itself splits it into several segments. The MSS is normally derived from the link MTU (for example 1500 bytes on Ethernet); if the size were not agreed on, the lower layer would have to fragment the packets itself. During the first and second handshakes, each side announces its MSS to the other, which has the effect of negotiating the MSS between the two communicating parties.

In the TCP header, the "window size" field tells the other party how much data this side can accept. The window is essentially a buffer, and the field reports how much of that buffer is still free. Every TCP segment carries this field, and its value is adjusted by sliding-window flow control.

As an analogy, the MTU is like the load limit of a bridge, and the bridge plays the role of the network card;

Knowing the MSS in advance lets you load each truck within the limit, so the cargo never has to be split up because a truck is overloaded;

Without an agreed MSS, once a truck exceeds the bridge's load limit, the cargo has to be split into several batches to cross and reassembled afterwards, which is not worth the trouble;
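The arithmetic behind the usual Ethernet MSS, and how a large write is cut into segments, can be sketched as follows. It assumes 20-byte IP and TCP headers with no options; the helper names are hypothetical.

```python
def mss_from_mtu(mtu=1500, ip_header=20, tcp_header=20):
    """Largest TCP payload that fits in one frame without IP fragmentation (no options)."""
    return mtu - ip_header - tcp_header

def segment_sizes(nbytes, mss):
    """Payload sizes of the segments TCP would cut nbytes of application data into."""
    full, rest = divmod(nbytes, mss)
    return [mss] * full + ([rest] if rest else [])

def effective_mss(mss_a, mss_b):
    """Each side announces its MSS in its SYN; a sender must not exceed the peer's value,
    so in practice segments are no larger than the smaller of the two."""
    return min(mss_a, mss_b)
```

On Ethernet this gives 1500 - 20 - 20 = 1460, so a 4000-byte write becomes segments of 1460, 1460 and 1080 bytes.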


2.13 Sticky packet problem

First, be clear that the "packet" in the sticky packet problem refers to an application-layer data packet.

- The TCP header has no "packet length" field like UDP's, but it does have a sequence number field.

- From the transport layer's point of view, TCP segments arrive one by one and are placed in the buffer in sequence-number order.

- From the application layer's point of view, all it sees is a continuous run of bytes.

- Seeing such a run of bytes, the application cannot tell where one complete application-layer packet starts and where it ends.

So how do we avoid the sticky packet problem? In one sentence: make the boundary between two packets explicit.

- For fixed-length packets, always read a fixed size; for example, if the Request structure above has a fixed size, read sizeof(Request) bytes at a time from the start of the buffer;

- For variable-length packets, put a total-length field in the packet header, so the receiver knows where the packet ends;

- For variable-length packets, you can also put an explicit separator between packets (the application-layer protocol is defined by the programmer, so any separator works as long as it cannot appear in the message body);
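The length-field approach can be sketched in a few lines. This assumes a hypothetical protocol where each message is preceded by a 4-byte big-endian length; the `Deframer` class buffers whatever `recv` returns and emits only complete messages, so it works no matter how the byte stream was cut.

```python
import struct

LEN = struct.Struct("!I")   # hypothetical 4-byte big-endian length prefix

def encode(msg: bytes) -> bytes:
    """Prefix a message with its length so the receiver knows where it ends."""
    return LEN.pack(len(msg)) + msg

class Deframer:
    """Accumulates bytes from a TCP stream and returns complete messages."""
    def __init__(self):
        self.buf = bytearray()

    def feed(self, data: bytes) -> list:
        self.buf += data
        messages = []
        while len(self.buf) >= LEN.size:
            (length,) = LEN.unpack_from(self.buf)
            if len(self.buf) < LEN.size + length:
                break                        # message incomplete: wait for more bytes
            messages.append(bytes(self.buf[LEN.size:LEN.size + length]))
            del self.buf[:LEN.size + length]
        return messages
```

A receiver would call `feed` with each chunk returned by `recv` and process whatever complete messages come back.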

Question: does UDP also have a "sticky packet problem"?

- No. Every UDP datagram carries its own length field, and UDP delivers data to the application layer one datagram at a time, so there is a clear boundary between messages.

- From the application layer's point of view, with UDP a datagram is either received completely or not at all; there is never "half" a datagram

2.14 Summary of TCP

Why is TCP so complicated? Because it is necessary to ensure reliability and at the same time improve performance as much as possible.

Reliability:

- Checksum

- Sequence numbers (in-order arrival)

- Acknowledgment

- Timeout retransmission

- Connection management

- Flow control

- Congestion control

Improving performance:

- Sliding window

- Fast retransmit

- Delayed response

- Piggybacking

Other:

- Timers (retransmission timer, keep-alive timer, TIME_WAIT timer, etc.)

2.15 Application-layer protocols based on TCP

- HTTP

- HTTPS

- SSH

- Telnet

- FTP

- SMTP

3. UDP and TCP application scenarios

Application scenarios of UDP

- Scenarios with high speed requirements: UDP can be used for video chat or watching live streams, because losing a few frames has little impact on users

Application scenarios of TCP

- Sending messages, transferring files and browsing the web, where the data sent must not be lost

Understanding only the two points above is not enough. Because UDP is fast, its range of applications is broader than it may first appear. Even HTTP has moved to UDP: HTTP/3 runs over QUIC, which is built on UDP. The common QQ chat also uses UDP (switching to TCP adaptively when the network is poor), but data loss then has to be handled at the application layer. So it can be said:

UDP is the eternal god!