1 Purpose

I am writing a tool for video and AI that covers the whole pipeline: stream access, algorithm processing, forwarding, storage, invoking AI processes and interacting with them, plus plug-ins and scripting. Throughout, the interaction between processes and threads, and the logic that renders results to the UI, must be rigorous; otherwise, in C++, a poorly handled UI can easily crash the main process.

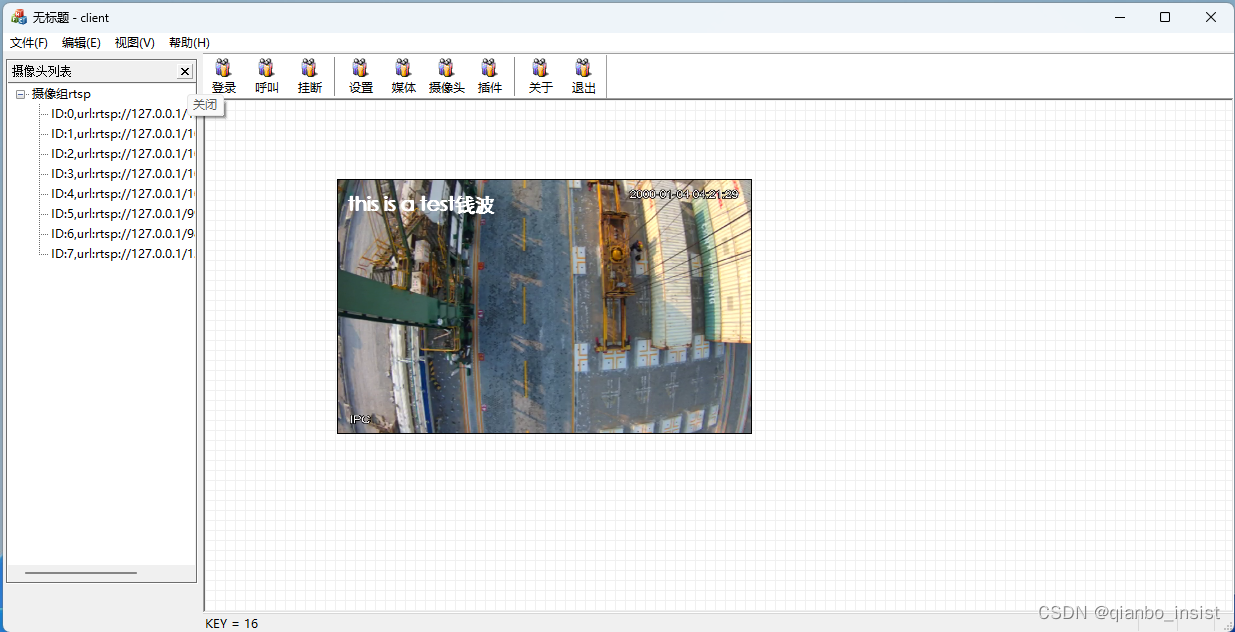

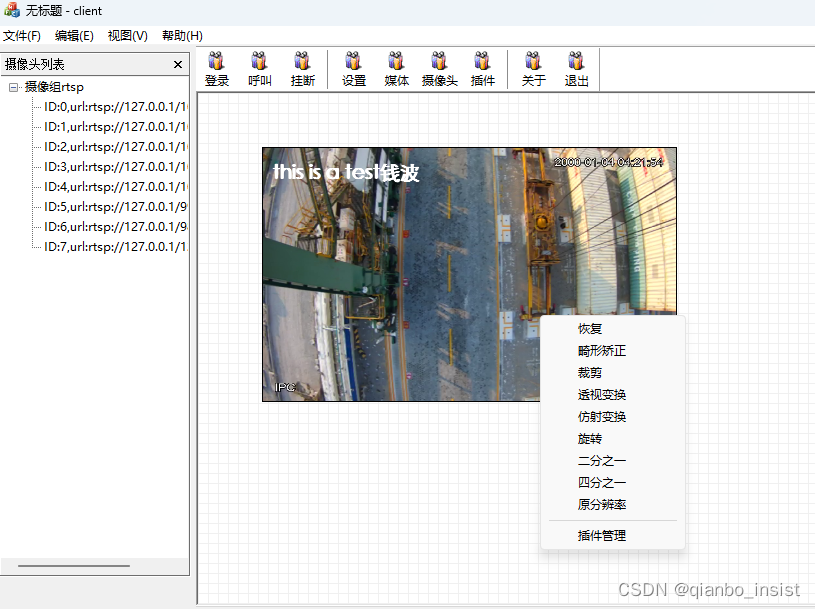

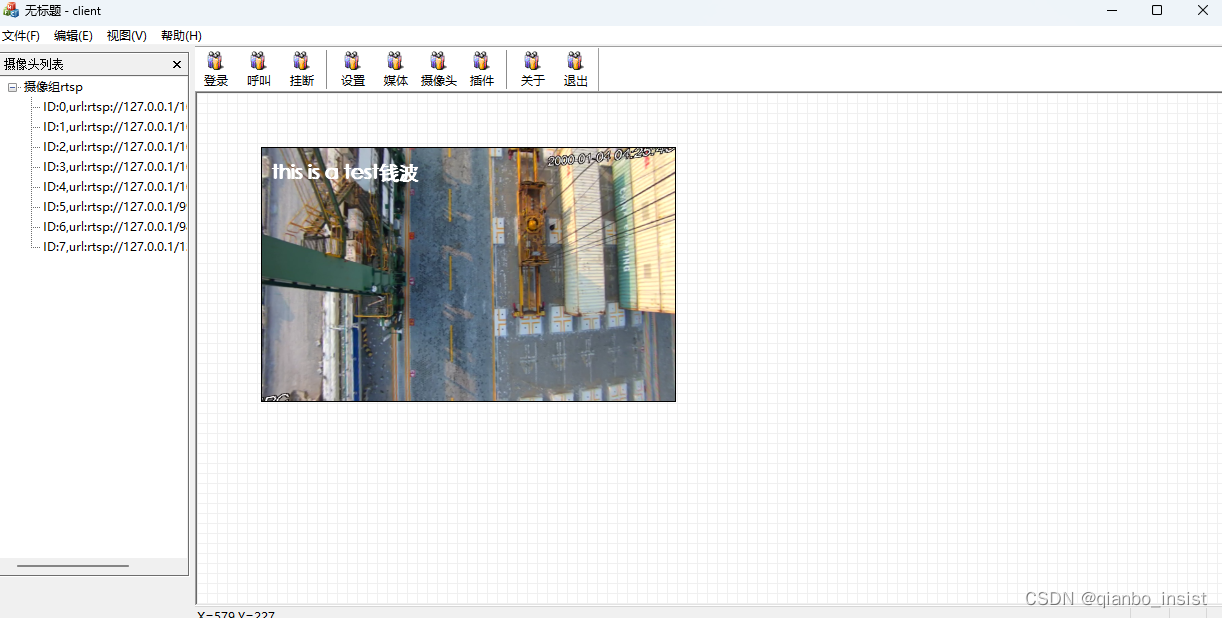

The figures show the tool's interaction interface and its various access methods, such as RTSP access, direct RTP access, PS (GB28181) access, RTMP access, and TS stream access. Building this tool has been extremely difficult, and I have set aside many other projects to work on it without distraction. Looking back on the past lightly may seem carefree, but in truth it comes from having little choice.

2 Design approach

2.1 Keep the core generic

Since this is a relatively advanced tool, innovation is inevitable, but I put the innovation into plug-ins. Innovation needs a great deal of flexibility: a tool is a kind of "emptiness", and only emptiness can contain everything. Componentized plug-ins and scripting are how the core logic stays generic.

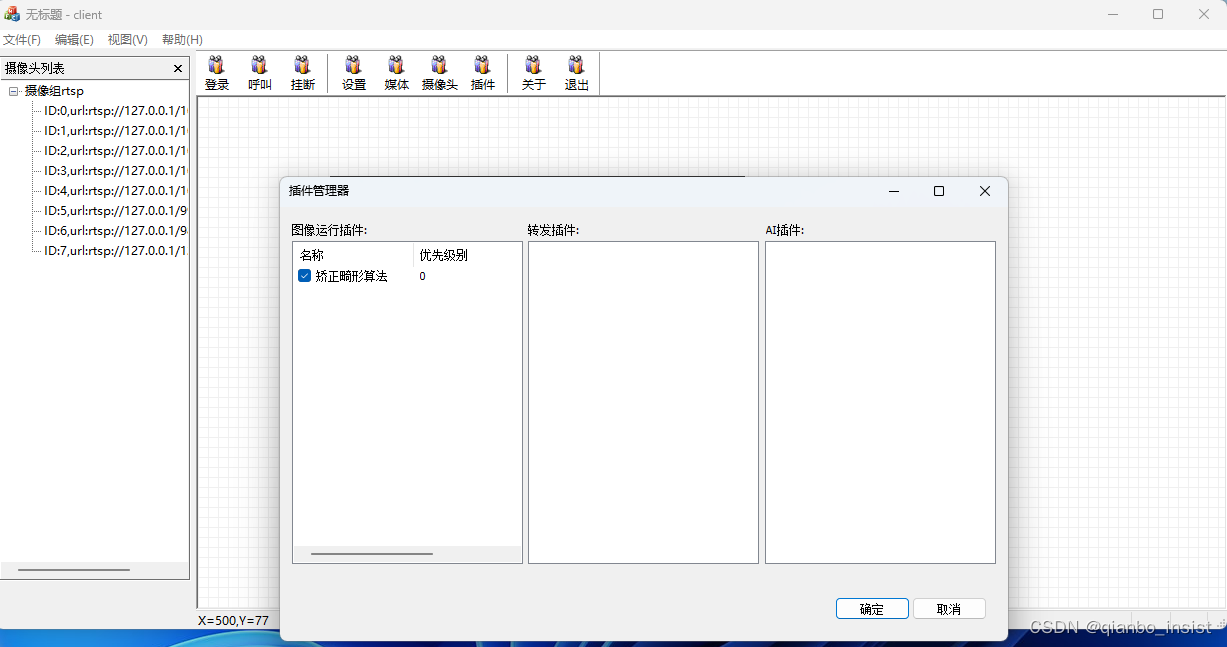

2.2 Plug-in

The tool covers running, forwarding, AI, and plug-in image processing, and plug-ins are managed separately for each video stream. Access is a single, unified external interface, in order to make the dynamic library and the main process
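To make per-stream plug-in management concrete, here is a minimal sketch of a core that keeps an ordered plug-in chain for each stream URL and runs it on a frame. The `Plugin` and `PluginManager` names and the in-place worker signature (modeled loosely on the `func_worker` export shown later) are hypothetical, not the tool's actual API.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical plug-in record: a name plus an in-place worker over a w x h 8-bit plane.
struct Plugin {
    std::string name;
    std::function<int(uint8_t* data, int w, int h)> worker;
};

// Hypothetical per-stream manager: each stream, keyed by its unique url, owns its
// own ordered chain, so the core never needs to know what a plug-in does.
class PluginManager {
public:
    void add(const std::string& url, Plugin p) {
        chains_[url].push_back(std::move(p));
    }

    // Runs the chain registered for `url` in registration order and returns how many
    // plug-ins succeeded; a negative return value from a plug-in stops the chain.
    int run(const std::string& url, uint8_t* data, int w, int h) const {
        assert(data != nullptr && w > 0 && h > 0);
        auto it = chains_.find(url);
        if (it == chains_.end()) return 0;  // no plug-ins for this stream
        int ok = 0;
        for (const Plugin& p : it->second) {
            if (p.worker(data, w, h) < 0) break;
            ++ok;
        }
        return ok;
    }

private:
    std::map<std::string, std::vector<Plugin>> chains_;
};
```

Keeping the chain per stream means one camera can have fisheye correction enabled while another runs only forwarding, without any branching in the core.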

3 Data structures

Many issues have to be considered when defining the data structure for stream access:

```cpp
#include <cstddef>
#include <cstdint>

typedef struct s_param
{
    const char* name = NULL;      // owning module name, e.g. rtspclient
    const char* url = NULL;       // unique name, or ip+port
    uint8_t* data = NULL;
    int len = 0;                  // len = -1: reception finished
    uint32_t ssrc = 0;
    void* obj = NULL;             // the obj passed in at create()
    const char* ipfrom = NULL;
    int width = 0;
    int height = 0;
    uint16_t fport = 0;
    uint16_t seq = 0;             // for RTP compatibility
    int codeid = 0;               // codec id, compatible with FFmpeg
    int payload = -1;             // payload type
    int64_t pts = 0;
    void* decoder = NULL;
    uint8_t* extradata = NULL;
    int extradatalen = 0;
    //bool isnew = true;          // whether this is a new transmission
    //void* rtspworker = NULL;
} s_param;

// The callback delivers the compressed stream
typedef void(*func_callback)(s_param* param);
typedef void(*func_callback_new)(void* obj, const char* url);

// len > 0:  VPS/SPS/PPS information
// len = 0
// len = -1: cannot connect
// len = -2: read error
// len = -3: other error
typedef void(*func_callback_extra)(uint8_t* data, int len);
```
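The `len` codes of `func_callback_extra` are easy to misread at each call site, so a receiver can translate them in one place. The sketch below is a hypothetical handler that only mirrors the codes documented in the comments above; the `ExtraStatus` enum, `classify_extra`, and `on_extra` are illustrative names, and treating `len == 0` as "no data" is an assumption because its meaning is not documented.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

typedef void(*func_callback_extra)(uint8_t* data, int len);  // same signature as above

// Hypothetical decoded form of the `len` argument.
enum class ExtraStatus { ParameterSets, NoData, ConnectFailed, ReadError, OtherError };

ExtraStatus classify_extra(int len) {
    if (len > 0) return ExtraStatus::ParameterSets;  // VPS/SPS/PPS bytes are in `data`
    switch (len) {
        case 0:  return ExtraStatus::NoData;         // undocumented; assumed "nothing yet"
        case -1: return ExtraStatus::ConnectFailed;
        case -2: return ExtraStatus::ReadError;
        default: return ExtraStatus::OtherError;     // -3 and anything unexpected
    }
}

// Example receiver: copies the parameter sets, since the caller's buffer
// is not assumed to outlive the callback.
static std::vector<uint8_t> g_extradata;
static ExtraStatus g_last_status = ExtraStatus::NoData;

void on_extra(uint8_t* data, int len) {
    g_last_status = classify_extra(len);
    if (g_last_status == ExtraStatus::ParameterSets) {
        g_extradata.assign(data, data + len);
    }
}
```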

Once video access was working in practice, I realized that many things cannot be foreseen in advance. Even with decades of video experience, a program still needs polishing. I have usually built too many demos, and the drawbacks of not productizing them became fully apparent: even a single data structure was changed again and again. To enable video stitching in the tool, many methods were added, as shown in the figure below.

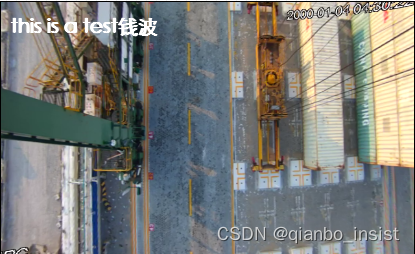

Video stitching is a fairly large topic that will be covered later. What follows is the first algorithmic step of video stitching, fisheye correction, which the tool loads as a plug-in dynamic library.

Fisheye correction algorithm loading

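```cpp
#include <windows.h>
#include <cstdint>

extern "C"
{
    // Plug-in name
    __declspec(dllexport) const char* WINAPI func_name();

    // Processes one w x h frame; func_draw passes outdata = NULL and expects in-place
    // processing. A function has only one calling convention, so WINAPI is kept and
    // the conflicting _fastcall is dropped.
    __declspec(dllexport) int WINAPI func_worker(uint8_t* data, int w, int h, uint8_t* outdata);

    // Plug-in number
    __declspec(dllexport) int WINAPI func_no();
}
```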

The above is the plug-in interface definition. To support unlimited video caching, memory has to be handled end to end: from allocating it, through the dynamic library's image algorithm, forwarding, and storage, to the AI script, which shares that memory, so that the same memory is used throughout.
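The "same memory throughout" idea can be approximated inside one process with a fixed pool of frame buffers that are allocated once and recycled. The `FramePool` class below is a hypothetical sketch: buffers are allocated up front, handed out with `acquire()`, and returned with `release()`, so steady-state processing performs no further allocation. It does not cover sharing with the AI script, which would additionally need OS shared memory.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <mutex>
#include <vector>

// Hypothetical fixed-size pool: all frame buffers are allocated once and reused.
class FramePool {
public:
    FramePool(std::size_t count, std::size_t frame_bytes)
        : storage_(count, std::vector<uint8_t>(frame_bytes)) {
        for (auto& buf : storage_) free_.push_back(buf.data());
    }

    // Returns a free buffer, or nullptr when every buffer is in use
    // (the caller decides whether to drop the frame or wait).
    uint8_t* acquire() {
        std::lock_guard<std::mutex> lock(mutex_);
        if (free_.empty()) return nullptr;
        uint8_t* buf = free_.back();
        free_.pop_back();
        return buf;
    }

    // Gives a buffer back so a later frame can reuse the same memory.
    void release(uint8_t* buf) {
        assert(buf != nullptr);
        std::lock_guard<std::mutex> lock(mutex_);
        free_.push_back(buf);
    }

    std::size_t available() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return free_.size();
    }

private:
    std::vector<std::vector<uint8_t>> storage_;  // owns the memory; never resized
    std::vector<uint8_t*> free_;                 // buffers not currently handed out
    mutable std::mutex mutex_;
};
```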

Test: applying the fisheye correction plug-in while drawing frames

```cpp
// Draw one frame.
// v_frames, v_plugins, v_drawer, v_script, v_cache and v_mutex_cache belong to the
// surrounding player class (not shown).
void func_draw(int px, int py, int drawrect)
{
    AVFrame* frame = NULL;
    if (v_frames.dequeue(&frame))
    {
        /*cv::Mat nmat;
        nmat.cols = frame->width;
        nmat.rows = frame->height;
        nmat.data = frame->data[0];*/

        // Run the loaded distortion (fisheye) correction plug-ins in place
        //if (!v_funcs.empty())
        auto iter = v_plugins.begin();
        while (iter != v_plugins.end())
        {
            (*iter)->FUNC_worker(frame->data[0], frame->width, frame->height, NULL);
            iter++;
        }

        v_drawer.func_draw(px, py, frame, drawrect);

        {
            if (v_script != nullptr && !v_script->IsStop())
            {
                if (frame->key_frame)
                {
                    // Write the frame into shared memory
                    int w = frame->width;
                    int h = frame->height;
                    mem_info_ptr ptr = v_script->mem_getch_process(frame->data[0], w, h);
                    if (ptr != nullptr)
                    {
                        v_script->v_in.Push(ptr);
                        v_script->Notify(); // tell the script side to take from the queue
                    }
                }

                if (!v_script->v_out.IsEmpty())
                {
                    // Start drawing the sub-picture, and draw the results inside it
                    //v_script->
                }
            }

            std::lock_guard<std::mutex> lock(v_mutex_cache);
            if (v_cache != NULL)
            {
                av_freep(&v_cache->data[0]);
                av_frame_free(&v_cache);
            }

            // Keep the current frame as the last cached frame
            v_cache = frame;

            // Whether interaction with the Python script process is needed
        }
    }
}
```

Fisheye correction is part of the algorithm for stitching multiple cameras together. Across the whole logic chain, from drawing to the UI, frames enter the core drawing routine through a callback, so the callback contains a lot of code that keeps the different pieces of logic separate.
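`func_draw` relies on `v_frames.dequeue(&frame)` returning `false` when no frame is ready, so the UI thread never blocks while the decoder thread keeps producing. A minimal mutex-protected queue with that contract might look like the sketch below; `FrameQueue` and its refuse-when-full policy are assumptions, not the tool's actual class.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <mutex>
#include <utility>

// Hypothetical bounded queue between a producer (decoder) and a consumer (UI draw).
template <typename T>
class FrameQueue {
public:
    explicit FrameQueue(std::size_t capacity) : capacity_(capacity) { assert(capacity > 0); }

    // Producer side: copies the item in, or returns false when full so the caller
    // can free or drop the frame instead of blocking.
    bool enqueue(const T& item) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (items_.size() >= capacity_) return false;
        items_.push_back(item);
        return true;
    }

    // Consumer side: never blocks; returns false when nothing is ready.
    bool dequeue(T* out) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (items_.empty()) return false;
        *out = std::move(items_.front());
        items_.pop_front();
        return true;
    }

private:
    std::size_t capacity_;
    std::deque<T> items_;
    std::mutex mutex_;
};
```

With `T = AVFrame*`, ownership passes to whoever successfully dequeues the pointer, which matches how `func_draw` later frees the previous `v_cache`.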

4 Process communication

The tool interacts with scripts in four languages: 1) Lua, 2) JavaScript, 3) GLSL, and 4) Python, which is needed because commonly used AI processing is done in Python.

4.1 Lua

The Lua approach is very general: C++ provides the API, an HTTP server, and a WebSocket server, and Lua organizes the basic startup. One advantage of Lua is that no separate configuration files are needed; the configuration can be written directly into the Lua script.

4.2 JavaScript

JavaScript is simpler: C++ calls JavaScript functions directly, and in the other direction JavaScript calls C++ functions through the embedded V8 engine.

4.3 Python

Python is the key point: how should C++ drive Python? Does it need process management, or is a simple call enough? I later found that process management really is needed. Process management is convenient in C++ with the Boost library, but to start with I use a simpler solution: launching the process asynchronously and sharing memory with it.

```cpp
#include <iostream>
#include <string>
#include <vector>
//#include <boost/filesystem.hpp>
#include <algorithm>
#include <boost/process.hpp>

int main_face()
{
    //std::system("python.exe face.py");
    namespace bp = boost::process;

    // Launch the Python AI script as a child process (python.exe is looked up on PATH)
    bp::child c(bp::search_path("python.exe"), "face.py");

    // tcpclient is the author's own TCP client class (not shown here)
    tcpclient client;
    while (c.running())
    {
        client.send();  // announce that a frame is ready in shared memory
        client.read();  // receive the "processed" reply
    }

    c.wait();  // wait for the process to exit
    int result = c.exit_code();
    return result;
}
```

Boost launches the process; each process is recorded, and a process manager in the UI manages them.

A TCP message announces that a given video frame is ready in shared memory, and a TCP reply indicates that it has been processed in shared memory. The reply is pushed onto a queue, and the main process picks it up and renders the result.
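The request/reply exchange only has to identify which shared-memory frame is meant. As a sketch, assume a fixed 16-byte message holding a type, a frame number, a width, and a height, each a 32-bit little-endian integer; the layout and the `FrameMsg`, `encode`, and `decode` names are hypothetical, not the tool's wire format.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Hypothetical message types for the C++ <-> Python exchange.
enum class MsgType : uint32_t { FrameReady = 1, FrameDone = 2 };

struct FrameMsg {
    MsgType type;
    uint32_t frame_no;  // which frame in shared memory
    uint32_t width;
    uint32_t height;
};

// Writes v as 4 little-endian bytes, independent of host byte order.
static void put_u32(uint8_t* p, uint32_t v) {
    for (int i = 0; i < 4; ++i) p[i] = static_cast<uint8_t>(v >> (8 * i));
}

static uint32_t get_u32(const uint8_t* p) {
    uint32_t v = 0;
    for (int i = 0; i < 4; ++i) v |= static_cast<uint32_t>(p[i]) << (8 * i);
    return v;
}

std::array<uint8_t, 16> encode(const FrameMsg& m) {
    std::array<uint8_t, 16> buf{};
    put_u32(buf.data() + 0, static_cast<uint32_t>(m.type));
    put_u32(buf.data() + 4, m.frame_no);
    put_u32(buf.data() + 8, m.width);
    put_u32(buf.data() + 12, m.height);
    return buf;
}

FrameMsg decode(const std::array<uint8_t, 16>& buf) {
    return FrameMsg{static_cast<MsgType>(get_u32(buf.data() + 0)),
                    get_u32(buf.data() + 4), get_u32(buf.data() + 8),
                    get_u32(buf.data() + 12)};
}
```

On the Python side, `struct.unpack("<4I", data)` reads the same layout, so both processes agree on the message without a serialization library.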

Another way:

Start the process with std::system and use a modern C++ feature, std::async, to wait for it in a loop, still communicating over TCP or UDP:

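```cpp
#include <chrono>
#include <cstdlib>
#include <future>

using namespace std::chrono_literals;

void f()
{
    std::system("python.exe face.py");  // blocks until the Python process exits
}

int main()
{
    // std::launch::async forces f onto its own thread. With the default policy the
    // call may be deferred, wait_for would return future_status::deferred, and the
    // loop below would never end.
    auto fut = std::async(std::launch::async, f);
    udpclient client;              // the author's own UDP client class (not shown here)
    int w = 0, h = 0, number = 0;  // frame width, height, and frame number

    // Loop until f finishes, which means the Python process has exited
    while (fut.wait_for(1000ms) != std::future_status::ready)
    {
        // Communicate over TCP or UDP
        client.send(w, h, number);
        client.read(w, h, number);
        // Push the received message onto the render queue, e.g. queue.push(msg);
    }
}
```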

Both approaches work: managing the process with Boost, or managing it asynchronously yourself. UDP has one advantage over TCP here: it is connectionless, and therefore semi-asynchronous by nature. A socket that only sends and is never explicitly bound has no receive buffer to manage; once a socket is bound to receive, datagrams that arrive while its receive buffer is full are dropped, so the receiver has to drain it promptly. All we need is for the Python process to send a UDP datagram: its arrival tells us the Python side is ready. The TCP approach works the same way.
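The readiness check can be done without blocking the UI thread by polling a UDP socket. The sketch below uses POSIX sockets as available on Linux for brevity; on Windows the equivalent calls live in Winsock (after `WSAStartup`, with `closesocket` instead of `close`). The loopback address, the one-shot "ready" datagram, and the `open_receiver`, `poll_ready`, and `send_ready` helpers are assumptions for illustration.

```cpp
#include <arpa/inet.h>
#include <cassert>
#include <cstdint>
#include <netinet/in.h>
#include <string>
#include <sys/socket.h>
#include <unistd.h>

// Creates a UDP socket bound to 127.0.0.1 on an OS-chosen port.
// Returns the fd and stores the port in *port, or returns -1 on failure.
int open_receiver(uint16_t* port) {
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0) return -1;
    sockaddr_in addr{};
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = 0;  // let the OS pick a free port
    socklen_t len = sizeof(addr);
    if (bind(fd, reinterpret_cast<sockaddr*>(&addr), sizeof(addr)) < 0 ||
        getsockname(fd, reinterpret_cast<sockaddr*>(&addr), &len) < 0) {
        close(fd);
        return -1;
    }
    *port = ntohs(addr.sin_port);
    return fd;
}

// Non-blocking check: MSG_DONTWAIT makes recv return at once when nothing is queued.
// Returns true and fills `msg` if a datagram was waiting; false otherwise (or on error).
bool poll_ready(int fd, std::string* msg) {
    char buf[512];
    ssize_t n = recv(fd, buf, sizeof(buf), MSG_DONTWAIT);
    if (n < 0) return false;
    msg->assign(buf, static_cast<size_t>(n));
    return true;
}

// Sender side (the role the Python process would play): no explicit bind() needed.
bool send_ready(uint16_t port, const std::string& msg) {
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0) return false;
    sockaddr_in to{};
    to.sin_family = AF_INET;
    to.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    to.sin_port = htons(port);
    ssize_t n = sendto(fd, msg.data(), msg.size(), 0,
                       reinterpret_cast<sockaddr*>(&to), sizeof(to));
    close(fd);
    return n == static_cast<ssize_t>(msg.size());
}
```

The UI loop can call `poll_ready` once per drawn frame; if nothing has arrived it costs a single system call and never stalls rendering.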

This is part one of the series. I will keep improving this tool until it is more convenient to use and the code is clear; by the next article, the tool should have made great progress.