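Building and using an OpenCV C# development environment in Visual Studio is very convenient: the required packages can be added almost entirely with the mouse (for example through the NuGet package manager), with hardly any typing.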

Developing with OpenCV in C# lets a project grow directly into an industrial software product rather than remaining a laboratory program. Most camera and video-device manufacturers provide C# development interfaces, which makes it easy to combine their hardware with OpenCV.

OpenCV borrows Matlab's design idea of using the matrix as its basic data type. This article therefore also starts from matrices, skipping the most basic matrix concepts.

1 Understanding the attributes of the OpenCV matrix Mat

To learn a development component, first understand its properties and methods.

1.1 Code that lists the Mat attributes

```csharp
using System;
using System.IO;
using System.Text;
using System.Collections.Generic;
using System.Windows.Forms;
using System.Drawing;
using System.Drawing.Imaging;
using System.Drawing.Drawing2D;
using System.Runtime.InteropServices;
using OpenCvSharp;
using OpenCvSharp.Extensions;

/// <summary>
/// Some OpenCvSharp extension functions
/// </summary>
public static partial class CVUtility
{
    /// <summary>
    /// Lists the main properties and methods of a Mat as an HTML table
    /// </summary>
    public static string Attributes(Mat src)
    {
        StringBuilder sb = new StringBuilder();
        sb.AppendLine("<html>");
        sb.AppendLine("<style>");
        sb.AppendLine("td { padding:5px;font-size:12px; } ");
        sb.AppendLine(".atd { text-align:right;white-space:nowrap; } ");
        sb.AppendLine("</style>");
        sb.AppendLine("<table width='100%' border='1' bordercolor='#AAAAAA' style='border-collapse:collapse;'>");
        // Properties (5 rows)
        sb.AppendLine("<tr><td rowspan='5'>Properties</td><td class='atd'>Data (IntPtr, start of data): </td><td>" + src.Data + "</td></tr>");
        sb.AppendLine("<tr><td class='atd'>Rows (= Height): </td><td>" + src.Rows + "=" + src.Height + "</td></tr>");
        sb.AppendLine("<tr><td class='atd'>Cols (= Width): </td><td>" + src.Cols + "=" + src.Width + "</td></tr>");
        sb.AppendLine("<tr><td class='atd'>Size (Width x Height): </td><td>" + src.Size().Width + "x" + src.Size().Height + "</td></tr>");
        sb.AppendLine("<tr><td class='atd'>Dims (number of dimensions): </td><td>" + src.Dims + "</td></tr>");
        // Methods (7 rows)
        sb.AppendLine("<tr><td rowspan='7'>Methods</td><td class='atd'>Channels (number of channels): </td><td>" + src.Channels() + "</td></tr>");
        sb.AppendLine("<tr><td class='atd'>Depth (channel depth): </td><td>" + src.Depth() + "</td></tr>");
        sb.AppendLine("<tr><td class='atd'>ElemSize (bytes per element): </td><td>" + src.ElemSize() + "</td></tr>");
        sb.AppendLine("<tr><td class='atd'>ElemSize1 (bytes per channel): </td><td>" + src.ElemSize1() + "</td></tr>");
        sb.AppendLine("<tr><td class='atd'>Step (bytes per row): </td><td>" + src.Step() + "</td></tr>");
        sb.AppendLine("<tr><td class='atd'>Step1 (channel values per row): </td><td>" + src.Step1() + "</td></tr>");
        sb.AppendLine("<tr><td class='atd'>Type (matrix type): </td><td>" + src.Type() + "</td></tr>");
        sb.AppendLine("</table>");
        sb.AppendLine("</html>");
        return sb.ToString();
    }
}
```

1.2 Display of Mat properties

```csharp
using System;
using System.IO;
using System.Collections;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Windows.Forms;
using System.Drawing.Imaging;
using OpenCvSharp;

namespace Legalsoft.OpenCv.Train
{
    public partial class Form1 : Form
    {
        private void button1_Click(object sender, EventArgs e)
        {
            // Load 101.jpg from the program folder, keeping the file's color format and bit depth
            using (Mat src = new Mat(Path.Combine(Application.StartupPath, "101.jpg"), ImreadModes.AnyColor | ImreadModes.AnyDepth))
            {
                // webBrowser1 is a WebBrowser control placed on the form
                webBrowser1.DocumentText = CVUtility.Attributes(src);
            }
        }
    }
}
```

1.3 Output of the attribute function

1.4 Explanation of the properties and common methods

1.4.1 Data

A pointer of type IntPtr that points to the start address of the Mat data. It is rarely needed.

1.4.2 Rows or Height

The number of rows of the Mat, which is also the height of the image in pixels.

1.4.3 Cols or Width

The number of columns of the Mat, which is also the width of the image in pixels.

1.4.4 Size()

Size() returns a Size structure made up of Width and Height.

1.4.5 Dims

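The number of dimensions of the Mat: a two-dimensional matrix has Dims = 2 and a three-dimensional one has Dims = 3. Channels are not counted as a dimension, so an image, even a multi-channel color image, reports Dims = 2.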

1.4.6 Channels()

The number of channels in each element of the Mat.

For example, a common RGB color image has Channels = 3.

A grayscale image has only one gray component, so Channels = 1.

1.4.7 Depth()

The precision (bit depth) of each channel of each pixel.

In OpenCV, Mat.Depth() returns a number from 0 to 6 that identifies the data type of each channel.

The correspondence is as follows:

CV_8U=0

CV_8S=1

CV_16U=2

CV_16S=3

CV_32S=4

CV_32F=5

CV_64F=6

Here U means unsigned, S means signed and F means floating point; the number is the bit width of one channel value. (OpenCV 4 also defines CV_16F = 7 for half-precision floats.)

1.4.8 ElemSize() and ElemSize1()

The number of bytes occupied by each element of the matrix, all channels included.

If the data type of the Mat is CV_8UC1, then ElemSize = 1.

If it is CV_8UC3 or CV_8SC3, then ElemSize = 3.

If it is CV_16UC3 or CV_16SC3, then ElemSize = 6.

ElemSize is therefore measured in bytes and counts every channel of the element.

ElemSize1() is the size in bytes of a single channel of an element, so:

ElemSize1 = ElemSize / Channels
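The relationship between depth, channel count, ElemSize and ElemSize1 is plain arithmetic. The sketch below reproduces it without OpenCvSharp; the class and method names are hypothetical, and the bytes-per-channel table follows the depth codes CV_8U = 0 to CV_64F = 6 listed in 1.4.7.

```csharp
using System;

// Hypothetical helper mirroring how ElemSize() and ElemSize1() follow from
// the depth code (CV_8U = 0 ... CV_64F = 6) and the number of channels.
public static class ElemSizeDemo
{
    // Bytes per channel value for depth codes 0..6 (8U, 8S, 16U, 16S, 32S, 32F, 64F).
    private static readonly int[] BytesPerChannel = { 1, 1, 2, 2, 4, 4, 8 };

    // Size in bytes of one channel of an element (what ElemSize1() reports).
    public static int ElemSize1(int depth)
    {
        if (depth < 0 || depth >= BytesPerChannel.Length)
            throw new ArgumentOutOfRangeException(nameof(depth));
        return BytesPerChannel[depth];
    }

    // Size in bytes of a whole element, all channels included (what ElemSize() reports).
    public static int ElemSize(int depth, int channels) => ElemSize1(depth) * channels;
}
```

For CV_16UC3, ElemSize(2, 3) gives 6 and ElemSize1(2) gives 2, matching the example above.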

1.4.9 Step() and Step1()

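Step() is the number of bytes in each row of the Mat. For a continuous matrix it equals the total size of all elements in one row (Cols × ElemSize); a Mat that is an ROI of a larger image keeps the larger image's row length.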

Step1() is the same row length counted in single-channel values instead of bytes, so:

Step1 = Step / ElemSize1

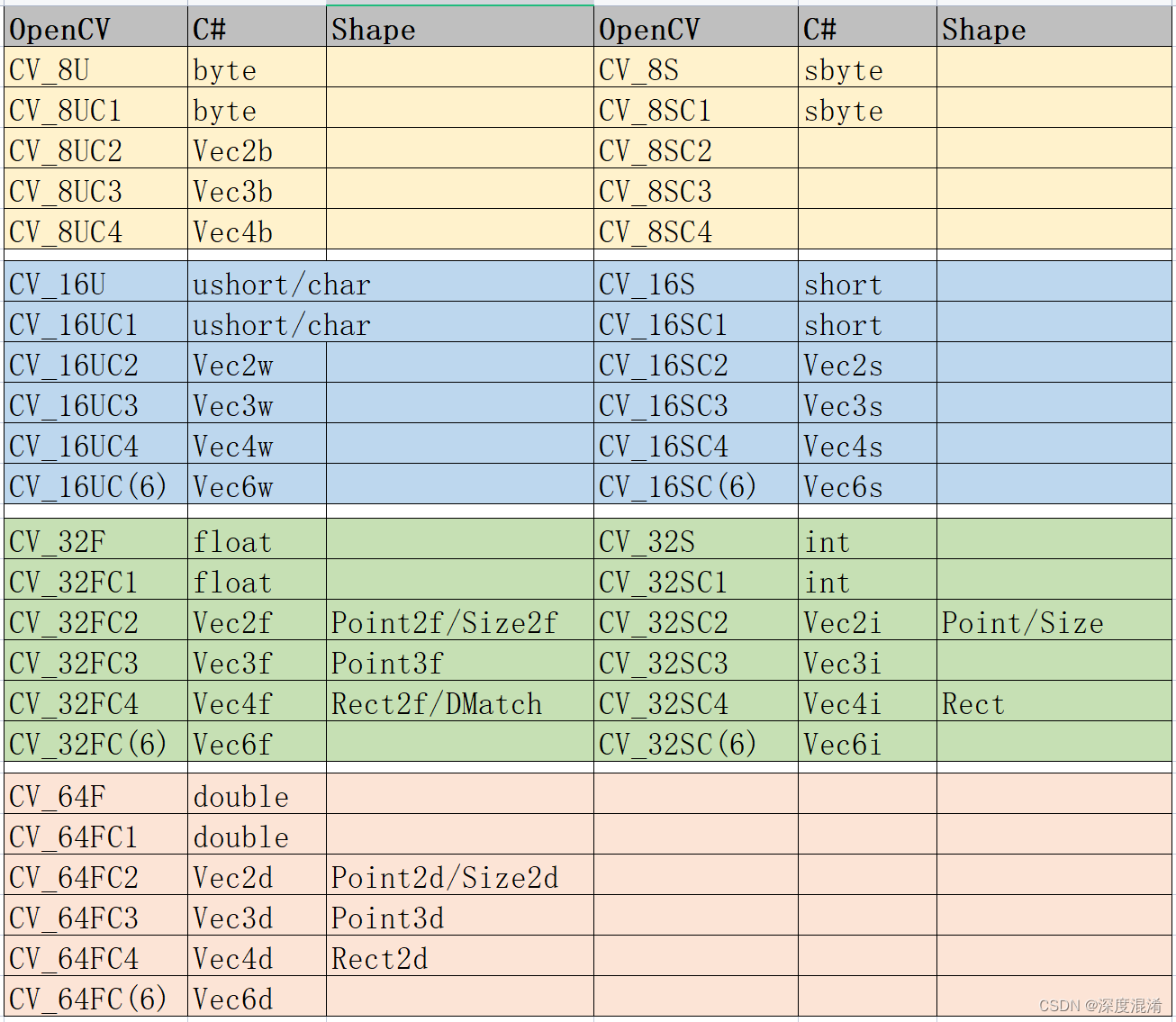
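For a continuous Mat (no padding at the end of each row), Step and Step1 follow directly from Cols and the element sizes, and together with ElemSize they locate any element: element (y, x) starts y × Step + x × ElemSize bytes after Data. A plain C# sketch under that continuity assumption; the class name is hypothetical and OpenCvSharp is not used.

```csharp
using System;

// Hypothetical helper: row step and byte offsets for a continuous Mat.
public static class StepDemo
{
    // Step(): bytes per row = number of columns x bytes per element.
    public static long Step(int cols, int elemSize) => (long)cols * elemSize;

    // Step1(): the same row length counted in single-channel values.
    public static long Step1(long step, int elemSize1) => step / elemSize1;

    // Byte offset of element (y, x) from the Data pointer.
    public static long Offset(int y, int x, long step, int elemSize) =>
        y * step + (long)x * elemSize;
}
```

For a 640-pixel-wide CV_8UC3 image, Step(640, 3) is 1920 bytes; for a 100-pixel-wide CV_16UC3 image, Step(100, 6) is 600 bytes and Step1(600, 2) is 300.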

1.4.10 Type()

The type of the Mat, which encodes both the data type (depth) of the elements and the number of channels.
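Type() packs depth and channel count into a single integer using OpenCV's encoding (the CV_MAKETYPE macro in the C++ headers): type = depth + ((channels - 1) << 3). A plain C# sketch of that encoding and its inverse; the class name is hypothetical and it does not call OpenCvSharp.

```csharp
using System;

// Hypothetical helper reproducing OpenCV's type encoding for plain type values:
// type = depth + ((channels - 1) << 3), with depth CV_8U = 0 ... CV_64F = 6.
public static class MatTypeCodec
{
    private const int ChannelShift = 3;                      // CV_CN_SHIFT in OpenCV
    private const int DepthMask = (1 << ChannelShift) - 1;   // 7

    public static int MakeType(int depth, int channels) =>
        (depth & DepthMask) + ((channels - 1) << ChannelShift);

    // Lower 3 bits hold the depth code.
    public static int Depth(int type) => type & DepthMask;

    // Remaining bits hold (channels - 1).
    public static int Channels(int type) => (type >> ChannelShift) + 1;
}
```

For example, CV_8UC3 is MakeType(0, 3) = 16 and CV_32FC3 is MakeType(5, 3) = 21.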

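1.5 Definition of MatType

The following dictionary maps C# element types to MatType values: scalar types map to single-channel types, while the VecN, Point, Size and Rect types map to multi-channel types.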

```csharp
/// <summary>
/// typeof(T) -> MatType
/// </summary>
protected static readonly IReadOnlyDictionary<Type, MatType> TypeMap = new Dictionary<Type, MatType>
{
    [typeof(byte)] = MatType.CV_8UC1,
    [typeof(sbyte)] = MatType.CV_8SC1,
    [typeof(short)] = MatType.CV_16SC1,
    [typeof(char)] = MatType.CV_16UC1,
    [typeof(ushort)] = MatType.CV_16UC1,
    [typeof(int)] = MatType.CV_32SC1,
    [typeof(float)] = MatType.CV_32FC1,
    [typeof(double)] = MatType.CV_64FC1,
    [typeof(Vec2b)] = MatType.CV_8UC2,
    [typeof(Vec3b)] = MatType.CV_8UC3,
    [typeof(Vec4b)] = MatType.CV_8UC4,
    [typeof(Vec6b)] = MatType.CV_8UC(6),
    [typeof(Vec2s)] = MatType.CV_16SC2,
    [typeof(Vec3s)] = MatType.CV_16SC3,
    [typeof(Vec4s)] = MatType.CV_16SC4,
    [typeof(Vec6s)] = MatType.CV_16SC(6),
    [typeof(Vec2w)] = MatType.CV_16UC2,
    [typeof(Vec3w)] = MatType.CV_16UC3,
    [typeof(Vec4w)] = MatType.CV_16UC4,
    [typeof(Vec6w)] = MatType.CV_16UC(6),
    [typeof(Vec2i)] = MatType.CV_32SC2,
    [typeof(Vec3i)] = MatType.CV_32SC3,
    [typeof(Vec4i)] = MatType.CV_32SC4,
    [typeof(Vec6i)] = MatType.CV_32SC(6),
    [typeof(Vec2f)] = MatType.CV_32FC2,
    [typeof(Vec3f)] = MatType.CV_32FC3,
    [typeof(Vec4f)] = MatType.CV_32FC4,
    [typeof(Vec6f)] = MatType.CV_32FC(6),
    [typeof(Vec2d)] = MatType.CV_64FC2,
    [typeof(Vec3d)] = MatType.CV_64FC3,
    [typeof(Vec4d)] = MatType.CV_64FC4,
    [typeof(Vec6d)] = MatType.CV_64FC(6),
    [typeof(Point)] = MatType.CV_32SC2,
    [typeof(Point2f)] = MatType.CV_32FC2,
    [typeof(Point2d)] = MatType.CV_64FC2,
    [typeof(Point3i)] = MatType.CV_32SC3,
    [typeof(Point3f)] = MatType.CV_32FC3,
    [typeof(Point3d)] = MatType.CV_64FC3,
    [typeof(Size)] = MatType.CV_32SC2,
    [typeof(Size2f)] = MatType.CV_32FC2,
    [typeof(Size2d)] = MatType.CV_64FC2,
    [typeof(Rect)] = MatType.CV_32SC4,
    [typeof(Rect2f)] = MatType.CV_32FC4,
    [typeof(Rect2d)] = MatType.CV_64FC4,
    [typeof(DMatch)] = MatType.CV_32FC4,
};
```

2 Create a Mat instance

There are as many as 15 ways to create a Mat instance; this section covers the frequently used ones.

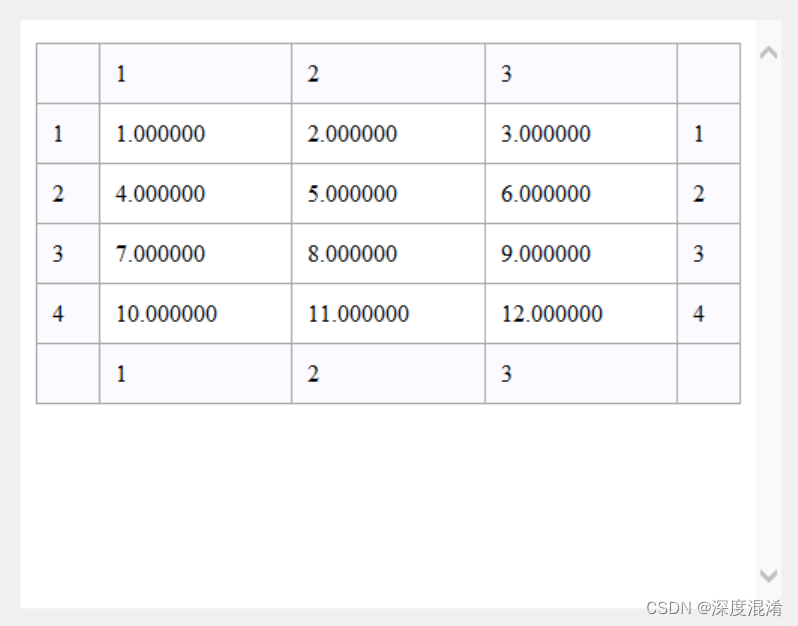

2.1 Create Mat from data (array)

One-dimensional, two-dimensional and higher-dimensional matrices can all be created from arrays.

```csharp
using System;
using System.IO;
using System.Collections;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Windows.Forms;
using System.Drawing.Imaging;
using OpenCvSharp;

namespace Legalsoft.OpenCv.Train
{
    public partial class Form1 : Form
    {
        private void button1_Click(object sender, EventArgs e)
        {
            // 4 x 3 matrix of doubles; MatType.CV_64F matches the C# double type
            double[,] a = new double[4, 3] {
                { 1, 2, 3 },
                { 4, 5, 6 },
                { 7, 8, 9 },
                { 10, 11, 12 }
            };
            Mat src = new Mat(4, 3, MatType.CV_64F, a, 0);
            webBrowser1.DocumentText = CVUtility.ToHtmlTable(src);
        }
    }
}
```
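The array constructor above lines up with the matrix because a C# rectangular array and a continuous single-channel Mat both store elements row by row, so element (y, x) sits at flat index y × cols + x. A plain C# sketch (no OpenCvSharp; names hypothetical) that flattens the same 4 × 3 array with Buffer.BlockCopy shows that order.

```csharp
using System;

public static class RowMajorDemo
{
    // Copies a rectangular double[,] into a flat array in memory (row-major) order.
    public static double[] Flatten(double[,] a)
    {
        var flat = new double[a.Length];
        // BlockCopy counts bytes, so multiply the element count by sizeof(double).
        Buffer.BlockCopy(a, 0, flat, 0, a.Length * sizeof(double));
        return flat;
    }

    // Flat index of element (y, x) in a row-major matrix with `cols` columns.
    public static int Index(int y, int x, int cols) => y * cols + x;
}
```

With the array from the example, the value 6 at row 1, column 2 lands at flat index 5.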

The source code of the ToHtmlTable method for displaying the matrix is:

```csharp
using System;
using System.Text;
using System.Collections;
using System.Collections.Generic;
using OpenCvSharp;
using OpenCvSharp.Extensions;

/// <summary>
/// Some OpenCvSharp extension functions
/// </summary>
public static partial class CVUtility
{
    /// <summary>
    /// Outputs the matrix as an HTML table
    /// </summary>
    /// <param name="src">Single-channel CV_64F matrix (values are read with At&lt;double&gt;)</param>
    /// <returns>HTML text of the table</returns>
    public static string ToHtmlTable(Mat src)
    {
        StringBuilder sb = new StringBuilder();
        sb.AppendLine("<html>");
        sb.AppendLine("<style>");
        sb.AppendLine("td { padding:10px; } ");
        sb.AppendLine(".atd { background-color:#FAFAFF; } ");
        sb.AppendLine("</style>");
        sb.AppendLine("<table width='100%' border='1' bordercolor='#AAAAAA' style='border-collapse:collapse;'>");
        for (int y = 0; y < src.Height; y++)
        {
            if (y == 0)
            {
                // Header row: column numbers
                sb.AppendLine("<tr class='atd'>");
                sb.Append("<td class='atd'></td>");
                for (int x = 0; x < src.Width; x++)
                {
                    sb.AppendFormat("<td class='atd'>{0:D}</td>", (x + 1));
                }
                sb.Append("<td class='atd'></td>");
                sb.AppendLine("</tr>");
            }
            // Data row, framed by its row number on both sides
            sb.AppendLine("<tr>");
            sb.AppendFormat("<td class='atd'>{0:D}</td>", (y + 1));
            for (int x = 0; x < src.Width; x++)
            {
                sb.AppendFormat("<td>{0:F6}</td>", src.At<double>(y, x));
            }
            sb.AppendFormat("<td class='atd'>{0:D}</td>", (y + 1));
            sb.AppendLine("</tr>");
            if (y == (src.Height - 1))
            {
                // Footer row: column numbers again
                sb.AppendLine("<tr class='atd'>");
                sb.Append("<td class='atd'></td>");
                for (int x = 0; x < src.Width; x++)
                {
                    sb.AppendFormat("<td class='atd'>{0:D}</td>", (x + 1));
                }
                sb.Append("<td class='atd'></td>");
                sb.AppendLine("</tr>");
            }
        }
        sb.AppendLine("</table>");
        sb.AppendLine("</html>");
        return sb.ToString();
    }
}
```

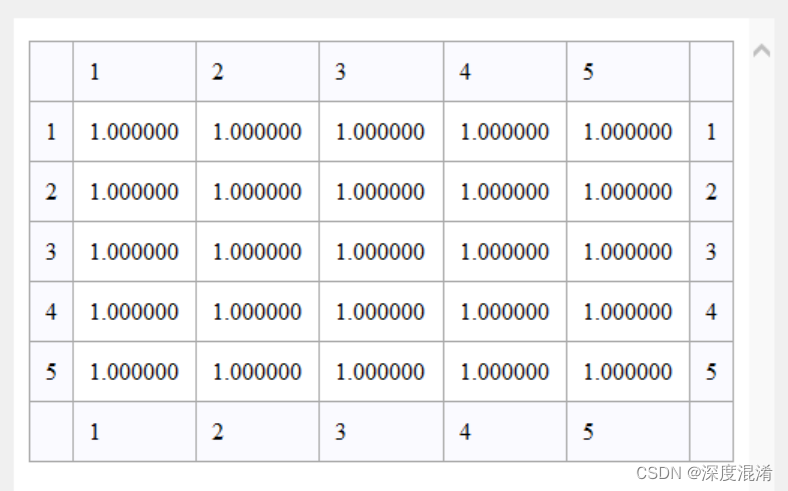

Matrix data display:

2.2 Read and create Mat from image file

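The C# code is simple:

```csharp
// Keep the file's own color format and bit depth
Mat src = Cv2.ImRead(imageFileName, ImreadModes.AnyColor | ImreadModes.AnyDepth);

// or rely on the default flag, ImreadModes.Color
Mat color = Cv2.ImRead(imageFileName);
```

Definition of the Cv2.ImRead function:

```csharp
public static Mat ImRead(string fileName, ImreadModes flags = ImreadModes.Color)
```

Commonly used ImreadModes values:

ImreadModes.Color: the default; reads a 3-channel BGR color image and ignores any alpha channel.

ImreadModes.Grayscale: reads the image as a single-channel grayscale image.

ImreadModes.Unchanged: reads the image as stored, including the alpha channel.

ImreadModes.AnyDepth: returns a 16-bit or 32-bit image when the file has that depth; otherwise the image is converted to 8 bits.

ImreadModes.AnyColor: reads the image in any possible color format.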

In OpenCvSharp, Cv2.ImRead returns a two-dimensional Mat (Dims = 2): Rows is the image height, Cols is the image width, and each element holds one value per channel, so a color pixel is stored as three values (Channels() = 3). (In Python, the same color image appears as a three-dimensional NumPy array of shape height × width × 3.)

For an ordinary 8-bit image, each channel value ranges from 0 to 255.

Note in particular that Cv2.ImRead stores the channels in B, G, R order (blue, green, red), not R, G, B.

2.3 Get a part of the picture (usually a rectangle) to create a Mat

Image processing is often applied to only part of an image. This part is usually a rectangle, but it may also be a circle, an ellipse or an irregular shape.

The part of the image selected for processing is called the ROI (region of interest).

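```csharp
// Source image (LoadImage is a CVUtility helper not shown in this article)
Mat src = CVUtility.LoadImage("stars/roi/301.jpg");

// Define the ROI: a centered rectangle, half the width and half the height of the image
int w = src.Width / 2;
int h = src.Height / 2;
int x = src.Width / 4;
int y = src.Height / 4;
Rect rect = new Rect(x, y, w, h);

// Extract the ROI
Mat dst = new Mat(src, rect);
```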
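new Mat(src, rect) does not copy pixels: the ROI is a new header pointing into the parent's buffer (call Clone() when an independent copy is needed). Its data starts at an offset inside the parent and its Step() stays equal to the parent's, so the ROI is generally not continuous. A plain C# sketch of that arithmetic; the names are hypothetical, and the usage numbers assume a 640 × 480 CV_8UC3 source image.

```csharp
using System;

public static class RoiDemo
{
    // Offset, in bytes, of the ROI's first element from the parent's Data pointer.
    public static long DataOffset(int roiX, int roiY, long parentStep, int elemSize) =>
        roiY * parentStep + (long)roiX * elemSize;

    // The ROI keeps the parent's step, so its rows are gap-free only when it
    // spans the parent's full width, or when it consists of a single row.
    public static bool IsContinuous(int roiWidth, int roiHeight, int parentCols) =>
        roiWidth == parentCols || roiHeight == 1;
}
```

For the centered ROI (160, 120, 320, 240) of a 640 × 480 CV_8UC3 image, the parent step is 1920 bytes, DataOffset(160, 120, 1920, 3) is 230880, and the ROI is not continuous.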

2.4 Some special matrices

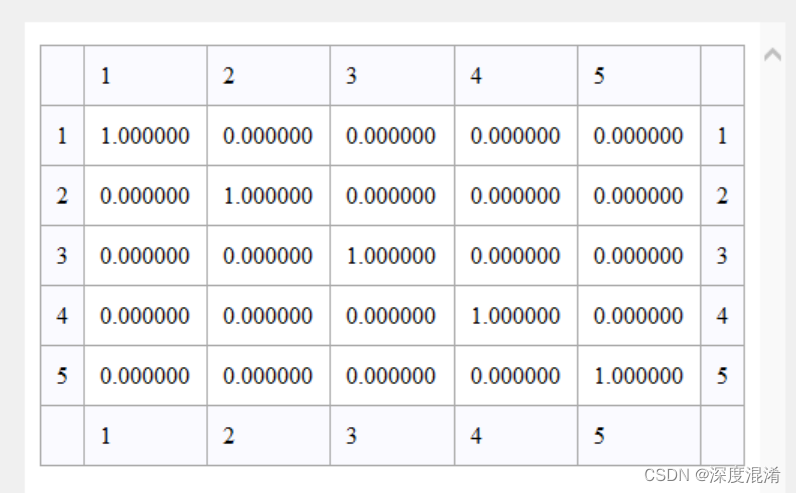

2.4.1 Identity matrix

```csharp
// 5 x 5 identity matrix
Mat m1 = Mat.Eye(new OpenCvSharp.Size(5, 5), MatType.CV_64F);
webBrowser1.DocumentText = CVUtility.ToHtmlTable(m1);
```

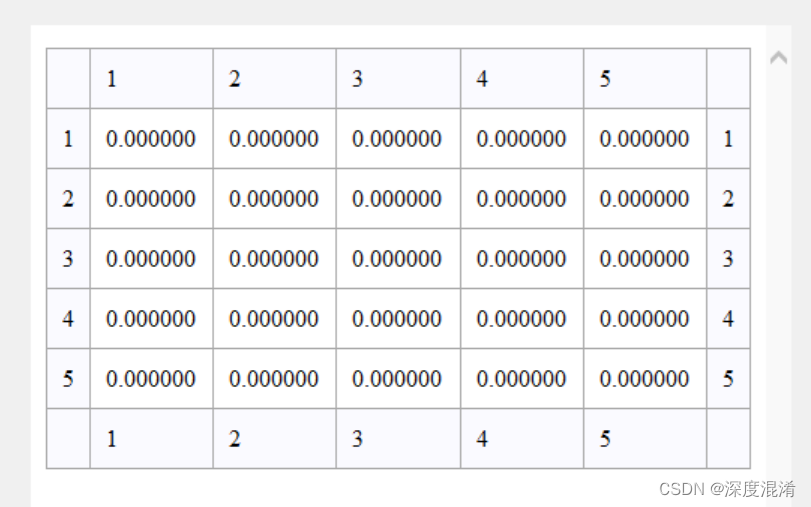

2.4.2 All-zeros matrix

```csharp
// Matrix whose elements are all 0
Mat m2 = Mat.Zeros(new OpenCvSharp.Size(5, 5), MatType.CV_64F);
webBrowser1.DocumentText = CVUtility.ToHtmlTable(m2);
```

2.4.3 All-ones matrix

```csharp
// Matrix whose elements are all 1
Mat m3 = Mat.Ones(new OpenCvSharp.Size(5, 5), MatType.CV_64F);
webBrowser1.DocumentText = CVUtility.ToHtmlTable(m3);
```

3 Multiple ways to access matrix elements (image pixels)

Below are 3 methods of accessing image pixels, each demonstrated by swapping the red and blue channels.

3.1 Get/Set (slow)

```csharp
/// <summary>
/// Plain access with Get/Set (slow)
/// </summary>
/// <param name="src">8-bit, 3-channel (CV_8UC3) BGR image, modified in place</param>
public static void Search_GetSet(Mat src)
{
    for (int y = 0; y < src.Height; y++)
    {
        for (int x = 0; x < src.Width; x++)
        {
            Vec3b color = src.Get<Vec3b>(y, x);
            byte temp = color.Item0;
            color.Item0 = color.Item2; // B <- R
            color.Item2 = temp;        // R <- B
            src.Set<Vec3b>(y, x, color);
        }
    }
}
```

3.2 GenericIndexer (reasonably fast)

```csharp
/// <summary>
/// Access pixels through the generic indexer
/// GenericIndexer (reasonably fast)
/// </summary>
/// <param name="src">8-bit, 3-channel (CV_8UC3) BGR image, modified in place</param>
public static void Search_Indexer(Mat src)
{
    Mat.Indexer<Vec3b> indexer = src.GetGenericIndexer<Vec3b>();
    for (int y = 0; y < src.Height; y++)
    {
        for (int x = 0; x < src.Width; x++)
        {
            Vec3b color = indexer[y, x];
            byte temp = color.Item0;
            color.Item0 = color.Item2; // B <- R
            color.Item2 = temp;        // R <- B
            indexer[y, x] = color;
        }
    }
}
```

3.3 TypeSpecificMat (faster)

```csharp
/// <summary>
/// TypeSpecificMat (faster)
/// </summary>
/// <param name="src">8-bit, 3-channel (CV_8UC3) BGR image, modified in place</param>
public static void Search_TypeSpecific(Mat src)
{
    // Typed Mat<Vec3b> view of src
    Mat<Vec3b> mat3 = new Mat<Vec3b>(src);
    var indexer = mat3.GetIndexer();
    for (int y = 0; y < src.Height; y++)
    {
        for (int x = 0; x < src.Width; x++)
        {
            Vec3b color = indexer[y, x];
            byte temp = color.Item0;
            color.Item0 = color.Item2; // B <- R
            color.Item2 = temp;        // R <- B
            indexer[y, x] = color;
        }
    }
}
```
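All three methods perform the same operation. To show what happens underneath, here is a plain C# sketch of the same red/blue swap on a raw interleaved buffer with an explicit row stride. The buffer layout (3 bytes per pixel, blue first) matches a CV_8UC3 Mat, but the method and its parameters are hypothetical and it does not use OpenCvSharp.

```csharp
using System;

public static class RawSwapDemo
{
    // Swaps the B and R bytes of every pixel in an interleaved 8-bit, 3-channel buffer.
    // `stride` is the number of bytes per row (like Mat.Step()) and may exceed width * 3.
    public static void SwapRedBlue(byte[] data, int width, int height, int stride)
    {
        for (int y = 0; y < height; y++)
        {
            int row = y * stride;              // first byte of row y
            for (int x = 0; x < width; x++)
            {
                int i = row + x * 3;           // offset of the B byte of pixel (y, x)
                byte temp = data[i];           // B
                data[i] = data[i + 2];         // B <- R
                data[i + 2] = temp;            // R <- B
            }
        }
    }
}
```

Padding bytes at the end of each row are skipped because the loop only visits width × 3 bytes per row.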

4 Converting between Mat and other image data

After OpenCvSharp has been used for calculation or image processing, the result usually has to be displayed or passed to other components, so a Mat often needs to be converted to image data in other formats, and vice versa.
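One detail these conversions must handle is row padding: a GDI+ Bitmap rounds each scan line (its Stride) up to a four-byte boundary, while a continuous 8-bit Mat packs rows with no padding, so rows may need to be copied one at a time. A plain C# sketch of the two row lengths; the helper names are hypothetical.

```csharp
using System;

public static class StrideDemo
{
    // GDI+ rounds each scan line up to a four-byte boundary.
    public static int BitmapStride(int width, int bitsPerPixel) =>
        ((width * bitsPerPixel + 31) / 32) * 4;

    // A continuous 8-bit Mat packs rows with no padding.
    public static int ContinuousMatStep(int width, int channels) => width * channels;
}
```

For a 5-pixel-wide 24-bit image, the Bitmap stride is 16 bytes while the Mat step is 15, so the two buffers cannot simply be copied as one block.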

4.1 Mat -> System.Drawing.Bitmap

```csharp
using System.Drawing;
using OpenCvSharp;
using OpenCvSharp.Extensions;

Mat mat = new Mat("demo.jpg", ImreadModes.Color);
Bitmap bitmap = OpenCvSharp.Extensions.BitmapConverter.ToBitmap(mat);
```

4.2 System.Drawing.Bitmap -> Mat

```csharp
using System.Drawing;
using OpenCvSharp;
using OpenCvSharp.Extensions;

Bitmap bitmap = new Bitmap("demo.png");
Mat mat = OpenCvSharp.Extensions.BitmapConverter.ToMat(bitmap);
```

4.3 Mat -> byte[]

```csharp
Mat mat = new Mat("demo.png", ImreadModes.Color);

// Encode the image as PNG into a byte array
byte[] bytes1 = mat.ToBytes(".png");

// or
Cv2.ImEncode(".png", mat, out byte[] bytes2);
```
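ToBytes(".png") and Cv2.ImEncode return an encoded PNG file in memory, not raw pixel data; Cv2.ImDecode turns such a buffer back into a Mat. A quick way to confirm that a buffer is a PNG is its fixed 8-byte signature. The helper below is a hypothetical plain-C# check, not part of OpenCvSharp.

```csharp
using System;

public static class PngCheck
{
    // Every PNG file starts with these 8 bytes (PNG specification).
    private static readonly byte[] Signature = { 137, 80, 78, 71, 13, 10, 26, 10 };

    public static bool HasPngSignature(byte[] bytes)
    {
        if (bytes == null || bytes.Length < Signature.Length) return false;
        for (int i = 0; i < Signature.Length; i++)
        {
            if (bytes[i] != Signature[i]) return false;
        }
        return true;
    }
}
```

Applied to bytes1 or bytes2 above, the check should succeed, while a raw pixel dump would normally fail it.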

4.4 Convert a color image to grayscale or to other color spaces

The most commonly used function is:

```csharp
Cv2.CvtColor(InputArray src, OutputArray dst, ColorConversionCodes code, int dstCn = 0);
```

A common example:

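```csharp
/// <summary>
/// Converts to a grayscale image (8 bit)
/// </summary>
/// <param name="src">8-bit BGR color image</param>
/// <returns>Single-channel 8-bit grayscale image</returns>
public static Mat ToGray(Mat src)
{
    Mat dst = new Mat();
    // Convert to a single-channel 8-bit grayscale image (required)
    Cv2.CvtColor(src, dst, ColorConversionCodes.BGR2GRAY);
    return dst;
}
```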
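For BGR2GRAY, OpenCV documents the luminance formula Y = 0.299 R + 0.587 G + 0.114 B. A floating-point sketch of that formula for a single pixel follows; the helper is hypothetical, and OpenCV itself uses an optimized fixed-point implementation, so individual results may differ by one gray level.

```csharp
using System;

public static class GrayDemo
{
    // Gray value of one 8-bit pixel given in OpenCV's B, G, R order.
    public static byte BgrToGray(byte b, byte g, byte r)
    {
        double y = 0.299 * r + 0.587 * g + 0.114 * b;
        return (byte)Math.Round(y, MidpointRounding.AwayFromZero);
    }
}
```

Note the argument order: blue comes first, matching the channel order of an image loaded with Cv2.ImRead.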

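ColorConversionCodes has a great many members; it is enough to remember a few commonly used ones. The list below is the C++ definition from OpenCV's imgproc.hpp; in OpenCvSharp the same members appear without the COLOR_ prefix (for example ColorConversionCodes.BGR2GRAY, as used in ToGray above).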

```cpp
enum ColorConversionCodes {
    COLOR_BGR2BGRA = 0, //!< add alpha channel to RGB or BGR image
    COLOR_RGB2RGBA = COLOR_BGR2BGRA,
    COLOR_BGRA2BGR = 1, //!< remove alpha channel from RGB or BGR image
    COLOR_RGBA2RGB = COLOR_BGRA2BGR,
    COLOR_BGR2RGBA = 2, //!< convert between RGB and BGR color spaces (with or without alpha channel)
    COLOR_RGB2BGRA = COLOR_BGR2RGBA,
    COLOR_RGBA2BGR = 3,
    COLOR_BGRA2RGB = COLOR_RGBA2BGR,
    COLOR_BGR2RGB = 4,
    COLOR_RGB2BGR = COLOR_BGR2RGB,
    COLOR_BGRA2RGBA = 5,
    COLOR_RGBA2BGRA = COLOR_BGRA2RGBA,
    COLOR_BGR2GRAY = 6, //!< convert between RGB/BGR and grayscale, @ref color_convert_rgb_gray "color conversions"
    COLOR_RGB2GRAY = 7,
    COLOR_GRAY2BGR = 8,
    COLOR_GRAY2RGB = COLOR_GRAY2BGR,
    COLOR_GRAY2BGRA = 9,
    COLOR_GRAY2RGBA = COLOR_GRAY2BGRA,
    COLOR_BGRA2GRAY = 10,
    COLOR_RGBA2GRAY = 11,
    COLOR_BGR2BGR565 = 12, //!< convert between RGB/BGR and BGR565 (16-bit images)
    COLOR_RGB2BGR565 = 13,
    COLOR_BGR5652BGR = 14,
    COLOR_BGR5652RGB = 15,
    COLOR_BGRA2BGR565 = 16,
    COLOR_RGBA2BGR565 = 17,
    COLOR_BGR5652BGRA = 18,
    COLOR_BGR5652RGBA = 19,
    COLOR_GRAY2BGR565 = 20, //!< convert between grayscale to BGR565 (16-bit images)
    COLOR_BGR5652GRAY = 21,
    COLOR_BGR2BGR555 = 22, //!< convert between RGB/BGR and BGR555 (16-bit images)
    COLOR_RGB2BGR555 = 23,
    COLOR_BGR5552BGR = 24,
    COLOR_BGR5552RGB = 25,
    COLOR_BGRA2BGR555 = 26,
    COLOR_RGBA2BGR555 = 27,
    COLOR_BGR5552BGRA = 28,
    COLOR_BGR5552RGBA = 29,
    COLOR_GRAY2BGR555 = 30, //!< convert between grayscale and BGR555 (16-bit images)
    COLOR_BGR5552GRAY = 31,
    COLOR_BGR2XYZ = 32, //!< convert RGB/BGR to CIE XYZ, @ref color_convert_rgb_xyz "color conversions"
    COLOR_RGB2XYZ = 33,
    COLOR_XYZ2BGR = 34,
    COLOR_XYZ2RGB = 35,
    COLOR_BGR2YCrCb = 36, //!< convert RGB/BGR to luma-chroma (aka YCC), @ref color_convert_rgb_ycrcb "color conversions"
    COLOR_RGB2YCrCb = 37,
    COLOR_YCrCb2BGR = 38,
    COLOR_YCrCb2RGB = 39,
    COLOR_BGR2HSV = 40, //!< convert RGB/BGR to HSV (hue saturation value), @ref color_convert_rgb_hsv "color conversions"
    COLOR_RGB2HSV = 41,
    COLOR_BGR2Lab = 44, //!< convert RGB/BGR to CIE Lab, @ref color_convert_rgb_lab "color conversions"
    COLOR_RGB2Lab = 45,
    COLOR_BGR2Luv = 50, //!< convert RGB/BGR to CIE Luv, @ref color_convert_rgb_luv "color conversions"
    COLOR_RGB2Luv = 51,
    COLOR_BGR2HLS = 52, //!< convert RGB/BGR to HLS (hue lightness saturation), @ref color_convert_rgb_hls "color conversions"
    COLOR_RGB2HLS = 53,
    COLOR_HSV2BGR = 54, //!< backward conversions to RGB/BGR
    COLOR_HSV2RGB = 55,
    COLOR_Lab2BGR = 56,
    COLOR_Lab2RGB = 57,
    COLOR_Luv2BGR = 58,
    COLOR_Luv2RGB = 59,
    COLOR_HLS2BGR = 60,
    COLOR_HLS2RGB = 61,
    COLOR_BGR2HSV_FULL = 66,
    COLOR_RGB2HSV_FULL = 67,
    COLOR_BGR2HLS_FULL = 68,
    COLOR_RGB2HLS_FULL = 69,
    COLOR_HSV2BGR_FULL = 70,
    COLOR_HSV2RGB_FULL = 71,
    COLOR_HLS2BGR_FULL = 72,
    COLOR_HLS2RGB_FULL = 73,
    COLOR_LBGR2Lab = 74,
    COLOR_LRGB2Lab = 75,
    COLOR_LBGR2Luv = 76,
    COLOR_LRGB2Luv = 77,
    COLOR_Lab2LBGR = 78,
    COLOR_Lab2LRGB = 79,
    COLOR_Luv2LBGR = 80,
    COLOR_Luv2LRGB = 81,
    COLOR_BGR2YUV = 82, //!< convert between RGB/BGR and YUV
    COLOR_RGB2YUV = 83,
    COLOR_YUV2BGR = 84,
    COLOR_YUV2RGB = 85,
    //! YUV 4:2:0 family to RGB
    COLOR_YUV2RGB_NV12 = 90,
    COLOR_YUV2BGR_NV12 = 91,
    COLOR_YUV2RGB_NV21 = 92,
    COLOR_YUV2BGR_NV21 = 93,
    COLOR_YUV420sp2RGB = COLOR_YUV2RGB_NV21,
    COLOR_YUV420sp2BGR = COLOR_YUV2BGR_NV21,
    COLOR_YUV2RGBA_NV12 = 94,
    COLOR_YUV2BGRA_NV12 = 95,
    COLOR_YUV2RGBA_NV21 = 96,
    COLOR_YUV2BGRA_NV21 = 97,
    COLOR_YUV420sp2RGBA = COLOR_YUV2RGBA_NV21,
    COLOR_YUV420sp2BGRA = COLOR_YUV2BGRA_NV21,
    COLOR_YUV2RGB_YV12 = 98,
    COLOR_YUV2BGR_YV12 = 99,
    COLOR_YUV2RGB_IYUV = 100,
    COLOR_YUV2BGR_IYUV = 101,
    COLOR_YUV2RGB_I420 = COLOR_YUV2RGB_IYUV,
    COLOR_YUV2BGR_I420 = COLOR_YUV2BGR_IYUV,
    COLOR_YUV420p2RGB = COLOR_YUV2RGB_YV12,
    COLOR_YUV420p2BGR = COLOR_YUV2BGR_YV12,
    COLOR_YUV2RGBA_YV12 = 102,
    COLOR_YUV2BGRA_YV12 = 103,
    COLOR_YUV2RGBA_IYUV = 104,
    COLOR_YUV2BGRA_IYUV = 105,
    COLOR_YUV2RGBA_I420 = COLOR_YUV2RGBA_IYUV,
    COLOR_YUV2BGRA_I420 = COLOR_YUV2BGRA_IYUV,
    COLOR_YUV420p2RGBA = COLOR_YUV2RGBA_YV12,
    COLOR_YUV420p2BGRA = COLOR_YUV2BGRA_YV12,
    COLOR_YUV2GRAY_420 = 106,
    COLOR_YUV2GRAY_NV21 = COLOR_YUV2GRAY_420,
    COLOR_YUV2GRAY_NV12 = COLOR_YUV2GRAY_420,
    COLOR_YUV2GRAY_YV12 = COLOR_YUV2GRAY_420,
    COLOR_YUV2GRAY_IYUV = COLOR_YUV2GRAY_420,
    COLOR_YUV2GRAY_I420 = COLOR_YUV2GRAY_420,
    COLOR_YUV420sp2GRAY = COLOR_YUV2GRAY_420,
    COLOR_YUV420p2GRAY = COLOR_YUV2GRAY_420,
    //! YUV 4:2:2 family to RGB
    COLOR_YUV2RGB_UYVY = 107,
    COLOR_YUV2BGR_UYVY = 108,
    //COLOR_YUV2RGB_VYUY = 109,
    //COLOR_YUV2BGR_VYUY = 110,
    COLOR_YUV2RGB_Y422 = COLOR_YUV2RGB_UYVY,
    COLOR_YUV2BGR_Y422 = COLOR_YUV2BGR_UYVY,
    COLOR_YUV2RGB_UYNV = COLOR_YUV2RGB_UYVY,
    COLOR_YUV2BGR_UYNV = COLOR_YUV2BGR_UYVY,
    COLOR_YUV2RGBA_UYVY = 111,
    COLOR_YUV2BGRA_UYVY = 112,
    //COLOR_YUV2RGBA_VYUY = 113,
    //COLOR_YUV2BGRA_VYUY = 114,
    COLOR_YUV2RGBA_Y422 = COLOR_YUV2RGBA_UYVY,
    COLOR_YUV2BGRA_Y422 = COLOR_YUV2BGRA_UYVY,
    COLOR_YUV2RGBA_UYNV = COLOR_YUV2RGBA_UYVY,
    COLOR_YUV2BGRA_UYNV = COLOR_YUV2BGRA_UYVY,
    COLOR_YUV2RGB_YUY2 = 115,
    COLOR_YUV2BGR_YUY2 = 116,
    COLOR_YUV2RGB_YVYU = 117,
    COLOR_YUV2BGR_YVYU = 118,
    COLOR_YUV2RGB_YUYV = COLOR_YUV2RGB_YUY2,
    COLOR_YUV2BGR_YUYV = COLOR_YUV2BGR_YUY2,
    COLOR_YUV2RGB_YUNV = COLOR_YUV2RGB_YUY2,
    COLOR_YUV2BGR_YUNV = COLOR_YUV2BGR_YUY2,
    COLOR_YUV2RGBA_YUY2 = 119,
    COLOR_YUV2BGRA_YUY2 = 120,
    COLOR_YUV2RGBA_YVYU = 121,
    COLOR_YUV2BGRA_YVYU = 122,
    COLOR_YUV2RGBA_YUYV = COLOR_YUV2RGBA_YUY2,
    COLOR_YUV2BGRA_YUYV = COLOR_YUV2BGRA_YUY2,
    COLOR_YUV2RGBA_YUNV = COLOR_YUV2RGBA_YUY2,
    COLOR_YUV2BGRA_YUNV = COLOR_YUV2BGRA_YUY2,
    COLOR_YUV2GRAY_UYVY = 123,
    COLOR_YUV2GRAY_YUY2 = 124,
    //CV_YUV2GRAY_VYUY = CV_YUV2GRAY_UYVY,
    COLOR_YUV2GRAY_Y422 = COLOR_YUV2GRAY_UYVY,
    COLOR_YUV2GRAY_UYNV = COLOR_YUV2GRAY_UYVY,
    COLOR_YUV2GRAY_YVYU = COLOR_YUV2GRAY_YUY2,
    COLOR_YUV2GRAY_YUYV = COLOR_YUV2GRAY_YUY2,
    COLOR_YUV2GRAY_YUNV = COLOR_YUV2GRAY_YUY2,
    //! alpha premultiplication
    COLOR_RGBA2mRGBA = 125,
    COLOR_mRGBA2RGBA = 126,
    //! RGB to YUV 4:2:0 family
    COLOR_RGB2YUV_I420 = 127,
    COLOR_BGR2YUV_I420 = 128,
    COLOR_RGB2YUV_IYUV = COLOR_RGB2YUV_I420,
    COLOR_BGR2YUV_IYUV = COLOR_BGR2YUV_I420,
    COLOR_RGBA2YUV_I420 = 129,
    COLOR_BGRA2YUV_I420 = 130,
    COLOR_RGBA2YUV_IYUV = COLOR_RGBA2YUV_I420,
    COLOR_BGRA2YUV_IYUV = COLOR_BGRA2YUV_I420,
    COLOR_RGB2YUV_YV12 = 131,
    COLOR_BGR2YUV_YV12 = 132,
    COLOR_RGBA2YUV_YV12 = 133,
    COLOR_BGRA2YUV_YV12 = 134,
    //! Demosaicing
    COLOR_BayerBG2BGR = 46,
    COLOR_BayerGB2BGR = 47,
    COLOR_BayerRG2BGR = 48,
    COLOR_BayerGR2BGR = 49,
    COLOR_BayerBG2RGB = COLOR_BayerRG2BGR,
    COLOR_BayerGB2RGB = COLOR_BayerGR2BGR,
    COLOR_BayerRG2RGB = COLOR_BayerBG2BGR,
    COLOR_BayerGR2RGB = COLOR_BayerGB2BGR,
    COLOR_BayerBG2GRAY = 86,
    COLOR_BayerGB2GRAY = 87,
    COLOR_BayerRG2GRAY = 88,
    COLOR_BayerGR2GRAY = 89,
    //! Demosaicing using Variable Number of Gradients
    COLOR_BayerBG2BGR_VNG = 62,
    COLOR_BayerGB2BGR_VNG = 63,
    COLOR_BayerRG2BGR_VNG = 64,
    COLOR_BayerGR2BGR_VNG = 65,
    COLOR_BayerBG2RGB_VNG = COLOR_BayerRG2BGR_VNG,
    COLOR_BayerGB2RGB_VNG = COLOR_BayerGR2BGR_VNG,
    COLOR_BayerRG2RGB_VNG = COLOR_BayerBG2BGR_VNG,
    COLOR_BayerGR2RGB_VNG = COLOR_BayerGB2BGR_VNG,
    //! Edge-Aware Demosaicing
    COLOR_BayerBG2BGR_EA = 135,
    COLOR_BayerGB2BGR_EA = 136,
    COLOR_BayerRG2BGR_EA = 137,
    COLOR_BayerGR2BGR_EA = 138,
    COLOR_BayerBG2RGB_EA = COLOR_BayerRG2BGR_EA,
    COLOR_BayerGB2RGB_EA = COLOR_BayerGR2BGR_EA,
    COLOR_BayerRG2RGB_EA = COLOR_BayerBG2BGR_EA,
    COLOR_BayerGR2RGB_EA = COLOR_BayerGB2BGR_EA,
    //! Demosaicing with alpha channel
    COLOR_BayerBG2BGRA = 139,
    COLOR_BayerGB2BGRA = 140,
    COLOR_BayerRG2BGRA = 141,
    COLOR_BayerGR2BGRA = 142,
    COLOR_BayerBG2RGBA = COLOR_BayerRG2BGRA,
    COLOR_BayerGB2RGBA = COLOR_BayerGR2BGRA,
    COLOR_BayerRG2RGBA = COLOR_BayerBG2BGRA,
    COLOR_BayerGR2RGBA = COLOR_BayerGB2BGRA,
    COLOR_COLORCVT_MAX = 143
};
```
