Neural Radiance Fields (NeRF): Principles, Challenges, and Future Prospects

1. Background

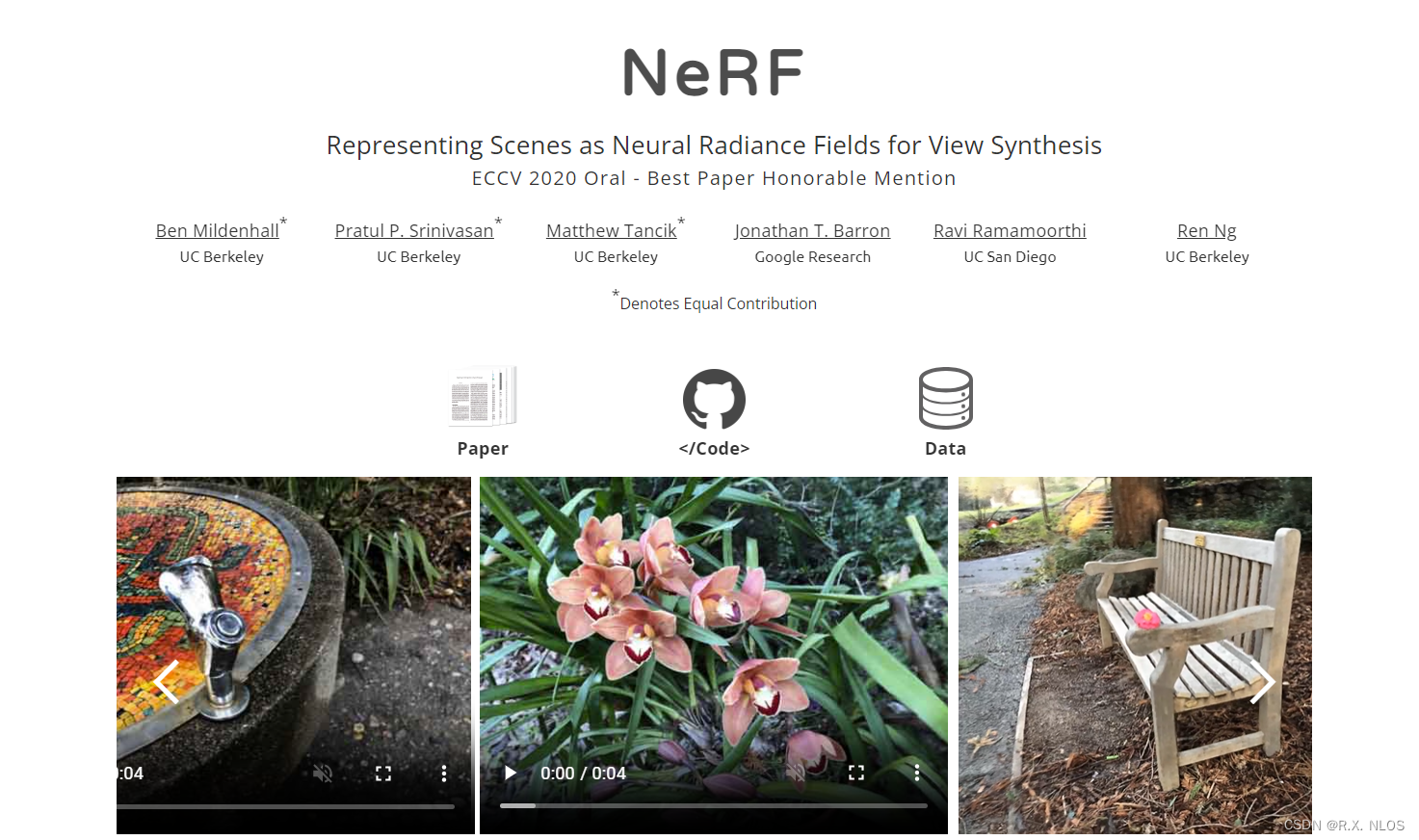

With the continuous development of deep learning and computer graphics, research at the intersection of artificial intelligence and graphics has attracted growing attention. Neural Radiance Fields (NeRF) is one of the most promising directions in this area: it combines computer graphics and deep learning to represent 3D scenes with neural networks and render them from new viewpoints.

2. Principles and Derivation

2.1 Basic Principles of Neural Radiance Fields

Neural Radiance Fields is a novel-view synthesis approach that uses a deep neural network to learn a continuous 3D scene representation. The goal of NeRF is to learn this representation from a set of 2D images with known camera poses and then render high-quality images from arbitrary new viewpoints. The key idea is to treat the neural network as a field function that maps a 3D position $(x, y, z)$ in the scene, together with a viewing direction, to a color and a volume density. With this mapping, NeRF can evaluate color and density at sample points along any camera ray and composite them into the final 2D image using volume rendering.
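Evaluating the field "along any camera ray" means working with discrete points on that ray. A ray can be written as the camera's optical center $o$ plus a depth $t$ times a direction $d$. The sketch below is a minimal illustration under stated assumptions: the helper name `sample_ray` is hypothetical, and the ray is assumed to be truncated to near and far bounds `t_near` and `t_far` with evenly spaced samples in between.

```python
import numpy as np

def sample_ray(o, d, t_near, t_far, n_samples):
    """Return depths t_i and points r(t_i) = o + t_i * d, evenly spaced in [t_near, t_far]."""
    t = np.linspace(t_near, t_far, n_samples)         # (N,) depths along the ray
    points = o[None, :] + t[:, None] * d[None, :]     # (N, 3) 3D points on the ray
    return t, points

o = np.array([0.0, 0.0, 0.0])   # camera optical center (assumed at the origin)
d = np.array([0.0, 0.0, 1.0])   # unit ray direction (assumed along +z)
t, pts = sample_ray(o, d, 2.0, 6.0, 5)
print(t)        # [2. 3. 4. 5. 6.]
print(pts[-1])  # [0. 0. 6.]
```

Each of these points, paired with the ray's direction, is what the network is queried on.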

2.2 Mathematical modeling and formula derivation

The neural radiance field uses a neural network $f_\theta$ to map a 3D position $(x, y, z)$ in the scene and a viewing direction $(\theta, \phi)$ to a color $C(x, y, z)$ and a volume density $\alpha(x, y, z)$ (written $\sigma$ in the original NeRF paper). The network parameters $\theta$ are learned by minimizing the difference between the pixel colors rendered by the model and the pixel colors of the input images.
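To make the mapping $f_\theta$ concrete, here is a minimal NumPy sketch of a field function. Everything in it is an assumption for illustration: the names `init_field` and `field`, the two-layer architecture, the random weights, and the choice of a sigmoid for color and a ReLU for density. Unlike the original NeRF network, it omits positional encoding and lets density depend on the viewing direction as well as position.

```python
import numpy as np

def init_field(hidden=32, seed=0):
    """Randomly initialize a tiny two-layer MLP standing in for f_theta (hypothetical)."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.5, size=(5, hidden)),  # inputs: x, y, z, theta, phi
        "b1": np.zeros(hidden),
        "W2": rng.normal(0.0, 0.5, size=(hidden, 4)),  # outputs: r, g, b, density
        "b2": np.zeros(4),
    }

def field(params, xyz, view_dir):
    """Map positions (N, 3) and viewing directions (N, 2) to colors (N, 3) and densities (N,)."""
    inp = np.concatenate([xyz, view_dir], axis=-1)            # (N, 5)
    h = np.maximum(inp @ params["W1"] + params["b1"], 0.0)    # ReLU hidden layer
    out = h @ params["W2"] + params["b2"]                     # (N, 4)
    color = 1.0 / (1.0 + np.exp(-out[:, :3]))                 # sigmoid keeps color in [0, 1]
    density = np.maximum(out[:, 3], 0.0)                      # ReLU keeps density non-negative
    return color, density

params = init_field()
color, density = field(params, np.zeros((4, 3)), np.zeros((4, 2)))
print(color.shape, density.shape)  # (4, 3) (4,)
```

The output activations matter: colors must stay in a valid range and density must never be negative, otherwise the transmittance in the rendering integral would grow instead of decay.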

Consider a ray $r(t) = o + t\,d$ in the scene, where $o$ is the optical center of the camera, $t$ is the depth along the ray, and $d$ is the ray direction. The color of the ray $r(t)$ is computed by integrating the color and density of all points along the ray:

$$C(r) = \int_0^{\infty} T(t)\, C(t)\, \alpha(t)\, dt,$$

where $C(t)$ and $\alpha(t)$ are the color and density at the point $r(t)$, and $T(t) = \exp\left(-\int_0^t \alpha(t')\, dt'\right)$ is the accumulated transmittance from the start of the ray to depth $t$, i.e., the probability that the ray travels to $t$ without being absorbed. To make the computation tractable, the continuous integral is discretized into a finite sum:

$$C(r) \approx \sum_{i=1}^N T_i\, C_i\, \alpha_i\, \Delta t_i,$$

where $N$ is the number of sample points along the ray, $\Delta t_i$ is the spacing between adjacent samples, and $T_i = \exp\left(-\sum_{j<i} \alpha_j\, \Delta t_j\right)$ is the discrete transmittance. The network parameters $\theta$ are learned by minimizing the difference between the predicted color $C(r)$ and the observed color in the input views:

$$\theta^* = \arg\min_\theta \sum_{r \in R} \lVert C(r) - C_\text{gt}(r) \rVert^2,$$

where $R$ is the set of rays cast through the pixels of the input views and $C_\text{gt}(r)$ is the ground-truth color of the pixel corresponding to ray $r$.
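The discrete sum and the training objective translate almost line by line into code. This is a minimal sketch under the document's discretization; `render_ray` and `photometric_loss` are hypothetical names, and the per-sample colors and densities would in practice come from the network rather than being set by hand.

```python
import numpy as np

def render_ray(colors, alphas, deltas):
    """Discrete volume rendering: C(r) ~= sum_i T_i * C_i * alpha_i * dt_i.

    colors: (N, 3) per-sample colors C_i
    alphas: (N,)   per-sample densities alpha_i
    deltas: (N,)   sample spacings dt_i
    """
    optical = alphas * deltas                                   # alpha_i * dt_i
    # T_i = exp(-sum_{j<i} alpha_j * dt_j): exclusive cumulative sum, so T_1 = 1
    T = np.exp(-np.concatenate([[0.0], np.cumsum(optical)[:-1]]))
    weights = T * optical                                       # contribution of each sample
    return weights @ colors, weights

def photometric_loss(pred, gt):
    """Sum over rays of ||C(r) - C_gt(r)||^2."""
    return float(np.sum((pred - gt) ** 2))

# A uniform red medium: density 1, 1000 samples spaced 0.01 apart
n = 1000
color, w = render_ray(np.tile([1.0, 0.0, 0.0], (n, 1)), np.full(n, 1.0), np.full(n, 0.01))
print(color)  # red channel is about 1.005; green and blue are 0

pred = np.array([[0.5, 0.5, 0.5], [1.0, 0.0, 0.0]])   # rendered colors C(r) for two rays
gt = np.array([[0.5, 0.5, 0.0], [1.0, 0.0, 0.0]])     # observed pixel colors C_gt(r)
print(photometric_loss(pred, gt))                      # 0.25
```

With this Riemann-sum form the weights $T_i \alpha_i \Delta t_i$ can add up to slightly more than 1, which is why the red channel overshoots. The original NeRF paper instead replaces $\alpha_i \Delta t_i$ with the opacity $1 - \exp(-\alpha_i \Delta t_i)$, which keeps the total weight at most 1. In practice the loss is typically summed over a random mini-batch of rays, and $\theta$ is updated by gradient descent using an automatic-differentiation framework.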

3. Research Status

Since neural radiance fields were first proposed, the field has advanced rapidly. Researchers have devised various improvements and extensions to raise rendering quality and speed, handle dynamic scenes, and support real-time rendering, among other goals. Research directions worth following include:

- Accelerated rendering and optimized storage: Reduce rendering time and memory consumption through strategies such as hierarchical spatial partitioning, adaptive sampling, and network compression.
- Dynamic scenes: Extend neural radiance fields along the time dimension so that dynamic scenes can be modeled and rendered.
- Real-time rendering: Combine ray-tracing hardware with optimized network architectures to reach real-time rendering.
- Richer scene information: Integrate lighting, materials, and other scene properties into the radiance field to improve rendering quality.
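As one illustration of adaptive sampling, the sketch below draws additional depths in proportion to the weights from a first, coarse rendering pass, in the spirit of the coarse-to-fine hierarchical sampling used by the original NeRF. It is a simplified inverse-transform sampler over piecewise-constant bins; the function name `sample_pdf`, the small epsilon, and the example weights are all assumptions.

```python
import numpy as np

def sample_pdf(bin_edges, weights, n_samples, rng):
    """Inverse-transform sampling: draw depths where the coarse weights are large.

    bin_edges: (M + 1,) depth boundaries of the coarse intervals
    weights:   (M,)     non-negative weights T_i * alpha_i * dt_i from the coarse pass
    """
    pdf = (weights + 1e-5) / np.sum(weights + 1e-5)    # small epsilon avoids a zero PDF
    cdf = np.concatenate([[0.0], np.cumsum(pdf)])       # (M + 1,), starts at 0
    cdf[-1] = 1.0                                       # guard against rounding error
    u = rng.uniform(0.0, 1.0, n_samples)                # uniform draws in [0, 1)
    idx = np.searchsorted(cdf, u, side="right") - 1     # interval containing each draw
    idx = np.clip(idx, 0, len(weights) - 1)
    frac = (u - cdf[idx]) / (cdf[idx + 1] - cdf[idx])   # position inside that interval
    return bin_edges[idx] + frac * (bin_edges[idx + 1] - bin_edges[idx])

rng = np.random.default_rng(0)
edges = np.linspace(2.0, 6.0, 9)                        # 8 coarse intervals of width 0.5
w = np.array([0.0, 0.0, 0.0, 0.9, 0.1, 0.0, 0.0, 0.0])  # a surface around depth 3.5 to 4.0
fine = sample_pdf(edges, w, 64, rng)                    # most samples land in [3.5, 4.0]
```

Concentrating samples near surfaces lets a renderer spend fewer network queries on empty space, which is the main source of the speedup.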

4. Challenges

Despite remarkable progress, neural radiance fields still face many challenges, including:

- High computational and storage requirements: NeRF usually requires substantial computing resources and storage space, which limits its use on low-end devices and in real-time applications.
- Sparse input views: When the input views are few or unevenly distributed, NeRF may fail to reconstruct the scene accurately.
- Limited lighting and material modeling: Current NeRF methods focus mainly on geometry and appearance, and the modeling of lighting and materials still needs to be strengthened.
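The computational cost follows directly from the rendering equation: every sample on every pixel's ray requires one network query. The back-of-the-envelope sketch below uses illustrative assumptions (an 800 × 800 image, 192 samples per ray, and roughly $10^6$ floating-point operations per query, about what an MLP with 8 layers of 256 units costs); the helper names are hypothetical.

```python
def mlp_queries_per_image(height, width, samples_per_ray):
    """One network query per sample, on one ray per pixel."""
    return height * width * samples_per_ray

def mlp_flops_per_image(height, width, samples_per_ray, flops_per_query):
    """Total floating-point operations, given an assumed cost per query."""
    return mlp_queries_per_image(height, width, samples_per_ray) * flops_per_query

# Illustrative assumptions: 800 x 800 image, 192 samples per ray, ~1e6 FLOPs per query
print(mlp_queries_per_image(800, 800, 192))           # 122880000
print(mlp_flops_per_image(800, 800, 192, 1_000_000))  # 122880000000000
```

Under these assumptions a single image needs about $1.2 \times 10^8$ network queries and on the order of $10^{14}$ floating-point operations, before counting training, which repeats this work over many iterations.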

5. Future Outlook

As an emerging view synthesis method, neural radiance fields have great potential and broad application prospects. Future research may focus on the following areas:

- Higher computational efficiency and lower storage consumption: Reduce NeRF's computing and storage requirements through more efficient sampling strategies, network structures, and compression techniques.
- More complex scenes: Investigate how to extend NeRF to scenes with complex dynamics, lighting, and materials.
- Real-time rendering on mobile devices: Combine hardware acceleration with optimized algorithms to reach real-time rendering, so that NeRF can be widely used on mobile devices and in real-time applications.
- Overcoming sparse input views: Improve NeRF's reconstruction quality under sparse input views through more powerful network architectures and prior knowledge.
- Combining multimodal data: Explore how to combine NeRF with other types of data (such as depth maps and semantic segmentation) to improve the accuracy of scene reconstruction and rendering.
- Broader applications: Apply NeRF to a wider range of fields, such as virtual reality, augmented reality, and autonomous driving, to provide innovative solutions to practical problems.
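For depth maps, one common way to combine them with NeRF is to render an expected depth along each ray using the same weights as the color, $D(r) = \sum_i T_i\, \alpha_i\, \Delta t_i\, t_i$, and add a depth term to the loss. The sketch below is a minimal version of that idea, not a specific published method; the function names and the weighting coefficient `lam` are hypothetical.

```python
import numpy as np

def render_weights(alphas, deltas):
    """Weights w_i = T_i * alpha_i * dt_i, the same discretization as the color sum."""
    optical = alphas * deltas
    T = np.exp(-np.concatenate([[0.0], np.cumsum(optical)[:-1]]))
    return T * optical

def expected_depth(t, alphas, deltas):
    """Rendered depth D(r) = sum_i w_i * t_i, the depth analogue of C(r)."""
    return float(render_weights(alphas, deltas) @ t)

def combined_loss(pred_rgb, gt_rgb, pred_depth, gt_depth, lam=0.1):
    """Color loss plus a depth term weighted by the hypothetical coefficient lam."""
    color_term = np.sum((pred_rgb - gt_rgb) ** 2)
    depth_term = np.sum((pred_depth - gt_depth) ** 2)
    return float(color_term + lam * depth_term)

t = np.array([2.0, 4.0])                                               # sample depths
print(expected_depth(t, np.array([0.0, 1.0]), np.array([1.0, 1.0])))  # 4.0
```

Because the depth is rendered with the same weights as the color, the depth term pushes density toward the measured surface, which also helps in the sparse-view setting.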