Foreword

These are questions I collected from the Internet and related books while studying a course on digital image processing. They are mainly intended for final exam review. Before working through them, please note the following points:

- This article covers the fourth question type: short-answer questions.

- If you need the answers, you can download the corresponding resources from my personal homepage (compiling them was not easy, so I appreciate your support).

- To make review more efficient, this article starts from the knowledge points that may appear in the exam and groups the questions by knowledge point. Some important knowledge points also come with a knowledge block diagram.

- I sorted and classified these questions myself, so some errors or inaccurate classifications are inevitable. Please bear with me, and feel free to point them out in the comment area.

- Some questions in this part are marked with stars. These are grades I assigned according to how often a question appears and how important the knowledge point is, based on my experience compiling the material and studying the course. There are three grades: 1 star, 2 stars, and 3 stars. The 3-star questions are the most important, and it is best to master all of them. (Note: these are key points I marked personally, so they are for reference only. Please follow the key points set by your own school.)

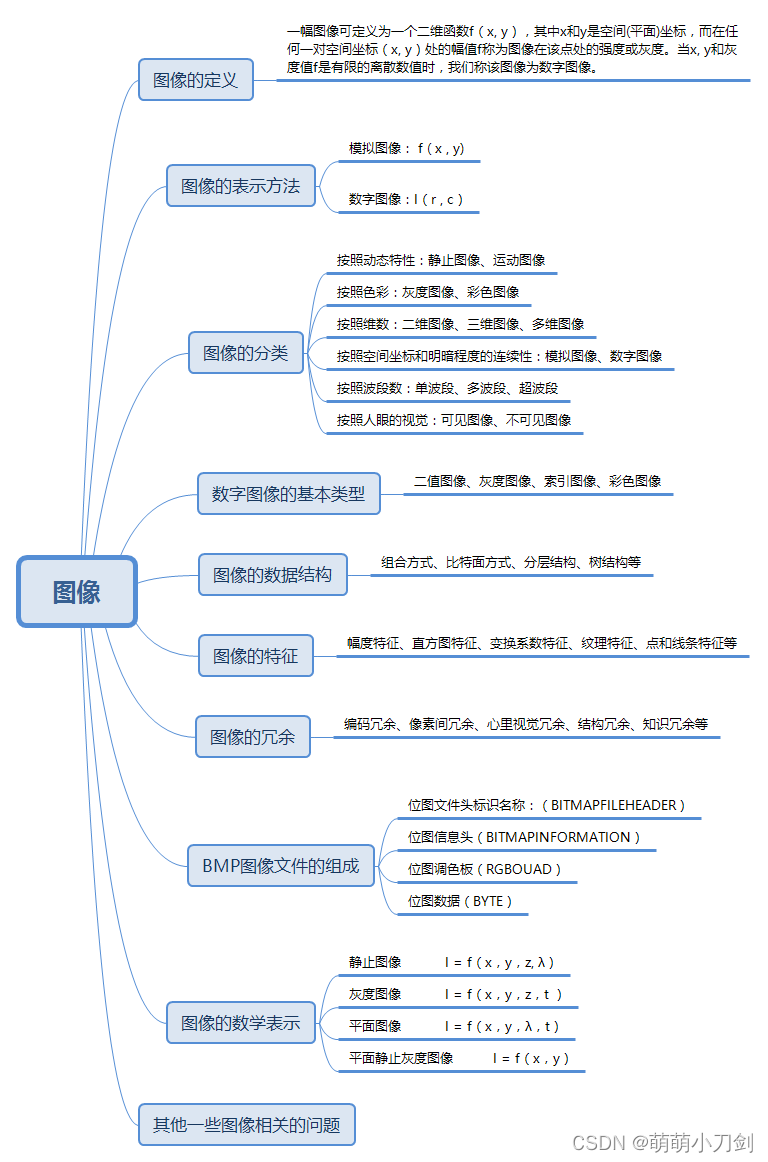

Knowledge point 01: Concepts related to images

In this section, I sort out some knowledge points and topics about images, mainly including the definition of images, the classification of images, the representation of images, the characteristics of images, the redundancy of images and so on. The following is the knowledge framework of this section and the frequently asked questions.

- What is an image?

- What are physical images, analog images, digital images?

- How to represent analog images, digital images? What is the meaning of each component? ( ★ )

- How are images classified?

- What are the basic types of digital images? What are the characteristics of each? ( ★★★ )

- What are the data structures of images? What are the characteristics of each?

- What are the characteristics of the image? What are the characteristics of each?

- What are the main types of image redundancy? What are the characteristics of each? ( ★★ )

- Briefly describe the imaging model of the image ( ★★ )

- How are still images, monochrome images, and planar (two-dimensional) images represented?

- Explain the meaning of the components in I = f(x,y,z,λ,t)? ( ★ )

- What are the components of a BMP image file?

- State the connections and differences between visual information such as images, videos, graphics, and animations

- How are continuous images and digital images converted into each other?

- Briefly describe the characteristics of digital image information

Knowledge point 02: Basic concepts of digital image processing

In this section, I sort out some topics about the basic concepts of digital image processing, mainly some macro topics, including the level of digital image processing, system composition, characteristics, algorithms, purpose, application, research content, etc. The following is the knowledge framework of this section and the frequently asked questions.

- What are the levels of digital image processing? What does the specific content include? What are the characteristics of each? ( ★★★ )

- What does low-level image processing include? What are the characteristics? ( ★★ )

- What does intermediate image processing include? What are the characteristics? ( ★★ )

- What does advanced image processing include? What are the characteristics? ( ★★ )

- What are the components of a digital image processing system? What is the role of each? ( ★ )

- What are the characteristics of digital image processing? ( ★★ )

- What kinds of algorithms are mainly included in digital image processing?

- What are the two main methods of digital image processing? What are the characteristics of each?

- What is the future development trend of digital image processing?

- What are the issues that require further research in digital image processing?

- What is the purpose of digital image processing? ( ★★★ )

- What are the application fields of digital image processing? Try to mention a few aspects? (★)

- What are the main research contents of digital image processing? Briefly explain what they do? ( ★★ )

- What are the geometry, smoothing, sharpening, degradation, restoration, and segmentation processes of digital image processing used for?

- What is the significance of digital image processing? ( ★★ )

- What is the difference and connection between digital image processing and computer graphics?

- What are the commonly used development tools for digital image processing?

- What are the commonly used application software for digital image processing?

- Take a specific application (such as OCR, autonomous driving, or face-recognition attendance check-in) as an example and explain how the three levels of digital image processing are reflected in it. ( ★ )

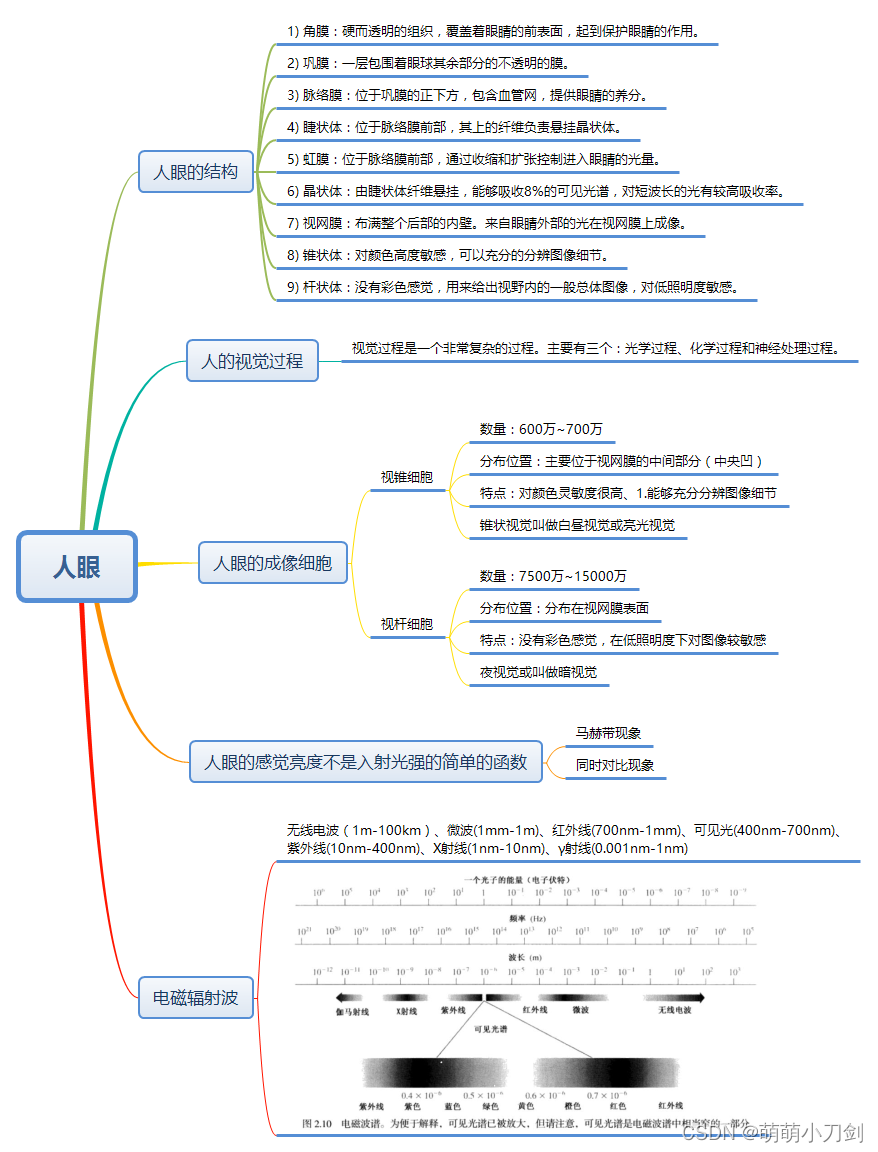

Knowledge point 03: Vision principle, Mach band effect, electromagnetic spectrum

In this section, I sort out some knowledge points and topics about the human eye, mainly including the structure of the human eye, the human visual process, the imaging cells of the human eye, the electromagnetic spectrum, the Mach band phenomenon, and so on. The following is the knowledge framework of this section and the frequently asked questions.

- What are the structures of the human eye? Briefly describe their characteristics? ( ★ )

- Briefly describe the process of human vision. ( ★ )

- When entering a dark theater during the day, it takes a while to get used to being able to see clearly and find an empty seat. Try to describe the visual principle of this phenomenon. ( ★★ )

- What are the imaging cells of the human eye and what are their characteristics? ( ★★★ )

- What is a Mach band? What is the Mach band effect? How can this effect be used when processing images? ( ★★ )

- What phenomena indicate that the perceived brightness of the human eye is not a simple function of the incident light intensity? ( ★★ )

- Briefly describe the mechanism of the human eye.

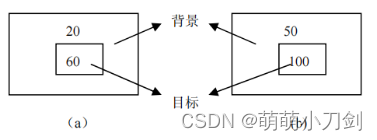

- When a person observes the two target images of the same shape shown in the figure, which target appears brighter? Does this differ from the actual brightness? Briefly explain why. [The black (darkest) grayscale value is set to 0, and the white (brightest) grayscale value is set to 255.]

- Briefly describe the principle of the three primary colors.

- What are the types of electromagnetic radiation? List them by wavelength from longest to shortest.

- What is monochromatic light, composite light, and achromatic light?

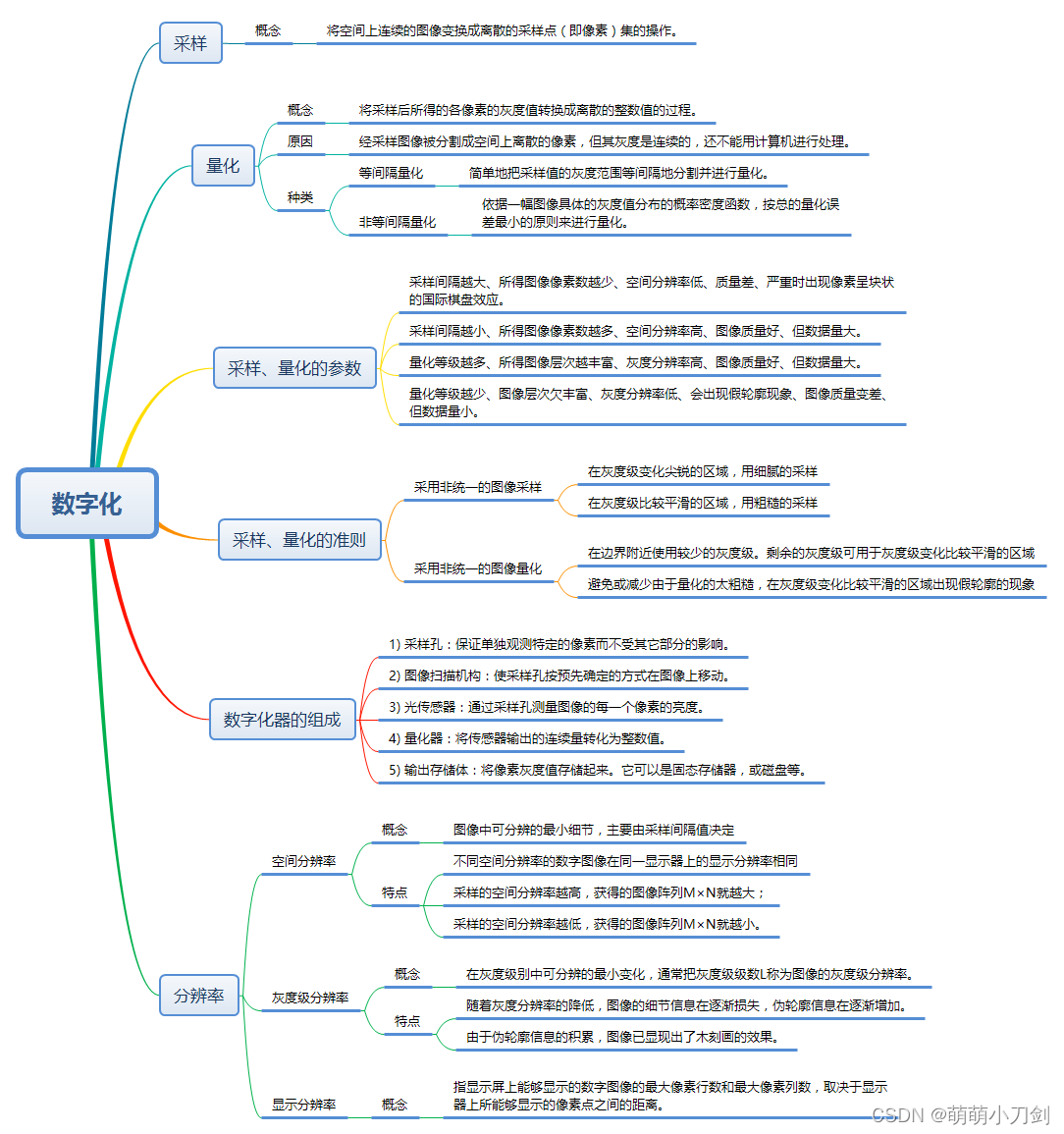

Knowledge point 04: digitization, resolution

I sorted out some knowledge points and topics about digitization, mainly including the concepts, reasons, parameters, and criteria of sampling and quantization, the composition of digitizers, spatial resolution, grayscale resolution, and so on. The following is the knowledge framework of this section and the frequently asked questions.

- Briefly describe the reason and process of digitization? ( ★★ )

- What does sampling and quantization mean? ( ★★★ )

- What are the two types of continuous gray value quantization? What are the characteristics of each?

- What is the relationship between sampling, quantization parameters and digitized images? ( ★★★ )

- How does the size of the sampling interval affect the image? ( ★★ )

- What effect does the quantization level have on the image? ( ★★ )

- What causes the checkerboard effect and the false contour phenomenon? ( ★★ )

- What are the criteria for sampling and quantization? ( ★★ )

- How should slowly varying images and images with rich detail be sampled and quantized?

- When quantizing an image, what happens if the quantization level is relatively small? Why? ( ★★ )

- Briefly describe the principle of uniform sampling and uniform quantization.

- What are the parts of a digitizer?

- Briefly describe the steps of image digitization, and explain which two kinds of quality defects will appear? Please describe the strategies used to ensure image quality when digitizing scenes with slow grayscale transitions and images with a large amount of detail. What are false contours?

- Does a larger aperture give a brighter captured image? Does a lower f-number mean a smaller aperture? Which gives the brighter picture, an aperture of 2.8 or 5.6? Does a smaller aperture make the picture look more three-dimensional?

- What is the impact of the sampling number and spatial resolution changes on the visual effect of the image?

- What factors limit the resolution of video cameras and digital cameras? Is higher the better? How can I further increase the resolution?

- What is spatial resolution? What is Grayscale Resolution? What are the characteristics of each? ( ★★★ )

- What is display resolution? What does it depend on?
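As a rough illustration of the questions above about quantization levels and false contours, here is a minimal Python/NumPy sketch of uniform quantization. The function name `quantize_uniform`, the 8-bit input range, and the ramp test image are assumptions made for this example only.

```python
import numpy as np

def quantize_uniform(img, levels):
    """Uniformly requantize an 8-bit image to `levels` gray levels."""
    img = np.asarray(img, dtype=np.float64)
    step = 256.0 / levels                                # width of each quantization bin
    idx = np.minimum(np.floor(img / step), levels - 1)   # bin index of each pixel
    # Map each bin index back to a representative gray value (the bin centre).
    return (idx * step + step / 2).astype(np.uint8)

# A smooth horizontal ramp: with few levels it breaks into visible bands,
# which is what appears as false contours in a real image.
ramp = np.tile(np.arange(256, dtype=np.uint8), (4, 1))
coarse = quantize_uniform(ramp, 4)
print(np.unique(coarse))   # [ 32  96 160 224]
```

With only 4 levels the ramp is reduced to four flat bands. Raising `levels` to 16 or more makes the bands narrower and harder to see, which is why slowly varying images need fine quantization.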

Knowledge point 05: Histogram

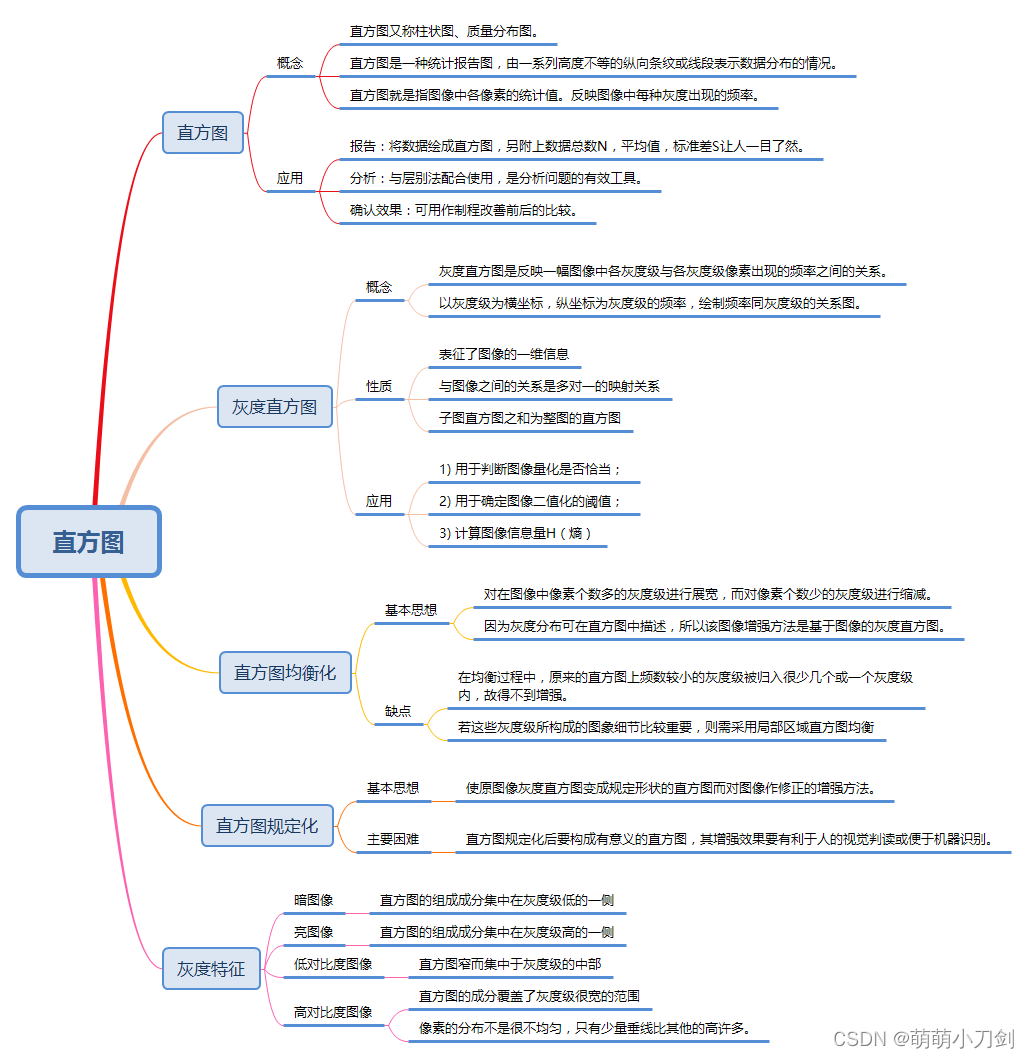

In this section, I have sorted out some knowledge points and topics about histograms, mainly including the concept, properties, and applications of histograms; the concept, properties, and applications of grayscale histograms; the two most commonly used methods of histogram modification, namely histogram equalization and histogram specification; and the four basic grayscale characteristics of an image and how they change after equalization. The following is the knowledge framework of this section and the frequently asked questions.

- What is a histogram?

- What are the properties and applications of histograms?

- What is a grayscale histogram? (★★)

- What are the properties of a grayscale histogram? (★★★)

- What are the applications of grayscale histogram? (★★)

- What is the basic idea of histogram equalization? What is the purpose? What are the disadvantages? (★★★)

- What is the basic idea of histogram specification? What are the main difficulties? (★)

- Suppose a digital image has already been enhanced by histogram equalization. Will applying the same method a second time change the result?

- Assume that a histogram equalization process is performed on a digital image. Try to prove (on the image after histogram equalization) that the result of the second histogram equalization process is the same as the result of the first histogram equalization process.

- What is the relationship between the histogram distribution and the contrast of a grayscale image? (★★)

- What are the basic grayscale characteristics of an image? How does equalization change the histogram in each case? (★★★)

- Try to explain why the discrete histogram equalization technique generally cannot get a flat histogram? (★★★)

- The two images shown in the figure (1 for white and 0 for black) are completely different, but their histograms are the same. Assume that each image is processed with a 3 × 3 smoothing template (image boundaries are not considered, and the result is rounded to 0 or 1).

(1) Are the histograms of the processed images still the same? (2) If they are not the same, find the two histograms.
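The questions above about repeating histogram equalization and about why the discrete result is not flat can be checked numerically. The following is a minimal sketch, assuming the common discrete mapping s_k = round((L-1) · CDF(r_k)); the function name `equalize` and the random low-contrast test image are made up for illustration.

```python
import numpy as np

def equalize(img, levels=256):
    """Discrete histogram equalization: s_k = round((L-1) * CDF(r_k))."""
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=levels)   # gray-level counts
    cum = np.cumsum(hist)                               # cumulative counts
    mapping = np.round((levels - 1) * cum / img.size).astype(np.uint8)
    return mapping[img]                                 # look up each pixel

rng = np.random.default_rng(0)
# Low-contrast test image: gray levels squeezed into [100, 140).
img = rng.integers(100, 140, size=(64, 64), dtype=np.uint8)

once = equalize(img)
twice = equalize(once)
print("range before:", img.min(), img.max(), "after:", once.min(), once.max())
print("second pass changes nothing:", np.array_equal(once, twice))
print("distinct levels before/after:", len(np.unique(img)), len(np.unique(once)))
```

The mapping only moves or merges existing gray levels and never splits one level into several, so the number of distinct levels cannot increase. That is the intuition behind both the non-flat histogram and the unchanged second pass.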

Knowledge point 06: Basic operations on images

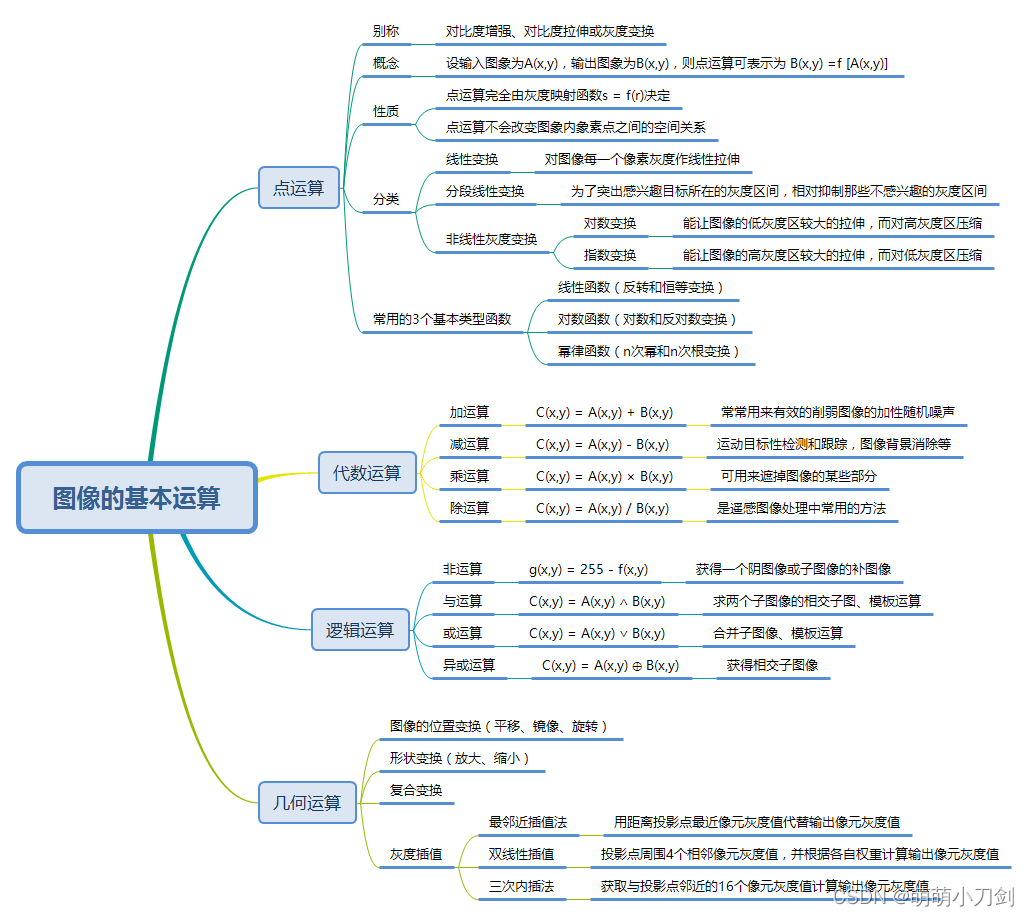

In this section, I have sorted out some knowledge points and topics about basic operations on images, mainly including four parts: point operations, algebraic operations, geometric operations, and logical operations. The following is the knowledge framework of this section and the frequently asked questions.

- What are the types of algebraic operations? What are the characteristics of each? (★★)

- What is image point operation? What are the characteristics? (★★)

- What is a point operation? What is a space operation? What is the difference between the two? (★★)

- Briefly describe the three basic types of functions commonly used in image enhancement point processing. (★★★)

- Briefly describe the principles of three commonly used pixel gray value interpolation (★)

- Briefly describe the characteristics of three commonly used pixel gray value interpolation (★)

- What types of grayscale transformations include? What are the characteristics of each? (★★★)

- What is logarithmic transformation? What is Exponential Transformation? What is the difference between the two?

- Does image rotation cause image distortion? Why?

- Briefly describe the process of image rotation in the Cartesian coordinate system.

- How to solve the problem of image holes generated during image rotation in the Cartesian coordinate system?

- What is the difference image (image subtraction) method used for? (★)

- Why can multi-image averaging method denoise? What is its main difficulty? (★★)

- How to extract the outline of a rectangle in an image using only logical operations?
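For the question above about the three basic types of functions used in point processing (linear, logarithmic, and power-law), the sketch below shows one possible form of each. It assumes 8-bit images (L = 256); the function names and the scaling constant `c` are illustrative choices, not the only valid ones.

```python
import numpy as np

L = 256  # number of gray levels, assuming an 8-bit image

def negative(img):
    """Linear (negative) transform: s = (L-1) - r."""
    return (L - 1 - np.asarray(img, dtype=np.int32)).astype(np.uint8)

def log_transform(img):
    """Log transform s = c*log(1+r): expands dark values, compresses bright ones."""
    r = np.asarray(img, dtype=np.float64)
    c = (L - 1) / np.log(L)            # chosen so that r = L-1 maps to s = L-1
    return np.round(c * np.log1p(r)).astype(np.uint8)

def gamma_transform(img, gamma):
    """Power-law transform s = (L-1) * (r/(L-1))**gamma."""
    r = np.asarray(img, dtype=np.float64) / (L - 1)
    return np.round((L - 1) * r ** gamma).astype(np.uint8)

r = np.array([0, 16, 64, 128, 255], dtype=np.uint8)
print(negative(r))
print(log_transform(r))
print(gamma_transform(r, 0.5))   # gamma < 1 brightens mid-tones
print(gamma_transform(r, 2.0))   # gamma > 1 darkens mid-tones
```

Each output pixel depends only on the input pixel at the same position, which is what distinguishes a point operation from a neighborhood (spatial) operation.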

Knowledge point 07: Image transformation

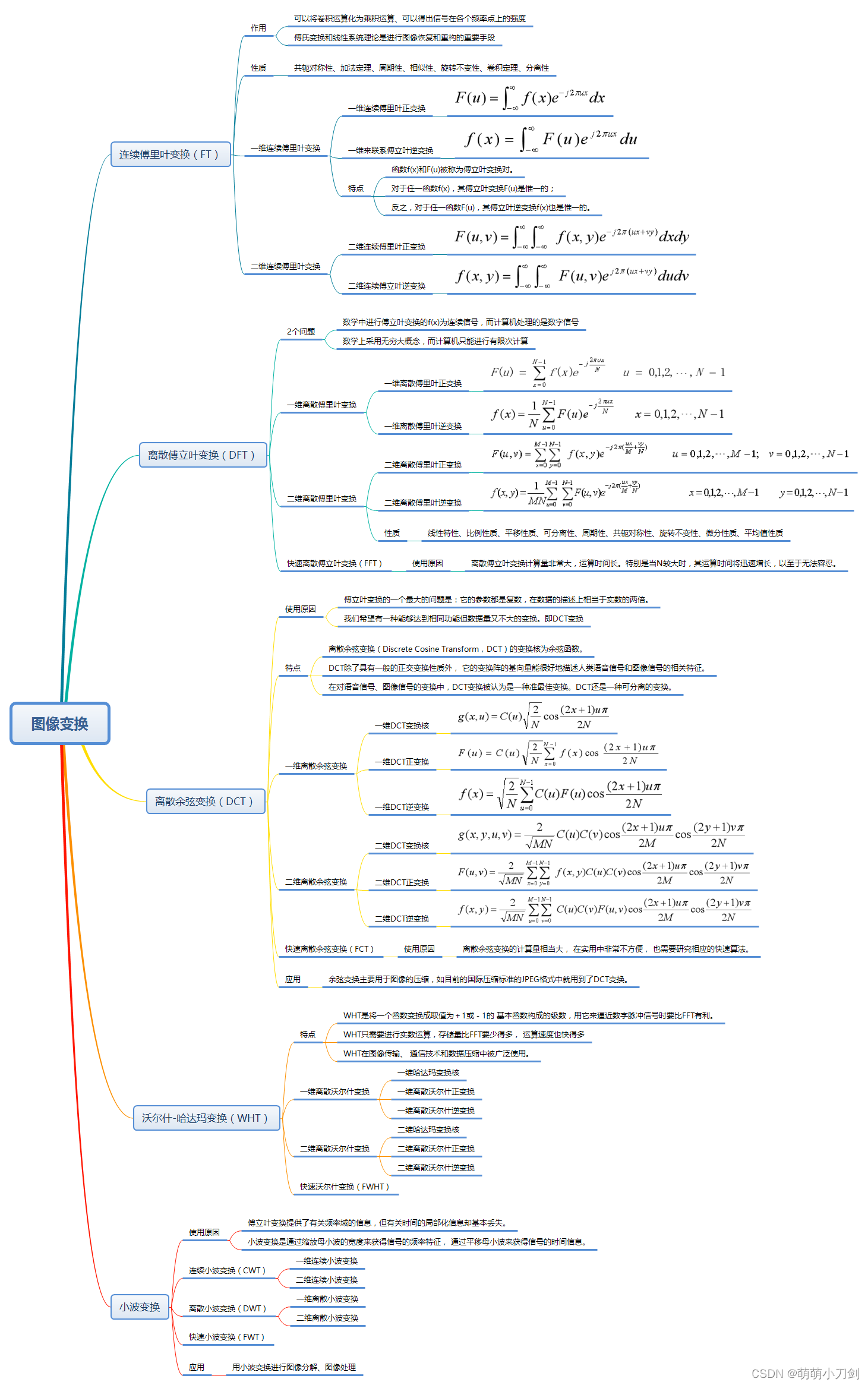

In this section, I sort out some knowledge points and topics about image transformation, mainly including Fourier transform, discrete Fourier transform, fast Fourier transform, wavelet transform, orthogonal transform and so on. The following is the knowledge framework of this section and the frequently asked questions.

- What is the purpose of Fourier transforming an image? (★★★)

- Please briefly describe the principle of the Fast Fourier Transform. (★★)

- Briefly describe the application principle of Fourier transform in high-pass filtering of images. (★★★)

- Briefly describe the application principle of Fourier transform in low-pass filtering of images. (★★★)

- Briefly describe the application principle of wavelet transform in image compression.

- What are the properties of the two-dimensional discrete Fourier transform? (★★)

- Why is the operation of c*log(K+1) often performed when drawing the power spectrum in the frequency domain? (★★★)

- Why do you often move the 0 frequency point to the middle of the spectrum? (★★★)

- How would the plot of the power spectrum look like without moving the 0 frequency point to the middle of the spectrum? (★★)

- How can the 0 frequency point be moved to the middle of the spectrum, both mathematically and in MATLAB?

- What is the meaning of F(0,0) in Fourier transform?

- The FFT result is known to be H(u,v) = M(u,v) + jN(u,v), where M(u,v) and N(u,v) are both real matrices and j is the imaginary unit. How are the magnitude, the phase angle, and the power spectrum calculated? (★★)

- Why, when plotting in the frequency domain, do we usually plot only the magnitude of the Fourier transform and not the phase angle? (★★)

- Relative to f(x,y), how does the power spectrum of the image change for each of the following new functions? (★★) 1. f(ax,by) 2. f(xa, yb) 3. f rotated clockwise by β degrees

- What is the significance of 2D discrete Fourier transform separability? (★★)

- What are the similarities and differences between the discrete Walsh transform and the Hadamard transform? (★★)

- What are wavelets? What is the difference between wavelet basis functions and Fourier transform basis functions?

- Why is the wavelet transform called the "electron microscope" of the signal, and how to realize this function?

- What is the difference between the time-frequency characteristics of Fourier transform, windowed Fourier transform and wavelet transform?

- What is the purpose of image transformation? (★★)

- What is the purpose of orthogonal transform in image processing? What are the main uses of image transformation?

- Briefly describe several commonly used image transformations (★★)

- What are the characteristics of orthogonal transformation?
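Several of the questions above (magnitude, phase, and power spectrum from H = M + jN, the meaning of F(0,0), centering the spectrum, and the log display) can be explored with a few lines of NumPy. This is a sketch on a made-up 8 × 8 test image. Note that `numpy.fft.fft2` applies no 1/MN factor, so F(0,0) here is the pixel sum rather than the mean that some textbooks' normalization gives.

```python
import numpy as np

# Small test image: a bright rectangle on a dark background (made-up data).
f = np.zeros((8, 8))
f[2:6, 3:5] = 1.0

F = np.fft.fft2(f)                  # H(u,v) = M(u,v) + j N(u,v)
M, N = F.real, F.imag

magnitude = np.sqrt(M**2 + N**2)    # same as np.abs(F)
phase = np.arctan2(N, M)            # same as np.angle(F)
power = magnitude**2                # power spectrum |F|^2

# F(0,0) is the sum of all pixels, i.e. proportional to the mean gray level.
print(F[0, 0].real, f.sum())

# Centre the spectrum: shift the result, or multiply f by (-1)^(x+y) first.
x, y = np.indices(f.shape)
F_centered = np.fft.fft2(f * (-1.0) ** (x + y))
print(np.allclose(F_centered, np.fft.fftshift(F)))   # equal for even image sizes

# Log scaling, log(1 + |F|), compresses the large dynamic range before display.
display = np.log1p(np.fft.fftshift(magnitude))
print(np.unravel_index(np.argmax(display), display.shape))  # DC term at the centre
```

In MATLAB the corresponding centering function is `fftshift`. The `(-1)^(x+y)` trick is the mathematical form usually given in textbooks.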

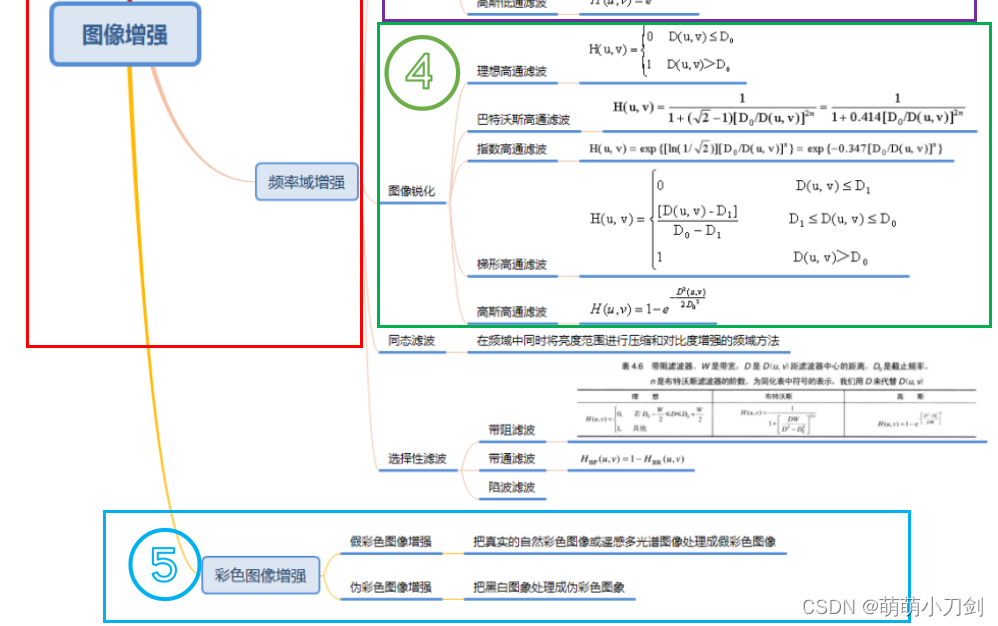

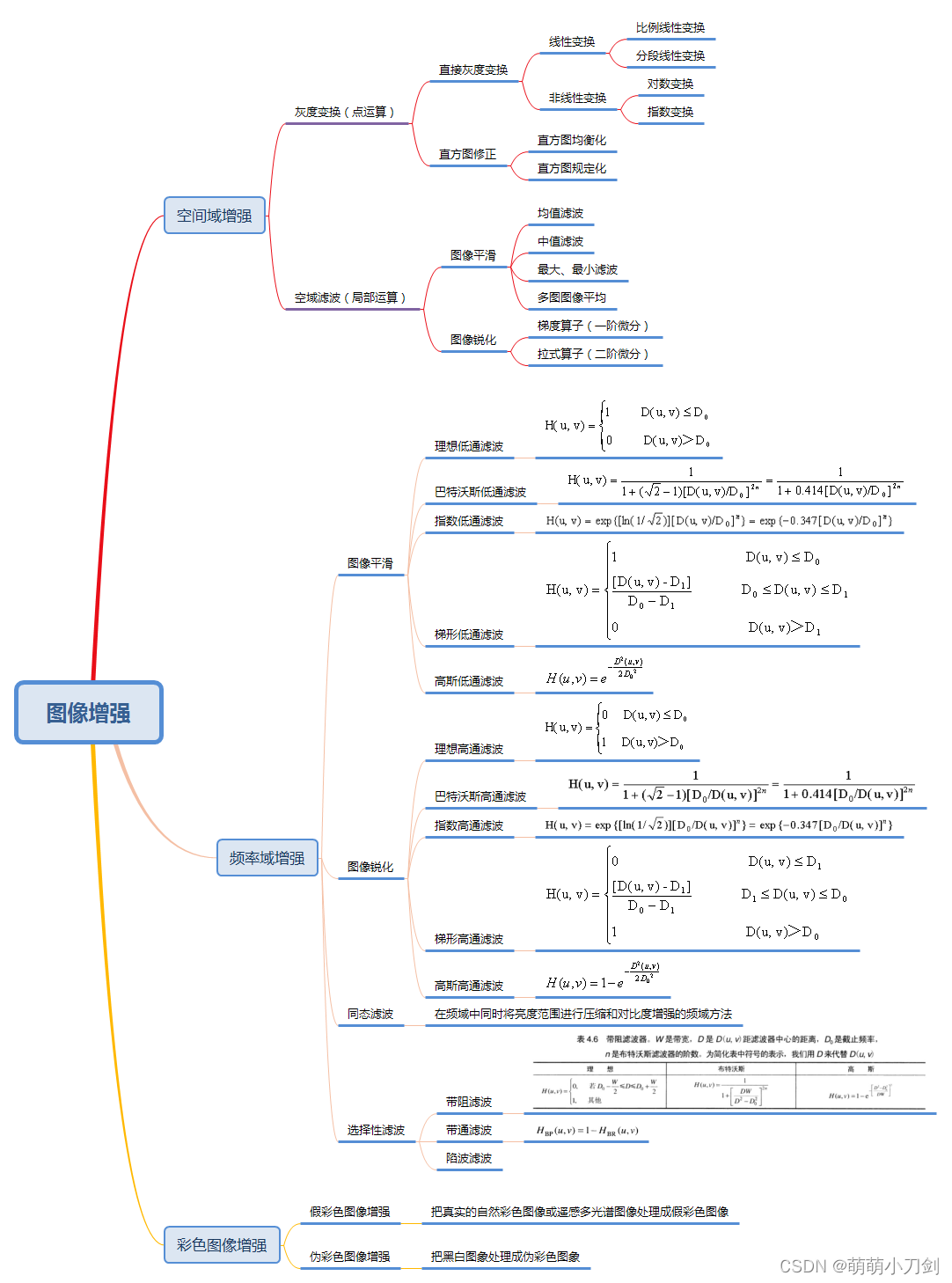

Knowledge point 08: Basic concepts of image enhancement

Since image enhancement involves a lot of content and many questions, I split it further into the following parts:

Part 1: Basic concepts of image enhancement, such as image smoothing and image sharpening. These knowledge points are organized in this section and are mainly macro-level questions.

Part 2: Grayscale transformation. This part has been covered in the previous section "Knowledge point 05: Histogram".

Part 3: Image smoothing. This part is organized in the following section "Knowledge point 09: Image smoothing".

Part 4: Image sharpening. This part is organized in the following section "Knowledge point 10: Image sharpening".

Part 5: Color image enhancement. This part is organized in the following section "Knowledge point 11: color image processing".

- What research is involved in image enhancement?

- What is the purpose of image enhancement? (★★)

- What is image smoothing? How to judge and eliminate noise? (★★)

- What is the principle of spatial domain smoothing of images? What are the methods? (★★)

- Why study image enhancement in the frequency domain? (★★★)

- What are the steps of the frequency domain enhancement method?

- What are the characteristics of smoothing templates and sharpening templates?

- What is the principle of frequency domain smoothing and frequency domain sharpening? (★★★)

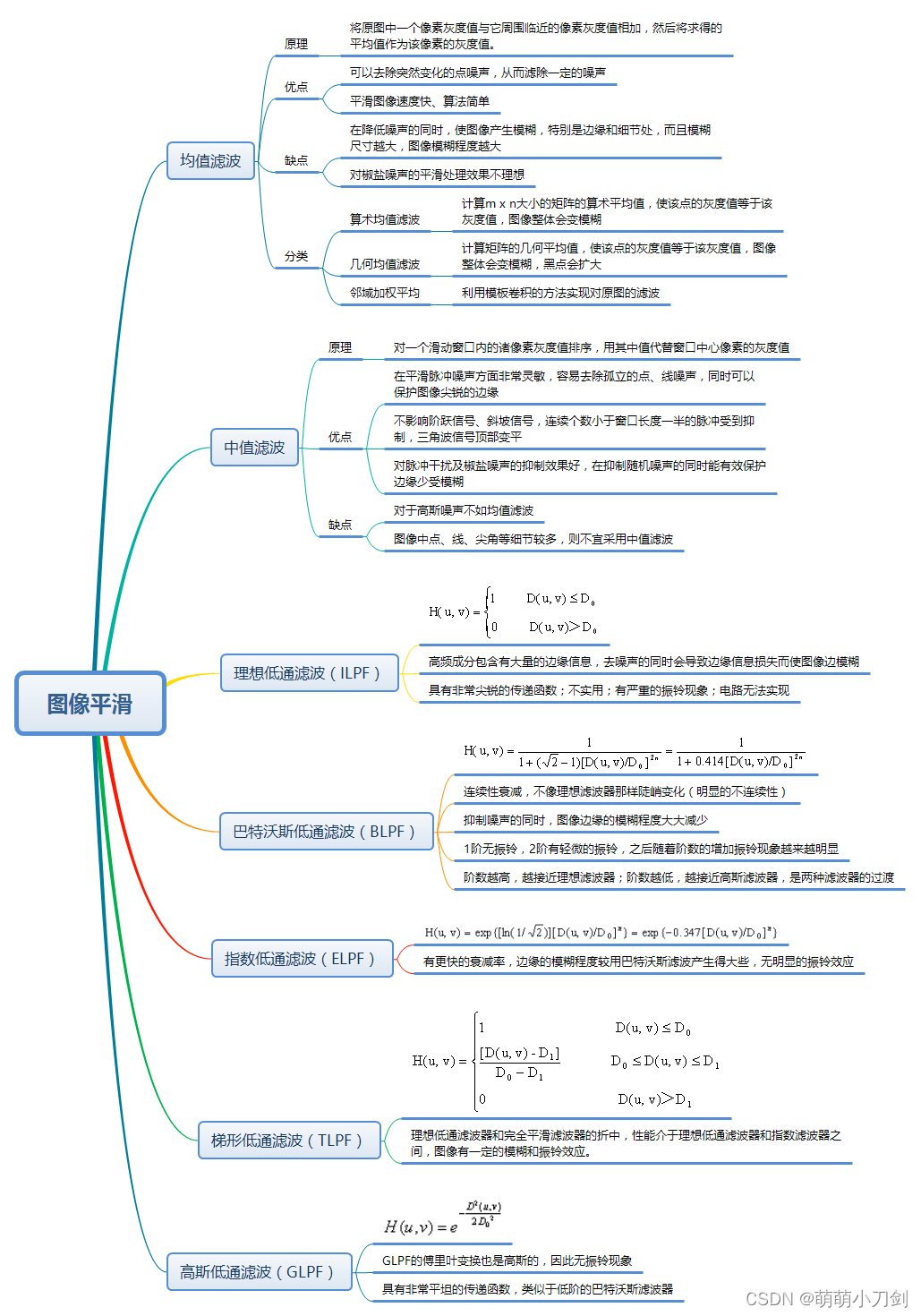

Knowledge point 09: Image smoothing

- What are the spatial smoothing methods? Briefly describe their rationale? (★★)

- What are the spatial smoothing methods? What are their characteristics? (★★)

- What is the median filter method? What should I pay attention to when using it?

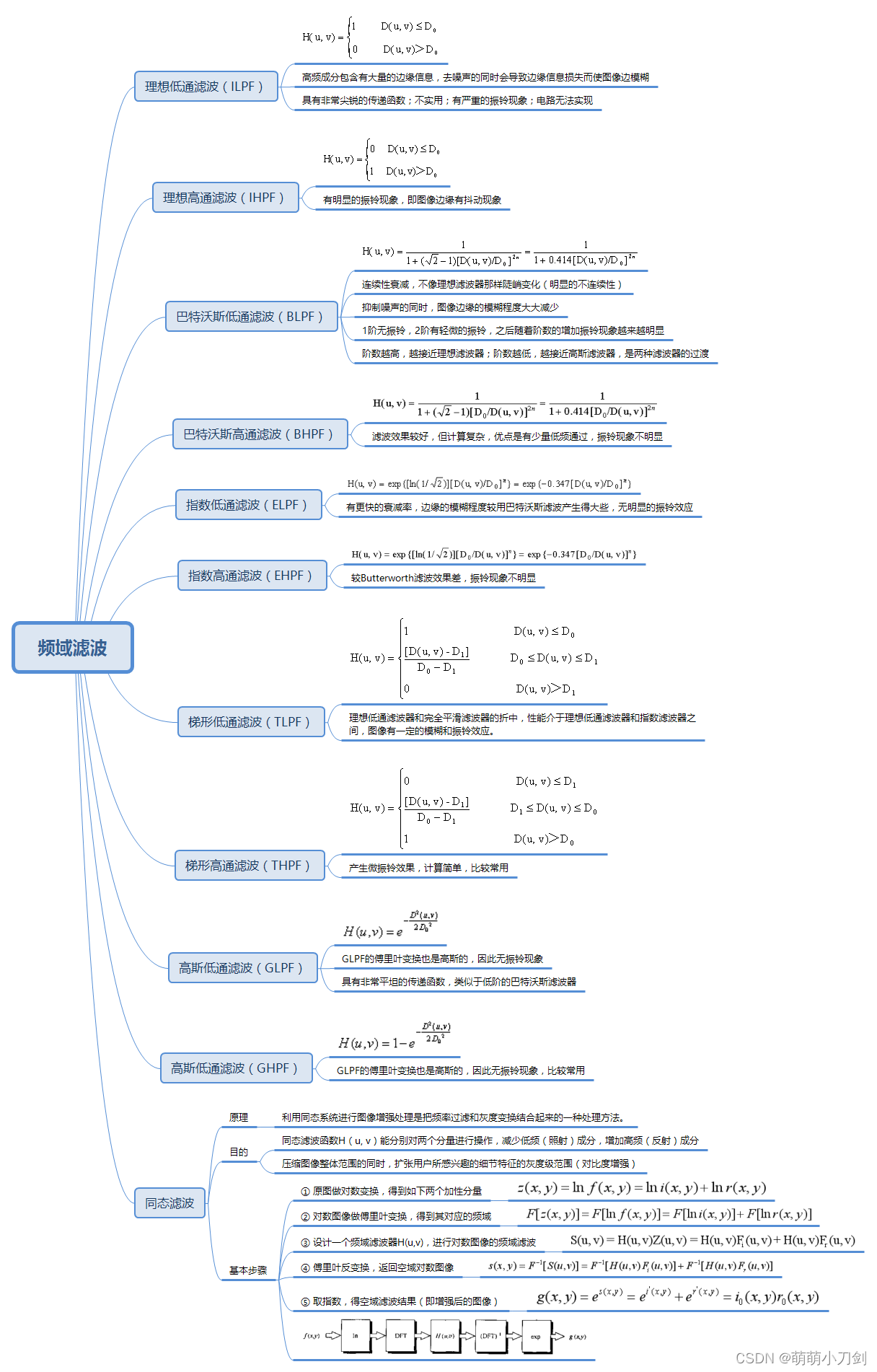

- What are the frequency domain low-pass filtering methods? What are their characteristics? (★★★)

- What are the characteristics of smoothing templates?

- What is mean filtering method? What are its pros and cons? (★★★)

- What is the median filter method? What are its pros and cons? (★★★)

- What kind of noise are mean filter and median filter good at dealing with? Why? (★★★)

- If the same image is processed with 3 × 3 and 5 × 5 mean templates, how do the processed images differ? Why? (★★)

- Compare the principles and characteristics of arithmetic mean filtering and geometric mean filtering?

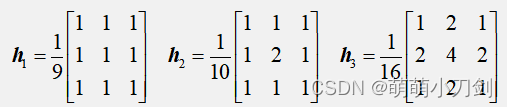

- Briefly describe the physical meaning of the following templates

- The figure shows a 256 × 256 binary image (white is 1, black is 0) in which the white bars are 7 pixels wide and 210 pixels high, and the gap between two white bars is 17 pixels wide. How does the image change when each of the following methods is applied (results are rounded to the nearest of 0 or 1, and image boundaries are not considered)?

(1) 3 × 3 neighborhood average filtering. (2) 7 × 7 neighborhood average filtering. (3) 9 × 9 neighborhood average filtering.

(4) 3 × 3 median filtering. (5) 7 × 7 median filtering. (6) 9 × 9 median filtering.
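To see why the mean filter and the median filter behave differently on impulse noise, the following sketch applies both to a flat image with a single "salt" pixel. It assumes NumPy 1.20 or later (for `sliding_window_view`) and replicates edge pixels at the border; the helper names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def neighborhoods(img, k):
    """Return every k x k neighbourhood of img (edge pixels replicated)."""
    pad = k // 2
    padded = np.pad(np.asarray(img, dtype=np.float64), pad, mode="edge")
    return sliding_window_view(padded, (k, k))

def mean_filter(img, k=3):
    """Replace each pixel by the average of its k x k neighbourhood."""
    return neighborhoods(img, k).mean(axis=(-2, -1))

def median_filter(img, k=3):
    """Replace each pixel by the median of its k x k neighbourhood."""
    return np.median(neighborhoods(img, k), axis=(-2, -1))

# Flat gray image with one "salt" pixel (impulse noise).
img = np.full((7, 7), 100.0)
img[3, 3] = 255.0

print(mean_filter(img)[3, 3])       # impulse is spread out, not removed
print(median_filter(img)[3, 3])     # impulse is removed
print(mean_filter(img, 5)[3, 3])    # larger template: weaker trace, more blur
```

The mean filter dilutes the impulse over the whole neighborhood, while the median discards it because a single outlier never reaches the middle of the sorted window.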

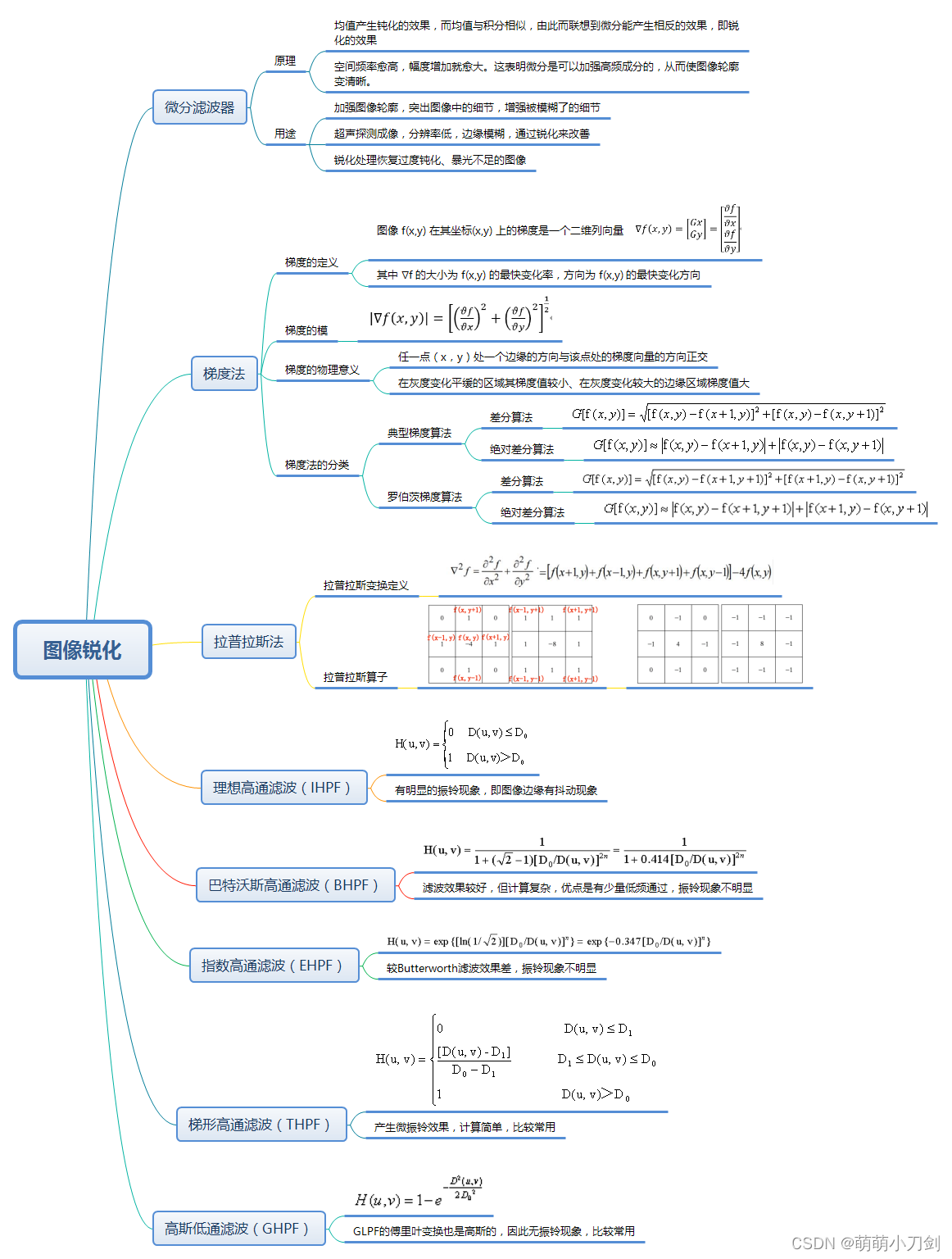

Knowledge point 10: Image sharpening

- What are the spatial sharpening methods? Briefly describe their rationale? (★★)

- What are the high-pass filtering methods? What are their characteristics? (★★★)

- For a grayscale image, how is its gradient defined? What is the physical meaning of image gradient? (★)

- What are the methods for enhancing images with gradients? Briefly describe their rationale? (★★)

- What are the methods of image sharpening and filtering? (★★)

- What conditions need to be met for sharpening template operation (differential operation)? (★★★)
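One commonly stated condition for a sharpening (differential) template is that its coefficients sum to zero, so that flat regions give no response. The sketch below checks this for a 4-neighbor Laplacian template on a made-up step edge; the helper `correlate3x3` is a hypothetical name and the example assumes NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def correlate3x3(img, kernel):
    """Apply a 3 x 3 template to img (edge pixels replicated)."""
    padded = np.pad(np.asarray(img, dtype=np.float64), 1, mode="edge")
    windows = sliding_window_view(padded, (3, 3))
    return (windows * kernel).sum(axis=(-2, -1))

laplacian = np.array([[0,  1, 0],
                      [1, -4, 1],
                      [0,  1, 0]], dtype=np.float64)
print(laplacian.sum())            # 0: a flat region produces no response

# Vertical step edge: columns 0-3 are dark (10), columns 4-7 are bright (50).
img = np.full((6, 8), 10.0)
img[:, 4:] = 50.0

response = correlate3x3(img, laplacian)
print(response[2])                # non-zero only on both sides of the edge

# With a negative centre coefficient, sharpening subtracts the response.
sharpened = img - response
print(sharpened[2])               # the edge is exaggerated (values need clipping)
```

By contrast, the coefficients of a smoothing template sum to 1, so the average brightness is preserved.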

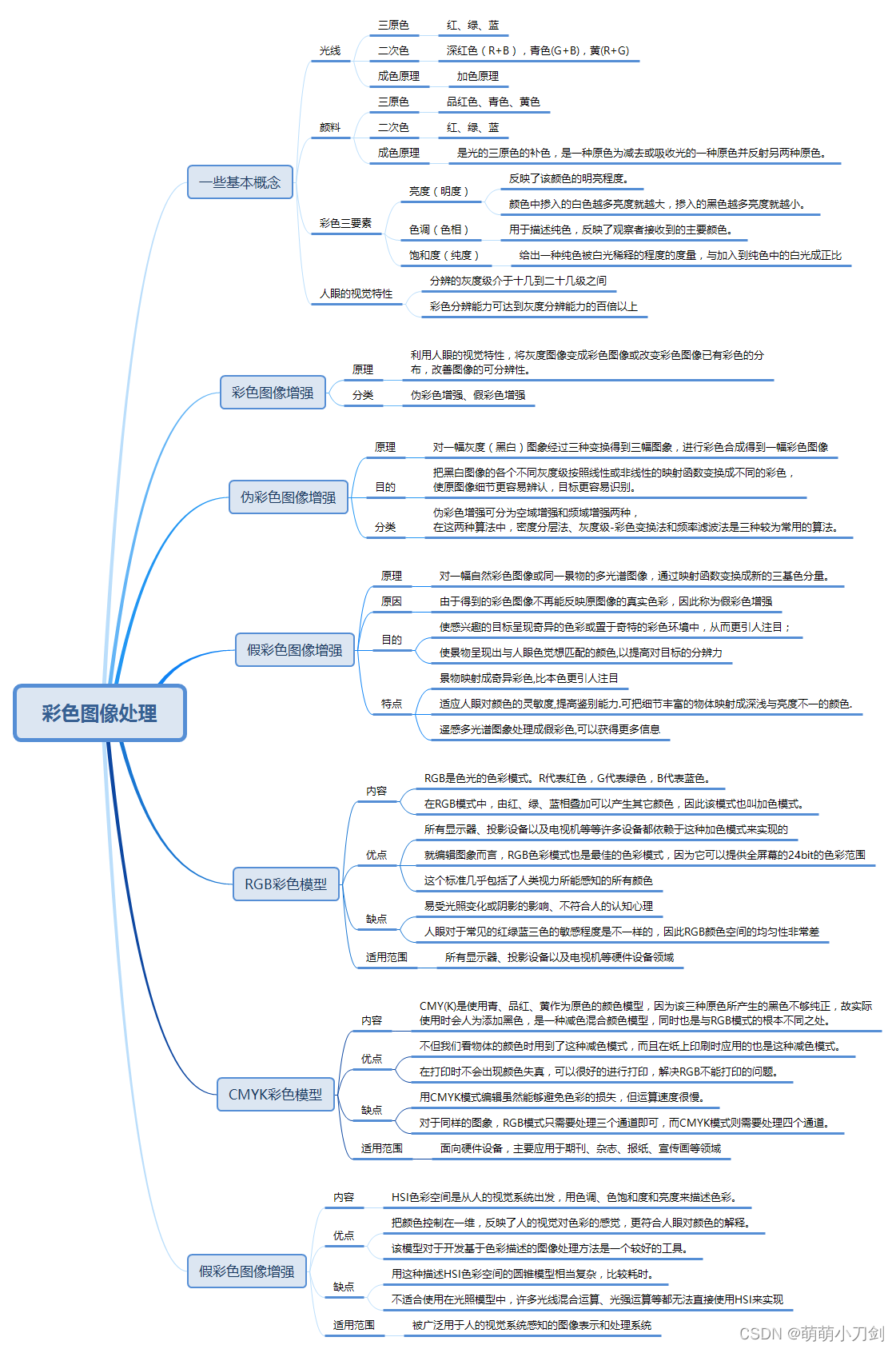

Knowledge point 11: color image processing

In this section, I sorted out some knowledge points and topics about color image processing. Due to the large number of topics, it is divided into 3 parts, namely color image enhancement, various color models, and other topics. The following is the knowledge framework of this section and the frequently asked questions.

Topic 1: Color Image Enhancement

- What are the principles of true color image enhancement, pseudo-color image enhancement, and false color image enhancement? (★★)

- What are the similarities and differences between pseudo-color image enhancement and false color image enhancement? (★★★)

- What are the categories of true color image enhancement? What are the characteristics of each?

- What is the significance of false color enhancement?

- Why can pseudo-color processing achieve an enhanced effect?

- Discuss the application of pseudo-color in meteorological fields such as cloud image drawing and display.

Topic 2: Various color models

- What are the two types of commonly used color models? (★★)

- What are the subtractive and additive color models? Which model is better suited to displays, and which to pictures and printing?

- List the content, advantages, disadvantages and scope of application of the RGB color model? (★★★)

- List the content, advantages, disadvantages and scope of application of the CMY (K) color model? (★★★)

- List the content, advantages, disadvantages and scope of application of the HSI color model? (★★★)

- Why is it sometimes necessary to convert from one color data representation to another? How to calculate HSV value from RGB value?

- What are the advantages of the YUV color system?

- Why is the YUV color system suitable for the color representation of color TV?

- In color image processing, the HSI model is often used. What is the reason why it is suitable for image processing? (★★)

- In the HSI color model, what do the three components H, S, and I represent? What are the characteristics of using this model to represent color?

- The color of the photo printed by a color printer is different from the color displayed on the monitor. Please give at least one possible reason.

- Which color space is closest to the characteristics of the human visual system? (★★)

- How to represent the color value of a point in an image? What does the color model do?
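For the question above on calculating HSV from RGB, here is a minimal sketch of the usual hexcone formula, checked against Python's standard `colorsys` module. It assumes RGB components in [0, 1] and returns hue as a fraction of a full turn (multiply by 360 for degrees). It covers HSV only; the HSI model uses different formulas.

```python
import colorsys

def rgb_to_hsv(r, g, b):
    """Convert RGB in [0, 1] to (H, S, V), with H as a fraction of a full turn."""
    v = max(r, g, b)                 # value: the largest component
    delta = v - min(r, g, b)         # chroma: spread between largest and smallest
    if delta == 0:                   # gray: hue is undefined, use 0 by convention
        return 0.0, 0.0, v
    s = delta / v                    # saturation relative to the value
    if v == r:
        h = ((g - b) / delta) % 6    # between yellow and magenta
    elif v == g:
        h = (b - r) / delta + 2      # between cyan and yellow
    else:
        h = (r - g) / delta + 4      # between magenta and cyan
    return h / 6, s, v

for rgb in [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.2, 0.4, 0.6), (0.5, 0.5, 0.5)]:
    print(rgb, rgb_to_hsv(*rgb), colorsys.rgb_to_hsv(*rgb))
```

Separating hue and saturation from brightness in this way is one reason such models are convenient for processing: the brightness channel can be enhanced without shifting the colors.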

Topic 3: Other Color Image Processing Issues

- Discuss the relationship between color image enhancement and grayscale image enhancement.

- What are the three primary colors? What are the three elements of color? (★★)

- Compare grayscale and color images.

- Describe the principle of the three primary colors of light and the three primary colors of pigments, and illustrate their respective application ranges. If a computer display appears bluish, how should the color be adjusted? (★★)

- What are the three attributes of color? Name three different color modes? In the color solid, what do the vertical axis, each point on the circumference of the horizontal circular surface, and the transition from the circumference to the center represent respectively?

- What are the definitions of Hue, Saturation, and Lightness? What role does each play in characterizing the color of a point in an image?

- What is grayscale conversion of a color image? What is its essence?

- What is the main principle of the white balance method?

- Why is color quantization required in some cases? What is the basis for the quantification of color images?

- How to achieve color histogram matching in computer?

- Prove that the saturation component of the complementary color of a color image cannot be calculated from the saturation component of the input image alone. (★★)

- Assume that the monitor and printer of an imaging system are not perfectly calibrated. An image that looked balanced on the monitor came out of the printer with a cyan cast. Describe a general transformation that corrects this imbalance.

- How does dithering reproduce richly colored images from devices that can only display fewer colors?

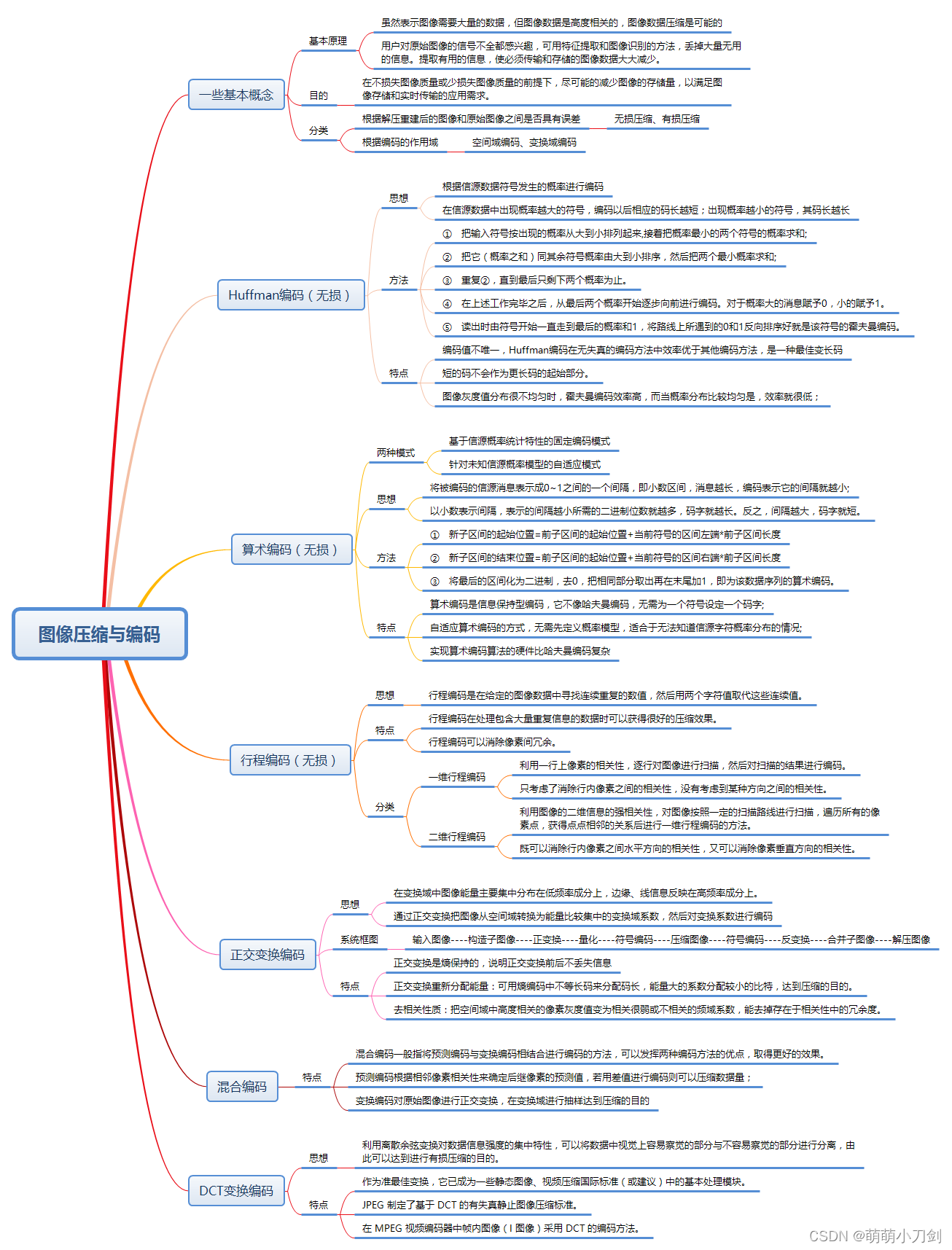

Knowledge point 12: image compression and coding

- How is image coding classified?

- What is the basic principle of image coding?

- What are lossless compressions? What are the characteristics of each?

- What are lossy compressions? What are the characteristics of each?

- What is lossless compression of images? Two lossless compression algorithms are given.

- Briefly describe the idea, coding steps, and coding characteristics of Huffman coding. (★★★)

- Huffman coding is an optimal code, so why study other entropy coding algorithms such as arithmetic coding? (★★)

- What are the encoding steps of Shannon-Fano coding?

- What are the 2 ways of arithmetic coding? What are the characteristics of arithmetic coding? (★★)

- What is the principle of run-length encoding? What is the scope of application? (★★)

- What is one-dimensional run-length encoding? What is two-dimensional run-length encoding? What is the difference between the two? (★★★)

- From what aspects can the necessity of data compression be explained? (★)

- What is the principle of Orthogonal Transform Coding? What are the properties?

- Are most video compression methods lossy or lossless? Why?

- How to measure the performance of an image encoding compression method?

- What are the advantages of hybrid encoding? (★)

- Given that the discrete Fourier transform and its fast algorithm (FFT) already exist, why were the discrete cosine transform (DCT) and its fast algorithm proposed? Why do many international video standards use the DCT as the basic compression algorithm for intra-frame coding?

- What is the main idea of DCT transform coding?

- What is the main process of DCT transform coding?

- From what aspects can the possibility of image data compression be explained? (★)

- Can data be compressed without redundancy? Why?

- There are many compression coding algorithms, why use hybrid compression coding? Please give an example.

- What is JPEG encoding?

- Briefly describe the compression process of JPEG, and explain what kind of redundancy is reduced in the relevant steps of compression?

- Based on the JPEG algorithm, explain why a mosaic (blocking) effect can appear when a JPEG image is displayed.

- What does the quantization table of JPEG do?

- What is the effect of zigzag rearrangement of DCT coefficients in JPEG algorithm?

- Why does JPEG need color space conversion?
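The Huffman coding steps asked about above (repeatedly merge the two least probable symbols, then assign 0 and 1 to the two branches) can be sketched as follows. The function name `huffman_code` and the gray-level counts are made up for illustration. Different tie-breaking choices give different code tables with the same average length.

```python
import heapq
from itertools import count

def huffman_code(freqs):
    """Build a Huffman code table {symbol: bitstring} from {symbol: frequency}."""
    tie = count()   # unique tie-breaker so the heap never compares dicts
    heap = [(w, next(tie), {sym: ""}) for sym, w in freqs.items()]
    heapq.heapify(heap)
    if len(heap) == 1:                       # degenerate case: a single symbol
        return {sym: "0" for sym in freqs}
    while len(heap) > 1:
        w1, _, low = heapq.heappop(heap)     # the two least probable groups
        w2, _, high = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in low.items()}
        merged.update({s: "1" + c for s, c in high.items()})
        heapq.heappush(heap, (w1 + w2, next(tie), merged))
    return heap[0][2]

freqs = {"a": 4, "b": 2, "c": 2, "d": 1, "e": 1}   # made-up gray-level counts
code = huffman_code(freqs)
total_bits = sum(freqs[s] * len(code[s]) for s in freqs)
print(code)
print("average length:", total_bits / sum(freqs.values()), "bits/symbol")
```

For these counts the average length is 2.2 bits per symbol, compared with 3 bits for a fixed-length code over five symbols. The source entropy is about 2.12 bits, and the gap comes from Huffman codewords having whole-bit lengths, which is part of the motivation for arithmetic coding.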

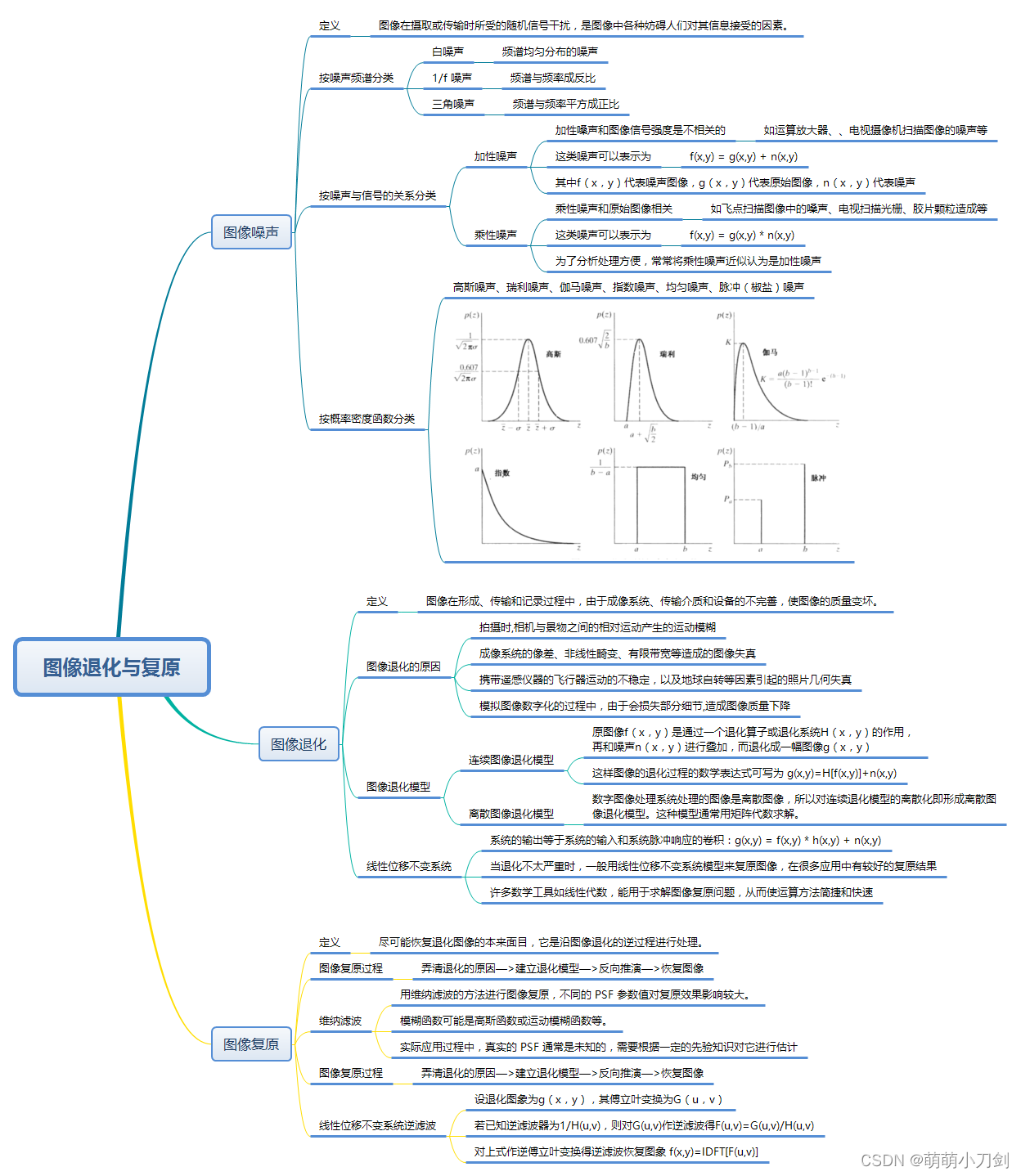

Knowledge point 13: image degradation, image restoration, image noise

- What is image degradation? What is Image Restoration? What is the image restoration process? (★★)

- What is an image degradation model?

- What are the causes of image degradation? (★)

- In the blind deconvolution method, how should an appropriate point spread function (PSF) be chosen?

- Using the method of Wiener filtering for image restoration, what influence does different PSF have on the restoration effect?

- What is a linear shift-invariant system? (★)

- What are the types of common image degradation models? (★★)

- When restoring with constrained least squares filtering, how do different noise intensities, search ranges of the Laplacian operator, and constraint operators affect the restoration result?

- What is the reason for adopting the linear shift-invariant system model?

- What is the principle of image restoration by inverse filtering in a linear shift-invariant system?

- What is image noise? (★★)

- How is image noise classified according to the relationship between noise and signal? What are the characteristics of each of them? (★★★)

- What types of image noise can be divided into according to the probability density function? (★★★)

Knowledge point 14: image edge, edge detection, edge tracking

- What are image edges? What are the characteristics?

- What is the principle of the Hough transform? What are its characteristics?

- What is the effect of the choice of Marr's operator σ on the template size and edge detection results?

- What is the principle of Canny edge detector?

- What is edge tracking? What are the two ways of linking edges?

- What is the theoretical basis of edge-based segmentation techniques that use operators?

- What is Hough Transform? Describe the principle of using Hough transform to detect straight lines.
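The voting idea behind Hough line detection can be sketched in a few lines: each edge point votes for every (ρ, θ) pair satisfying ρ = x·cos θ + y·sin θ, and collinear points pile their votes into one cell. The function name, the 1-degree θ step, the integer ρ bins, and the test points are all assumptions made for this illustration.

```python
import numpy as np

def hough_lines(points, max_rho, n_theta=180):
    """Vote in (rho, theta) space using rho = x*cos(theta) + y*sin(theta)."""
    thetas = np.deg2rad(np.arange(n_theta))            # 0..179 degrees, 1-degree steps
    acc = np.zeros((2 * max_rho + 1, n_theta), dtype=np.int64)
    for x, y in points:
        rhos = np.round(x * np.cos(thetas) + y * np.sin(thetas)).astype(int)
        acc[rhos + max_rho, np.arange(n_theta)] += 1   # one vote per theta
    return acc

# Ten edge points on the vertical line x = 5 (made-up data).
points = [(5, y) for y in range(10)]
acc = hough_lines(points, max_rho=15)

# The cell for rho = 5, theta = 0 collects a vote from every point.
print(acc[5 + 15, 0], acc.max())
```

Because of the coarse binning, a few neighboring cells can collect just as many votes, so practical detectors look for local maxima in the accumulator rather than a single global peak.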

Knowledge point 15: Morphological image processing

- What are open and close operations? What are the characteristics of each?

- What is the process of erosion operation?

- What is the process of dilation operation?

- What are structural elements and what principles should be considered when selecting them?

- What are typical geometric distortions?

- What are the general features of the details in the image? Where are the general details reflected in the image?

- If the luminance level values of any two pixels on the image are equal or the luminance level values of pixels at the same position at any two moments are equal, can it be explained that the pixels are related in the above two cases? Why?

- What are the main research contents of mathematical morphology?

- What are the characteristics of image processing based on mathematical morphology?
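The erosion, dilation, opening, and closing questions above can be tried on a tiny binary image. This sketch assumes a flat k × k square structuring element and background padding, and needs NumPy 1.20 or later; the function names and test images are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _windows(img, k):
    """All k x k neighbourhoods of a binary image, padded with background (0)."""
    padded = np.pad(np.asarray(img, dtype=np.uint8), k // 2, mode="constant")
    return sliding_window_view(padded, (k, k))

def erode(img, k=3):
    """Keep a pixel only if the whole k x k square fits inside the foreground."""
    return _windows(img, k).min(axis=(-2, -1))

def dilate(img, k=3):
    """Set a pixel if the k x k square touches the foreground anywhere."""
    return _windows(img, k).max(axis=(-2, -1))

def opening(img, k=3):
    """Erosion followed by dilation: removes small bright specks."""
    return dilate(erode(img, k), k)

def closing(img, k=3):
    """Dilation followed by erosion: fills small holes and gaps."""
    return erode(dilate(img, k), k)

block = np.zeros((9, 9), dtype=np.uint8)
block[2:7, 2:7] = 1                  # a 5 x 5 object

noisy = block.copy()
noisy[0, 8] = 1                      # isolated noise pixel
holed = block.copy()
holed[4, 4] = 0                      # one-pixel hole inside the object

print(erode(block).sum(), dilate(block).sum())   # object shrinks / grows
print(np.array_equal(opening(noisy), block))     # opening removes the speck
print(np.array_equal(closing(holed), block))     # closing fills the hole
```

The size and shape of the structuring element decide which details survive: anything smaller than the element is removed by opening or filled by closing.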

Knowledge point 16: image segmentation, image recognition

- What is image segmentation? What is its basic strategy?

- What are the commonly used image segmentation methods? What are their characteristics?

- What is the threshold segmentation technique? In what scenarios is it suitable for image segmentation?

- What is image recognition? How to classify?

- In bit-plane slicing, consider bit-plane extraction for 8-bit images:

(1) In general, what is the effect on the histogram of an image if the low-order bit planes are set to zero values?

(2) What will be the effect on the histogram if the high-order bit plane is set to zero value?
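The two bit-plane questions above can be explored with bitwise masks. This sketch uses a made-up test image containing every 8-bit gray level exactly once; clearing four low-order planes and one high-order plane is just one example of each case.

```python
import numpy as np

img = np.arange(256, dtype=np.uint8).reshape(16, 16)   # every gray level once

low_cleared = img & 0xF0     # zero the four low-order bit planes
high_cleared = img & 0x7F    # zero the most significant bit plane

# Low planes cleared: 256 levels collapse onto 16 multiples of 16, so the
# histogram becomes sparse and each surviving bin absorbs 16 original levels.
print(np.unique(low_cleared))

# High plane cleared: levels 128..255 fold onto 0..127, so the image darkens
# and the upper half of the histogram is empty.
hist = np.bincount(high_cleared.ravel(), minlength=256)
print(high_cleared.max(), hist[:4], hist[128:].sum())
```

Clearing low-order planes keeps the overall appearance but reduces the number of gray levels, while clearing the high-order plane destroys much of the visible structure.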

Knowledge point 17: Other topics

1. The following three images were blurred using square mean masks of size n = 23, 25, and 45 respectively. The vertical bars in the lower left corner of images (a) and (c) are blurred, but the separation between the bars is still clear. However, the vertical bars in (b) have merged into the rest of the image, even though the mask used to generate this image is much smaller than the one used for image (c). Please explain this phenomenon.

2. Try to analyze the quality problems of the following images, and propose corresponding measures

3. When inverse filtering, why can't full filtering be used when there is noise in the image? Try to explain the principle of inverse filtering, and give the correct processing method.

4. The picture below is a blurred two-dimensional projection of a three-dimensional rendering of a heart. It is known that the crosshair at the bottom right of each image is 3 pixels wide and 30 pixels long, and had a gray value of 255 before blurring. Describe a procedure that uses this information to obtain the blur function H(u,v).

5. The combination of high frequency enhancement and histogram equalization is an effective method to obtain edge sharpening and contrast enhancement. Does the sequence of the above two operations affect the result? Why?

6. An archeology professor was doing research on currency circulation in ancient Rome, and recently realized that 4 Roman coins were key to his research, and they were listed in the collection catalog of the British Museum in London. Unfortunately, when he got there, he was told that the coins had now been stolen, but fortunately some photos kept by the museum are also reliable for research. But the photograph of the coin is blurred, and the date and other small markings cannot be read. The cause of the blur is that the camera was out of focus when the photo was taken. As an image processing expert, you are asked to help decide whether computer processing can be used to restore the image, and to help the professor read the marks. And the original camera used to take this image is still in use, as are some other representative coins from the same period. Suggest a process for addressing this issue.

7. 1) Briefly describe the relationship between frequency domain spectrum images and image spatial features;

2) Figure 1 is a centered spectrum diagram, indicating which information of the original space image corresponds to the areas pointed by the two arrows;

3) Figure 2 is an intensity image of a frequency domain filter. Indicate whether it is a low-pass or a high-pass filter.

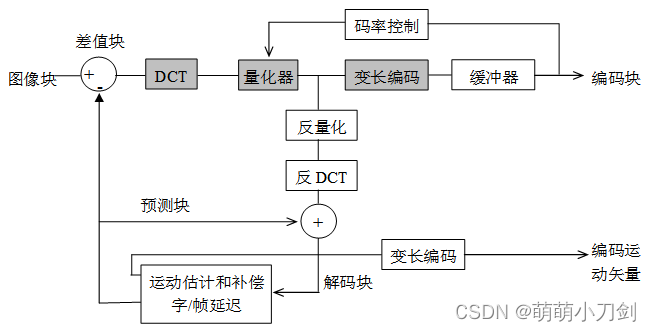

8. The figure below shows a block diagram of a basic MPEG video compression encoder with motion compensation. Based on this encoder, draw the block diagram of the corresponding decoder.

9. How many models are there for image reconstruction? What are the characteristics of each?

10. What are the properties of the affine transformation?

11. What are the 4-neighborhood, 4-adjacency, 4-connection, and 4-connectivity?

12. What are neighborhood and adjacency, and how are they related?

13. Based on what you have learned, judge whether each of the following statements is right or wrong, and briefly explain why.

- Any color model can accurately describe all colors

- The distance between (0,0) and (3,4) is 5

- After learning DIP, I can start a business that is able to undertake any image enhancement/restoration job with 100% customer satisfaction

- In class, we learned about spatial domain image enhancement and frequency domain image enhancement. Therefore, for any practical application, you can choose to enhance the image in either the spatial domain or the frequency domain, and the effect is the same.

- In MATLAB, imread reads a JPG photo file and returns a 2D matrix of size M * N
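The distance statement in the list above depends on which metric is meant. As a minimal sketch, assuming the three pixel distance measures usually covered in a DIP course (Euclidean, city-block D4, and chessboard D8), the same pair of points gives three different values. The function names are illustrative only:

```python
import math

def euclidean(p, q):
    """Straight-line (Euclidean) distance between two pixels."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def city_block(p, q):
    """D4 (city-block) distance: sum of absolute coordinate differences."""
    return abs(p[0] - q[0]) + abs(p[1] - q[1])

def chessboard(p, q):
    """D8 (chessboard) distance: largest absolute coordinate difference."""
    return max(abs(p[0] - q[0]), abs(p[1] - q[1]))

p, q = (0, 0), (3, 4)
print(euclidean(p, q))   # 5.0
print(city_block(p, q))  # 7
print(chessboard(p, q))  # 4
```

So "the distance is 5" holds only if the Euclidean distance is assumed.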

14. What are the characteristics of frequency domain processing of image information?

15. You took a lot of photos when the spring flowers were in full bloom. Answer the question for each of the following situations.

- At one wonderful moment your hand shook, so the photo is blurred, and it is the only photo you took. Is there a way to process the photo?

- In one photo, the exposure was reduced by an accidental touch, so the overall picture is too dark. Is there a way to process the photo?

- Some photos were taken through a window screen, and the screen pattern is visible in the photo. Is there a way to process the photo?

Topic 01: Various frequency domain filters

- Compare the characteristics of various low-pass and high-pass filters? (★★★)

- When the cut-off frequency of the ideal low-pass filter is not chosen properly, a strong ringing effect appears. Explain the cause of the ringing effect. (★★★)

- What are the order-statistic (rank) filters for digital images in the spatial domain? What are the characteristics of each?

- How does the ringing phenomenon appear on the image? How did it come about? How to remove it? Why can it be removed? (★★★)

- What is the principle of homomorphic processing? What is the principle of homomorphic sharpening method?

- What is the basic principle (basic steps) of homomorphic filtering? (★★★)

- What is the purpose of homomorphic filtering? (★★)

- Images obtained at an astronomical institute show bright spots, corresponding to stars, that are quite far apart. Superimposed illumination caused by atmospheric scattering often makes these bright spots difficult to see. If this type of image is modeled as the product of a constant-brightness background and a set of impulses, design an enhancement method based on homomorphic filtering to extract the bright spots corresponding to the stars.

- Which methods can be used to deal with the following practical applications? (1) During OCR, the text in the input image has broken strokes; (2) in a portrait photo, acne or wrinkles are visible on the face; (3) in a satellite image, periodic scan lines are obvious.

- If the transfer function of a smoothing filter in the frequency domain is H(u,v), design the transfer function of a sharpening filter. (★★)
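For the last question in the list above, one common construction (not necessarily the answer the exam expects) takes the sharpening filter as the complement of the smoothing filter, H_hp(u,v) = 1 - H(u,v). The sketch below assumes a centered Gaussian low-pass filter as the smoothing filter; the helper names and the cut-off parameter `d0` are illustrative assumptions:

```python
import numpy as np

def gaussian_lowpass(shape, d0):
    """Centered Gaussian low-pass transfer function H(u,v) (assumed smoothing filter)."""
    rows, cols = shape
    u = np.arange(rows) - rows // 2
    v = np.arange(cols) - cols // 2
    V, U = np.meshgrid(v, u)          # both grids have shape (rows, cols)
    d2 = U ** 2 + V ** 2              # squared distance from the spectrum center
    return np.exp(-d2 / (2.0 * d0 ** 2))

def sharpen_from_smooth(h_lp):
    """Complementary high-pass transfer function: 1 - H(u,v)."""
    return 1.0 - h_lp

def apply_filter(image, h):
    """Multiply the centered spectrum by h and transform back."""
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    return np.real(np.fft.ifft2(np.fft.ifftshift(spectrum * h)))

h_lp = gaussian_lowpass((8, 8), d0=2.0)
h_hp = sharpen_from_smooth(h_lp)
flat = np.full((8, 8), 7.0)           # a constant image has no detail
print(np.abs(apply_filter(flat, h_hp)).max())
```

Because the complementary filter is zero at the center of the spectrum, it removes the average gray level, so a constant image comes out (numerically) as zero and only detail survives.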

Topic 02: Various edge detection operators

- The second-order differential Laplacian operator is sensitive to noise and amplifies it. Real edges are noisy, so false edges are produced. What can be done?

- What are the three major criteria of the Canny operator?

- Briefly describe the characteristics of several commonly used edge detection operators? (★★)

- What are the similarities and differences between the gradient operator and the Laplacian operator to detect edges? (★★)

- Identify which operator corresponds to each of the following gradient algorithms.

- What is the difference between Laplacian operator and Laplacian enhanced operator? (★★)

- What are the characteristics of the first-order derivative and the second-order derivative at an edge point? What are they used for? (★★★)

- What are the similarities and differences between first-order and second-order differential operators when extracting detail information from an image? (★★)
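Several questions in this topic turn on how the first and second derivatives behave at an edge. As a minimal sketch, assuming a made-up 1D gray-level profile across a ramp edge and simple forward differences, the two derivatives can be compared directly:

```python
import numpy as np

# Hypothetical gray-level profile: flat dark region, ramp, flat bright region.
profile = np.array([10, 10, 10, 20, 30, 40, 50, 50, 50], dtype=float)

# First difference f(x+1) - f(x): nonzero along the whole ramp.
first = np.diff(profile)

# Second difference f(x+2) - 2 f(x+1) + f(x): nonzero only where the ramp
# starts (positive) and ends (negative), zero in between.
second = np.diff(profile, n=2)

print(first)
print(second)
```

The first difference gives a thick response over the whole transition, while the second difference gives a positive and a negative response at the two ends of the ramp with a sign change between them.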

Topic 03: The difference between A and B

- What is the difference between image processing and image analysis?

- What is the difference between image enhancement and image restoration? What's the connection? ( ★★★ )

- What is the difference between image transformation and image geometric transformation? ( ★★ )

- What is the difference between image sharpening and image smoothing? What's the connection? ( ★★★ )

- What is the difference between image segmentation and image classification?

- What is the difference between cone cells and rod cells? (Try to answer from three aspects: quantity, distribution location, and characteristics) ( ★★★ )

- What is the difference between sampling and quantization? ( ★★★ )

- What is the difference between equal interval quantization and non-equal interval quantization?

- What is the difference between point operations and spatial operations? ( ★★ )

- What is the difference between spatial resolution and grayscale resolution? ( ★★ )

- What is the difference between histogram equalization and histogram specification? ( ★★ )

- What is the difference between objective fidelity criterion and subjective fidelity criterion? ( ★★ )

- What is the difference between region segmentation and region growing?

- What is the difference between digital image processing and analog image processing? ( ★★ )

- What is the difference between spatial domain enhancement and frequency domain enhancement? ( ★★★ )

- What is the difference between frequency domain smoothing and frequency domain sharpening? ( ★★★ )

- What is the difference between mean filter and median filter? ( ★★★ )

- What is the difference between pseudo-color image enhancement and false-color image enhancement? What's the connection? ( ★★★ )

- What is the difference between color image enhancement and grayscale image enhancement?

- What is the difference between a grayscale image and a color image?

- What is the difference between one-dimensional run-length encoding and two-dimensional run-length encoding? ( ★★ )

- What is the difference between additive noise and multiplicative noise? What's the connection? ( ★★ )

- What is the difference between opening and closing operations? ( ★★ )

- What is the difference between the first derivative and the second derivative of the edge point? ( ★★ )

- What is the difference between Laplacian operator and Laplacian enhanced operator?

- What is the difference between a binary image, a grayscale image, and a color image?

- What is the difference between traditional orthogonal transform coding and wavelet transform coding?

- What is the difference between discrete Walsh transform and Hadamard transform?

- Averaging M images of the same scene can reduce noise, and smoothing with a template can also reduce noise. Compare the noise reduction effects of these two methods.

- What is the difference between an edge detection template, a sharpening template, and a smoothing template? ( ★★ )
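For the mean filter versus median filter question in the list above, a small experiment can help. The sketch below assumes a made-up 1D signal with a single impulse (salt noise) and a window of 3; `sliding_filter` is an illustrative helper, not a library function:

```python
import statistics

def sliding_filter(signal, reducer, size=3):
    """Apply `reducer` to every full window; border samples are left unchanged."""
    half = size // 2
    out = list(signal)
    for i in range(half, len(signal) - half):
        out[i] = reducer(signal[i - half:i + half + 1])
    return out

# Constant signal with one impulse in the middle.
signal = [10, 10, 10, 255, 10, 10, 10]

mean_out = sliding_filter(signal, statistics.mean)
median_out = sliding_filter(signal, statistics.median)

print(mean_out)    # the impulse is lowered but smeared over three samples
print(median_out)  # the impulse is removed completely
```

In this example the mean filter spreads the impulse into its neighbors, while the median filter discards it and leaves the rest of the signal untouched.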