Particle swarm optimization

1 Algorithm introduction

The idea of particle swarm optimization (PSO) comes from studies of the foraging behavior of bird flocks and fish schools. It simulates a flock of birds searching for food, in which the birds reach the goal through collective cooperation.

The particle swarm optimization algorithm makes the following assumptions:

- Each candidate solution to the problem is imagined as a bird, called a "particle". All particles search in a D-dimensional space.

- Each particle has a fitness value, computed by a fitness function, that judges whether its current position is good or bad.

- Each particle has a memory that records the best position it has found so far.

- Each particle also has a velocity that determines the distance and direction of its flight. The velocity is adjusted dynamically according to the particle's own flying experience and that of its companions.
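The four assumptions map onto a small data structure. Below is a minimal sketch, assuming a minimization problem and using a hypothetical sphere function as the fitness (for the TSP the fitness would be the tour length). The class and function names and the 10% initial-velocity range are illustrative choices, not taken from the original code.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, List


def sphere(x: List[float]) -> float:
    """Hypothetical fitness function: sum of squares (lower is better)."""
    return sum(v * v for v in x)


@dataclass
class Particle:
    position: List[float]   # current position in the D-dimensional search space
    velocity: List[float]   # flight distance and direction along each dimension
    best_position: List[float] = field(default_factory=list)  # memory: best position found so far
    best_fitness: float = float("inf")                          # fitness at best_position

    def evaluate(self, fitness: Callable[[List[float]], float]) -> float:
        """Score the current position and refresh the personal-best memory if it improved."""
        value = fitness(self.position)
        if value < self.best_fitness:
            self.best_fitness = value
            self.best_position = list(self.position)
        return value


def random_particle(dim: int, low: float, high: float, rng: random.Random) -> Particle:
    """Scatter a particle uniformly in [low, high]^dim with a small random velocity."""
    span = high - low
    position = [rng.uniform(low, high) for _ in range(dim)]
    velocity = [rng.uniform(-0.1 * span, 0.1 * span) for _ in range(dim)]
    return Particle(position, velocity)
```

Calling `evaluate` after every move keeps the memory up to date: a worse position is scored but does not overwrite `best_position`.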

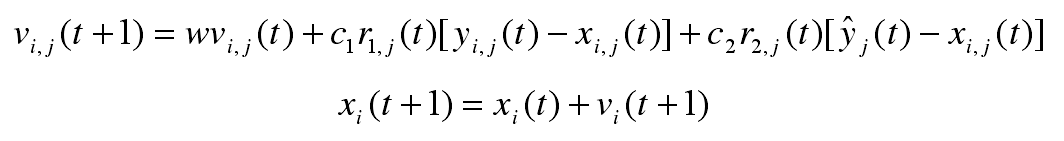

The velocity and position update rule of traditional PSO works as follows: each bird determines its velocity (magnitude and direction) for the next moment from its own velocity inertia, its own best position, and the group's best position, and then updates its position using that velocity.
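For reference, the commonly used inertia-weight form of this rule is written below. The notation is ours, not necessarily the author's: $x_{id}^{t}$ and $v_{id}^{t}$ are dimension $d$ of particle $i$'s position and velocity at step $t$, $p_{id}$ is its own best position, $p_{gd}$ is the group's best position, $w$ is the inertia weight, $c_1$ and $c_2$ are acceleration coefficients, and $r_1, r_2$ are random numbers drawn uniformly from $[0, 1]$.

$$
v_{id}^{t+1} = w\, v_{id}^{t} + c_1 r_1 \left(p_{id} - x_{id}^{t}\right) + c_2 r_2 \left(p_{gd} - x_{id}^{t}\right)
$$

$$
x_{id}^{t+1} = x_{id}^{t} + v_{id}^{t+1}
$$

The three velocity terms correspond to the bird's inertia, its own experience, and the group's experience.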

When applying PSO to the TSP, some modifications are needed: the city sequence is converted into a kind of coordinate (the particle's position), and velocity, acceleration, and related quantities are redefined on that basis. These modifications are described in detail in Bian Feng's two articles, "Research and Application of Particle Swarm Optimization Algorithm in TSP" and "Improved QPSO Algorithm for Solving TSP". The most important concept is the exchange order (swap sequence), which plays the role of velocity in traditional PSO. With these modifications, PSO can be applied to the TSP.
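A minimal sketch of the swap-sequence idea, assuming the formulation commonly described in the literature: a swap operator SO(i, j) exchanges the cities at positions i and j, the "difference" of two tours is a swap sequence that turns one into the other, and the velocity update keeps each swap toward the personal best with probability `alpha` and each swap toward the global best with probability `beta`. The function names and `alpha`/`beta` are our own notation and not necessarily Bian Feng's.

```python
import random
from typing import List, Tuple

Tour = List[int]           # a tour is a permutation of city indices (the particle's position)
SwapOp = Tuple[int, int]   # swap operator SO(i, j): exchange the cities at positions i and j


def apply_swaps(tour: Tour, swaps: List[SwapOp]) -> Tour:
    """Move a particle: apply a swap sequence (velocity) to a tour, returning a new tour."""
    result = list(tour)
    for i, j in swaps:
        result[i], result[j] = result[j], result[i]
    return result


def swap_sequence(source: Tour, target: Tour) -> List[SwapOp]:
    """The 'difference' target - source: a swap sequence that turns source into target."""
    current = list(source)
    where = {city: pos for pos, city in enumerate(current)}  # city -> position in current
    swaps: List[SwapOp] = []
    for i, city in enumerate(target):
        if current[i] != city:
            j = where[city]
            swaps.append((i, j))
            where[current[i]], where[city] = j, i  # keep the position index in sync
            current[i], current[j] = current[j], current[i]
    return swaps


def update_particle(position: Tour, velocity: List[SwapOp], personal_best: Tour,
                    global_best: Tour, alpha: float, beta: float,
                    rng: random.Random) -> Tuple[Tour, List[SwapOp]]:
    """One step: v' = v + alpha(pbest - x) + beta(gbest - x), then x' = x + v'."""
    new_velocity = list(velocity)
    # keep each swap toward the particle's own best with probability alpha
    new_velocity += [s for s in swap_sequence(position, personal_best) if rng.random() < alpha]
    # keep each swap toward the swarm's best with probability beta
    new_velocity += [s for s in swap_sequence(position, global_best) if rng.random() < beta]
    return apply_swaps(position, new_velocity), new_velocity
```

With `alpha = 1`, `beta = 0` and an empty velocity, the particle jumps exactly to its personal best; smaller probabilities move it only part of the way. Because the velocity only grows in this sketch, practical versions usually bound its length or drop the old velocity term; that choice is left open here.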

2 Experimental code

3 Experimental results

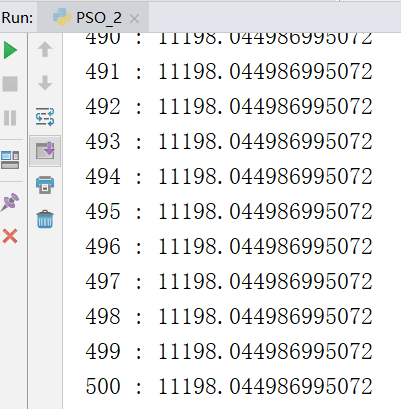

The figure below shows the result after 500 iterations; the best tour length found is 11198.

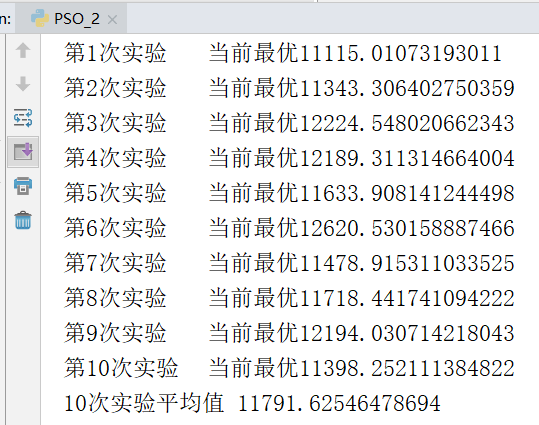

To reduce the influence of chance in any single run, the experiment below was repeated 10 times and the results were averaged.

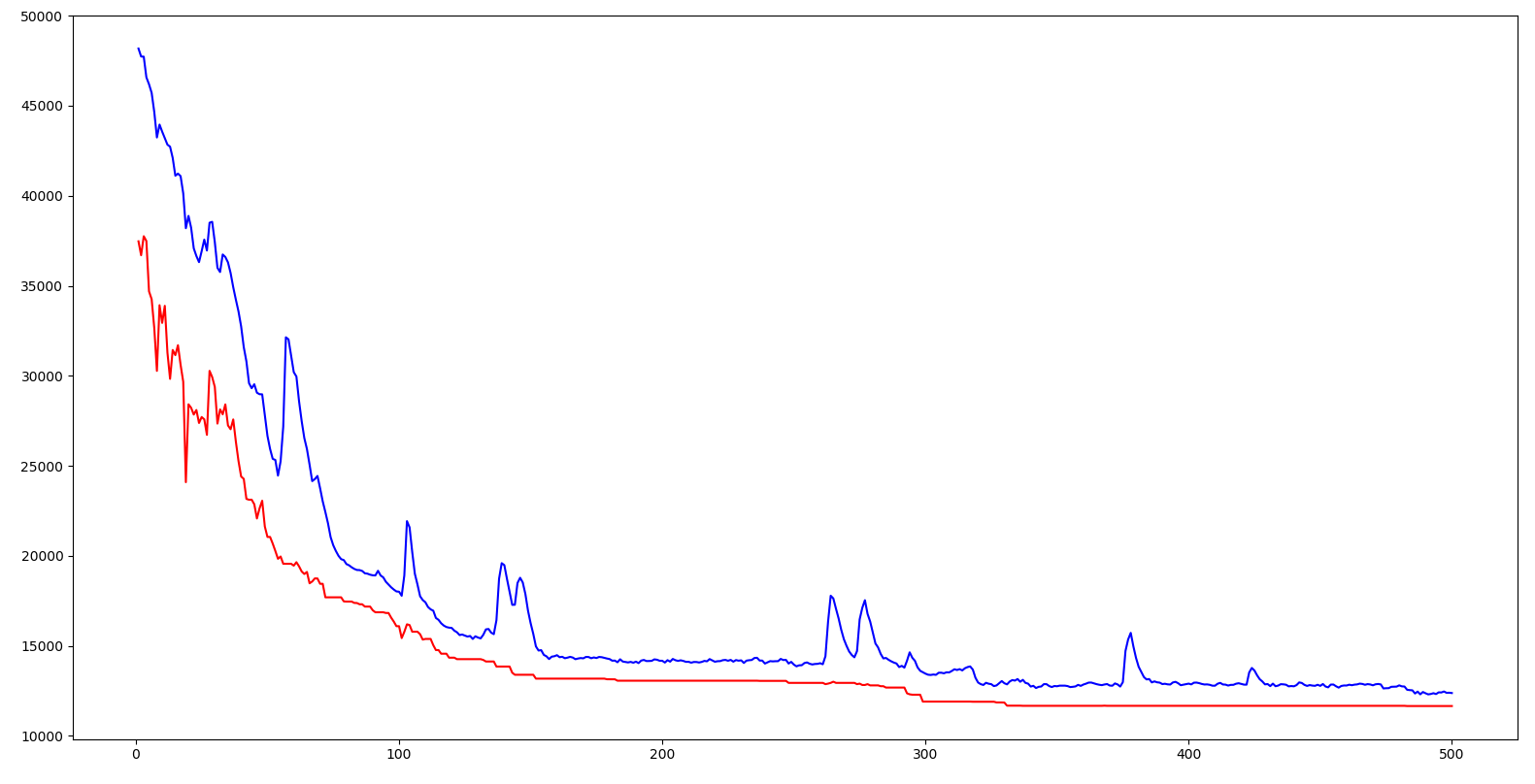

In the figure above, the horizontal axis is the iteration number and the vertical axis is the tour distance. The red curve is the bird closest to the food in each iteration (that is, the best solution of that iteration; the food's position is the coordinate given by the optimal city sequence), and the blue curve is the average distance of all birds in each iteration.

Unlike in the genetic algorithm, the best solution fluctuates at first instead of decreasing steadily (the genetic algorithm's curve keeps decreasing because the best individual is passed directly to the next generation each time). The reason is that at the very beginning all the birds are scattered randomly and are roughly the same distance from the food, so even the bird closest to the food provides little useful information. The bird in the best position therefore fluctuates early on, but later, once the food's position becomes clearer, the fluctuation disappears.

Overall, both the best distance of each iteration and the average distance of all birds trend downward, which means the whole flock is moving toward the food.
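A small sketch of how the plotted quantities relate, assuming each iteration produces a list with every bird's tour length (all function names are hypothetical). The red curve takes the minimum within each iteration rather than the best-so-far value, which is why it can rise; a best-so-far curve, included for contrast with the genetic algorithm's elitism, never rises. Averaging repeated runs is done point by point.

```python
from itertools import accumulate
from statistics import mean
from typing import List, Tuple


def iteration_stats(lengths: List[float]) -> Tuple[float, float]:
    """One point of each curve: best tour in this iteration (red) and swarm average (blue)."""
    return min(lengths), mean(lengths)


def running_best(curve: List[float]) -> List[float]:
    """Best-so-far curve: never increases, like a GA that passes its elite on unchanged."""
    return list(accumulate(curve, min))


def average_curves(runs: List[List[float]]) -> List[float]:
    """Average the curves of repeated runs (e.g. 10 runs) point by point."""
    if len({len(run) for run in runs}) != 1:
        raise ValueError("all runs must have the same number of iterations")
    return [mean(values) for values in zip(*runs)]
```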

4 Experimental summary

1. After reading Bian Feng's two articles and using his method to apply PSO to the TSP, I realized that an algorithm is not limited to one specific type of problem: through combination, abstraction, and analogy, it can be applied to new problems. This kind of abstract, analogical thinking surprised me, and it is something I need to study hard.

2. Introducing the mutation operation from the genetic algorithm

When I first finished the PSO implementation, testing showed that it easily got stuck in local optima far from the optimal solution. Following Bian Feng's "Improved QPSO Algorithm for Solving TSP", I introduced the corresponding mutation operation to solve this problem.

The greedy inversion mutation he proposed is interesting. It picks a city, finds the city nearest to it, and then reverses the part of the city sequence between the two cities. This improves the connection between the two selected cities while changing the order of the other cities as little as possible.
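One possible reading of this mutation is sketched below, assuming city coordinates in the plane and Euclidean distances: the segment is reversed so that the nearest city ends up directly next to the chosen one (a 2-opt-like move). Whether the mutant is always accepted or kept only when it shortens the tour is an open detail, so `mutate_if_shorter` is a hypothetical acceptance rule; all names are illustrative and not taken from Bian Feng's article or the original code.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def tour_length(tour: Sequence[int], cities: Sequence[Point]) -> float:
    """Length of the closed tour, including the leg back to the starting city."""
    n = len(tour)
    return sum(math.dist(cities[tour[k]], cities[tour[(k + 1) % n]]) for k in range(n))


def greedy_inversion(tour: List[int], cities: Sequence[Point], rng: random.Random) -> List[int]:
    """Pick a random city c1, find its nearest city c2, and reverse the segment so c2 sits next to c1."""
    i = rng.randrange(len(tour))
    c1 = tour[i]
    c2 = min((c for c in tour if c != c1), key=lambda c: math.dist(cities[c1], cities[c]))
    j = tour.index(c2)
    result = list(tour)
    if j > i:
        result[i + 1:j + 1] = reversed(result[i + 1:j + 1])  # c2 moves to position i + 1
    else:
        result[j:i] = reversed(result[j:i])  # c2 moves to position i - 1
    return result


def mutate_if_shorter(tour: List[int], cities: Sequence[Point], rng: random.Random) -> List[int]:
    """Hypothetical acceptance rule: keep the mutant only if it shortens the tour."""
    candidate = greedy_inversion(tour, cities, rng)
    return candidate if tour_length(candidate, cities) < tour_length(tour, cities) else tour
```

The chosen city itself never moves; only the cities between it and its nearest neighbor change order, which is why the rest of the tour is mostly preserved.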

I don't know how the author came up with this mutation. The first thing I thought of after reading the article was chromosomal inversion, in which a segment of a chromosome is rotated 180 degrees and reinserted at its original position. Chromosomal inversion is very similar to the author's greedy inversion mutation. Perhaps the author also drew on chromosomal variation in nature, which once again made me marvel at the wisdom of nature.

Traditional PSO has no mutation operation; this one is borrowed from the genetic algorithm. This shows that absorbing the strengths of other algorithms can improve an algorithm's own performance.

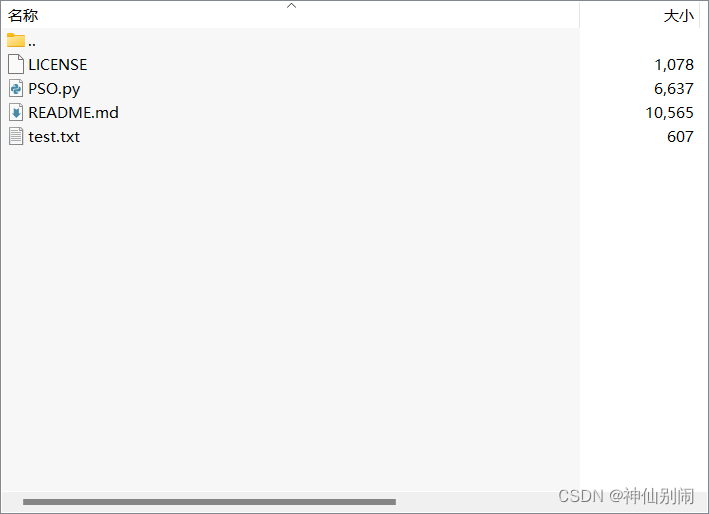

Resources

Resource download (9.16 KB): https://download.csdn.net/download/s1t16/87547847
