Table of Contents

- 1. Purpose of the experiment

- 2. Experimental environment

- 3. Experimental content

- (1) Write programs that implement the following functions, and complete the same tasks with the Shell commands provided by Hadoop:

- 1. Upload any text file to HDFS

- 2. Download the specified file from HDFS

- 3. Output the content of the specified file in HDFS to the terminal

- 4. Display the read and write permissions, size, creation time, path and other information of the specified file in HDFS

- 5. Given a directory in HDFS, output the read and write permissions, size, creation time and other information of all files in the directory

- 6. Provide a path to a file in HDFS, and create and delete the file

- 7. Provide the path of an HDFS directory, and create and delete the directory

- 8. Add content to the file specified in HDFS, and add the content specified by the user to the beginning or end of the original file

- 9. Delete the specified file in HDFS

- 10. In HDFS, move files from source path to destination path

- (2) Implement a class "MyFSDataInputStream"

- (3) Consult the Java documentation or other materials, then write a program that outputs the text of a specified HDFS file to the terminal

- 4. Reflections

1. Purpose of the experiment

(1) Understand the role of HDFS in the Hadoop architecture.

(2) Become proficient with the Shell commands commonly used for HDFS operations.

(3) Become familiar with the Java APIs commonly used for HDFS operations.

2. Experimental environment

Windows operating system

VMware Workstation Pro 15.5

Xshell 7 (remote terminal tool)

Xftp 7 (file transfer tool)

CentOS 7.5

3. Experimental content

(1) Write programs that implement the following functions, and complete the same tasks with the Shell commands provided by Hadoop:

1. Upload any text file to HDFS

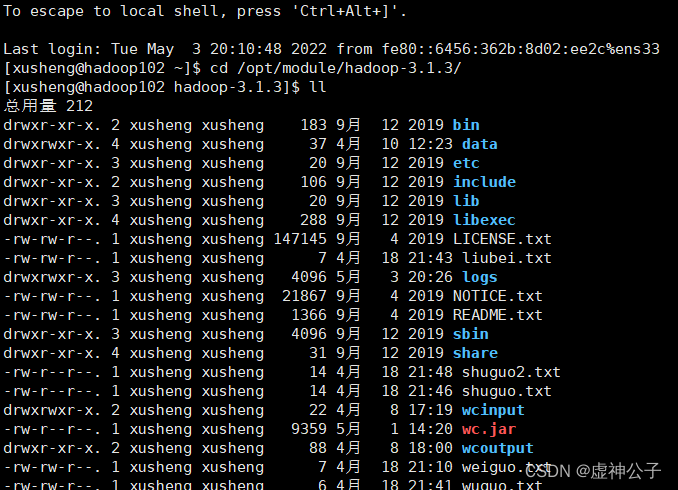

(1) Upload any text file to HDFS. If the specified file already exists in HDFS, the user can choose whether to append to the end of the original file or to overwrite it.

Shell command: to check whether the file exists, use the following command:

$ cd /opt/module/hadoop-3.1.3/

$ ./bin/hdfs dfs -test -e weiguo.txt

This command prints nothing. To see the result, check its exit status (0 means the path exists, a non-zero value means it does not):

$ echo $?

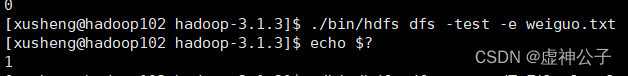

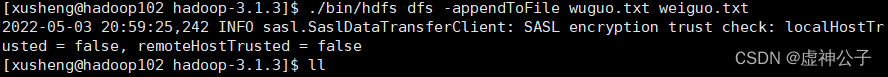
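The same existence check can also be run from Java by launching the command and reading its exit status, which is the value `echo $?` prints. Below is a minimal sketch. It assumes the `hdfs` launcher is on the `PATH`. The class and method names (`ExitStatusSketch`, `exitCode`) are hypothetical, and this is not part of the lab's own code.

```java
import java.io.IOException;
import java.io.UncheckedIOException;

public class ExitStatusSketch {

    /** Runs a command and returns its exit status, i.e. what `echo $?` would print. */
    public static int exitCode(String... command) {
        try {
            Process process = new ProcessBuilder(command)
                    .inheritIO()              // let the command's own output reach this terminal
                    .start();
            return process.waitFor();         // block until the command finishes
        } catch (IOException e) {
            throw new UncheckedIOException(e); // e.g. the executable is not on the PATH
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for " + String.join(" ", command), e);
        }
    }

    public static void main(String[] args) {
        // Assumption: hdfs is on the PATH; "-test -e" exits with 0 when the path exists
        int status = exitCode("hdfs", "dfs", "-test", "-e", "weiguo.txt");
        System.out.println(status == 0 ? "weiguo.txt exists" : "weiguo.txt does not exist");
    }
}
```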

If the file already exists, the user can choose to append to the end of the original file or to overwrite it:

$ ./bin/hdfs dfs -appendToFile wuguo.txt weiguo.txt # append to the end of the original file

$ ./bin/hdfs dfs -copyFromLocal -f wuguo.txt weiguo.txt # overwrite the original file

code:

```java
package com.xusheng.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.FileInputStream;
import java.io.IOException;

public class HDFSApi {

    /**
     * Checks whether a path exists
     */
    public static boolean test(Configuration conf, String path) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        return fs.exists(new Path(path));
    }

    /**
     * Copies a local file to the given HDFS path,
     * overwriting it if the path already exists
     */
    public static void copyFromLocalFile(Configuration conf, String localFilePath, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path localPath = new Path(localFilePath);
        Path remotePath = new Path(remoteFilePath);
        /* First argument: delete the source file? Second argument: overwrite the destination? */
        fs.copyFromLocalFile(false, true, localPath, remotePath);
        fs.close();
    }

    /**
     * Appends the contents of a local file to an HDFS file
     */
    public static void appendToFile(Configuration conf, String localFilePath, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        /* Input stream over the local file */
        FileInputStream in = new FileInputStream(localFilePath);
        /* Output stream whose writes are appended to the end of the HDFS file */
        FSDataOutputStream out = fs.append(remotePath);
        /* Copy the content in 1 KB chunks */
        byte[] data = new byte[1024];
        int read = -1;
        while ((read = in.read(data)) > 0) {
            out.write(data, 0, read);
        }
        out.close();
        in.close();
        fs.close();
    }

    /**
     * Main method
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        // "fs.defaultFS" replaces the deprecated "fs.default.name"; the URI must not contain a leading space
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String localFilePath = "/opt/module/hadoop-3.1.3/weiguo.txt"; // local path
        String remoteFilePath = "/user/xusheng/weiguo.txt";           // HDFS path
        String choice = "append";        // if the file exists, append to its end
        // String choice = "overwrite";  // if the file exists, overwrite it

        try {
            /* Check whether the file exists */
            boolean fileExists = false;
            if (HDFSApi.test(conf, remoteFilePath)) {
                fileExists = true;
                System.out.println(remoteFilePath + " already exists.");
            } else {
                System.out.println(remoteFilePath + " does not exist.");
            }
            /* Act on the result */
            if (!fileExists) {
                // File does not exist: upload it
                HDFSApi.copyFromLocalFile(conf, localFilePath, remoteFilePath);
                System.out.println(localFilePath + " uploaded to " + remoteFilePath);
            } else if (choice.equals("overwrite")) {
                // Overwrite the existing file
                HDFSApi.copyFromLocalFile(conf, localFilePath, remoteFilePath);
                System.out.println(localFilePath + " overwrote " + remoteFilePath);
            } else if (choice.equals("append")) {
                // Append to the existing file
                HDFSApi.appendToFile(conf, localFilePath, remoteFilePath);
                System.out.println(localFilePath + " appended to " + remoteFilePath);
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

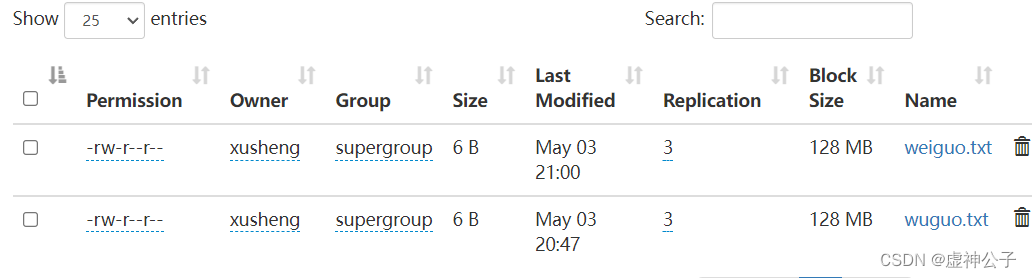

result:

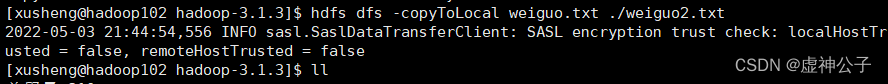
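The read/write loop inside `appendToFile` is an ordinary buffered stream copy and does not depend on HDFS. The sketch below pulls it out so it can be tried against in-memory streams. `StreamCopySketch` is a hypothetical name. In the program above, the output stream would be the `FSDataOutputStream` returned by `fs.append(...)`.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

public class StreamCopySketch {

    /** Copies everything from in to out using a 1 KB buffer and returns the number of bytes copied. */
    public static long copy(InputStream in, OutputStream out) {
        byte[] buffer = new byte[1024];
        long total = 0;
        int read;
        try {
            // read() returns -1 at end of stream; otherwise at least one byte for a non-empty buffer
            while ((read = in.read(buffer)) != -1) {
                out.write(buffer, 0, read);
                total += read;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }

    public static void main(String[] args) {
        // Simulate an existing file, then append the "local file" content to it
        ByteArrayOutputStream target = new ByteArrayOutputStream();
        byte[] original = "old line\n".getBytes(StandardCharsets.UTF_8);
        target.write(original, 0, original.length);
        InputStream local = new ByteArrayInputStream("new line\n".getBytes(StandardCharsets.UTF_8));
        long copied = copy(local, target);
        System.out.println(copied + " bytes appended");
        System.out.print(new String(target.toByteArray(), StandardCharsets.UTF_8));
    }
}
```

The 3000-byte case in a quick check is larger than the buffer, so it exercises the loop more than once.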

2. Download the specified file from HDFS

(2) Download the specified file from HDFS. If a local file with the same name already exists, the downloaded file is renamed automatically.

Shell command (the test checks /home/hadoop/weiguo.txt, so run it from /home/hadoop so that ./ refers to the same directory):

$ if hdfs dfs -test -e file:///home/hadoop/weiguo.txt; then hdfs dfs -copyToLocal weiguo.txt ./weiguo2.txt; else hdfs dfs -copyToLocal weiguo.txt ./weiguo.txt; fi

code:

```java
package com.xusheng.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;

public class HDFSApi2 {

    /**
     * Downloads a file to the local file system.
     * If the local path already exists, the file is renamed automatically.
     */
    public static void copyToLocal(Configuration conf, String remoteFilePath, String localFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        File f = new File(localFilePath);
        /* If the name is taken, rename automatically by appending _0, _1, ... */
        if (f.exists()) {
            System.out.println(localFilePath + " already exists.");
            int i = 0;
            while (true) {
                f = new File(localFilePath + "_" + i);
                if (!f.exists()) {
                    localFilePath = localFilePath + "_" + i;
                    break;
                }
                i++; // try the next suffix; without this the loop never ends when _0 is taken
            }
            System.out.println("Renamed to: " + localFilePath);
        }
        // Download the file
        Path localPath = new Path(localFilePath);
        fs.copyToLocalFile(remotePath, localPath);
        fs.close();
    }

    /**
     * Main method
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String localFilePath = "/home/hadoop/weiguo.txt";   // local path
        String remoteFilePath = "/user/xusheng/weiguo.txt"; // HDFS path
        try {
            HDFSApi2.copyToLocal(conf, remoteFilePath, localFilePath);
            System.out.println("Download complete");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

result:

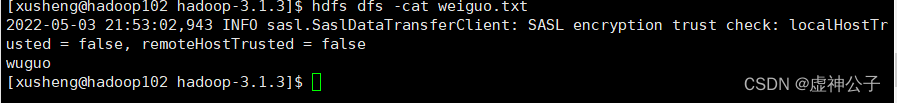

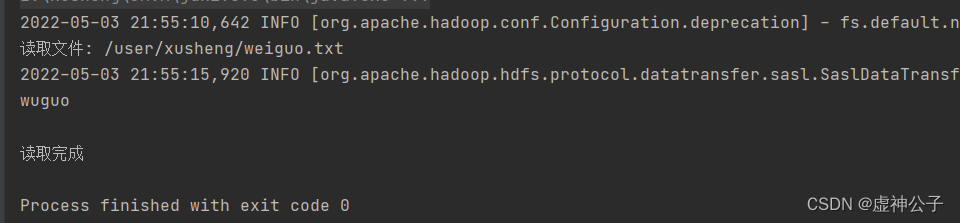
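The renaming loop in `copyToLocal` has to advance the suffix on every pass. Otherwise it never terminates once `weiguo.txt_0` exists. The sketch below moves the search into a pure helper that takes the existence check as a parameter, so the logic can be tested without a cluster or real files. `UniqueNameSketch` and `nextFreeName` are hypothetical names.

```java
import java.io.File;
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;
import java.util.function.Predicate;

public class UniqueNameSketch {

    /**
     * Returns base if it is free, otherwise the first free name among base_0, base_1, ...
     * The exists predicate is injected so the logic can be tested without touching the disk.
     */
    public static String nextFreeName(String base, Predicate<String> exists) {
        if (!exists.test(base)) {
            return base;
        }
        for (int i = 0; ; i++) {            // the suffix advances on every pass
            String candidate = base + "_" + i;
            if (!exists.test(candidate)) {
                return candidate;
            }
        }
    }

    public static void main(String[] args) {
        // Against the real local file system
        System.out.println(nextFreeName("/home/hadoop/weiguo.txt", p -> new File(p).exists()));
        // Against a fixed set of "existing" names
        Set<String> taken = new HashSet<>(Arrays.asList("a.txt", "a.txt_0", "a.txt_1"));
        System.out.println(nextFreeName("a.txt", taken::contains)); // a.txt_2
    }
}
```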

3. Output the content of the specified file in HDFS to the terminal

(3) Output the content of the specified file in HDFS to the terminal;

Shell command:

$ hdfs dfs -cat weiguo.txt

code:

```java
package com.xusheng.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;
import java.nio.charset.StandardCharsets;

public class HDFSApi3 {

    /**
     * Prints the contents of an HDFS file
     */
    public static void cat(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        FSDataInputStream in = fs.open(remotePath);
        // Decode as UTF-8 explicitly so non-ASCII text is not garbled by the platform default charset
        BufferedReader d = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8));
        String line = null;
        while ((line = d.readLine()) != null) {
            System.out.println(line);
        }
        d.close();
        in.close();
        fs.close();
    }

    /**
     * Main method
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String remoteFilePath = "/user/xusheng/weiguo.txt"; // HDFS path
        try {
            System.out.println("Reading file: " + remoteFilePath);
            HDFSApi3.cat(conf, remoteFilePath);
            System.out.println("\nDone reading");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

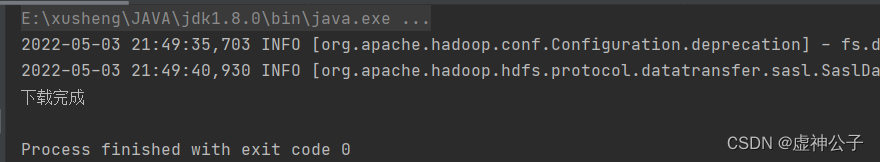

result:

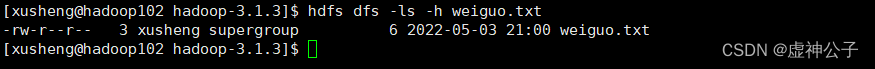

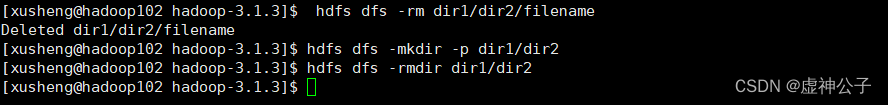
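An `InputStreamReader` created without an explicit charset decodes with the JVM's default charset. Chinese text, such as the content appended in task 8, can therefore come out garbled on a client whose default is not UTF-8. UTF-8 only became the default in JDK 18 (JEP 400), and Hadoop 3.1.3 is normally run on Java 8. The sketch below assumes the file is UTF-8 encoded and names the charset explicitly. `Utf8CatSketch` is a hypothetical name, and a `ByteArrayInputStream` stands in for the `FSDataInputStream` returned by `fs.open(...)`.

```java
import java.io.BufferedReader;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

public class Utf8CatSketch {

    /** Reads all lines from a stream, decoding them as UTF-8 regardless of the platform default. */
    public static List<String> readLines(InputStream in) {
        List<String> lines = new ArrayList<>();
        try (BufferedReader reader = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                lines.add(line);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return lines;
    }

    public static void main(String[] args) {
        // "\u7b2c\u4e00\u884c" is the Chinese for "first line", escaped so the source encoding does not matter
        byte[] fileBytes = "\u7b2c\u4e00\u884c\nsecond line\n".getBytes(StandardCharsets.UTF_8);
        for (String line : readLines(new ByteArrayInputStream(fileBytes))) {
            System.out.println(line);
        }
    }
}
```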

4. Display the read and write permissions, size, creation time, path and other information of the specified file in HDFS

(4) Display the read and write permissions, size, creation time, path and other information of the specified file in HDFS;

Shell command:

$ hdfs dfs -ls -h weiguo.txt

code:

```java
package com.xusheng.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;
import java.text.SimpleDateFormat;

public class HDFSApi4 {

    /**
     * Shows information about the specified file
     */
    public static void ls(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        // For a file path, listStatus returns a single entry describing that file
        FileStatus[] fileStatuses = fs.listStatus(remotePath);
        for (FileStatus s : fileStatuses) {
            System.out.println("Path: " + s.getPath().toString());
            System.out.println("Permission: " + s.getPermission().toString());
            System.out.println("Size: " + s.getLen());
            /* The value is a timestamp in milliseconds; convert it to a date string.
               HDFS records the modification time, not a separate creation time. */
            Long timeStamp = s.getModificationTime();
            SimpleDateFormat format = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
            String date = format.format(timeStamp);
            System.out.println("Time: " + date);
        }
        fs.close();
    }

    /**
     * Main method
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String remoteFilePath = "/user/xusheng/weiguo.txt"; // HDFS path
        try {
            System.out.println("Reading file information: " + remoteFilePath);
            HDFSApi4.ls(conf, remoteFilePath);
            System.out.println("\nDone reading");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

result:

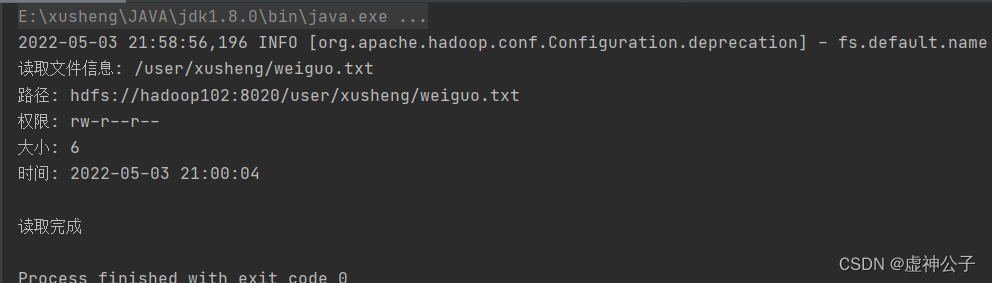

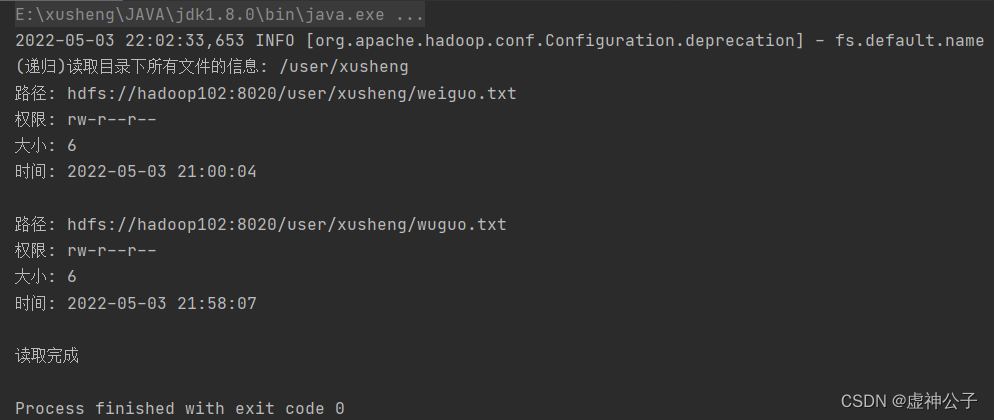

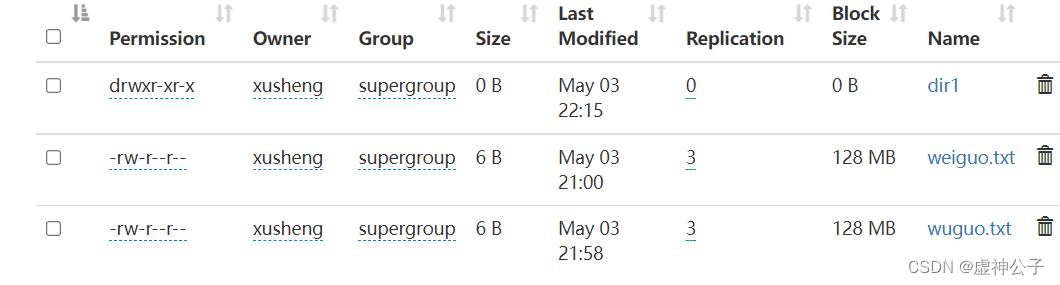
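`getModificationTime()` returns milliseconds since the Unix epoch. HDFS does not store a creation time, so this is the closest available field. `SimpleDateFormat` renders the value in the JVM's default time zone. The sketch below does the same conversion with `java.time` and an explicit zone. It also adds an approximate human-readable size in the spirit of `-ls -h`. The class and method names are hypothetical, and the size format is an assumption, not Hadoop's exact output.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

public class FileInfoFormatSketch {

    private static final DateTimeFormatter FORMAT = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    /** Converts epoch milliseconds (as returned by getModificationTime()) to a date string in the given zone. */
    public static String formatTime(long epochMillis, ZoneId zone) {
        return FORMAT.format(Instant.ofEpochMilli(epochMillis).atZone(zone));
    }

    /** Approximate human-readable size using powers of 1024 (B, K, M, G, T). */
    public static String humanSize(long bytes) {
        String[] units = {"B", "K", "M", "G", "T"};
        double value = bytes;
        int unit = 0;
        while (value >= 1024 && unit < units.length - 1) {
            value /= 1024;
            unit++;
        }
        return unit == 0 ? bytes + " B" : String.format(Locale.ROOT, "%.1f %s", value, units[unit]);
    }

    public static void main(String[] args) {
        System.out.println(formatTime(0L, ZoneId.of("UTC"))); // 1970-01-01 00:00:00
        System.out.println(humanSize(1536));                   // 1.5 K
    }
}
```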

5. Given a directory in HDFS, output the read and write permissions, size, creation time and other information of all files in the directory

(5) Given a certain directory in HDFS, output information such as read and write permissions, size, creation time, and path of all files in the directory, and if the file is a directory, recursively output all file-related information in the directory;

Shell command:

$ ./bin/hdfs dfs -ls -R -h /user/xusheng

code:

```java
package com.xusheng.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;
import java.text.SimpleDateFormat;

public class HDFSApi5 {

    /**
     * Shows information about every file under a directory (recursively)
     */
    public static void lsDir(Configuration conf, String remoteDir) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path dirPath = new Path(remoteDir);
        /* Recursively list all files under the directory (directories themselves are not returned) */
        RemoteIterator<LocatedFileStatus> remoteIterator = fs.listFiles(dirPath, true);
        /* Print the information of each file */
        while (remoteIterator.hasNext()) {
            FileStatus s = remoteIterator.next();
            System.out.println("Path: " + s.getPath().toString());
            System.out.println("Permission: " + s.getPermission().toString());
            System.out.println("Size: " + s.getLen());
            /* The value is a timestamp in milliseconds; convert it to a date string */
            Long timeStamp = s.getModificationTime();
            SimpleDateFormat format = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
            String date = format.format(timeStamp);
            System.out.println("Time: " + date);
            System.out.println();
        }
        fs.close();
    }

    /**
     * Main method
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String remoteDir = "/user/xusheng"; // HDFS path
        try {
            System.out.println("Reading information of all files under the directory (recursively): " + remoteDir);
            HDFSApi5.lsDir(conf, remoteDir);
            System.out.println("Done reading");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

result:

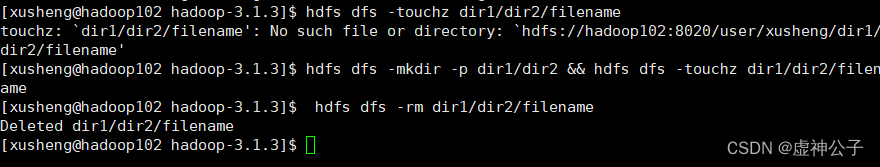
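`fs.listFiles(dirPath, true)` walks the whole tree but yields only files. The subdirectories themselves never appear, whereas `-ls -R` lists them as well. The sketch below reproduces that recursive, files-only behaviour on the local file system with `java.nio.file.Files.walk`, so the semantics can be checked without a cluster. It is a local analogue, not Hadoop code. `LocalListFilesSketch` is a hypothetical name.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class LocalListFilesSketch {

    /** Returns every regular file under root, recursively and sorted; directories are skipped. */
    public static List<Path> listFilesRecursively(Path root) {
        try (Stream<Path> walk = Files.walk(root)) {
            return walk.filter(Files::isRegularFile)
                       .sorted()
                       .collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        Path root = Files.createTempDirectory("lsdir");
        Files.createDirectories(root.resolve("empty"));            // an empty subdirectory: not listed
        Files.createDirectories(root.resolve("sub"));
        Files.createFile(root.resolve("a.txt"));
        Files.createFile(root.resolve("sub").resolve("b.txt"));
        for (Path p : listFilesRecursively(root)) {
            System.out.println(root.relativize(p) + "  " + Files.size(p) + " bytes");
        }
    }
}
```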

6. Provide a path to a file in HDFS, and create and delete the file

(6) Provide a path to a file in HDFS, and create and delete the file. If the directory where the file is located does not exist, the directory will be created automatically;

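Shell command: first check whether the directory exists (exit status 0 means it does):

$ hdfs dfs -test -d dir1/dir2

If it exists, create the file directly:

$ hdfs dfs -touchz dir1/dir2/filename

Otherwise, create the directory (including any missing parents) and then the file:

$ hdfs dfs -mkdir -p dir1/dir2 && hdfs dfs -touchz dir1/dir2/filename

To delete the file:

$ hdfs dfs -rm dir1/dir2/filename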

code:

```java
package com.xusheng.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;

public class HDFSApi6 {

    /**
     * Checks whether a path exists
     */
    public static boolean test(Configuration conf, String path) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        return fs.exists(new Path(path));
    }

    /**
     * Creates a directory (and any missing parents)
     */
    public static boolean mkdir(Configuration conf, String remoteDir) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path dirPath = new Path(remoteDir);
        boolean result = fs.mkdirs(dirPath);
        fs.close();
        return result;
    }

    /**
     * Creates an empty file
     */
    public static void touchz(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        FSDataOutputStream outputStream = fs.create(remotePath);
        outputStream.close();
        fs.close();
    }

    /**
     * Deletes a file
     */
    public static boolean rm(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        boolean result = fs.delete(remotePath, false); // false: not recursive
        fs.close();
        return result;
    }

    /**
     * Main method
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String remoteFilePath = "/user/xusheng/input/text.txt"; // HDFS path
        String remoteDir = "/user/xusheng/input";               // directory containing that path

        try {
            /* If the path exists, delete it; otherwise create it */
            if (HDFSApi6.test(conf, remoteFilePath)) {
                HDFSApi6.rm(conf, remoteFilePath); // delete
                System.out.println("Deleted path: " + remoteFilePath);
            } else {
                if (!HDFSApi6.test(conf, remoteDir)) {
                    // Create the directory if it does not exist
                    HDFSApi6.mkdir(conf, remoteDir);
                    System.out.println("Created directory: " + remoteDir);
                }
                HDFSApi6.touchz(conf, remoteFilePath);
                System.out.println("Created path: " + remoteFilePath);
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

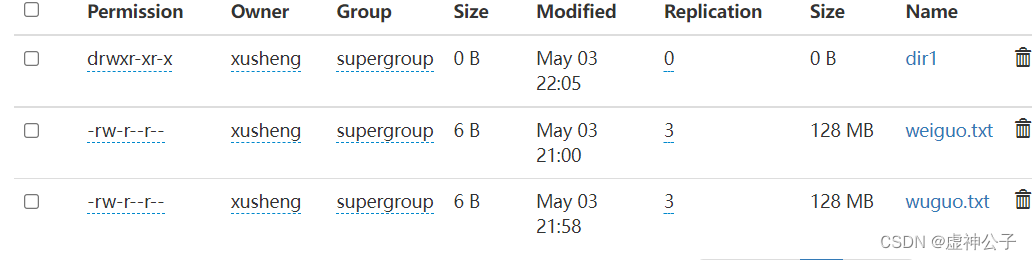
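According to the Hadoop `FileSystem` documentation, `fs.mkdirs` has roughly the semantics of `mkdir -p`: it creates missing parents, and an already existing directory is not an error. The same create-or-delete flow can be tried on the local file system with `java.nio.file`. The sketch below mirrors the logic of `HDFSApi6.main` and is not Hadoop code. `CreateDeleteSketch` and `toggle` are hypothetical names.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

public class CreateDeleteSketch {

    /**
     * If the file exists, deletes it and returns false; otherwise creates it
     * (including any missing parent directories) and returns true.
     */
    public static boolean toggle(Path file) throws IOException {
        if (Files.exists(file)) {
            Files.delete(file);
            return false;
        }
        Path parent = file.getParent();
        if (parent != null) {
            Files.createDirectories(parent);   // like mkdir -p: no error if it already exists
        }
        Files.createFile(file);                // empty file, like -touchz
        return true;
    }

    public static void main(String[] args) throws IOException {
        Path base = Files.createTempDirectory("hdfsapi6");
        Path file = base.resolve("input").resolve("text.txt");
        System.out.println(toggle(file) ? "created " + file : "deleted " + file);
        System.out.println(toggle(file) ? "created " + file : "deleted " + file);
    }
}
```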

7. Provide the path of an HDFS directory, and create and delete the directory

(7) Provide the path of an HDFS directory, and create and delete the directory. When the directory is created, any missing parent directories are created automatically. When it is deleted, the user specifies whether a non-empty directory should still be deleted.

Shell command:

To create a directory:

$ hdfs dfs -mkdir -p dir1/dir2

To delete a directory:

$ hdfs dfs -rmdir dir1/dir2

If the directory is not empty, -rmdir reports that it is not empty and does not delete it. To force deletion of a non-empty directory, use:

$ hdfs dfs -rm -R dir1/dir2

code:

```java
package com.xusheng.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;

public class HDFSApi7 {

    /**
     * Checks whether a path exists
     */
    public static boolean test(Configuration conf, String path) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        return fs.exists(new Path(path));
    }

    /**
     * Checks whether a directory is empty
     * true: empty, false: not empty
     */
    public static boolean isDirEmpty(Configuration conf, String remoteDir) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path dirPath = new Path(remoteDir);
        // Count direct entries (files and subdirectories), matching what -rmdir treats as "not empty"
        return fs.listStatus(dirPath).length == 0;
    }

    /**
     * Creates a directory (and any missing parents)
     */
    public static boolean mkdir(Configuration conf, String remoteDir) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path dirPath = new Path(remoteDir);
        boolean result = fs.mkdirs(dirPath);
        fs.close();
        return result;
    }

    /**
     * Deletes a directory
     */
    public static boolean rmDir(Configuration conf, String remoteDir) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path dirPath = new Path(remoteDir);
        /* Second argument: delete recursively, including all contents */
        boolean result = fs.delete(dirPath, true);
        fs.close();
        return result;
    }

    /**
     * Main method
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String remoteDir = "/user/xusheng/dir1/dir2"; // HDFS directory
        boolean forceDelete = false;                  // whether to delete even if not empty

        try {
            /* If the directory does not exist, create it; if it exists, delete it */
            if (!HDFSApi7.test(conf, remoteDir)) {
                HDFSApi7.mkdir(conf, remoteDir); // create the directory
                System.out.println("Created directory: " + remoteDir);
            } else {
                if (HDFSApi7.isDirEmpty(conf, remoteDir) || forceDelete) {
                    // Directory is empty, or deletion is forced
                    HDFSApi7.rmDir(conf, remoteDir);
                    System.out.println("Deleted directory: " + remoteDir);
                } else {
                    // Directory is not empty
                    System.out.println("Directory is not empty, not deleted: " + remoteDir);
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

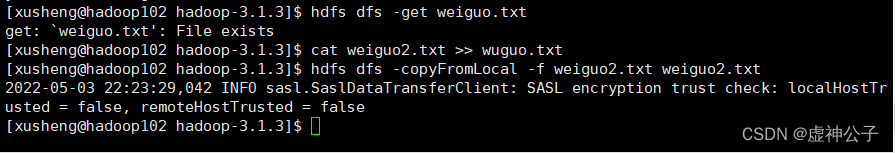
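`-rmdir` refuses to delete a directory that has any entry, including an empty subdirectory. An emptiness check based on `fs.listFiles(dirPath, true)` behaves differently: it only looks for files, so a directory holding nothing but empty subdirectories counts as "empty". An entry-based check such as `fs.listStatus(dirPath).length == 0` matches `-rmdir`. The local sketch below contrasts the two kinds of check with `java.nio.file`. The class and method names are hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.stream.Stream;

public class EmptyDirSketch {

    /** True if the directory has no entries at all (neither files nor subdirectories). */
    public static boolean hasNoEntries(Path dir) throws IOException {
        try (Stream<Path> entries = Files.list(dir)) {
            return !entries.findAny().isPresent();
        }
    }

    /** True if no regular file exists anywhere below the directory (a files-only, recursive check). */
    public static boolean hasNoFilesRecursively(Path dir) throws IOException {
        try (Stream<Path> walk = Files.walk(dir)) {
            return walk.noneMatch(Files::isRegularFile);
        }
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("dir2");
        Files.createDirectory(dir.resolve("child"));                          // only an empty subdirectory
        System.out.println("no entries: " + hasNoEntries(dir));              // false
        System.out.println("no files below: " + hasNoFilesRecursively(dir)); // true
    }
}
```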

8. Add content to the file specified in HDFS, and add the content specified by the user to the beginning or end of the original file

(8) Add user-specified content to the beginning or end of a specified HDFS file.

Shell command: to append the contents of wuguo.txt to the end of the original file:

$ hdfs dfs -appendToFile wuguo.txt weiguo.txt

HDFS has no command for adding content to the beginning of a file, so this cannot be done with a single command. Instead, download the file, build the combined file locally, and upload it with overwrite. Here wuguo.txt holds the content to place at the beginning:

$ hdfs dfs -get weiguo.txt

$ cat weiguo.txt >> wuguo.txt

$ hdfs dfs -copyFromLocal -f wuguo.txt weiguo.txt

code:

```java
package com.xusheng.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;
import java.nio.charset.StandardCharsets;

public class HDFSApi8 {

    /**
     * Checks whether a path exists
     */
    public static boolean test(Configuration conf, String path) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        return fs.exists(new Path(path));
    }

    /**
     * Appends a string to the end of an HDFS file
     */
    public static void appendContentToFile(Configuration conf, String content, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        /* Output stream whose writes are appended to the end of the file */
        FSDataOutputStream out = fs.append(remotePath);
        out.write(content.getBytes(StandardCharsets.UTF_8)); // explicit charset for non-ASCII text
        out.close();
        fs.close();
    }

    /**
     * Appends the contents of a local file to an HDFS file
     */
    public static void appendToFile(Configuration conf, String localFilePath, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        /* Input stream over the local file */
        FileInputStream in = new FileInputStream(localFilePath);
        /* Output stream whose writes are appended to the end of the file */
        FSDataOutputStream out = fs.append(remotePath);
        /* Copy the content in 1 KB chunks */
        byte[] data = new byte[1024];
        int read = -1;
        while ((read = in.read(data)) > 0) {
            out.write(data, 0, read);
        }
        out.close();
        in.close();
        fs.close();
    }

    /**
     * Moves an HDFS file to the local file system;
     * the source file is deleted after the move
     */
    public static void moveToLocalFile(Configuration conf, String remoteFilePath, String localFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        Path localPath = new Path(localFilePath);
        fs.moveToLocalFile(remotePath, localPath);
    }

    /**
     * Creates an empty file
     */
    public static void touchz(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        FSDataOutputStream outputStream = fs.create(remotePath);
        outputStream.close();
        fs.close();
    }

    /**
     * Main method
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String remoteFilePath = "/user/xusheng/weiguo.txt"; // HDFS file
        String content = "Newly appended content\n";
        String choice = "after";      // append to the end of the file
        // String choice = "before";  // add to the beginning of the file

        try {
            /* Check whether the file exists */
            if (!HDFSApi8.test(conf, remoteFilePath)) {
                System.out.println("File does not exist: " + remoteFilePath);
            } else {
                if (choice.equals("after")) {
                    // Append to the end of the file
                    HDFSApi8.appendContentToFile(conf, content, remoteFilePath);
                    System.out.println("Content appended to the end of: " + remoteFilePath);
                } else if (choice.equals("before")) {
                    // Add to the beginning of the file.
                    // No API does this directly, so move the file to the local file system,
                    // create a new HDFS file, and then append the pieces in order.
                    String localTmpPath = "/user/xusheng/tmp.txt"; // local temporary file
                    // Move to local
                    HDFSApi8.moveToLocalFile(conf, remoteFilePath, localTmpPath);
                    // Create a new, empty file
                    HDFSApi8.touchz(conf, remoteFilePath);
                    // Write the new content first
                    HDFSApi8.appendContentToFile(conf, content, remoteFilePath);
                    // Then write the original content
                    HDFSApi8.appendToFile(conf, localTmpPath, remoteFilePath);
                    System.out.println("Content added to the beginning of: " + remoteFilePath);
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```
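HDFS files can only be appended to, so adding content at the beginning means rewriting the whole file: the new content is written first, followed by the old bytes. The `before` branch above does exactly that through a temporary local copy. The stream-level core of the idea is sketched below with in-memory streams. `PrependSketch` is a hypothetical name. In a Hadoop version, `out` could come from `fs.create(remotePath, true)` and `original` from the saved copy of the old file.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

public class PrependSketch {

    /** Writes head first, then the full contents of original, to out. */
    public static void prepend(byte[] head, InputStream original, OutputStream out) throws IOException {
        out.write(head);                    // new content goes at the beginning
        byte[] buffer = new byte[4096];
        int read;
        while ((read = original.read(buffer)) != -1) {
            out.write(buffer, 0, read);     // followed by the old file, unchanged
        }
        out.flush();
    }

    public static void main(String[] args) throws IOException {
        InputStream oldFile = new ByteArrayInputStream("old line\n".getBytes(StandardCharsets.UTF_8));
        ByteArrayOutputStream newFile = new ByteArrayOutputStream();
        prepend("new line\n".getBytes(StandardCharsets.UTF_8), oldFile, newFile);
        System.out.print(new String(newFile.toByteArray(), StandardCharsets.UTF_8));
    }
}
```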

9. Delete the specified file in HDFS

(9) Delete the specified file in HDFS;

Shell command:

$ hdfs dfs -rm weiguo2.txt

code:

```java
package com.xusheng.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;

public class HDFSApi9 {

    /**
     * Deletes a file
     */
    public static boolean rm(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        boolean result = fs.delete(remotePath, false); // false: not recursive
        fs.close();
        return result;
    }

    /**
     * Main method
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String remoteFilePath = "/user/xusheng/text.txt"; // HDFS file
        try {
            if (HDFSApi9.rm(conf, remoteFilePath)) {
                System.out.println("File deleted: " + remoteFilePath);
            } else {
                System.out.println("Operation failed (file does not exist or could not be deleted)");
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

result:

10. In HDFS, move files from source path to destination path

(10) In HDFS, move the file from the source path to the destination path.

Shell command:

$ hdfs dfs -mv weiguo.txt wuguo.txt

code:

```java
package com.xusheng.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;

public class HDFSApi10 {

    /**
     * Moves (renames) a file within HDFS
     */
    public static boolean mv(Configuration conf, String remoteFilePath, String remoteToFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path srcPath = new Path(remoteFilePath);
        Path dstPath = new Path(remoteToFilePath);
        boolean result = fs.rename(srcPath, dstPath);
        fs.close();
        return result;
    }

    /**
     * Main method
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String remoteFilePath = "hdfs:///user/xusheng/text.txt";  // source HDFS path
        String remoteToFilePath = "hdfs:///user/xusheng/new.txt"; // destination HDFS path
        try {
            if (HDFSApi10.mv(conf, remoteFilePath, remoteToFilePath)) {
                System.out.println("Moved file " + remoteFilePath + " to " + remoteToFilePath);
            } else {
                System.out.println("Operation failed (source file does not exist or could not be moved)");
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

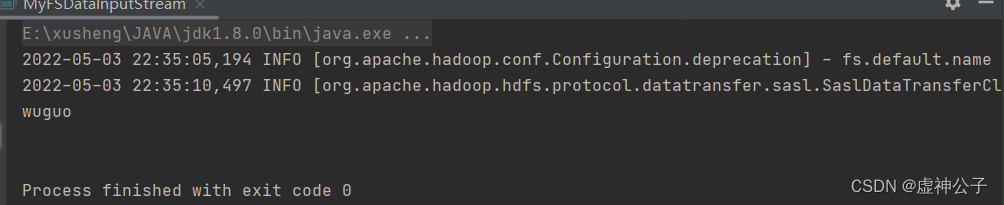

(2) Implement a class "MyFSDataInputStream"

(2) Implement a class "MyFSDataInputStream" that extends "org.apache.hadoop.fs.FSDataInputStream", with the following requirement: implement a method "readLine()" that reads a specified HDFS file line by line. It returns null once the end of the file has been reached; otherwise it returns one line of text from the file.

code:

```java
package com.xusheng.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.*;
import java.nio.charset.StandardCharsets;

public class MyFSDataInputStream extends FSDataInputStream {

    // FSDataInputStream requires the wrapped stream to implement Seekable and PositionedReadable
    public MyFSDataInputStream(InputStream in) {
        super(in);
    }

    /**
     * Line-by-line reading: reads one character at a time until '\n' and returns the line
     * without the '\n', or null at end of file.
     * (DataInputStream.readLine() is final, so this helper uses a different name.)
     */
    public static String readline(BufferedReader br) throws IOException {
        StringBuilder line = new StringBuilder();
        int ch;
        // br keeps its position between calls, so every call starts with an empty line buffer
        while ((ch = br.read()) != -1) {
            if (ch == '\n') {
                return line.toString();
            }
            line.append((char) ch);
        }
        // End of file: return the last line if it has content, otherwise null
        return line.length() > 0 ? line.toString() : null;
    }

    /**
     * Prints the contents of an HDFS file
     */
    public static void cat(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        FSDataInputStream in = fs.open(remotePath);
        BufferedReader br = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8));
        String line = null;
        while ((line = MyFSDataInputStream.readline(br)) != null) {
            System.out.println(line);
        }
        br.close();
        in.close();
        fs.close();
    }

    /**
     * Main method
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String remoteFilePath = "/user/xusheng/wuguo.txt"; // HDFS path
        try {
            MyFSDataInputStream.cat(conf, remoteFilePath);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

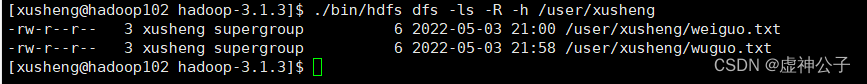

result:

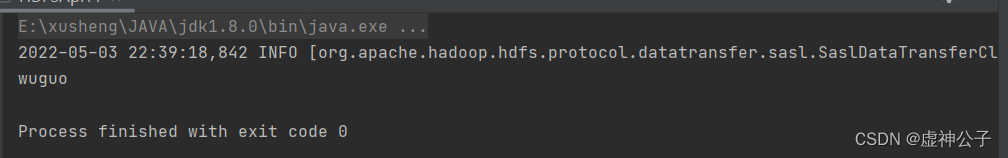
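The line-splitting helper can be exercised without HDFS by wrapping a `StringReader` in a `BufferedReader`. The stand-alone sketch below repeats that logic so its edge cases can be checked: the newline is excluded, a last line without a trailing `\n` is still returned, and `null` marks the end of input. `ReadlineSketch` and `allLines` are hypothetical names, not part of the class above.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;

public class ReadlineSketch {

    /** Returns the next line without its '\n', or null once the reader is exhausted. */
    public static String readline(BufferedReader br) throws IOException {
        StringBuilder line = new StringBuilder();
        int ch;
        while ((ch = br.read()) != -1) {
            if (ch == '\n') {
                return line.toString();
            }
            line.append((char) ch);
        }
        return line.length() > 0 ? line.toString() : null;
    }

    /** Collects all lines of text by calling readline until it returns null. */
    public static List<String> allLines(String text) throws IOException {
        List<String> lines = new ArrayList<>();
        try (BufferedReader br = new BufferedReader(new StringReader(text))) {
            String line;
            while ((line = readline(br)) != null) {
                lines.add(line);
            }
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        System.out.println(allLines("first\n\nthird")); // [first, , third]
    }
}
```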

(3) Consult the Java documentation or other materials, then write a program that outputs the text of a specified HDFS file to the terminal

(3) Consult the Java documentation or other materials, and use "java.net.URL" and "org.apache.hadoop.fs.FsUrlStreamHandlerFactory" to write a program that outputs the text of a specified HDFS file to the terminal.
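`URL.setURLStreamHandlerFactory` can be called at most once per JVM, and a second call throws a `java.lang.Error`. That is why this task's program installs the factory in a `static` block. It is also why the approach fails if another library in the same JVM has already installed its own factory. The stand-alone sketch below demonstrates that rule. It uses a hypothetical factory that returns `null`, which tells `URL` to fall back to the JDK's built-in handlers, in place of Hadoop's `FsUrlStreamHandlerFactory`. `UrlFactoryOnceSketch` and `tryInstall` are hypothetical names.

```java
import java.net.URL;
import java.net.URLStreamHandlerFactory;

public class UrlFactoryOnceSketch {

    /** A do-nothing factory: returning null makes URL use the JDK's default handler. */
    public static final URLStreamHandlerFactory FALLBACK = protocol -> null;

    /** Tries to install the factory and reports whether the JVM accepted it. */
    public static boolean tryInstall(URLStreamHandlerFactory factory) {
        try {
            URL.setURLStreamHandlerFactory(factory);
            return true;
        } catch (Error e) {
            // Thrown when a factory has already been set in this JVM
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("first call accepted: " + tryInstall(FALLBACK));  // true in a fresh JVM
        System.out.println("second call accepted: " + tryInstall(FALLBACK)); // false
    }
}
```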

code:

```java
package com.xusheng.hdfs;

import org.apache.hadoop.fs.FsUrlStreamHandlerFactory;
import org.apache.hadoop.io.IOUtils;

import java.io.InputStream;
import java.net.URL;

public class HDFSApi11 {

    static {
        // Let java.net.URL handle hdfs:// URLs; this can be done only once per JVM
        URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());
    }

    /**
     * Main method
     */
    public static void main(String[] args) throws Exception {
        String remoteFilePath = "hdfs://hadoop102:8020/user/xusheng/weiguo.txt"; // HDFS file
        InputStream in = null;
        try {
            /* Open a data stream through the URL object and read from it */
            in = new URL(remoteFilePath).openStream();
            // Copy to stdout with a 4 KB buffer; false = do not close the streams here
            IOUtils.copyBytes(in, System.out, 4096, false);
        } finally {
            IOUtils.closeStream(in);
        }
    }
}
```

result:

4. Reflections

Hadoop operating modes

1) Hadoop official website: http://hadoop.apache.org/

2) Hadoop can run in three modes: local mode, pseudo-distributed mode and fully distributed mode.

➢ Local mode: runs on a single machine and is used only to run the official examples; it is not needed in production.

➢ Pseudo-distributed mode: also runs on a single machine, but provides all the functions of a Hadoop cluster, with one server simulating a distributed environment. Companies on a tight budget sometimes use it for testing, but not in production.

➢ Fully distributed mode: multiple servers form a distributed environment; this is the mode used in production.