KIND network plug-in experiment

foreword

KIND environment introduction and startup (disable the default network plug-in), please jump to "KIND network plug-in experiment: using bridge as a cni plug-in"

The method of using bridge as a KIND network plug-in is introduced above. This article will use flannel

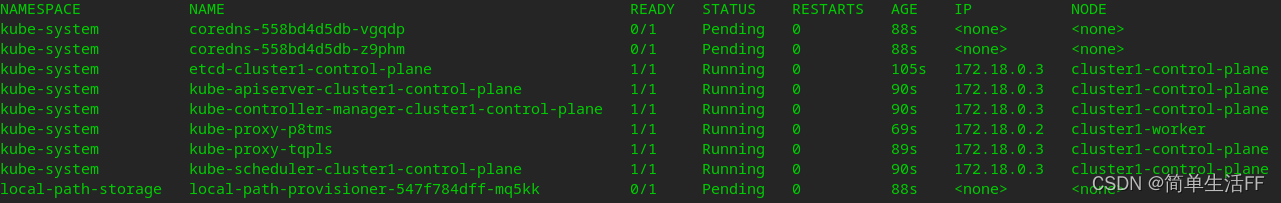

after KIND starts because there is no The network plug-in, coredns cannot be assigned an address, so it did not get up. The POD status after disabling the default network plugin startup is as follows:

Deploy flannel

kubectl apply -f flannel-v0.14.0.yaml

The YAML file can be obtained from the flannel official website.

# flannel-v0.14.0.yaml
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.14.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.14.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq=false
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
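
A side note on the net-conf.json in the ConfigMap: the Network value 10.244.0.0/16 is the pod range for the whole cluster, and each node works with one smaller subnet inside it. The Python sketch below, using only the standard ipaddress module, shows how such a range divides. The /24 per-node prefix length is an assumption taken from what this cluster reports later in subnet.env, not something the ConfigMap states.

```python
import ipaddress

# Cluster-wide pod network from net-conf.json.
network = ipaddress.ip_network("10.244.0.0/16")

# Assumption: every node gets a /24, as observed in this cluster.
node_prefix = 24

node_subnets = list(network.subnets(new_prefix=node_prefix))

print(len(node_subnets))  # how many node subnets fit into the network
print(node_subnets[0])    # first node subnet
print(node_subnets[1])    # second node subnet
```

With these numbers the range holds 256 node subnets, which is far more than the two nodes of this experiment need.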

After the deployment is complete, the flannel configuration file will be generated in the /etc/cni/net.d directory of the node

/etc/cni/net.d# cat 10-flannel.conflist
{
  "name": "cbr0",
  "cniVersion": "0.3.1",
  "plugins": [
    {
      "type": "flannel",
      "delegate": {
        "hairpinMode": true,
        "isDefaultGateway": true
      }
    },
    {
      "type": "portmap",
      "capabilities": {
        "portMappings": true
      }
    }
  ]
}

The node's subnet information is generated in the node's /run/flannel/subnet.env file:

root@cluster1-control-plane:/run/flannel# cat subnet.env
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=false
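
To make the relationship between these values concrete, here is a small Python sketch that parses the file contents shown above and checks that the node subnet lies inside the cluster network. The parse_env helper is hypothetical, written only for this illustration. Reading 10.244.0.1 as the gateway address of the node's pod subnet is my interpretation, based on cni0 holding the .1 address on the worker later in this article.

```python
import ipaddress

# Contents of /run/flannel/subnet.env as shown above.
SUBNET_ENV = """\
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=false
"""

def parse_env(text):
    """Parse KEY=VALUE lines into a dict, skipping blank lines (hypothetical helper)."""
    result = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        key, _, value = line.partition("=")
        result[key] = value
    return result

env = parse_env(SUBNET_ENV)
cluster_net = ipaddress.ip_network(env["FLANNEL_NETWORK"])
# FLANNEL_SUBNET is an interface-style value: an address plus a prefix length.
node_iface = ipaddress.ip_interface(env["FLANNEL_SUBNET"])

print(node_iface.ip)                              # address part of FLANNEL_SUBNET
print(node_iface.network)                         # the node's pod subnet
print(node_iface.network.subnet_of(cluster_net))  # is it inside the cluster network?
```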

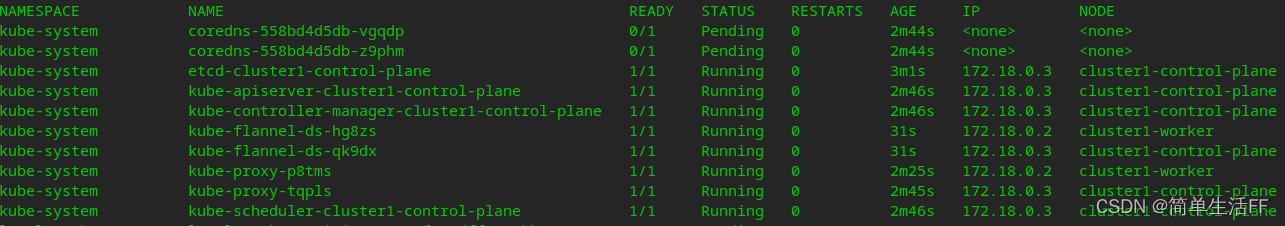

After the deployment is complete, kube-flannel runs on the master and worker nodes as a DaemonSet, but coredns and other pods still fail to start. Checking with kubectl describe shows:

… failed to find plugin "flannel" in path [/opt/cni/bin]

Warning FailedCreatePodSandBox 10s kubelet, cluster1-worker Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "d9d1f4c592c10514313dec335bc24c6eb9c89903e59fa3db3a7f49696e1dd757": failed to find plugin "flannel" in path [/opt/cni/bin]

The flannel CNI plug-in binary is missing, so CNI Plugins v0.8.7 needs to be downloaded. (From version 1.0.0 onward, CNI Plugins no longer includes flannel. Why?)

Put the archive in the /opt/cni/bin directory of the master and the worker, and extract it:

# Copy the plug-in archive to the master; the worker node is handled the same way
sudo docker cp '/home/cni-plugins-linux-arm64-v0.8.7.tgz' aa7c807c9c4a:/opt/cni/bin/
sudo docker exec -it aa7c807c9c4a /bin/bash
root@cluster1-worker:/# cd /opt/cni/bin/
root@cluster1-worker:/opt/cni/bin# ls
cni-plugins-linux-arm64-v0.8.7.tgz host-local loopback portmap ptp
root@cluster1-worker:/opt/cni/bin# tar -xzvf cni-plugins-linux-arm64-v0.8.7.tgz
./
./macvlan
./flannel
./static
./vlan
./portmap
./host-local
./bridge
./tuning
./firewall
./host-device
./sbr
./loopback
./dhcp
./ptp
./ipvlan
./bandwidth

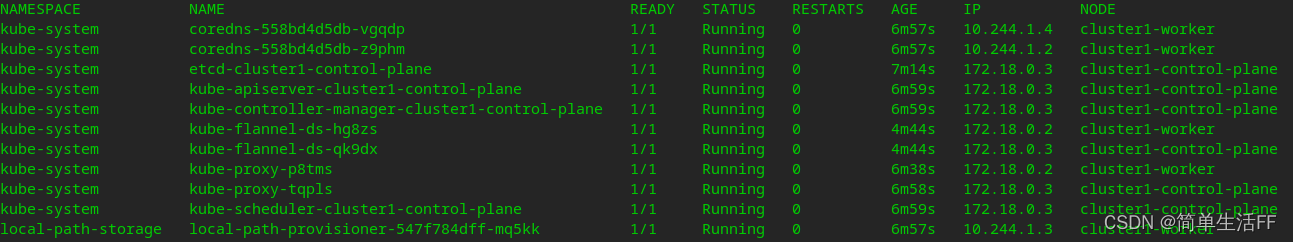

After that, the pods in the cluster run normally:

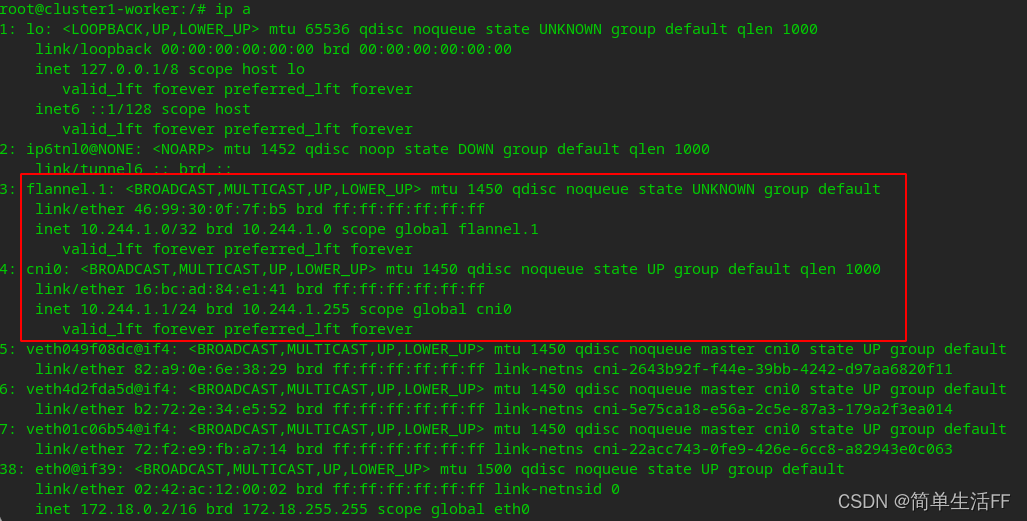

Entering the worker node, you can see the cni0 bridge and the VTEP device flannel.1 (the device is named flannel.[VNI]; the default is flannel.1). The MTU of 1450 indicates that VXLAN is used for inter-node communication.

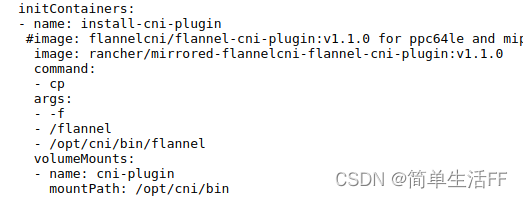
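
Why 1450? A rough Python sketch of the arithmetic: VXLAN wraps each inner Ethernet frame in an outer IP, UDP and VXLAN header, and the inner packet has to shrink by that overhead. The sketch assumes an IPv4 underlay without IP options or VLAN tags, and assumes the node's eth0 uses the common Ethernet MTU of 1500, which the article does not show.

```python
# Header sizes in bytes for VXLAN over IPv4 (no VLAN tag, no IP options).
OUTER_IPV4 = 20
OUTER_UDP = 8
VXLAN_HEADER = 8
INNER_ETHERNET = 14

def vxlan_mtu(underlay_mtu):
    """Largest inner IP packet that still fits into one underlay packet."""
    overhead = OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET
    return underlay_mtu - overhead

# Assumption: the underlay interface has an MTU of 1500.
print(vxlan_mtu(1500))  # 1450
```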

2022-06-01 supplement: As readers pointed out in the comments, newer versions of the flannel deployment YAML add a step that copies the binary to /opt/cni/bin on the host. For the specific YAML file, see the official website. The corresponding fragment of the YAML file is as follows:

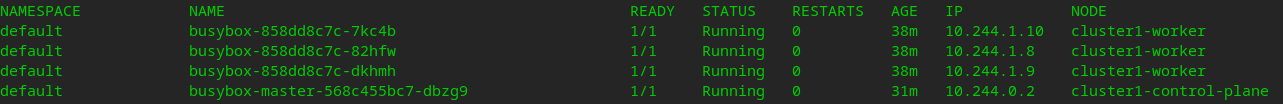

Verify

After the deployment steps above, the KIND (k8s) environment network is as follows:

| Node | Host address | Pod subnet |
|---|---|---|
| master node | 172.18.0.3 | 10.244.0.0/24 |
| worker node | 172.18.0.2 | 10.244.1.0/24 |
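
Given this table, you can tell from a pod's address alone which node it runs on. A minimal Python sketch of that lookup follows; node_for_pod is a hypothetical helper, and the two addresses are the pod IPs used in the ping test in this section.

```python
import ipaddress

# Pod subnets per node, from the table above.
POD_SUBNETS = {
    "master node": ipaddress.ip_network("10.244.0.0/24"),
    "worker node": ipaddress.ip_network("10.244.1.0/24"),
}

def node_for_pod(pod_ip):
    """Return the node whose pod subnet contains pod_ip, or None if no subnet matches."""
    address = ipaddress.ip_address(pod_ip)
    for node, subnet in POD_SUBNETS.items():
        if address in subnet:
            return node
    return None

print(node_for_pod("10.244.0.2"))   # master node
print(node_for_pod("10.244.1.10"))  # worker node
```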

Deploy a test Deployment. IP allocation works as expected. Enter the pod on the master (10.244.0.2) and ping the pod on the worker (10.244.1.10):

/ # ping 10.244.1.10
PING 10.244.1.10 (10.244.1.10): 56 data bytes
64 bytes from 10.244.1.10: seq=0 ttl=62 time=0.516 ms
64 bytes from 10.244.1.10: seq=1 ttl=62 time=0.480 ms
^C
--- 10.244.1.10 ping statistics ---
2 packets transmitted, 2 packets received, 0% packet loss
round-trip min/avg/max = 0.480/0.498/0.516 ms

/ # traceroute 10.244.1.10
traceroute to 10.244.1.10 (10.244.1.10), 30 hops max, 46 byte packets
1 10.244.0.1 (10.244.0.1) 0.057 ms 0.029 ms 0.024 ms
2 10.244.1.0 (10.244.1.0) 0.025 ms 0.021 ms 0.017 ms
3 10.244.1.10 (10.244.1.10) 0.015 ms 0.013 ms 0.008 ms
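
The ttl=62 in the ping output and the three traceroute lines tell the same story: two routing hops sit between the pods. The Python sketch below checks that, assuming the replying pod starts from an initial TTL of 64 (a common Linux default; the article does not show it).

```python
# Traceroute hop lines copied from the output above.
TRACEROUTE = """\
1 10.244.0.1 (10.244.0.1) 0.057 ms 0.029 ms 0.024 ms
2 10.244.1.0 (10.244.1.0) 0.025 ms 0.021 ms 0.017 ms
3 10.244.1.10 (10.244.1.10) 0.015 ms 0.013 ms 0.008 ms
"""

# The second column of each line is the address that answered for that hop.
hops = [line.split()[1] for line in TRACEROUTE.splitlines() if line.strip()]

# Everything before the final line is a router; the last line is the destination.
routers = hops[:-1]

INITIAL_TTL = 64  # assumption: default TTL of the replying pod
expected_ttl = INITIAL_TTL - len(routers)

print(routers)       # ['10.244.0.1', '10.244.1.0']
print(expected_ttl)  # 62
```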

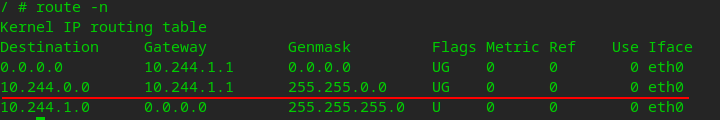

Enter the pod on the worker and check the routing table: packets destined for 10.244.0.0/16 (the k8s pod network) are sent to the gateway 10.244.1.1, which is the address of the cni0 bridge on the worker node.

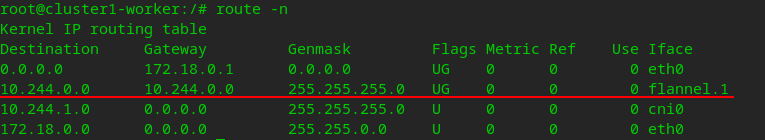

Check the routing table on the worker node: packets destined for the 10.244.0.0/24 segment (the pod subnet on the master) are sent through the VTEP device flannel.1 toward the master's flannel.1 (10.244.0.0).

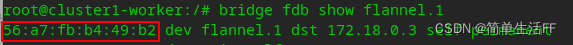

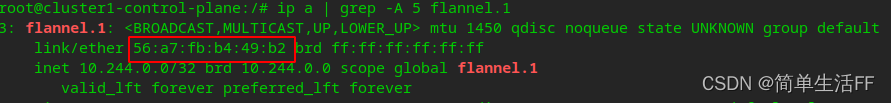
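
The routing decision on the worker node can be sketched in Python as a longest-prefix match over a tiny route table. Only the first route is described in the text above; the second one (the local pod subnet reachable through cni0) is my assumption, based on cni0 holding 10.244.1.1. The lookup function is hypothetical and ignores everything a real kernel routing table also considers.

```python
import ipaddress

# Routes on the worker node relevant to pod traffic.
# First entry: described above. Second entry: assumed local route via cni0.
ROUTES = [
    (ipaddress.ip_network("10.244.0.0/24"), "flannel.1"),
    (ipaddress.ip_network("10.244.1.0/24"), "cni0"),
]

def lookup(dest_ip):
    """Pick the output device by longest-prefix match; None if no route matches."""
    dest = ipaddress.ip_address(dest_ip)
    matches = [(net, dev) for net, dev in ROUTES if dest in net]
    if not matches:
        return None
    _, device = max(matches, key=lambda item: item[0].prefixlen)
    return device

print(lookup("10.244.0.2"))   # pod on the master: leaves through flannel.1
print(lookup("10.244.1.10"))  # pod on this worker: stays on cni0
```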

The MAC address of the master node's flannel.1 (10.244.0.0) is maintained in the FDB of flannel.1 on the worker node.

56:a7:fb:b4:49:b2 in the figure above is the MAC address of flannel.1 on the master node.
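
Putting the route and the FDB entry together, the worker can work out where to send the encapsulated packet. The Python sketch below models that chain of lookups with plain dictionaries. The neighbor (ARP) table and the remote address 172.18.0.3 stored in the FDB are assumptions on my part: the address is the master's host address from the table above, and the figures are not reproduced here to confirm it.

```python
# Tables on the worker node, reduced to the entries discussed above.

# Route: remote pod subnet -> next hop (the master's flannel.1 address).
ROUTE_NEXT_HOP = {"10.244.0.0/24": "10.244.0.0"}

# Neighbor (ARP) entry: next hop -> MAC of the master's flannel.1.
# Assumption: this entry exists alongside the FDB entry.
NEIGHBORS = {"10.244.0.0": "56:a7:fb:b4:49:b2"}

# FDB entry on flannel.1: MAC -> host address of the remote node (assumed value).
FDB = {"56:a7:fb:b4:49:b2": "172.18.0.3"}

def outer_destination(pod_subnet):
    """Follow route -> neighbor -> FDB to find the outer (host) destination address."""
    next_hop = ROUTE_NEXT_HOP[pod_subnet]
    mac = NEIGHBORS[next_hop]
    return FDB[mac]

print(outer_destination("10.244.0.0/24"))  # 172.18.0.3
```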

Summary

The closing section of "Flannel network of k8s network" puts it very well, so I quote it directly:

In general, flannel is more like an extension of the classic bridge mode. In bridge mode, the containers on each host use a default network segment; the containers can communicate with each other, and so can the host and its containers. Given that, we can manually configure the network segment of each host so that the segments do not conflict. Then we find a way to send traffic destined for a non-local container to the corresponding host: if the hosts in the cluster are all in the same subnet, add a route to forward it; if they are not, build a tunnel to forward it. This solves the cross-network communication problem for containers. What flannel does is automate these tasks.
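
The "route or tunnel" decision in that quote can be sketched in a few lines of Python. This is only an illustration of the idea, not flannel's code: forwarding_mode is a hypothetical function, the /16 prefix length for the host network is an assumption, and 192.168.5.2 is a made-up address for a host in another subnet.

```python
import ipaddress

def forwarding_mode(host_a, host_b, prefix_len):
    """Return 'route' if both hosts share a subnet, else 'tunnel'."""
    net_a = ipaddress.ip_interface(f"{host_a}/{prefix_len}").network
    net_b = ipaddress.ip_interface(f"{host_b}/{prefix_len}").network
    return "route" if net_a == net_b else "tunnel"

# The two KIND nodes of this experiment (prefix length assumed).
print(forwarding_mode("172.18.0.3", "172.18.0.2", 16))   # route

# A hypothetical host in a different subnet.
print(forwarding_mode("172.18.0.3", "192.168.5.2", 16))  # tunnel
```

Note that in this experiment the backend is set to vxlan in net-conf.json, so a tunnel is used even though the two nodes could reach each other with a plain route.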

Follow up

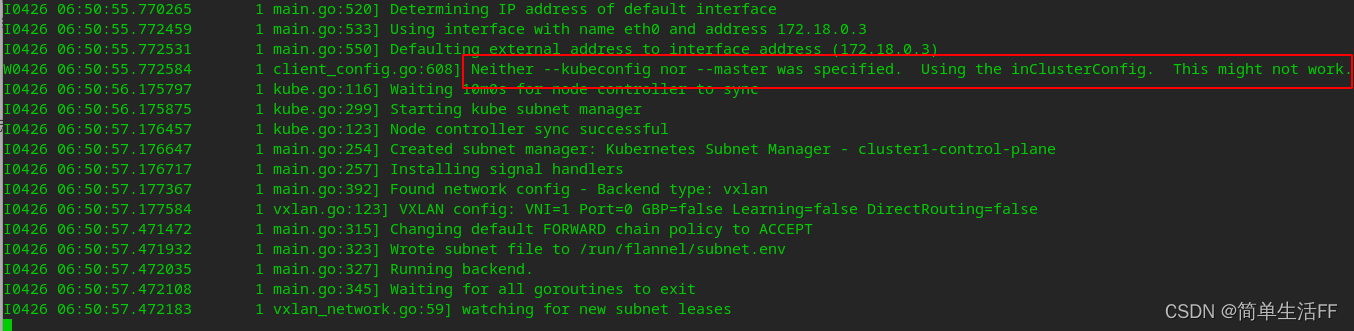

Check flannel's log

client_config.go:608] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

This does not seem to affect operation.

If you want to add a kubeconfig startup parameter to flanneld, do the following:

# Enter the master node
# Note: in my cluster the apiserver address is 172.18.0.3 and the cluster name is cluster1
# Change to the directory where the config file should be generated, here /usr/local/flannel-wf/
kubectl config set-cluster cluster1 --kubeconfig=flannel.conf --embed-certs --server=https://172.18.0.3:6443 --certificate-authority=/etc/kubernetes/pki/ca.crt
kubectl config set-credentials flannel --kubeconfig=flannel.conf --token=$(kubectl get sa -n kube-system flannel -o jsonpath='{.secrets[0].name}' | xargs kubectl get secret -n kube-system -o jsonpath='{.data.token}' | base64 -d)
kubectl config set-context cluster1 --kubeconfig=flannel.conf --user=flannel --cluster=cluster1

# Copy the generated flannel.conf to the other nodes

# cat flannel.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM1ekNDQWMrZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeU1EUXlOVEEyTURjd01Wb1hEVE15TURReU1qQTJNRGN3TVZvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTUFYCjNONHUzR2xya1NNdXNDZjRTZkdSQzUzSGMwRDYvb29xeTBGZnIvUURYTmpnYVdXNzVMOTlaZ1JnRG1qSTVhZ1UKMlVxOTRQWFJ5NHNjSy9xZmlNQjdjS0NhSkVJSTJ1YUowcTlKdWlSUkd1REwwZEFSbTk4dU1LMlIzcHF4MXhMMAoyQUcybk0wcnorMGpMRkZ5UkxHQmFhL1hsNzFiRy9nK3FVVUpuMFRiNjNXb1d0c3BMT1pIVDArS1A3dm41TFZNCjNzYUdjWDRxSGswOWZPZEFCYzlrZGFKRUd3dU9ZQ0NpNmUzVnM0T3I1WFNobFl1RWc0OW9oeXZZQU5JQzdCa3gKbVNveXVQM09neEhHSyttS3hRYUxnK1JEZTc3UmgydEQ5WDNZb0haaHVWMk44QWs5Z1NzWHdVZ25NOHQzQWpQNwo5d2xmcmhQZG1iYWZwN1JIYWZVQ0F3RUFBYU5DTUVBd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZKNENmNjFQOTlPY2czbEprc3JjOU1TZlp6aUlNQTBHQ1NxR1NJYjMKRFFFQkN3VUFBNElCQVFDdGFVaEpnaTRXQis4NlEyTUN3WTdRZ2pUc2hPZ2t1UWlrZmRJZ3lDTjFyZ0ZiUTUyVQo3VHBHRS9aa09HdVRLOVRQUkgvUTJNWGNJejhiZHJoL245bFcveEViNklXUnBvaWF4U25tVTkrNHlxZ0pISkdlClVTbUZhZExIQjdaRHFlNnZZdmV6aWRPOWZ1OWRtNXFKUnJOdllxc0s4MVkvM3Y4b0NYbmQ1Uk12eFRoVjlhbFAKR2dHYTk5OGFXT1B6OHFEdHFIL3NkVnJ0UmpWOWVTSVJGNVg1ZE04bDlyTkNNZE43eGt4VGY5TG82V21kQXhseApMR3FYR2hnb1BHbHdIQnhPUGtLanBqZzRTZHpCZmFVZ1J0a1ZJUERsdW1Icm94RVNtZXlQNkc4UUVVY1AzVkJRCkJ3anRzb21aYnp4RWRhUCsydXJ5a05TMXY4cmFONGFXQkRlSAotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://172.18.0.3:6443

name: cluster1

contexts:

- context:

cluster: cluster1

user: flannel

name: cluster1

current-context: ""

kind: Config

preferences: {}

users:

- name: flannel

user:

token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImNPbkpfSkFlVTBWa2RfVjVqZ1d6LXNPUDk1VldSY2NVb1M0empJS3hQQ1EifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJmbGFubmVsLXRva2VuLXM0ajI5Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImZsYW5uZWwiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2NzFmNDNiZS0zZjYzLTQwMjktOTQ4Ny1lYWZjZDhmMmQ5ZmIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06Zmxhbm5lbCJ9.kHo7v-sKNDiRtOceIcngXzpY83Tp1Fnnyo6ueaDoSffAe3dBqW_W2ExU6F99DpujM6mnmlIUXXAq0Nbv6eANagK4fwGVD20so-mM9bVsOVJP74UufQh9oYhT77BHMMfryW7n3Q-ujerSswSvq5XFCax1WAOhQXYitIETIkD3ePKf5_HteRLLjvCTC2yuFNuYK3ZwpcOdypfvm28p_K6Ly0YW1EjOcN7UJ0FGMTAqJ71jeAT2OlVNxstuhNuMNOIfgyhYZ8lppxPuqh073EjTi0Ss59fRpFJCzbaEsEiIAwiel_hVw8xkNR7zCRvKQMlL5lmF-juMCXeoHeBKPcXwLQ
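
The token in the users section is a JWT: three base64url segments separated by dots. If you are curious which service account it belongs to, the middle segment can be decoded without verifying the signature. The Python sketch below shows the idea on a sample token that is built inside the example itself; the sample claims and the placeholder signature are hypothetical, and the helper names are mine.

```python
import base64
import json

def b64url_decode(segment):
    """Decode a base64url segment, restoring the padding that JWTs strip."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)

def b64url_encode(data):
    """Encode bytes as base64url without padding, as JWTs do."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()

def jwt_payload(token):
    """Return the payload of a JWT as a dict. The signature is NOT verified."""
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))

# Hypothetical token built only for this example; the signature is a placeholder.
sample_claims = {
    "iss": "kubernetes/serviceaccount",
    "sub": "system:serviceaccount:kube-system:flannel",
}
sample_token = ".".join([
    b64url_encode(json.dumps({"alg": "RS256"}).encode()),
    b64url_encode(json.dumps(sample_claims).encode()),
    "placeholder-signature",
])

print(jwt_payload(sample_token)["sub"])
```

Calling jwt_payload on the token from flannel.conf works the same way and only reads the claims; it does not prove the token is valid.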

Modify the flannel deployment YAML file. Only the DaemonSet changes: add the startup parameter --kubeconfig-file and mount the configuration file into the Pod from a host directory.

The other resources (ClusterRole, ServiceAccount, ConfigMap, and so on) do not change.

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.14.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.14.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq=false
        - --kube-subnet-mgr
        - --kubeconfig-file=/usr/local/flannel-wf/flannel.conf # added startup parameter!
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: kube-cfg ##
          mountPath: /usr/local/flannel-wf # mounted from the host
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      ###
      - name: kube-cfg
        hostPath:
          path: /usr/local/flannel-wf # host directory

After redeploying, check the startup log; the warning line no longer appears:

$ sudo kubectl logs -f kube-flannel-ds-dn59l -n kube-system
I0426 07:06:52.970794 1 main.go:520] Determining IP address of default interface
I0426 07:06:52.971228 1 main.go:533] Using interface with name eth0 and address 172.18.0.3
I0426 07:06:52.971252 1 main.go:550] Defaulting external address to interface address (172.18.0.3)
I0426 07:06:53.676497 1 kube.go:116] Waiting 10m0s for node controller to sync
I0426 07:06:53.676660 1 kube.go:299] Starting kube subnet manager
I0426 07:06:54.676663 1 kube.go:123] Node controller sync successful
I0426 07:06:54.676715 1 main.go:254] Created subnet manager: Kubernetes Subnet Manager - cluster1-control-plane
I0426 07:06:54.676724 1 main.go:257] Installing signal handlers
I0426 07:06:54.676988 1 main.go:392] Found network config - Backend type: vxlan
I0426 07:06:54.677234 1 vxlan.go:123] VXLAN config: VNI=1 Port=0 GBP=false Learning=false DirectRouting=false
I0426 07:06:54.873344 1 main.go:315] Changing default FORWARD chain policy to ACCEPT
I0426 07:06:54.873695 1 main.go:323] Wrote subnet file to /run/flannel/subnet.env
I0426 07:06:54.873720 1 main.go:327] Running backend.
I0426 07:06:54.873758 1 main.go:345] Waiting for all goroutines to exit
I0426 07:06:54.873809 1 vxlan_network.go:59] watching for new subnet leases
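
The "VXLAN config" line in this log ties back to the device name seen earlier: VNI=1 is why the VTEP device is called flannel.1. A small Python sketch that pulls the key=value pairs out of that log line; parse_vxlan_config is a hypothetical helper written for this illustration.

```python
import re

# The VXLAN config line copied from the log above.
LOG_LINE = (
    "I0426 07:06:54.677234 1 vxlan.go:123] VXLAN config: "
    "VNI=1 Port=0 GBP=false Learning=false DirectRouting=false"
)

def parse_vxlan_config(line):
    """Extract the KEY=value pairs that follow 'VXLAN config:' as a dict of strings."""
    _, _, tail = line.partition("VXLAN config:")
    return dict(re.findall(r"(\w+)=(\S+)", tail))

config = parse_vxlan_config(LOG_LINE)
device = f"flannel.{config['VNI']}"

print(config["VNI"])  # 1
print(device)         # flannel.1
```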

References:

Flannel network of k8s network

binary installation of flannel