Scenario: Install a three-node Zookeeper cluster, using zookeeper-3.5.9, on three machines running the CentOS 7.9 operating system.

Version : zookeeper-3.5.9, CentOS 7.9, Linux kernel-5.4.218.

1. Host planning

Goal: Deploy a Zookeeper cluster with three hosts.

Host app161; IP: 192.168.19.161; Port: 22181, 28001, 28501.

Host app162; IP: 192.168.19.162; Port: 22181, 28001, 28501.

Host app163; IP: 192.168.19.163; Port: 22181, 28001, 28501.

2. Download the zookeeper-3.5.9 installation package

Download version: apache-zookeeper-3.5.9-bin.tar.gz

Download address: https://archive.apache.org/dist/zookeeper/

Download command: wget https://archive.apache.org/dist/zookeeper/zookeeper-3.5.9/apache-zookeeper-3.5.9-bin.tar.gz

Analysis: After the download is complete, the apache-zookeeper-3.5.9-bin.tar.gz package is in the current directory.
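Before unpacking, the downloaded package can be checked against a published digest. The following is a minimal sketch, assuming the expected SHA-512 value has been obtained separately (Apache normally publishes a .sha512 file next to each release archive; that one exists for this exact package is an assumption here). The function name verify_sha512 is hypothetical.

```shell
#!/bin/bash
# verify_sha512 FILE EXPECTED_HEX
# Returns 0 when the SHA-512 digest of FILE equals EXPECTED_HEX, 1 otherwise.
# (Hypothetical helper; not part of the ZooKeeper distribution.)
verify_sha512() {
    local file="$1" expected="$2" actual
    # sha512sum prints "<digest>  <file>"; keep only the digest.
    actual=$(sha512sum "$file" 2>/dev/null | awk '{print $1}')
    [ -n "$actual" ] || return 1
    # Normalize case, since published digests are sometimes upper case.
    actual=$(printf '%s' "$actual" | tr 'A-F' 'a-f')
    expected=$(printf '%s' "$expected" | tr 'A-F' 'a-f')
    [ "$actual" = "$expected" ]
}
```

Example call: verify_sha512 apache-zookeeper-3.5.9-bin.tar.gz "<digest copied from the .sha512 file>" && echo "checksum OK"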

3. Unzip the zookeeper package to the specified directory

3.1 Decompression

Command: tar -zxvf /home/apps/software/apache-zookeeper-3.5.9-bin.tar.gz -C /home/opt/zk

Analysis: Unzip to the specified directory: /home/opt/zk/apache-zookeeper-3.5.9-bin.

3.2 Rename

Command: mv /home/opt/zk/apache-zookeeper-3.5.9-bin /home/opt/zk/zookeeper-3.5.9

Analysis: Rename apache-zookeeper-3.5.9-bin to zookeeper-3.5.9.
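Steps 3.1 and 3.2 can also be done in one pass. The sketch below assumes GNU tar, whose --strip-components=1 option drops the top-level directory stored in the archive, so the files land directly in the target directory. It also creates the target first, because tar -C fails when the directory does not exist. The function name extract_renamed is hypothetical.

```shell
#!/bin/bash
# extract_renamed ARCHIVE TARGET_DIR
# Unpacks ARCHIVE into TARGET_DIR and drops the archive's top-level directory
# (for example apache-zookeeper-3.5.9-bin/), so no separate mv is needed.
# (Hypothetical helper; relies on GNU tar's --strip-components option.)
extract_renamed() {
    local archive="$1" target="$2"
    # tar -C requires an existing directory, so create it first.
    mkdir -p "$target" || return 1
    tar -zxf "$archive" -C "$target" --strip-components=1
}
```

Example call: extract_renamed /home/apps/software/apache-zookeeper-3.5.9-bin.tar.gz /home/opt/zk/zookeeper-3.5.9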

4. Create zookeeper data and log directories

The data and log directories can be placed in /home/opt/zk/zookeeper-3.5.9 or independently.

Data directory command: mkdir -p /home/opt/zk/data

Log directory command: mkdir -p /home/opt/zk/log

Analysis: The data directory (dataDir) stores zookeeper's runtime data, such as snapshots and the myid file. The log directory (dataLogDir) stores zookeeper's transaction logs.

5. Modify the zookeeper configuration

5.1 Copy and rename zoo_sample.cfg to zoo.cfg

Command: cp -r /home/opt/zk/zookeeper-3.5.9/conf/zoo_sample.cfg /home/opt/zk/zookeeper-3.5.9/conf/zoo.cfg

Analysis: zoo_sample.cfg is the official template configuration. Users copy it and adjust the copy to their needs.

5.2 Modify the zoo.cfg configuration file

Edit command: vi /home/opt/zk/zookeeper-3.5.9/conf/zoo.cfg

Modifications:

# Change the data directory and the log directory

dataDir=/home/opt/zk/data

dataLogDir=/home/opt/zk/log

# Change the client port number; the default is 2181

clientPort=22181

# zookeeper cluster configuration

server.1=192.168.19.161:28001:28501

server.2=192.168.19.162:28001:28501

server.3=192.168.19.163:28001:28501

5.3 Parsing the cluster configuration format

This section describes the format of the zookeeper cluster configuration lines in the zoo.cfg file.

Format: server.A=B:C:D

A: A number that identifies the server within the cluster. It must match the value in that server's myid file.

B: The server's IP address.

C: A port number. Followers use this port to connect to the leader server of the cluster and exchange information with it.

D: A port number. It is used only for leader election, for example when the current leader goes down.
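The edits from 5.2 and the server.A=B:C:D lines can also be applied without an interactive editor. The following is a minimal sketch, assuming zoo_sample.cfg already contains dataDir and clientPort lines (as the stock template does) and using the addresses and ports from the host plan in this article. The function name make_zoo_cfg is hypothetical.

```shell
#!/bin/bash
# make_zoo_cfg SAMPLE_CFG OUTPUT_CFG
# Copies the sample configuration, overrides dataDir and clientPort, and
# appends dataLogDir plus one server.A=B:C:D line per cluster member.
# (Hypothetical helper; the values are the ones used in this article.)
make_zoo_cfg() {
    local sample="$1" out="$2" id=1 ip
    # Rewrite the two settings that already exist in the sample file.
    sed -e 's|^dataDir=.*|dataDir=/home/opt/zk/data|' \
        -e 's|^clientPort=.*|clientPort=22181|' "$sample" > "$out" || return 1
    {
        echo 'dataLogDir=/home/opt/zk/log'
        # A = position in the list, C = 28001 (leader link), D = 28501 (election).
        for ip in 192.168.19.161 192.168.19.162 192.168.19.163; do
            echo "server.${id}=${ip}:28001:28501"
            id=$((id + 1))
        done
    } >> "$out"
}
```

Example call: make_zoo_cfg /home/opt/zk/zookeeper-3.5.9/conf/zoo_sample.cfg /home/opt/zk/zookeeper-3.5.9/conf/zoo.cfg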

6. Copy the zookeeper configured by host app161 to other hosts

6.1 Create directory remotely

Script name: mkdir_script.sh

Script content:

#!/bin/bash

for host_name in app162 app163

do

ssh -t root@${host_name} 'mkdir -p /home/opt/zk/zookeeper-3.5.9/ ;\

mkdir -p /home/opt/zk/data/ ;\

mkdir -p /home/opt/zk/log/'

done

Execute the script: bash mkdir_script.sh
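The same "for each host, run a command over SSH" loop recurs in several scripts in this article. It could be factored into one helper, sketched below. The function name run_on_hosts and the SSH_BIN override are hypothetical; SSH_BIN exists only so the loop can be dry-run with echo instead of ssh. The -t option is left out on the assumption that the remote commands are non-interactive.

```shell
#!/bin/bash
# run_on_hosts USER COMMAND HOST...
# Runs COMMAND over SSH as USER on every HOST and stops at the first failure.
# SSH_BIN is a hypothetical override, e.g. SSH_BIN=echo for a dry run.
run_on_hosts() {
    local user="$1" cmd="$2" host
    shift 2
    for host in "$@"; do
        "${SSH_BIN:-ssh}" "${user}@${host}" "$cmd" || {
            echo "command failed on ${host}" >&2
            return 1
        }
    done
}
```

Example call: run_on_hosts root 'mkdir -p /home/opt/zk/zookeeper-3.5.9 /home/opt/zk/data /home/opt/zk/log' app162 app163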

6.2 Remotely copy zookeeper to other hosts

Script name: scp_script.sh

Script content:

#!/bin/bash

for host_name in app162 app163

do

scp -r /home/opt/zk/zookeeper-3.5.9/* root@${host_name}:/home/opt/zk/zookeeper-3.5.9

scp -r /home/opt/zk/data/* root@${host_name}:/home/opt/zk/data

scp -r /home/opt/zk/log/* root@${host_name}:/home/opt/zk/log

done

Execute the script: bash scp_script.sh

7. Create myid file and write number

Command: echo '1' > /home/opt/zk/data/myid

Analysis: myid holds the server number of the node, that is, the A in its server.A line in zoo.cfg. Every node in the cluster needs a different value, even when multiple nodes are deployed on the same host.

Script name: create_myid_script.sh

Script content:

#!/bin/bash

for host_name in app161 app162 app163

do

if [[ ${host_name} = 'app161' ]] ;then

ssh -t root@${host_name} " echo '1' > /home/opt/zk/data/myid "

elif [[ ${host_name} = 'app162' ]] ;then

ssh -t root@${host_name} " echo '2' > /home/opt/zk/data/myid "

elif [[ ${host_name} = 'app163' ]] ;then

ssh -t root@${host_name} " echo '3' > /home/opt/zk/data/myid "

fi

done

Execute the script: bash create_myid_script.sh

Analysis: The myid number of each host is different. In the script, "=" checks whether two strings are equal, and there must be a space on both sides of "=".
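Instead of hard-coding the host-to-number mapping in an if/elif chain, the number can be looked up from zoo.cfg so that myid always agrees with the server.A lines. The following is a minimal sketch; the function name myid_for_ip is hypothetical, and it assumes each server line uses the plain server.A=B:C:D form shown earlier.

```shell
#!/bin/bash
# myid_for_ip ZOO_CFG IP
# Prints the A of the "server.A=IP:C:D" line whose address equals IP.
# Returns 1 when no server line matches. (Hypothetical helper name.)
myid_for_ip() {
    local cfg="$1" ip="$2"
    awk -F= -v ip="$ip" '
        $1 ~ /^server\.[0-9]+$/ {
            split($2, parts, ":")          # parts[1] is the address B
            if (parts[1] == ip) {
                sub(/^server\./, "", $1)   # keep only the number A
                print $1
                found = 1
                exit
            }
        }
        END { exit (found ? 0 : 1) }
    ' "$cfg"
}
```

Example call on host app162: myid_for_ip /home/opt/zk/zookeeper-3.5.9/conf/zoo.cfg 192.168.19.162 > /home/opt/zk/data/myid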

8. Modify zookeeper directory ownership

Up to this step, all zookeeper-related operations have been performed as the root user. A production environment generally runs zookeeper as an ordinary user, so the directory ownership is changed to that user.

Script name: chown_script.sh

Script content:

#!/bin/bash

for host_name in app161 app162 app163

do

ssh -t root@${host_name} 'chown -R learn:learn /home/opt/zk/'

done

Execute the script: bash chown_script.sh

Analysis: Assign ownership of the /home/opt/zk/ directory to the learn user. This covers the following directories:

Zookeeper main directory: /home/opt/zk/zookeeper-3.5.9.

zookeeper data directory: /home/opt/zk/data.

zookeeper log directory: /home/opt/zk/log.

9. Start the zookeeper cluster

Switch the operating user to the learn user: su learn

To start a zookeeper cluster, just start each node, and zookeeper will automatically form a cluster.

9.1 Start the zookeeper cluster (start one by one)

Use the start command to start zookeeper on each host.

User: su learn

Operation directory: /home/opt/zk/zookeeper-3.5.9/bin

Start command: sh zkServer.sh start

Analysis: Logging in to every cluster host each time is tedious, so consider using a script to start the cluster.

9.2 Start zookeeper cluster (use script to start)

Script name: zk-start_script.sh

Script content:

#!/bin/bash

for host_name in app161 app162 app163

do

ssh -t learn@${host_name} 'cd /home/opt/zk/zookeeper-3.5.9/bin/ ; sh zkServer.sh start '

done

Execute the script: sh zk-start_script.sh

Log information:

9.3 To start zookeeper with a script, you need to modify the zkEnv.sh file

In this example, the zkEnv.sh file needs to be modified when using the script to start the zookeeper cluster.

(1) Modify zkEnv.sh of host app161

Full file path: /home/opt/zk/zookeeper-3.5.9/bin/zkEnv.sh

Modifications:

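JAVA_HOME=/home/apps/module/jdk1.8.0_281

Analysis: When the script starts zookeeper remotely, an error is reported: Error: JAVA_HOME is not set and java could not be found in PATH. The likely cause is that a non-interactive SSH session does not load the login profile in which JAVA_HOME is normally exported. Therefore, specify the environment variable in the zkEnv.sh file.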
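The zkEnv.sh change can be scripted so that it is applied once and only once. The sketch below inserts the assignment right after the first line of the file, on the assumption that the first line is the shebang and that the java check in zkEnv.sh comes later in the file. The function name ensure_java_home is hypothetical.

```shell
#!/bin/bash
# ensure_java_home FILE JAVA_HOME_PATH
# Adds "JAVA_HOME=<path>" as line 2 of FILE unless a JAVA_HOME= line already
# exists, so repeated runs leave the file unchanged. (Hypothetical helper.)
ensure_java_home() {
    local file="$1" java_home="$2" tmp rc
    # Nothing to do if an assignment is already present.
    grep -q '^JAVA_HOME=' "$file" && return 0
    tmp=$(mktemp) || return 1
    # Print line 1, then the new assignment, then the rest of the file.
    awk -v line="JAVA_HOME=${java_home}" \
        'NR == 1 { print; print line; next } { print }' "$file" > "$tmp" \
        && cat "$tmp" > "$file"    # cat keeps the original mode and owner
    rc=$?
    rm -f "$tmp"
    return $rc
}
```

Example call: ensure_java_home /home/opt/zk/zookeeper-3.5.9/bin/zkEnv.sh /home/apps/module/jdk1.8.0_281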

(2) Synchronize the modified zkEnv.sh to other hosts

Script name: scp_zkEnv.sh

Script content:

for host_name in app162 app163

do

scp -r /home/opt/zk/zookeeper-3.5.9/bin/zkEnv.sh root@${host_name}:/home/opt/zk/zookeeper-3.5.9/bin/zkEnv.sh

done

Execute the script: bash scp_zkEnv.sh

10. View the zookeeper cluster startup status

Script name: zk-status_script.sh

Script content:

#!/bin/bash

for host_name in app161 app162 app163

do

echo "Checking listening ports on host ${host_name}:"

ssh -t learn@${host_name} ' netstat -tlnp | grep java '

done

Execute the script: bash zk-status_script.sh
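Rather than reading the netstat listing by eye, the expected ports can be checked automatically. The following is a minimal sketch; the function name missing_ports is hypothetical. It assumes the usual netstat -tln layout, where the local address ends in ":PORT" and the row contains LISTEN. Note that, as far as the author of this sketch understands, the leader-link port (28001 here) is normally opened only by the current leader, so only 22181 and 28501 are expected on every node.

```shell
#!/bin/bash
# missing_ports PORT...
# Reads "netstat -tln" style output on stdin and prints every PORT that has
# no LISTEN row. Returns 0 only when all ports are listening.
# (Hypothetical helper name.)
missing_ports() {
    local listing port rc=0
    listing=$(cat)
    for port in "$@"; do
        # Look for ":PORT" followed by whitespace on a row that says LISTEN.
        if ! printf '%s\n' "$listing" | grep -Eq ":${port}[[:space:]].*LISTEN"; then
            echo "$port"
            rc=1
        fi
    done
    return $rc
}
```

Example call: ssh learn@app161 'netstat -tln' | missing_ports 22181 28501 || echo "some ports are not listening"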

Log information:

11. Use the client to log in to the cluster

Client: /home/opt/zk/zookeeper-3.5.9/bin/zkCli.sh

Connection command:

sh zkCli.sh -timeout 5000 -server 192.168.19.161:22181

sh zkCli.sh -timeout 5000 -server 192.168.19.162:22181

sh zkCli.sh -timeout 5000 -server 192.168.19.163:22181

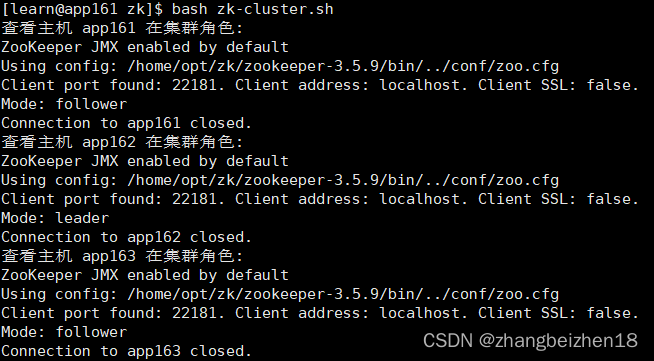
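A ZooKeeper client also accepts a comma-separated list of host:port pairs as its connection string, so one command can name all three nodes and the client picks one of them. The sketch below builds that string from the host plan; the function name connect_string is hypothetical.

```shell
#!/bin/bash
# connect_string PORT HOST...
# Joins the hosts into the comma-separated "host:port,host:port" form used
# as a ZooKeeper connection string. (Hypothetical helper name.)
connect_string() {
    local port="$1" host result=""
    shift
    for host in "$@"; do
        # Prefix a comma only when result already holds an entry.
        result="${result:+${result},}${host}:${port}"
    done
    echo "$result"
}
```

Example call: sh zkCli.sh -timeout 5000 -server "$(connect_string 22181 192.168.19.161 192.168.19.162 192.168.19.163)"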

12. View zookeeper cluster information

Script name: zk-cluster.sh

Script content:

#!/bin/bash

for host_name in app161 app162 app163

do

echo "Checking the cluster role of host ${host_name}:"

ssh -t learn@${host_name} 'cd /home/opt/zk/zookeeper-3.5.9/bin/ ; sh zkServer.sh status '

done

Execute the script: bash zk-cluster.sh
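A healthy three-node cluster should report one leader and two followers. The output of the script above can be summarized automatically, as sketched below. The function name summarize_modes is hypothetical; it relies on zkServer.sh status printing a line that starts with "Mode: leader" or "Mode: follower".

```shell
#!/bin/bash
# summarize_modes
# Reads concatenated "zkServer.sh status" output on stdin and prints
# "leaders=N followers=M". Returns 0 only when exactly one leader is seen.
# (Hypothetical helper name.)
summarize_modes() {
    local text leaders followers
    text=$(cat)
    # grep -c prints 0 and exits non-zero when nothing matches, hence "|| true".
    leaders=$(printf '%s\n' "$text" | grep -c '^Mode: leader' || true)
    followers=$(printf '%s\n' "$text" | grep -c '^Mode: follower' || true)
    echo "leaders=${leaders} followers=${followers}"
    [ "$leaders" -eq 1 ]
}
```

Example call: bash zk-cluster.sh | summarize_modes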

Log information:

That is all. Thanks.

June 11, 2023