Author |

Source | Insights New Research Institute

A single spark can start a prairie fire.

On May 6, the Xunfei Xinghuo Cognitive Model was unveiled.

At the press conference, iFLYTEK Chairman Liu Qingfeng and Liu Cong, Dean of the Research Institute, tested the seven core capabilities of the Xinghuo large model live on stage and released products built on the model for fields such as education, office work, automobiles, and digital employees.

At the same time, Liu Qingfeng also gave Xunfei Spark’s iteration schedule and goals for each stage:

The first stage: on June 9, break through in open-ended Q&A, such as real-time Q&A; upgrade multi-turn dialogue; improve mathematical ability;

The second stage: on August 15, break through in code capability; officially open multi-modal interaction to customers;

The third stage: on October 24, benchmark against ChatGPT in the field of general-purpose large models, surpassing it in Chinese and matching it in English.

"Currently, in the three major capabilities of text generation, knowledge Q&A, and mathematics, the Xunfei Xinghuo cognitive model has surpassed ChatGPT," Liu Qingfeng said. He added that cognitive large models have become the dawn of general artificial intelligence, and that iFLYTEK is confident it can realize the "emergence of wisdom."

Before the Xinghuo cognitive model arrived, major technology companies had released large models one after another, including Baidu Wenxinyiyan, Huawei Pangu, Ali Tongyi Qianwen, Jingdong Lingxi, and Shangtang Ririxin. Veteran founders such as Meituan co-founder Wang Huiwen, Sogou founder Wang Xiaochuan, and Momenwen founder Li Zhifei have also returned to the fray with substantial funding to start new large-model ventures. Some professionals now use the phrase "Hundred Models War" to describe the intensity of competition in the industry.

At this point, the question arises, why is Liu Qingfeng so confident, and what is the strength of the Xinghuo Cognitive Big Model? How can it surpass ChatGPT and realize the "emergence of wisdom"?

01 Whether it works or not, let the results speak

Talk is cheap, so we tested the Xinghuo cognitive model directly to check whether it really "answers fluently," as Liu Qingfeng said.

1. Text generation

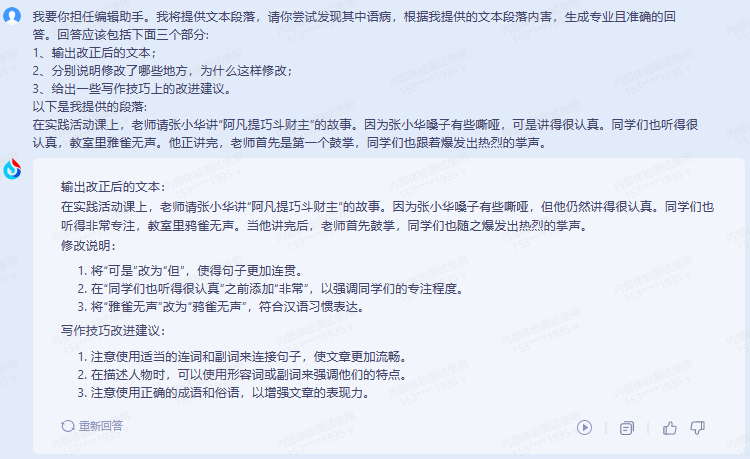

At the press conference, Liu Cong demonstrated the text generation ability of the Xinghuo large model, which can compose stories as well as write press releases. Our subsequent tests showed that its text generation ability goes well beyond these two tasks, and that more abilities emerge depending on the scenario.

For example, the big model can be asked to act as an editorial assistant, modifying the text paragraphs provided by the user and suggesting improvements in writing skills.

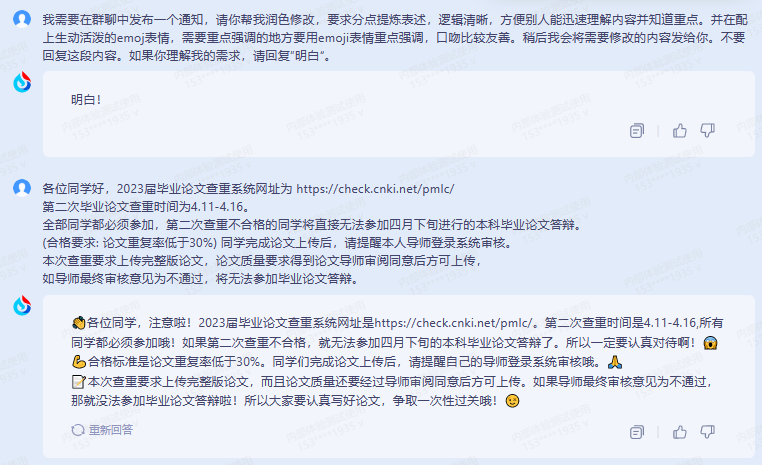

For example, ask the big model to help polish the group chat notification, and even ask the big model to add emoji expressions.

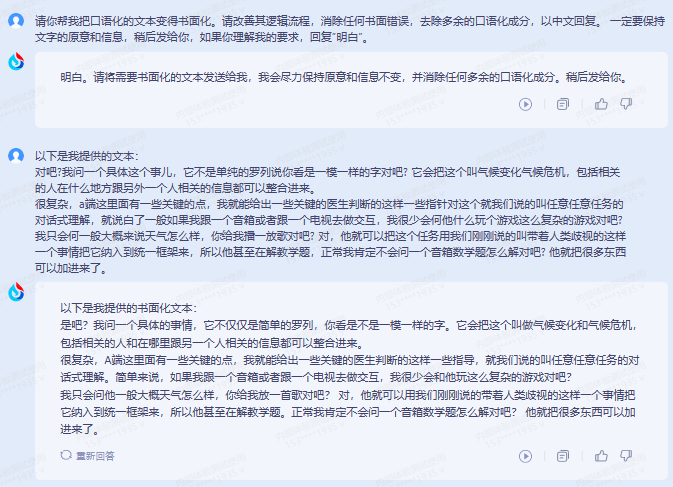

As for converting spoken English into written language, or practicing English with a large model, it is no problem.

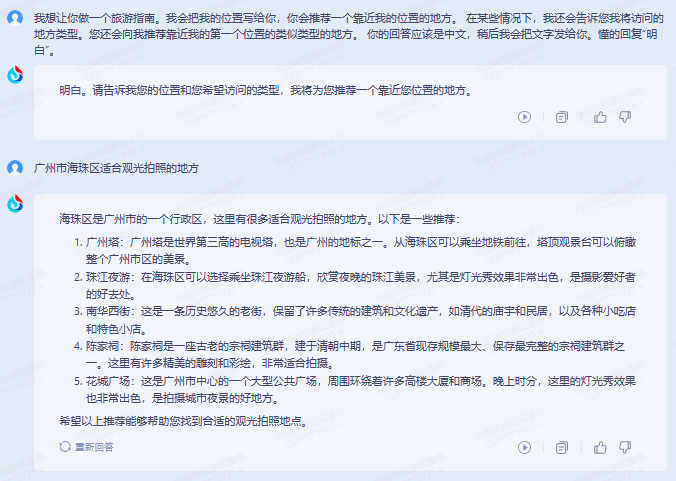

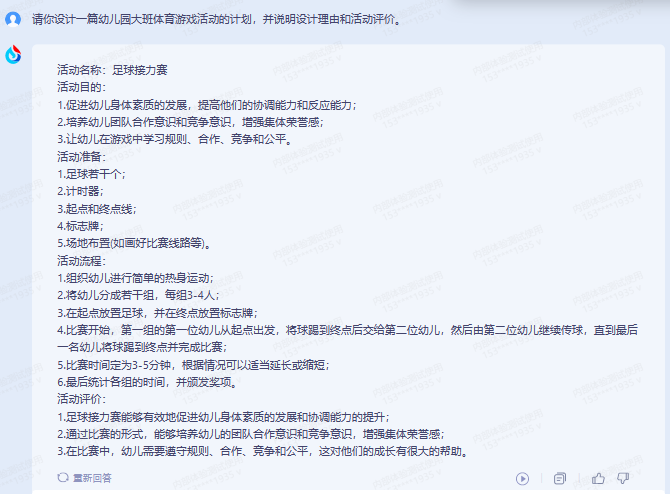

Even when asked to draw up a travel guide or design a plan for a kindergarten sports day, the model completes the task very well.

Comments: The Xinghuo large model clearly handles both long and short texts, and it works across multiple styles, multiple tasks, and multiple languages. The claim that the model "is clearly ahead in China and surpasses ChatGPT in Chinese" is not bragging.

2. Language understanding

Understanding the problem is the first step in answering the question, and the Chinese language is extensive and profound. Can the Spark model accurately understand the semantics in different contexts?

Let's look first at the test case from the press conference: "As the saying goes, a real man would rather die than surrender. But another saying goes that a real man knows when to bend and when to stretch. Which of these two statements is correct?"

The follow-up question: "If a young man quarrels with his girlfriend, should he rather die than surrender, or should he bend and stretch?"

If a girlfriend says something offhand in anger, what should the boyfriend do in that context?

How well does the Xinghuo model grasp the current popular hotspots?

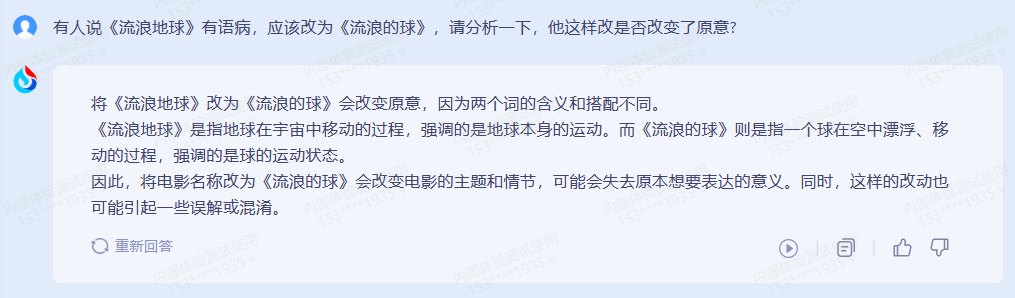

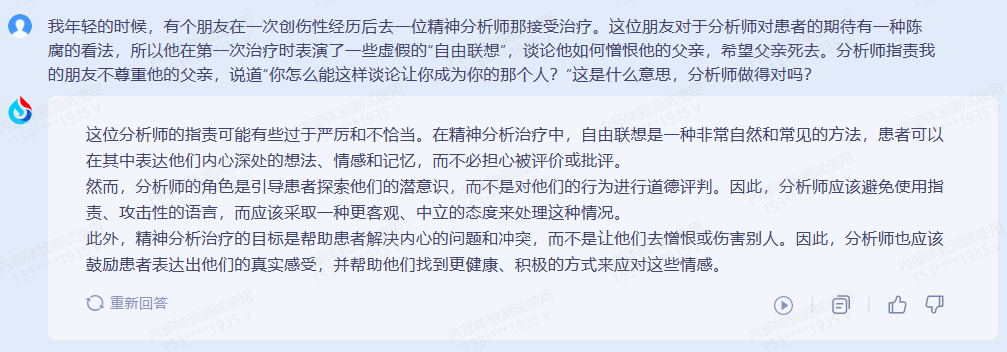

Going deeper into the question and substituting it into a specific scenario of psychotherapy, what kind of answer will the big model give?

Comments: Thanks to its deep accumulation of knowledge, the Xunfei Xinghuo large model's emotional intelligence and semantic understanding exceeded those of some individual humans in many cases. This is also one of iFLYTEK's strengths.

3. Mathematics ability

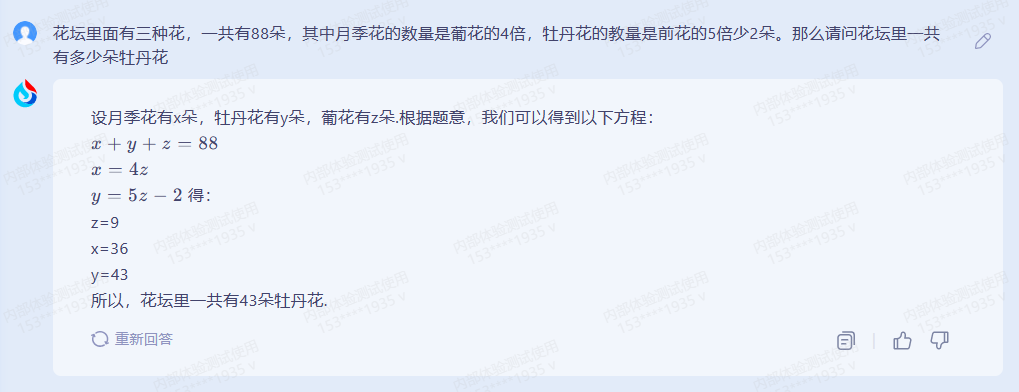

Mathematical ability reflects, to some extent, how smart a large model is. Liu Qingfeng said at the press conference that the mathematical ability of the Xunfei Xinghuo large model is very strong and can reach the level of ChatGPT. For the word problem, the model reached the answer by setting up a system of linear equations in three unknowns.

That question is not difficult, so we then designed a question on "how to calculate the area of a triangle from the coordinates of its three points." Besides giving the correct answer, the model explained its reasoning step by step, and the way it displayed the result was very readable.
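The article does not reproduce the model's answer, so the following is only a minimal sketch of one standard way to solve this kind of question, the shoelace (determinant) formula. The function name `triangle_area` and the sample points are illustrative assumptions, not taken from the test itself.

```python
def triangle_area(p1, p2, p3):
    """Area of a triangle from three (x, y) points via the shoelace formula.

    Illustrative helper (hypothetical name): it is not the model's output.
    """
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    # Twice the signed area is the determinant of the two edge vectors;
    # abs() makes the result independent of the order of the points.
    twice_signed_area = x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)
    return abs(twice_signed_area) / 2


if __name__ == "__main__":
    # Right triangle with legs 4 and 3, so the area should be 4 * 3 / 2.
    print(triangle_area((0, 0), (4, 0), (0, 3)))  # prints 6.0
```

Three collinear points give an area of zero, which is a convenient sanity check on any step-by-step answer to this question.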

Comments: Mathematical ability is one of the important indicators of a large model's general level, but it is also very hard to achieve within the framework of a unified large model. Many tests have shown that ChatGPT is also very error-prone in this area, because it does not work with conventional input and output as people tend to assume (for example, by calling a calculator directly). Instead, it outputs each character through text generation within the unified framework.

Under this framework, therefore, it is not the case that hard math problems are hard and simple ones are easy, as people usually assume. Overall, the Xinghuo model currently leads in solving comprehensive mathematical problems (its overall evaluation is better than ChatGPT 3.5 and worse than GPT-4), but its coverage of the full range of question types still needs further optimization.

4. Logical reasoning

Logical reasoning is strongly related to semantic understanding, and iFLYTEK continues to maintain its technical advantages in this regard.

Let's test the waters with a promotional gimmick that is not very common in daily life, and see whether the model can work out what it really means.

Unfortunately, the model fell into the trap and failed to see through the wording. Then again, humans who are not careful with this question trip up too, so the model's slip is understandable.

Let's try again with a classic "crossing the river" reasoning question.

This time the model performed very well: it held nothing back and gave a very detailed answer.

Next, let's ask another "treasure hunting" reasoning question.

Comments: The Xinghuo large model performs well overall in logical reasoning. On some complex reasoning problems in particular, it shows very strong logic and does much better than ordinary people.

5. Questions and answers on general domain knowledge

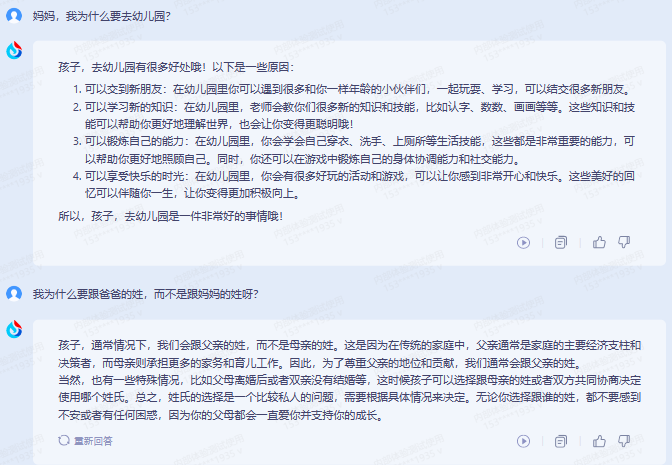

Let's start with an everyday question: can cooking in an iron pan supplement iron?

Next, a science question: why do selfies always look better than photos taken by others, and which is closer to your true appearance?

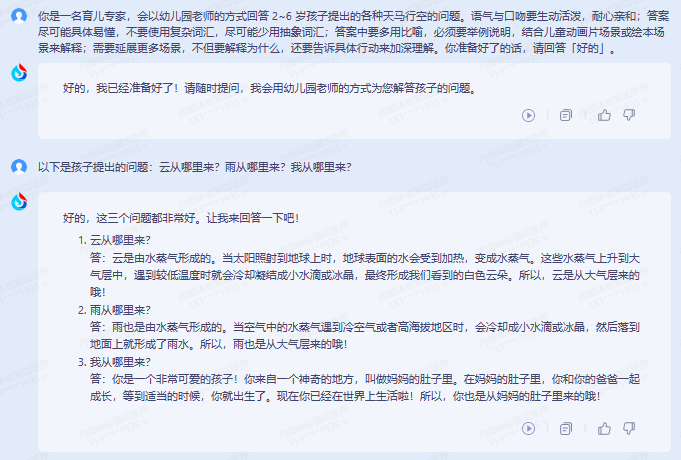

If the model is asked to play a parenting expert, combining everyday common sense with scientific knowledge, how will it answer?

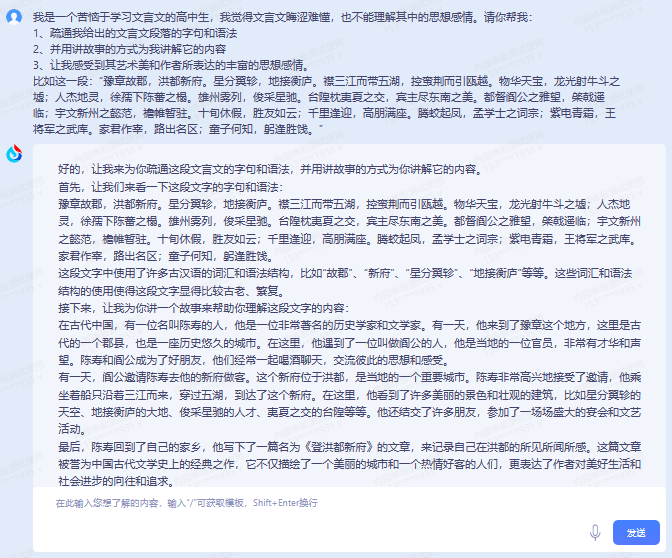

Another question concerns the study of classical Chinese. Posing as a high school student who is struggling with the subject, we asked the model for help with parsing the sentences and grammar of a classical text, its main content, the meaning the author wants to express, and the artistry of the writing.

Comments: This capability showed us for the first time that iFLYTEK's accumulation of broad-domain knowledge data is not inferior to that of other major technology companies. Combined with its text generation ability, this gives iFLYTEK a distinctive technical advantage in Chinese and brings the large model closer to the form of a personal assistant.

6. Code ability

According to the official introduction, the Xinghuo cognitive large model can not only generate code but also modify, understand, and compile it, and it supports multiple programming languages such as Python and Java.

At the press conference, Liu Cong, Dean of the iFLYTEK Research Institute, demonstrated generating a simple piece of Python code. The performance was not bad.

The following is an example of asking the Xinghuo large model to modify and improve a piece of code.

We asked a senior programmer to evaluate this work. The model basically met the task requirements, but the programmer's check found a small flaw in the step that converts the box numbers into integers: a path node was missing.

In fact, Liu Qingfeng also admitted at the press conference that there is a certain gap between the coding ability of the Spark model and ChatGPT, and this is the key function of the next upgrade.

The big model itself said: "My code generation capabilities are still limited and may not be able to meet complex business needs".

Comments: At present, simple code is not a big problem for the Xinghuo cognitive large model, but complex problems and architecture call for vigilance. The generated content can only serve as a reference. Developers need to check the correctness, reliability, and confidentiality of the code themselves.

02 In the large-model race, deployment is king

In the tests above, the Xinghuo large model's performance has answered the question raised at the beginning of this article: it does have the strength to compete with the leading large models. Among its capabilities, as Liu Qingfeng said, text generation, knowledge Q&A, and mathematics stand out from competing products.

In addition, the Xinghuo large model sets itself apart in commercialization, where it is on a stronger offensive.

iFLYTEK was able to burst onto the scene and surprise the industry because it has been paving the way since its founding.

Twenty-four years ago, six students from the University of Science and Technology of China declared, "We must make the Chinese voice the best in the world," which became iFLYTEK's founding mission.

In 2011, iFLYTEK undertook the construction of the National Engineering Laboratory for Speech and Language Information Processing, becoming one of the "national teams" of artificial intelligence, and proposed "to make machines able to hear and speak like humans."

In 2014, iFLYTEK launched the "Xunfei Super Brain Project," with a clearly stated goal: let machines understand and think like humans.

In 2022, the project was upgraded to the "Xunfei Super Brain 2030 Plan," which proposes to make general artificial intelligence that understands knowledge, is good at learning, and can evolve an important opportunity for everyone's future development, and to bring robots into the home.

From academia to industry, from input methods to translation machines, iFLYTEK has been deeply involved in the field of speech and semantics, and then formed a unique understanding and layout of cognitive intelligence.

In terms of algorithms, iFLYTEK has rich experience and is especially strong in cognitive intelligence. Last year alone, it won 13 world championships in the commonsense reading comprehension challenge OpenBookQA, and it open-sourced a series of Chinese pre-trained language models spanning six major categories and more than 40 general domains.

In terms of data, years of developing and promoting cognitive intelligence systems have yielded more than 50TB of industry corpus and active applications with more than 1 billion user interactions per day.

In terms of computing power, iFLYTEK's headquarters has a self-built data center. In terms of engineering, it has accelerated the inference efficiency of a large model with tens of billions of parameters by nearly a thousand times. It also cooperates with Huawei, and the large model is built on a safe and reliable domestic computing platform.

Therefore, although the Xinghuo large model was released late, its technical groundwork goes back a long way, and it has pulled ahead in moving from model to product.

On the current "emergence" of large models, many people in the industry have taken a clear stance: applications of large models should not stop at the self-entertainment of human-machine chat, but should be integrated with industry to generate greater value.

Liu Qingfeng also emphasized, "Whether a large model system is good or not depends first on whether it can solve the rigid needs and whether it is really useful, rather than a simple single-point test."

A notable feature of the Xinghuo model, therefore, is that on the one hand it does not shy away from its own defects and shortcomings and has the courage to open up to the public at scale, which also shows iFLYTEK's strong technical confidence.

On the other hand, the large model has been deployed first at the application and product level. Through a series of products such as learning machines, smart office notebooks, in-car cockpit interaction systems, Xunfei Hearing, and digital employees, iFLYTEK has closed the "large model + product" ecosystem loop, creating a "ripple effect" of positive feedback between data and models.

These products have large user bases, which naturally generate large amounts of data. When that data is fed back to the model, the "ripple effect" drives iterative updates and makes the model stronger and stronger.

On the surface, the launch of the Xinghuo large model raises the competitiveness of iFLYTEK's products by improving the user experience. Learning machines and smart office notebooks in particular have become almost entirely different products. The deeper impact may be a change in how the industry collaborates in production.

03 Epilogue

iFLYTEK is part of the national team of artificial intelligence and carries a very strong AI label. In embracing large models, it must therefore be more determined than technology giants like Baidu and Huawei, which have multiple business lines and more directions to choose from.

Before China takes the lead in realizing the "emergence of intelligence," iFLYTEK still has to measure itself against the three criteria for cashing in on the dividends of artificial intelligence: "Are there real application cases that can be seen and touched? Are there products that can be promoted and applied at scale? Are there application results that statistical data can prove?" It must keep consolidating the basics of research, products, and services so that it can stand the test of time and truly usher in its spark.