1. Pooling layer: Downsamples feature maps, keeping the most salient responses and discarding less important detail. The smaller feature maps reduce the parameters and computation needed by later layers, which also helps prevent overfitting.
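As a rough illustration of the pooling idea in item 1, here is a minimal sketch of 2x2 max pooling with stride 2 in plain Python. The function name `max_pool_2x2` and the sample values are made up for this example; real frameworks provide their own pooling layers.

```python
def max_pool_2x2(feature_map):
    """Apply 2x2 max pooling with stride 2 to a 2D list of numbers."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    # Step through the map in non-overlapping 2x2 windows;
    # a trailing odd row or column is dropped.
    for r in range(0, rows - 1, 2):
        pooled_row = []
        for c in range(0, cols - 1, 2):
            window = (
                feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1],
            )
            # Keep only the strongest response in each window.
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled


# Hypothetical 4x4 feature map, used only for illustration.
feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 9, 5],
    [1, 1, 3, 7],
]
pooled = max_pool_2x2(feature_map)
print(pooled)  # [[6, 2], [2, 9]]
```

The 4x4 input shrinks to 2x2, so any layer that follows sees a quarter as many values.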

2. Fully connected layer (FC): Acts as the "classifier" of the convolutional neural network, mapping the extracted features to the output classes.

3. Activation function: Introduces nonlinearity into the neurons, which improves the expressive power of the network.

4. Backbone: The backbone network generally refers to the part of the model that extracts features. Its job is to extract information from the image for use by the subsequent network.

5. Backpropagation: The error is propagated backward through the network. Gradients of the loss flow in from the output layer and pass back through the hidden layers, and the weights are updated accordingly.
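The backward flow described in item 5 can be sketched on a deliberately tiny network: one input, one sigmoid hidden unit, one linear output, and a squared-error loss. The network shape, the starting weights, the learning rate `lr`, and all function names are assumptions chosen for illustration, not taken from any particular framework.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w1, w2):
    h = sigmoid(w1 * x)  # hidden activation
    y_hat = w2 * h       # linear output
    return h, y_hat


def loss(x, y, w1, w2):
    _, y_hat = forward(x, w1, w2)
    return 0.5 * (y_hat - y) ** 2


def backward(x, y, w1, w2):
    """Return (dL/dw1, dL/dw2) using the chain rule, output layer first."""
    h, y_hat = forward(x, w1, w2)
    d_y_hat = y_hat - y                # error at the output layer
    grad_w2 = d_y_hat * h              # gradient for the output weight
    d_h = d_y_hat * w2                 # error passed back to the hidden layer
    grad_w1 = d_h * h * (1.0 - h) * x  # through the sigmoid derivative
    return grad_w1, grad_w2


# Hypothetical training example and starting weights.
x, y = 1.5, 0.8
w1, w2 = 0.4, -0.3
lr = 0.1

loss_before = loss(x, y, w1, w2)
grad_w1, grad_w2 = backward(x, y, w1, w2)

# One gradient-descent step: move each weight against its gradient.
w1_new = w1 - lr * grad_w1
w2_new = w2 - lr * grad_w2
loss_after = loss(x, y, w1_new, w2_new)
print(loss_before, loss_after)
```

With these values a single update step lowers the loss, which is the effect backpropagation is meant to produce.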

6. Hyperparameter: A parameter whose value is set before the learning process begins, as opposed to the parameters whose values are learned through training.

7. Normalization: Rescales data to a common scale so that different data indicators (features) become comparable.

Batch Norm: normalizes each channel separately, using statistics computed across the batch;

Layer Norm: normalizes each sample separately, using statistics computed across its channels.
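The difference between the two comes down to which axis the mean and variance are taken over. The sketch below assumes a simplified 2D input of shape (batch, features) and leaves out the learnable scale and shift that real Batch Norm and Layer Norm layers include; the helper names are hypothetical.

```python
def normalize(values, eps=1e-5):
    """Shift to zero mean and scale to (roughly) unit variance."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / (var + eps) ** 0.5 for v in values]


def batch_norm(batch):
    # Normalize each feature (column) across all samples in the batch.
    columns = [normalize(list(col)) for col in zip(*batch)]
    return [list(row) for row in zip(*columns)]


def layer_norm(batch):
    # Normalize each sample (row) across its own features.
    return [normalize(row) for row in batch]


# Hypothetical batch: 4 samples, 3 features on very different scales.
batch = [
    [1.0, 10.0, 100.0],
    [2.0, 20.0, 200.0],
    [3.0, 30.0, 300.0],
    [4.0, 40.0, 400.0],
]
bn = batch_norm(batch)
ln = layer_norm(batch)
```

After `batch_norm`, every column has mean close to zero, so the three features end up on the same scale. After `layer_norm`, every row has mean close to zero, and the result for one sample does not depend on the other samples in the batch.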

8. MLP: Short for multilayer perceptron. It is essentially a feedforward neural network with one or more hidden layers, proposed mainly to solve nonlinear problems that a single-layer perceptron cannot solve.
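XOR is the standard example of a problem a single-layer perceptron cannot solve, because its two classes are not linearly separable. The sketch below shows a two-layer perceptron with a ReLU hidden layer that computes XOR exactly. The weights are hand-picked for illustration rather than learned, and the function names are hypothetical.

```python
def relu(z):
    return max(0.0, z)


def mlp_xor(x1, x2):
    """Two hidden ReLU units followed by one linear output unit."""
    # Hidden layer: hand-picked weights and biases (not trained).
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output layer: a linear combination of the hidden activations.
    return 1.0 * h1 - 2.0 * h2


outputs = {(a, b): mlp_xor(a, b) for a in (0, 1) for b in (0, 1)}
for inputs, out in outputs.items():
    print(inputs, out)
```

The second hidden unit only switches on when both inputs are 1, and the output layer uses it to cancel the first unit. Without the nonlinearity the two layers would collapse into a single linear map, which cannot represent XOR.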

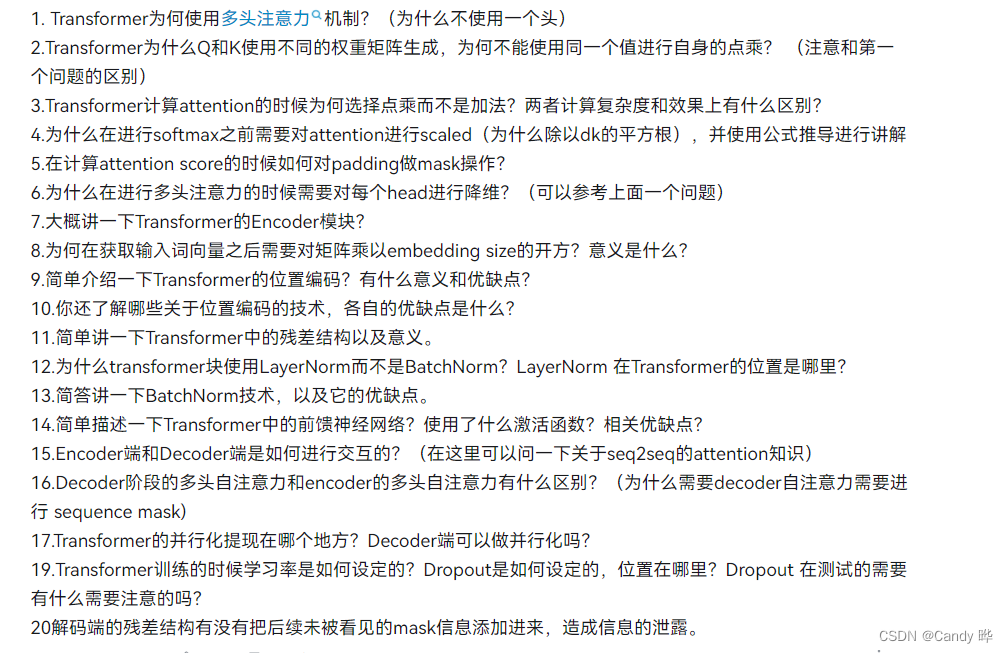

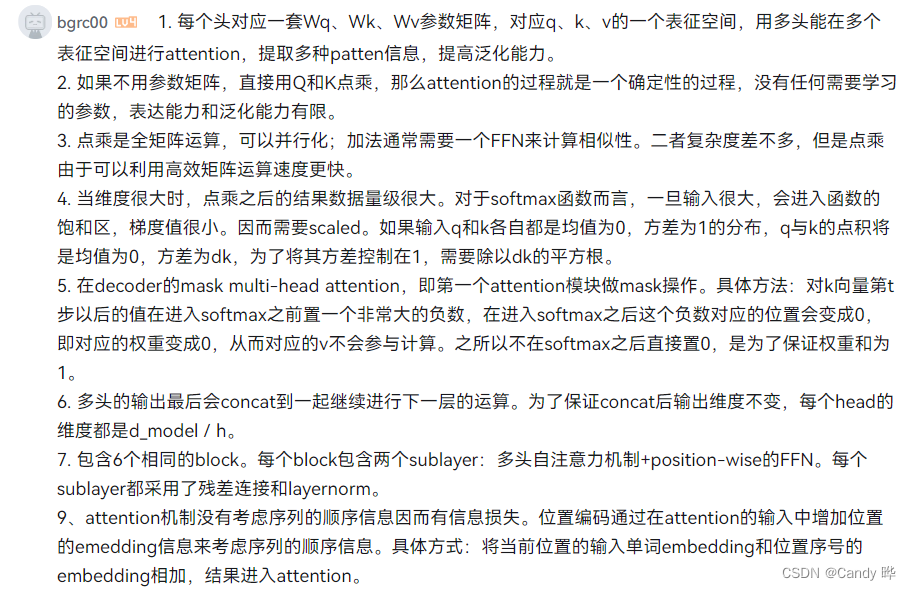

transformer