DataStream Overview

The DataStream API takes its name from a special DataStream class that is used to represent collections of data in Flink programs. Think of them as immutable collections of data that can contain duplicates. These data can be finite or unlimited.

A DataStream in Flink is a general procedure for performing transformations (eg filtering, updating state, defining windows, aggregations) on streams of data. Data streams are initially created from various sources (for example, message queues, socket streams, files). Results are returned via sinks, for example data can be written to a file or to standard output (e.g. a command line terminal). Flink programs can run in various contexts, either independently or embedded in other programs. Execution can be performed in a native JVM. Can also be executed on a cluster.

Flink program infrastructure:

- Get an execution context execution environment

- load initial data

- data flow conversion

- Calculation result storage

- trigger program execution

Among them, data stream transformations (transformations) contain a variety of operators for transforming data streams, which is also the core of the entire flink program. Next, we will introduce in turn:

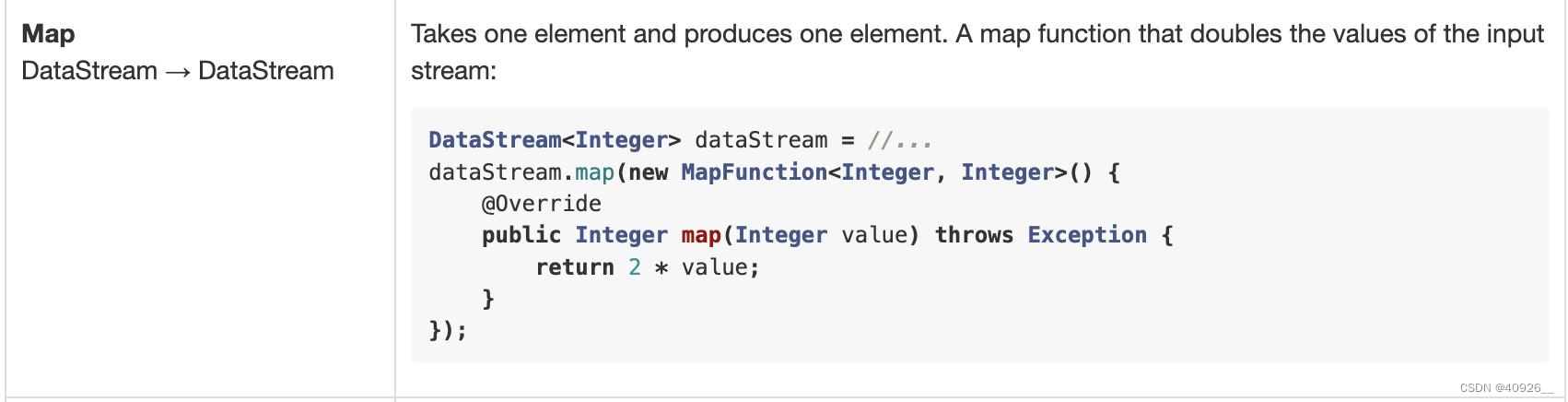

- map

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.util.ArrayList;

/**

* map算子,作用在流上每一个数据元素上

* 例如:(1,2,3)--> (1*2,2*2,3*2)

*/

public class TestMap {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

CollectMap(env);

env.execute("com.mapTest.test");

}

private static void CollectMap(StreamExecutionEnvironment env){

ArrayList<Integer> list = new ArrayList<>();

list.add(1);

list.add(2);

list.add(3);

DataStreamSource<Integer> source = env.fromCollection(list);

source.map(new MapFunction<Integer, Integer>() {

@Override

public Integer map(Integer value) throws Exception {

return value * 2;

}

}).print();

}

}

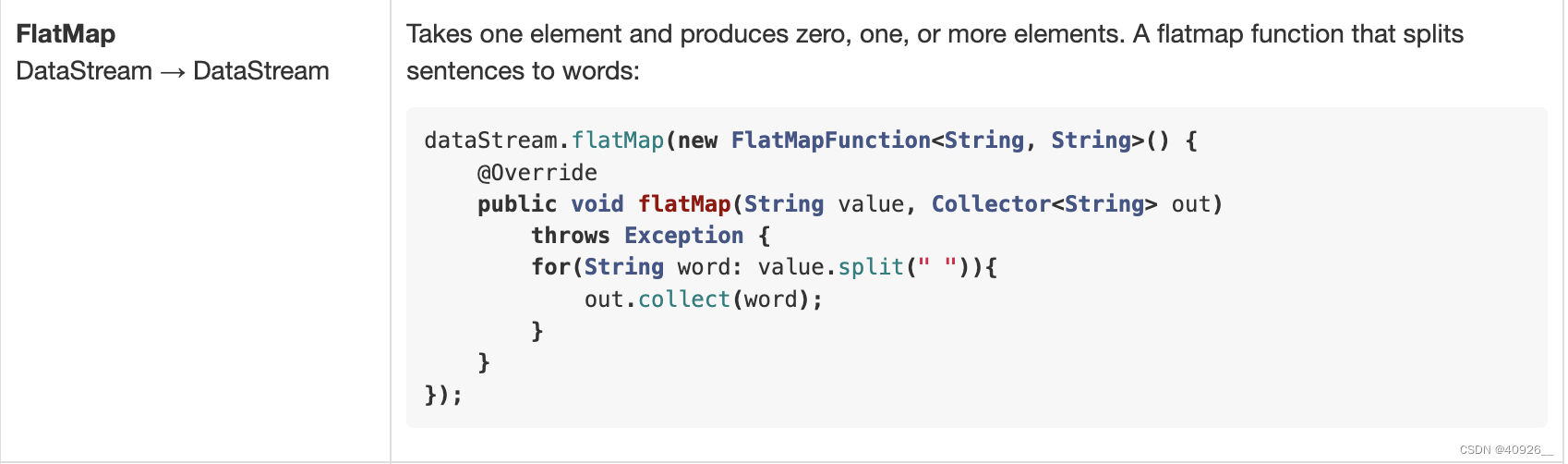

- FlatMap

import org.apache.flink.api.common.functions.FilterFunction;

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.util.Collector;

/**

* 将数据扁平化处理

* 例如:(flink,spark,hadoop) --> (flink) (spark) (hadoop)

*/

public class TestFlatMap {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

DataStreamSource<String> source = env.socketTextStream("localhost", 9630);

source.flatMap(new FlatMapFunction<String, String>() {

@Override

public void flatMap(String s, Collector<String> collector) throws Exception {

String[] value = s.split(",");

for (String split:value){

collector.collect(split);

}

}

}).filter(new FilterFunction<String>() {

@Override

public boolean filter(String s) throws Exception {

return !"sd".equals(s);

}

}).print();

env.execute("TestFlatMap");

}

}

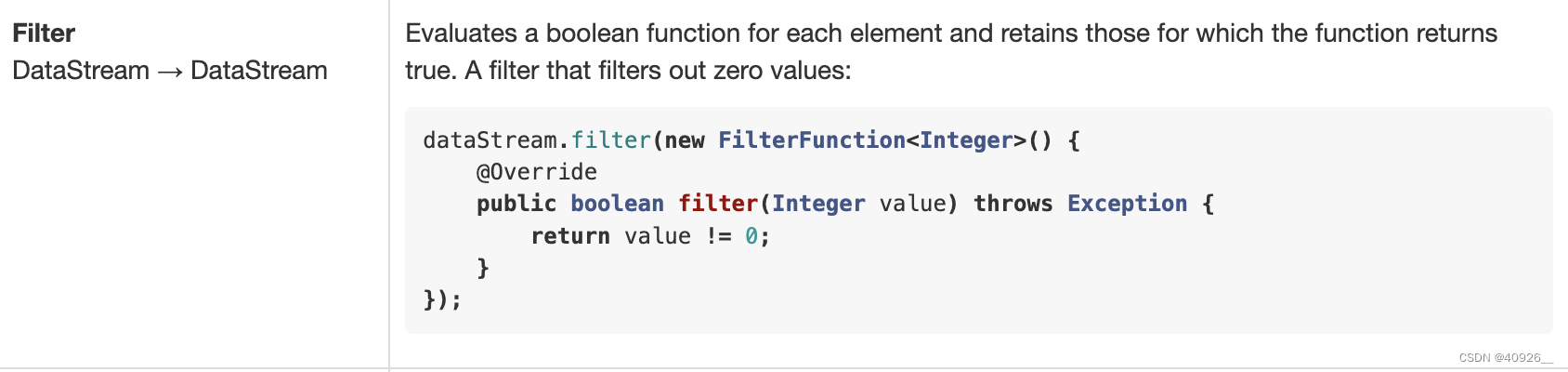

- Filter

import org.apache.flink.api.common.functions.FilterFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

/**

* 过滤器,将流中无关元素过滤掉

* 例如:(1,2,3,4,5) ----> (3,4,5)

*/

public class TestFilter {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

//ReadFileMap(env);

FilterTest(env);

env.execute("com.FilterTest.test");

}

private static void FilterTest(StreamExecutionEnvironment env){

DataStreamSource<Integer> source = env.fromElements(1, 2, 3, 4, 5);

source.filter(new FilterFunction<Integer>() {

@Override

public boolean filter(Integer value) throws Exception {

return value >= 3;

}

}).print();

}

}

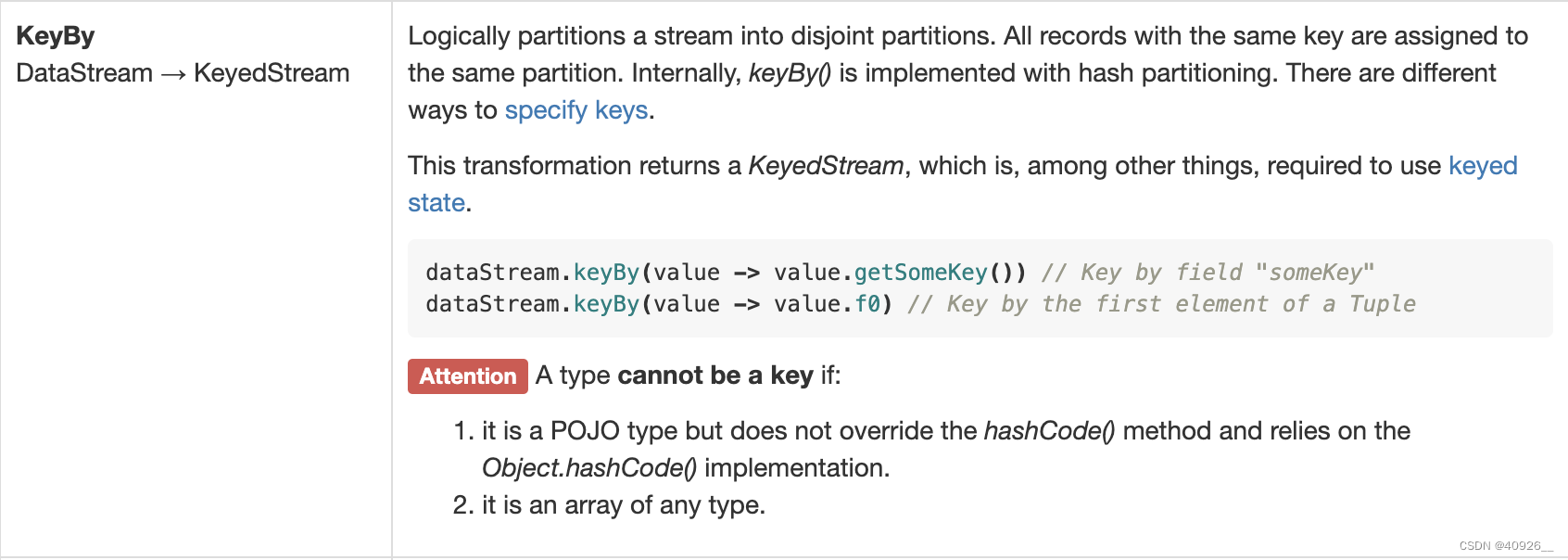

- KeyBy

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.util.Collector;

/**

* keyby算子将数据流转换成一个keyedStream,但这里需要注意的是有两种类型无法转换:

* 一个是没有实现hashcode方法的pojo类型,另一个是数组

* 例如:(flink,spark)---> (flink,1),(spark,1)

*/

public class TestKeyBy {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

KeyByTest1(env);

env.execute();

}

private static void KeyByTest1(StreamExecutionEnvironment env){

DataStreamSource<String> source = env.fromElements("flink","spark","flink");

source.flatMap(new FlatMapFunction<String, String>() {

@Override

public void flatMap(String s, Collector<String> out) throws Exception {

String[] split = s.split(",");

for (String value : split) {

out.collect(value);

}

}

})

.map(new MapFunction<String, Tuple2<String,Integer>>() {

@Override

public Tuple2<String, Integer> map(String value) throws Exception {

return Tuple2.of(value,1);

}

})

//过时方法

//.keyBy("f0").print();

//简写方法

//.keyBy(x -> x.f0).print();

.keyBy(new KeySelector<Tuple2<String, Integer>, String>() {

@Override

public String getKey(Tuple2<String, Integer> value) throws Exception {

return value.f0;

}

}).print();

}

}

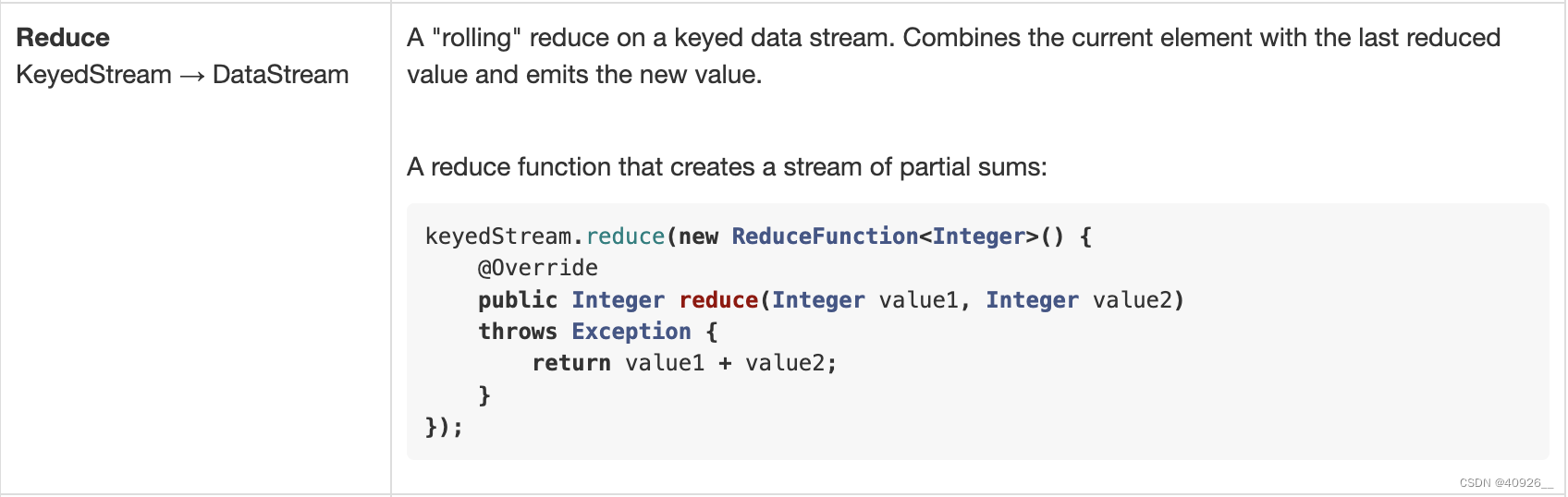

- Reduce

mport org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.ReduceFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.util.Collector;

/**

* 1、对传过来的数据按照" ,"进行分割

* 2、为每个出现的单词进行赋值

* 3、按照单词进行keyby

* 4、分组求和

*/

public class TestReduce {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

DataStreamSource<String> source = env.socketTextStream("localhost", 9630);

source.flatMap(new FlatMapFunction<String, String>() {

@Override

public void flatMap(String s, Collector<String> collector) throws Exception {

String[] value = s.split(",");

for (String split:value){

collector.collect(split);

}

}

}).map(new MapFunction<String, Tuple2<String,Integer>>() {

@Override

public Tuple2<String,Integer> map(String s) throws Exception {

return Tuple2.of(s,1);

}

}).keyBy(x ->x.f0)

.reduce(new ReduceFunction<Tuple2<String, Integer>>() {

@Override

public Tuple2<String, Integer> reduce(Tuple2<String, Integer> value1, Tuple2<String, Integer> value2) throws Exception {

return Tuple2.of(value1.f0, value1.f1+value2.f1);

}

}).print();

env.execute();

}

}

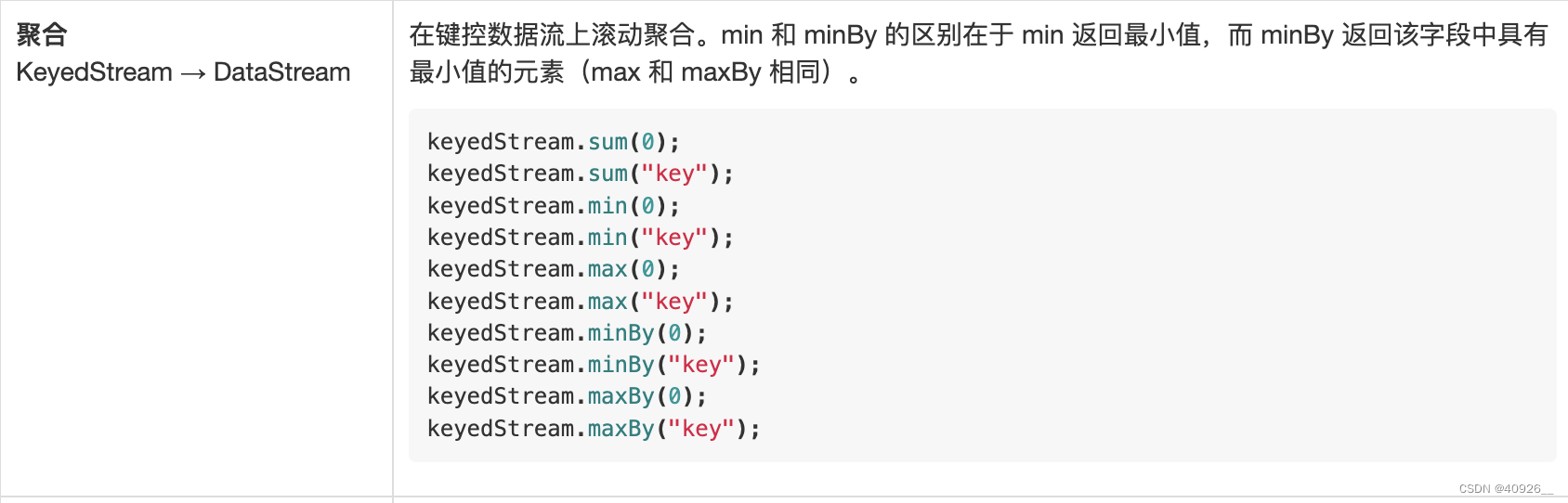

Aggregation function, usually used after the keydeStream stream