Table of contents

0. Introduction

1. Introduction to N-gram model

2. Perplexity

3. Text evaluation of N-gram model

4. Smoothing of N-gram model

5. Text generation based on N-gram model

6. Defects of statistical language models

7. Experiment summary

0. Introduction

First, consider this question: given an arbitrary sentence, how do you judge whether it sounds like something a person would say? This is exactly the problem a language model solves: judging whether a sentence looks like natural language or, more precisely, estimating the probability that it is human speech. Early approaches applied grammatical rules to judge whether sentences were well formed; later approaches relied on statistics and then on neural networks. This experiment focuses on statistical language models.

Knowledge points

- N-gram model

- Perplexity

- Text Evaluation Based on N-gram Model

- Smoothing of N-gram models

- Text generation based on N-gram model

- Drawbacks of Statistical Language Models

1. Introduction to N-gram model

Because natural language is diverse and rich, and keeps evolving over time and across regions, it is hard to judge whether a sentence is reasonable using hand-written grammatical rules alone. Consider this poem: "The crab is peeling my shell, and the notebook is writing about me. I am falling on the maple leaves and snowflakes all over the sky, and you are thinking about me." Many of its lines break the rules of language, yet it is a good poem. A statistical language model takes a data-driven view instead: it judges whether an utterance is reasonable from the patterns observed in a large corpus and computes the probability that the utterance occurs as natural language. This is a far more feasible approach.

Suppose we have a huge corpus at hand that covers every Chinese sentence that has ever appeared, in ancient and modern times. Given a sentence S consisting of n words, $w_1 w_2 w_3 \ldots w_n$, the probability $P(S) = P(w_1, w_2, w_3, \ldots, w_n)$ indicates how likely this sentence is to occur as human speech. How do we compute this probability? A simple idea is to check whether the sentence appears in the corpus and, if so, how many times. The number of occurrences gives a rough measure of how "human" the sentence is.

This plan sounds logical, but on closer inspection it has loopholes. What if the sentence is new and unheard of, like the three-line poem above? Its occurrence count is zero, yet it is exquisite language. On the other hand, we can never have a complete Chinese corpus; a corpus of a dozen or so gigabytes is already good, so any given sentence may well be absent from it. The root problem is that a whole sentence is too coarse a unit. Another approach is to count how often the phrases within the sentence occur in the corpus. This more flexible idea is the basis of the N-gram model (known in Chinese as the "N-gram grammar model").

Mathematically, the model rests on the assumption that the occurrence of the N-th word depends only on the preceding N-1 words and on no other words, i.e. $P(w_i \mid w_1, \ldots, w_{i-1}) \approx P(w_i \mid w_{i-N+1}, \ldots, w_{i-1})$. The probability of the whole sentence is then the product of the conditional probabilities of its words. A model with a given N is called an N-gram model. The unigram, bigram, and trigram models are defined below:

- When N=1, it is a unigram model (Uni-gram Model): the probability of each word is computed independently, and the probabilities are multiplied:

  $$P(S) = P(w_1)\,P(w_2)\cdots P(w_n) = \prod_{i=1}^{n} P(w_i)$$

- When N=2, it is a bigram model (Bi-gram Model): each word's probability is conditioned on the word immediately before it, and the conditional probabilities are multiplied:

  $$P(S) = P(w_1)\prod_{i=2}^{n} P(w_i \mid w_{i-1})$$

- When N=3, it is a trigram model (Tri-gram Model): each word's probability is conditioned on the two words before it, and the conditional probabilities are multiplied:

  $$P(S) = P(w_1)\,P(w_2 \mid w_1)\prod_{i=3}^{n} P(w_i \mid w_{i-2}, w_{i-1})$$

Note: bigram and trigram models are the most commonly used in practice.
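To make these definitions concrete, here is a minimal Python sketch (plain Python, no corpus needed) that lists the probability factors an N-gram model multiplies for an already-segmented sentence. The helper names `ngrams` and `factorization` are hypothetical, and the first words of the sentence are conditioned on however many preceding words exist, matching the formulas above.

```python
def ngrams(tokens, n):
    """Return all n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def factorization(tokens, n):
    """List the factors an n-gram model multiplies to score a sentence."""
    factors = []
    for i, word in enumerate(tokens):
        history = tokens[max(0, i - (n - 1)):i]  # at most n-1 preceding words
        if history:
            factors.append(f"P({word}|{','.join(history)})")
        else:
            factors.append(f"P({word})")
    return factors


tokens = ["crab", "in", "peel", "I", "of", "shell"]
print(ngrams(tokens, 2)[:2])         # [('crab', 'in'), ('in', 'peel')]
print(factorization(tokens, 2)[:3])  # ['P(crab)', 'P(in|crab)', 'P(peel|in)']
print(factorization(tokens, 3)[:3])  # ['P(crab)', 'P(in|crab)', 'P(peel|crab,in)']
```

With `n=1` every factor is an unconditional `P(word)`, which is the unigram case.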

For example, let us apply the bigram model to compute the probability of "the crab is peeling my shell". After word segmentation, the original Chinese sentence becomes "crab/in/peel/I/of/shell" (glossed word by word), so:

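$$P(\text{crab},\text{in},\text{peel},\text{I},\text{of},\text{shell}) = P(\text{crab})\,P(\text{in}\mid\text{crab})\,P(\text{peel}\mid\text{in})\,P(\text{I}\mid\text{peel})\,P(\text{of}\mid\text{I})\,P(\text{shell}\mid\text{of})$$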

How do we compute each conditional probability? By the definition of conditional probability, it can be estimated simply by counting in the corpus (a maximum likelihood estimate), for example:

$$P(\text{in}\mid\text{crab}) = \frac{\mathrm{Count}(\text{crab},\text{in})}{\mathrm{Count}(\text{crab})}$$
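A minimal sketch of this counting with Python's `collections.Counter`, on a tiny made-up segmented corpus (hypothetical data, not the tweet corpus used later). `bigram_prob` is the maximum likelihood estimate from the formula above, and `sentence_prob` multiplies the factors of the bigram formula, assuming $P(w_1)$ is estimated as Count($w_1$) divided by the total number of words. Both helper names are hypothetical. An unseen context word would cause a division by zero here, which is the problem smoothing addresses later.

```python
from collections import Counter

# Hypothetical toy corpus of already-segmented sentences
corpus = [
    ["crab", "in", "peel", "I", "of", "shell"],
    ["crab", "in", "sea"],
    ["I", "peel", "crab"],
]

unigram_counts = Counter(w for sent in corpus for w in sent)
bigram_counts = Counter(pair for sent in corpus for pair in zip(sent, sent[1:]))
total_words = sum(unigram_counts.values())


def bigram_prob(prev, word):
    """MLE estimate P(word | prev) = Count(prev, word) / Count(prev)."""
    return bigram_counts[(prev, word)] / unigram_counts[prev]


def sentence_prob(tokens):
    """P(w1) * product of P(wi | wi-1) under the bigram model."""
    prob = unigram_counts[tokens[0]] / total_words
    for prev, word in zip(tokens, tokens[1:]):
        prob *= bigram_prob(prev, word)
    return prob


print(bigram_prob("crab", "in"))  # Count(crab, in) / Count(crab) = 2/3
print(sentence_prob(corpus[0]))   # 1/48 for this toy corpus
```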

We now have a basic picture of how to build a statistical N-gram language model and use it to judge whether a sentence is reasonable. You may still wonder what practical applications such a model has. Broadly, language models are applied in two directions:

- Text evaluation: judging whether a sentence is reasonable, as described at the beginning. In scenarios where machines generate language, such as machine translation, chat systems, and text summarization, a language model can judge whether a generated sentence is usable.

- Text generation: since a language model gives the probability of a word following the preceding N-1 words, we can, given those words, generate phrases or text with a greedy strategy that always takes the most probable next word. Common applications include search engines (Google or Baidu) and word guessing or suggestions in input methods. For example, when you type one or a few words into Google, the search box usually offers several possible completions in a drop-down menu; these are guesses at the word string you want to search for.
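The drop-down suggestion idea can be sketched in a few lines of Python. This is an illustrative sketch only, not how Google or Baidu actually implement suggestions: `build_next_word_counts` and `suggest` are hypothetical helpers, the query log is made up, and the suggestions are simply the k most frequent words observed after the last typed word (a bigram view).

```python
from collections import Counter, defaultdict


def build_next_word_counts(sentences):
    """Map each word to a Counter of the words observed right after it."""
    next_counts = defaultdict(Counter)
    for sent in sentences:
        for prev, word in zip(sent, sent[1:]):
            next_counts[prev][word] += 1
    return next_counts


def suggest(next_counts, typed, k=3):
    """Return up to k most frequent continuations of the last typed word."""
    last_word = typed.split()[-1]
    followers = next_counts.get(last_word, Counter())  # .get avoids adding new keys
    return [word for word, _ in followers.most_common(k)]


# Hypothetical query log, already tokenized
queries = [
    ["new", "york", "times"],
    ["new", "york", "city"],
    ["new", "jersey"],
    ["new", "york", "times"],
]
next_counts = build_next_word_counts(queries)
print(suggest(next_counts, "new", k=2))  # ['york', 'jersey']
print(suggest(next_counts, "new york"))  # ['times', 'city']
```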

2. Perplexity

Different corpora yield different language models. How do we judge which one is better? This is where perplexity (PPL for short) comes in, a standard metric for the quality of language models in natural language processing. It estimates the probability of a sentence and normalizes it by the sentence length N. For a sentence $S = w_1 w_2 \cdots w_N$, the formula is:

$$PPL(S) = P(w_1 w_2 \cdots w_N)^{-\frac{1}{N}} = 2^{-\frac{1}{N}\sum_{i=1}^{N}\log_2 P(w_i \mid w_1 \cdots w_{i-1})}$$

For a bigram model, the condition $w_1 \cdots w_{i-1}$ reduces to $w_{i-1}$.

The higher the probability of a sentence, the lower its perplexity. So, to compare language models trained on different corpora or in different ways, prepare a test set of ordinary natural-language text and compute its perplexity under each model: the lower the average perplexity on these texts, the better the model.
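A small worked sketch of the formula in Python, assuming the per-word probabilities of a sentence are already known (the numbers below are made up). `sentence_perplexity` is a hypothetical helper; it computes in log space, as in the second form of the formula, which gives the same value as $P(S)^{-1/N}$.

```python
import math


def sentence_perplexity(word_probs):
    """PPL = 2 ** (-(1/N) * sum(log2 p_i)) over the N per-word probabilities."""
    n = len(word_probs)
    log2_prob = sum(math.log2(p) for p in word_probs)
    return 2 ** (-log2_prob / n)


likely = [0.5, 0.5, 0.5, 0.5]        # hypothetical: each word fairly probable
unlikely = [0.25, 0.25, 0.25, 0.25]  # hypothetical: each word less probable

print(sentence_perplexity(likely))    # 2.0
print(sentence_perplexity(unlikely))  # 4.0

# Same value as P(S) ** (-1/N), up to floating-point rounding
p_s = math.prod(unlikely)
print(p_s ** (-1 / len(unlikely)))
```

The more probable sentence gets the lower perplexity, as stated above.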

You may wonder why we use perplexity to measure how reasonable sentences are, instead of the sentence probability P(s) itself. Why not simply average the probabilities of all sentences in the test set? There are two reasons:

- Multiplying many conditional probabilities easily causes floating-point underflow, so we work with logarithms instead.

- Mathematically, perplexity is derived from entropy and can be interpreted as the average branching factor: the number of choices the model effectively has when predicting the next word. The fewer candidate words the model has when generating the next word, the less random it is, and roughly speaking the more accurate it is. (Imagine the extreme case where every word can be followed by any word in the vocabulary with equal probability: the language model would then be indistinguishable from random generation.) This view also explains why a smaller PPL means a better model.
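Both points can be checked numerically. This sketch uses hypothetical probabilities: 400 words that each have probability 0.001, whose product ($10^{-1200}$) is far below the smallest positive double and underflows to 0.0 while the log-probability stays finite; and a uniform model over a vocabulary of V = 1000 words, whose perplexity comes out as V, i.e. 1000 equally likely branches at every step.

```python
import math

# 1) Underflow: a direct product of many small probabilities collapses to 0.0
probs = [1e-3] * 400  # hypothetical per-word probabilities
product = 1.0
for p in probs:
    product *= p
log2_prob = sum(math.log2(p) for p in probs)
print(product)                   # 0.0
print(math.isfinite(log2_prob))  # True: the log-probability is still usable

# 2) Branching factor: a uniform model over V words has perplexity V
V = 1000
uniform = [1 / V] * 50  # every next word has probability 1/V
ppl = 2 ** (-sum(math.log2(p) for p in uniform) / len(uniform))
print(round(ppl, 6))             # 1000.0
```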

In practice, once a language model has been built, perplexity is also often used to measure how reasonable a single sentence is, i.e., for text evaluation.

3. Text evaluation of N-gram model

Next, we build a bigram model from an English corpus of tweets related to former US President Trump and use it to evaluate how reasonable an arbitrary input sentence is.

```python
import pandas as pd

df = pd.read_csv(
    'https://labfile.oss.aliyuncs.com/courses/3205/tweets.csv')  # Load the data

df.head()  # Preview the data; the `text` column holds the tweet text
```

Apply some basic preprocessing to the text: normalize its format, remove special symbols, and split it into words.

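```python
import re
import unicodedata


def basic_clean(text):
    """Preprocess a piece of text and return it as a list of words."""
    text = (unicodedata.normalize('NFKD', text)  # Normalize to a canonical (NFKD) form
            .encode('ascii', 'ignore')          # Drop characters with no ASCII equivalent
            .decode('utf-8', 'ignore')
            .lower())
    # Remove special symbols, then split on whitespace.
    # \w matches alphanumeric characters and \s matches whitespace,
    # so [^\w\s] matches every other character.
    words = re.sub(r'[^\w\s]', '', text).split()
    return words


# Example:
text = ";'//i love @this film"
print(basic_clean(text))
# ['i', 'love', 'this', 'film']
```

Then preprocess all the tweets: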

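```python
# Preprocess all tweets: take every text out of the DataFrame and turn the list
# into a single str (its repr); basic_clean then strips the brackets, quotes and
# commas of the repr and splits the result into words
words = basic_clean(str(df['text'].tolist()))

words[:10]  # Inspect the first few tokens
```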

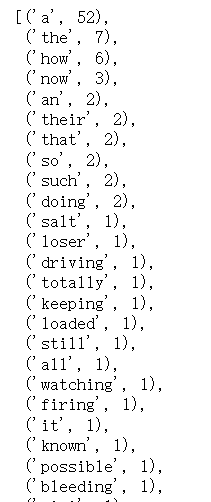

Use NLTK's `ngrams` function to extract unigrams and bigrams, then count them and sort them by frequency. (The unigram counts are needed as well, because they are the denominators of the bigram probabilities.)

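```python
import nltk

# Count unigrams and bigrams; value_counts() sorts them by descending frequency
unigrams = pd.Series(list(nltk.ngrams(words, 1))).value_counts()
bigrams = pd.Series(list(nltk.ngrams(words, 2))).value_counts()
```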

Bigram statistics are also useful for analyzing the text itself. The following plots the 10 most frequent bigrams in the corpus of Trump-related tweets. Can you spot any patterns?

```python
import matplotlib.pyplot as plt

bigrams_top10 = bigrams.head(10)  # bigrams is already sorted by frequency

bigrams_top10.sort_values().plot.barh(color='pink', width=.9, figsize=(12, 8))
plt.title('10 Most Frequently Occurring Bi-grams')
plt.ylabel('Bi-gram')
plt.xlabel('# of Occurrences')
plt.show()
```

Next, we write a function that computes the perplexity of an input sentence from the unigram and bigram counts above.


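```python
import math

import numpy as np


def compute_sentence_ppl_by_unigram(sentence, unigrams, bigrams):
    # unigrams / bigrams hold the frequency of each word / each word pair.
    # Despite its name, this function scores the sentence with the bigram model.
    sentence_bigram = list(nltk.ngrams(
        basic_clean(sentence), 2))  # All bigrams in the input sentence
    probs = []
    for gram in sentence_bigram:
        count_bigram = bigrams[gram]  # Co-occurrence frequency, i.e. count(word_pre, word_after)
        count_unigram = unigrams[(gram[0],)]  # Frequency of the preceding word, i.e. count(word_pre)
        # Their ratio is the conditional probability
        # p(word_after | word_pre) = count(word_pre, word_after) / count(word_pre)
        probs.append(count_bigram / count_unigram)
    len_sentence = len(sentence_bigram) + 1  # Sentence length N (number of words)
    # Perplexity per the formula, 2 ** (-(1/N) * sum(log2 p)); P(w1) is not included.
    # log2 is used so that the logarithm matches the base-2 power.
    sentence_ppl = math.pow(2, (-1 / len_sentence) *
                            np.sum(np.log2(probs)))
    return sentence_ppl
```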

Now run a perplexity test:

```python
sentence1 = "he is a loser"
sentence2 = "he is a man"

sentence1_ppl = compute_sentence_ppl_by_unigram(sentence1, unigrams, bigrams)
sentence2_ppl = compute_sentence_ppl_by_unigram(sentence2, unigrams, bigrams)

# sentence1 gets a lower perplexity than sentence2, i.e. the bigram model built on
# this corpus considers sentence1 more like natural language
print("sentence1_ppl:", sentence1_ppl)
print("sentence2_ppl:", sentence2_ppl)
```

The compute_sentence_ppl_by_unigram() function above is written in a common but verbose style; the same logic can be written more concisely, as follows. Use whichever version you prefer.

```python
# Concise version
def compute_sentence_ppl_by_unigram(sentence, unigrams, bigrams):
    sentence_bigram = list(nltk.ngrams(
        basic_clean(sentence), 2))  # All bigrams in the input sentence
    probs = [bigrams[gram] / unigrams[(gram[0],)]
             for gram in sentence_bigram]  # Probability of each bigram
    sentence_ppl = math.pow(
        2, -1 / (len(sentence_bigram) + 1) * np.sum(np.log2(probs)))  # Sentence perplexity per the formula
    return sentence_ppl
```

When the test data contains out-of-vocabulary (OOV) words, i.e. words that never appeared in the corpus, or unseen bigrams, calling compute_sentence_ppl_by_unigram() raises an error:

```python
sentence3 = "Mr Huang is a loser"
sentence3_ppl = compute_sentence_ppl_by_unigram(sentence3, unigrams, bigrams)
# KeyError: ('mr', 'huang')
```

4. Smoothing of N-gram model

The error above reveals a problem: when a sentence contains OOV words or unseen phrases, the plain perplexity calculation cannot handle it. For example, consider:

$$P(\text{in}\mid\text{crab}) = \frac{\mathrm{Count}(\text{crab},\text{in})}{\mathrm{Count}(\text{crab})}$$

If "crab" does not occur in the corpus used to build the language model, the denominator Count(crab) is zero and the probability is undefined, which is unreasonable. Even if "crab" occurs, if the pair "crab, in" never does, then P(in|crab) is zero and its logarithm cannot be taken, which is also unreasonable. Can we simply assign such sentences a probability of zero? No. In general, our corpus does not represent the true distribution of a language, so a whole sentence should not be rejected just because one of its words or phrases is missing from the corpus.

Smoothing techniques address this problem. Common methods include Add-One Smoothing, Add-K Smoothing, Good-Turing Smoothing, and Linear Interpolation. Here we introduce Add-One Smoothing, which adds one to every N-gram count so that each N-gram is treated as occurring at least once in the corpus; only the calculation of the conditional probabilities needs to change. Let M be the number of word tokens in the corpus (counting repeats) and V the number of distinct words in the vocabulary. For the unigram model, the smoothed probability of each word is:

$$P_{\text{add-1}}(w_i) = \frac{\mathrm{Count}(w_i) + 1}{M + V}$$

For the bigram model, the smoothed conditional probability is:

$$P_{\text{add-1}}(w_i \mid w_{i-1}) = \frac{\mathrm{Count}(w_{i-1}, w_i) + 1}{\mathrm{Count}(w_{i-1}) + V}$$
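A minimal Python sketch of the smoothed bigram estimate on a hypothetical toy token stream. It is written in the slightly more general Add-K form, $(\mathrm{Count}(w_{i-1}, w_i) + k) / (\mathrm{Count}(w_{i-1}) + kV)$, so `k=1` reproduces the Add-One bigram formula; the helper name `add_k_bigram_prob` is hypothetical. Unseen bigrams, and even unseen context words, now get a small nonzero probability, and for the context word "the" in this toy stream the smoothed probabilities over the vocabulary still sum to 1.

```python
from collections import Counter

tokens = "the cat sat on the mat the cat ran".split()  # hypothetical toy corpus
unigram_counts = Counter(tokens)
bigram_counts = Counter(zip(tokens, tokens[1:]))
V = len(unigram_counts)  # vocabulary size: number of distinct words (6 here)


def add_k_bigram_prob(prev, word, k=1.0):
    """Add-k estimate of P(word | prev); k=1 is Add-One (Laplace) smoothing."""
    return (bigram_counts[(prev, word)] + k) / (unigram_counts[prev] + k * V)


print(add_k_bigram_prob("the", "cat"))  # (2 + 1) / (3 + 6) = 1/3
print(add_k_bigram_prob("the", "dog"))  # unseen bigram: (0 + 1) / (3 + 6) = 1/9
print(add_k_bigram_prob("dog", "cat"))  # unseen context: (0 + 1) / (0 + 6) = 1/6

# The smoothed distribution after "the" still sums to 1 over the vocabulary
print(sum(add_k_bigram_prob("the", w) for w in unigram_counts))
```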

Following this method, we add the smoothing term to the perplexity function:

```python
def compute_sentence_ppl_by_unigram_smooth(sentence, unigrams, bigrams):
    # unigrams / bigrams hold the frequency of each word / each word pair
    sentence_bigram = list(nltk.ngrams(
        basic_clean(sentence), 2))  # All bigrams in the input sentence
    V = len(unigrams)  # Vocabulary size (number of distinct words in the corpus)
    probs = []
    for gram in sentence_bigram:
        # Co-occurrence frequency count(word_pre, word_after); 0 for an unseen bigram
        count_bigram = bigrams.get(gram, 0)
        # Frequency of the preceding word count(word_pre); 0 for an OOV word
        count_unigram = unigrams.get((gram[0],), 0)
        # Add-One smoothed conditional probability
        # p(word_after | word_pre) = (count(word_pre, word_after) + 1) / (count(word_pre) + V)
        probs.append((count_bigram + 1) / (count_unigram + V))
    len_sentence = len(sentence_bigram) + 1  # Sentence length N (number of words)
    sentence_ppl = math.pow(2, (-1 / len_sentence) *
                            np.sum(np.log2(probs)))  # Sentence perplexity per the formula
    return sentence_ppl
```

After switching to `.get()` lookups with a default of 0 and adding the smoothing term, the program runs normally:

```python
sentence3 = "Mr Huang is a loser"
sentence3_ppl = compute_sentence_ppl_by_unigram_smooth(
    sentence3, unigrams, bigrams)
print(sentence3_ppl)  # A finite perplexity is printed instead of a KeyError
```

```python
# Concise version
def compute_sentence_ppl_by_unigram_smooth(sentence, unigrams, bigrams):
    sentence_bigram = list(nltk.ngrams(
        basic_clean(sentence), 2))  # All bigrams in the input sentence
    V = len(unigrams)  # Vocabulary size
    probs = [(bigrams.get(gram, 0) + 1) / (unigrams.get((gram[0],), 0) + V)
             for gram in sentence_bigram]  # Smoothed probability of each bigram
    sentence_ppl = math.pow(
        2, -1 / (len(sentence_bigram) + 1) * np.sum(np.log2(probs)))  # Sentence perplexity per the formula
    return sentence_ppl
```

5. Text generation based on N-gram model

Besides text evaluation, an N-gram model can also generate text. The core idea is simple: choose the next word based on the previous word, preferring the most likely one. First, we build a dictionary that lists, for each word in the corpus, the words that may follow it and their frequencies.

```python
# key: a word; value: list of (following word, frequency) pairs.
# bigrams is sorted by descending frequency, so each list is sorted the same way.
word2bigram_count = {}
for bigram in bigrams.keys():
    if bigram[0] in word2bigram_count:
        word2bigram_count[bigram[0]].append((bigram[1], bigrams[bigram]))
    else:
        word2bigram_count[bigram[0]] = [(bigram[1], bigrams[bigram])]
```

For example, the words that follow "is" in the corpus, with their frequencies (sorted in descending order):

```python
word2bigram_count["is"]
```

Next, we continue generating from a given text. The last word of the text serves as the first previous word; whenever the previous word does not occur in the corpus, a random word from the corpus is chosen instead.

```python
import random


def generate_sentence_by_bigram(sentence, generate_len, word2bigram_count):
    # generate_len: number of words to generate;
    # word2bigram_count: possible following words of each word, with frequencies
    pre_word = basic_clean(sentence)[-1]  # Start from the last word of the input sentence
    generate_words = []  # Generated words
    for _ in range(generate_len):
        if pre_word in word2bigram_count:  # pre_word occurs in the corpus
            # Greedy choice: the most frequent word following pre_word
            next_word = word2bigram_count[pre_word][0][0]
        else:  # pre_word does not occur in the corpus
            next_word = random.choice(
                list(word2bigram_count.keys()))  # Pick a random word as next_word
        generate_words.append(next_word)
        pre_word = next_word
    return sentence + " " + " ".join(generate_words)  # Join the input and the generated words


# Example:
sentence4 = "he is"
generate_len = 5
generate_sentence = generate_sentence_by_bigram(
    sentence4, generate_len, word2bigram_count)
print(generate_sentence)
# he is a loser who i dont
```

To make the generated sentences more varied, we add some randomness to the generation process.

```python
def generate_sentence_by_bigram(sentence, generate_len, word2bigram_count, random_prob=0.5):
    # generate_len: number of words to generate;
    # word2bigram_count: possible following words of each word, with frequencies;
    # random_prob: probability of picking a random follower instead of the most frequent one
    pre_word = basic_clean(sentence)[-1]  # Start from the last word of the input sentence
    generate_words = []  # Generated words
    for _ in range(generate_len):
        if pre_word in word2bigram_count:  # pre_word occurs in the corpus
            if random.random() > random_prob:
                # The most frequent word following pre_word
                next_word = word2bigram_count[pre_word][0][0]
            else:
                next_words = [word for word, _freq
                              in word2bigram_count[pre_word]]  # All possible followers
                next_word = random.choice(next_words)  # Pick one uniformly at random
        else:  # pre_word does not occur in the corpus
            next_word = random.choice(
                list(word2bigram_count.keys()))  # Pick a random word as next_word
        generate_words.append(next_word)
        pre_word = next_word
    return sentence + " " + " ".join(generate_words)  # Join the input and the generated words


# Example:
sentence4 = "he is"
generate_len = 5
generate_sentence = generate_sentence_by_bigram(
    sentence4, generate_len, word2bigram_count)
print(generate_sentence)
# One possible (random) output: he is not antivaccine but little girl
```

As the output shows, generating a complete sentence this way does not give satisfying results. In practice, this approach is mostly used for query suggestions in search engines (such as Google or Baidu) or word prediction in input methods, and the size and content of the corpus should be tailored to the application. Does that mean language models cannot handle generation tasks? No. Today, language models built on large-scale neural networks and trained on massive data can even write poems, news articles, and novels. We will look at these models next.

6. Defects of statistical language models

The N-gram model, a statistical language model, models the probability of N consecutive words occurring together. Its assumption is that the probability of a word at a given position can be estimated as the ratio of the frequency with which the word occurs together with its preceding N-1 words to the frequency with which those N-1 words occur together. This approach has two major defects:

- Sparsity: an N-gram model can only model words and word sequences that appear in the training text. When the test text contains words or phrases that never appeared in the training text, the model cannot estimate their probabilities properly (smoothing only mitigates sparsity; it does not fundamentally solve it).

- Poor generalization: the model relies on fixed word combinations and requires exact matches; otherwise it cannot output a meaningful probability. In other words, it cannot generalize to words or phrases with similar meanings in new text. For example, suppose the corpus contains the sentence "I go to work, I am happy" and the test data contains "I work, I am happy". The two sentences are similar in meaning. A good language model should recognize that they are grammatically and semantically similar and assign them similar probabilities, but a statistical model cannot.
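The sparsity and generalization problems can be reproduced on the example above with a few lines of Python. This is a hypothetical toy setup: the training "corpus" is just the first sentence, both sentences are lowercased and stripped of punctuation, and the probabilities are unsmoothed maximum likelihood estimates. The single unseen bigram ("i", "work") drives the probability of the whole test sentence to zero, even though it is a perfectly reasonable sentence.

```python
from collections import Counter

train = "i go to work i am happy".split()  # hypothetical training text
test = "i work i am happy".split()         # similar meaning, different wording

unigram_counts = Counter(train)
bigram_counts = Counter(zip(train, train[1:]))

test_bigrams = list(zip(test, test[1:]))
unseen = [pair for pair in test_bigrams if pair not in bigram_counts]
print(unseen)  # [('i', 'work')]

# Unsmoothed bigram probability of the test sentence (P(w1) omitted for brevity)
prob = 1.0
for prev, word in test_bigrams:
    prob *= bigram_counts[(prev, word)] / unigram_counts[prev]
print(prob)  # 0.0
```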

These shortcomings of traditional language models led researchers to turn to neural network models, hoping that deep learning could automatically learn deeper grammatical and semantic features, alleviate sparsity, and improve generalization. Early neural network language models include:

- Feedforward neural network language model (FFLM) [addresses the sparsity problem]

- Recurrent neural network language model (RNNLM) [improves generalization and handles long context better]

In recent years, language models based on the self-attention mechanism (such as BERT and the GPT series) have emerged and achieved very good results on many downstream tasks. Note that for this family of models the meaning of "language model" itself has broadened: it refers more to a way of training a model, namely pre-training on large-scale data with the task of predicting missing or upcoming words from the rest of the sentence. Its use is no longer limited to sentence evaluation or generation; combined with different model structures, it can tackle many more tasks, such as reading comprehension, question answering, and text classification.

7. Experiment summary

In my view, a language model can also be seen as a way for a machine to model and understand language. Its development closely mirrors the history of natural language processing itself: from rules to statistics, and from statistics to deep learning. This experiment covered the following points about language models:

- N-gram model

- Perplexity

- Text Evaluation Based on N-gram Model

- Smoothing of N-gram models

- Text generation based on N-gram model

- Drawbacks of Statistical Language Models

Once a machine has produced text, we can evaluate whether it reads like human language. But if the text contains errors, can they be detected and corrected automatically? In the next experiment, we will study a fundamental and widely used task in natural language processing: text error correction.