Preface

Unity has shipped a texture streaming system since version 2018.3; the official Unity documentation calls it the Mipmap Streaming System. Used properly, this relatively advanced technology can reduce the memory occupied by textures and speed up loading. It also has many pitfalls, which is why few current projects actually use it. This article briefly introduces Unity's texture streaming system, makes reasoned guesses about its operating strategy, tests its parameters together with some of the built-in Texture parameters, and summarizes the results. If anything here is wrong, corrections are welcome.

Unity version: 2021.3.12f1

Platform: Android

Rendering pipeline: URP

Test machine: HUAWEI P30

Test project GitHub address: GitHub - recaeee/Unity-Mipmap-And-Texture-Streaming

Mipmap

The principle of Mipmaps will not be covered in detail here (a Mip is simply a downsampled version of the original texture). There are many articles about it online, and the GAMES101 course is recommended (in short, Mipmaps address the size mismatch between screen pixels and texels). When Mipmaps are enabled for a texture, the memory it occupies becomes about 4/3 of the original. Note that Mipmaps are intended for 3D objects; for textures such as UI, there is almost never a need to enable them.

First, here is how Unity uses Mipmaps at runtime when Texture Streaming is not enabled. Only mobile platforms are considered; the principle is similar on other platforms, though the memory architecture may differ.
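The 4/3 figure follows from a geometric series. This is a sketch that assumes every Mip level has half the width and height of the level before it (one quarter of the texels) and that the texture format's size is proportional to the texel count; $M_0$ denotes the size of Mip 0 and $n$ the index of the last level:

```latex
\[
M_{\text{total}}
  = M_0 \sum_{k=0}^{n} \left(\frac{1}{4}\right)^{k}
  = M_0 \cdot \frac{1 - (1/4)^{n+1}}{1 - 1/4}
  \;\longrightarrow\; \frac{4}{3}\, M_0 \quad (n \to \infty)
\]
```

For a real texture the chain is finite, so the total is slightly below 4/3, and block-compressed formats may deviate a little because the smallest levels cannot shrink below one block.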

Refer to the official Unity documentation:

Mipmaps introduction - Unity Manual

- When rendering a GO (GameObject) that uses a Mipmapped texture, the CPU first loads all Mip levels of the texture from disk into video memory.

- When the GPU samples the texture, it determines which Mipmap level to use from the texture coordinates of the current pixel and two internal values, DDX and DDY, that the GPU computes. In other words, it finds the texel size that best matches the area the pixel actually covers in the scene and picks the Mip level accordingly. As a side note, DDX and DDY describe how the UVs change between the current pixel and the pixels next to and above it, including distance and angle; the GPU uses them to determine how much texture detail is visible to the camera.
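The level selection described above can be sketched in a few lines. This is the simplified isotropic rule commonly described for graphics APIs, not Unity's or any particular GPU's exact implementation (real hardware also applies LOD bias, anisotropic filtering and trilinear blending). The function name `mip_level` and its parameters are made up for this illustration.

```python
import math

def mip_level(duv_dx, duv_dy, tex_size, mip_count):
    """Approximate the mip level picked for one pixel.

    duv_dx, duv_dy: (du, dv) change in UV toward the horizontal and
                    vertical neighbor pixel (what DDX / DDY describe).
    tex_size:       (width, height) of mip 0 in texels.
    mip_count:      number of mip levels in the chain.
    """
    width, height = tex_size
    # Convert the UV derivatives to texel units: how many texels of
    # mip 0 are crossed when moving one pixel on screen.
    len_x = math.hypot(duv_dx[0] * width, duv_dx[1] * height)
    len_y = math.hypot(duv_dy[0] * width, duv_dy[1] * height)
    rho = max(len_x, len_y)
    if rho <= 1.0:
        return 0.0  # one texel or less per pixel: use the full-size level
    # Each mip level halves the resolution, hence the log2.
    return min(math.log2(rho), float(mip_count - 1))

size = (2048, 2048)
# One pixel covers one texel: level 0.
print(mip_level((1 / 2048, 0.0), (0.0, 1 / 2048), size, 12))
# One pixel covers 4 texels in each direction: level 2.
print(mip_level((4 / 2048, 0.0), (0.0, 4 / 2048), size, 12))
```

The farther away or the more oblique a surface is, the larger the UV step per pixel becomes, and the higher (blurrier) the selected level.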

Texture Streaming

In Unity, texture streaming is called the Mipmap Streaming System. Based on the camera position, it lets Unity load only the Mipmap levels that are actually needed into video memory, instead of loading every Mipmap level and leaving the GPU to choose among them.

1. Mipmap loading question

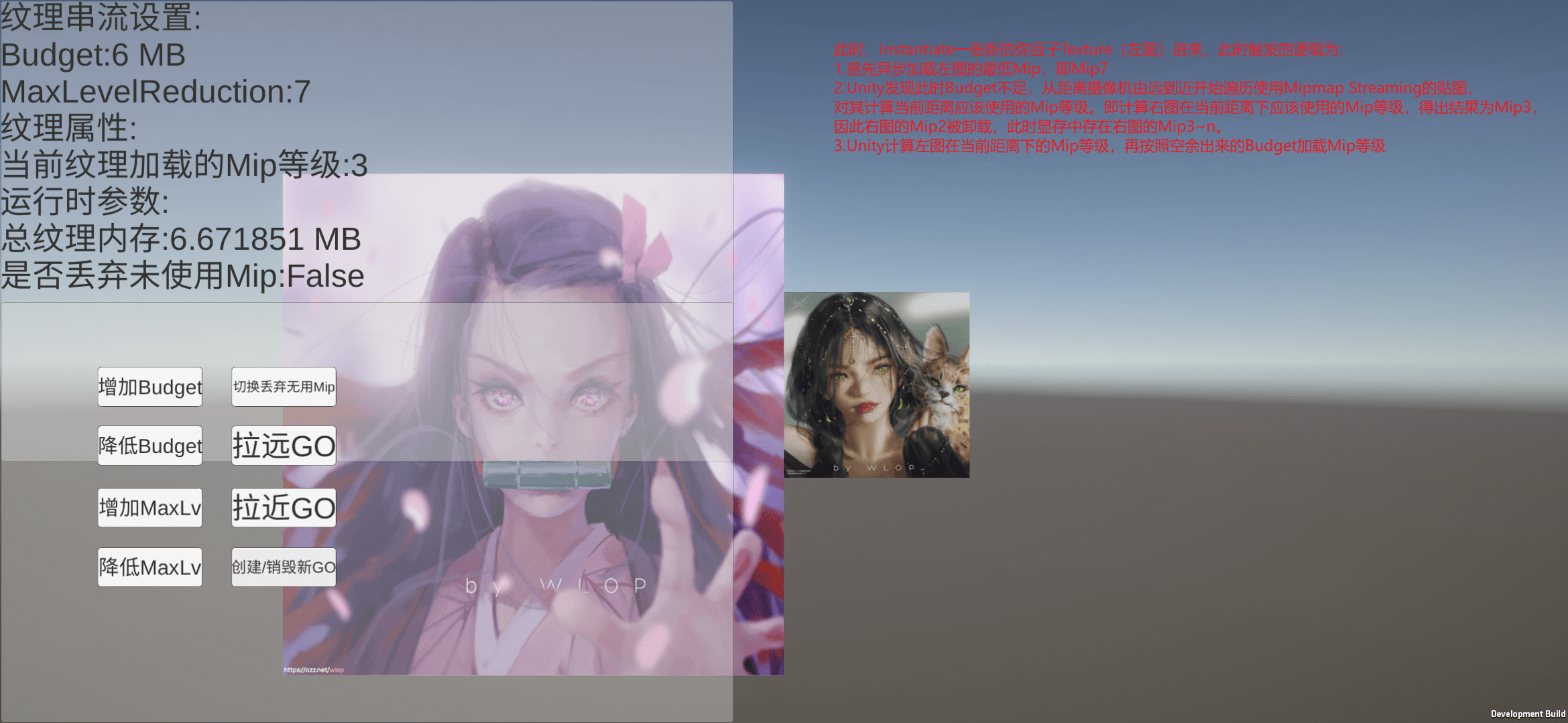

Here I had a small question. With Texture Streaming turned on, if the loaded Mipmap level is 2, are the higher (smaller) Mipmap levels also loaded into video memory? I set up a test project to find out.

In the test project I used a 2048*2048 texture with Mipmaps enabled. First, with Texture Streaming turned off, I built to the device, captured a memory snapshot, and found that the texture occupied 2.7MB in total. Without Mipmaps the texture occupies 2.0MB, so enabling Mipmaps multiplies its memory by 1.35 (close to 4/3). Because Mip 0 occupies 2MB, we can estimate that Mip 1 occupies 0.5MB, Mip 2 occupies 128KB, and Mip 3 occupies 32KB.

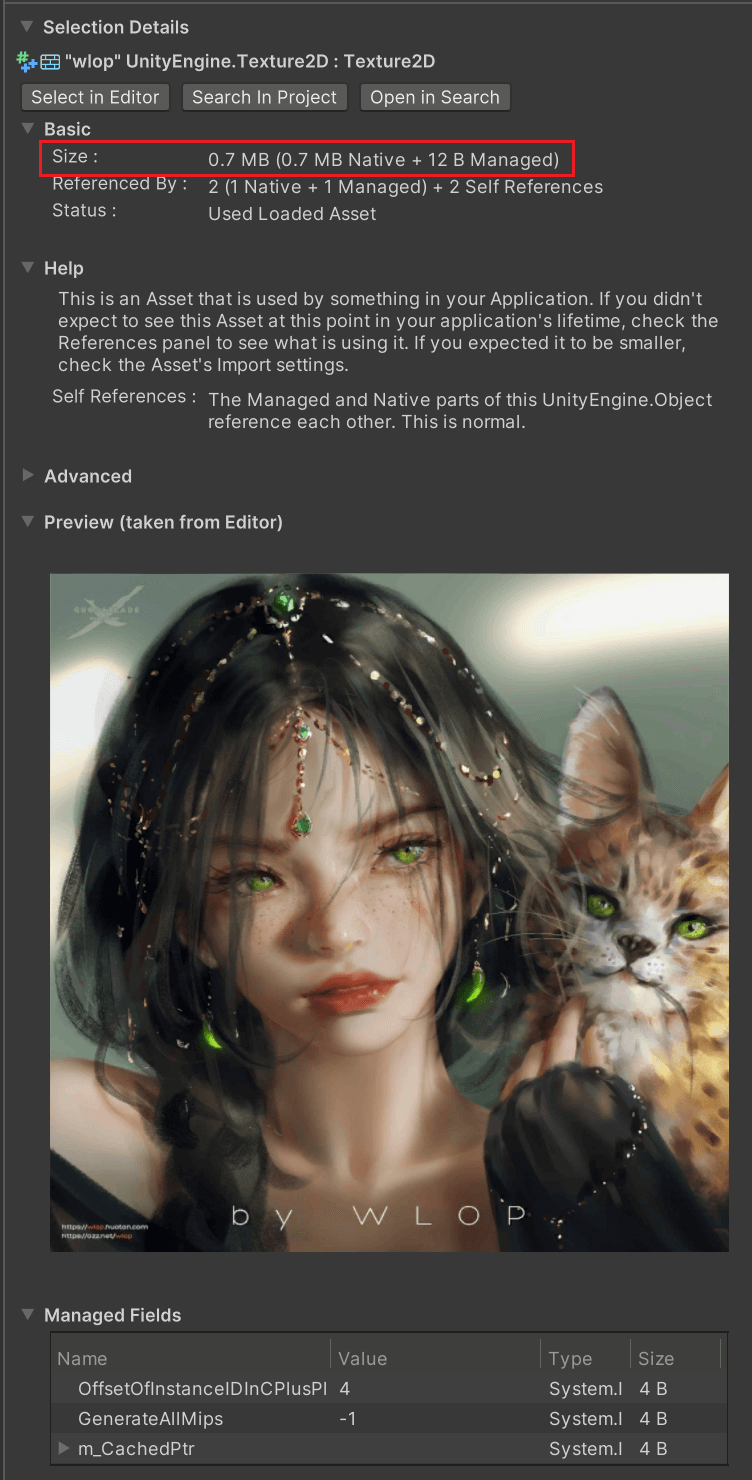

Next, I turned on Texture Streaming, had the Texture Streaming System load the texture at Mip 1, and captured memory again. As shown in the figure below, the texture occupies 0.7MB. From this we can conclude that only Mip 0 was unloaded and Mip 1~n are still in video memory.

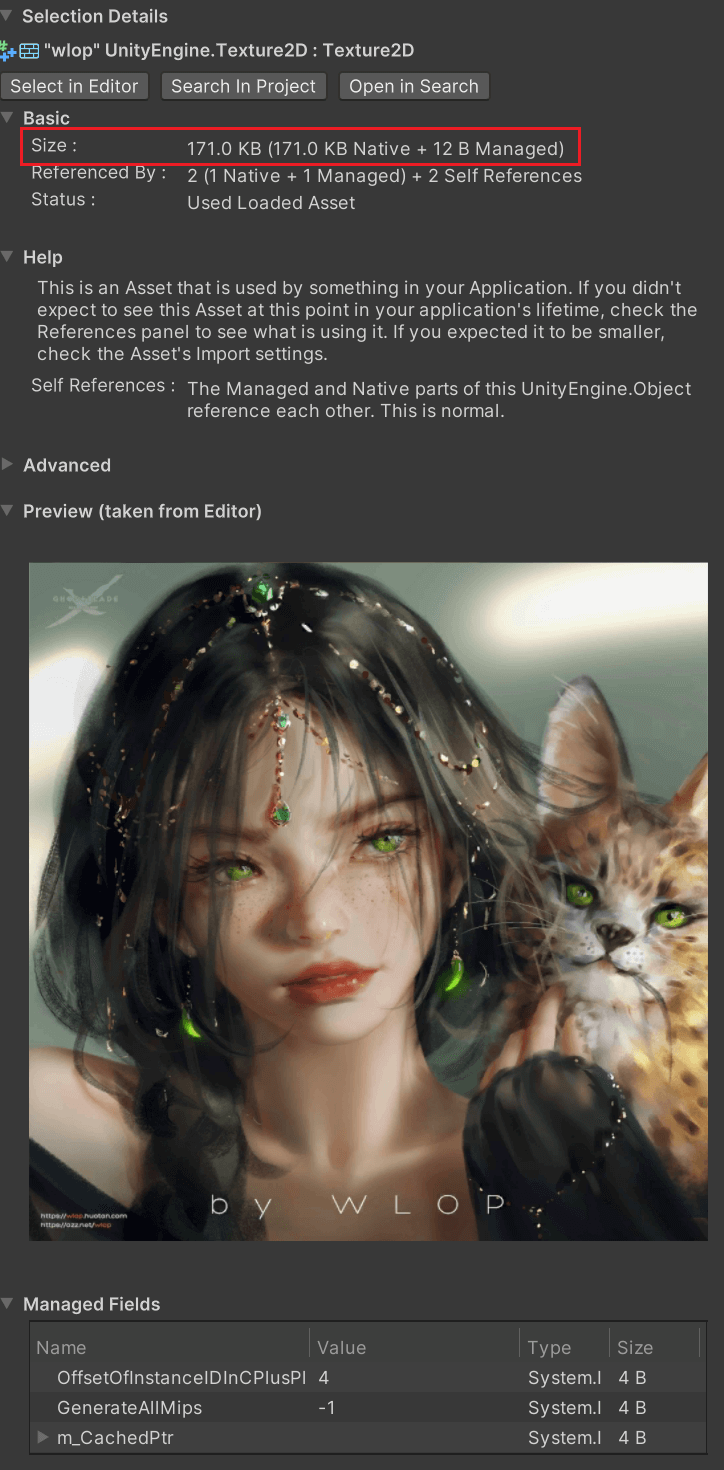

In the same way, I had it load at Mip 2 and captured memory. As shown in the figure below, the texture occupies 171KB, which matches the estimate of (2.7-2-0.5)MB, about 0.2MB.

The conclusion: a texture has Mip levels 0~n. With Texture Streaming turned on, if the Mip level calculated by Unity is x, Unity loads Mip levels x~n of the texture into video memory, which can also be understood as discarding Mip levels 0~(x-1). (From here on, "loading Mip level x" means loading Mip levels x~n.)
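The measurements above can be checked with a little arithmetic. The sketch below assumes that each Mip level is exactly one quarter of the size of the previous one and that a 2048*2048 texture has 12 levels (2048 down to 1); the helper names `mip_sizes` and `resident_bytes` are made up for this illustration, and real compressed formats may differ slightly at the smallest levels.

```python
MB = 1024 * 1024

def mip_sizes(base_bytes, mip_count):
    """Size in bytes of every mip level, each 1/4 of the previous one."""
    return [base_bytes / (4 ** level) for level in range(mip_count)]

def resident_bytes(base_bytes, mip_count, first_loaded_level):
    """Memory used when levels first_loaded_level..n are resident."""
    return sum(mip_sizes(base_bytes, mip_count)[first_loaded_level:])

base = 2 * MB   # measured size of mip 0 in the test above
levels = 12     # 2048, 1024, ..., 1

for x in range(3):
    size_mb = resident_bytes(base, levels, x) / MB
    print(f"mip {x}~n resident: {size_mb:.3f} MB")
# mip 0~n resident: 2.667 MB
# mip 1~n resident: 0.667 MB
# mip 2~n resident: 0.167 MB
```

These values line up with the captured 2.7MB, 0.7MB and 171KB, which supports the reading that loading Mip level x keeps levels x~n in video memory.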

This is quite reasonable. A GO that uses a Mipmapped texture may need more than one Mip level at once: if the GO is a long strip, for example, its distant pixels may still use a higher-level Mip. The Texture Streaming System simply discards the few low-level Mips that will definitely not be used and does not load them into video memory (or unloads them from it).

2. Texture Streaming loading logic

After Texture Streaming is enabled, the way Unity uses Mipmaps changes somewhat, because only the Mipmap levels that need to be loaded are kept in video memory. At runtime the steps are roughly as follows (with Texture Streaming enabled, and ignoring factors such as the texture streaming budget and Max Level Reduction, which are described in detail later).

(1) When rendering a GO that uses a Mipmapped texture, the CPU asynchronously loads the lowest-resolution Mip level (as configured by the user) into video memory.

(2) The GPU first renders the GO with these low-resolution Mips.

(3) The CPU calculates which Mip levels the GO will definitely not use. For example, a result of x means that only Mip levels x+1~n may be used, so Mip levels x+1~n are loaded into video memory.

(4) When the GPU samples the texture, it determines which Mipmap level to use from the texture coordinates of the current pixel and the two internal values DDX and DDY computed by the GPU. In other words, it finds the texel size that best matches the area the pixel actually covers in the scene and picks the Mip level accordingly. As a side note, DDX and DDY describe how the UVs change between the current pixel and the pixels next to and above it, including distance and angle; the GPU uses them to determine how much texture detail is visible to the camera.

2.1 Asynchronous texture loading AUP

One advantage of Texture Streaming is that when an object is loaded, a higher-level (lower-resolution) Mip is loaded asynchronously first so that the object can be rendered sooner, and lower-level Mips are then loaded to show the high-precision texture detail. In game, a newly loaded object first appears with a blurry texture, which is then replaced by a sharper one.

Here are a few words about how asynchronous texture loading (the Asynchronous Upload Pipeline, AUP) works, mainly based on the official documentation and the article "Optimizing Loading Performance: Understanding Asynchronous Upload Pipeline AUP".

In a synchronous upload pipeline, Unity must load both the metadata (header data) of the texture or mesh and its binary data (every texel of the texture or every vertex of the mesh) in a single frame. In an asynchronous upload pipeline, Unity must load only the header data in a single frame and streams the binary data to the GPU in subsequent frames.

In the synchronous upload pipeline, when the project is built, Unity writes both the header data and the binary data of a synchronously loaded mesh or texture into the same .res file (res stands for Resource). At runtime, when a program loads a texture or mesh synchronously, Unity loads its header data and binary data from the .res file (on disk) into memory (RAM). Once all the data is in memory, Unity uploads the binary data to the GPU (before the draw call). Both loading and uploading happen in a single frame on the main thread.

In the asynchronous upload pipeline, when the project is built, Unity writes the header data to a .res file and the binary data to a separate .resS file (the S presumably stands for Streaming). At runtime, when a program loads a texture or mesh asynchronously, Unity loads the header data from the .res file (on disk) into memory (RAM). Once the header data is in memory, Unity uses a fixed-size ring buffer (whose size is configurable) to stream the binary data from the .resS file (on disk) to the GPU. Unity uses multiple threads to stream the binary data over several frames.

In addition, it should be noted that if the project is built on the Android platform, LZ4 compression needs to be enabled to enable asynchronous texture loading (and because the Texture Streaming System uses texture asynchronous loading, the prerequisite for the Texture Streaming System is also LZ4 compression).

Starting from Unity 2018.3 beta, the Async Upload Pipeline is used to load textures and meshes asynchronously (AUP does not apply to read/write-enabled textures and meshes or to compressed meshes).

When AUP loads textures asynchronously, the first frame will load the .res header data (metadata of the texture) into memory, and then stream the .resS binary data (each Texel of the texture). In the process of streaming .resS, AUP will execute the following logic.

(1) Wait for free memory space to appear in the ring buffer on the CPU.

(2) The CPU reads part of the binary data from the .resS file on the disk, and fills it into the free memory of the ring buffer in step 1.

(3) Perform post-processing such as texture decompression, mesh collider generation, and per-platform fixups.

(4) Upload on the render thread in a time-sliced manner: in each frame, a fixed slice of CPU time is spent transferring data from the ring buffer to the GPU, so the amount of data transferred per frame is determined by the length of the time slice.

(5) Release the memory that has been passed to the GPU on the ring buffer.
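The loop above can be modeled with a toy simulation. It is only a sketch of the described behavior, not Unity's implementation: the time slice is represented as a number of bytes that can be uploaded per frame, the post-processing of step (3) is left out, and the function name `simulate_upload` and all of its parameters are invented for this example.

```python
from collections import deque

def simulate_upload(total_bytes, buffer_size, read_per_frame, upload_per_frame):
    """Return how many frames it takes to stream total_bytes to the GPU."""
    if min(total_bytes, buffer_size, read_per_frame, upload_per_frame) <= 0:
        raise ValueError("all arguments must be positive")
    remaining_on_disk = total_bytes
    ring = deque()   # chunks waiting in the ring buffer
    buffered = 0     # bytes currently held by the ring buffer
    uploaded = 0
    frames = 0
    while uploaded < total_bytes:
        frames += 1
        # Steps (1)-(2): read from disk only into free ring buffer space.
        free = buffer_size - buffered
        chunk = min(free, read_per_frame, remaining_on_disk)
        if chunk > 0:
            ring.append(chunk)
            buffered += chunk
            remaining_on_disk -= chunk
        # Step (4): the time slice caps how much is uploaded this frame.
        slice_left = upload_per_frame
        while ring and slice_left > 0:
            take = min(ring[0], slice_left)
            slice_left -= take
            uploaded += take
            buffered -= take   # step (5): free the uploaded part
            if take == ring[0]:
                ring.popleft()
            else:
                ring[0] -= take
    return frames

# 16 units of data with different buffer sizes and time slices.
print(simulate_upload(16, buffer_size=4, read_per_frame=4, upload_per_frame=2))
print(simulate_upload(16, buffer_size=4, read_per_frame=4, upload_per_frame=4))
print(simulate_upload(16, buffer_size=2, read_per_frame=4, upload_per_frame=4))
```

In this model either a short time slice or a small ring buffer becomes the bottleneck, which is why the two settings are usually tuned together.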

AUP exposes three parameters that can be controlled at runtime: QualitySettings.asyncUploadTimeSlice (the total time per frame spent on the time-sliced upload in step 4), QualitySettings.asyncUploadBufferSize (the size of the ring buffer), and QualitySettings.asyncUploadPersistentBuffer (whether the ring buffer is kept or released after all pending reads have completed). For details on how to use these three parameters, see the article "Optimizing Loading Performance: Understanding Asynchronous Upload Pipeline AUP"; I won't go into them here.

3. Using Texture Streaming

The following are some instructions for using Texture Streaming.

3.1 Setting parameters

This section is based on the official documentation; refer to it for more detailed instructions.

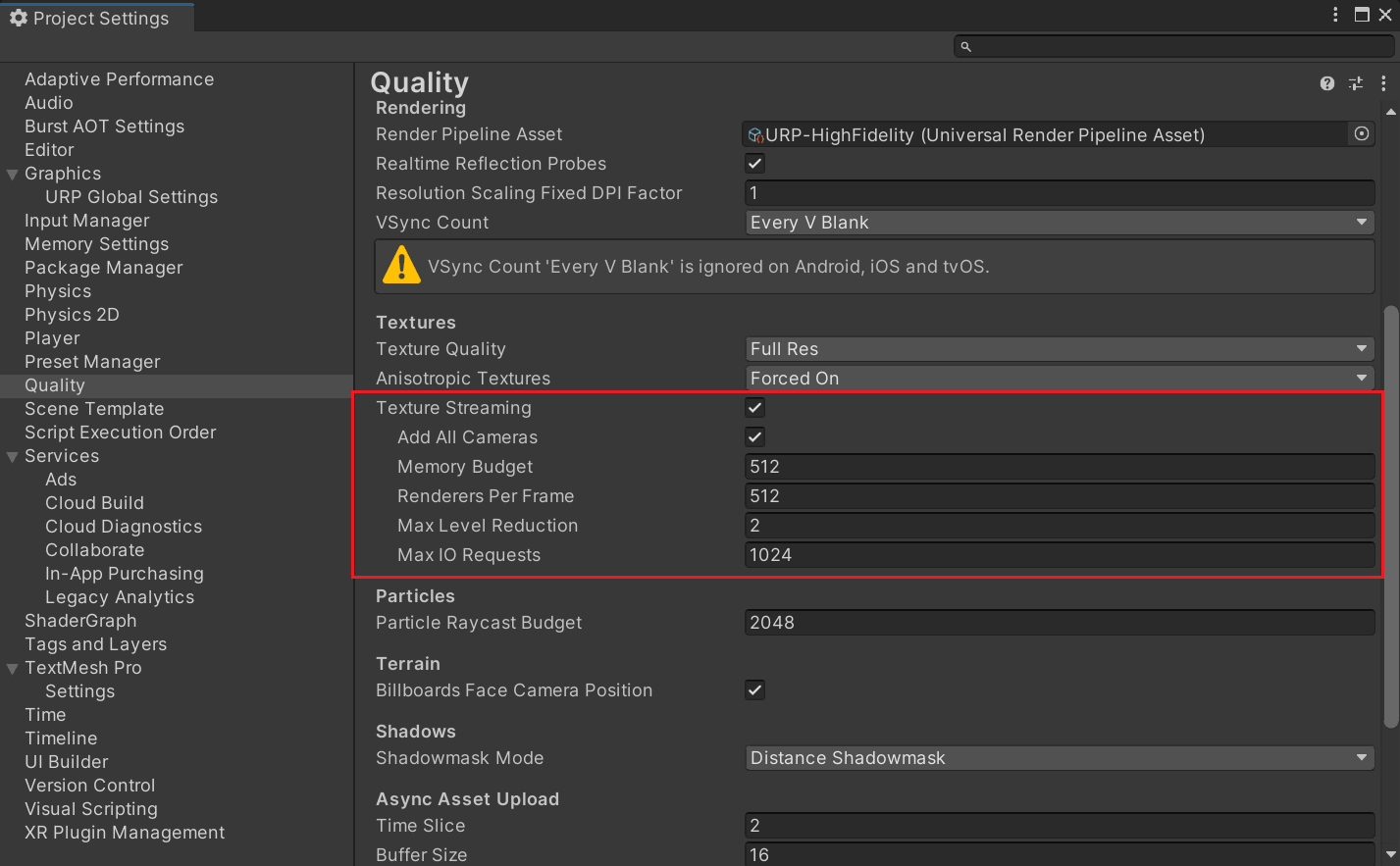

Add All Cameras: Whether to enable texture streaming for all cameras in the project. (enabled by default)

Memory Budget: Set the maximum amount of texture memory loaded when texture streaming is enabled. (default 512MB)

Renderers Per Frame: Sets how many Renderers the texture streaming system processes on the CPU per frame (that is, for each Renderer, working out on the CPU side which Mipmap levels are needed and transferring them to video memory). A lower value reduces the per-frame CPU cost of texture streaming but increases the delay before Unity loads Mipmaps.

Max Level Reduction: Sets the maximum number of Mipmaps that texture streaming may discard when the texture budget is exceeded. (The default is 2, which means discarding at most the 2 lowest-level Mipmaps.) This value also determines the Mipmap level that is loaded when a texture is first loaded, namely the Max Level Reduction level.

Max IO Requests: Set the maximum number of texture file IO requests for the texture streaming system. (default is 1024)

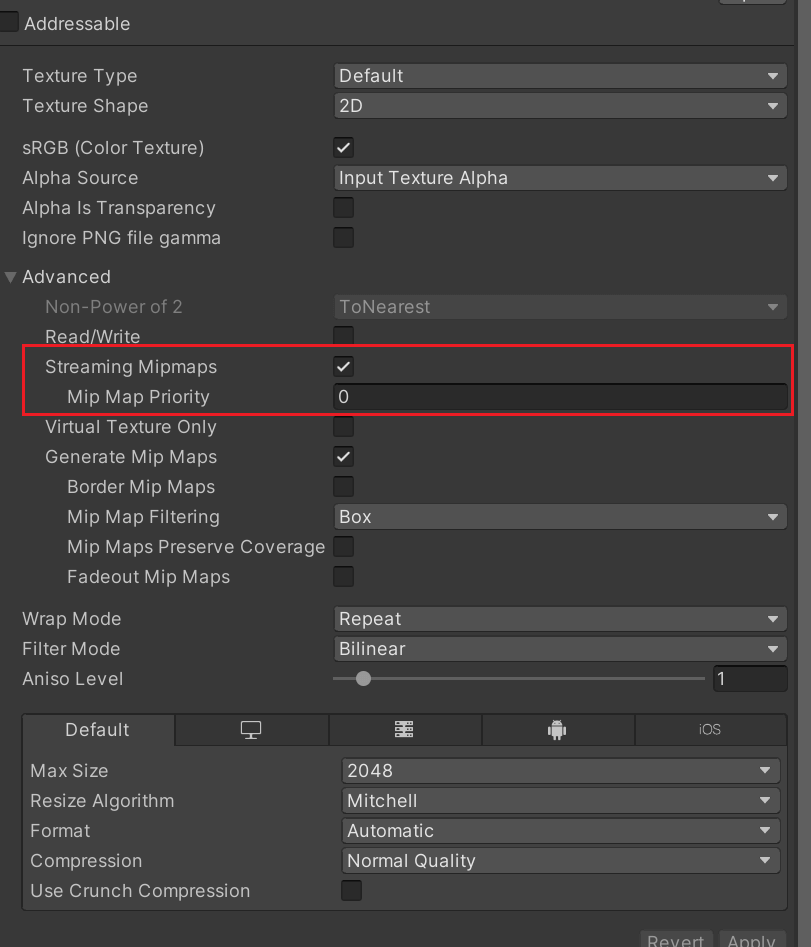

3.2 Let the texture support Mipmap Streaming

Select the Texture Asset that needs texture streaming and check Streaming Mipmaps under the Advanced tab of the Inspector. For Android development, you need to use the LZ4 or LZ4HC compression format in Build Settings. Unity needs these compression methods to implement asynchronous texture loading, which the texture streaming system depends on.

After Streaming Mipmaps is checked for a Texture Asset, the Mip Map Priority property appears. It indicates the texture's priority when the Mipmap Streaming System allocates resources. The larger the value, the higher the priority; its range is [-128,127].

Lightmaps also support texture streaming in the same way as Texture Assets. However, when Unity regenerates a lightmap, its settings are reset to the defaults. In Project Settings you can set the default texture streaming configuration that is applied when lightmaps are generated.

3.3 Configuring Mipmap Streaming

First, we need to configure the Memory Budget. When the memory occupied by textures at runtime exceeds the Memory Budget, Unity automatically discards unused Mipmaps. You can control which Mipmaps Unity discards by setting the Max Level Reduction property. Max Level Reduction is also the Mipmap level that the Mipmap Streaming System loads when it initially loads a texture.

Note: Max Level Reduction has a higher priority than Memory Budget in the Mipmap Streaming System. Even if the Budget is exceeded, a texture's Mips at the Max Level Reduction level are still loaded into video memory.

3.4 Configuring Cameras

When the Mipmap Streaming System is enabled, Unity enables it for all cameras by default. Whether it applies to all cameras is controlled by Add All Cameras in Quality Settings.

To configure an individual camera, add a Streaming Controller component to it. If you don't want that camera to use texture streaming, simply disable the component. The component also lets you adjust the camera's Mip offset.

If the UI in the project is rendered by a separate camera, there is no need to enable texture streaming for the UI camera. In that case, leave Add All Cameras in QualitySettings off and just add the Streaming Controller component to the camera that renders the scene.

3.5 Configuration and enabling environment

Mipmap Streaming is only enabled in Play Mode by default. We can set whether it is enabled in Editor and Play modes in Editor Settings.

3.6 Debugging Mipmap streaming

In the Built-in pipeline, the draw mode drop-down menu in Unity's Scene view has a Texture Streaming mode, which tints game objects green, red, or blue according to their state in the Mipmap Streaming System. For details, refer to the official documentation.

For non-Built-in pipelines, it may be necessary to manually implement Shader replacement in the pipeline (such as inserting a Render Feature).

3.7 Some common parameters

Texture.currentTextureMemory: The amount of memory currently used by all textures.

Texture.streamingTextureDiscardUnusedMips: This value defaults to false. When it is set to true, Unity forces the texture streaming system to discard all unused Mipmaps instead of caching them. The texture streaming system is managed somewhat like a memory pool. Suppose an object moves farther from the camera, so that its calculated Mip level becomes higher: the system does not immediately unload the low-level Mips and switch to the calculated level. Instead, it only considers unloading unused Mips from the pool when another texture needs to be loaded and the Budget is insufficient, searching for unused Mips from far to near (this has been tested and verified, and the test results are shown in the following two figures).

From this point of view, when Texture.streamingTextureDiscardUnusedMips is off, the Mipmap Streaming System's logic for unloading unused Mips is triggered passively (like an event, fired when a new texture is loaded and the budget is insufficient; this presumably saves CPU work and also uses the cache to reduce IO cost). When Texture.streamingTextureDiscardUnusedMips is on, the unloading logic is triggered actively every frame, so in every frame each GO strictly uses the calculated Mip level.
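The difference between the two modes can be written down as a tiny decision function. This is only a model of the behavior observed in the tests above, not Unity's code; `next_loaded_level` and its arguments are invented names, and "level" means the first resident Mip level (levels level~n are in memory).

```python
def next_loaded_level(loaded, desired, discard_unused, over_budget):
    """First resident mip level after one streaming update.

    loaded:         first mip level currently resident
    desired:        level calculated from camera distance etc.
    discard_unused: models Texture.streamingTextureDiscardUnusedMips
    over_budget:    a new texture must be loaded and the budget is short
    """
    if desired < loaded:
        # More detail is needed: finer mips get loaded.
        return desired
    if desired > loaded and (discard_unused or over_budget):
        # Finer mips are unused: dropped every frame when the flag is
        # on, otherwise only when memory has to be reclaimed.
        return desired
    # Otherwise the already loaded mips stay cached.
    return loaded

# Camera moved away (desired level 2), mips 0~n are loaded:
print(next_loaded_level(0, 2, discard_unused=False, over_budget=False))  # 0
print(next_loaded_level(0, 2, discard_unused=True, over_budget=False))   # 2
print(next_loaded_level(0, 2, discard_unused=False, over_budget=True))   # 2
```

With the flag off, moving the camera away by itself changes nothing, which is exactly the behavior that looks like a bug at first sight.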

4. Texture Streaming System management strategy

In fact, there are very few detailed analyses of the Texture Streaming System online. Here I first give a brief summary of the strategy as analyzed in the UWA course "Quantitative Analysis and Optimization Method of Unity Engine Loading Module and Memory Management"; interested readers can purchase the original course.

For the texture streaming system as a whole, the two most important parameters are Memory Budget (the texture streaming budget) and Max Level Reduction. Memory Budget determines how much texture memory may be used before Unity starts using the Texture Streaming System to manage Mipmap memory; Max Level Reduction determines the highest Mipmap level that can be loaded (the higher the level, the blurrier the texture). Unity's default Memory Budget is 512MB, but for typical mobile projects around 200MB is more appropriate (the exact value needs to be tuned in practice).

For runtime, the Texture Streaming System management strategy is summarized as follows:

(1) When a non-streamed texture (a texture without Mipmap Streaming enabled) needs to be loaded, it is loaded into memory in full. If the texture has Mipmaps 0~n, all of Mipmaps 0~n are loaded.

(2) When a Scene is loaded, if the Budget is sufficient, the textures used by the GOs in the Scene are loaded in full, that is, Mipmap levels 0~n are loaded; if the Budget is insufficient, they are loaded at the Max Level Reduction level.

(3) When a dynamically loaded GO is loaded and instantiated (the object may not actually be rendered yet), Unity always loads its textures at the Max Level Reduction level first. This makes loading faster, because only the Max Level Reduction level Mipmap needs to be loaded, which takes less memory, and the Texture Streaming System loads it asynchronously.

(4) When the GO actually needs to be rendered (it may need to be rendered right after Instantiate, or it may come into the camera's view after being activated, and so on), the CPU calculates the Mipmap level that needs to be loaded from factors such as the currently free texture streaming budget and the distance between the camera and the object. If the Budget is sufficient, the calculated Mipmap level is loaded; if it is insufficient, the Max Level Reduction level stays loaded.

(5) At runtime, when a new texture needs to be loaded and the memory currently occupied by textures exceeds the budget, the Texture Streaming System starts looking for ways to reduce texture memory. For all GOs in the Scene, Unity recalculates, in order from farthest to nearest to the camera, whether each one really needs its currently loaded Mipmap level. If not, Unity unloads the Mips that are more detailed than necessary and gives the freed memory to the newly loaded texture. For the new texture, if the calculated Mipmap level can then be loaded (there is enough free memory), that level is loaded; if it still cannot be loaded after the unneeded Mipmaps have been unloaded, the Max Level Reduction level is loaded. This also shows that for any texture, the highest Mipmap level actually loaded is Max Level Reduction (this level is loaded even if it exceeds the Budget).
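Rule (5) is the most involved of the five, so here is a small simulation of it. It is a guess at the strategy written as code, under simplifying assumptions: sizes shrink by exactly 1/4 per level, "desired level" is given rather than computed, and every texture has more levels than Max Level Reduction. The class `StreamedTexture` and the function `load_new_texture` are hypothetical names, not Unity API.

```python
from dataclasses import dataclass

@dataclass
class StreamedTexture:
    name: str
    base_bytes: int       # size of mip 0
    mip_count: int
    distance: float       # distance from the camera
    desired_level: int    # level calculated from distance etc.
    loaded_level: int = 0 # levels loaded_level~n are resident

    def bytes_from(self, level):
        """Memory needed to keep levels level~n resident."""
        return sum(self.base_bytes // (4 ** l)
                   for l in range(level, self.mip_count))

    def resident_bytes(self):
        return self.bytes_from(self.loaded_level)

def load_new_texture(resident, new_tex, budget, max_level_reduction):
    """Load new_tex, unloading unneeded mips from far to near if required."""
    target = min(new_tex.desired_level, max_level_reduction)
    needed = new_tex.bytes_from(target)
    used = sum(t.resident_bytes() for t in resident)
    if used + needed > budget:
        # Recalculate from the farthest texture to the nearest one.
        for tex in sorted(resident, key=lambda t: t.distance, reverse=True):
            wanted = min(tex.desired_level, max_level_reduction)
            if wanted > tex.loaded_level:
                used -= tex.resident_bytes()
                tex.loaded_level = wanted   # drop mips finer than needed
                used += tex.resident_bytes()
            if used + needed <= budget:
                break
    if used + needed <= budget:
        new_tex.loaded_level = target
    else:
        # Still not enough room: fall back to Max Level Reduction,
        # which is loaded even when it exceeds the budget.
        new_tex.loaded_level = max_level_reduction
    resident.append(new_tex)
    return sum(t.resident_bytes() for t in resident)

far = StreamedTexture("far", 16, 3, distance=100.0, desired_level=1)
new = StreamedTexture("new", 16, 3, distance=5.0, desired_level=0)
total = load_new_texture([far], new, budget=30, max_level_reduction=2)
print(far.loaded_level, new.loaded_level, total)  # 1 0 26
```

In the example the far texture gives up Mip 0, which frees enough room for the new texture to load at its calculated level. With a much smaller budget the new texture would land on the Max Level Reduction level instead.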

5. Several key points

The UWA course also mentions several key points about using the Texture Streaming System, which can be regarded as pitfalls they have run into.

(1) On mobile, QualitySettings.streamingMipmapsActive = true must be set through code. If you only check the option manually in QualitySettings, it works in the Editor but may not work on mobile devices.

(2) After upgrading the Unity version, textures may become blurry. Solution: create a new project and copy all Meshes and Textures over unchanged.

(3) Don't trust the Editor Profiler; test directly on a real device. This is very important. The memory occupied by textures in the Editor is much larger than on a real device (perhaps because a different resource path is used). In the same situation, textures may occupy 300MB in the Editor but only 30MB on a real device. Therefore, all tests related to Texture Streaming must be carried out on a real device!

(4) When Texture.streamingTextureDiscardUnusedMips is not enabled, there appears to be only one occasion on which Mips are discarded (meaning that loaded Mips are unloaded from video memory): the texture streaming budget is insufficient and a new texture with Mipmap Streaming needs to be loaded. At that point, unused Mips are found and unloaded from far to near. This also means that simply moving the camera away does not immediately raise the Mip level, even though I had expected a blurrier Mip to be used once the camera was far away. This point matters, and at first I thought it was a bug.

6. Mipmap offset MipmapBias

In real projects, we may need to apply different Mipmap offsets on devices with different performance. There are several ways to achieve a Mipmap offset:

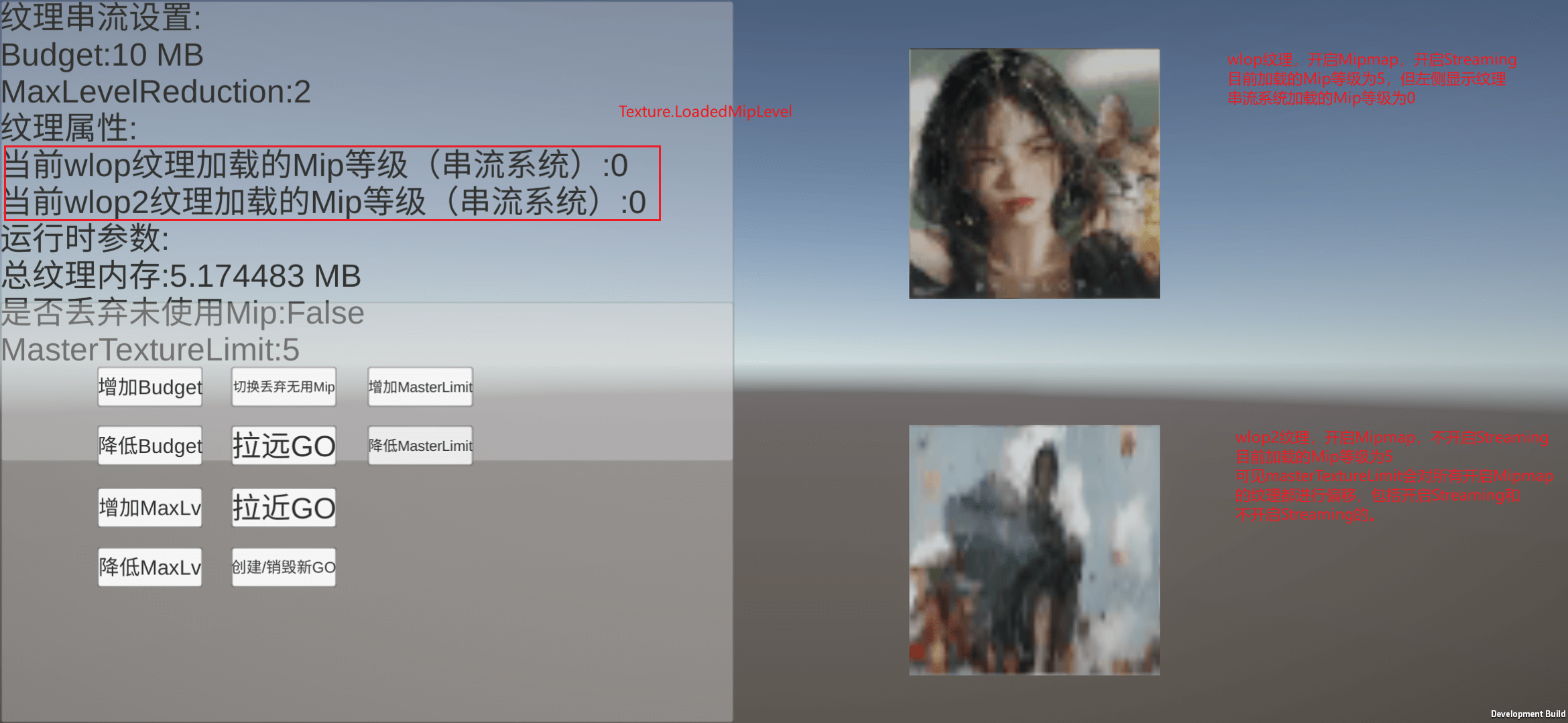

(1) QualitySettings.masterTextureLimit: its default value is 0. If it is set to x, all Texture2D resources with Mipmaps enabled use Mipmap level x (regardless of whether Mipmap Streaming is enabled).

(2) Texture.mipMapBias: sets the Mipmap offset for a single texture.

(3) Mip Map Bias on the Streaming Controller component: this value only takes effect when Add All Cameras (QualitySettings.streamingMipmapsAddAllCameras) is disabled and the Streaming Controller on the camera is active. It offsets the Mipmap level of the textures used by the Renderers that the camera renders (only textures with Mipmap Streaming enabled). This is the recommended method.

In the third method, MipmapBias has a lower priority than MaxLevelReduction. For example, if MaxLevelReduction=3, MipmapBias=2, and the calculated Mip level is 2, then in theory Mip 4 should be used, but because MaxLevelReduction=3, Mip 3 is loaded in the end (that is, Mip 3~n are loaded into video memory).
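The priority rule in the example above amounts to a clamp. The following sketch assumes the bias is simply added to the calculated level, which is how the example reads; `effective_mip_level` is an illustrative name, not a Unity function.

```python
def effective_mip_level(calculated_level, mip_bias, max_level_reduction):
    """Mip level loaded after applying the camera bias and the clamp."""
    biased = calculated_level + mip_bias
    # MaxLevelReduction wins over the bias; the level cannot go below 0.
    return max(0, min(biased, max_level_reduction))

# The example from the text: level 2 + bias 2 would be 4, clamped to 3.
print(effective_mip_level(2, 2, 3))  # 3
```

Under this rule, a large bias combined with a small Max Level Reduction has no additional effect once the clamp is reached.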

Next, I tested the three methods.

For method 1, the Mip level actually used is not reflected in Texture2D.loadedMipmapLevel, because Texture2D.loadedMipmapLevel returns the Mipmap level currently loaded by the streaming system. Offsetting Mips through QualitySettings.masterTextureLimit unloads the low-level Mips from video memory, which achieves the memory optimization. The test results are shown in the figure below.

For method 2, the offset is controlled per texture. This method works on any texture with Mipmaps enabled, regardless of whether Streaming is enabled.

For method 3, the offset is applied inside the Mipmap Streaming System. Its preconditions are relatively cumbersome, but it is also the most reasonable approach: the Mipmap offset is applied only for the cameras and textures that need it. Because method 3 involves the management and replacement logic of the Mipmap Streaming System, I tested it in more detail.

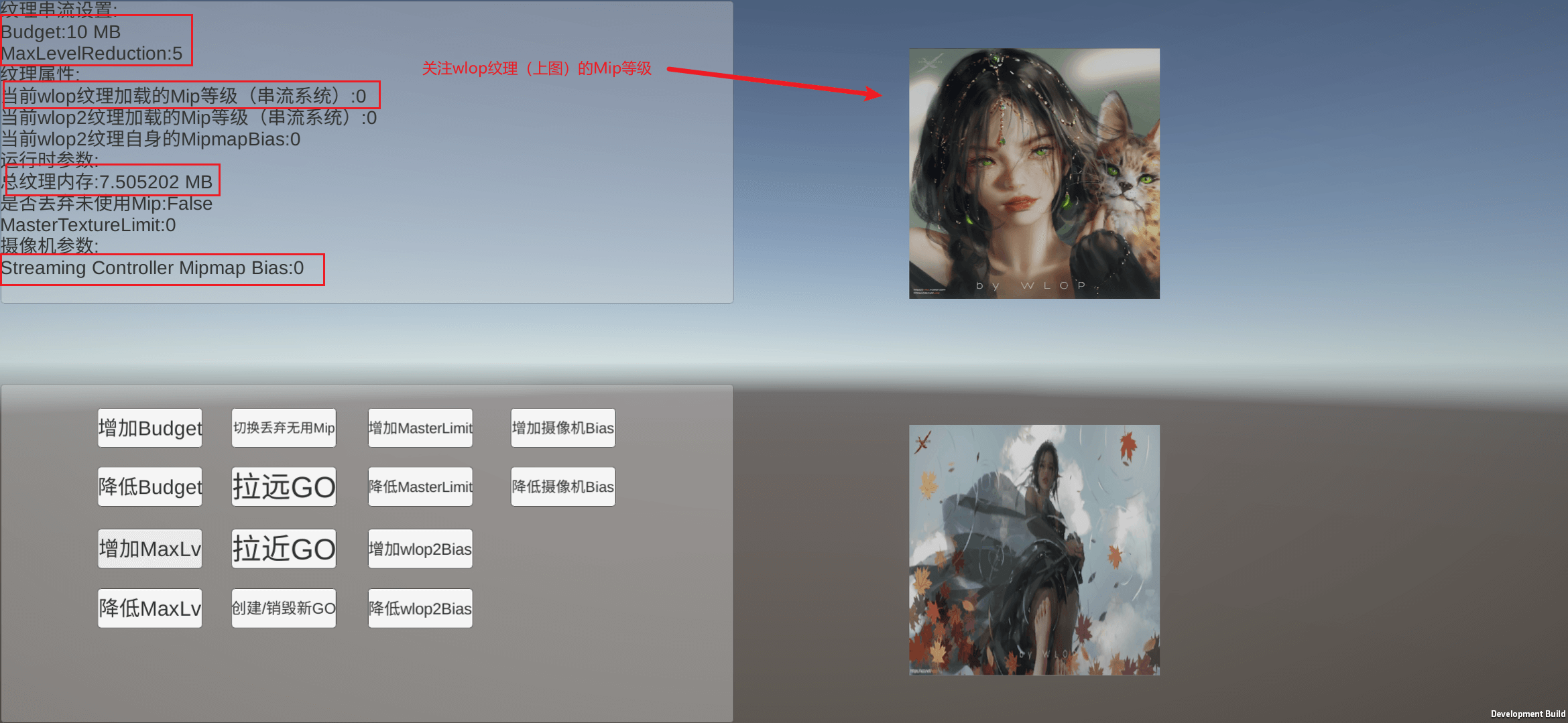

First I tested the case without MipmapBias. On entering the test scene, Budget is 10, MaxLevelReduction is 5, MipmapBias on the camera is 0, and the texture memory currently in use is 7.5MB, which is below the Budget.

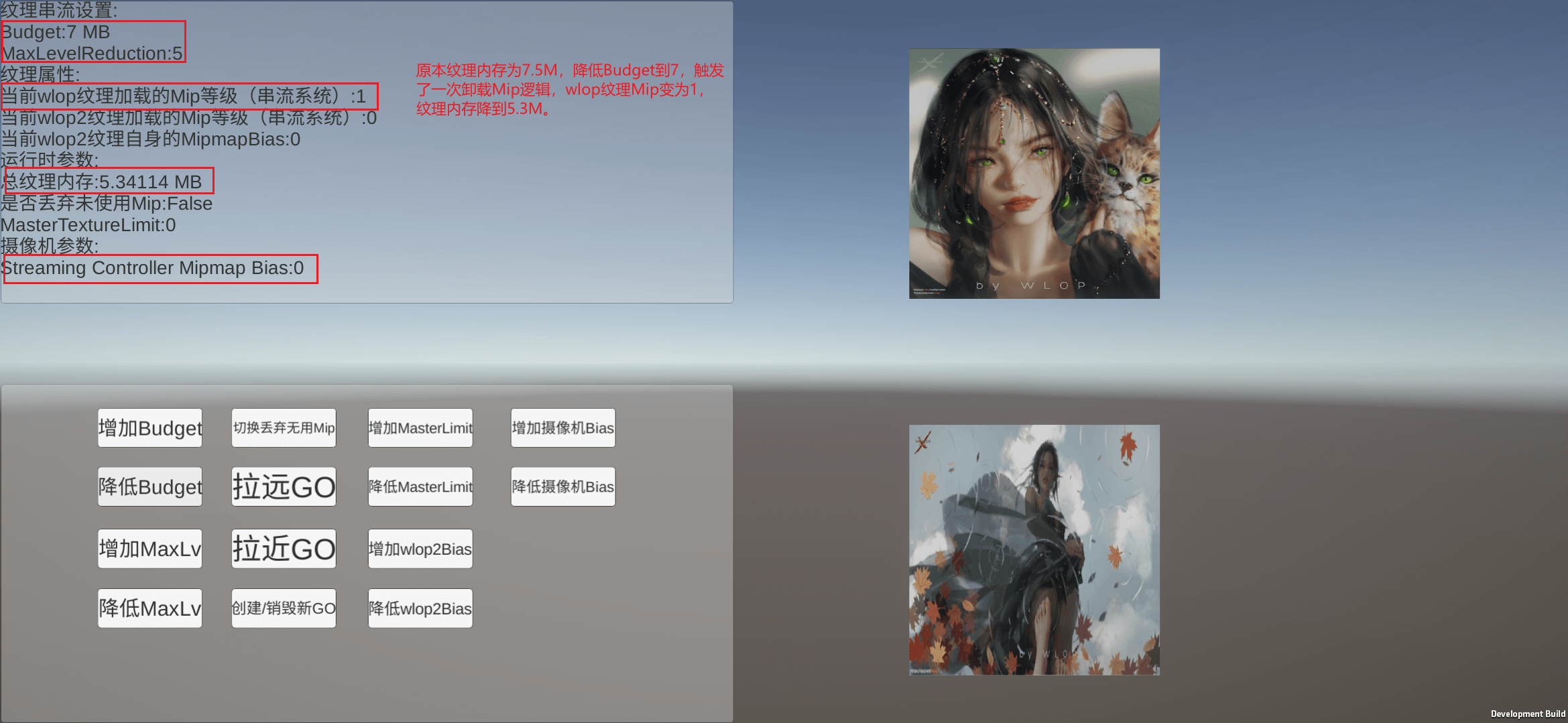

Then the Budget is lowered to 7 (the texture memory actually in use is 8), which triggers the logic that unloads Mips when the Budget is insufficient. The Mip level of the wlop texture becomes 1, that is, Mip 0 is unloaded from video memory.

Next I tested MipmapBias=2. After entering the scene and setting MipmapBias to 2, as shown in the figure below, the Mip level used by the wlop texture is still 0 and the memory has not changed, so adjusting MipmapBias does not take effect immediately.

Then I reduced the Budget to 7 to trigger the unloading logic. As shown in the figure below, the Mip level of the wlop texture changes to 3 (with MipmapBias at 0 it changed to 1), so MipmapBias has now taken effect.

This shows that MipmapBias only takes effect when the Mipmap Streaming System calculates the Mip level. (With Texture.streamingTextureDiscardUnusedMips turned on it takes effect in real time because, as mentioned earlier, the Mip calculation is then triggered every frame.) The moments at which the Mip level is calculated are the ones listed in section 4, the Texture Streaming System management strategy. Here I triggered the unloading logic by adjusting the Budget. Whether the Budget should be adjusted dynamically in a real project is something I have not seen anyone discuss online (currently only a small number of commercial projects actually use the system), so I cannot say whether this approach is practical.

7. Debug texture streaming system

In the built-in pipeline, Unity provides native Mipmap Streaming debugging tools. For details, refer to the official documentation. Since this article uses URP, the native tools cannot be used, so I wrote a simple debugging Shader based on the official debugging Shader. To avoid adding a debug Pass to every Shader, I chose to replace the Shader of every material with the debugging Shader at runtime, since performance does not matter here. (I originally considered a RenderFeature, which is easier to toggle, but getting the MainTex of the original material is hard to solve that way, so I used this method instead.)

Because performance is not a concern, the debugging Shader is written rather roughly and uses many if branches. In practice you can write your own Shader according to your needs. The general idea is as follows. First, Texture.SetStreamingTextureMaterialDebugProperties() needs to be called every frame. When it is called, Unity passes some Mipmap information about each texture with Mipmap Streaming enabled on each material (such as the total number of Mips of the texture and the currently used Mip level) to the shader, where it is available through a property named like "_MainTex_MipInfo" (the naming follows the same pattern as _MainTex_ST). The Shader can then output different colors according to the current Mip level to visualize it.

The Shader code I wrote is as follows.

    Shader "Tool Shaders/MipmapDebugger"
    {
        Properties
        {
            _MainTex("Main Tex", 2D) = "white" {}
            _NonMipColor("Non Mip Color", Color) = (0.5,0,0.2,1)
            [Header(Debug Color)]
            _Mip0Color("Mip 0 Color", Color) = (0,1,0,1)
            _Mip1Color("Mip 1 Color", Color) = (0,0.7,0,1)
            _Mip2Color("Mip 2 Color", Color) = (0,0.5,0,1)
            _Mip3Color("Mip 3 Color", Color) = (0,0.3,0,1)
            _MipHigherColor("Mip Higher Color", Color) = (0,0.2,0,1)
            [Header(Debug Settings)]
            _BlendWeight("Blend Weight", Range(0,1)) = 0.7
        }

        SubShader
        {
            Pass
            {
                HLSLPROGRAM
                #pragma vertex MipmapDebugPassVertex
                #pragma fragment MipmapDebugPassFragment

                #include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

                TEXTURE2D(_MainTex);
                SAMPLER(sampler_MainTex);
                float4 _MainTex_ST;
                // Filled in by Texture.SetStreamingTextureMaterialDebugProperties().
                float4 _MainTex_MipInfo;
                float4 _NonMipColor, _Mip0Color, _Mip1Color, _Mip2Color, _Mip3Color, _MipHigherColor;
                float _BlendWeight;

                struct Attributes
                {
                    float3 positionOS : POSITION;
                    float2 uv : TEXCOORD0;
                };

                struct Varyings
                {
                    float4 positionCS : SV_POSITION;
                    float2 uv : TEXCOORD0;
                };

                // Helper (not used by the pass below): queries the mip count of a texture.
                int GetMipCount(Texture2D tex)
                {
                    uint mipLevel = 0, width = 0, height = 0, mipCount = 0;
                    // in:  mipLevel - the mipmap level to query
                    // out: width    - texture width in texels
                    // out: height   - texture height in texels
                    // out: mipCount - number of mipmap levels of the texture
                    tex.GetDimensions(mipLevel, width, height, mipCount);
                    return (int)mipCount;
                }

                // mipInfo:
                // x: Max Level Reduction configured in the system settings
                // y: total number of mips of the texture
                // z: mip level the streaming system calculated should be used
                // w: mip level that is currently loaded
                float3 GetCurMipColorByManualColor(float4 mipInfo)
                {
                    int desiredMipLevel = int(mipInfo.z);
                    int mipCount = int(mipInfo.y);
                    int loadedMipLevel = int(mipInfo.w);

                    // No mip info: the texture does not use Mipmap Streaming.
                    if (mipCount == 0)
                    {
                        return _NonMipColor.rgb;
                    }
                    if (loadedMipLevel == 0)
                    {
                        return _Mip0Color.rgb;
                    }
                    else if (loadedMipLevel == 1)
                    {
                        return _Mip1Color.rgb;
                    }
                    else if (loadedMipLevel == 2)
                    {
                        return _Mip2Color.rgb;
                    }
                    else if (loadedMipLevel == 3)
                    {
                        return _Mip3Color.rgb;
                    }
                    else if (loadedMipLevel > 3)
                    {
                        return _MipHigherColor.rgb;
                    }
                    // Negative level: treat as "no streaming info".
                    return _NonMipColor.rgb;
                }

                // Alternative coloring: red intensity falls off as the loaded level rises.
                // mipInfo has the same layout as described above.
                float4 GetCurMipColorByAuto(float4 mipInfo)
                {
                    int desiredMipLevel = int(mipInfo.z);
                    int mipCount = int(mipInfo.y);
                    int loadedMipLevel = int(mipInfo.w);
                    float mipIntensity = 1 - (float)loadedMipLevel / (float)mipCount;
                    return float4(mipIntensity, 0, 0, 1);
                }

                Varyings MipmapDebugPassVertex(Attributes input)
                {
                    Varyings output;
                    output.positionCS = TransformObjectToHClip(input.positionOS);
                    output.uv = input.uv;
                    return output;
                }

                float4 MipmapDebugPassFragment(Varyings input) : SV_TARGET
                {
                    // Blend the original texture color with the debug color.
                    float3 originColor = SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, input.uv).rgb;
                    float3 debugColor = GetCurMipColorByManualColor(_MainTex_MipInfo);
                    float3 blendedColor = lerp(originColor, debugColor, _BlendWeight);
                    return float4(blendedColor, 1);
                }
                ENDHLSL
            }
        }
    }

The effect is shown in the video below. Textures with Mipmap Streaming disabled are displayed in pink, and textures with Mipmap Streaming enabled are shown in varying shades of green according to the Mip level currently used. The brighter the green, the lower the Mip level.

8. Summary

The Mipmap Streaming System provided by Unity is a relatively advanced technology. Used properly, it can reduce texture memory and speed up loading, but there are many pitfalls along the way. For example, the relationship between its own parameters and some of the built-in Texture parameters is rather confusing, and Unity has not officially explained how it works in detail. Most of the time we can only run experiments and guess at its logic, which makes it a relatively hard technology to control. As a result, it is rare to see projects that use it and share how they use it (different types of games will certainly use it differently, but I have not yet seen a mature, established approach). My understanding of Unity's texture streaming system is still fairly shallow, and I hope that people who have used this technology can share some of their own insights.

References

- Mipmaps introduction

- The Mipmap Streaming system API

- Optimizing Loading Performance: Understanding Asynchronous Upload Pipeline AUP

- Unity3D Research Institute assigns different mipmaps to each texture to reduce texture bandwidth (114)

- Questions about the use of Texture Streaming

- Unity Texture Streaming

- Talking about Mipmap and Texture Streaming Technology in Unity

- I don't understand Texture Mipmap Streaming (Unity 2018.2)

- Quantitative analysis and optimization method of Unity engine loading module and memory management

Image via WLOP

This is the 1348th article of Yuhu Technology. Thanks to the author, Euclidean Norm, for the contribution. Sharing is welcome, but please do not reprint without the author's authorization. If you have any unique insights or discoveries, please contact us to discuss them together.

Author's homepage: Euclidean Norm - Zhihu
