IMGpedia: A Linked Dataset with Content-Based Analysis of Wikimedia Images

ps: (IMGpedia: released to the public on May 6, 2017)

1. Summary

IMGPedia is a large-scale linkage dataset containing visual information of images from the Wikimedia Commons dataset: it brings together visual content descriptors for 15 million images , 450 million visual similarity relationships between these images , and , as well as the DBpedia resources associated with individual images . In this paper, the creation of the IMGpedia dataset is described, statistics on its schema and its content are outlined, example queries that combine semantic and visual information from images are provided, and other envisioned use cases for the dataset are discussed.

2. Article directory structure

3. Introduction

Take a look at this part

Many datasets are already published on the Web following Semantic Web standards and Linked Data principles. At the heart of the resulting "Data Web" can be found

- Linked datasets such as DBpedia (which contains structured data automatically extracted from Wikipedia), and WIKIDATA (which allows users to add and manage data directly in a structured format).

- Various datasets related to multimedia , such as LINKEDMDB describing movies , BBC MUSIC describing bands and genres , etc. Recently, DBpedia Commons published metadata extracted from Wikimedia Commons , a rich multimedia resource containing 38 million freely available media files (images, audio, and video).

Related work : Among the available datasets describing multimedia , the focus is on capturing high-level metadata of multimedia files (e.g., author, creation date, file size, width, duration), rather than audio or video features of the multimedia content itself . However, as mentioned in previous related work, combining structured metadata with multimedia content-based descriptors may lead to multiple applications, such as semantically enhanced multimedia publishing, retrieval, preservation, etc. While these works have proposed methods for describing audio or video content of multimedia files in Semantic Web formats, we are not aware of any publicly linked datasets containing content-based descriptors for multimedia files . For example, DBpedia commons does not extract any audio/video features directly from Wikimedia Commons multimedia documents, but only captures metadata from documents describing the files.

Contribution : Along these lines, IMGPedia was created: a linked dataset containing visual descriptors and visual similarity relations of Wikimedia Commons images, which is compatible with the DBpedia Commons dataset (which provides metadata for images such as author, license etc.) and DBpedia datasets (providing metadata about resources associated with images) .

4. Image Analysis

This part mainly clarifies three questions:

- Where do the images come from?

- How to calculate the descriptor after obtaining the image?

- What can descriptors be used for? What is the specific implementation process?

-

The first is the image source

In fact, it is the same as the previous article (IMGpedia: Enriching the Web of Data with Image Content Analysis), it is downloaded from the WIKIMEDIA COMMONS dataset , a total of 21TB was downloaded, and it took 40 days; -

After the image is acquired, it proceeds to compute different visual descriptors , which are high-dimensional vectors that capture different elements of the image content (such as color distribution or shape/texture information) . The visual descriptors that need to be calculated are: Gray Histogram Descriptor (Gray Histogram Descriptor GHD), Histogram of Oriented Gradients Descriptor (Oriented Gradient Histogram Descriptor HOG), and Color Layout Descriptor (Color Layout Descriptor CLD), these descriptions The calculation of the descriptor was executed on Debian 4.1.1: a 2.2 GHz 24-core Intel® Xeon® 120GB of RAM. Processor, it took 43h, 107h and 127h respectively ; The same as the previous article, write it down to be more impressive... )

-

Then, these descriptors will be used to calculate the visual similarity between images , if the distance between the descriptors is low, the two images are visually similar; specific steps:

step1 : In order to avoid P(n,2) force comparison, using an approximate search method to compute the 10 nearest neighbors for each image from each visual descriptor;step2 : Divide the image into 16 buckets, where for each image, initialize 16 threads to search the 10 nearest neighbors in each bucket;

step3 : At the end of the execution, there are 160 candidates as the global 10 nearest neighbors, so the 10 with the smallest distance are selected to obtain the final result.

This whole process took about 13 hours to complete using the above hardware device (on a processor with 120GB of RAM.).

5. Ontology and Data

Image visual descriptors and similarity relations form the core of the IMGpedia dataset . In order to represent these relations as RDF, a custom lightweight IMGpedia ontology is established, as shown in the following figure:

An imo: Image is an abstraction resource, representing an image from the Wikimedia Commons dataset, describing the dimensions of the image (height and width), the image URL in Wikimedia Commons , and an owl:sameAs link to a supplementary resource in DBpedia Commons ;

An imo: Descriptor : Links with images via the imo:describes relationship. To keep the number of output triples manageable, vectors of descriptors are stored as strings ; storing a single dimension as (192-288) individual objects bloats the output triples to an unmanageable number; additionally, No SPARQL queries are expected for the individual values of the descriptor;

An imo: ImageRelation (image relationship) : Although Manhattan distance is symmetric, these relationships are based on k-nearest neighbor (k-nn) search. In k-nn, image a is the k-nearest neighbor of image b, which does not mean that a and b has an inverse relation ; thus, the image relation captures source and target images, where the target is in the k-nearest neighbors of the source . We also add the imo:similar relation from the source image to the target k-nn image.

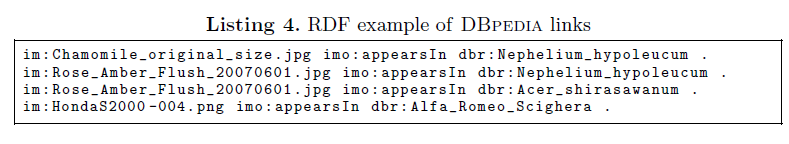

Finally, we provide a link to DBpedia in addition to the link to DBpedia Commons, which provides context for the images . To create these links, we use English Wikipedia's SQL dump and perform a join between the table for all images and the table for all articles, so we can have (image_name, article_name) pairs if an image appears in an article. In Listing 4 below, some sample links to DBpedia are given, which are not provided in DBpedia commons.

6. Use Cases (give some examples of queries that IMGpedia can answer :)

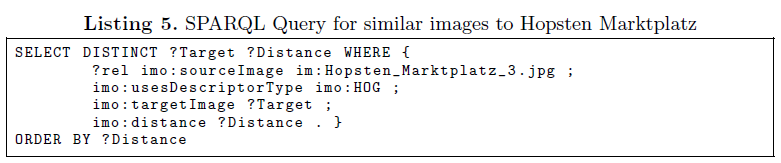

Use case one : Visual similarity relations can be queried to find images that are similar in color, edge, and/or intensity based on nearest neighbor calculations; for example, requesting the nearest neighbors of a Hopsten Marktplatz image using the HOG descriptor (capturing visual similarity of edges). The query statements and query results are as follows:

Use case two : Visual-semantic retrieval of images can be performed using a federated SPARQL query that combines visual similarity of images with semantic metadata by linking to DBpedia; in Listing 6, an example of a federated SPARQL query using the DBpedia SPARQL endpoint is shown, This endpoint fetches images from articles categorized as "Roman Catholic Cathedrals in Europe" and finds similar images from articles categorized as "Museums" . In Figure 3, the retrieved images are shown. For more accurate results, SPARQL property paths can be used to include hierarchical classifications , e.g., dcterms:subject/skos:broader* can be used in the first SERVICE clause to get all cathedrals tagged as a subcategory of cathedrals in Europe, e.g. French cathedral.

7. Summary

In this paper, IMGpedia is introduced: a linked dataset that provides visual descriptors and similarity relations for images from Wikimedia Commons; this dataset is also linked with DBpedia and DBpedia commons to provide semantic context and further metadata. Describes the construction of the dataset, the structure and sources of the data, the statistics of the dataset, and available supporting resources. Finally, some examples of visual semantic queries powered by datasets are shown and potential use cases are discussed.