Table of contents

2. Reentrancy and thread safety

2. The situation of thread safety

5. The link between reentrancy and thread safety

2. Encapsulation of mutex locks based on RAII style

2. Four necessary conditions for deadlock to occur

3. Conditions to avoid deadlock

1. The concept of thread synchronization

3.2 pv primitives of semaphores (atoms, statements)

3.4 Waiting for semaphore (P operation)

3.5 Release semaphore (V operation)

1. Thread conflict

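There are 10,000 tickets in total, and three threads compete to grab them. Without any protection, the run can end with the remaining ticket count at -1. A single-core CPU has only one set of registers, so every thread switch saves and restores each thread's register contents (its context), and a thread can be switched out between checking tickets and decrementing it.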

For example, suppose tickets is 1 and two of the new threads have both passed the tickets > 0 check and are sleeping in usleep. When a thread is switched out in usleep, its context data (the register contents) is saved. When the thread is scheduled again, it (1) restores its context, (2) executes the tickets-- statement, which (3) loads the value of tickets from memory into a register and decrements it, and (4) writes the new value back to memory. The first thread to resume brings the count to 0, but the other thread has already passed the check and still executes tickets--, so the count becomes negative.
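The interleaving described above can be replayed deterministically. The following is a minimal single-threaded sketch, not the original program: SimThread and replay_bad_interleaving are hypothetical names introduced here, and each "thread" is modeled as a private register copy so that the check, load, decrement, and store steps can be ordered by hand.

```cpp
#include <cassert>

// Hypothetical model of one thread: it keeps a private "register" copy of tickets.
struct SimThread {
    bool passed_check = false; // result of the `tickets > 0` test
    int reg = 0;               // thread-private register (part of its saved context)

    void check(const int& tickets) { passed_check = tickets > 0; }
    void load(const int& tickets) { reg = tickets; }   // memory -> register
    void decrement() { --reg; }                        // compute in the register
    void store(int& tickets) const { tickets = reg; }  // register -> memory
};

// Replays one unlucky schedule and returns the final ticket count.
int replay_bad_interleaving() {
    int tickets = 1; // shared variable: one ticket left
    SimThread a, b;

    a.check(tickets); // A sees tickets > 0, then "sleeps" in usleep
    b.check(tickets); // B also sees tickets > 0, then "sleeps" in usleep
    assert(a.passed_check && b.passed_check);

    // A resumes first: tickets goes from 1 to 0.
    a.load(tickets); a.decrement(); a.store(tickets);
    // B resumes without re-checking: tickets goes from 0 to -1.
    b.load(tickets); b.decrement(); b.store(tickets);
    return tickets;
}
```

Calling replay_bad_interleaving() yields -1, the same symptom as the real multi-threaded run, because the check and the decrement are not performed as one indivisible step.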

2. Reentrancy and thread safety

1. Thread-unsafe situations

- Functions that do not protect shared variables;

- A function whose state changes as it is called;

- A function returning a pointer to a static variable;

- A function that calls a thread-unsafe function.

2. The situation of thread safety

- Each thread has only read access to global variables or static variables, but no write access. Generally speaking, these threads are safe;

- The class or interface is atomic from the threads' point of view;

- Switching between multiple threads will not cause ambiguity in the execution result of this interface.

3. Non-reentrant situations

- The function calls malloc/free, because malloc uses a global linked list to manage the heap;

- The function calls standard I/O library functions, many implementations of which use global data structures in a non-reentrant way;

- The function body uses static data structures.

4. The case of reentrancy

- Does not use global or static variables;

- Does not use memory allocated with malloc or new;

- Does not call non-reentrant functions;

- Does not return static or global data; all data is provided by the caller of the function;

- Uses local data, or protects global data by working on a local copy of it.

5. The link between reentrancy and thread safety

- If a function is reentrant, it is thread safe;

- If a function is not reentrant, it should not be shared by multiple threads, because doing so may cause thread-safety problems;

- If a function reads and writes global variables without protection, it is neither thread safe nor reentrant;

- Reentrant functions are a subset of thread-safe functions;

- A thread-safe function is not necessarily reentrant, but a reentrant function is always thread safe;

- If access to a critical resource is protected by a lock, the function is thread safe. But if the function is re-entered before the lock has been released (for example from a signal handler), the second entry waits for a lock that its own thread already holds and deadlocks, so the function is not reentrant.
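The difference between returning a pointer to static data and letting the caller provide the storage can be sketched as follows. This is an illustrative example only; label_static, label_reentrant, and the two helper checks are hypothetical names introduced here.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <cstring>

// Not reentrant and not thread safe: every call overwrites the same static buffer.
const char* label_static(int id) {
    static char buf[32];
    std::snprintf(buf, sizeof(buf), "thread %d", id);
    return buf;
}

// Reentrant: uses only its arguments and locals; the caller owns the storage.
void label_reentrant(int id, char* out, std::size_t len) {
    std::snprintf(out, len, "thread %d", id);
}

// True when two results of label_static share storage and the first was clobbered.
bool static_results_alias() {
    const char* first = label_static(1);
    const char* second = label_static(2); // overwrites what `first` points to
    return first == second && std::strcmp(first, "thread 2") == 0;
}

// True when each caller-provided buffer keeps its own result.
bool reentrant_results_independent() {
    char a[32];
    char b[32];
    label_reentrant(1, a, sizeof(a));
    label_reentrant(2, b, sizeof(b));
    return std::strcmp(a, "thread 1") == 0 && std::strcmp(b, "thread 2") == 0;
}
```

Both checks return true: the static version silently loses the first result even in a single thread, which is why such functions are listed as unsafe above.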

3. Mutex lock

Mutual exclusion: multiple threads access a shared resource serially, one at a time;

Atomicity: an operation on a resource is either not done at all or done completely, with no intermediate state visible. For example, an operation that completes in a single assembly instruction is atomic.

Locks protect shared resources by guarding critical sections. Every thread must see the same lock, so the lock itself is a shared resource. Who, then, protects the lock? To implement mutexes, most architectures provide a swap or exchange instruction that exchanges the contents of a register and a memory location. Because it is a single instruction, it is atomic. Even on a multiprocessor platform, the bus cycles that access memory are serialized: while the exchange instruction on one processor executes, the exchange instruction on another processor has to wait for the bus. This is what makes the locking step of pthread_mutex_lock safe: acquiring the lock is atomic.
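The exchange idea can be sketched in portable C++ with std::atomic, whose exchange() member atomically writes a new value and returns the old one. This is a simplified illustration, not how pthread_mutex_lock is actually implemented; SwapLock and count_with_swap_lock are hypothetical names introduced here.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Hypothetical spinlock built on an atomic exchange: 0 means free, 1 means held.
class SwapLock {
public:
    void lock() {
        // exchange() atomically stores 1 and returns the previous value.
        // Previous value 0: we took the lock. Previous value 1: it is held, so retry.
        while (flag_.exchange(1, std::memory_order_acquire) == 1) {
            std::this_thread::yield();
        }
    }
    void unlock() { flag_.store(0, std::memory_order_release); }

private:
    std::atomic<int> flag_{0};
};

// Increments a plain int from several threads, protected only by SwapLock.
int count_with_swap_lock(int threads, int increments_per_thread) {
    SwapLock lock;
    int counter = 0;
    std::vector<std::thread> workers;
    for (int t = 0; t < threads; ++t) {
        workers.emplace_back([&] {
            for (int i = 0; i < increments_per_thread; ++i) {
                lock.lock();
                ++counter; // critical section
                lock.unlock();
            }
        });
    }
    for (auto& w : workers) w.join();
    return counter;
}
```

With 4 threads and 10,000 increments each, count_with_swap_lock returns 40000. Note that this sketch busy-waits, whereas a real mutex suspends a thread that fails to get the lock.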

If a thread acquires the lock, it may continue into the critical section. If it fails because another thread holds the lock, it is blocked and suspended until the thread holding the lock releases it, at which point a waiting thread is woken up.

A thread may of course be preempted while it holds the lock and is running in the critical section: when its time slice expires, it is switched off the CPU. It takes the lock with it, though, so no other thread can acquire the lock and none can enter the critical section in the meantime.

1. Keep the scope of a lock as small as possible: lock immediately before the critical section and unlock immediately after it. A short critical section reduces the time other threads spend waiting and improves efficiency.

2. If a piece of code needs a lock, every thread that runs it must see and use the same lock. You cannot make an exception for one thread and let it enter the critical section without locking.

1. Use of mutex

To keep concurrent access by multiple threads from producing wrong results, a mutex is used so that the threads enter the critical section one at a time.

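PTHREAD_MUTEX_DESTROY(3P)

#include <pthread.h>

Destroy: int pthread_mutex_destroy(pthread_mutex_t *mutex);

Initialize: int pthread_mutex_init(pthread_mutex_t *restrict mutex,
const pthread_mutexattr_t *restrict attr);

Initialize: pthread_mutex_t mutex = PTHREAD_MUTEX_INITIALIZER; // equivalent to calling pthread_mutex_init() with default attributes

Return value: on success, pthread_mutex_destroy() and pthread_mutex_init() return zero; otherwise they return an error number to indicate the error.

If the [EBUSY] and [EINVAL] error checks are implemented, they behave as if they were performed immediately at the start of the function, and they cause an error return before the state of the mutex specified by mutex is modified.

PTHREAD_MUTEX_LOCK(3P)

#include <pthread.h>

Lock: int pthread_mutex_lock(pthread_mutex_t *mutex);

// Tries to acquire the given mutex. If the mutex is already locked by another thread, the function returns immediately with the error EBUSY.
// If the mutex is available, the function locks it and returns 0.
Try to lock: int pthread_mutex_trylock(pthread_mutex_t *mutex);

Unlock: int pthread_mutex_unlock(pthread_mutex_t *mutex);

Return value: on success, pthread_mutex_lock() and pthread_mutex_unlock() return zero; otherwise they return an error number to indicate the error.

pthread_mutex_trylock() returns zero if it acquires a lock on the mutex referenced by mutex; otherwise it returns an error number to indicate the error.

Besides defining the lock as a local variable, you can define it as a static or global variable. In that case pthread_mutex_init() is not needed: the lock can be initialized directly with the PTHREAD_MUTEX_INITIALIZER macro.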

#include <cstdio>
#include <iostream>
#include <string>
#include <vector>
#include <unistd.h>
#include <pthread.h>

// pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER; // alternative: a global, statically initialized lock

struct ThreadData
{
    ThreadData(const std::string& threadname, pthread_mutex_t* mutex_p)
        : _threadname(threadname)
        , _mutex_p(mutex_p)
    {}

    std::string _threadname;   // thread name
    pthread_mutex_t* _mutex_p; // pointer to the shared lock
};

const int NUM = 4;   // number of ticket-grabbing threads
int tickets = 10000; // shared resource

void* getTicket(void* args)
{
    ThreadData* td = static_cast<ThreadData*>(args); // unpack the argument
    while (true)
    {
        pthread_mutex_lock(td->_mutex_p); // lock
        if (tickets > 0)
        {
            usleep(1000);
            std::cout << "new thread " << td->_threadname << "->" << tickets-- << std::endl;
            pthread_mutex_unlock(td->_mutex_p); // unlock
        }
        else
        {
            pthread_mutex_unlock(td->_mutex_p); // unlock before leaving the loop
            break;
        }
    }
    delete td; // release the argument allocated in main
    return nullptr;
}

int main()
{
    pthread_mutex_t lock;               // define the mutex
    pthread_mutex_init(&lock, nullptr); // initialize the mutex
    std::vector<pthread_t> tids(NUM);   // thread IDs
    // create the threads
    for (int i = 0; i < NUM; ++i)
    {
        char buffer[64];
        snprintf(buffer, sizeof(buffer), "thread %d", i + 1);
        ThreadData* td = new ThreadData(buffer, &lock); // every thread gets the same lock
        pthread_create(&tids[i], nullptr, getTicket, (void*)td);
    }
    // the main thread waits for every new thread
    for (const auto& tid : tids)
    {
        pthread_join(tid, nullptr);
    }
    pthread_mutex_destroy(&lock); // release the lock's resources
    return 0;
}

After using the mutex, ticket sales stop normally when the count reaches 0, which solves the problem above. The console output shows two things:

1. The program runs more slowly, because after locking the new threads execute the critical section serially instead of concurrently;

2. One thread may grab tickets many times in a row. The lock only guarantees mutual exclusion; it does not decide which thread runs next, which is purely the result of competition among the threads. A thread can win repeatedly because, while it holds the lock, the other threads are suspended, and as soon as it releases the lock it is already running and immediately competes for the lock again. To give other threads a chance, let the thread sleep after grabbing a ticket, simulating the other work it would do afterwards.

2. Encapsulation of mutex locks based on RAII style

2.1 Mutex.hpp

#pragma once

#include <iostream>
#include <pthread.h>

class Mutex // thin wrapper around a lock
{
public:
    Mutex(pthread_mutex_t* lock_p = nullptr)
        : _lock_p(lock_p)
    {}

    ~Mutex()
    {}

    void lock()
    {
        if (_lock_p)
        {
            pthread_mutex_lock(_lock_p);
        }
    }

    void unlock()
    {
        if (_lock_p)
        {
            pthread_mutex_unlock(_lock_p);
        }
    }

private:
    pthread_mutex_t* _lock_p; // pointer to the lock (not owned)
};

class LockGuard
{
public:
    LockGuard(pthread_mutex_t* mutex)
        : _mutex(mutex)
    {
        _mutex.lock(); // lock in the constructor
    }

    ~LockGuard()
    {
        _mutex.unlock(); // unlock in the destructor
    }

    // copying a guard would unlock the same mutex twice
    LockGuard(const LockGuard&) = delete;
    LockGuard& operator=(const LockGuard&) = delete;

private:
    Mutex _mutex;
};

2.2 mythread.cc

#include <iostream>
#include <string>
#include <unistd.h>
#include <pthread.h>
#include "Mutex.hpp"

pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;
int tickets = 10000;

void* getTicket(void* args)
{
    std::string threadName = static_cast<const char*>(args);
    while (true)
    {
        { // RAII-style locking
            LockGuard lockguard(&lock); // locked for the rest of this scope
            if (tickets > 0)
            {
                usleep(1000);
                std::cout << threadName << " is grabbing ticket " << tickets << std::endl;
                --tickets;
            }
            else
            {
                break; // the guard's destructor still unlocks
            }
        } // unlocked automatically when the scope ends
        usleep(1000);
    }
    return nullptr;
}

int main()
{
    pthread_t t1, t2, t3, t4;
    pthread_create(&t1, nullptr, getTicket, (void *)"thread 1");
    pthread_create(&t2, nullptr, getTicket, (void *)"thread 2");
    pthread_create(&t3, nullptr, getTicket, (void *)"thread 3");
    pthread_create(&t4, nullptr, getTicket, (void *)"thread 4");
    pthread_join(t1, nullptr);
    pthread_join(t2, nullptr);
    pthread_join(t3, nullptr);
    pthread_join(t4, nullptr);
    return 0;
}

4. Deadlock

1. The concept of deadlock

Multiple processes or threads wait forever because each is waiting for another to release a resource. For example, a thread holds its own lock without releasing it and also requests the lock held by another thread, while that thread does the same; this forms a deadlock.

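A single lock can also cause deadlock, if the same thread requests it twice:

pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;
pthread_mutex_lock(&lock); // thread A acquires the lock
pthread_mutex_lock(&lock); // thread A requests the same lock again; with a default, non-recursive mutex the request cannot succeed, so thread A typically blocks forever

2. Four necessary conditions for deadlock to occur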

1. Mutual exclusion: a resource (here, a lock) can be held by only one thread at a time;

2. Hold and wait: a thread requests another thread's resource while keeping its own;

3. No preemption: a lock cannot be taken away from the thread holding it, regardless of priority or other conditions;

4. Circular wait: for example, A->B->C->A form a cycle of resource requests, each waiting for the next to release its resource.

3. Conditions to avoid deadlock

1. Do not use a lock if the problem can be solved without one;

2. Acquire locks in the same order everywhere, which breaks the circular wait;

3. Avoid code paths on which a lock is never released;

4. Allocate all required resources at once.

5. Deadlock detection algorithm:

- Banker's Algorithm: This algorithm is used to prevent and avoid deadlocks, and can also be used to detect deadlocks. The banker's algorithm predicts and avoids the deadlock state of the system by allocating resources and reclaiming resources.

- Graph theory algorithm: This algorithm regards the processes and resources in the system as nodes, and regards the relationship between them as edges to form a graph. By detecting whether there is a cycle in the graph, it can be judged whether the system is in a deadlock state.

- Waiting graph algorithm: This algorithm regards processes and resources in the system as nodes, and regards the waiting relationship between them as edges to form a waiting graph. By detecting whether there is a cycle in the graph, it can be judged whether the system is in a deadlock state.

- Time slice polling algorithm: This algorithm detects whether they are blocked by polling the processes in the system. If a process is blocked and the resource it is waiting for is already occupied by another process, then the process may be in a deadlock state
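The advice to keep the locking order consistent can be sketched as follows. This is an illustrative example under stated assumptions: Account, transfer, and run_opposite_transfers are hypothetical names, and ordering the two locks by address is just one possible way to define a single global order.

```cpp
#include <cassert>
#include <functional>
#include <pthread.h>
#include <thread>

// Hypothetical account type, used only for this illustration.
struct Account {
    explicit Account(int initial) : balance(initial) { pthread_mutex_init(&mutex, nullptr); }
    ~Account() { pthread_mutex_destroy(&mutex); }
    pthread_mutex_t mutex;
    int balance;
};

// Always locks the account with the lower address first, whatever the argument
// order, so two opposite transfers can never each hold one lock and wait for the other.
void transfer(Account& from, Account& to, int amount) {
    if (&from == &to) return; // locking the same mutex twice would self-deadlock
    const bool from_first = std::less<Account*>()(&from, &to);
    Account& first = from_first ? from : to;
    Account& second = from_first ? to : from;

    pthread_mutex_lock(&first.mutex);
    pthread_mutex_lock(&second.mutex);
    from.balance -= amount;
    to.balance += amount;
    pthread_mutex_unlock(&second.mutex);
    pthread_mutex_unlock(&first.mutex);
}

// Two threads transfer in opposite directions; returns the combined balance.
int run_opposite_transfers(int rounds) {
    Account a(1000);
    Account b(1000);
    std::thread t1([&] { for (int i = 0; i < rounds; ++i) transfer(a, b, 1); });
    std::thread t2([&] { for (int i = 0; i < rounds; ++i) transfer(b, a, 1); });
    t1.join();
    t2.join();
    return a.balance + b.balance;
}
```

If transfer locked from before to unconditionally, the two threads could each take one lock and wait for the other, which is exactly the circular wait. With the fixed order the run finishes and the combined balance stays at 2000.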

5. Thread synchronization

1. The concept of thread synchronization

Provided that data safety is guaranteed, thread synchronization lets threads access critical resources in a specific order, which effectively avoids starvation.

2. Condition variable

A condition variable is a variable of type pthread_cond_t:

PTHREAD_COND_DESTROY(3P)

#include <pthread.h>

int pthread_cond_destroy(pthread_cond_t *cond);

int pthread_cond_init(pthread_cond_t *restrict cond,
const pthread_condattr_t *restrict attr); // cond: pointer to the condition variable; attr: its attributes, or NULL for the defaults

pthread_cond_t cond = PTHREAD_COND_INITIALIZER;

Return value: on success, pthread_cond_destroy() and pthread_cond_init() return zero; otherwise they return an error number to indicate the error.

If the [EBUSY] and [EINVAL] error checks are implemented, they behave as if they were performed immediately at the start of the function, and they cause an error return before the state of the condition variable specified by cond is modified.

Condition variables provide an interface for waiting and for waking threads up:

PTHREAD_COND_TIMEDWAIT(3P)

#include <pthread.h>

int pthread_cond_timedwait(pthread_cond_t *restrict cond,
pthread_mutex_t *restrict mutex,
const struct timespec *restrict abstime);

int pthread_cond_wait(pthread_cond_t *restrict cond,
pthread_mutex_t *restrict mutex);

Return value: on success, the functions return 0. Except for a timeout (ETIMEDOUT), an error is reported immediately with an error number, without modifying the state of mutex or cond.

PTHREAD_COND_BROADCAST(3P)

#include <pthread.h>

Wake up all threads waiting on the condition variable:
int pthread_cond_broadcast(pthread_cond_t *cond);

Wake up one thread:
int pthread_cond_signal(pthread_cond_t *cond); // cond: the condition variable; wakes one thread waiting on it

Return value: on success, pthread_cond_broadcast() and pthread_cond_signal() return zero; otherwise they return an error number to indicate the error.

When the condition is not satisfied, the thread must wait on the condition variable defined for that condition.

void push(const T& in) // input parameter: const reference
{
    pthread_mutex_lock(&_mutex);
    // Detail 2: the condition must be tested with while, not if.
    // A wakeup can be spurious, or another thread may have changed the state first,
    // so the thread must call IsFull() again after waking to be sure there is room.
    while (IsFull())
    {
        // Detail 1:
        // pthread_cond_wait releases the lock automatically when it suspends the thread.
        // When pthread_cond_signal wakes the thread, it re-acquires the same lock before returning.
        pthread_cond_wait(&_pcond, &_mutex); // the queue is full, so the producer waits
    }
    // the queue is guaranteed not to be full here
    _q.push(in);
    // an item was just pushed, so wake a consumer to take it
    // Detail 3: pthread_cond_signal() may be called inside or outside the critical section
    pthread_cond_signal(&_ccond); // a watermark could be used: wake consumers only once enough items are queued
    pthread_mutex_unlock(&_mutex);
    // pthread_cond_signal(&_ccond); // alternatively, signal after unlocking
}

1. The second parameter of pthread_cond_wait() must be the mutex that the current code is holding. The thread holds this lock when it calls the function; if it were suspended while still holding it, no other thread could ever acquire the lock. To solve this, pthread_cond_wait() atomically releases the lock and suspends the calling thread. While suspended, the thread stays parked at the pthread_cond_wait() call inside the critical section. When pthread_cond_signal() wakes it, the thread automatically re-acquires the lock and then continues with the code that follows.

2. The condition must be checked with while, not if, so that it is re-checked after every wakeup (a wakeup may be spurious).

3. pthread_cond_signal() can be called inside or outside the critical section.
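Putting the three details together, a complete bounded queue might look like the sketch below. It is a minimal illustration, not the author's full class: the member names follow the push() shown above, while the pop() method, the capacity parameter, and the produce_and_consume driver are assumptions added here.

```cpp
#include <cassert>
#include <cstddef>
#include <pthread.h>
#include <queue>
#include <thread>

// Hypothetical bounded blocking queue built on one mutex and two condition variables.
template <class T>
class BlockQueue {
public:
    explicit BlockQueue(std::size_t capacity) : _capacity(capacity) {
        pthread_mutex_init(&_mutex, nullptr);
        pthread_cond_init(&_pcond, nullptr);
        pthread_cond_init(&_ccond, nullptr);
    }
    ~BlockQueue() {
        pthread_cond_destroy(&_ccond);
        pthread_cond_destroy(&_pcond);
        pthread_mutex_destroy(&_mutex);
    }
    void push(const T& in) {
        pthread_mutex_lock(&_mutex);
        while (_q.size() >= _capacity)            // re-check after every wakeup
            pthread_cond_wait(&_pcond, &_mutex);  // releases _mutex while waiting
        _q.push(in);
        pthread_cond_signal(&_ccond);             // an item is now available
        pthread_mutex_unlock(&_mutex);
    }
    void pop(T* out) {
        pthread_mutex_lock(&_mutex);
        while (_q.empty())                        // re-check after every wakeup
            pthread_cond_wait(&_ccond, &_mutex);
        *out = _q.front();
        _q.pop();
        pthread_cond_signal(&_pcond);             // a slot is now free
        pthread_mutex_unlock(&_mutex);
    }

private:
    std::queue<T> _q;
    std::size_t _capacity;
    pthread_mutex_t _mutex;
    pthread_cond_t _pcond; // producers wait here while the queue is full
    pthread_cond_t _ccond; // consumers wait here while the queue is empty
};

// One producer pushes 1..n, one consumer pops n items; returns their sum.
long produce_and_consume(int n) {
    BlockQueue<int> bq(4);
    long sum = 0;
    std::thread producer([&] { for (int i = 1; i <= n; ++i) bq.push(i); });
    std::thread consumer([&] {
        for (int i = 0; i < n; ++i) { int v = 0; bq.pop(&v); sum += v; }
    });
    producer.join();
    consumer.join();
    return sum;
}
```

With n = 100 the consumer receives every item exactly once, so the sum is 5050 even though the queue holds at most four items at a time.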

3. Semaphore

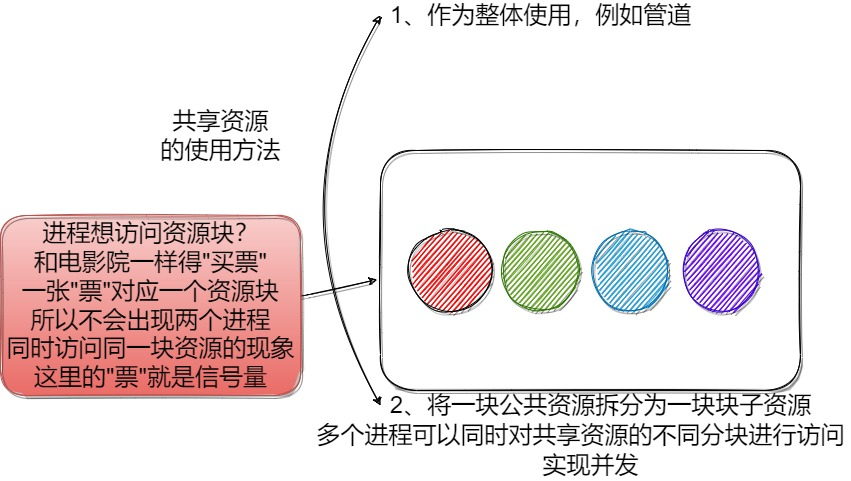

As described earlier, multiple threads that access a critical resource must lock it and then check whether it is ready, over and over. A semaphore provides an effective way to coordinate multiple processes or threads that access a shared resource at the same time, which helps avoid race conditions and, used correctly, problems such as deadlock and starvation. Semaphores can also be used for inter-process and inter-thread communication. For example, producers and consumers can use semaphores to coordinate access to the buffer in the producer-consumer model.

3.1 The concept of semaphore

A semaphore is essentially a counter of available resources. Once a thread has successfully acquired the semaphore, it is guaranteed to own a part of the critical resource later. Acquiring a semaphore amounts to reserving one specific small piece of the critical resource (which may be divided into many small pieces). This is like buying a ticket at a movie theater: once you hold a ticket, a seat at that showing is guaranteed to be yours.

Having bought the ticket, you do not need to call the theater to ask whether there is a seat for you. In the same way, once a thread has acquired the semaphore, its later access to the critical resource is guaranteed to succeed. The pattern of locking first and then checking whether the resource is ready can therefore be dropped: acquiring the semaphore already tells the thread whether it may access the resource.

3.2 pv primitives of semaphores (atoms, statements)

The semaphore is itself a shared resource and must be accessed safely. The PV primitives guarantee that incrementing and decrementing the semaphore are atomic:

- Semaphore--: acquire a resource (P operation)

- Semaphore++: return a resource (V operation)

3.3 Initialize the semaphore

SEM_INIT(3)

#include <semaphore.h>

int sem_init(sem_t *sem, int pshared, unsigned int value);

Link with -pthread.

Purpose: sem_init initializes an unnamed semaphore.

Parameters:

- sem: Pointer to the semaphore to initialize.

- pshared: Specifies how the semaphore is shared. If pshared is 0, the semaphore can only be used for synchronization between the threads of one process; if pshared is nonzero, the semaphore can be used for synchronization between processes.

- value: Specifies the initial value of the semaphore.

Return value:

- If sem_init succeeds, the return value is 0.

- If the call fails, the return value is -1 and errno is set to the corresponding error code.

3.4 Waiting for semaphore (P operation)

SEM_WAIT(3)

#include <semaphore.h>

int sem_wait(sem_t *sem);

int sem_trywait(sem_t *sem);

int sem_timedwait(sem_t *sem, const struct timespec *abs_timeout);

Link with -pthread.

Purpose: sem_wait performs the P operation: it decrements the value of the semaphore by 1. If the value is 0, the calling thread blocks until the value becomes greater than 0. sem_trywait is the non-blocking variant (it fails with EAGAIN instead of blocking), and sem_timedwait blocks only until the absolute time abs_timeout.

Parameters:

- sem: Pointer to the semaphore to operate on.

Return value:

- If sem_wait succeeds, the return value is 0.

- If the call fails, the return value is -1 and errno is set to the corresponding error code.

3.5 Release semaphore (V operation)

SEM_POST(3)

#include <semaphore.h>

int sem_post(sem_t *sem);

Link with -pthread.

Purpose: sem_post performs the V operation: it increments the value of the semaphore by 1. If threads are blocked because the value was 0, one of them is woken up after sem_post is called.

Parameters:

- sem: Pointer to the semaphore to operate on.

Return value:

- When the call succeeds, the return value is 0.

- When the call fails, the return value is -1 and the error code is stored in errno. Possible error codes are EINVAL (sem is not a valid semaphore) and EOVERFLOW (the maximum semaphore value would be exceeded).

3.6 Destroy the semaphore

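#include <semaphore.h>

int sem_destroy(sem_t *sem);

Link with -pthread.

Purpose: sem_destroy destroys a semaphore and releases the resources held by a semaphore created earlier.

Parameters:

- sem: Pointer to the semaphore to destroy.

Return value: sem_destroy returns 0 on success; on failure it returns -1 and sets errno. Destroying a semaphore that threads are still blocked on is undefined behavior in POSIX, so make sure every thread has finished using the semaphore before destroying it.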
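The four calls can be exercised together in a short sketch. It is an illustration under stated assumptions: count_successful_p and value_after_two_p_one_v are hypothetical helper names, it assumes a platform that supports unnamed semaphores (such as Linux), and sem_getvalue, which is not covered above, is a standard POSIX call used here only to read the counter back.

```cpp
#include <cassert>
#include <cerrno>
#include <semaphore.h>

// Returns how many of `attempts` non-blocking P operations succeed on a
// semaphore whose initial value is `slots`.
int count_successful_p(unsigned int slots, int attempts) {
    sem_t sem;
    if (sem_init(&sem, 0, slots) != 0) return -1; // pshared = 0: threads of one process
    int granted = 0;
    for (int i = 0; i < attempts; ++i) {
        if (sem_trywait(&sem) == 0) {
            ++granted;               // P succeeded: the value was > 0 and was decremented
        } else {
            assert(errno == EAGAIN); // the value was 0: sem_wait would have blocked
        }
    }
    sem_destroy(&sem);
    return granted;
}

// Performs P twice and V once, then returns the semaphore's value.
// Assumes initial >= 2 so that neither sem_wait blocks.
int value_after_two_p_one_v(unsigned int initial) {
    sem_t sem;
    if (sem_init(&sem, 0, initial) != 0) return -1;
    sem_wait(&sem); // P: value - 1
    sem_wait(&sem); // P: value - 1
    sem_post(&sem); // V: value + 1
    int value = -1;
    sem_getvalue(&sem, &value);
    sem_destroy(&sem);
    return value;
}
```

A semaphore initialized to 2 grants exactly two of five reservation attempts, which mirrors the movie-ticket analogy: only as many tickets can be sold as there are seats. Starting from 3, two P operations and one V operation leave the value at 2.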