Author: Dong Hongshuai, expert in microservice system construction and infrastructure service capability building at Mafengwo.

Mafengwo is a travel social platform and a new kind of data-driven travel e-commerce company. Building on more than ten years of accumulated content, it uses AI and big data algorithms to connect personalized travel information with travel product suppliers around the world, giving users a distinctive travel experience.

As the business has grown, Mafengwo's architecture has evolved with the technology, and more and more extensions have been built on Kubernetes. Against this background, we launched a new round of architecture upgrades for cloud services. The work had three parts:

- We built a new platform, the Fengxiao ("bee effect") platform, and its supporting facilities for microservice scenarios to provide iteration and traffic lane capabilities.
- We introduced Karmada to manage multiple Kubernetes clusters.
- For the microservice gateway, we replaced the Istio + Envoy architecture with a model in which Apache APISIX and Envoy coexist.

The Status Quo of the Microservice 1.0 Model

Mafengwo's internal microservice architecture has so far gone through two iterations. In this article, the first adjustment to the original architecture is called version 1.0. Before building the 1.0 architecture, we aligned our goals across four areas: publishing system capabilities, Kubernetes containers, service discovery and the microservice gateway. For example, broad adoption of Kubernetes meant we had to consider three things:

- container-based multi-language support;
- full containerization of the CI/CD pipeline;
- support for multiple Kubernetes clusters.

Before the first iteration, the internal microservice gateway used NGINX Ingress, which had several problems:

- Configuration changes relied on NGINX reloads, which disrupted business traffic.
- It supported only a single Kubernetes cluster, which limited the scenarios it could serve.
- Its built-in resources were too simple. Many matching rules had to be expressed through annotations, which made configuration complicated and unfriendly.
- Its support for discovering services outside the cluster was weak.

While rolling out microservice 1.0, we took the following actions to meet business needs (a selection):

- Container network: we rebuilt the container network on macvlan and connected the IDC data center network with the cloud vendor's network. This connection is the basis for container-to-container communication. Services reach each other through gateways or direct container connections, and Kubernetes Services are no longer used.

- Deployment: container workloads are deployed on Kubernetes, and physical and virtual machines are deployed through Consul.

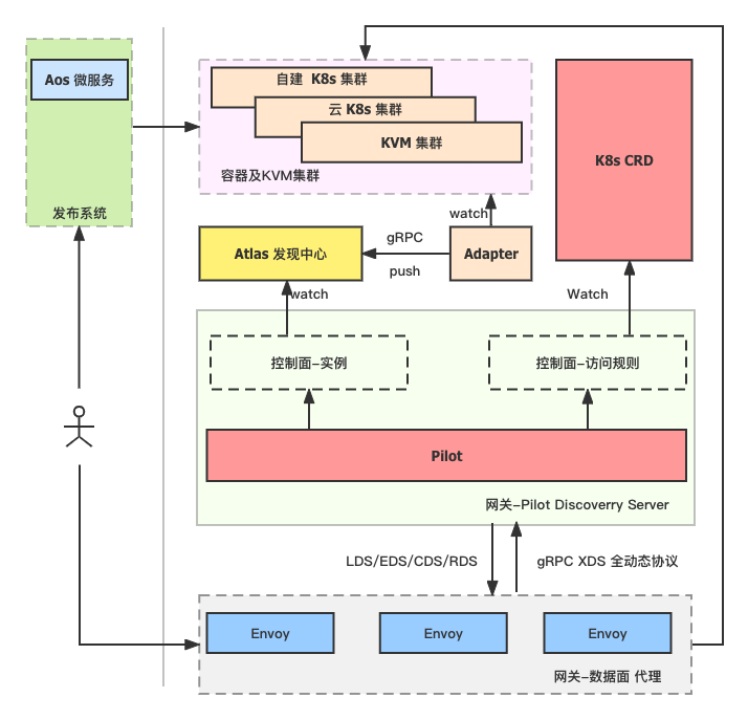

- Service discovery: we added a unified service discovery capability by building Atlas, a discovery center backed by Kubernetes and Consul. We then extended the microservice gateway, the Java ecosystem, the monitoring system and other components on top of Atlas.

- Gateway: we built the microservice gateway on Istio + Envoy, with secondary development of Istio's Pilot so that it reads from the Atlas discovery center. Atlas aggregates data from multiple Kubernetes clusters and Consul. As a result, instance discovery is decoupled from any single Kubernetes cluster. This indirectly lets the gateway span multiple clusters and pick up changes across the whole gateway dynamically.

Pain points

In practice, the microservice 1.0 architecture still had some pain points.

The first set of pain points concerned publishing:

- The publishing system in microservice 1.0 was only a publishing system. It could not integrate project requirements management, even though publishing is also part of what gets measured.
- It was built in PHP, so it could not fully support more complex deployment scenarios. Examples include automated rolling deployment and capacity changes during multi-version rolling deployment.
- Several people might work on the same project, or one requirement might span several projects. In either case there was no mechanism to automatically chain together the traffic of one iteration (a traffic lane).
- Managing multiple Kubernetes clusters was inconvenient. When a cluster was taken offline, the business side had to take part, which cost business lines time.

The second set concerned the microservice gateway. As mentioned above, the 1.0 gateway was a secondary development of Pilot on Istio + Envoy, mainly so that it could read from the Atlas discovery center.

As the number of business applications grew, so did the number of access rules. The time a rule needed to take effect on the online gateway kept increasing, and at peak traffic the delay reached about 15 seconds. The delay came mainly from the CRD resources. Almost all of them lived in the same namespace, so when several services went online and offline at the same time, a full update was triggered.

Envoy also caused several operational problems:

- Early on, it dropped connections during full configuration pushes, causing 503 errors.
- Its memory usage grew continuously until it ran out of memory (OOM).
- When the gateway misbehaved, troubleshooting Envoy and Pilot was expensive.
- Advanced configuration required EnvoyFilter, which has a steep learning curve. Even simple features such as circuit breaking, rate limiting and authentication were costly to implement.

Moreover, both upstream communities (Istio and Envoy) move very fast, which made it hard for our customized architecture to keep up with them.

Microservice 2.0 model based on APISIX

New platform and new architecture

To address these pain points, we moved our internal microservice architecture into the 2.0 era. As part of the 2.0 architecture, we added a new release platform, the Fengxiao platform.

The Fengxiao platform offers the following key capabilities:

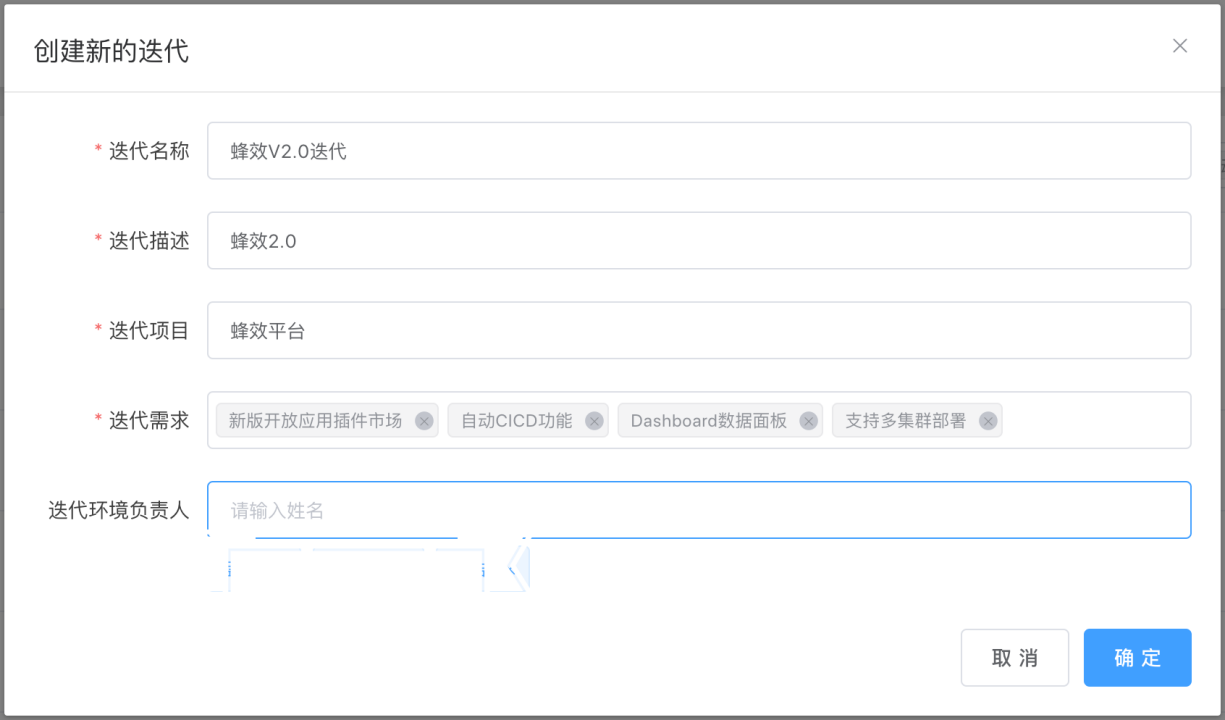

- It integrates requirements management and supports iterative deployment, so release activity can feed into metrics.

- It unifies container deployment and machine (physical machine) deployment in a single product.

- It provides finer-grained traffic management and rollback capabilities, with rollback strategies by batch, number of instances, interval, and so on.

- Built jointly with the Java ecosystem, it supports traffic lanes and traffic isolation between environments.

- The gateway is rebuilt on APISIX. This resolves Envoy's OOM issues and the high latency of rule changes. APISIX's productized tooling also lowers troubleshooting costs and makes the gateway easier to extend and configure.

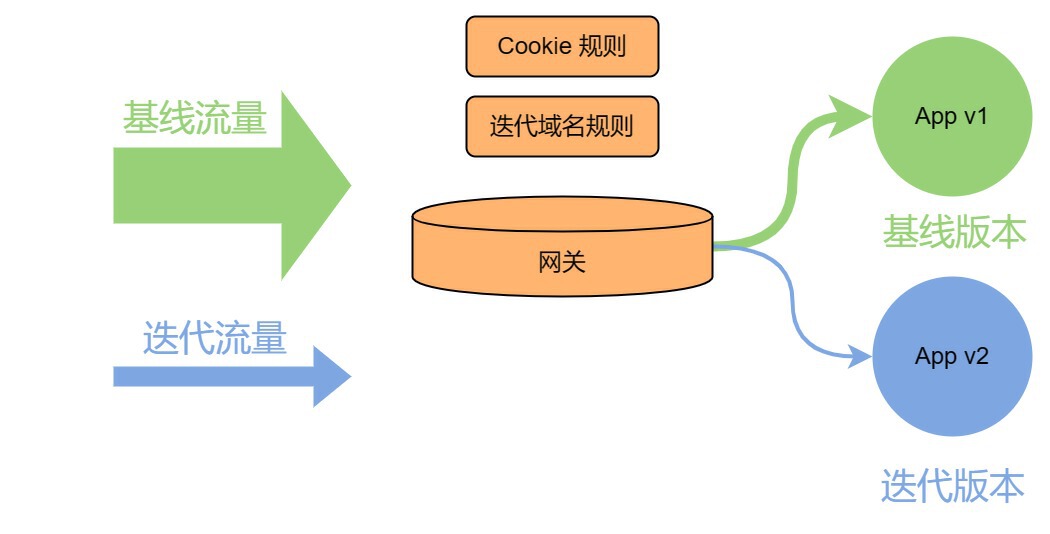

On the product side, the Fengxiao platform combines requirements management with iterative deployment and supports several iteration pipeline modes. For traffic management, we implemented traffic lanes using the env attribute and extended attributes of the Atlas Instance model. On top of this traffic lane model, the product layer builds a separate traffic environment for each iteration, keeping its call chains isolated.

In the surrounding ecosystem, the Java SDK keeps call chains isolated within an iteration environment, and the gateway does the same. Because the gateway is the entry point for all traffic, we configure it with cookie-based rules. Traffic from the baseline environment reaches only baseline versions, while traffic from an iteration environment is forwarded to the iteration's versions.
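The cookie rule can be sketched as follows. This is a minimal illustration, not Mafengwo's implementation, and several parts are assumptions:

- The cookie name `lane`, the `baseline` environment label and the `Instance` fields are hypothetical stand-ins for the Atlas Instance model's env attribute.
- Falling back to baseline instances when a service has no version in the requested iteration is a common swim-lane convention. The article does not describe it.

```python
from dataclasses import dataclass, field
from http.cookies import SimpleCookie

BASELINE = "baseline"  # hypothetical label for the baseline environment
LANE_COOKIE = "lane"   # hypothetical cookie carrying the iteration environment


@dataclass
class Instance:
    """Simplified stand-in for an Atlas Instance (field names are assumptions)."""
    host: str
    port: int
    env: str = BASELINE
    extra: dict = field(default_factory=dict)  # extended attributes, unused here


def lane_from_cookie(cookie_header):
    """Return the lane named in the Cookie header, or the baseline lane."""
    cookie = SimpleCookie()
    cookie.load(cookie_header or "")
    morsel = cookie.get(LANE_COOKIE)
    return morsel.value if morsel and morsel.value else BASELINE


def select_instances(instances, lane):
    """Pick the instances that may serve a lane.

    Baseline traffic only ever reaches baseline instances. Iteration traffic
    goes to that iteration's instances, falling back to baseline when the
    service has no version in the iteration (an assumed convention).
    """
    in_lane = [i for i in instances if i.env == lane]
    if in_lane or lane == BASELINE:
        return in_lane
    return [i for i in instances if i.env == BASELINE]
```

For example, with one baseline instance and one instance tagged `iter-42`, a request carrying `lane=iter-42` is routed only to the iteration instance. A request without the cookie is routed only to the baseline instance.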

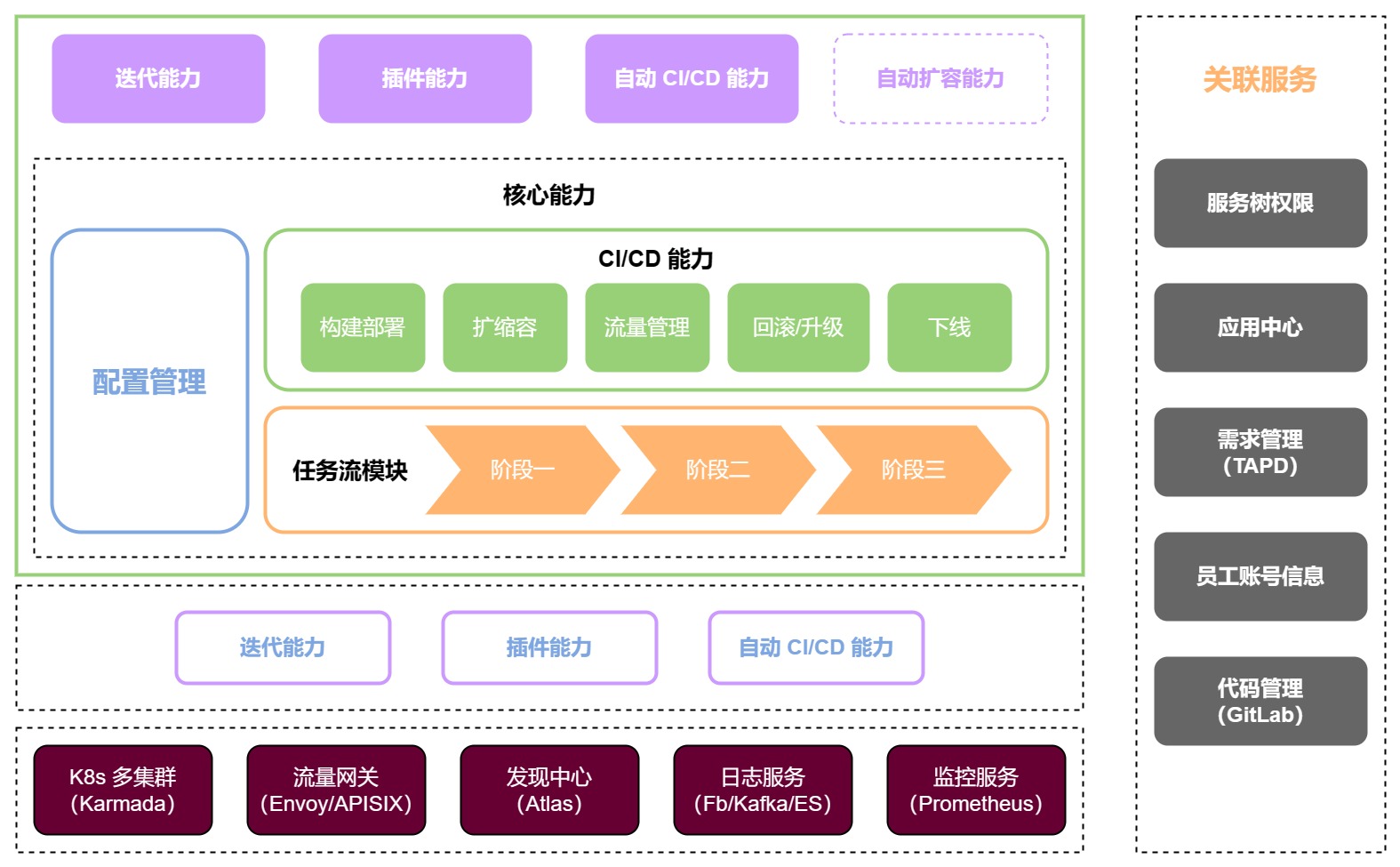

At the deployment layer, we use Karmada to manage multiple Kubernetes clusters. In the overall architecture (as shown in the figure), the underlying capabilities consist of:

- the Kubernetes clusters;
- the traffic gateways, Envoy and APISIX;
- the Atlas discovery center;
- the logging and monitoring services.

In this architecture, the Fengxiao platform mainly handles configuration management, build and deployment, and scaling in and out. It also brings services online and offline and includes a task flow module. At the top sit application-marketplace capabilities such as iteration and plugins.

Overall, we built a new 2.0 architecture centered on applications and services. When a Kubernetes cluster changes, the architecture distributes Deployments and other resources at the cluster level through policies such as PropagationPolicy and OverridePolicy. This reduces how much the business side must take part in cluster changes and relieves pressure on business R&D teams.

Gateway selection

The 2.0 architecture uses two traffic gateway products, Envoy and APISIX. Envoy was our choice in version 1.0, and we have not abandoned it entirely. For 2.0, however, several requirements and product expectations led us to look for a new gateway product:

- When access rules change, the change must take effect on the gateway within milliseconds. With slow propagation, gateway nodes apply the change at different times. In CDN scenarios this can also leave business resources returning 404 for a long time.

- It must be able to fully replace the Istio + Envoy architecture in existing scenarios. It must support HTTP and gRPC and remain compatible with our existing routing policies.

- It must lower troubleshooting costs, ideally with product support such as a dashboard.

- The product must be stable, with an active community and strong features (for example, rate limiting).

- It must be able to replace the company's existing architecture without secondary development.

- During the replacement, both architectures must stay available side by side, with Istio and Envoy coexisting with the new gateway. That way we can quickly fall back to the original solution if the new one has problems.

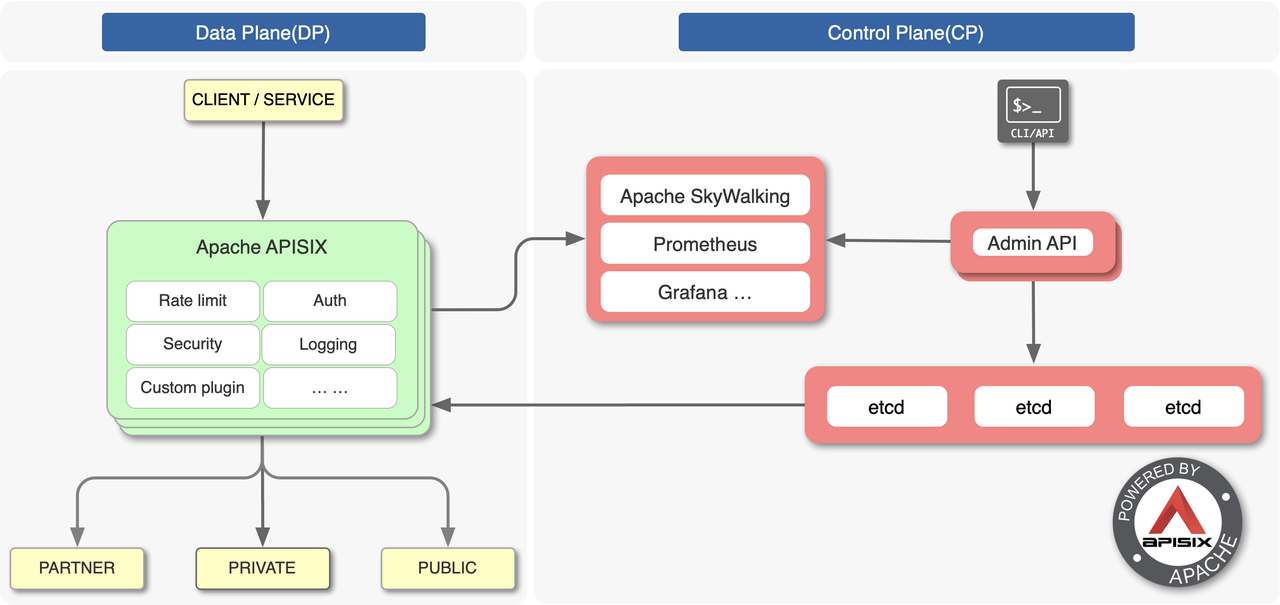

After evaluating several mainstream gateway products, we settled on Apache APISIX.

- Architecture: APISIX is also split into a control plane and a data plane, and it ships with a Dashboard.
- Plugins: APISIX provides a rich set of plugins covering rate limiting, circuit breaking, logging, security, monitoring and more.
- Admin API: we can operate every APISIX capability through the Admin API, including Upstreams, Consumers and plugins.
- Compatibility: when APISIX is upgraded, it stays backward compatible with older versions of its APIs, which matters a great deal to us.
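The sketch below shows how the Admin API can drive APISIX. It builds a request that creates or replaces an Upstream with `PUT /apisix/admin/upstreams/{id}`, authenticated by the `X-API-KEY` header. The Admin API address, key and upstream ID are placeholders. Check the address and port against your APISIX version's configuration.

```python
import json
import urllib.request

# Placeholders: adjust to your deployment; never hard-code a real admin key.
ADMIN_BASE = "http://127.0.0.1:9180/apisix/admin"
ADMIN_KEY = "replace-with-your-admin-key"


def upstream_request(upstream_id, nodes, lb_type="roundrobin"):
    """Build (but do not send) a PUT that creates or replaces an Upstream.

    `nodes` maps "host:port" to a weight, one of the node formats APISIX accepts.
    """
    body = {"type": lb_type, "nodes": nodes}
    return urllib.request.Request(
        url=f"{ADMIN_BASE}/upstreams/{upstream_id}",
        data=json.dumps(body).encode("utf-8"),
        headers={"X-API-KEY": ADMIN_KEY, "Content-Type": "application/json"},
        method="PUT",
    )


def send(req, timeout=3.0):
    """Send a prepared Admin API request and return the decoded JSON response."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

A `PUT` on a fixed ID replaces the whole object, so re-sending the same request is idempotent. This is convenient for a synchronizer that may replay changes.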

We also found that APISIX has the following advantages:

- Performance: APISIX, built on OpenResty, performs very well and adds much less overhead than Envoy. In our tests, APISIX lost 3% of QPS compared with Envoy's 16%. Its average forwarding latency was 2 ms, compared with Envoy's 7 ms.

- Debuggability: the Dashboard lets us quickly check whether a rule is misconfigured, for example when a route matches unexpectedly.

- Community and features: as an open source project, APISIX has a more active community. Compared with Envoy, its rate limiting, authentication and monitoring cost less to use and are better supported.

- Resource usage: APISIX's memory footprint is much lower than Envoy's. APISIX is weaker than Envoy at dynamic configuration, since almost all of Envoy's configuration can be delivered dynamically, but it is sufficient for our needs.

After research and testing, we therefore added Apache APISIX as a traffic gateway in the microservice 2.0 architecture. The gateway is the core of all microservice traffic, so switching it from the old architecture to the new one is costly. We therefore wanted each change to microservice gateway rules to take effect on both the old and new gateways (Envoy and APISIX) at the same time, with one set of configuration serving both. We made several adjustments to the 2.0 architecture to achieve this.

Implementation plan and practical issues

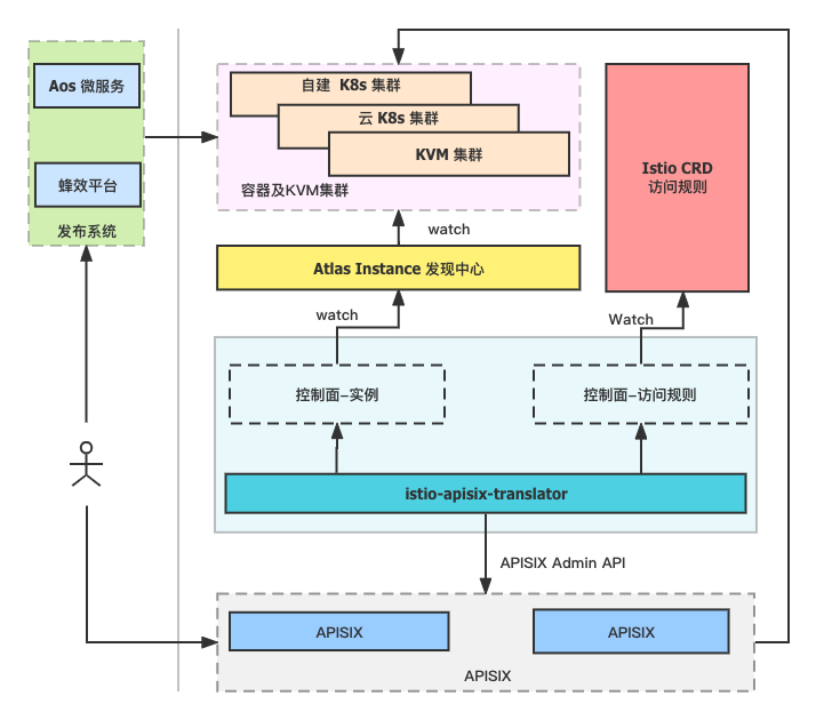

First, to control costs, we left the original Istio architecture unchanged. We then introduced a newly developed key component into the gateway architecture: istio-apisix-translator.

istio-apisix-translator connects the Atlas discovery center with Istio CRD data. As a data synchronization service, it performs two conversions in real time:

- changes to rules such as VirtualService and DestinationRule become APISIX routes;
- Atlas Instance data becomes APISIX Upstreams.

In short, this one component lets APISIX consume both Atlas data and Istio CRDs.
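The sketch below is a heavily simplified version of these two conversions, not the translator's actual code. Its assumptions are:

- VirtualService objects arrive as dicts shaped like the Istio CRD.
- Only `uri.exact` and `uri.prefix` matches are handled, using the first destination of each HTTP rule.
- Atlas instances have a hypothetical shape (`ip`, `port`, optional `weight`).
- The upstream naming scheme is made up for illustration.

It relies on the fact that APISIX treats a trailing `*` in a route's `uri` as a prefix match. Rule ordering, weighted splits, header matches and DestinationRule policies are out of scope.

```python
def upstream_id_for(host, subset=None):
    """Hypothetical naming scheme: one APISIX Upstream per destination host/subset."""
    return f"{host}-{subset}" if subset else host


def vs_to_routes(vs):
    """Translate a simplified VirtualService dict into APISIX route bodies keyed by ID."""
    name = vs["metadata"]["name"]
    spec = vs["spec"]
    routes = {}
    for i, rule in enumerate(spec.get("http", [])):
        dest = rule["route"][0]["destination"]  # first destination only
        upstream_id = upstream_id_for(dest["host"], dest.get("subset"))
        # A rule without `match` matches every request.
        for j, match in enumerate(rule.get("match") or [{}]):
            uri = match.get("uri", {})
            if "exact" in uri:
                path = uri["exact"]
            else:
                # Trailing "*" makes APISIX match by prefix.
                path = uri.get("prefix", "/") + "*"
            routes[f"{name}-{i}-{j}"] = {
                "uri": path,
                "hosts": spec["hosts"],
                "upstream_id": upstream_id,
            }
    return routes


def instances_to_upstream(instances, lb_type="roundrobin"):
    """Turn hypothetical Atlas instance dicts into an APISIX Upstream body."""
    nodes = {f"{ins['ip']}:{ins['port']}": ins.get("weight", 1) for ins in instances}
    return {"type": lb_type, "nodes": nodes}
```

Each resulting route body and upstream body can then be sent to APISIX with a `PUT` to `/apisix/admin/routes/{id}` or `/apisix/admin/upstreams/{id}`, respectively.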

With this architecture, both gateways are fully supported, as shown in the figure.

The core of the gateway architecture is APISIX, at the bottom of the figure. Above it, istio-apisix-translator plays a role similar to Pilot in the Istio architecture. It merges Instance and CR data and pushes the result to APISIX through the Admin API. The Instance data comes from the Atlas discovery center used by internal services.

Whenever an access rule or the underlying Atlas data changes, the change is converted into APISIX data and pushed to APISIX. The whole pipeline is built on a watch mechanism, so changes are processed in real time. In practice, they generally take effect within milliseconds.
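The watch-driven pipeline can be sketched as a single consumer loop. Its assumptions are:

- The Atlas and Istio watchers feed one `queue.Queue` of `(source, key, obj)` events. In a real system these events would come from Kubernetes informers or a similar watch API.
- `convert` and `apply` are hypothetical hooks for the translation step and the Admin API call.

```python
import queue
import threading


def sync_loop(events, convert, apply, stop, poll_interval=0.1):
    """Consume watch events and apply the converted APISIX changes in order.

    events:  queue.Queue of (source, key, obj) tuples; obj is None on delete
    convert: hypothetical hook mapping one event to a list of (admin_path, body)
             pairs, where body None means "delete this APISIX object"
    apply:   hypothetical hook performing the Admin API call
    stop:    threading.Event used to shut the loop down
    """
    while not stop.is_set():
        try:
            source, key, obj = events.get(timeout=poll_interval)
        except queue.Empty:
            continue
        try:
            for path, body in convert(source, key, obj):
                apply(path, body)
        except Exception as exc:  # keep watching even if one change fails
            print(f"sync of {source}/{key} failed: {exc}")
        finally:
            events.task_done()


def start_sync(events, convert, apply):
    """Run sync_loop on a daemon thread and return (thread, stop_event)."""
    stop = threading.Event()
    thread = threading.Thread(
        target=sync_loop, args=(events, convert, apply, stop), daemon=True
    )
    thread.start()
    return thread, stop
```

A single consumer applies changes in arrival order, so a delete can never overtake the update before it. Parallelizing the push would require preserving per-key ordering.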

We did run into a few problems while using APISIX. All of them have been resolved, and we have shared the results with the community.

The first problem appeared when APISIX connected to etcd using certificates (TLS) and there were many APISIX nodes. In that setup, the APISIX Admin API could respond very slowly.

The cause is an etcd bug related to HTTP/2. When etcd is accessed over HTTPS, requests are multiplexed over HTTP/2, and Go's HTTP/2 server allows only 250 concurrent streams per connection by default. Plain HTTP is not affected.

The APISIX architecture makes this limit easy to hit. Unlike the Istio architecture, where data-plane proxies receive configuration from the control plane, every APISIX node connects directly to etcd. With many APISIX data-plane nodes, the concurrent requests from all of them to etcd can exceed this limit. Once they do, the Admin API becomes very slow.

We reported this to both the etcd and APISIX communities, and it was later fixed by upgrading etcd:

- etcd 3.4 users should upgrade to 3.4.20 or later.
- etcd 3.5 users should upgrade to 3.5.5 or later.

We also added the solution to the FAQ on the APISIX website so that other users who hit the same problem can refer to it.

The second problem was performance jitter while using APISIX.

One form of jitter was a spike in 499 responses. It occurred mainly when a client sent two or more requests in very quick succession, usually POST requests but other methods too. In this case NGINX treated the connection as unsafe and closed the client connection itself.

Setting the proxy_ignore_client_abort directive to on resolved the problem. With this setting, NGINX keeps the upstream request running instead of aborting it when the client closes the connection before receiving a response.

In addition, when Admin API requests were dense, a small number of responses from the APISIX data plane timed out. This happened mainly when istio-apisix-translator restarted, because on restart it aggregates Atlas and Istio CR data and fully resynchronizes it to APISIX. The resulting burst of requests causes intensive data changes on the APISIX data plane, and a small number of responses time out. To address this, we introduced a coroutine pool and token-bucket rate limiting to smooth out bursts of APISIX data changes.
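The throttled full resync can be sketched as a bounded worker pool. Each task must take a token from a shared token bucket before calling the Admin API. The article uses a coroutine pool, and this Python sketch substitutes a thread pool. The worker count, rate and burst size are illustrative values, not tuned figures, and `push` is a hypothetical Admin API hook.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class TokenBucket:
    """Thread-safe token bucket: refills `rate` tokens per second up to `capacity`."""

    def __init__(self, rate, capacity):
        self.rate = float(rate)
        self.capacity = float(capacity)
        self.tokens = float(capacity)  # start full, allowing an initial burst
        self.last = time.monotonic()
        self.lock = threading.Lock()

    def acquire(self):
        """Block until one token is available, then consume it."""
        while True:
            with self.lock:
                now = time.monotonic()
                self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
                self.last = now
                if self.tokens >= 1:
                    self.tokens -= 1
                    return
                wait = (1 - self.tokens) / self.rate
            time.sleep(wait)  # sleep outside the lock so other workers can refill/check


def full_sync(items, push, workers=8, rate=200, burst=50):
    """Push every (path, body) pair with bounded concurrency and a global rate cap.

    `push` is a hypothetical Admin API hook; workers/rate/burst are illustrative.
    Results are returned in the same order as `items`.
    """
    bucket = TokenBucket(rate, burst)

    def task(item):
        bucket.acquire()
        return push(*item)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(task, items))
```

The pool and the bucket limit different things:

- The pool caps how many Admin API calls are in flight at once.
- The bucket caps the sustained request rate while allowing a short burst up to its capacity.

Together they spread a restart's full sync over time instead of hitting the data plane all at once.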

Summary and outlook

Mafengwo currently deploys containers on Kubernetes and machines through Consul, and it has built its own Atlas service discovery center. On top of Atlas, we have integrated and adapted the Java ecosystem, the microservice gateway, microservice traffic lanes and the monitoring system.

Early on, we built the microservice gateway by customizing Pilot from Istio 1.5.10, which also let the gateway support non-container deployments. Today both the Istio + Envoy architecture and the APISIX architecture are supported. An external service component, istio-apisix-translator, lets APISIX reuse Istio CRD resources.

For the gateway, we will follow where the industry is heading. Many products now support the Gateway API, such as APISIX Ingress, Traefik and Contour. Dynamic gateway configuration is clearly another major trend. Teams choosing and evolving a gateway may also want to pay close attention to dynamic configuration.