Author

Zhong Hua is an expert engineer at Tencent Cloud and an Istio project member and contributor, focusing on containers and service meshes. He has extensive experience bringing containers and service meshes into production. He currently leads the research and development of Tencent Cloud Mesh (TCM).

Istio's xDS performance bottleneck in large-scale scenarios

xDS is the communication protocol between the Istio control plane and the Envoy data plane. The "x" stands for a family of discovery services: LDS describes listeners; CDS describes services and their versions (clusters); EDS describes the instances (endpoints) of each service and version, along with the attributes of each instance; and RDS describes routes. xDS can be thought of simply as the collection of service discovery data and governance rules within the mesh, so the volume of xDS data grows with the size of the mesh.
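For reference, each of these discovery services delivers one Envoy v3 resource type, identified by a type URL in the xDS `DiscoveryRequest`/`DiscoveryResponse` messages. The Go snippet below simply tabulates the four APIs named above; `xdsAPI` and `xdsAPIs` are illustrative names, not part of Istio or go-control-plane.

```go
package main

import "fmt"

// xdsAPI describes one xDS discovery service (illustrative type).
type xdsAPI struct {
	Name    string // discovery service
	Carries string // what its resources describe
	TypeURL string // Envoy v3 resource type URL used on the wire
}

// xdsAPIs lists the four discovery services discussed in the text.
var xdsAPIs = []xdsAPI{
	{"LDS", "listeners", "type.googleapis.com/envoy.config.listener.v3.Listener"},
	{"CDS", "clusters (services and versions)", "type.googleapis.com/envoy.config.cluster.v3.Cluster"},
	{"EDS", "endpoints (service instances)", "type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment"},
	{"RDS", "routes", "type.googleapis.com/envoy.config.route.v3.RouteConfiguration"},
}

func main() {
	for _, api := range xdsAPIs {
		fmt.Printf("%-4s %-34s %s\n", api.Name, api.Carries, api.TypeURL)
	}
}
```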

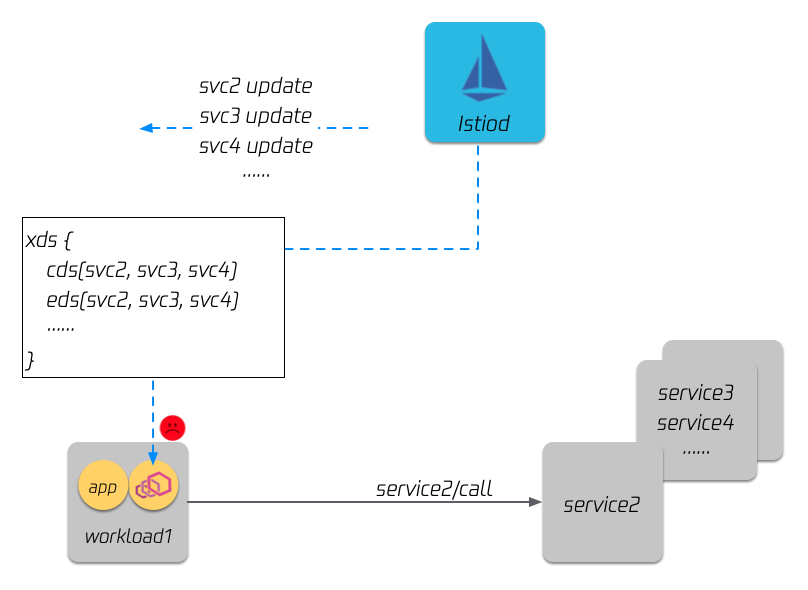

Currently, Istio delivers xDS in full: every sidecar in the mesh holds the service discovery data for the entire mesh in memory. In the figure below, for example, workload 1's business logic depends only on service 2, yet istiod sends workload 1 the service discovery data for services 2, 3, and 4.
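The gap between full delivery and what a workload actually needs can be modeled in a few lines. This is a hypothetical toy model, not Istio code: `Mesh`, `FullPush`, and `ScopedPush` are illustrative names, and real pushes carry Envoy resources rather than bare service names.

```go
package main

import (
	"fmt"
	"sort"
)

// Mesh is a toy model: all services in the mesh, plus the services each
// workload's business logic actually calls (hypothetical types).
type Mesh struct {
	Services []string
	Deps     map[string][]string
}

// FullPush mimics the default behavior: every workload receives discovery
// data for every service, regardless of what it calls.
func (m Mesh) FullPush(workload string) []string {
	out := append([]string(nil), m.Services...)
	sort.Strings(out)
	return out
}

// ScopedPush returns only the services the workload depends on, which is
// what on-demand loading aims for.
func (m Mesh) ScopedPush(workload string) []string {
	out := append([]string(nil), m.Deps[workload]...)
	sort.Strings(out)
	return out
}

func main() {
	m := Mesh{
		Services: []string{"service-2", "service-3", "service-4"},
		Deps:     map[string][]string{"workload-1": {"service-2"}},
	}
	fmt.Println("full push:  ", m.FullPush("workload-1"))
	fmt.Println("scoped push:", m.ScopedPush("workload-1"))
}
```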

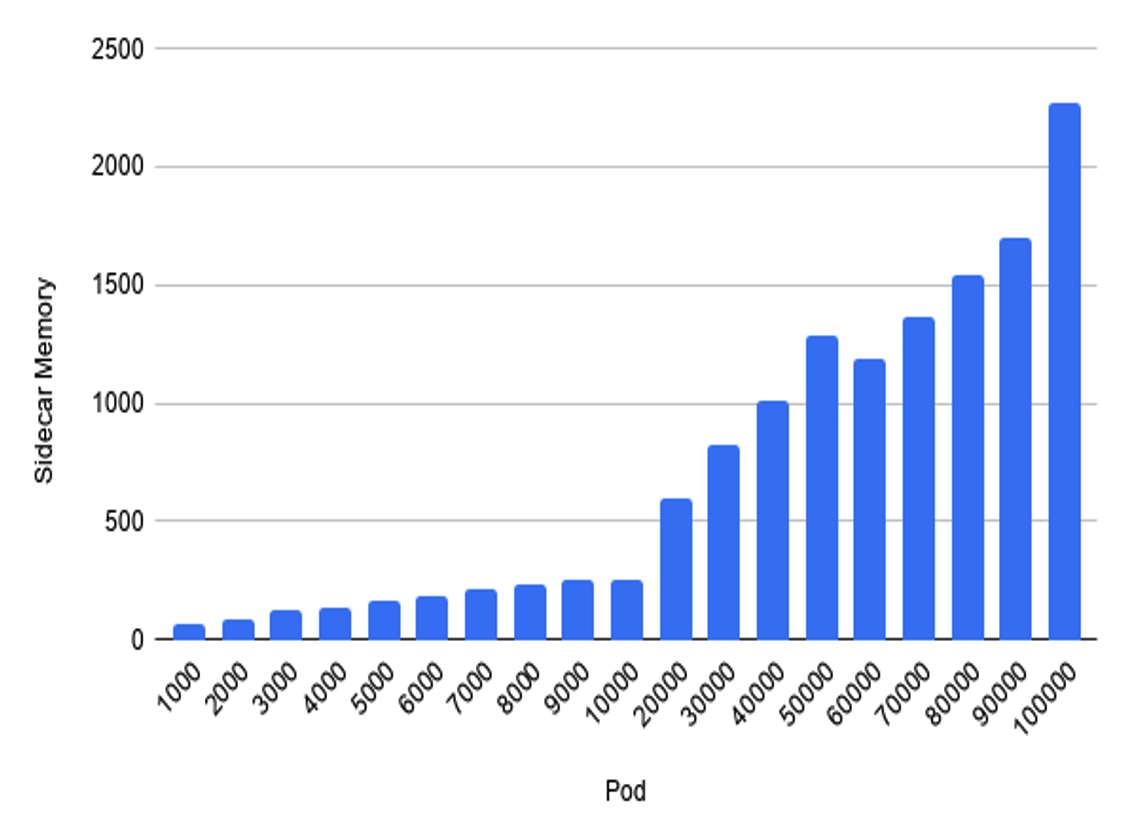

As a result, the memory of each sidecar grows with the size of the mesh. The performance-test figure of mesh size versus memory consumption shows this: the x-axis is the mesh size, that is, the number of service instances in the mesh, and the y-axis is the memory consumption of a single Envoy. Once the mesh exceeds 10,000 instances, a single Envoy uses more than 250 MB of memory, and the overhead for the whole mesh is that amount multiplied by the mesh size.
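A back-of-the-envelope calculation shows why this hurts at scale. Assume, as a simplification not stated by the measurements, that every instance runs one sidecar and that the memory of a single sidecar, $m(N)$, grows roughly linearly with the mesh size $N$. Plugging in the measured point of more than 250 MB at 10,000 instances:

$$
M_{\text{mesh}}(N) = N \cdot m(N), \qquad m(N) \approx cN \;\Longrightarrow\; M_{\text{mesh}}(N) \approx cN^{2}
$$

$$
M_{\text{mesh}}(10^{4}) \gtrsim 10^{4} \times 250\ \text{MB} = 2.5 \times 10^{6}\ \text{MB} \approx 2.5\ \text{TB}
$$

Under this linear assumption, doubling the mesh roughly quadruples the total sidecar memory.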

Istio's current optimization plan

To address this, the community provides a solution: the Sidecar CRD. It lets you explicitly declare the dependency, or visibility, relationships between services. For example, the configuration shown in the figure states that workload 1 depends only on service 2; once it is applied, istiod sends workload 1 only the information for service 2.

The approach itself works, but it is hard to adopt at scale. It requires users to configure the complete set of service dependencies in advance, which is difficult to work out in a large deployment, and those dependencies usually change as the business evolves.

Aeraki Lazy xDS

To address these problems, the TCM team designed a non-intrusive solution for loading xDS on demand and open sourced it in the Aeraki project on GitHub. Lazy xDS works as follows:

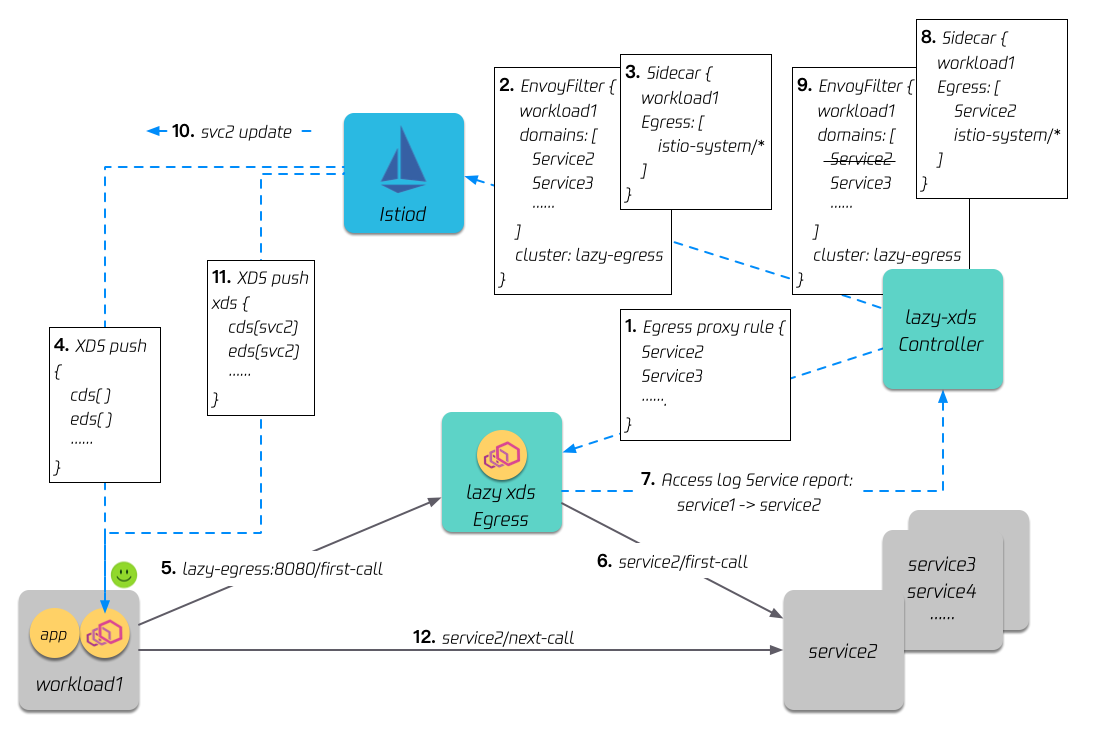

We add two components to the mesh: Lazy xDS Egress, which acts as the mesh's default gateway, much like a default gateway in a network model, and Lazy xDS Controller, which analyzes and fills in the dependencies between services.

1. Configure the Egress to relay traffic: the Egress obtains information about every service in the mesh and configures routes for all HTTP services, so that, acting as the default gateway, it can forward traffic to any HTTP service in the mesh.
2. For a service with on-demand loading enabled (Workload 1 in the figure), use an EnvoyFilter to route its traffic destined for HTTP services in the mesh to the Egress.
3. Use the Istio Sidecar CRD to restrict the service visibility of Workload 1.
4. After step 3, Workload 1 initially loads only a minimal set of xDS.
5. When Workload 1 calls Service 2, the traffic is forwarded to the Egress (because of step 2).
6. The Egress inspects the received traffic and forwards it to Service 2 (because of step 1).
7. The Egress asynchronously reports access logs to the Lazy xDS Controller using the Access Log Service (ALS).
8. The Lazy xDS Controller analyzes the access relationships in the received logs and writes the new dependency (Workload 1 -> Service 2) into the Sidecar CRD.
9. At the same time, the Controller removes the step 2 rule that forwards Workload 1's traffic for Service 2 to the Egress, so that later requests from Workload 1 to Service 2 connect directly.
10. Because of step 8, istiod updates the visibility relationships and sends Service 2's information to Workload 1.
11. Workload 1 receives Service 2's information through xDS.
12. When Workload 1 calls Service 2 again, the traffic goes directly to Service 2 (because of step 9).
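The loop in steps 5 to 12 can be reduced to a small state machine. The Go sketch below is a toy model under stated assumptions, not Aeraki's actual controller: `AccessLog`, `Controller`, `Route`, and `Observe` are hypothetical names, visibility lives in an in-memory map rather than in Sidecar resources, and a single "visible" flag stands in for both the Sidecar update (step 8) and the removal of the EnvoyFilter redirect (step 9).

```go
package main

import "fmt"

// AccessLog is a simplified, hypothetical access-log entry reported by the
// Egress: which workload called which destination.
type AccessLog struct {
	Source      string
	Destination string
}

// Controller is a toy stand-in for the Lazy xDS Controller. visible[src][dst]
// models "dst is listed in src's Sidecar and no longer redirected".
type Controller struct {
	visible map[string]map[string]bool
}

func NewController() *Controller {
	return &Controller{visible: map[string]map[string]bool{}}
}

// Route reports where a request from src to dst goes: directly if dst is
// already visible to src (step 12), otherwise via the Egress (step 5).
// Indexing a nil inner map is safe in Go and yields false.
func (c *Controller) Route(src, dst string) string {
	if c.visible[src][dst] {
		return "direct"
	}
	return "egress"
}

// Observe processes one access-log entry (steps 7 to 9) by recording the
// newly discovered dependency.
func (c *Controller) Observe(log AccessLog) {
	if c.visible[log.Source] == nil {
		c.visible[log.Source] = map[string]bool{}
	}
	c.visible[log.Source][log.Destination] = true
}

func main() {
	c := NewController()
	fmt.Println("first call:", c.Route("workload-1", "service-2"))
	c.Observe(AccessLog{Source: "workload-1", Destination: "service-2"})
	fmt.Println("later call:", c.Route("workload-1", "service-2"))
}
```

In the real system the logs arrive asynchronously over ALS and the istiod push (steps 10 and 11) takes time, so a few requests may still be relayed before traffic switches to the direct path.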

Benefits of this approach:

- Users do not need to configure service dependencies in advance, and new dependencies between services can be added dynamically.
- In the end, each Envoy receives only the xDS it needs, which gives the best performance.
- The impact on user traffic is small and traffic is never blocked. The performance cost is also low: only the first few requests are relayed through the Egress, and all subsequent requests go directly to the destination.
- The solution is non-intrusive to Istio and Envoy. We did not modify the Istio or Envoy source code, so the solution can keep pace with future Istio releases.

At present, only Layer 7 services can be loaded on demand. When traffic transits through the Egress, the Egress must determine the original destination from the Layer 7 headers, and plain TCP has no way to carry such additional headers. Since Istio is mainly used to manage Layer 7 traffic, and most requests in a mesh are Layer 7, this limitation is acceptable for now.

Lazy xDS performance test

Test setup

In two different namespaces of the same mesh, we deployed two copies of Bookinfo. The productpage in the lazy-on namespace (left) has on-demand loading enabled, while the lazy-off namespace (right) keeps the default behavior.

We then gradually added services to the mesh using Istio's official load testing toolset (hereafter "load services"). Each load namespace contains 19 services: 4 TCP services and 15 HTTP services. Each service starts with 5 pods, for a total of 95 pods per namespace (75 HTTP, 20 TCP). We simulated mesh growth by gradually increasing the number of load-service namespaces.

Performance comparison

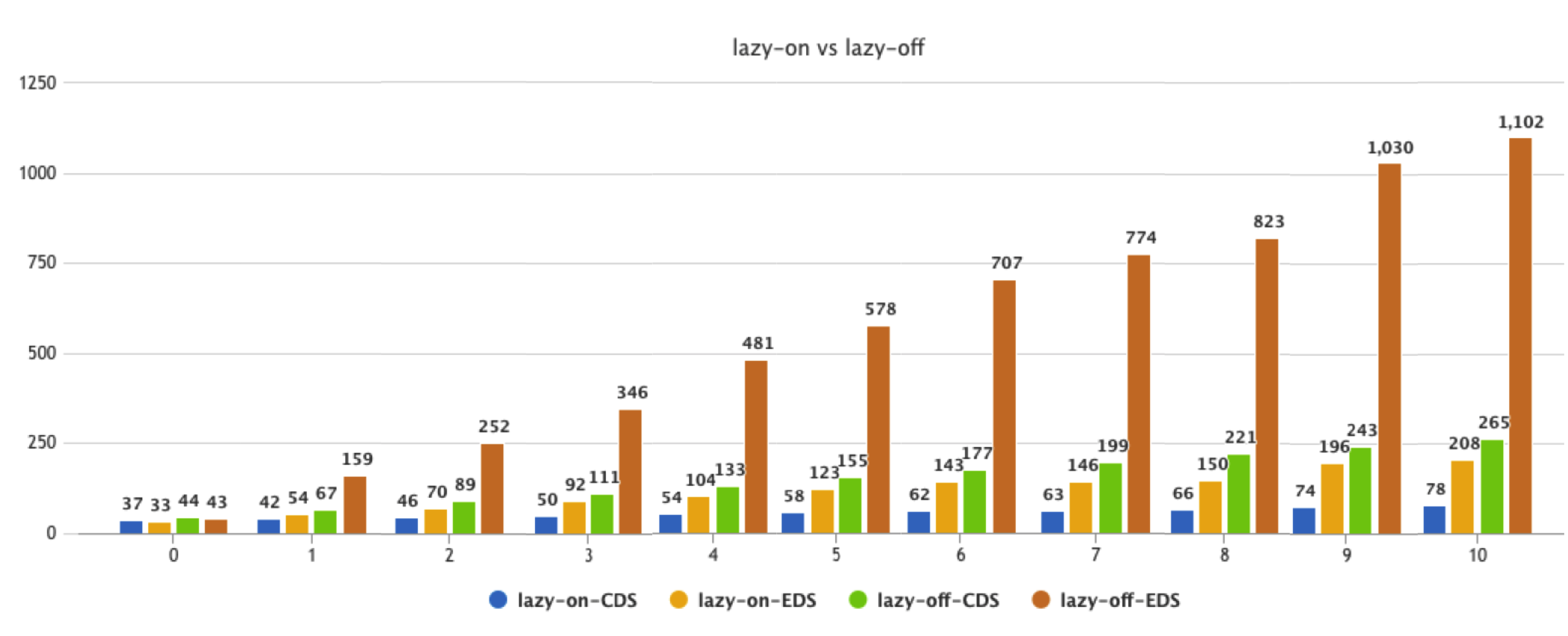

First, CDS and EDS. Each group of data in the figure corresponds to one increment in the number of load-service namespaces. Each group contains four values: the first two are CDS and EDS with on-demand loading enabled, and the last two are CDS and EDS with it disabled.

Next is memory. The green data shows Envoy memory consumption with on-demand loading enabled, and the red data shows it disabled. For a mesh of 900 pods, Envoy memory drops by 14 MB, a reduction of about 40%; for a mesh of 10,000 pods, it drops by about 150 MB, a reduction of about 60%.
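The absolute reduction $\Delta M$ and the reduction ratio $r$ together imply the approximate per-Envoy memory with on-demand loading disabled ($M_{\text{off}}$) and enabled ($M_{\text{on}}$). Because the percentages are rounded, these are rough estimates only:

$$
\Delta M = r \cdot M_{\text{off}} \;\Longrightarrow\; M_{\text{off}} = \frac{\Delta M}{r}, \qquad M_{\text{on}} = M_{\text{off}} - \Delta M
$$

$$
900\ \text{pods:}\quad M_{\text{off}} \approx \frac{14\ \text{MB}}{0.40} = 35\ \text{MB}, \qquad M_{\text{on}} \approx 21\ \text{MB}
$$

$$
10{,}000\ \text{pods:}\quad M_{\text{off}} \approx \frac{150\ \text{MB}}{0.60} = 250\ \text{MB}, \qquad M_{\text{on}} \approx 100\ \text{MB}
$$

The implied 250 MB baseline is roughly consistent with the single-Envoy figure quoted earlier for a mesh of about 10,000 instances.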

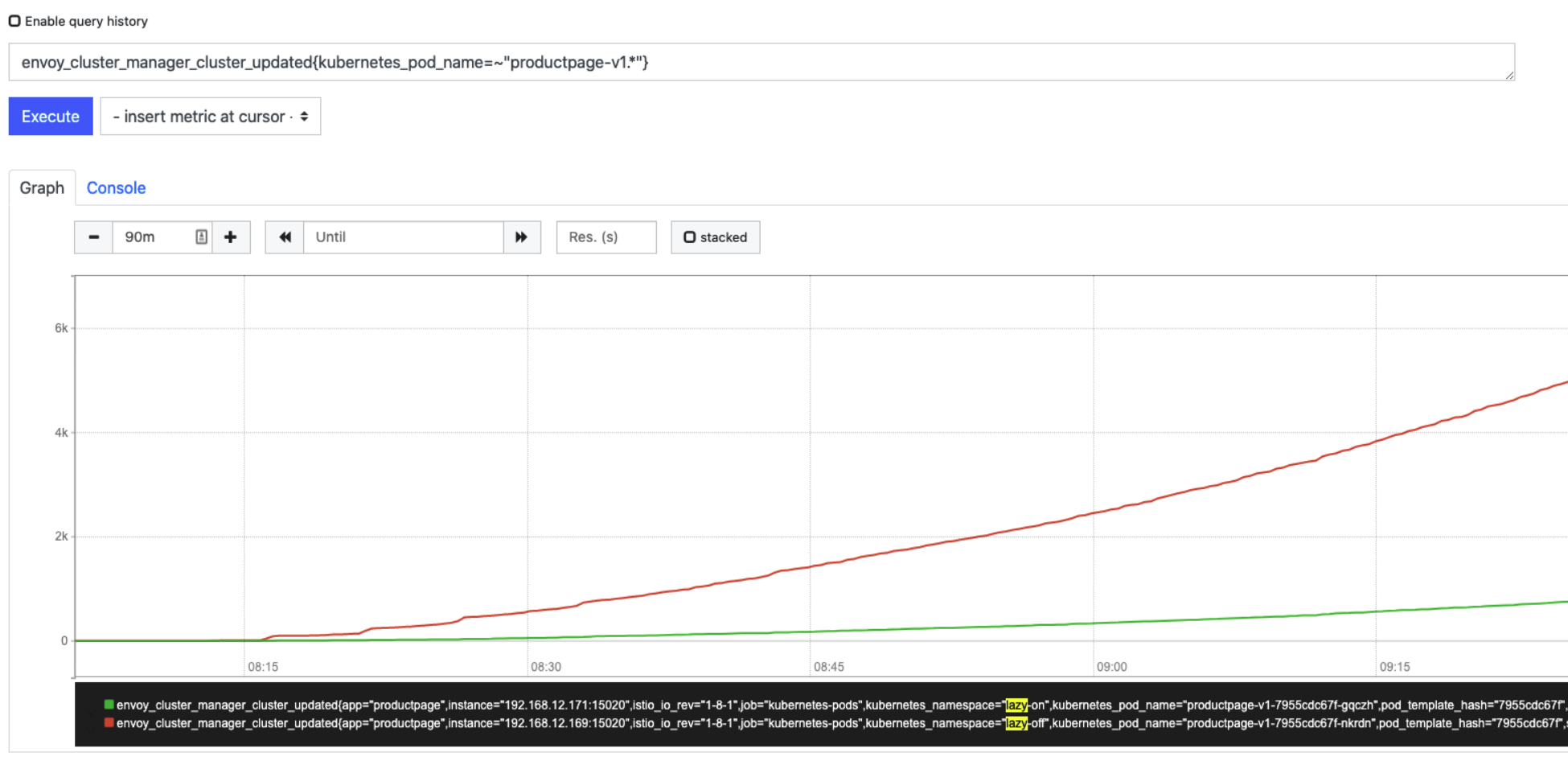

Because service visibility is restricted, Envoy no longer receives the full set of xDS updates. The figure compares the number of CDS updates Envoy received during the test period: with on-demand loading enabled, the count dropped from 6,000 to 1,000.

Summary

Lazy xDS is open source on GitHub; see the lazyxds README to learn how to use it.

Lazy xDS is still evolving. Planned features include support for multi-cluster mode and on-demand loading of ServiceEntry resources.

To learn more about Aeraki, visit its GitHub homepage: https://github.com/aeraki-framework/aeraki

About us

For more cloud-native case studies and knowledge, follow our official account of the same name, Tencent Cloud Native.
