Setting up a Hadoop development environment

Thanks to the reference article: http://www.cnblogs.com/huligong1234/p/4137133.html

1. Software preparation

JDK: jdk-7u80-windows-x64.exe

http://www.oracle.com/technetwork/java/javase/archive-139210.html

Eclipse: eclipse-jee-mars-2-win32-x86_64.zip

http://www.eclipse.org/downloads/

Hadoop: hadoop-2.6.4.tar.gz

Hadoop-Src: hadoop-2.6.4-src.tar.gz

http://hadoop.apache.org/releases.html

Ant: apache-ant-1.9.6-bin.zip

http://ant.apache.org/bindownload.cgi

Hadoop-Common: hadoop2.6(x64)V0.2.zip (for Hadoop 2.4 and later), or hadoop-common-2.2.0-bin-master.zip (for 2.2)

2.2: https://github.com/srccodes/hadoop-common-2.2.0-bin

2.6: http://download.csdn.net/detail/myamor/8393459

Hadoop-eclipse-plugin: hadoop-eclipse-plugin-2.6.0.jar

https://github.com/winghc/hadoop2x-eclipse-plugin

2. Build the environment

1. Install JDK

Run jdk-7u80-windows-x64.exe and accept the default option at each step.

2. Configure JDK, Ant, Hadoop environment variables

Unzip hadoop-2.6.4.tar.gz, apache-ant-1.9.6-bin.zip, hadoop2.6(x64)V0.2.zip, and hadoop-2.6.4-src.tar.gz to any location on the local disk.

Set the system environment variables JAVA_HOME, ANT_HOME, and HADOOP_HOME to the corresponding install directories, and add the bin subdirectory of each to the Path variable.

Copy hadoop.dll and winutils.exe from the extracted hadoop2.6(x64)V0.2 folder into the HADOOP_HOME/bin directory.
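A quick way to confirm the step above is to check the variables and the two copied files from Java. The following is only a minimal sketch: the class name HadoopHomeCheck and the method missingNativeFiles are made up for this illustration and are not part of Hadoop.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of Hadoop: verifies the setup described above.
final class HadoopHomeCheck {
    // The two native files the text says to copy into HADOOP_HOME/bin.
    static final String[] REQUIRED = {"hadoop.dll", "winutils.exe"};

    /** Returns the names of required files that are missing from hadoopHome/bin. */
    static List<String> missingNativeFiles(Path hadoopHome) {
        List<String> missing = new ArrayList<>();
        Path bin = hadoopHome.resolve("bin");
        for (String name : REQUIRED) {
            if (!Files.isRegularFile(bin.resolve(name))) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Print the three variables configured in this step.
        for (String var : new String[] {"JAVA_HOME", "ANT_HOME", "HADOOP_HOME"}) {
            String value = System.getenv(var);
            System.out.println(var + " = " + (value == null ? "(not set)" : value));
        }
        String hadoopHome = System.getenv("HADOOP_HOME");
        if (hadoopHome != null) {
            System.out.println("Missing from bin: " + missingNativeFiles(Paths.get(hadoopHome)));
        }
    }
}
```

An empty "Missing from bin" list suggests both files are in place. Environment variables changed while Eclipse is open are usually only picked up after Eclipse is restarted.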

3. Configure Eclipse

Copy hadoop-eclipse-plugin-2.6.0.jar to the plugins directory of Eclipse.

Start Eclipse and set up the workspace. If the plugin is installed successfully, you can see the following after startup:

4. Configure Hadoop

Open "Window" - "Preferences" - "Hadoop Map/Reduce" and set it to the HADOOP_HOME directory.

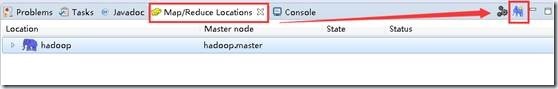

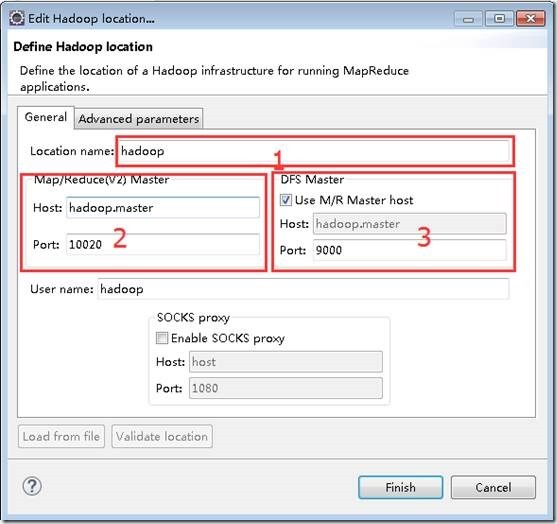

Open "Window" - "Show View" - "MapReduce Tools" - "Map/Reduce Locations", create a Location, and configure it as follows.

Position 1 is the name of the configuration, which is arbitrary.

Position 2 is the mapreduce.jobhistory.address setting from the mapred-site.xml file.

Position 3 is the fs.default.name setting from the core-site.xml file.

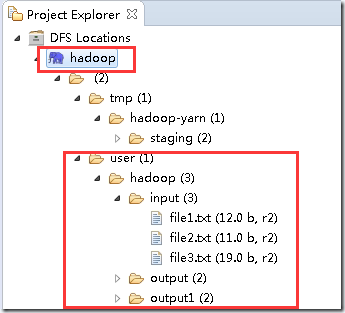
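To find the values for positions 2 and 3, you can open the site files by hand or read them programmatically. Below is a minimal sketch that uses only the JDK's XML parser. The class name SiteXmlReader is hypothetical, and the sample XML in main is an assumed excerpt that reuses the hdfs://hadoop.master:9000 address from this article; your core-site.xml may differ. Hadoop 2.x also accepts fs.defaultFS as the newer name for fs.default.name.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper, not part of Hadoop: reads one property from a *-site.xml document.
final class SiteXmlReader {
    /** Returns the <value> of the <property> whose <name> matches, or null if absent. */
    static String property(String xml, String name) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance()
                .newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        NodeList props = doc.getElementsByTagName("property");
        for (int i = 0; i < props.getLength(); i++) {
            Element p = (Element) props.item(i);
            if (name.equals(text(p, "name"))) {
                return text(p, "value");
            }
        }
        return null;
    }

    // Trimmed text of the first child element with the given tag, or null if there is none.
    private static String text(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    public static void main(String[] args) throws Exception {
        // Assumed sample content; replace with the text of your own core-site.xml.
        String coreSite =
                "<configuration>"
              + "<property><name>fs.default.name</name>"
              + "<value>hdfs://hadoop.master:9000</value></property>"
              + "</configuration>";
        System.out.println(property(coreSite, "fs.default.name"));
    }
}
```

The host and port of the returned value are what go into the DFS Master fields of the Location dialog.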

After configuring the above information, you can see the following content in the Project Explorer, which means the configuration is successful.

The above figure shows that the configured hdfs information has been read. There are a total of 3 folders input, output, and output1, and there are 3 files in the input directory.

Note: The above content was created in my own environment; what you see may differ.

The content can be created by running the following on hadoop.master:

hadoop fs -mkdir input    # create the input folder

hadoop fs -put $localFilePath input    # upload local files to the input directory of HDFS

3. Create the sample program

1. Create a WordCount class

Open Eclipse, create a Map/Reduce Project, and create a class named org.apache.hadoop.examples.WordCount.

Copy the contents of WordCount.java, found under hadoop-mapreduce-project\hadoop-mapreduce-examples\src\main\java\org\apache\hadoop\examples in hadoop-2.6.4-src.tar.gz, into the newly created class.
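For readers who want to see what the job computes before running it on the cluster, here is a plain-JDK sketch of the same idea. It is not the Hadoop WordCount.java and does not use the MapReduce API; the class name WordCountSketch is made up for this illustration. It only mirrors the logic: split each line into tokens (the map step) and sum the occurrences per word (the reduce step).

```java
import java.util.Arrays;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Illustration only: mirrors the word-count logic without any Hadoop classes.
final class WordCountSketch {
    /** Counts whitespace-separated tokens across all lines, sorted by word. */
    static Map<String, Integer> count(Iterable<String> lines) {
        Map<String, Integer> totals = new TreeMap<>();
        for (String line : lines) {
            // "Map" step: split the line into tokens, each counting as 1.
            StringTokenizer tokens = new StringTokenizer(line);
            while (tokens.hasMoreTokens()) {
                String word = tokens.nextToken();
                // "Reduce" step: add up the 1s for each distinct word.
                Integer old = totals.get(word);
                totals.put(word, old == null ? 1 : old + 1);
            }
        }
        return totals;
    }

    public static void main(String[] args) {
        // prints {hadoop=1, hello=2, world=1}
        System.out.println(count(Arrays.asList("hello hadoop", "hello world")));
    }
}
```

In the real job, the map and reduce steps run as separate tasks over the HDFS input files, and the per-word totals are written to the output directory.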

2. Configure log4j

In the src directory, create a log4j.properties file with the following content:

log4j.rootLogger=debug,stdout,R
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%5p - %m%n
log4j.appender.R=org.apache.log4j.RollingFileAppender
log4j.appender.R.File=mapreduce_test.log
log4j.appender.R.MaxFileSize=1MB
log4j.appender.R.MaxBackupIndex=1
log4j.appender.R.layout=org.apache.log4j.PatternLayout
log4j.appender.R.layout.ConversionPattern=%p %t %c - %m%n
log4j.logger.com.codefutures=DEBUG

3. Configure the run arguments

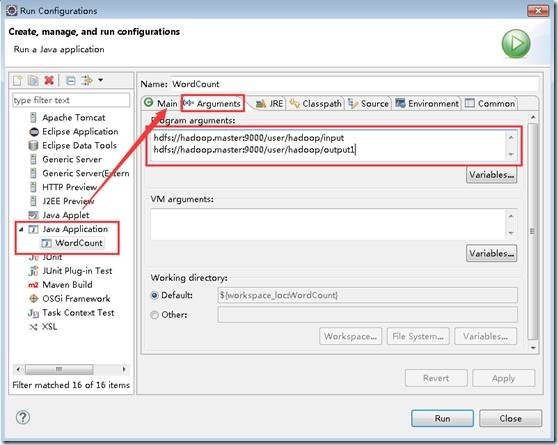

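Choose "Run" - "Run Configurations" and, under "Arguments", enter "hdfs://hadoop.master:9000/user/hadoop/input hdfs://hadoop.master:9000/user/hadoop/output1".

The format is "input-path output-path". The output path must be empty or not yet created; otherwise an error is reported.

As shown below:

Note: If there is no "WordCount" entry under "Java Application", right-click "Java Application" and choose New to create one.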

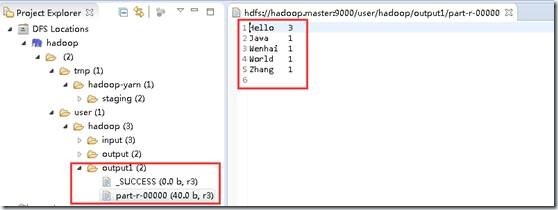
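A typo in the two arguments is an easy mistake to make, so it can help to check them before launching the job. The sketch below uses only java.net.URI from the JDK. The class name RunArgsCheck is hypothetical, and it only checks the shape of the arguments; it cannot tell whether the output directory already exists on HDFS.

```java
import java.net.URI;

// Hypothetical helper: checks the "input-path output-path" argument pair.
final class RunArgsCheck {
    /** Validates that exactly two arguments were given and returns them as URIs. */
    static URI[] parse(String[] args) {
        if (args.length != 2) {
            throw new IllegalArgumentException("Usage: <input path> <output path>");
        }
        // URI.create throws IllegalArgumentException if an argument is not a valid URI.
        return new URI[] {URI.create(args[0]), URI.create(args[1])};
    }

    public static void main(String[] args) {
        // The same two arguments used in this article.
        URI[] paths = parse(new String[] {
            "hdfs://hadoop.master:9000/user/hadoop/input",
            "hdfs://hadoop.master:9000/user/hadoop/output1"
        });
        for (URI p : paths) {
            System.out.println(p.getScheme() + " | " + p.getHost() + " | "
                    + p.getPort() + " | " + p.getPath());
        }
    }
}
```

The host and port printed here should match the DFS Master settings of the Location configured earlier.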

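4. Run and check the result

Once configured, run the program. The following console output indicates that it ran successfully:

INFO - Job job_local1914346901_0001 completed successfully

INFO - Counters: 38

Under "DFS Locations", refresh the "hadoop" location you just created and check whether the output directory of this job contains output files.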

4. FAQ

1. Problem 1: NativeCrc32.nativeComputeChunkedSumsByteArray error

[Problem] Starting the sample program throws a nativeComputeChunkedSumsByteArray exception. The console log shows:

Exception in thread "main" java.lang.UnsatisfiedLinkError: org.apache.hadoop.util.NativeCrc32.nativeComputeChunkedSumsByteArray(II[BI[BIILjava/lang/String;JZ)V

at org.apache.hadoop.util.NativeCrc32.nativeComputeChunkedSumsByteArray(Native Method)

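[Cause] The hadoop.dll file is the wrong version. Hadoop versions before and after 2.4 need different files, so you must pick the build that matches your Hadoop version (and your operating system) and replace the file in both Hadoop/bin and C:\windows\system32.

[Fix] Download the matching file and replace the existing copies. http://download.csdn.net/detail/myamor/8393459 (2.6.X_64bit)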
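Because the file has to be replaced in more than one place, it helps to list every copy of hadoop.dll the JVM could load. The following is a minimal sketch using only the JDK; the class name NativeLibLocator is made up for this illustration. It scans the directories in java.library.path, which on Windows normally includes the Path entries and the Windows system directories.

```java
import java.io.File;
import java.nio.file.Files;
import java.nio.file.InvalidPathException;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical helper: lists every copy of a native library on a search path.
final class NativeLibLocator {
    /** Returns each file named fileName found in the directories of searchPath. */
    static List<Path> find(String searchPath, String fileName) {
        List<Path> hits = new ArrayList<>();
        for (String dir : searchPath.split(Pattern.quote(File.pathSeparator))) {
            if (dir.isEmpty()) {
                continue;
            }
            try {
                Path candidate = Paths.get(dir).resolve(fileName);
                if (Files.isRegularFile(candidate)) {
                    hits.add(candidate);
                }
            } catch (InvalidPathException ignored) {
                // Skip malformed entries (for example, entries containing stray quotes).
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        // A 64-bit JVM needs the 64-bit hadoop.dll.
        System.out.println("JVM architecture: " + System.getProperty("os.arch"));
        for (Path p : find(System.getProperty("java.library.path"), "hadoop.dll")) {
            System.out.println("Found: " + p);
        }
    }
}
```

If more than one copy is listed, replace all of them with the matching version so that an outdated file earlier on the path is not picked up first.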