[From] http://blog.didispace.com/jenkins-pipeline-top-10-action/

The Jenkins Pipeline plugin is a game changer for Jenkins users. Based on the Domain-Specific Language (DSL) in Groovy, the Pipeline plugin enables Pipelines to be scripted and provides a very powerful way to develop complex, multi-step DevOps Pipelines. This article documents some of the best practices and deprecated code examples and instructions for writing Jenkins Pipelines.

1. To use the real Jenkins Pipeline

Don't use old plugins like Build Pipeline plugin or Buildflow plugin. Instead use the real Jenkins Pipiline plugin suite.

This is because the Pipeline plugin is a step of changing and improving the underlying work itself. Unlike Freestyle tasks, Pipeline is resilient to Jenkins host restarts and has built-in functionality that can replace many of the older plugins used to build multi-step, complex delivery pipelines.

For more information on getting started, visit https://jenkins.io/solutions/pipeline/

2. Develop your Pipeline as you would code

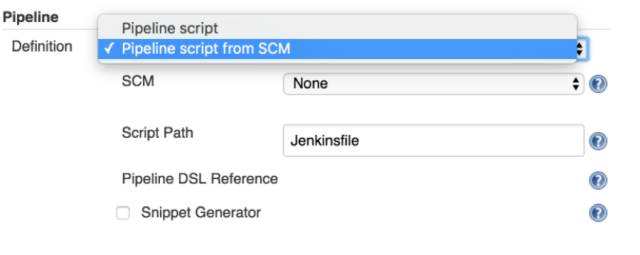

Using this function allows you to store the Pipeline description code in the SCM in the form of Jenkinsfile like other software, and then perform version testing.

Doing so treats Pipeline as code, enforces good practice, and opens up a new area of functionality such as multi-branch, pull request detection, and organizational scanning of GitHub and BitBucket.

The pipeline script should also be called the default name: Jenkinsfile and start with #!groovy script so that IDEs, GitHub and other tools will recognize it as Groovy and enable code highlighting.

3. To work within the Stage block

Any non-installation jobs within the Pipeline should be executed within a Stage block.

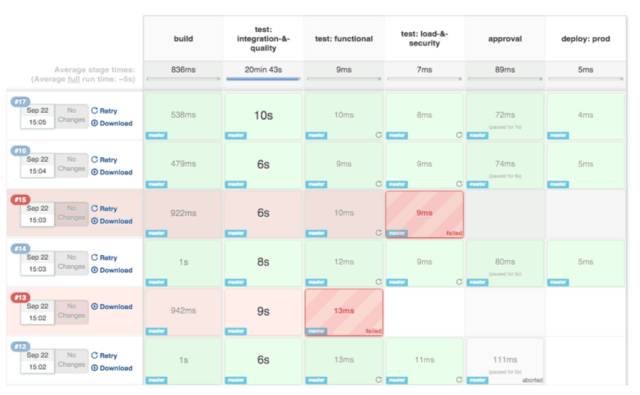

This is because Stage is a logical division of Pipeline. The work can be divided into stages, and the pipeline can be divided into clear steps.

E.g:

stage 'build' |

Even better: The Pipeline Stage View plugin treats each Stage as the only segment of the Pipeline.

4. Execute the actual job inside the node

Substantial work in a Pipeline should all happen within a Node block.

Because by default, the Jenkinsfile script itself runs on the Jenkins host, using a lightweight executor that is expected to use very few resources. During any substantial job process, such as cloning code from a Git server or compiling a Java application, it should take advantage of Jenkins' distributed build capabilities, running in agent nodes.

E.g:

stage 'build' |

5. Do a parallel Step

Pipeline provides a straightforward syntax for dividing your Pipeline into parallel Steps.

This is because assigning work in parallel will make your Pipeline run faster and get feedback from developers and other members of the team faster.

E.g:

parallel 'shifting':{ |

Tip : Use the Parallel Test Executor plugin to let Jenkins automatically determine how to run xUnit compliant tests in the best parallel pool! You can read more about parallel test execution on the CloudBees blog.

6. Using Node in Parallel Step

Why do we get and use a Node in a parallel Step? This is because parallelization has a major advantage: more substantial work can be done at the same time (see Best Practice 4)! In general, we should want to get a Node in a parallel branch of the Pipeline to speed up concurrent builds.

E.g:

parallel 'integration-tests':{ |

7. Input in the Timeout code block of Step

Pipeline has a simple mechanism, that is, any Step in the Pipeline can be timed. As a best practice, we should always plan to use Input inside a Timeout block.

This is for a healthy Pipeline cleanup. The Input in the timeout period will be allowed to be cleaned up (ie aborted) if no approval has occurred within the given window.

E.g:

timeout(time:5, unit:'DAYS') { |

8. File staging is preferred over archiving

Before staging capabilities were added to the Pipeline DSL, archiving was the best way to share files between Nodes or Stages in a Pipeline. If you only need to share files between the Stages and Nodes of the pipeline, you should use staging/fetching instead of archiving.

This is because staging and fetching are designed to share files, such as an application's source code, between Stage and Node. Archives, on the other hand, are designed for long-term file storage (eg, intermediate binaries you build).

E.g:

stash excludes: 'target/', name: 'source' |

9. Don't use Input inside Node blocks

While it's possible to use an Input statement in a node block, we definitely shouldn't.

Because the Input element pauses the Pipeline execution pending approval - either automatically or manually. These approvals will naturally take some time. On the other hand, when stopped due to Input, the node element acquires and holds locks on the workspace and resource-consuming tasks, which will be an expensive resource.

So, create the Input outside of Node.

E.g:

stage 'deployment' |

10. Don't use the Env global variable to set environment variables

Although you can edit the Env global variable to define some environment settings, we should use the withEnv syntax.

The Env variable is a global variable, so we discourage changing it directly, because it changes the global environment, so it is recommended to use the withEnv syntax.

E.g:

withEnv(["PATH+MAVEN=${tool 'm3'}/bin"]) { |

本译文转载自:公众号【JFrog杰蛙DevOPs】