The elastic scaling of cloud platforms can be roughly divided into horizontal scaling (scale in/out) and vertical scaling (scale up/down). Vertical scaling modifies the configuration of an existing cloud server: the disk size, the computing power of the CPU, the memory size, and the traffic limits of the network card and IP. Upgrading the configuration of a traditional machine usually requires shutting it down. In a virtualized environment it is technically and operationally easier to upgrade the configuration online, so customers can still upgrade when the server load exceeds their estimate, and service availability is preserved. Huayun cloud host products will combine QEMU memory and CPU hot-plug technology to upgrade systems online in the production environment without interrupting business.

This article gives a brief introduction to using QEMU's virtual machine memory hot-plug function. QEMU introduced memory hot-plug support in 2.1 and memory hot-unplug support in 2.4. The corresponding support in Libvirt was introduced in 1.2.14. This article mainly covers hot-plug.

Environment preparation

Here CentOS 7 is used as the host environment. The QEMU version in its default repositories is currently 1.5.3 and the Libvirt version is 1.2.8, so both need to be upgraded.
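The version requirements above can be checked with a small comparison helper. This is a minimal sketch, assuming GNU sort with the -V option is available; the function name version_ge is made up for illustration and is not part of QEMU or Libvirt.

```shell
# Return success if version $1 is greater than or equal to version $2.
# Relies on the -V (version sort) option of GNU sort.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Versions shipped with CentOS 7 versus the minimum needed for memory hot-plug.
version_ge 1.5.3 2.1 || echo "QEMU 1.5.3 is too old for memory hot-plug"
version_ge 1.2.8 1.2.14 || echo "Libvirt 1.2.8 is too old for memory hot-plug"
```

Both messages are printed for the stock CentOS 7 versions. The installed Libvirt version can be read with libvirtd --version; the QEMU binary on CentOS is typically /usr/libexec/qemu-kvm, which also accepts --version.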

Upgrading QEMU

You can use the SRPM package provided by Red Hat to upgrade to 2.1.2. Execute the following commands to build the RPM package:

Listing 1. Building the qemu-kvm RPM package

yum -y install rpm-build
wget http://ftp.redhat.com/pub/redhat/linux/enterprise/7Server/en/RHEV/SRPMS/qemu-kvm-rhev-2.1.2-23.el7_1.9.src.rpm
rpm -ivh qemu-kvm-rhev-2.1.2-23.el7_1.9.src.rpm
yum -y groupinstall "Development Tools"
yum -y install zlib-devel libaio-devel libcurl-devel libssh2-devel libseccomp-devel lzo-devel snappy-devel numactl-devel pixman-devel libcap-devel brlapi-devel check-devel librdmacm-devel libpng-devel libjpeg-devel nss-devel libuuid-devel bluez-libs-devel glusterfs-devel glusterfs-api-devel librbd1-devel librados2-devel SDL-devel gnutls-devel pciutils-devel pulseaudio-libs-devel libiscsi-devel ncurses-devel libattr-devel libusbx-devel usbredir-devel spice-server-devel systemtap-sdt-devel cyrus-sasl-devel texi2html texinfo iasl
rpmbuild -bb ~/rpmbuild/SPECS/qemu-kvm.spec

Install the RPM package after the build is complete:

Listing 2. Installing qemu-kvm

yum -y install ipxe-roms-qemu seabios-bin seavgabios-bin sgabios-bin
rpm -ivh ~/rpmbuild/RPMS/x86_64/qemu-*

Upgrading Libvirt

The libvirt package provided by CBS is used here. First, add the CBS repository by creating the file /etc/yum.repos.d/cbs.repo with the following contents:

Listing 3. CBS libvirt repo configuration

[virt7-xen-46-testing]
name=virt7-xen-46-testing
baseurl=http://cbs.centos.org/repos/virt7-xen-46-testing/x86_64/os/
enabled=1
gpgcheck=0

Then install libvirt:

Listing 4. Installing libvirt

yum -y install libvirt

Using memory hot-plug

In general, QEMU virtual machines are created through Libvirt. To support memory hot-plug, the maxMemory setting must be specified when the virtual machine is created, and at least one NUMA node must be defined (a NUMA node inside the virtual machine, not on the host):

Listing 5. Virtual machine maxMemory and numa configuration

<maxMemory slots='32' unit='KiB'>67108864</maxMemory>
...
<cpu mode='host-model'>
  <model fallback='allow'/>
  <numa>
    <cell id='0' cpus='0' memory='4194304' unit='KiB'/>
  </numa>
</cpu>

In Listing 5, the value of <maxMemory> (64 GiB in this case) is the upper limit that the virtual machine's memory can reach through hot-plug, including its initial memory. The slots attribute is the number of DIMM slots; one memory device can be inserted into each slot at runtime, and the upper limit is 255. The <numa> element specifies the NUMA topology inside the virtual machine; in Listing 5 the virtual machine has a single NUMA node.

A virtual machine created according to these requirements can have memory devices inserted at runtime. To do so, first create a device description file:
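Because these sizes are written in KiB, a quick conversion helps avoid unit mistakes. The following is a minimal shell sketch; the helper name gib_to_kib is made up for illustration.

```shell
# Convert a size in GiB to KiB (1 GiB = 1024 * 1024 KiB).
gib_to_kib() {
    echo $(( $1 * 1024 * 1024 ))
}

# Upper limit for <maxMemory>: 64 GiB expressed in KiB.
gib_to_kib 64   # prints 67108864
# Initial memory of the single NUMA cell: 4 GiB expressed in KiB.
gib_to_kib 4    # prints 4194304
```

With these values, up to 60 GiB can be added at runtime on top of the 4 GiB the virtual machine starts with.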

Listing 6. Memory device configuration format

<memory model='dimm'>
  <target>
    <size unit='KiB'>524288</size>
    <node>0</node>
  </target>
</memory>

The <size> element in Listing 6 specifies the memory capacity of the device (512 MiB in this case), and the <node> element specifies which NUMA node of the virtual machine the device is plugged into.
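A device description like this can also be generated for an arbitrary size. The sketch below is one possible way to do it in shell; the function name make_dimm_xml and its arguments are hypothetical, and the output file name memdev.xml is just an example.

```shell
# Print a DIMM device description for libvirt.
# $1: size in MiB, $2: guest NUMA node (defaults to 0).
make_dimm_xml() {
    size_kib=$(( $1 * 1024 ))
    node=${2:-0}
    cat <<EOF
<memory model='dimm'>
  <target>
    <size unit='KiB'>${size_kib}</size>
    <node>${node}</node>
  </target>
</memory>
EOF
}

# Write a 512 MiB device for guest NUMA node 0 to memdev.xml.
make_dimm_xml 512 0 > memdev.xml
```

Computing the KiB value from MiB avoids off-by-one sizes such as 524287, which is not a whole number of MiB.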

Then use the attach-device command to attach the device (assuming the device description file above is named memdev.xml and the virtual machine is named testdom):

Listing 7. Attaching the device

virsh attach-device --live testdom memdev.xml

Finally, depending on the operating system running in the virtual machine, some operations may be needed inside it to activate the newly inserted memory. These are described separately for Linux and Windows below.

Linux virtual machine

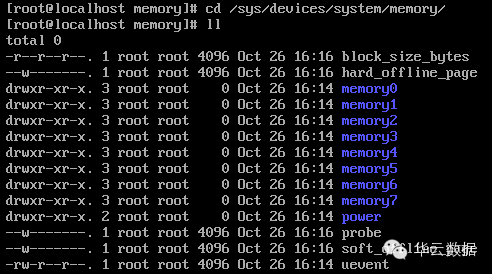

Full support for memory hot-plug in Linux requires a newer kernel. Taking CentOS 7 as an example, the test virtual machine has 1 GiB of memory and 8 memory blocks:

Figure 1. Memory block before inserting memory

Figure 2. Total memory before inserting memory

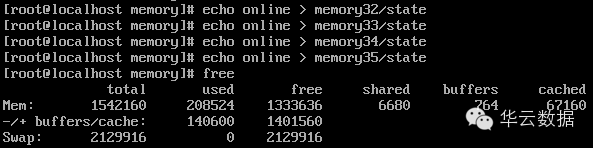

After inserting new memory using attach-device, you can see 4 more memory blocks:

Figure 3. Added memory block
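The block counts in this example follow from simple arithmetic: 1 GiB spread over 8 blocks gives 128 MiB per block, so a 512 MiB device adds 4 blocks. A minimal sketch for checking this in the guest, assuming the standard sysfs layout under /sys/devices/system/memory; the function name count_memory_blocks is made up for illustration.

```shell
# Count the memoryN block directories under a sysfs memory directory.
# $1: directory to scan (defaults to /sys/devices/system/memory).
count_memory_blocks() {
    root=${1:-/sys/devices/system/memory}
    n=0
    for d in "$root"/memory[0-9]*; do
        [ -d "$d" ] && n=$(( n + 1 ))
    done
    echo "$n"
}

# Expected growth for the example in this article.
block_mib=$(( 1024 / 8 ))      # 1 GiB across 8 blocks: 128 MiB per block
echo $(( 512 / block_mib ))    # blocks added by a 512 MiB device: prints 4
```

Running count_memory_blocks inside the guest before and after attach-device should show the difference. The block size is also exposed, in hexadecimal, in /sys/devices/system/memory/block_size_bytes.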

The inserted memory needs to be activated manually. After activation, you can see that the total system memory has changed:

Figure 4. Active memory and total memory change
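Manual activation means writing online to the state file of each new memory block in sysfs. The following is a minimal sketch of that step, assuming the standard layout under /sys/devices/system/memory; the function name online_memory_blocks is hypothetical.

```shell
# Bring every offline memory block under the given sysfs directory online.
# $1: memory sysfs directory (defaults to /sys/devices/system/memory).
online_memory_blocks() {
    root=${1:-/sys/devices/system/memory}
    for state in "$root"/memory*/state; do
        [ -f "$state" ] || continue
        if [ "$(cat "$state")" = "offline" ]; then
            # Writing "online" asks the kernel to activate the block.
            echo online > "$state"
        fi
    done
}
```

Run online_memory_blocks as root inside the guest after attaching the device, then compare the total reported by free -m with the value before activation.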

To have the system bring hot-plugged memory online automatically, you can add a udev rule by creating the file /etc/udev/rules.d/99-hotplug-memory.rules with the following contents:

Listing 8. udev rules for automatic online memory

# automatically online hot-plugged memory
ACTION=="add", SUBSYSTEM=="memory", ATTR{state}="online"

It will take effect immediately after the file is saved.

In CentOS 6, the relevant option is already enabled when the kernel is compiled, so inserted memory is brought online automatically. However, if the kernel version is not recent enough, the system will not recognize the memory device after the virtual machine is restarted. Upgrading to the latest version of the 2.6.32 kernel avoids this problem. Alternatively, you can probe the memory block manually (see the linux memory-hotplug.txt entry in the References section), but the memory start address must be correct; otherwise the system will crash when the memory is brought online.

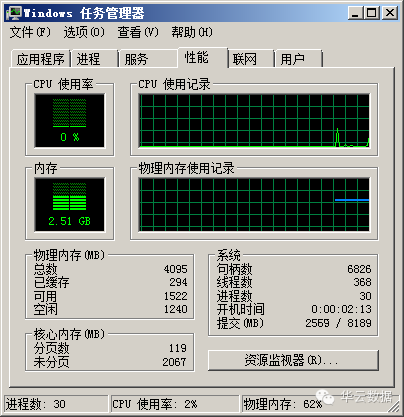

Windows virtual machine

The Windows version tested in this article is Windows 2008 R2 Datacenter x64. After the memory device is attached with the attach-device command, the newly added memory is activated automatically, with no additional operations. Figures 5 and 6 show the total memory before and after the device is attached (see the total physical memory item). CPU usage can be seen to rise briefly when the memory device is inserted and then fall back.

Figure 5. Before inserting the memory device

Figure 6. After inserting the memory device

The Windows versions that currently support memory hot-plugging are:

Windows Server 2008 R2, Enterprise Edition and Datacenter Edition

Windows Server 2008, Enterprise Edition and Datacenter Edition

Windows Server 2003, Enterprise Edition and Datacenter Edition

No Windows system supports memory hot-unplug operations.

References

qemu memory-hotplug.txt: QEMU's description of memory hot-plug.

Domain XML Format: the virtual machine configuration elements <maxMemory> and <memory> are documented here.

linux memory-hotplug.txt: memory hot-plug support in Linux; see Section 4 for the part about memory block probes.

Operating system support for hot-add memory in Windows Server: the official statement of which Windows versions support hot-plug.

vSphere Memory Hot Add/CPU Hot Plug: a table of CPU and memory hot-plug support for different versions of Windows.

vSphere 5.1: Hot add RAM and CPU: another table of CPU and memory hot-plug support for different versions of Windows.

Decreased performance, driver load failures, or system instability may occur on Hot Add Memory systems that are running Windows Server 2003: known issues with memory hot-plug on Windows Server 2003.

[Qemu-devel] [PATCH 00/35] pc: ACPI memory hotplug: community discussion of memory hot-plug.