What is gRPC?

gRPC is a high-performance, open-source, general-purpose RPC framework designed with mobile and HTTP/2 in mind. Currently, C, Java and Go implementations are available: grpc, grpc-java and grpc-go. The C implementation supports C, C++, Node.js, Python, Ruby, Objective-C, PHP and C#.

gRPC is built on the HTTP/2 standard, which brings features such as bidirectional streaming, flow control, header compression, and multiplexing of requests over a single TCP connection. These features help it perform better on mobile devices and save power and space.

Key point

Designed on the HTTP/2 standard

Because of this, gRPC naturally inherits the most powerful advantage of HTTP/2: multiplexing.

Multiplexing allows multiple request-response messages to be issued simultaneously over a single HTTP/2 connection.
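To make the idea concrete, here is a minimal sketch of how several streams can share one connection. It is a toy model, not a real HTTP/2 implementation: it only uses the 9-byte frame header layout (24-bit length, 8-bit type, 8-bit flags, 31-bit stream ID) and DATA frames, and it skips HEADERS frames, flow control and header compression. The function names (`encode_frame`, `multiplex`, `demultiplex`) and the payloads are made up for illustration.

```python
import struct
from collections import defaultdict
from itertools import zip_longest

DATA = 0x0        # HTTP/2 DATA frame type
END_STREAM = 0x1  # flag marking the last frame of a stream


def encode_frame(stream_id, payload, end_stream=False):
    """Pack one frame: 24-bit length, type, flags, 31-bit stream ID, payload."""
    length = len(payload)
    flags = END_STREAM if end_stream else 0
    header = struct.pack(">BHBBI", length >> 16, length & 0xFFFF,
                         DATA, flags, stream_id & 0x7FFFFFFF)
    return header + payload


def multiplex(payloads, chunk_size=4):
    """Interleave the chunks of several streams round-robin into one byte stream."""
    per_stream = []
    for sid, body in payloads.items():
        parts = [body[i:i + chunk_size] for i in range(0, len(body), chunk_size)]
        per_stream.append([(sid, p, i == len(parts) - 1) for i, p in enumerate(parts)])
    wire = b""
    for group in zip_longest(*per_stream):
        for item in group:
            if item is not None:
                sid, part, last = item
                wire += encode_frame(sid, part, last)
    return wire


def demultiplex(wire):
    """Walk the byte stream frame by frame and rebuild each stream's payload."""
    bodies = defaultdict(bytes)
    order = []  # stream ID of each frame, in arrival order
    pos = 0
    while pos < len(wire):
        hi, lo, _ftype, _flags, sid = struct.unpack_from(">BHBBI", wire, pos)
        length = (hi << 16) | lo
        pos += 9
        bodies[sid] += wire[pos:pos + length]
        order.append(sid)
        pos += length
    return dict(bodies), order


# Three hypothetical messages; client-initiated stream IDs are odd in HTTP/2.
payloads = {1: b"GET /index", 3: b"GET /app.js", 5: b"GET /a.css"}
wire = multiplex(payloads)
bodies, order = demultiplex(wire)
print(order)               # frames from different streams alternate on the wire
print(bodies == payloads)  # each stream is still reassembled intact
```

The point of the sketch is that no stream has to wait for another to finish: frames are tagged with a stream ID, so the receiver can sort them out again regardless of how they were interleaved.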

So what's in it for us?

The benefits are obvious:

For users

HTTP/1.1 cannot multiplex requests, and browsers limit the number of simultaneous connections (the limit differs from browser to browser). Requests beyond that limit are simply blocked, so a website that needs to load many resources opens very slowly. HTTP/2 greatly reduces resource latency because multiple request-response messages travel within the same connection.
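A back-of-the-envelope calculation shows the size of the effect. This is a deliberately simplified model: it assumes a browser limit of 6 connections per host and 100 concurrent HTTP/2 streams (both are assumptions chosen for illustration, not values taken from this article), and it ignores bandwidth, server processing time and connection setup.

```python
import math


def rounds_http1(resources, max_connections=6):
    """Sequential batches when each connection carries one request at a time."""
    return math.ceil(resources / max_connections)


def rounds_http2(resources, max_streams=100):
    """Sequential batches when one connection carries max_streams requests at once."""
    return math.ceil(resources / max_streams)


resources = 60  # hypothetical page with 60 assets
rtt_ms = 50     # hypothetical round-trip time

print("HTTP/1.1:", rounds_http1(resources) * rtt_ms, "ms")  # 10 batches -> 500 ms
print("HTTP/2:  ", rounds_http2(resources) * rtt_ms, "ms")  # 1 batch   -> 50 ms
```

Under these assumptions the HTTP/1.1 page needs ten sequential batches of requests, while the HTTP/2 page fits everything into one.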

For operations

In day-to-day work, the thing we deploy most often is a reverse proxy.

Usually nginx sits in front, with multiple application containers (Tomcat) attached behind it.

Since the nginx reverse proxy speaks HTTP/1.x to its backends, after receiving a user request nginx initiates a request to the back-end application container over HTTP/1.x.

As everyone knows, each time an HTTP request is initiated, the initiator opens a socket as the channel for its connection to the peer. Under high concurrency and high request volume, socket resources are limited, so this easily leads to a series of problems such as network I/O blocking.

So how do we solve this problem?

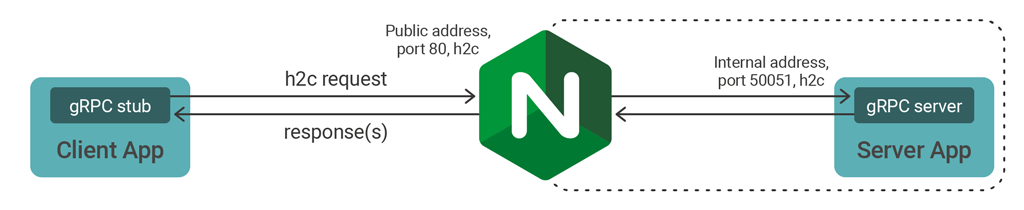

There are many ways, but today we will do it with gRPC (as shown in the figure).

nginx has supported gRPC since version 1.13.10.

Tomcat supports HTTP/2 (both h2 and h2c) from version 8.5 onward.

Since gRPC is built on the HTTP/2 standard, it is essentially HTTP/2 on the wire, so an HTTP/2 connection can be established as long as both the server and the client support HTTP/2.
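Before pointing nginx at a backend, it can be handy to check whether that backend really answers cleartext HTTP/2. The sketch below is one possible probe, not an official tool: it sends the fixed HTTP/2 client connection preface followed by an empty SETTINGS frame and checks whether the first frame that comes back is a SETTINGS frame. The names `client_hello` and `speaks_h2c` are made up for illustration, and the probe assumes the server accepts HTTP/2 with prior knowledge (no `Upgrade` handshake).

```python
import socket
import struct

PREFACE = b"PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n"  # fixed client connection preface
SETTINGS = 0x4                                  # SETTINGS frame type


def frame_header(length, frame_type, flags, stream_id):
    """Build the 9-byte HTTP/2 frame header."""
    return struct.pack(">BHBBI", length >> 16, length & 0xFFFF,
                       frame_type, flags, stream_id & 0x7FFFFFFF)


def client_hello():
    """Client preface followed by an empty SETTINGS frame on stream 0."""
    return PREFACE + frame_header(0, SETTINGS, 0, 0)


def speaks_h2c(host, port, timeout=2.0):
    """Return True if the peer answers the preface with a SETTINGS frame.

    Raises OSError (including timeouts) if the connection itself fails.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(client_hello())
        header = b""
        while len(header) < 9:
            chunk = sock.recv(9 - len(header))
            if not chunk:  # peer closed the connection early
                return False
            header += chunk
    _hi, _lo, frame_type, _flags, _sid = struct.unpack(">BHBBI", header)
    return frame_type == SETTINGS
```

For example, `speaks_h2c("127.0.0.1", 8080)` could be run against the Tomcat port used in this article. An HTTP/1.1-only server would typically reply with a plain-text error line instead of a SETTINGS frame, in which case the function returns False.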

On the nginx side (it needs to be built with --with-http_ssl_module and --with-http_v2_module), just take the original reverse proxy directive:

proxy_pass http://127.0.0.1:8080;

and rewrite it as:

grpc_pass grpc://127.0.0.1:8080;

On the Tomcat side, just add the following to the Connector for the corresponding port:

<UpgradeProtocol className="org.apache.coyote.http2.Http2Protocol" />

Thanks to HTTP/2 multiplexing, when nginx needs to send a large number of proxied requests to the back-end application container, it can avoid most of the network I/O blocking caused by limited socket resources. Multiplexing also speeds up the sending and receiving of requests, which improves the speed and capacity of the server to process data.

If you are interested, you can try it out~