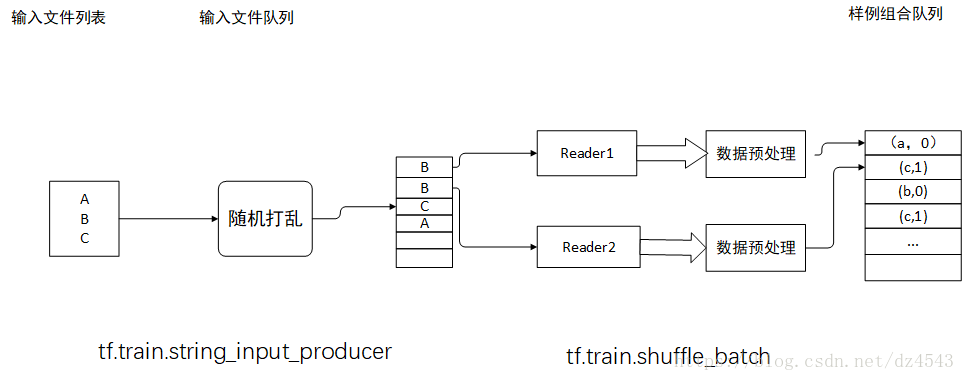

As shown in the figure, this is a rough schematic of the input data processing flow. The first step is to obtain the list of files that store the training data; in the figure, this list is {A, B, C}. The tf.train.string_input_producer function can optionally shuffle the order of these files before adding them to an input queue. It generates and maintains this input file queue, which can be shared by file-reading functions running in different threads.
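To make the shared file queue concrete, here is a pure-Python analogy that uses only the standard library. It is a sketch under stated assumptions, not how TensorFlow implements it: `make_filename_queue` and `reader` are hypothetical helpers. The queue is pre-filled with file names for a fixed number of epochs, optionally shuffled per epoch, and several reader threads take names from it so that each file is handed to exactly one thread per epoch. The real tf.train.string_input_producer instead keeps a bounded queue topped up by a background queue runner.

```python
import queue
import random
import threading


def make_filename_queue(files, num_epochs=1, shuffle=True, seed=None):
    """Fill a FIFO queue with file names, optionally reshuffled each epoch.

    Hypothetical analogy of the queue behind tf.train.string_input_producer;
    not TensorFlow's implementation.
    """
    rng = random.Random(seed)
    q = queue.Queue()
    for _ in range(num_epochs):
        epoch = list(files)
        if shuffle:
            rng.shuffle(epoch)  # new file order for every epoch
        for name in epoch:
            q.put(name)
    return q


def reader(q, results, lock):
    # Each reader thread takes the next available file name from the shared queue.
    while True:
        try:
            name = q.get_nowait()
        except queue.Empty:
            return
        with lock:
            results.append(name)


files = ["A", "B", "C"]
q = make_filename_queue(files, num_epochs=2, shuffle=True, seed=0)
results, lock = [], threading.Lock()
threads = [threading.Thread(target=reader, args=(q, results, lock)) for _ in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sorted(results))  # → ['A', 'A', 'B', 'B', 'C', 'C']
```

Because every thread dequeues from the same queue, no file name is read twice within an epoch, no matter how many readers run.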

After a sample is read, the image needs to be preprocessed. Preprocessing can also run in parallel across multiple threads, using the queue mechanism that tf.train.shuffle_batch provides. At the end of the input pipeline, tf.train.shuffle_batch organizes the processed individual samples into batches and feeds them to the input layer of the neural network.
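The batching idea can be illustrated without TensorFlow. The sketch below is a pure-Python analogy, not TensorFlow's implementation: `shuffle_batches` is a hypothetical helper that keeps a buffer of at most `capacity` samples and dequeues a batch only when at least `min_after_dequeue` samples would remain, which is the role those two parameters play in tf.train.shuffle_batch. With the values used in the program, capacity = 10000 + 3 × 100 = 10300; the example uses smaller numbers so it runs instantly.

```python
import random


def shuffle_batches(samples, batch_size, min_after_dequeue, capacity, seed=None):
    """Yield shuffled batches drawn from a bounded buffer (hypothetical analogy)."""
    rng = random.Random(seed)
    buffer = []
    it = iter(samples)
    exhausted = False
    while True:
        # Top up the buffer until it is full or the input runs out.
        while not exhausted and len(buffer) < capacity:
            try:
                buffer.append(next(it))
            except StopIteration:
                exhausted = True
        # While input remains, keep at least min_after_dequeue samples after
        # each dequeue so batches are drawn from a well-mixed pool; once the
        # input is exhausted, drain the remaining full batches.
        required = batch_size if exhausted else batch_size + min_after_dequeue
        if len(buffer) < required:
            return
        batch = []
        for _ in range(batch_size):
            # Pick a random element and swap-remove it from the buffer.
            i = rng.randrange(len(buffer))
            buffer[i], buffer[-1] = buffer[-1], buffer[i]
            batch.append(buffer.pop())
        yield batch


min_after_dequeue = 10
batch_size = 4
capacity = min_after_dequeue + 3 * batch_size  # same rule of thumb as the program
batches = list(shuffle_batches(range(50), batch_size, min_after_dequeue, capacity, seed=1))
print(len(batches))  # → 12
```

A larger `min_after_dequeue` gives better mixing at the cost of memory and a longer warm-up before the first batch, which is the trade-off behind choosing 10000 in the program.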

```python
import tensorflow as tf  # TensorFlow 1.x API (queue-based input pipeline)

# Create the file list.
files = tf.train.match_filenames_once("Records/output.tfrecords")

# Create the input file queue.
filename_queue = tf.train.string_input_producer(files, shuffle=False)

# Read a serialized example from the file.
reader = tf.TFRecordReader()
_, serialized_example = reader.read(filename_queue)

# Parse the sample. 'image' holds the raw pixel bytes, 'label' is the label,
# and 'height', 'width', and 'channels' give the dimensions of the image.
features = tf.parse_single_example(
    serialized_example,
    features={
        'image': tf.FixedLenFeature([], tf.string),
        'label': tf.FixedLenFeature([], tf.int64),
        'height': tf.FixedLenFeature([], tf.int64),
        'width': tf.FixedLenFeature([], tf.int64),
        'channels': tf.FixedLenFeature([], tf.int64),
    })

image, label = features['image'], features['label']
height = tf.cast(features['height'], tf.int32)
width = tf.cast(features['width'], tf.int32)
channels = tf.cast(features['channels'], tf.int32)

# Decode the pixel matrix from the raw bytes and restore the image shape.
# height, width, and channels are tensors, so tf.reshape is used here;
# set_shape only accepts static (Python-level) dimensions.
decoded_image = tf.decode_raw(image, tf.uint8)
decoded_image = tf.reshape(decoded_image, tf.stack([height, width, channels]))

# Size of the image at the input layer of the neural network.
image_size = 299

# preprocess_for_train is the image preprocessing function (defined elsewhere).
distorted_image = preprocess_for_train(decoded_image, image_size, image_size, None)

# Organize the processed images and labels into the batches required for
# training through tf.train.shuffle_batch.
min_after_dequeue = 10000
batch_size = 100
capacity = min_after_dequeue + 3 * batch_size

image_batch, label_batch = tf.train.shuffle_batch(
    [distorted_image, label],
    batch_size=batch_size,
    capacity=capacity,
    min_after_dequeue=min_after_dequeue)

# Define the network structure and optimization. image_batch feeds the input
# layer; label_batch provides the correct answers for the samples in the batch.
# inference, calc_loss, learning_rate, and TRAINING_ROUNDS are assumed to be
# defined elsewhere.
logit = inference(image_batch)
loss = calc_loss(logit, label_batch)
train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss)

# Declare the session and run the optimization.
with tf.Session() as sess:
    # Preparation: initialize variables (match_filenames_once creates a local
    # variable) and start the queue-runner threads.
    sess.run([tf.global_variables_initializer(),
              tf.local_variables_initializer()])
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)

    # Training loop.
    for i in range(TRAINING_ROUNDS):
        sess.run(train_step)

    # Stop all threads and wait for them to finish.
    coord.request_stop()
    coord.join(threads)
```
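The raw image bytes carry no shape information, which is why the program reads height, width, and channels as separate features before reshaping. The following pure-Python sketch uses a hypothetical helper, `decode_raw_uint8` (not a TensorFlow API), to mimic tf.decode_raw with tf.uint8 followed by a row-major reshape, showing how the flat bytes map back to a pixel matrix.

```python
def decode_raw_uint8(raw, height, width, channels):
    """Rebuild a height x width x channels nested list from raw uint8 bytes.

    Hypothetical analogy of decode_raw + reshape: the byte string is flat, so
    the stored dimensions are required to restore the pixel matrix.
    """
    if len(raw) != height * width * channels:
        raise ValueError("byte count does not match height * width * channels")
    pixels = list(raw)  # bytes -> flat list of ints in [0, 255]
    row_len = width * channels
    # Row-major (C) order: channels vary fastest, then columns, then rows.
    return [
        [pixels[r * row_len + c * channels: r * row_len + (c + 1) * channels]
         for c in range(width)]
        for r in range(height)
    ]


raw = bytes(range(12))  # a 2 x 2 RGB "image" serialized row by row
img = decode_raw_uint8(raw, height=2, width=2, channels=3)
print(img)  # → [[[0, 1, 2], [3, 4, 5]], [[6, 7, 8], [9, 10, 11]]]
```

If the stored dimensions disagree with the byte count, the reshape cannot succeed, which is the same condition under which tf.reshape fails at run time.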

Its code is as follows: