Encoding Basics

- ASCII: 1 byte per character; covers only English letters, digits, and symbols

- GB2312: 2 bytes per Chinese character; covers 6,700+ Chinese characters

- GBK: an upgraded version of GB2312; covers 21,000+ Chinese characters

- Shift_JIS: Japanese

- ks_c_5601-1987: Korean

- TIS-620: Thai

Each country's encoding maps only its own characters to binary. That is the limitation shared by all the encodings above: none of them covers the characters of other countries. Unicode (the "universal code") was created to fix this; it maps the characters of every writing system in the world to binary.

Unicode uses 2 to 4 bytes per character. At the time of writing it contained 136,690 characters, and it is still growing.

Unicode serves two purposes:

- It supports every language in the world directly, so countries no longer need their own legacy encodings; everyone can simply use Unicode (much as English serves as a common language).

- It contains the mappings to every national encoding in the world.

Unicode solves the mapping between characters and binary, but it wastes space. For example, representing "Python" in 2-byte Unicode takes 12 bytes, double the 6 bytes that ASCII needs.
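The 12-versus-6 comparison can be checked with a short Python 3 sketch. It assumes UTF-16 little-endian without a byte-order mark as the stand-in for "2-byte Unicode"; plain `"utf-16"` would prepend a 2-byte BOM and report 14.

```python
text = "Python"

ascii_bytes = text.encode("ascii")      # 1 byte per character
utf16_bytes = text.encode("utf-16-le")  # 2 bytes per character, no byte-order mark

print(len(ascii_bytes))  # 6
print(len(utf16_bytes))  # 12
```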

Memory is relatively plentiful and strings held in memory are usually small, so text can be processed as Unicode there. Storage and network transmission, however, handle large volumes of data, and doubling their size is not acceptable.

To solve this for storage and network transmission, the Unicode Transformation Formats (UTF) were designed: they convert Unicode into more compact byte sequences, saving space when text is stored or sent over the network.

- UTF-8: uses 1, 2, 3, or 4 bytes per character. It starts with 1 byte and adds a byte only when needed, up to 4. English takes 1 byte, most European languages 2, East Asian languages 3, and other and special characters (such as emoji) 4.

- UTF-16: uses 2 or 4 bytes per character; 2 bytes whenever possible, otherwise 4.

- UTF-32: uses 4 bytes for every character.
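A quick way to see these sizes is to encode one sample character from each group and count the bytes. This is a sketch; the sample characters are arbitrary picks, and the `-le` codec variants are used so that no byte-order mark is counted.

```python
# Non-ASCII samples are written as escapes so the file itself stays plain ASCII.
samples = {
    "A": "English letter",
    "\u00e9": "European letter (e with acute)",
    "\u8def": "East Asian character",
    "\U0001F600": "emoji, outside the Basic Multilingual Plane",
}
encodings = ("utf-8", "utf-16-le", "utf-32-le")  # "-le": little-endian, no byte-order mark

# Byte count of each character in UTF-8, UTF-16 and UTF-32
sizes = {ch: [len(ch.encode(enc)) for enc in encodings] for ch in samples}

for ch, label in samples.items():
    print(f"U+{ord(ch):04X} {label}: {sizes[ch]}")
# U+0041 English letter: [1, 2, 4]
# U+00E9 European letter (e with acute): [2, 2, 4]
# U+8DEF East Asian character: [3, 2, 4]
# U+1F600 emoji, outside the Basic Multilingual Plane: [4, 4, 4]
```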

Summary: UTF is a family of encodings designed to store and transmit Unicode text efficiently.

Characters are stored on the hard disk in binary format

Encoding conversion

Although English is the international language, people still speak their own language at home and need English only when they go abroad. Encodings are similar: despite Unicode and UTF-8, for historical reasons many countries still rely heavily on their own encodings. On Chinese Windows, for example, the default encoding is still GBK, not UTF-8.

As a result, Chinese software exported to the United States displays garbled characters on American computers, because those systems do not use GBK.

For Chinese software to display correctly on American computers, there are only two options:

- Have Americans install GBK support on their computers

- Encode your software in UTF-8

The first option is nearly impossible; the second is relatively simple, but only for newly developed software. If your existing software was written in GBK, possibly millions of lines of it, re-encoding everything to UTF-8 would take a great deal of effort.

So for projects already developed with GBK, neither option easily makes them display correctly on American computers. Is there another way?

Yes. Recall that one of Unicode's purposes is to contain the mappings to every national encoding in the world. This means that if you write "Luffy Xuecheng" in GBK, Unicode can automatically determine what those characters are in Unicode. So no matter which encoding your data is stored in, your software only has to convert it to Unicode when it reads the data from disk into memory, and it will display correctly. Since all systems and programming languages support Unicode, you can put your GBK software on an American computer, have it convert the data to Unicode when loading it into memory, and the Chinese text will display normally.
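The "everything meets in Unicode" idea can be sketched in Python 3: the same two characters stored as GBK bytes and as UTF-8 bytes look completely different on disk, yet both decode to the same `str`. The in-memory byte strings stand in for files; reading real files would use `open(path, encoding=...)` instead.

```python
text = "\u8def\u98de"  # the characters "路飞", written as escapes

gbk_bytes = text.encode("gbk")     # how legacy Chinese Windows software stores it
utf8_bytes = text.encode("utf-8")  # how a UTF-8 system stores it

print(gbk_bytes)   # b'\xc2\xb7\xb7\xc9'
print(utf8_bytes)  # b'\xe8\xb7\xaf\xe9\xa3\x9e'

# Decoding each with its own codec yields the very same Unicode string.
print(gbk_bytes.decode("gbk") == utf8_bytes.decode("utf-8"))  # True

# Going through Unicode also converts GBK data to UTF-8.
converted = gbk_bytes.decode("gbk").encode("utf-8")
print(converted == utf8_bytes)  # True
```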

How Python 3 executes code

Before looking at actual code, here is how Python 3 executes a script:

- The interpreter finds the code file, loads it into memory, decodes it using the encoding declared in the file header (UTF-8 if none is declared), and converts it to Unicode

- It interprets the code according to the grammar rules

- All string variables are created as Unicode strings
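These steps can be imitated by hand with the standard library, as a rough sketch: `tokenize.detect_encoding` reads the encoding declared in the header, the bytes are decoded to `str`, and the text is compiled and run. The GBK-encoded two-line script is a made-up example, and the real interpreter does all of this internally.

```python
import io
import tokenize

# Hypothetical script file content: GBK-encoded, with a coding header.
source_bytes = '# coding: gbk\ns = "\u8def\u98de"\n'.encode("gbk")

# Step 1: read the encoding declared in the file header (PEP 263)...
encoding, _ = tokenize.detect_encoding(io.BytesIO(source_bytes).readline)
# ...and decode the raw bytes into Unicode text.
source_text = source_bytes.decode(encoding)

# Steps 2-3: interpret the code; the string literal becomes a Unicode str.
namespace = {}
exec(compile(source_text, "<demo>", "exec"), namespace)

print(encoding)                           # gbk
print(type(namespace["s"]))               # <class 'str'>
print(namespace["s"] == "\u8def\u98de")   # True
```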

A real demonstration: write the following code for Python 3, save the file as UTF-8, and run it on Windows.

The code looks like this

```python
#coding:utf-8
s = "路飞学城"  # Chinese text: "Luffy Xuecheng"
print(s)
```

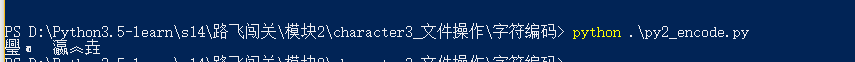

The screenshot shows the result of running it on Windows.

Running the code above with Python 3 on Windows works normally.

Why it works: the Windows terminal uses GBK, yet the UTF-8 code above displays correctly, because the Python 3 interpreter decodes the UTF-8 source into Unicode in memory, and Unicode text can be printed on a GBK terminal.

Now run the same code file, py2_encode, with Python 2.

The code looks like this

```python
#coding:utf-8
s = "路飞学城"  # Chinese text: "Luffy Xuecheng"
print(s)
```

Run with Python 2 on Windows, the output is garbled.

Why it is garbled: the Python 2 interpreter reads your code with the encoding declared in the file header, but after loading it into memory it does not convert strings to Unicode for you. So if your file is encoded in UTF-8, the string variable in memory also holds UTF-8 bytes. The Windows terminal interprets those bytes as GBK, so the UTF-8 text appears garbled. For the string to display correctly, one of two conditions must hold: 1. the string's bytes are GBK, or 2. the string is Unicode.
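The Python 2 failure can be simulated in Python 3 by decoding UTF-8 bytes with the wrong codec, which is roughly what a GBK terminal does to them. This is only a simulation: no terminal is involved, and `errors="replace"` is used because some UTF-8 byte pairs may not be valid GBK at all.

```python
text = "\u8def\u98de"  # "路飞"

utf8_bytes = text.encode("utf-8")  # what the Python 2 str holds: raw UTF-8 bytes

# A GBK terminal interprets the same bytes as GBK, producing garbled text.
garbled = utf8_bytes.decode("gbk", errors="replace")
print(garbled == text)  # False

# Fix 1: give the terminal real GBK bytes.
print(text.encode("gbk").decode("gbk") == text)  # True

# Fix 2: decode the UTF-8 bytes to Unicode first.
print(utf8_bytes.decode("utf-8") == text)  # True
```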

Python 3 converts the file to Unicode automatically. Converting between encodings comes down to two operations, decoding and encoding:

UTF-8 ---> decode ---> Unicode ---> encode ---> GBK / UTF-8

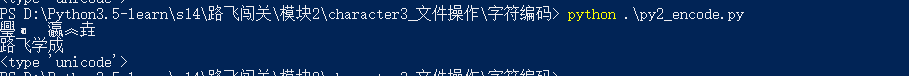

Examples (Python 2):

decode

```python
#coding:utf-8
# Python 2 only: in Python 3, str has no decode() method

s = "路飞学城"
print(s)

s2 = s.decode('utf-8')  # UTF-8 bytes -> unicode
print(s2)  # s2 is a unicode object, so it displays correctly
print(type(s2))  # <type 'unicode'>
```

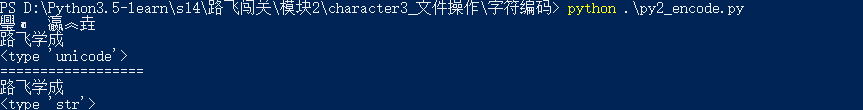

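encode

```python
#coding:utf-8
# Python 2 only

s = "路飞学城"
print(s)

s2 = s.decode('utf-8')  # UTF-8 bytes -> unicode
print(s2)
print(type(s2))  # <type 'unicode'>

print("==================")

s3 = s2.encode('GBK')  # encode unicode to GBK bytes
print(s3)
print(type(s3))  # <type 'str'>: in Python 2, encoded bytes are str
```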

The code execution looks like this
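For comparison, here is a sketch of the same decode/encode steps in Python 3 (not part of the original example). In Python 3 a string literal is already Unicode, `str` has no `decode()` method, and `encode()` returns `bytes`:

```python
s = "\u8def\u98de"           # "路飞": a str, i.e. Unicode text
print(type(s))               # <class 'str'>
print(hasattr(s, "decode"))  # False: only bytes can be decoded in Python 3

data = s.encode("utf-8")     # str -> bytes (UTF-8)
s2 = data.decode("utf-8")    # bytes -> str: the counterpart of Python 2's s.decode('utf-8')
s3 = s2.encode("gbk")        # str -> bytes (GBK): the counterpart of s2.encode('GBK')

print(type(s3))              # <class 'bytes'>, where Python 2 reported str
print(len(data), len(s3))    # 6 4
```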

Python bytes type

In Python 2:

```python
>>> s = "路飞"
>>> s
'\xc2\xb7\xb7\xc9'
```

When you inspect the variable s directly, you see its bytes one by one, written in hexadecimal. What should we call this data? Calling it binary would work, but instead of 0s and 1s it is displayed in hexadecimal, simply because that is easier for people to read. We call it the bytes type: every group of 8 bits is one byte, shown as two hexadecimal digits. In Python 2, bytes and str are actually the same thing (bytes == str). Python 2 also has a separate unicode type; decoding a str produces a unicode object.

```python
>>> s = "路飞"
>>> s
'\xc2\xb7\xb7\xc9'  # GBK bytes
>>> s.decode('GBK')
u'\u8def\u98de'  # the characters' positions (code points) in the Unicode table
>>> print(s.decode('GBK'))
路飞  # printed from a unicode object
```
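The same GBK bytes `'\xc2\xb7\xb7\xc9'` can be examined in Python 3 as hexadecimal, as raw 8-bit groups, and as Unicode code points. This sketch only re-displays the bytes from the session above in different notations:

```python
text = "\u8def\u98de"  # "路飞"

gbk = text.encode("gbk")
print(gbk)        # b'\xc2\xb7\xb7\xc9'
print(gbk.hex())  # c2b7b7c9: two hex digits per byte

bits = [f"{b:08b}" for b in gbk]  # each byte as its 8 binary digits
print(bits)  # ['11000010', '10110111', '10110111', '11001001']

code_points = [f"U+{ord(c):04X}" for c in gbk.decode("gbk")]
print(code_points)  # ['U+8DEF', 'U+98DE'], matching u'\u8def\u98de'
```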

In Python's early days, its creator did not foresee that it would become a language used worldwide, so supporting the world's languages was not a priority, and ASCII was casually chosen as the default encoding. As demand grew for Chinese, Japanese, French, and other languages, Python set out to introduce Unicode. Changing the default encoding to Unicode outright was unrealistic, however: a great deal of software had been developed with ASCII as the default, and changing it would break the encoding of all that software. So Python 2 added a new character type called unicode. If you want your Chinese text to display correctly on every computer in the world, you have to store the string in memory as the unicode type.

Python 3 not only made strings Unicode, it also clearly separated str and bytes: str is Unicode text, and bytes is plain binary data.

```python
>>> s = "路飞"
>>> s
'路飞'
>>> print(type(s))
<class 'str'>  # str in Python 3 is equivalent to the unicode type in Python 2
>>> s.encode("GBK")
b'\xc2\xb7\xb7\xc9'  # bytes type
```

One last question: why, in Python 3, does encoding a Unicode string produce bytes instead of printing the characters directly as GBK? This design is deliberate. It makes clear that in Python 3, text you want to see as characters must be Unicode (str), and anything in another encoding is shown as bytes.
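A small Python 3 sketch of that rule: GBK-encoded data prints as escaped byte values, turning it back into characters requires an explicit decode, and mixing `str` with `bytes` raises an error instead of guessing an encoding.

```python
s = "\u8def\u98de"  # "路飞"
b = s.encode("gbk")

print(type(b))  # <class 'bytes'>
print(b)        # b'\xc2\xb7\xb7\xc9': raw byte values, not characters

# To see characters again, decode back to str (Unicode).
text_again = b.decode("gbk")
print(text_again == s)  # True

# str and bytes do not mix implicitly.
try:
    s + b
except TypeError as exc:
    print("TypeError:", exc)
```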

Common ways to debug Python encoding problems

- The default encoding of the Python interpreter

- The encoding of the Python source file

- The encoding used by the terminal

- The language (locale) setting of the operating system

Once you understand how these encodings relate to each other, you can check them one by one when debugging.
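A minimal Python 3 helper for that checklist is sketched below. `encoding_report` is a hypothetical name; it only collects values from standard `sys` and `locale` calls. The source file encoding is not included because it is set by the file's `# coding:` header (UTF-8 when absent in Python 3).

```python
import locale
import sys


def encoding_report():
    """Hypothetical helper: gather the settings that usually explain garbled text."""
    return {
        # Interpreter default for str <-> bytes conversions ('utf-8' in Python 3)
        "interpreter default": sys.getdefaultencoding(),
        # Encoding print() uses for the terminal (None if stdout was replaced)
        "terminal (stdout)": getattr(sys.stdout, "encoding", None),
        # OS language/locale preference, used by open() when no encoding= is given
        "locale preferred": locale.getpreferredencoding(False),
        # Encoding used for file names
        "filesystem": sys.getfilesystemencoding(),
    }


for name, value in encoding_report().items():
    print(f"{name:20} {value}")
```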