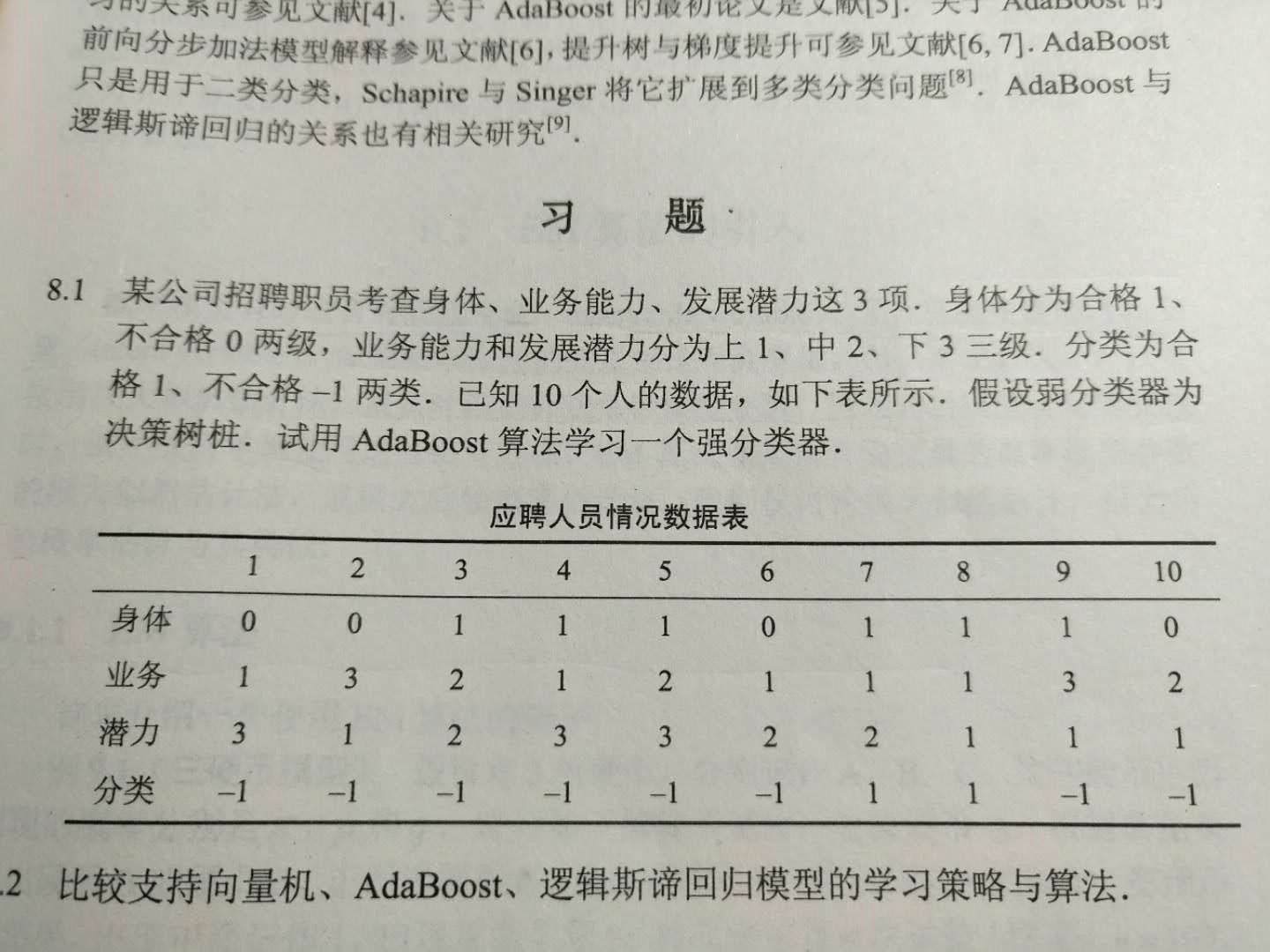

Solution:

Because the question assumes the weak classifier is a decision tree, a CART binary classification tree can be used.

1. Initialize the data weight distribution:

$$D_1 = (w_{11}, w_{12}, \ldots, w_{1,10}) = (0.1, 0.1, \ldots, 0.1)$$

$$w_{1i} = 0.1, \quad i = 1, 2, \ldots, 10$$

2. Calculate the Gini index of each feature (to keep the calculation simple, use a tree of depth 1, i.e. a decision stump):

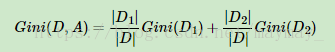

According to the formula for the Gini index:

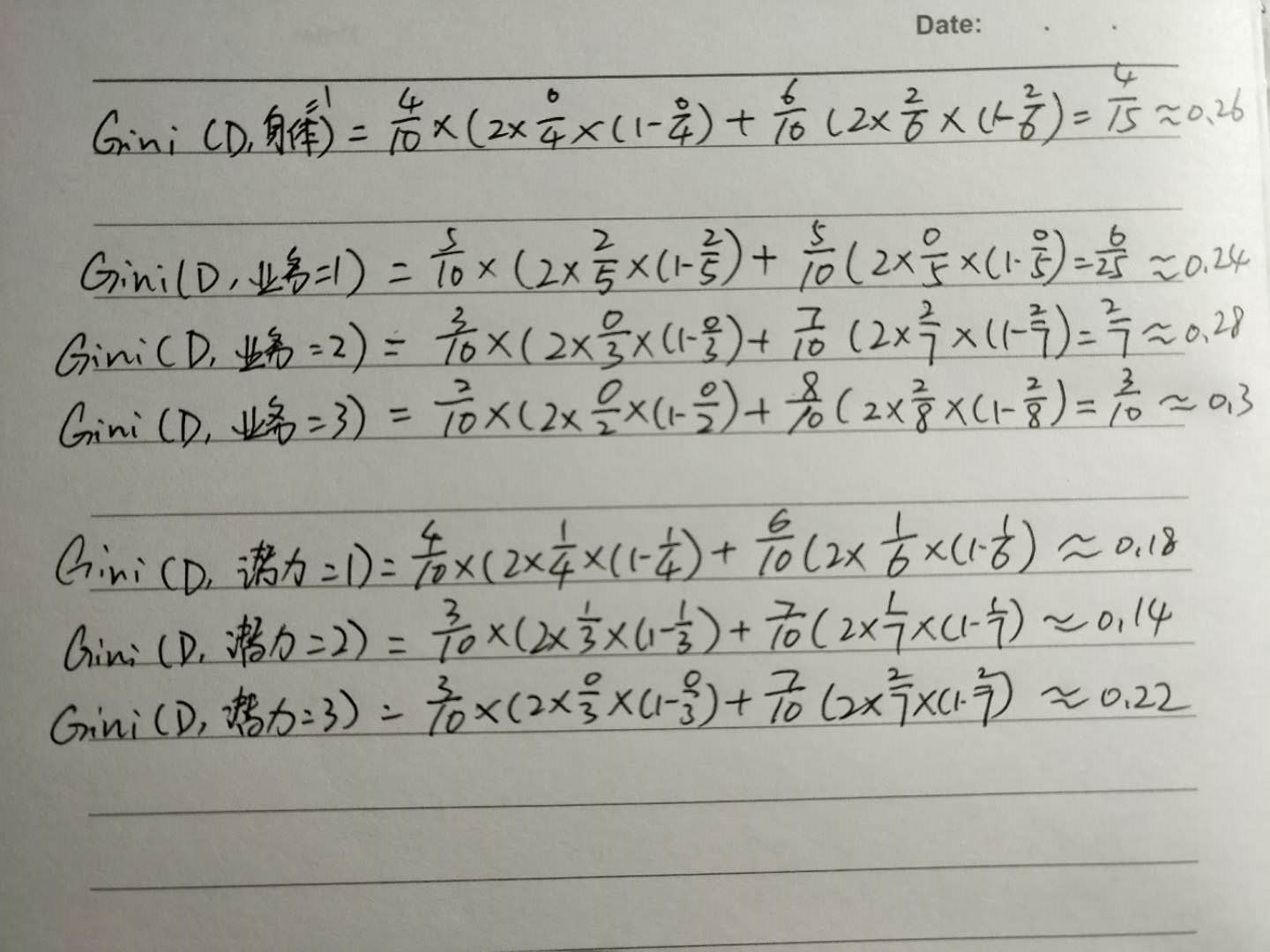

This gives the result that gini(D, potential=2) is the smallest of the candidates, so the first tree splits on whether potential equals 2 or not.

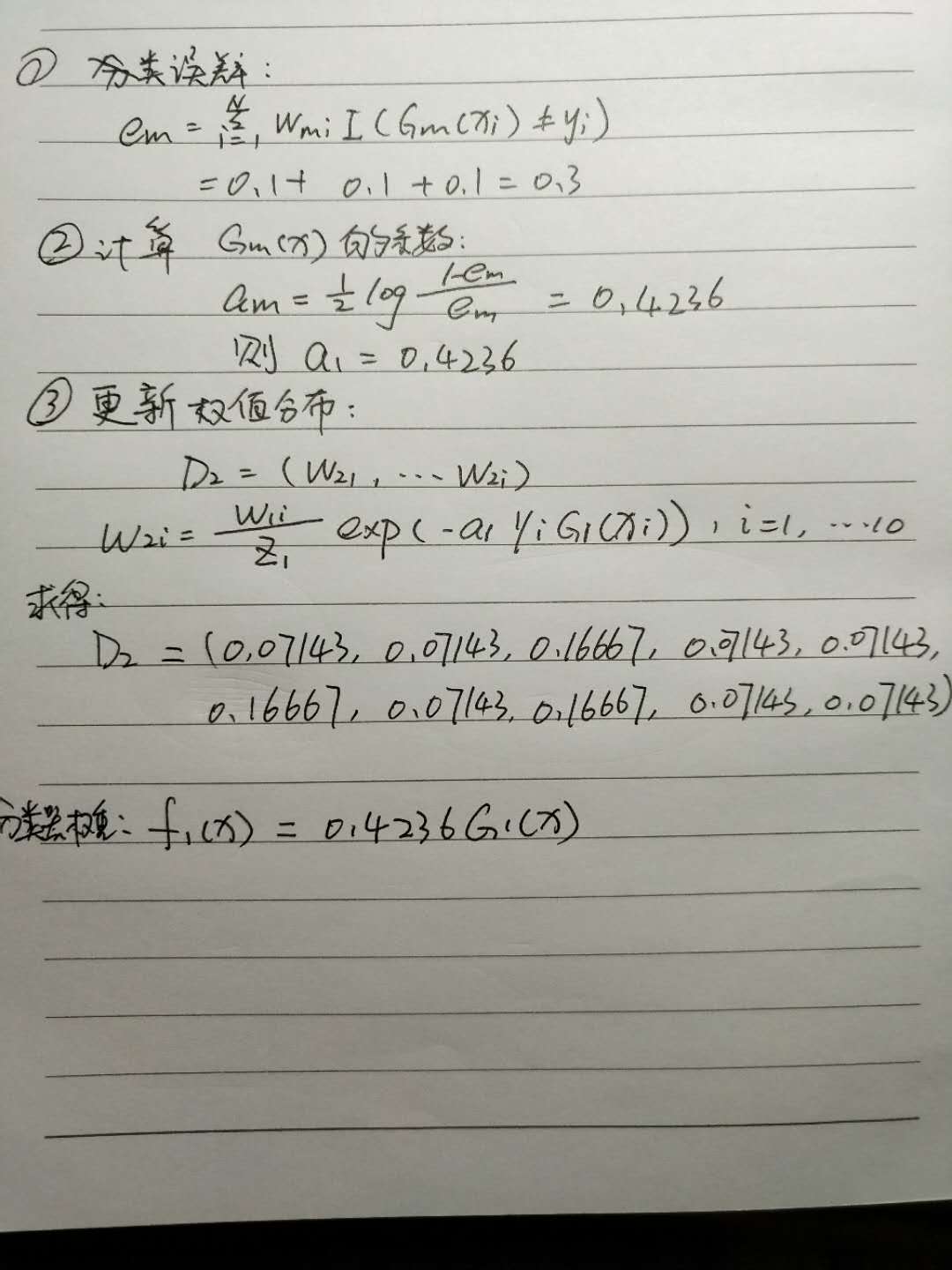
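As a rough sketch of how the Gini index of an "equals v / does not equal v" split can be computed, the helper below may be useful. The function names `gini` and `gini_split` and the toy feature and label lists are hypothetical and are not the data from the table in the question. It also assumes uniform sample weights, as in the first round; in later rounds the sample weights would have to be taken into account.

```python
from collections import Counter


def gini(labels):
    """Gini index of a label list: 1 - sum_k p_k^2 (0.0 for an empty list)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def gini_split(values, labels, v):
    """Gini index of splitting the samples into `value == v` and `value != v`."""
    left = [y for x, y in zip(values, labels) if x == v]
    right = [y for x, y in zip(values, labels) if x != v]
    n = len(labels)
    return len(left) / n * gini(left) + len(right) / n * gini(right)


# Hypothetical toy data, NOT the table from the question.
feature = [1, 1, 1, 2, 2, 3]
labels = [1, 1, 1, -1, -1, 1]

# Score every candidate split value and keep the one with the smallest Gini index.
scores = {v: gini_split(feature, labels, v) for v in sorted(set(feature))}
best = min(scores, key=scores.get)
print(scores, best)
```

On this toy data the split on value 2 separates the two classes perfectly, so it has the smallest Gini index and is selected, in the same way that potential = 2 is selected above.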

3. Calculate the error rate, the classifier weight, and the updated sample weights:

With the split above, samples 3, 6, and 8 are misclassified.

Then the calculation is as follows:
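The arithmetic for this step can be reproduced with a short script. This is only a sketch that assumes the standard AdaBoost formulas (error rate as the sum of the weights of the misclassified samples, classifier weight `alpha = 0.5 * ln((1 - e) / e)`, and exponential reweighting followed by normalization); the variable names are my own.

```python
import math

n = 10
weights = [1.0 / n] * n        # D1: uniform initial weights, 0.1 each
misclassified = {3, 6, 8}      # 1-based sample indices, taken from the text

# Weighted error rate: sum of the weights of the misclassified samples.
error = sum(w for i, w in enumerate(weights, start=1) if i in misclassified)

# Classifier weight (standard AdaBoost formula, assumed here).
alpha = 0.5 * math.log((1 - error) / error)

# Increase the weights of misclassified samples, decrease the others,
# then normalize so that the new weights sum to 1.
unnormalized = [
    w * math.exp(alpha if i in misclassified else -alpha)
    for i, w in enumerate(weights, start=1)
]
z = sum(unnormalized)
new_weights = [w / z for w in unnormalized]

print(round(error, 4), round(alpha, 4))
print([round(w, 4) for w in new_weights])
```

Under these assumptions the error rate is 0.3, the classifier weight is about 0.4236, and the updated weights are about 0.1667 for samples 3, 6, and 8 and about 0.0714 for the other seven samples.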

4. Repeat steps 2 and 3 until the error of the combined classifier is 0 or falls below a chosen threshold, then stop.
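The repeat-until-done loop can be sketched roughly as follows. Note the simplifications: instead of a Gini-selected CART stump on the categorical features, this sketch uses threshold stumps on a single numeric feature, chosen by lowest weighted error, and it runs on hypothetical 1-D toy data rather than the table from the question. The names `best_stump`, `adaboost`, `max_rounds`, and `tol` are my own.

```python
import math


def stump_predict(x, threshold, sign):
    """Predict `sign` when x < threshold, otherwise `-sign`."""
    return sign if x < threshold else -sign


def best_stump(xs, ys, w):
    """Return (weighted_error, threshold, sign) of the lowest-error stump."""
    points = sorted(set(xs))
    thresholds = [(a + b) / 2 for a, b in zip(points, points[1:])]
    best = None
    for t in thresholds:
        for sign in (1, -1):
            err = sum(wi for x, y, wi in zip(xs, ys, w)
                      if stump_predict(x, t, sign) != y)
            if best is None or err < best[0]:
                best = (err, t, sign)
    return best


def predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(a * stump_predict(x, t, s) for a, t, s in ensemble)
    return 1 if score >= 0 else -1


def training_error(ensemble, xs, ys):
    return sum(predict(ensemble, x) != y for x, y in zip(xs, ys)) / len(xs)


def adaboost(xs, ys, max_rounds=10, tol=0.0):
    """Repeat steps 2 and 3 until the training error is at most `tol`."""
    n = len(xs)
    w = [1.0 / n] * n
    ensemble = []  # list of (alpha, threshold, sign)
    for _ in range(max_rounds):
        err, t, sign = best_stump(xs, ys, w)
        err = min(max(err, 1e-12), 1 - 1e-12)  # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, t, sign))
        # Reweight the samples and normalize.
        w = [wi * math.exp(-alpha * y * stump_predict(x, t, sign))
             for x, y, wi in zip(xs, ys, w)]
        z = sum(w)
        w = [wi / z for wi in w]
        if training_error(ensemble, xs, ys) <= tol:
            break
    return ensemble


# Hypothetical 1-D toy data, NOT the table from the question.
xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
ensemble = adaboost(xs, ys)
print(len(ensemble), training_error(ensemble, xs, ys))
```

On this toy data the loop stops after three rounds with zero training error. The `tol` parameter plays the role of the threshold mentioned in step 4, and `max_rounds` is an extra safety limit in case that threshold is never reached.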

P.S. I have not independently verified these calculations, but I believe they are correct.