Common locking strategies

Pessimistic locking vs optimistic locking

Pessimistic lock:

Always assume the worst case. Every time you go to get the data, you assume someone else will modify it, so you lock it on every access. Anyone else who wants the data blocks until they acquire the lock.

It expects lock conflicts to be likely.

Optimistic locking:

It assumes that, in general, the data will not run into concurrency conflicts, so it does not lock. Only when the data is submitted for update does it formally check whether a conflict occurred. If one did, it reports the failure and lets the caller decide what to do.

It expects lock conflicts to be unlikely.

Example

Classmate A and Classmate B want to ask the teacher a question.

Classmate A thinks: "The teacher is busy. If I just show up with my question, the teacher may not have time to answer it." So A first sends the teacher a message: "Teacher, are you busy? Can I come and ask you a question at two o'clock this afternoon?" (equivalent to a lock operation). Only after getting a positive answer does A actually go to ask.

If the answer is negative, A waits a while and confirms a time with the teacher again later. This is pessimistic locking.

Classmate B thinks: "The teacher is fairly free. If I go with my question, the teacher will most likely have time to answer." So B goes straight to the teacher (no lock, direct access to the resource). If the teacher is indeed free, the problem is solved on the spot. If the teacher turns out to be busy, B does not disturb the teacher and comes back another time (there is no lock, but B can still detect the access conflict). This is optimistic locking.

Neither approach is inherently better or worse; what matters is whether it suits the current scenario.

If the teacher really is busy, pessimistic locking is the better strategy. Optimistic locking would lead to many wasted trips, which consumes extra resources.

If the teacher really is idle, optimistic locking is the better strategy. Pessimistic locking would only lower efficiency.

In general:

Pessimistic locking: more work, higher cost, lower efficiency

Optimistic locking: less work, lower cost, higher efficiency
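To make the contrast concrete, here is a minimal Java sketch (the class and method names are hypothetical). The pessimistic version takes a lock before every update. The optimistic version reads without locking and only detects a conflict when it tries to write, using AtomicInteger.compareAndSet (covered in the CAS section below); on a conflict this sketch simply retries.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Minimal sketch contrasting the two strategies on a shared counter.
// Class and method names are hypothetical, chosen for illustration.
class LockingStyles {

    // Pessimistic: take the lock before every update, whether or not anyone else
    // is actually competing for the data.
    static int countPessimistic(int threads, int perThread) {
        Object lock = new Object();
        int[] value = {0};
        runConcurrently(threads, perThread, () -> {
            synchronized (lock) {
                value[0]++;
            }
        });
        return value[0];
    }

    // Optimistic: read without locking, then check at write time whether another
    // thread changed the value in between. compareAndSet reports a conflict by
    // returning false, and this sketch simply retries.
    static int countOptimistic(int threads, int perThread) {
        AtomicInteger value = new AtomicInteger(0);
        runConcurrently(threads, perThread, () -> {
            while (true) {
                int seen = value.get();
                if (value.compareAndSet(seen, seen + 1)) {
                    return; // no conflict: update applied
                }
                // conflict detected: someone else updated first, so try again
            }
        });
        return value.get();
    }

    // Runs `action` perThread times on each of `threads` threads, then waits for all of them.
    static void runConcurrently(int threads, int perThread, Runnable action) {
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    action.run();
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new RuntimeException(e);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(countPessimistic(4, 10_000)); // 40000
        System.out.println(countOptimistic(4, 10_000));  // 40000
    }
}
```

Both versions end with the same count. The difference is in how they behave under contention: the pessimistic one makes threads wait, while the optimistic one lets them run and pays with retries when conflicts do happen.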

Read-write lock vs ordinary mutex

An ordinary mutex has only two operations: lock and unlock.

Whenever two threads lock the same object, they are mutually exclusive.

A read-write lock has three operations:

- Acquire a read lock: used when the code only reads the data.

- Acquire a write lock: used when the code modifies the data.

- Unlock.

Read locks do not exclude each other. Only a read lock and a write lock, or two write locks, are mutually exclusive.
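In Java, the standard library provides this through java.util.concurrent.locks.ReentrantReadWriteLock. The sketch below (class and helper names are hypothetical) guards a map with it, then checks from a second thread that, while one thread holds the read lock, another reader can still get in but a writer cannot.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Minimal sketch of a read-mostly map guarded by the JDK's ReentrantReadWriteLock.
// The class and its helper methods are hypothetical, for illustration.
class ReadWriteCache {
    private final Map<String, String> data = new HashMap<>();
    private final ReentrantReadWriteLock rw = new ReentrantReadWriteLock();

    // Read-only access takes the read lock; many readers may hold it at once.
    String get(String key) {
        rw.readLock().lock();
        try {
            return data.get(key);
        } finally {
            rw.readLock().unlock();
        }
    }

    // Modification takes the write lock; it excludes readers and other writers.
    void put(String key, String value) {
        rw.writeLock().lock();
        try {
            data.put(key, value);
        } finally {
            rw.writeLock().unlock();
        }
    }

    // Tries to acquire `lock` from a separate thread without blocking; reports success.
    static boolean tryFromAnotherThread(Lock lock) {
        AtomicBoolean acquired = new AtomicBoolean(false);
        Thread other = new Thread(() -> {
            if (lock.tryLock()) {
                acquired.set(true);
                lock.unlock();
            }
        });
        other.start();
        try {
            other.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new RuntimeException(e);
        }
        return acquired.get();
    }

    // While this thread holds a read lock: can another thread get a read / write lock?
    static boolean[] probeWhileReading() {
        ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
        lock.readLock().lock();
        try {
            boolean otherReader = tryFromAnotherThread(lock.readLock());  // read + read: allowed
            boolean otherWriter = tryFromAnotherThread(lock.writeLock()); // read + write: excluded
            return new boolean[] {otherReader, otherWriter};
        } finally {
            lock.readLock().unlock();
        }
    }

    public static void main(String[] args) {
        boolean[] r = probeWhileReading();
        System.out.println(r[0] + " " + r[1]); // true false
    }
}
```

This is where a read-write lock pays off: when reads far outnumber writes, readers no longer serialize behind each other the way they would with an ordinary mutex.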

Heavyweight Locks vs Lightweight Locks

A heavyweight lock does more work and has more overhead.

A lightweight lock does less work and has less overhead.

Roughly speaking, pessimistic locks can be thought of as heavyweight locks and optimistic locks as lightweight locks.

If a lock is built on kernel features (for example, it calls the operating system's mutex interface), it is generally considered a heavyweight lock, because the OS lock does a lot of work in the kernel, such as blocking the thread and making it wait.

If a lock is implemented purely in user mode, it is generally considered a lightweight lock (user-mode code is more controllable and more efficient).

Suspend-wait lock vs spin lock

Suspend-wait locks are usually implemented with kernel mechanisms and tend to be heavier (a typical implementation of a heavyweight lock).

Spin locks are usually implemented in user-mode code and tend to be lighter (a typical implementation of a lightweight lock).

Understanding spinlocks vs suspend-wait locks

Imagine a boy courting a goddess. When he confesses, she says: "You're a nice person, but I already have a boyfriend~~"

Suspend-wait lock: the boy sinks into despair and can't pull himself out... After a long, long time, the goddess suddenly sends him a message: "Should we give it a try?" (Note that during this long interval, the goddess may have gone through several boyfriends.)

Spin lock: thick-skinned and persistent. He keeps saying good morning and good night to the goddess every day. The moment she breaks up with her current boyfriend, he can immediately seize the chance and take the spot.

In lock terms: a spin lock grabs the lock as soon as it is released, but keeps the CPU busy the whole time it waits; a suspend-wait lock gives up the CPU while waiting, but may only be woken long after the lock became free.

Fair lock vs unfair lock

These two are easy to confuse, so remember them carefully:

Fair lock: when multiple threads are waiting for a lock, whoever came first gets the lock first (first come, first served).

Unfair lock: when multiple threads are waiting for the same lock, first come, first served is not followed; any waiting thread may get the lock, regardless of how long it has waited. (The operating system schedules its threads in an effectively random order, so the mutex provided by the operating system is an unfair lock.)
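Java's ReentrantLock lets you pick either policy through its constructor. The sketch below only shows how to request each one and the usual lock/unlock pattern; it does not try to demonstrate ordering, since that depends on scheduling. The class name and helper method are hypothetical.

```java
import java.util.concurrent.locks.ReentrantLock;

// Minimal sketch: the JDK's ReentrantLock lets the caller pick the policy.
class FairnessDemo {
    // No-arg constructor: a non-fair lock. A thread that arrives just as the lock is
    // released may acquire it ahead of threads that have been waiting longer.
    static final ReentrantLock UNFAIR = new ReentrantLock();

    // ReentrantLock(true): a fair lock. Under contention it favors the
    // longest-waiting thread, i.e. roughly first come, first served.
    static final ReentrantLock FAIR = new ReentrantLock(true);

    // Typical usage pattern; it is the same for both policies.
    static int incrementUnder(ReentrantLock lock, int[] counter) {
        lock.lock();
        try {
            return ++counter[0];
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) {
        System.out.println(UNFAIR.isFair()); // false
        System.out.println(FAIR.isFair());   // true
        int[] counter = {0};
        incrementUnder(FAIR, counter);
        incrementUnder(UNFAIR, counter);
        System.out.println(counter[0]);      // 2
    }
}
```

The default is unfair because fairness has a cost: the ReentrantLock documentation notes that fair locks accessed by many threads may show lower overall throughput than the default setting.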

Reentrant locks vs non-reentrant locks

If a thread locks the same lock twice in a row and deadlocks, the lock is non-reentrant; if it does not deadlock, the lock is reentrant.

Understanding "locking yourself up"

A thread has not released the lock, and then tries to lock it again:

```
// First lock: succeeds
lock();
// Second lock: the lock is already held, so this blocks and waits.
// With a non-reentrant lock the holder is this same thread, which can never release it: deadlock.
lock();
```

Example:

Someone slips into a toilet stall and locks the door: now they can't get out from inside, and nobody can get in from outside.
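Both synchronized and ReentrantLock in Java are reentrant, so the "lock twice" sequence above does not deadlock with them. A minimal sketch (class and method names are hypothetical):

```java
import java.util.concurrent.locks.ReentrantLock;

// Minimal sketch showing that both synchronized and ReentrantLock are reentrant:
// a thread that already holds the lock can lock it again without deadlocking.
class ReentrancyDemo {
    private final Object monitor = new Object();
    private final ReentrantLock lock = new ReentrantLock();

    // Nested synchronized blocks on the same object. The inner block does not block,
    // because the JVM records which thread owns the monitor and counts re-entries.
    int nestedSynchronized() {
        int depth = 0;
        synchronized (monitor) {
            depth++;
            synchronized (monitor) {
                depth++; // reached only because the second lock did not deadlock
            }
        }
        return depth;
    }

    // The same idea with ReentrantLock; getHoldCount() exposes the per-thread counter.
    int nestedReentrantLock() {
        lock.lock();
        try {
            lock.lock();
            try {
                return lock.getHoldCount(); // this thread holds the lock twice
            } finally {
                lock.unlock();
            }
        } finally {
            lock.unlock();
        }
    }

    // After both unlocks the counter is back to zero and the lock is free again.
    boolean isFreeAfterwards() {
        return !lock.isLocked();
    }

    public static void main(String[] args) {
        ReentrancyDemo d = new ReentrancyDemo();
        System.out.println(d.nestedSynchronized());  // 2
        System.out.println(d.nestedReentrantLock()); // 2
        System.out.println(d.isFreeAfterwards());    // true
    }
}
```

The key design point is that a reentrant lock remembers its owner thread and keeps a hold count: re-locking by the owner just increments the count, and the lock is only really released when the count drops back to zero.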

The commonly used synchronized lock

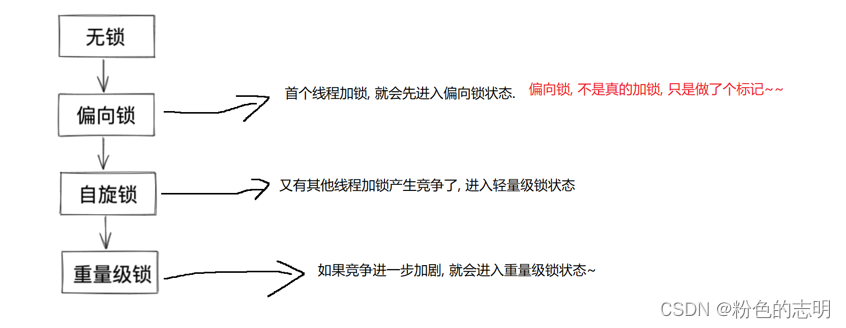

1: It is both an optimistic lock and a pessimistic lock (it adapts according to how intense the lock contention is)

2: It is not a read-write lock, but an ordinary mutex

3: It is both a heavyweight lock and a lightweight lock (again adapting to the intensity of contention)

4: Its lightweight lock is partly implemented with spinning, and its heavyweight lock is implemented with a suspend-wait lock

5: It is an unfair lock

6: It is a reentrant lock

CAS

What is CAS:

CAS stands for compare-and-swap. A CAS operation involves the following steps.

Suppose the original value in memory is V, the expected old value is A, and the new value to write is B:

- Compare A with V. (Compare)

- If they are equal, write B into V. (Swap)

- Return whether the operation succeeded.

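When multiple threads perform CAS on the same resource at the same time, only one of them succeeds. The others are not blocked; they simply receive a result saying the operation failed. The whole compare-then-write sequence is carried out as a single atomic CPU instruction, so no other thread can slip in between the comparison and the write.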
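In Java, CAS is exposed through the atomic classes. The sketch below uses AtomicInteger.compareAndSet(expected, newValue), which atomically writes the new value only if the current value equals the expected one and returns whether it did. The class name is hypothetical; the variable names mirror V, A, and B from the description above.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Minimal sketch of the CAS contract via AtomicInteger.compareAndSet(expected, newValue):
// atomically write newValue if the current value equals expected, and report success.
class CasBasics {
    static String run() {
        AtomicInteger v = new AtomicInteger(10);      // V = 10

        boolean first = v.compareAndSet(10, 20);      // A = 10 equals V, so B = 20 is written
        boolean second = v.compareAndSet(10, 30);     // A = 10 no longer equals V (20): no write

        return first + " " + second + " " + v.get();
    }

    public static void main(String[] args) {
        System.out.println(run()); // true false 20
    }
}
```

Note that a failed CAS does not throw or block; the caller just gets false back and decides what to do, which is exactly the optimistic-locking behaviour described earlier.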

CAS can be regarded as an optimistic lock (or, put differently, CAS is one way to implement optimistic locking). Its biggest significance is that it offers a new approach to writing thread-safe code.

So what can CAS do, and how does it help us solve thread-safety problems?

Atomic classes can be implemented with CAS

(The Java standard library provides a set of atomic classes that wrap types such as int, long, and int arrays. They are modified through CAS instead of locks, and are thread-safe.)

```java
import java.util.concurrent.atomic.AtomicInteger;

public class Demo27 {
    public static void main(String[] args) throws InterruptedException {
        AtomicInteger num = new AtomicInteger(0);

        Thread t1 = new Thread(() -> {
            for (int i = 0; i < 50000; i++) {
                // This method is equivalent to num++
                num.getAndIncrement();
            }
        });

        Thread t2 = new Thread(() -> {
            for (int i = 0; i < 50000; i++) {
                // This method is equivalent to num++
                num.getAndIncrement();
            }
        });

        // Other atomic operations:
        // ++num
        // num.incrementAndGet();
        // --num
        // num.decrementAndGet();
        // num--
        // num.getAndDecrement();
        // num += 10
        // num.getAndAdd(10);

        t1.start();
        t2.start();
        t1.join();
        t2.join();

        // Use get() to read the value stored inside the atomic class; prints 100000
        System.out.println(num.get());
    }
}
```

This code has no thread-safety problem. Because its ++ is implemented with CAS, it is thread-safe and usually more efficient than using synchronized.

synchronized involves lock contention, so the two threads may have to wait for each other.

CAS does not make threads block and wait.

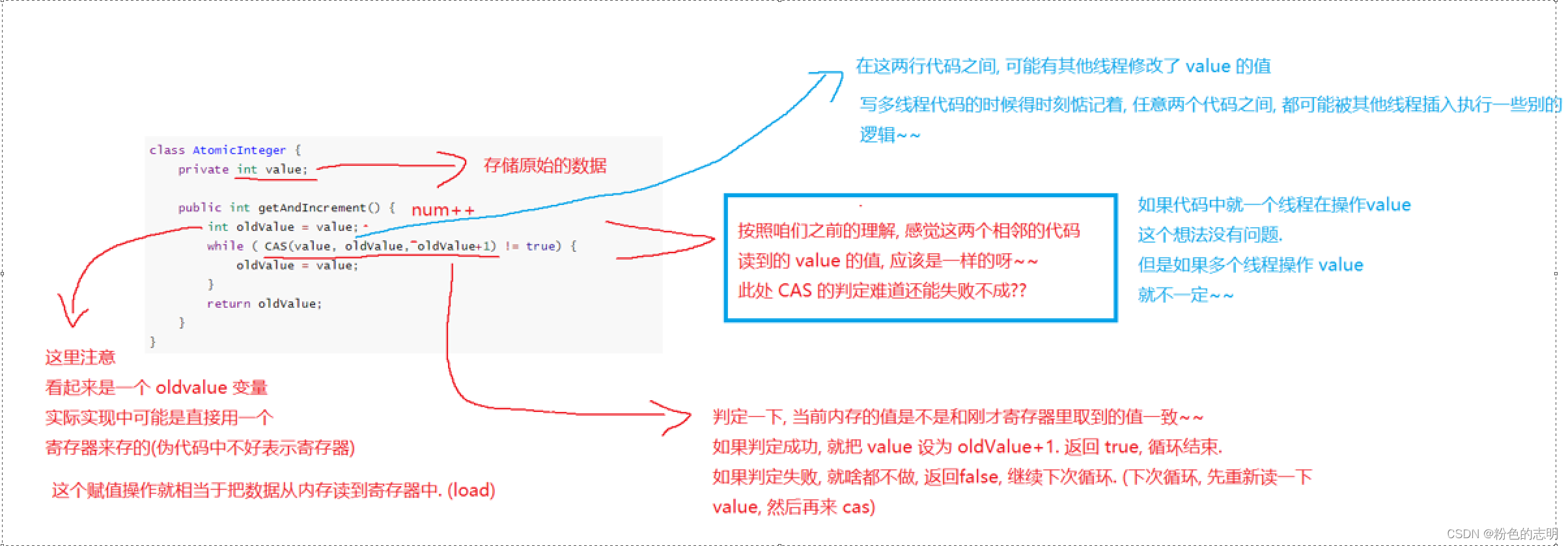

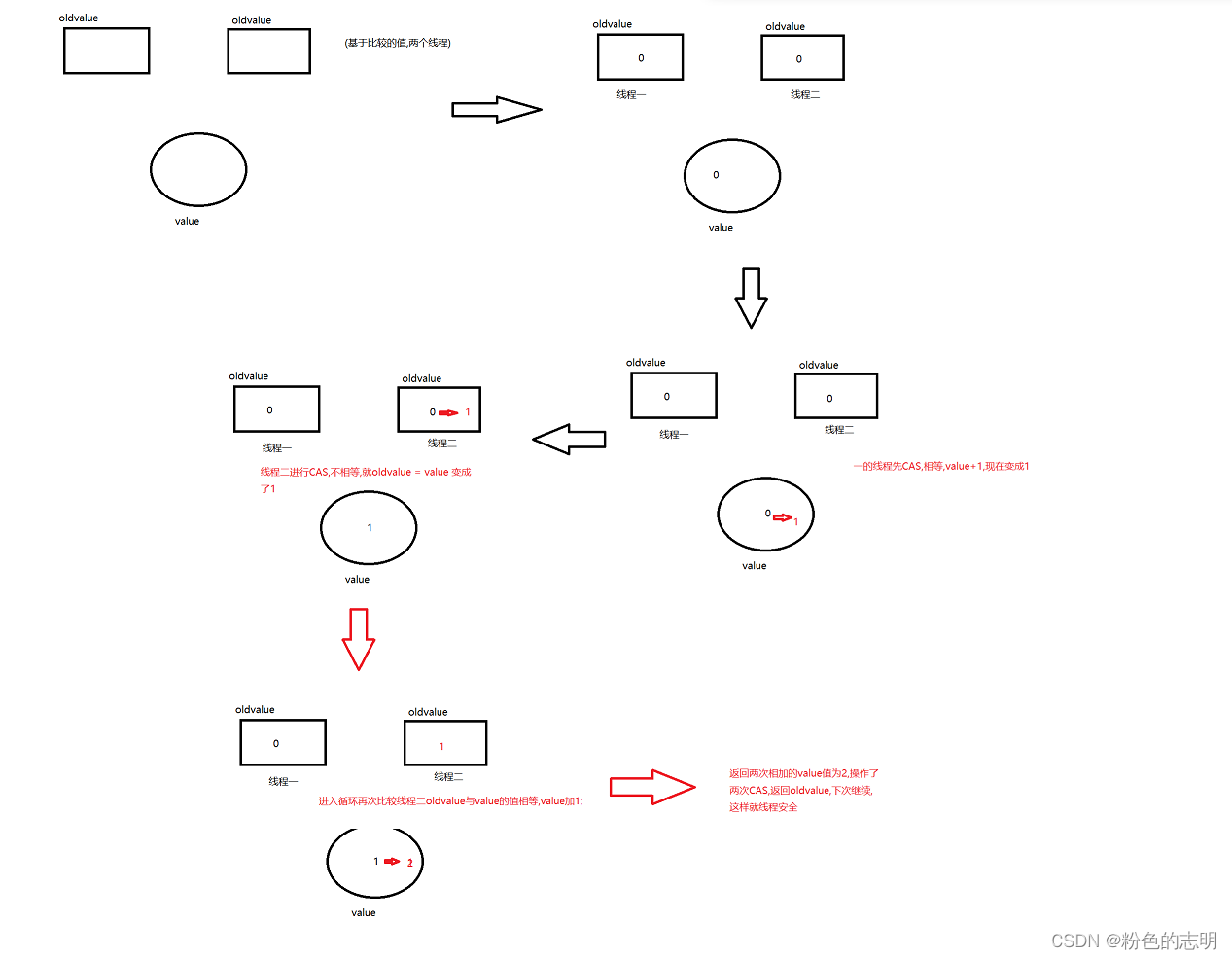

Why is the ++ above thread-safe?

It comes down to CAS.

Key point: the whole piece of code implements ++. CAS carries out the three actions of comparing, adding 1, and swapping. If the comparison fails, no swap happens; the code rereads the current value and tries again.

Pseudocode:

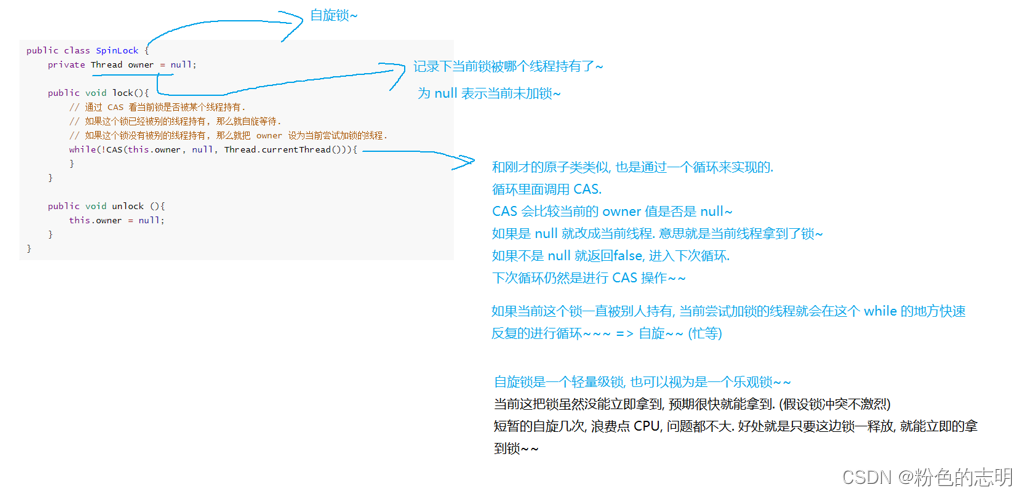
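The same retry loop can be written as runnable Java. This is a sketch, not the JDK's actual source: the class MyAtomicInteger is hypothetical, and it uses AtomicInteger.compareAndSet as its CAS primitive so that the loop itself is visible.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical wrapper, for illustration only: it rebuilds getAndIncrement()
// on top of a CAS primitive (AtomicInteger.compareAndSet) to show the retry loop.
class MyAtomicInteger {
    private final AtomicInteger value = new AtomicInteger(0);

    int getAndIncrement() {
        int oldValue = value.get();                         // 1. read the current value
        while (!value.compareAndSet(oldValue, oldValue + 1)) {
            // 2. CAS failed: another thread changed the value after we read it.
            //    Nothing was written, so reread and try again.
            oldValue = value.get();
        }
        return oldValue;                                    // 3. CAS succeeded; return the old value
    }

    int get() {
        return value.get();
    }

    // Same experiment as Demo27: two threads, perThread increments each.
    static int countWithTwoThreads(int perThread) {
        MyAtomicInteger num = new MyAtomicInteger();
        Runnable task = () -> {
            for (int i = 0; i < perThread; i++) {
                num.getAndIncrement();
            }
        };
        Thread t1 = new Thread(task);
        Thread t2 = new Thread(task);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new RuntimeException(e);
        }
        return num.get();
    }

    public static void main(String[] args) {
        System.out.println(countWithTwoThreads(50_000)); // 100000
    }
}
```

No increment is lost: when two threads read the same old value, only one CAS can succeed, and the loser rereads the updated value before trying again.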

A spin lock can be implemented with CAS

Pseudocode:
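The idea can be sketched as runnable Java. This is an illustration under stated assumptions, not the original article's code: the class name SpinLock is hypothetical, it uses AtomicReference&lt;Thread&gt;.compareAndSet as the CAS step (null means "unlocked"), and Thread.onSpinWait requires Java 9 or later.

```java
import java.util.concurrent.atomic.AtomicReference;

// Minimal spin lock sketch built on CAS. `owner` holds the thread that owns the lock,
// or null when it is free. This sketch is neither reentrant nor fair.
class SpinLock {
    private final AtomicReference<Thread> owner = new AtomicReference<>(null);

    void lock() {
        Thread current = Thread.currentThread();
        // Try to swap null -> current. While another thread holds the lock the CAS
        // fails, and we loop ("spin") instead of being suspended by the OS.
        while (!owner.compareAndSet(null, current)) {
            Thread.onSpinWait(); // busy-wait hint to the runtime (Java 9+)
        }
    }

    void unlock() {
        // Only the owner may release: swap current -> null.
        if (!owner.compareAndSet(Thread.currentThread(), null)) {
            throw new IllegalMonitorStateException("current thread does not own this lock");
        }
    }

    // Two threads increment a plain int, protected only by the spin lock.
    static int countWithTwoThreads(int perThread) {
        SpinLock spin = new SpinLock();
        int[] counter = {0};
        Runnable task = () -> {
            for (int i = 0; i < perThread; i++) {
                spin.lock();
                try {
                    counter[0]++;
                } finally {
                    spin.unlock();
                }
            }
        };
        Thread t1 = new Thread(task);
        Thread t2 = new Thread(task);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new RuntimeException(e);
        }
        return counter[0];
    }

    public static void main(String[] args) {
        System.out.println(countWithTwoThreads(50_000)); // 100000
    }
}
```

Because it is not reentrant, a thread that calls lock() twice on this SpinLock would spin forever, which is exactly the "locking yourself up" case from earlier.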

The ABA problem in CAS

What is the ABA problem?

Suppose there are two threads, t1 and t2, and a shared variable num whose initial value is A.

Thread t1 wants to use CAS to change num to Z. To do so, it must:

- read the value of num and record it in the variable oldNum;
- use CAS to check whether num is still A, and if so, change it to Z.

However, between these two steps of t1, thread t2 may change num from A to B and then from B back to A. t1's CAS expects num to be unchanged, but num has in fact been modified by t2; it has merely been changed back to A. Should t1 still update num to Z at this point?

Here t1 cannot tell whether the variable has been A all along or has gone through a round of changes.

Bugs caused by the ABA problem

In most cases, a back-and-forth change like t2's has no effect on whether t1 should modify num. However, some special cases cannot be ruled out.

Suppose Funny Guy has a balance of 100 and wants to withdraw 50 from an ATM. The ATM creates two threads that perform the -50 operation concurrently.

We expect one thread's -50 to succeed and the other's to fail.

If CAS is used to perform this deduction, problems can arise.

The normal process:

- The balance is 100. Thread 1 reads the current balance, 100, and expects to update it to 50; thread 2 also reads 100 and expects to update it to 50.

- Thread 1 performs the deduction successfully and the balance becomes 50. Thread 2 is blocked, waiting.

- Thread 2 gets its turn and finds that the current balance is 50, which differs from the 100 it read earlier, so its operation fails.

The abnormal process:

- The balance is 100. Thread 1 reads the current balance, 100, and expects to update it to 50; thread 2 also reads 100 and expects to update it to 50.

- Thread 1 performs the deduction successfully and the balance becomes 50. Thread 2 is blocked, waiting.

- Before thread 2 runs, Funny Guy's friend happens to transfer 50 to him, and the balance goes back to 100!!

- Thread 2 gets its turn and finds that the current balance is 100, the same as the 100 it read earlier, so it performs the deduction again.

The deduction has now been performed twice!!! This is all the work of the ABA problem.
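The abnormal process can be replayed deterministically in Java. In this sketch (class and variable names are hypothetical) the two ATM threads are simulated as steps run in a fixed order on one thread, so the bad interleaving happens on every run instead of depending on timing.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Deterministic, single-threaded replay of the "abnormal process" above. The two ATM
// threads are simulated as steps executed in a fixed order, so the bad interleaving
// happens every run. Class and variable names are hypothetical.
class AbaWithdrawal {
    static String replay() {
        AtomicInteger balance = new AtomicInteger(100);

        // Both withdrawal "threads" read the balance (100) and plan to write 50.
        int seenByThread1 = balance.get();
        int seenByThread2 = balance.get();

        // Thread 1's CAS: 100 == 100, so the balance becomes 50.
        balance.compareAndSet(seenByThread1, seenByThread1 - 50);

        // Before thread 2 runs, the friend's transfer arrives: 50 -> 100.
        balance.addAndGet(50);

        // Thread 2's CAS compares against the 100 it read earlier. The balance is 100
        // again, so the CAS succeeds and 50 is deducted a second time.
        boolean secondDeduction = balance.compareAndSet(seenByThread2, seenByThread2 - 50);

        // The correct result would be 100 (100 - 50 + 50); the replay ends at 50.
        return secondDeduction + " " + balance.get();
    }

    public static void main(String[] args) {
        System.out.println(replay()); // true 50
    }
}
```

The CAS itself works as specified; the problem is that comparing values alone cannot distinguish "never changed" from "changed and changed back".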

Solution

Introduce a version number for the value being modified. When CAS compares the current value with the old value, it also checks whether the version number is the expected one.

- When reading the old value, the CAS operation also reads the version number.

- When actually modifying the data: if the current version number equals the version number that was read, modify the data and increment the version number by 1.

- If the current version number is greater than the version number that was read, the operation fails (the data is considered to have been modified).

Apply this to the withdrawal example above.

Suppose Funny Guy has a balance of 100 and wants to withdraw 50 from an ATM. The ATM creates two threads that perform the -50 operation concurrently.

We expect one thread's -50 to succeed and the other's to fail.

To solve the ABA problem, give the balance a version number, initially set to 1.

- The balance is 100. Thread 1 reads balance 100, version 1, and expects to update it to 50; thread 2 reads balance 100, version 1, and expects to update it to 50.

- Thread 1 performs the deduction successfully: the balance becomes 50 and the version becomes 2. Thread 2 is blocked, waiting.

- Before thread 2 runs, Funny Guy's friend happens to transfer 50 to him: the balance becomes 100 and the version becomes 3.

- Thread 2 gets its turn and finds that the current balance is 100, the same as the 100 it read earlier, but the current version is 3 while the version it read was 1. Since the version it read is older than the current one, the operation is considered to have failed.
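The JDK ships a version-stamped CAS as java.util.concurrent.atomic.AtomicStampedReference. The sketch below (class name hypothetical) replays the same withdrawal with a stamp starting at 1, as in the text, and thread 2's CAS now fails even though the value is 100 again.

```java
import java.util.concurrent.atomic.AtomicStampedReference;

// The same replay, but the balance carries a version number ("stamp") using the JDK's
// AtomicStampedReference. As in the text, the version starts at 1.
class StampedWithdrawal {
    static String replay() {
        AtomicStampedReference<Integer> balance = new AtomicStampedReference<>(100, 1);
        int[] stamp = new int[1];

        // Each thread reads the value and its version together.
        Integer seen1 = balance.get(stamp);
        int version1 = stamp[0];
        Integer seen2 = balance.get(stamp);
        int version2 = stamp[0];

        // Thread 1: value and version (1) both match, so write 50 with version 2.
        balance.compareAndSet(seen1, seen1 - 50, version1, version1 + 1);

        // The friend's transfer: 50 -> 100, version 2 -> 3.
        Integer current = balance.get(stamp);
        balance.compareAndSet(current, current + 50, stamp[0], stamp[0] + 1);

        // Thread 2: the value is 100 again, but the version is 3, not the 1 it read,
        // so the CAS fails and no second deduction happens.
        boolean secondDeduction = balance.compareAndSet(seen2, seen2 - 50, version2, version2 + 1);

        return secondDeduction + " " + balance.getReference() + " " + balance.getStamp();
    }

    public static void main(String[] args) {
        System.out.println(replay()); // false 100 3
    }
}
```

One caveat with this sketch: AtomicStampedReference compares references with ==, not equals. With boxed Integer values that only behaves like a value comparison inside the Integer cache (-128 to 127 by default), which covers 50 and 100 here; for larger amounts the reference check would fail on its own, so real code should not rely on boxed numbers matching by value.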

Optimization mechanisms in synchronized

Lock inflation / lock escalation

This reflects synchronized's ability to adapt to how intense the lock contention is.

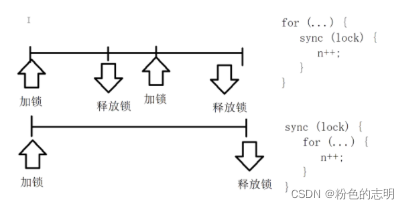

Lock coarsening

"Fine" and "coarse" here refer to the granularity of the lock, that is, how much code the lock covers. The more code inside the locked region, the coarser the granularity; the less code, the finer the granularity.

With finer granularity, multiple threads can run with more concurrency. With coarser granularity, less overhead is spent on repeatedly locking and unlocking.

The compiler can optimize this: if it determines that locking somewhere is too fine-grained, it coarsens it. This optimization may be triggered when the same lock is released and reacquired with only a short interval (little code) in between.
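The sketch below only illustrates the shape of code before and after coarsening; the JIT makes this decision on its own and the effect is not observable from Java code. The class and field names are hypothetical.

```java
// Illustration only: whether the JIT coarsens locks is its own decision and is not
// observable from Java code. The two methods show the shape of code before and after
// coarsening; class and field names are hypothetical.
class CoarseningDemo {
    private final Object lock = new Object();
    private int a, b, c;

    // Fine-grained: three back-to-back lock/unlock pairs on the same object with almost
    // no code in between. This is the pattern a compiler may merge into one region.
    void fineGrained() {
        synchronized (lock) { a++; }
        synchronized (lock) { b++; }
        synchronized (lock) { c++; }
    }

    // Coarsened by hand: one lock/unlock pair covering the same work. Less locking
    // overhead, but other threads wait for the whole region instead of each piece.
    void coarseGrained() {
        synchronized (lock) {
            a++;
            b++;
            c++;
        }
    }

    int sum() {
        synchronized (lock) {
            return a + b + c;
        }
    }

    public static void main(String[] args) {
        CoarseningDemo d = new CoarseningDemo();
        d.fineGrained();
        d.coarseGrained();
        System.out.println(d.sum()); // 6
    }
}
```

Both methods have the same effect; coarsening only trades a little concurrency for fewer lock and unlock operations, which is the trade-off described above.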

Lock elimination

Some code obviously does not need a lock. If you add one anyway and the compiler determines that the lock is unnecessary, it simply removes it.

Sometimes the locking is not obvious, and an unnecessary lock gets added without your noticing.

StringBuffer, Vector, and similar standard library classes lock internally. If such a class is used within a single thread, those locks are taken by a single thread only and protect nothing, so the compiler can eliminate them.
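A typical candidate looks like the sketch below: a StringBuffer that is created inside a method and never escapes it. Each append call is synchronized, but no other thread can ever see this buffer, so a JIT that performs escape analysis (as HotSpot does) may drop those locks. This is an illustration; whether elimination actually happens is up to the JIT and cannot be observed from the code. The class name is hypothetical.

```java
// Illustration only: whether locks are actually eliminated is decided by the JIT
// (typically via escape analysis) and cannot be observed from this code.
class LockEliminationDemo {
    // `sb` is created inside the method and never leaves it, so no other thread can
    // ever see it. Every append() on StringBuffer is synchronized, but here those
    // locks protect nothing, which makes them candidates for elimination.
    static String concat(String a, String b, String c) {
        StringBuffer sb = new StringBuffer();
        sb.append(a);
        sb.append(b);
        sb.append(c);
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(concat("lock", "-", "free")); // lock-free
    }
}
```

When the buffer is only ever used by one thread, the simpler choice is StringBuilder, the unsynchronized counterpart of StringBuffer, so there is no lock to eliminate in the first place.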