1. Why do you need an operating system?

Modern computer systems are mainly composed of one or more processors, main memory, hard disks, keyboards, mice, monitors, printers, network interfaces and other input and output devices.

Generally speaking, a modern computer system is a complex system.

First: if every application programmer had to master all the details of the system, writing code would be practically impossible (development efficiency would suffer severely: mastering all these details could take 10,000 years...).

Second: managing these components and optimizing their use is a very challenging job, so computers are equipped with a layer of software (system software) called the operating system.

Its task is to provide a better, simpler and clearer computer model for user programs and to manage all the devices just mentioned.

Summary:

Programmers cannot understand every detail of how the hardware operates, and managing the hardware and optimizing its use is very tedious work. This tedious work is done by the operating system. With it, programmers are freed from these tasks and only need to think about writing their own application software. Application software uses the functions provided by the operating system and thereby uses the hardware indirectly.

2. What is an operating system

In short, an operating system is a control program that coordinates, manages and controls computer hardware and software resources.

(1) The location of the operating system. As shown in the figure

PS: The operating system sits between the computer hardware and the application software, and is itself a piece of software. It consists of two parts: the kernel (running in kernel mode and managing hardware resources) and the system call interface (invoked from user mode, giving applications written by application programmers a way to request services). Therefore, it is inaccurate to simply say that the operating system runs in kernel mode.

In detail, the operating system has two roles:

1. Hide the ugly hardware interfaces and give application programmers a better, simpler and clearer model (the system call interface) for using hardware resources. With these interfaces, application programmers no longer need to consider the details of operating the hardware and can concentrate on developing their own applications. For example, the operating system provides the abstraction of a file: operating on a file means operating on the disk. With files, we no longer need to think about disk read and write control (such as spinning the disk or moving the head to read and write data).

2. Make applications' competing requests for hardware resources orderly. Many applications actually share one set of computer hardware. For example, three applications a, b and c may all ask for the printer at the same time. If a wins the printer first, a prints; then b might win it, or c. Without coordination this leads to disorder: the printer may print a piece of a, then a piece of c, and so on. One of the functions of the operating system is to turn this disorder into order.
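The printer example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Printer` class and `run_jobs` function are hypothetical names, the three threads stand in for programs a, b and c, and a `threading.Lock` plays the part of the operating system deciding who owns the printer at any moment.

```python
import threading


class Printer:
    """A toy shared printer; the lock stands in for the operating system."""

    def __init__(self):
        self._lock = threading.Lock()
        self.output = []  # pages in the order they were printed

    def print_job(self, owner, pages):
        # Only one program may hold the printer at a time, so the
        # pages of one job are never mixed with those of another.
        with self._lock:
            for page in range(1, pages + 1):
                self.output.append(f"{owner}-page{page}")


def run_jobs(owners=("a", "b", "c"), pages=3):
    """Start one thread per program and return the printed pages."""
    printer = Printer()
    threads = [
        threading.Thread(target=printer.print_job, args=(owner, pages))
        for owner in owners
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return printer.output


if __name__ == "__main__":
    for line in run_jobs():
        print(line)
```

Which program prints first is still undetermined, but each job now comes out as one uninterrupted run of pages.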

3. The difference between operating system and ordinary software

1. The main difference is:

If you don't want to use Baofengyingyin, you can switch to Thunder Player or simply write a player yourself. But you cannot write your own replacement for a program that is part of the operating system (such as the clock interrupt handler): the operating system is protected by hardware and cannot be modified by users.

2.

The difference between an operating system and a user program does not lie only in where the two sit. In particular, an operating system is a large, complex, long-lived piece of software:

- Large: The source code of Linux or Windows is on the order of five million lines. Printed as books with 50 lines per page and 1000 pages per volume, 5 million lines would fill 100 volumes and take up an entire bookshelf. And this is only the kernel. User programs, such as the GUI, libraries and basic applications (such as Windows Explorer), can easily reach 10 or 20 times this amount.

- Longevity: Operating systems are hard to write. With so much code, once an operating system is finished its owner will not lightly throw it away and write another one; instead, the existing system is improved over time. (Basically, Windows 95/98/Me can be seen as one operating system and Windows NT/2000/XP/Vista as another, and to users they look very similar. UNIX and its variants and clones have likewise evolved over many years: System V, Solaris and FreeBSD are all derived from the original UNIX, whereas Linux, although closely modeled on UNIX and highly compatible with it, has a completely new code base.)
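The bookshelf estimate in the "Large" point can be checked with a little arithmetic. The sketch below assumes 50 lines per page and 1000 pages per volume, which is the reading under which the figure of 100 volumes works out; `volumes_needed` is a hypothetical helper name.

```python
def volumes_needed(total_lines, lines_per_page=50, pages_per_volume=1000):
    """Return how many printed volumes a code base of total_lines would fill."""
    lines_per_volume = lines_per_page * pages_per_volume  # 50,000 by default
    # Ceiling division: a partly filled last volume still counts as a volume.
    return -(-total_lines // lines_per_volume)


if __name__ == "__main__":
    print(volumes_needed(5_000_000))
```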

2. History of operating system development

The first generation of computers (1940-1955): vacuum tubes and punch cards

(1) Background:

There is no concept of an operating system.

All programming is direct manipulation of the hardware.

The programmer signs up for a block of time on the timetable on the wall, then takes his plugboard to the machine room and plugs it into the computer. For those few hours he has exclusive use of the entire computer, and everyone else has to wait (and one of the more than 20,000 vacuum tubes often burned out).

Later, punched cards appeared: programs could be written on cards and read into the machine, with no need for a plugboard.

(4) Advantages:

Programmers have exclusive use of the whole machine during their reserved time and can debug their own programs on the spot (bugs can be dealt with immediately).

(5) Disadvantages:

Computer resources are wasted, since only one person uses the machine at a time.

Note: only one program is in memory at any time, and it is the one the CPU calls and executes. For example, 10 programs are executed serially, one after another.

Second Generation Computers (1955~1965): Transistors and Batch Systems

(1) Background:

Since computers were very expensive at the time, people naturally looked for ways to reduce wasted time. The usual approach was a batch system.

(2) Features:

Designers, production staff, operators, programmers and maintenance staff have a clear division of labor. The computer is locked in a special air-conditioned room and run by professional operators; this is the 'mainframe'.

The concept of an operating system appears.

Programming languages appear: a program is written on paper in FORTRAN or assembly language and punched onto cards, and the card deck is taken to the input room and handed to the operator. The programmer then drinks coffee and waits for the output.

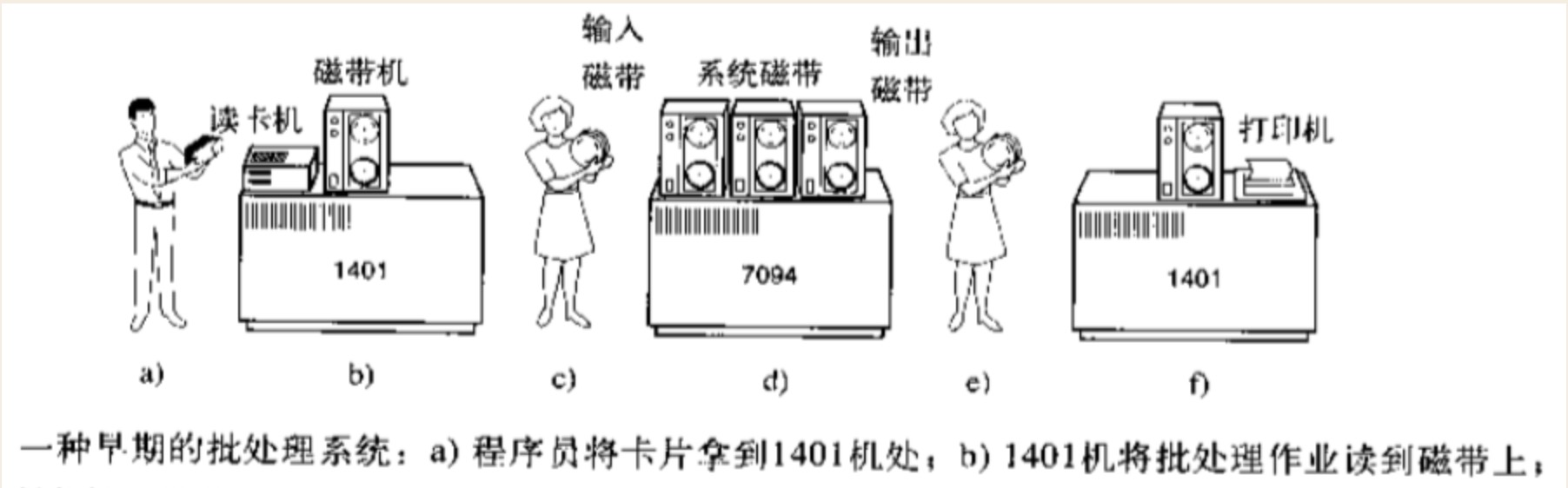

(3) How the second generation solves the problems/disadvantages of the first generation:

1. Collect the input of many people into one large batch of input.

2. Then compute the jobs one after another (this is still a problem, but the second generation did not solve it).

3. Collect the output of many people into one large batch of output.

(4) Advantages:

Batch processing saves time

(5) Disadvantages:

1. The whole process requires human intervention, with people carrying tapes back and forth (the two little people in the middle).

2. Computation is still sequential, that is, serial.

3. The programmer, who used to have the computer to himself for a period of time, now has to submit his program as part of a batch of jobs. Getting the result and debugging again has to wait until the other programs in the same batch finish (this greatly hurts development efficiency, since programs cannot be debugged promptly).

The third generation of computers (1965~1980): integrated circuit chips and multiprogramming

In the early 1960s, most computer manufacturers had two completely incompatible product lines.

One was word-oriented: large scientific computers, such as the IBM 7094 (see above), mainly used for scientific and engineering computing.

The other was character-oriented: commercial computers, such as the IBM 1401 (pictured above), mainly used by banks and insurance companies for tape filing and printing services.

Developing and maintaining two completely different product lines was expensive, and different users used computers for different purposes. IBM tried to satisfy both scientific and commercial computing by introducing the System/360 series. The low-end 360 models were comparable to the 1401, and the high-end models were much more powerful than the 7094; different performance levels sold at different prices.

The 360 was the first mainstream line to use (small-scale) integrated circuits, and compared with the transistor-based second generation its price/performance ratio was greatly improved. Descendants of these machines are still used in large computer centers; they are the predecessors of today's servers, which handle no less than a thousand requests per second.

(2) How disadvantage 1 of the second-generation computer was solved:

Once the cards were taken to the machine room, jobs could be read quickly from the cards onto the disk. Whenever a running job finished, the operating system could load another job from the disk into the vacated memory area and run it. This technique is called Simultaneous Peripheral Operation On Line (SPOOLing), and it is also used for output. With this technique the IBM 1401 is no longer needed, and tapes no longer have to be carried around (the two little people in the middle are no longer needed).
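The spooling idea can be illustrated with a small Python sketch. It is a simplification under stated assumptions: an in-memory `queue.Queue` stands in for the disk, a background thread stands in for the device that consumes jobs, and `spool_and_run` is a hypothetical name.

```python
import queue
import threading


def spool_and_run(jobs):
    """Queue jobs in a spool and let one worker consume them in order."""
    spool = queue.Queue()  # stands in for the spool area on disk
    finished = []

    def worker():
        while True:
            job = spool.get()
            if job is None:  # sentinel: nothing more to process
                break
            finished.append(job)  # "run" the job

    consumer = threading.Thread(target=worker)
    consumer.start()
    for job in jobs:
        spool.put(job)  # submitting a job returns immediately
    spool.put(None)
    consumer.join()
    return finished


if __name__ == "__main__":
    print(spool_and_run(["job1", "job2", "job3"]))
```

The submitter never waits for the slow device; it only waits for the spool, which is the point of the technique.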

(3) How disadvantage 2 of the second-generation computer was solved:

The operating systems of third-generation computers widely used a key technique that second-generation operating systems lacked: multi-channel technology (multiprogramming).

While executing a task, if the CPU needs to access the hard disk, it issues a disk instruction. Once the instruction is issued, the mechanical arm of the disk moves to read the data into memory, and during this period the CPU has to wait. The wait may be very short in human terms, but for the CPU it is very long, long enough to do many other tasks. If we let the CPU switch to other tasks during this time, the CPU is fully utilized. This is the technical background of multi-channel technology.

(4) Multi-channel technology:

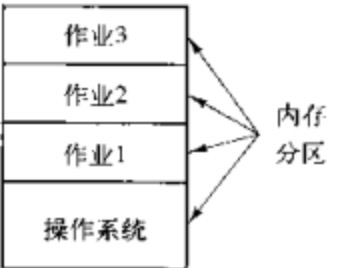

The "multi-channel" in multi-channel technology (multiprogramming) refers to multiple programs. Multi-channel technology solves the problem of orderly scheduling when multiple programs compete for or share the same resource (such as the CPU). The solution is multiplexing, which is divided into multiplexing in time and multiplexing in space.

Spatial multiplexing: divide memory into several parts and place one program in each part, so that multiple programs are in memory at the same time.

Time multiplexing: when one program is waiting for I/O, another program can use the CPU. If enough jobs can be held in memory at the same time, CPU utilization can approach 100%, much like the overall time-planning method taught in primary school mathematics. (Once the operating system adopts multi-channel technology, it controls the switching between processes, or in other words processes compete for the right to run on the CPU. A switch happens not only when a process encounters I/O: a process that has occupied the CPU for too long is also switched out, that is, the operating system takes the CPU away from it.)
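The claim that CPU utilization approaches 100% with enough jobs in memory can be made concrete with a simplified probabilistic model often used in operating systems textbooks. It is an assumption added here, not something stated in the text above: each job spends a fraction p of its time waiting for I/O, independently of the others, so the CPU is idle only when all n jobs are waiting at once, giving a utilization of 1 - p^n. The function name `cpu_utilization` is hypothetical.

```python
def cpu_utilization(io_wait_fraction, jobs_in_memory):
    """Estimate CPU utilization as 1 - p**n under the independence assumption."""
    if not 0.0 <= io_wait_fraction <= 1.0:
        raise ValueError("io_wait_fraction must be between 0 and 1")
    if jobs_in_memory < 0:
        raise ValueError("jobs_in_memory must not be negative")
    # The CPU idles only when every job in memory is waiting for I/O.
    return 1.0 - io_wait_fraction ** jobs_in_memory


if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16):
        print(n, round(cpu_utilization(0.8, n), 3))
```

Real jobs are not independent, so this is only a rough guide, but it shows why holding more jobs in memory helps.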

(4) The biggest problem of spatial multiplexing is:

The memory of different programs must be separated from each other, and this separation has to be implemented at the hardware level and controlled by the operating system. If memory is not separated, one program can access the memory of another.

The first thing lost is security. For example, if your qq program can access the operating system's memory, then qq can obtain all of the operating system's privileges.

The second thing lost is stability. When a program crashes, it may also take the memory of other programs down with it. For example, if the operating system's memory is reclaimed, the operating system crashes.
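One classic way to separate programs in memory is a pair of base and limit values checked on every access. The text above does not say which hardware mechanism is meant, so the sketch below is only an assumed illustration: `Partition` and `MemoryProtectionError` are hypothetical names, and a `bytearray` stands in for physical memory.

```python
class MemoryProtectionError(Exception):
    """Raised when a program touches memory outside its own partition."""


class Partition:
    """A program's view of memory, bounded by a base and a limit."""

    def __init__(self, memory, base, limit):
        self.memory = memory  # shared "physical" memory (a bytearray)
        self.base = base      # first physical address of this partition
        self.limit = limit    # size of the partition in bytes

    def _translate(self, offset):
        # The check that real hardware would perform on every access.
        if not 0 <= offset < self.limit:
            raise MemoryProtectionError(f"offset {offset} outside partition")
        return self.base + offset

    def read(self, offset):
        return self.memory[self._translate(offset)]

    def write(self, offset, value):
        self.memory[self._translate(offset)] = value


if __name__ == "__main__":
    ram = bytearray(64)
    os_part = Partition(ram, base=0, limit=32)
    qq_part = Partition(ram, base=32, limit=32)
    qq_part.write(0, 7)  # fine: inside qq's own partition
    try:
        qq_part.write(40, 1)  # would land outside qq's partition
    except MemoryProtectionError as err:
        print("blocked:", err)
```

Because every address is checked and relocated, the qq partition here has no way to name an address inside the operating system's partition.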

(5) The operating system of the third-generation computer is still a batch system

Many programmers missed the first generation, when they had the computer to themselves and could debug their programs on the fly. To give programmers fast responses again, the time-sharing operating system appeared.

(6) How disadvantage 3 of the second-generation computer was solved: the time-sharing operating system

Time-sharing operating system: multiple online terminals + multi-channel technology.

Suppose 20 clients are loaded into memory at the same time; 17 are thinking and 3 are running. The CPU handles the 3 running programs using multi-channel technology. Because clients generally submit short commands that are seldom time-consuming, the computer can provide fast interactive service to many users, all of whom believe they have exclusive use of the machine.

CTSS: MIT successfully developed the Compatible Time Sharing System (CTSS) on a modified 7094. Time-sharing only became popular after third-generation computers widely adopted the necessary protection hardware (memory isolation between programs).

After the success of CTSS, MIT, Bell Labs and General Electric decided to develop MULTICS, which was meant to support hundreds of terminals at the same time (its designers aimed to build one machine that met the computing needs of everyone in the Boston area). It aimed far too high and ultimately failed.

Later, Ken Thompson, a Bell Labs computer scientist who had worked on MULTICS, developed a simple, single-user version of MULTICS, which became the UNIX system. Many other UNIX versions were derived from it. So that programs could run on any version of UNIX, the IEEE proposed a UNIX standard, POSIX (Portable Operating System Interface).

Later, in 1987, a small UNIX clone for educational use, MINIX, appeared. The Finnish student Linus Torvalds wrote Linux based on it.
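How a time-sharing system hands the CPU from one user to the next can be sketched with a round-robin policy. Round-robin is one common choice and is an assumption here, since the text does not name a scheduling algorithm; `round_robin`, the job names and the time units are all hypothetical.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Return the (job, time_run) slices in the order they execute.

    burst_times maps a job name to the CPU time it still needs;
    quantum is the longest slice a job may run before being switched out.
    """
    ready = deque(burst_times.items())
    timeline = []
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)
        timeline.append((name, run))
        if remaining > run:
            # Not finished: go to the back of the queue and wait for a turn.
            ready.append((name, remaining - run))
    return timeline


if __name__ == "__main__":
    for name, run in round_robin({"A": 3, "B": 1, "C": 2}, quantum=2):
        print(name, run)
```

Short commands finish within one or two slices, which is why each user sees a quick response even though the CPU is shared.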

Fourth generation computer (1980~present): personal computer

3. Summary

Even with only one CPU available (as on early computers), the ability to support (pseudo) concurrency can be guaranteed: a single CPU is turned into multiple virtual CPUs (multi-channel technology: time multiplexing and space multiplexing, plus hardware-supported isolation). Without the process abstraction, modern computing would not exist.

Necessary theoretical foundation:

The role of an operating system:

1: Hide ugly and complex hardware interfaces and provide good abstract interfaces

2: Manage and schedule processes, and make the competition of multiple processes for hardware orderly

Multi-channel technology:

1. Background: to achieve concurrency on a single core

ps: Current hosts are generally multi-core, and each core uses multi-channel technology. With 4 CPUs, a program running on cpu1 that blocks on I/O waits until the I/O finishes and is then rescheduled. It may be scheduled onto any one of the 4 CPUs, as determined by the operating system's scheduling algorithm.

2. Spatial reuse: For example, there are multiple programs in memory at the same time

3. Time multiplexing: multiplexing the time slice of a cpu

Key point: switch when I/O is encountered, and also switch when a process has occupied the CPU for too long. The core is to save the state of the process before switching away, so that when the CPU switches back it can continue running from where it was cut off last time.
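Saving state before a switch and resuming from the same position can be imitated with Python generators. This is only an analogy under stated assumptions: a generator keeps its local variables and position between `next()` calls, much as a saved process state would, and `task` and `run_interleaved` are hypothetical names.

```python
from collections import deque


def task(name, steps):
    """A toy process: each yield is a point where it can be switched out."""
    for step in range(1, steps + 1):
        yield f"{name}:{step}"  # state (name, step) is kept across the switch


def run_interleaved(tasks):
    """Run the tasks one step at a time, switching after every step."""
    ready = deque(tasks)
    log = []
    while ready:
        current = ready.popleft()
        try:
            log.append(next(current))  # resume exactly where it stopped
            ready.append(current)      # switch out; state stays in the generator
        except StopIteration:
            pass                       # the task has finished
    return log


if __name__ == "__main__":
    print(run_interleaved([task("A", 2), task("B", 3)]))
```

Each task continues counting from its own last step after every switch, which is the behaviour the key point above describes.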