In December 2017, WeChat Mini Programs opened real-time audio and video capabilities to developers, which gave the industry a lot to imagine. Lianmai (co-hosting, where several people join the same live stream with their microphones) became standard for live video during the live streaming boom of 2016, but a good user experience could only be guaranteed in a native app; at that time, Lianmai was not possible in a WeChat Mini Program. Apple had also announced in June 2017 that it would support WebRTC. What kind of chemistry can Lianmai live broadcast technology, WeChat Mini Programs and WebRTC produce together? What do developers need to know and consider when implementing Lianmai live streaming in a WeChat Mini Program or with browser WebRTC?

On Saturday, March 17, 2018, at the Beijing stop of Zego Meetup, a technology salon hosted by Instant Technology (ZEGO), Xian Niu, a senior technical expert and architect at the company, shared the team's thinking and practice on combining Lianmai live broadcast technology with WeChat Mini Programs.

Heavy snow fell in Beijing early that morning, but it did not dampen the participants' enthusiasm for learning and exchange, and the venue was packed. As the saying goes, a timely snow promises a good harvest; 2018 should be a good year for entrepreneurs.

Technical difficulties and solutions of Lianmai live broadcast

Let's first review Lianmai interactive live broadcast technology, starting from its application scenarios.

The first type of application scenario is the most common one in live video: several hosts connected through Lianmai. Since 2016, live video has developed from one-way broadcasts to two-person and three-person Lianmai, and gradually to multi-person Lianmai. Two-person Lianmai means two hosts interacting within one live video session; typical program formats include chats, talk shows, karaoke and duets. In live video it is very common for two or three hosts to join through Lianmai, and sometimes viewers are allowed to join as well. Multi-person Lianmai scenarios include the Werewolf game, multi-person video group chat and group live quizzes. On mobile, the number of users interacting through Lianmai in the same room often reaches a dozen or twenty.

The second type of application scenario is the online claw machine, also called the live claw machine. It is another product form of live video, a combination of live video and the Internet of Things. On top of live video, online claw machine technology adds signaling control, so that users can watch the machine remotely and control its claw. At the same time, the host and the audience can interact through text, and through voice and video Lianmai. This trend emerged at the end of 2017 and brought Lianmai interactive live broadcast technology to scenarios that combine live video with the Internet of Things. More such scenarios are expected to emerge this year.

The third type of application scenario is the live quiz, a craze that emerged in January 2018 and an exploration of quiz shows in live video. Beyond the basic requirements of low latency, smoothness and high definition, this scenario also requires the quiz questions and the video picture to stay synchronized. In addition, the number of users in a Huajiao Live quiz room once exceeded five million, so live quiz technology must support millions of concurrent users. Although the entry threshold was raised for regulatory reasons during the Spring Festival, I believe other new formats will appear. Here are some new formats discussed in the industry: the host can invite guests to answer questions through Lianmai, and users taking part in the broadcast can set up sub-rooms to answer questions together. These formats are technically achievable. In essence, they combine live quiz technology with Lianmai interactive live broadcast technology.

What are the requirements for live video technology in these three application scenarios?

The first is that the latency must be low enough. If the one-way delay cannot be kept below 500 milliseconds, the interactive experience of a video call cannot be guaranteed.

The second is echo cancellation. During a video call between user A and user B, user A's voice is played on user B's device, picked up again by B's microphone and sent back, so user A hears an echo of their own voice after a certain delay. This badly degrades the call experience, so echo cancellation is a must.

The third is smooth playback without stuttering. Why call this out? Because smoothness and ultra-low latency are contradictory requirements. Low latency requires a small jitter buffer, and with a small buffer network jitter shows through easily: playback runs too fast, too slow, or stalls.

Let's take a detailed look at how to meet these three core technical requirements of live video.

1. Ultra-low latency architecture

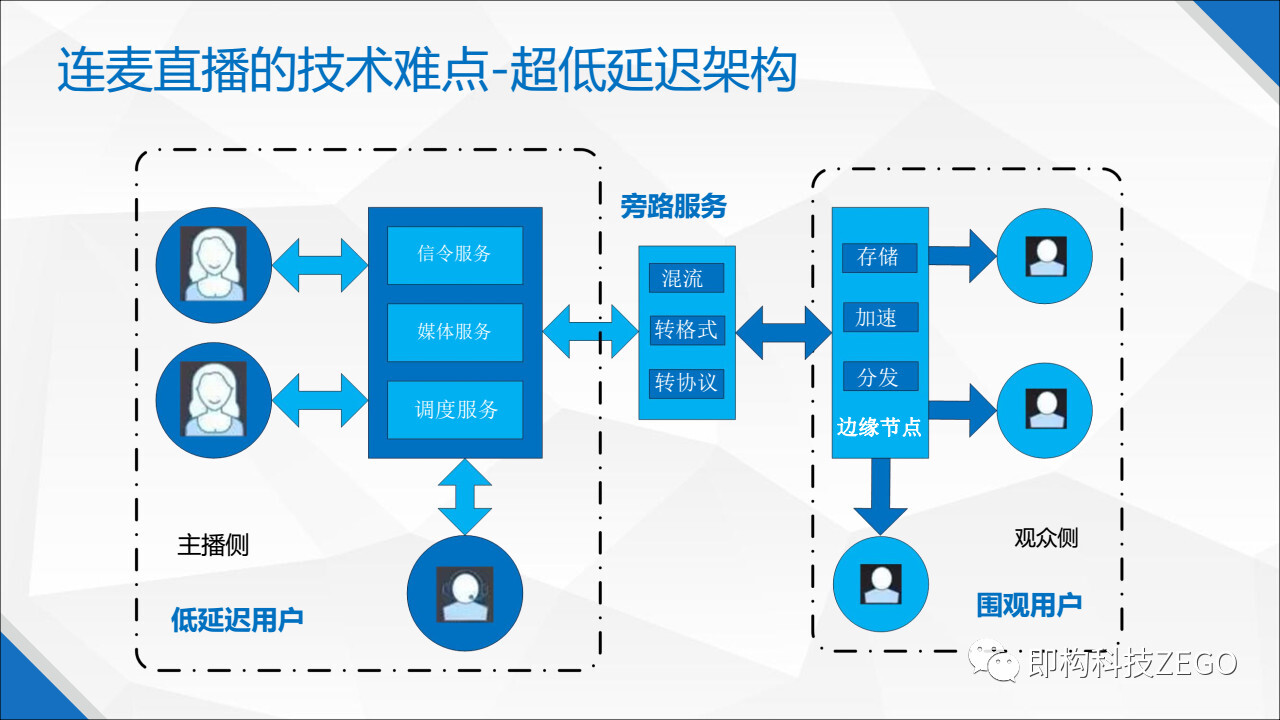

The system architecture of the Lianmai live broadcast solutions on the market generally looks like this. On the left is the low-latency network, which provides Lianmai interactive live broadcast services to users who need low latency, at a high cost. On the right is the content distribution network, which provides live video to the viewing audience; its delay is somewhat higher, but it is cheaper and supports higher concurrency. The two are connected in the middle by a bypass service. The bypass server pulls the audio and video streams from the low-latency real-time network, optionally performs stream mixing, format conversion or protocol conversion, and forwards the result to the content distribution network, which then distributes it to the audience.

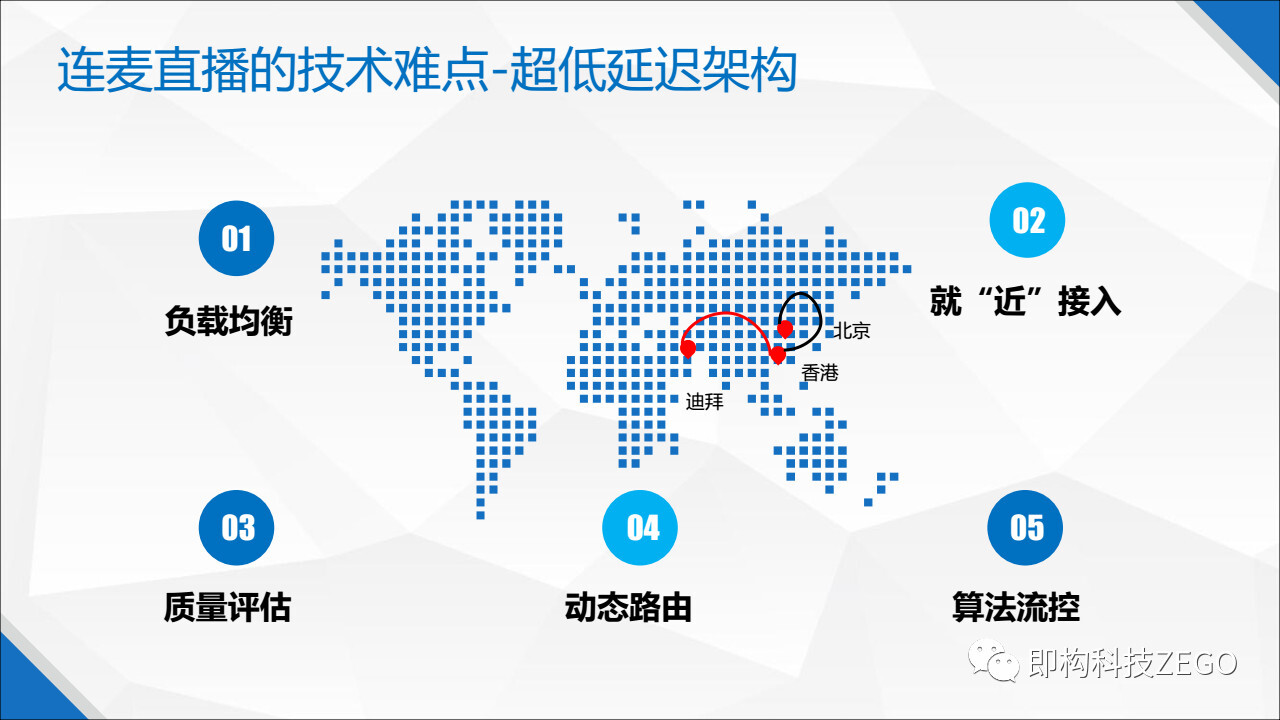

To build an ultra-low latency real-time system architecture, the following points need to be considered:

1. Load balancing - The ultra-low latency architecture must be load balanced, which means that every network node carries a balanced share of users. If the number of users on a node exceeds what it can carry, heavy packet loss is likely, which triggers network congestion, which in turn causes more packet loss and a poor user experience.

2. Nearest access - "Near" on a network is different from near in a straight line. Compare it to a road network: when you are driving, a point that looks close may not actually be close. Two things matter. The first is connectivity: points A and B may look very close, but if there is no direct road from A to B, that is the equivalent of a network that is not connected. The second is congestion: a short road that is jammed is not necessarily the closest. For example, when a user in Dubai and a user in Beijing connect through Lianmai, pushing the stream directly from Dubai to Beijing looks like the shortest route, but the direct path may not work in practice, and relaying through Hong Kong may be "closer" in network terms even though it is a detour.

3. Quality assessment - The static method of quality assessment is after-the-fact evaluation: review past data, analyze the streams that users in one region pushed to another region at different times of day, and work out which path performs better at which time. The results are then configured manually into the real-time transmission network to improve transmission quality.

4. Dynamic routing - The other method of quality assessment is dynamic: quality is evaluated continuously from accumulated data. After running for a while, the transmission network accumulates a large amount of user data, for example the best routes for users in Shenzhen pushing streams to Beijing under different network conditions in the morning, at noon and at night. This accumulated data serves as the basis for formulating routing strategies dynamically, which is dynamic routing.

5. Algorithmic flow control - In the real-time transmission network, we select an optimal path for pushing the stream. If even the optimal path cannot meet the ultra-low latency requirement, the algorithms have to compensate, for example with channel protection, which adds redundancy to protect the data in the channel. There are also flow control strategies for stream pushing. On the uplink, if network jitter or a weak network is detected, the bit rate is lowered, and when the network improves the bit rate is raised again. On the downlink, layered coding makes it possible to choose video streams of different bit rates for users in different network environments.
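The ideas of nearest access and uplink flow control can be illustrated with a small sketch. This is not the routing or flow control algorithm of any particular vendor: the latency figures, the 5% loss threshold, the bit rate limits and the function names `best_path` and `next_bitrate` are all assumptions made for illustration. Path selection is modelled as a plain shortest-path search over measured link latencies, so a congested direct link loses to a relay.

```python
import heapq


def best_path(links, src, dst):
    """Return (total_ms, path) for the lowest-latency route, or (None, []) if unreachable.

    `links` maps a node to {neighbor: measured one-way latency in ms}.
    A missing edge models "no direct road"; a large value models congestion.
    """
    queue = [(0.0, src, [src])]
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dst:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for neighbor, latency in links.get(node, {}).items():
            if neighbor not in settled:
                heapq.heappush(queue, (cost + latency, neighbor, path + [neighbor]))
    return None, []


def next_bitrate(current_kbps, loss_rate, floor_kbps=200, ceiling_kbps=1500):
    """Uplink flow control: back off quickly on loss, probe upward slowly otherwise."""
    if loss_rate > 0.05:                      # assumed threshold for a weak network
        target = current_kbps * 7 // 10       # cut the bit rate by 30%
    else:
        target = current_kbps + 50            # raise it gently when the network is fine
    return max(floor_kbps, min(ceiling_kbps, target))


# Hypothetical measurements: the direct Dubai-Beijing link is congested.
links = {
    "Dubai": {"Beijing": 400.0, "HongKong": 120.0},
    "HongKong": {"Beijing": 45.0},
}
print(best_path(links, "Dubai", "Beijing"))
print(next_bitrate(1000, 0.10), next_bitrate(1000, 0.0))
```

With these made-up numbers the relay through Hong Kong wins over the direct link. In a real network the link table would be refreshed from measurements, which is where static and dynamic quality assessment come in.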

2. Echo Cancellation

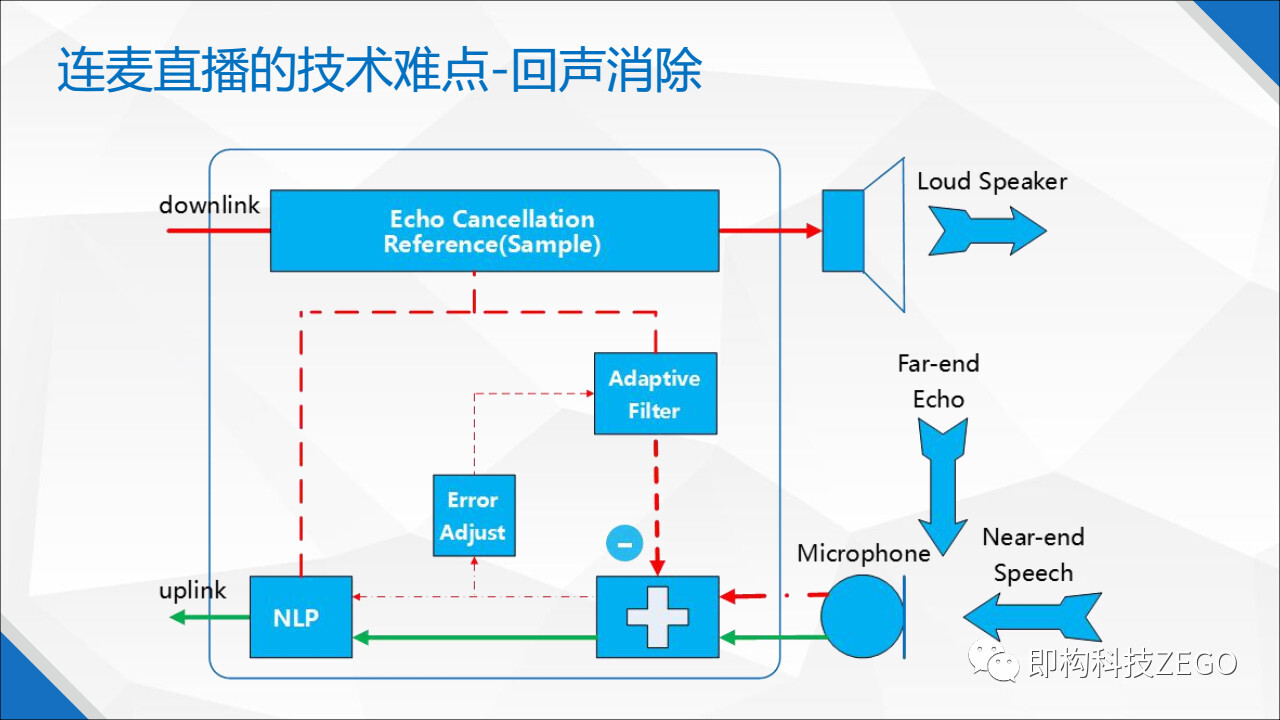

What is an echo? Suppose you are the near-end user. The voice of the far-end user arrives, is played through your speaker and spreads through the room. Your microphone picks it up and sends it back to the far end. After a delay of one or two seconds, the far-end user hears their own voice again, which for them is an echo. To ensure a good user experience, echo cancellation is a must. From the point of view of the audio and video engine, the sound captured by the microphone contains both the echo of the far-end user and the real voice of the near-end user. It is a bit like blue ink mixed with red ink: hard to separate.

Is there no way, then? In fact there are solutions. The original sound transmitted from the far end is the reference signal. The echo is related to it but not identical, because the echo is formed after the reference signal has been played, reflected and superimposed in the air, so simply subtracting the original sound from the microphone signal is wrong. We can think of the echo signal as a function of the reference signal, and the task is to solve for that function. With the reference signal as input, the function simulates the echo signal, and the simulated echo is then subtracted from the sound captured by the microphone, which achieves echo cancellation. We implement this function with a filter. The filter keeps learning and converging so that the simulated echo gets as close as possible to the real echo. This step is also called linear processing.
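The linear processing step can be sketched with a textbook normalized least-mean-squares (NLMS) adaptive filter. This is only an illustration of the idea of a filter that learns the echo path; it is not the algorithm used by WebRTC or by any vendor, and the room response, filter length and step size below are assumptions. The example covers the single-talk case only (no near-end voice in the microphone signal).

```python
import random


def nlms_echo_cancel(reference, microphone, taps=8, step=0.5, eps=1e-6):
    """Return the residual left after subtracting the simulated echo from the microphone."""
    weights = [0.0] * taps
    history = [0.0] * taps                    # recent reference samples, newest first
    residual = []
    for ref_sample, mic_sample in zip(reference, microphone):
        history = [ref_sample] + history[:-1]
        simulated_echo = sum(w * x for w, x in zip(weights, history))
        error = mic_sample - simulated_echo   # what remains after cancellation
        energy = sum(x * x for x in history) + eps
        # Move the weights toward the true echo path, normalized by input energy.
        weights = [w + step * error * x / energy for w, x in zip(weights, history)]
        residual.append(error)
    return residual


def power(samples):
    """Mean squared amplitude of a list of samples."""
    return sum(v * v for v in samples) / len(samples)


random.seed(0)
reference = [random.uniform(-1.0, 1.0) for _ in range(4000)]   # far-end signal
room = [0.0, 0.6, 0.0, -0.3, 0.1]                               # hypothetical echo path
microphone = [
    sum(room[k] * reference[n - k] for k in range(len(room)) if n - k >= 0)
    for n in range(len(reference))
]
residual = nlms_echo_cancel(reference, microphone)
print(power(microphone[-500:]), power(residual[-500:]))
```

Once the filter has converged, the residual power is a tiny fraction of the echo power. With a near-end talker present the error signal also contains real speech, which is where the harder cases begin.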

Echo cancellation deals with three kinds of situation: silence, single talk and double talk. In single talk (only one party speaking), suppression after linear processing works well and the echo is removed fairly cleanly. In double talk (more than one party speaking at the same time), linear processing is less effective, and a second step is needed: nonlinear processing to remove the residual echo. There is little open source material to refer to for nonlinear processing. Each vendor has to research it on its own, so it is a good indicator of a vendor's technical depth.

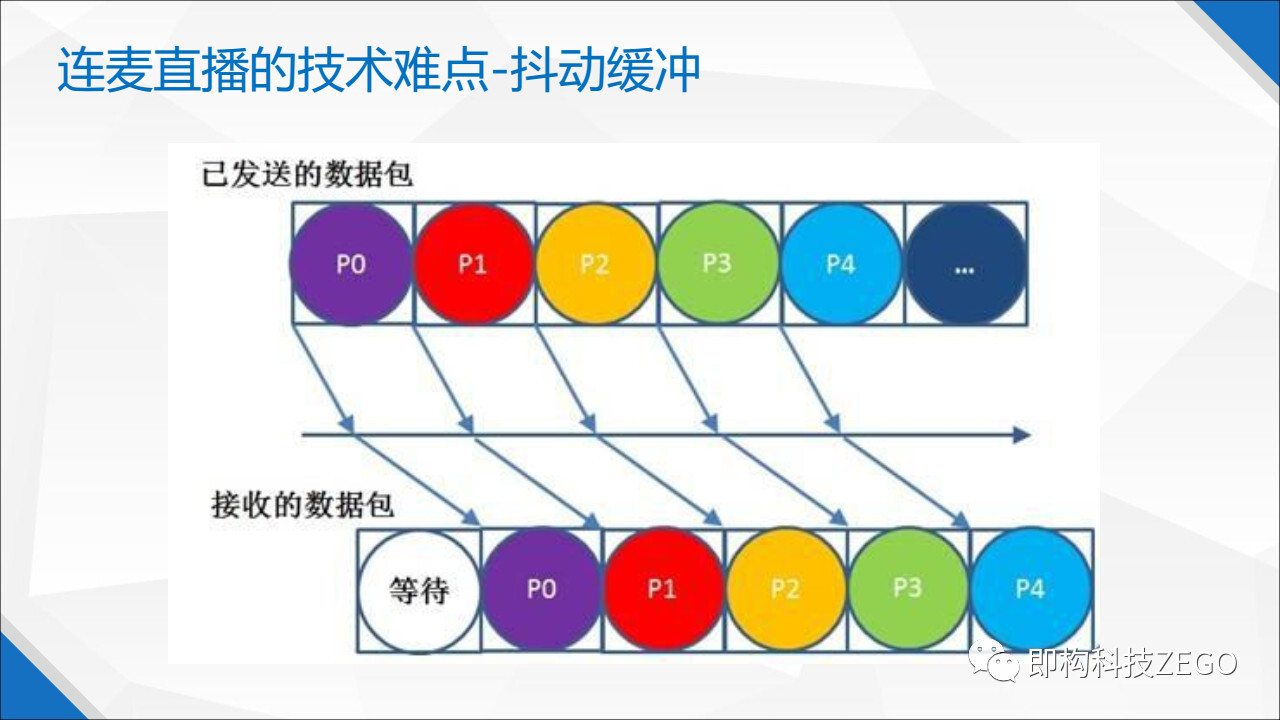

3. Jitter buffer

Networks suffer from congestion, packet loss, reordering and jitter, so transmission damages the data. Jitter buffering is needed in particular when voice and video data is sent over a UDP-based private protocol. Taking WebRTC as an example, the jitter buffer for audio data is called NetEQ and the buffer for video data is called JitterBuffer; both are very valuable parts of the WebRTC open source project. A jitter buffer queues and sorts data packets to compensate for network problems such as packet loss and reordering, in order to keep playback smooth. The queue length of the jitter buffer is in effect queuing delay: if the queue is too long, latency is high; if it is too short, jitter shows through and the user experience suffers. Vendors differ in how they set the length of the jitter buffer; some derive the queue length from a statistic of the observed packet jitter time. This is an open question that each vendor has to think through for itself.
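The trade-off between queue length and smoothness can be seen in a toy reordering buffer. This is a minimal sketch and far simpler than NetEQ or the WebRTC video JitterBuffer: the class name, the fixed `depth` measured in packets and the arrival order are assumptions for illustration.

```python
import heapq


class JitterBuffer:
    """Reorders packets by sequence number and holds back `depth` packets."""

    def __init__(self, depth):
        self.depth = depth
        self.heap = []
        self.next_seq = None          # lowest sequence number still acceptable

    def push(self, seq, payload):
        """Queue a packet; a packet that arrives after its slot has passed is dropped."""
        if self.next_seq is not None and seq < self.next_seq:
            return
        heapq.heappush(self.heap, (seq, payload))

    def pop_ready(self):
        """Release packets in sequence order while more than `depth` are waiting."""
        released = []
        while len(self.heap) > self.depth:
            seq, payload = heapq.heappop(self.heap)
            released.append((seq, payload))
            self.next_seq = seq + 1
        return released


def play(arrival_order, depth):
    """Feed packets in arrival order and return the sequence numbers played out."""
    buffer = JitterBuffer(depth)
    played = []
    for seq in arrival_order:
        buffer.push(seq, b"")
        played += [s for s, _ in buffer.pop_ready()]
    return played


arrivals = [1, 3, 2, 5, 4, 6, 7]      # packets 2 and 4 arrive late
print(play(arrivals, depth=2))        # deeper queue: more delay, order restored
print(play(arrivals, depth=0))        # no queue: lowest delay, late packets lost
```

A depth of two packets restores the order at the cost of two packets of extra delay, while a depth of zero plays immediately and loses the late packets. Real implementations adapt the depth to measured jitter rather than fixing it.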

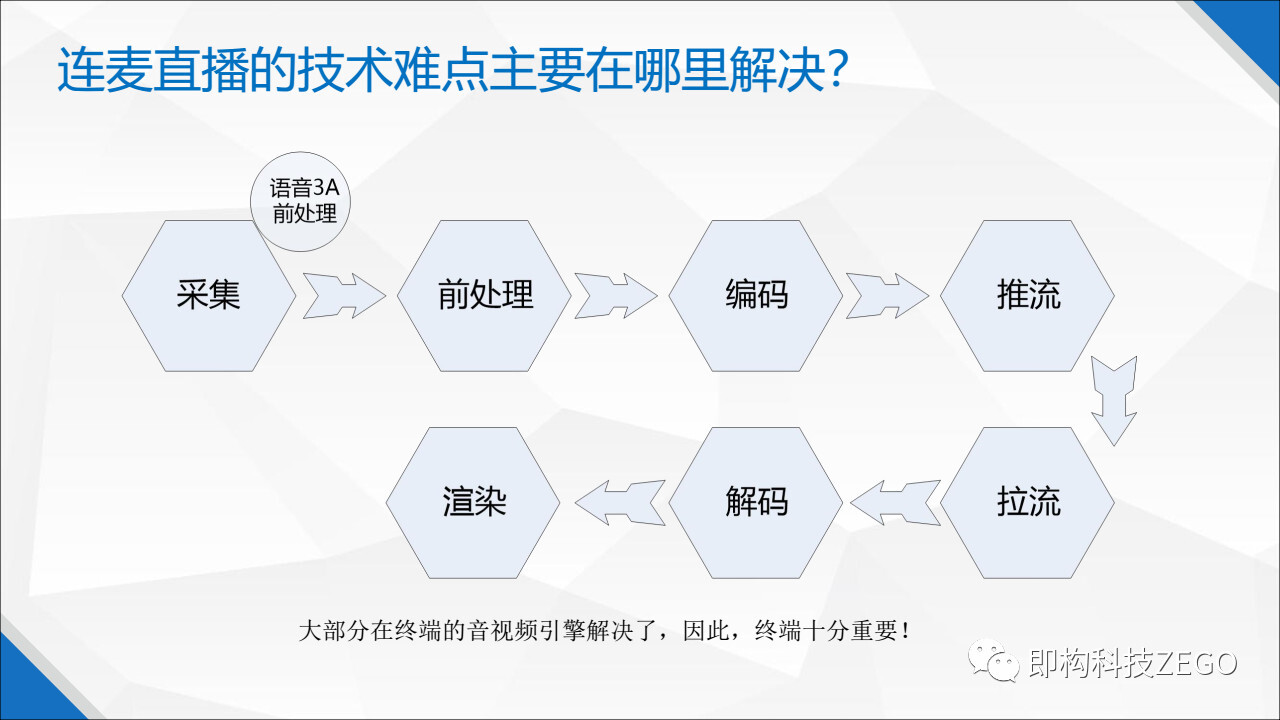

Here is a summary so far. From the push end to the pull end, the whole process has seven steps: capture, preprocessing, encoding, stream pushing, stream pulling, decoding and rendering. In which of these steps do the three technical difficulties above arise?

1) Low latency. Basically three kinds of step introduce latency: capture and rendering, encoding and decoding, and network transmission. The first is capture and rendering, which adds a relatively large delay, especially rendering. Because of hardware limitations, almost no mobile system can guarantee a delay under 50 milliseconds 100% of the time. The second is the codec step. Audio codecs in particular look ahead while encoding, which in itself adds delay, and some audio codecs can add as much as 200 milliseconds. The third is network transmission. In the real-time transmission network of Instant Technology, the round-trip transmission delay can be kept under 50 milliseconds. Of these, capture, rendering, encoding and decoding are all implemented on the terminal.

2) Echo cancellation is part of the voice preprocessing "3A" algorithms (echo cancellation, noise suppression and gain control). It has to run in the preprocessing step, that is, on the terminal.

3) Jitter buffering is implemented at the receiving end. The jitter buffer at the receiving end determines the time interval at which the sending end should send data packets.
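To make the latency discussion in item 1) concrete, the per-step delays can be added up and compared with the 500 millisecond one-way limit mentioned earlier. The figures below are hypothetical and chosen only for illustration, and the function name `latency_budget` is an assumption; real values have to be measured on each device and network.

```python
def latency_budget(stages, budget_ms=500):
    """Return (total_ms, remaining_ms, slowest_stage) for a dict of per-stage delays."""
    total = sum(stages.values())
    slowest = max(stages, key=stages.get)
    return total, budget_ms - total, slowest


# Hypothetical one-way delays in milliseconds, not measurements.
stages = {
    "capture": 30,
    "preprocessing": 10,
    "encoding": 60,
    "network": 25,
    "jitter_buffer": 80,
    "decoding": 20,
    "rendering": 50,
}
print(latency_budget(stages))

# Swapping in an audio codec with a 200 ms encoding delay eats most of the headroom.
print(latency_budget(dict(stages, encoding=200)))
```

A tally like this shows which step to attack first, and how little room is left for the jitter buffer once a slow codec is chosen.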

To sum up, the three technical difficulties just described are all handled on the terminal, so the terminal is very important. Below we compare how Lianmai live broadcast technology is implemented on the various terminals.

Comparison of Lianmai Live Streaming in Various Terminals

The terminals for Lianmai live broadcast mainly include the native app, browser H5, browser WebRTC and the WeChat Mini Program. Browser applications come in two kinds, H5 and WebRTC: the former can only pull streams for viewing, while the latter can both push and pull.

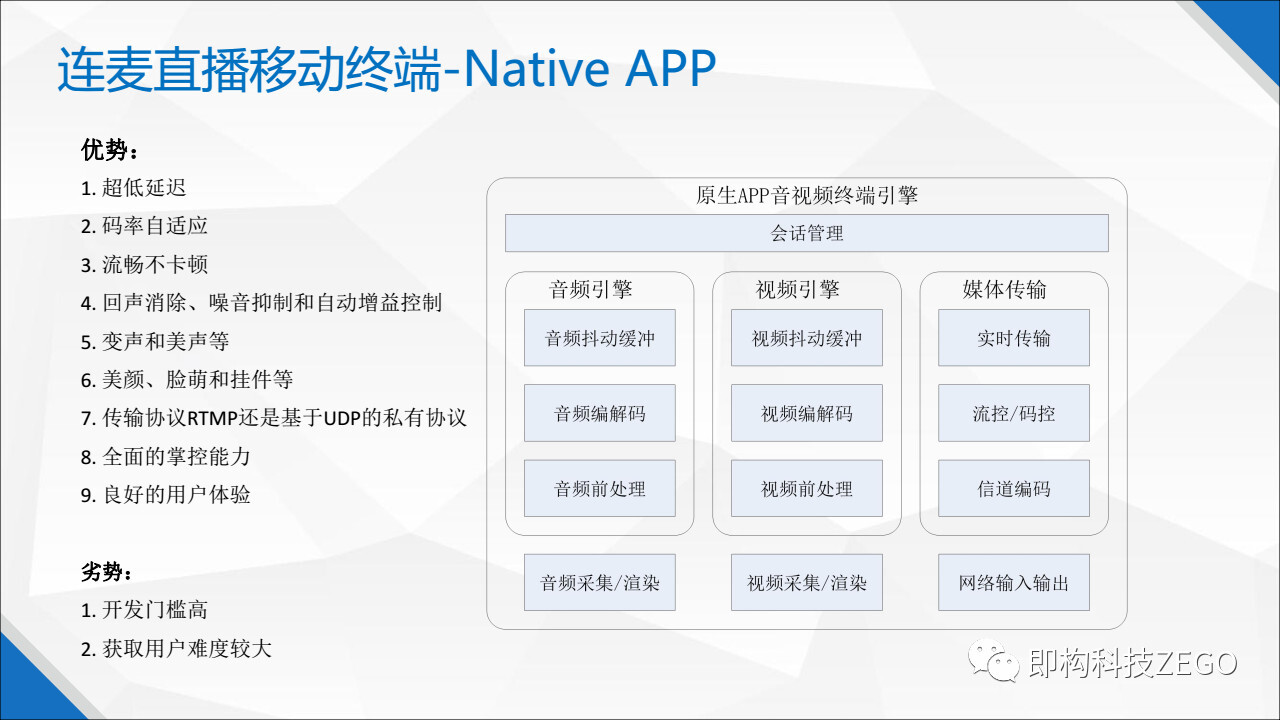

Lianmai Live Mobile Terminal-Native APP

The block diagram of the audio and video engine in a native app terminal is shown here. It basically consists of an audio engine, a video engine and network transmission, together called the real-time audio and video terminal engine. Underneath are audio and video capture and rendering and network input and output, which are capabilities opened up by the operating system.

The native app has a natural advantage. It deals with the operating system directly and can use the resources and capabilities the operating system exposes, such as audio and video capture and rendering and network input and output. To borrow a fashionable slogan, there is "no middleman taking a cut": by connecting directly to the operating system, the app can deliver a better user experience.

The advantage of implementing Lianmai live broadcast in a native app is better control over the seven steps described above. It can achieve relatively low latency, can use self-developed 3A algorithms for voice preprocessing (including echo cancellation), and has better control over the jitter buffer strategy and the adaptive bit rate strategy. In addition, the developer can choose between RTMP and a UDP-based private protocol, the latter being more robust on weak networks.

For the preprocessing features popular on the market, such as beauty filters, stickers and voice changing, a native app lets developers implement or plug in these technologies through an open preprocessing interface. Why stress this? Because neither browser WebRTC nor the WeChat Mini Program exposes a preprocessing interface, so developers there have no way to implement or integrate third-party beauty filter or sticker modules.

In a native app, developers get comprehensive control and can therefore give users a better experience. The mainstream live video platforms all have their own native apps, with browsers and WeChat Mini Programs in a supporting role. Native apps offer the best user experience and the most control for developers.

What are the disadvantages of implementing Lianmai live broadcast in a native app? The development threshold is high, the development cycle is long and the labor cost is high. In addition, for user acquisition and distribution, a native app is less convenient than browsers and WeChat Mini Programs.

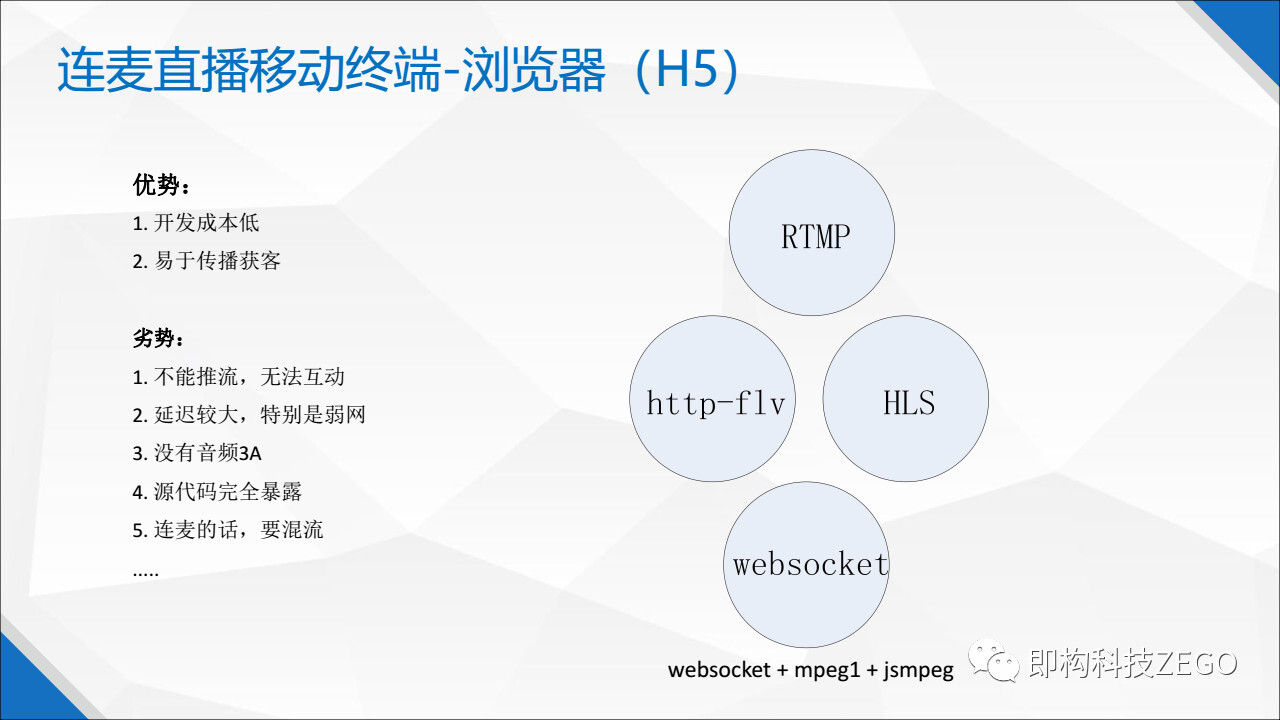

Lianmai Live Mobile Terminal - Browser (H5)

Browser H5 is like a coin with two sides. The advantage is low development cost and easy distribution. The disadvantage is that it can only pull streams, not push them. In addition, latency on browser H5 is relatively high: with RTMP or HTTP-FLV the delay is between 1 and 3 seconds, and with HLS it exceeds 8 or even 10 seconds. Delays this large rule out Lianmai live broadcast.

With any of these three protocols, playback goes through a player in browser H5. When several hosts interact through Lianmai, one player can play only one video stream, so three hosts need three players, and the hosts cannot be seen interacting in the same frame. To show several hosts in the same frame, the streams must be mixed into one stream and played in a single player.
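When several streams are mixed into one, the mixer has to decide where each host's picture goes on the output canvas. The following is a minimal sketch of one possible layout rule, a near-square grid; the function name `grid_layout` and the 720x1280 canvas are assumptions for illustration, and real mixing services usually offer configurable layouts.

```python
import math


def grid_layout(stream_count, canvas_width, canvas_height):
    """Return one (x, y, width, height) tile per stream on a near-square grid."""
    if stream_count < 1:
        return []
    columns = math.ceil(math.sqrt(stream_count))
    rows = math.ceil(stream_count / columns)
    tile_width = canvas_width // columns
    tile_height = canvas_height // rows
    return [
        ((i % columns) * tile_width, (i // columns) * tile_height, tile_width, tile_height)
        for i in range(stream_count)
    ]


# Three hosts mixed onto a portrait canvas: a 2 x 2 grid with one empty cell.
for tile in grid_layout(3, 720, 1280):
    print(tile)
```

Each decoded frame is scaled into its tile before the mixed picture is encoded again as a single stream, which is why a single player is enough on the viewing side.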

Also, browser H5 source code is open. Implementing the audio and video terminal engine in the browser would expose all the core source code, so I have not seen any vendor build a complete audio and video engine in browser H5. Even if you were willing, the browser would not let you: it sits between the developer and the operating system. Unless the browser opens the operating system's core capabilities to developers, they cannot capture and render on their own or control network input and output, and features such as flow control and bit rate control cannot be implemented.

In browser H5, video can also be transmitted over WebSocket and played with jsmpeg, using mpeg1 as the video format. mpeg1 is an older media format, and playing it with the jsmpeg player works in all browsers. This approach achieves relatively low latency, but it still cannot push streams, so Lianmai live broadcast remains impossible.

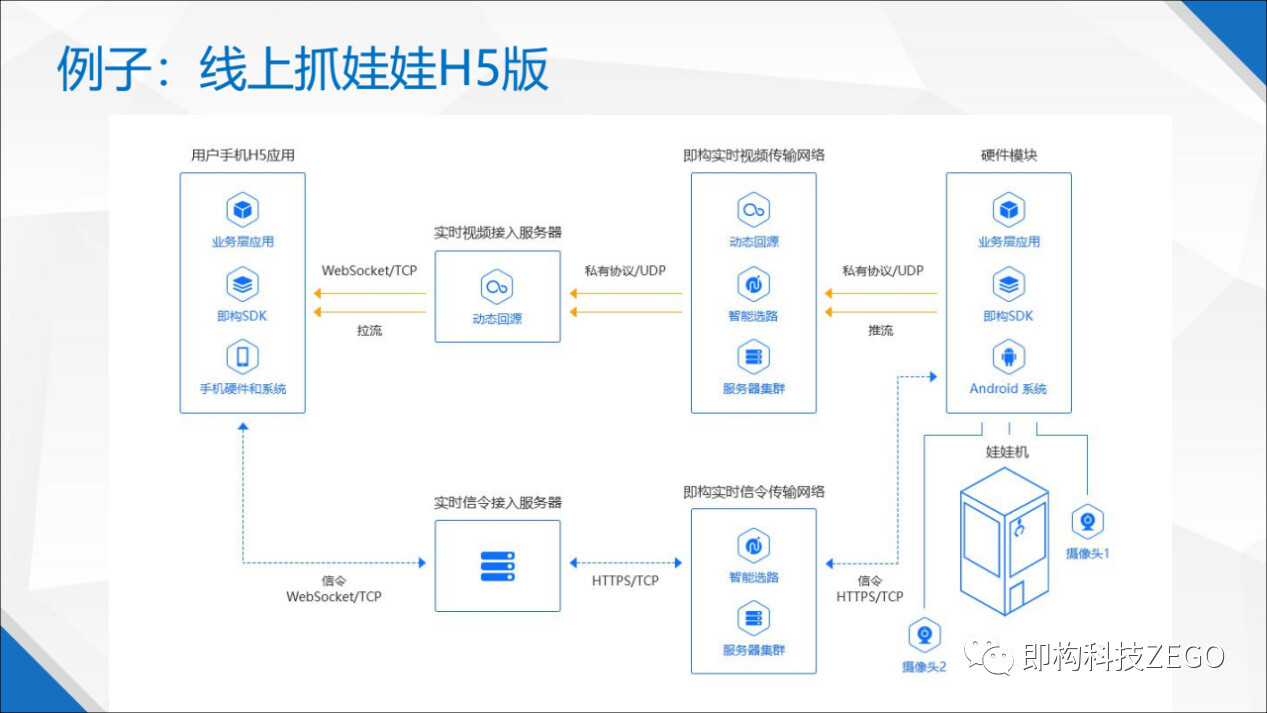

Example: Online Claw Machine, H5 Version

The following uses the H5 version of the online claw machine as an example to briefly show how WebSocket is applied in browser H5. As the upper left corner of the figure shows, a video access server is added where the browser H5 terminal connects to the Instant Technology real-time transmission network; on the right is the real-time transmission network itself, which uses a UDP-based private protocol. The access server performs protocol conversion and media format conversion: between WebSocket and the UDP-based private protocol, and between mpeg1 and H.264. When a native app connects, no conversion is needed; the access server is still in the path, but it performs no conversion.

In addition, the H5 version of the online claw machine has no sound. This is partly a characteristic of the application scenario, and partly because sound would require implementing an audio engine in H5. Implementing the audio engine in browser H5 would amount to open-sourcing the technology, and no vendor has been seen doing so.

Lianmai Live Mobile Terminal - Browser (WebRTC)

You may find it a pity that browser H5, although easy to distribute and easy to develop for, gives a poor experience and cannot do Lianmai live broadcast. So can a browser push streams and support Lianmai live broadcast? The answer is yes, with WebRTC.

WebRTC here means the WebRTC that is built into and supported by the browser, not the WebRTC source code. Some mainstream browsers have WebRTC built in, which opens the browser's real-time audio and video capabilities to developers.

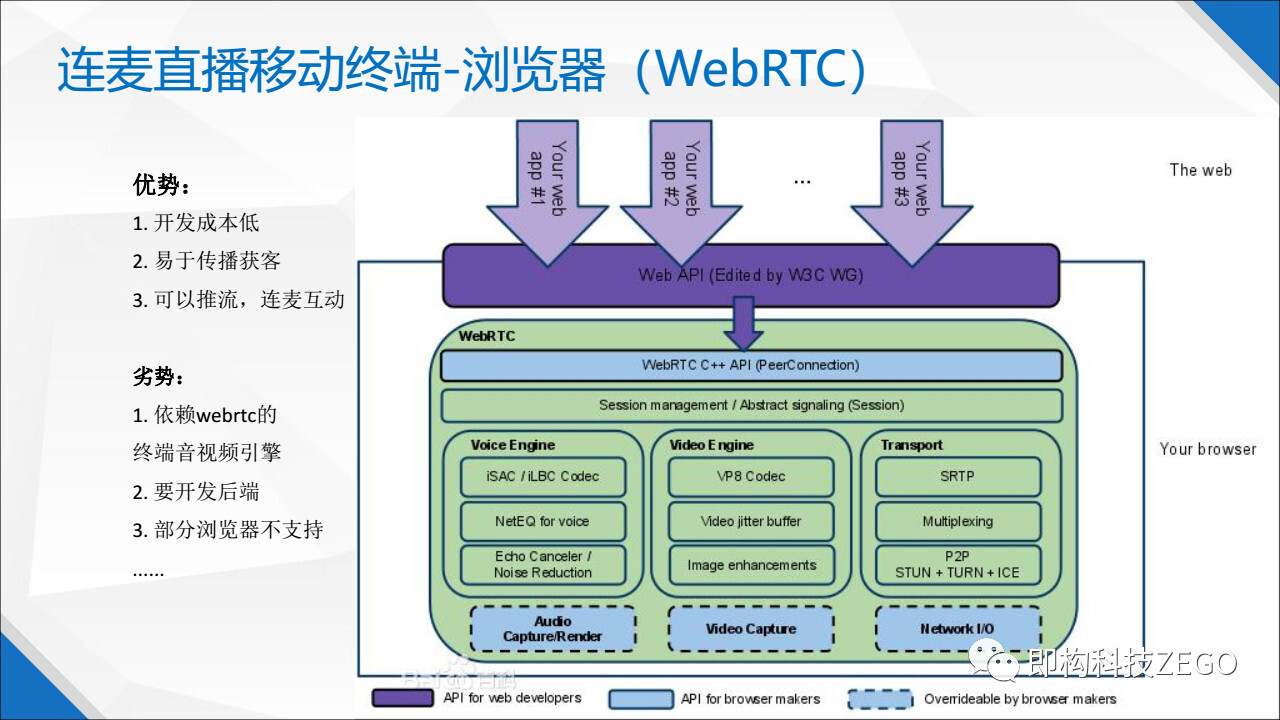

The picture above shows the structure of WebRTC. WebRTC includes an audio engine, a video engine, a transmission engine and so on. The dotted boxes at the bottom mark parts that can be overridden: the browser opens the underlying capabilities of audio and video rendering and network transmission to developers, who can choose whether to override them according to their needs. The audio engine includes two codecs, iSAC and iLBC; the former is a wideband and super-wideband audio codec, and the latter a narrowband audio codec. The audio engine also includes audio jitter buffering, echo cancellation and noise suppression modules. The NetEQ algorithm in the jitter buffer is one of the gems of WebRTC. The video engine includes the VP8 and VP9 video codecs, and even the upcoming AV1, along with modules such as video jitter buffering and image quality enhancement. For the transmission engine, WebRTC uses the Secure Real-time Transport Protocol (SRTP). Finally, WebRTC communicates peer to peer and provides no back-end implementation such as a media server. That is a brief introduction to WebRTC.

The general advantages and disadvantages of browser WebRTC are easy to look up and will not be repeated here; only the key points are covered. The advantage of browser WebRTC is that it implements a fairly complete audio and video terminal engine, allows stream pushing from the browser, and makes Lianmai live broadcast possible. However, browser WebRTC also has shortcomings:

1) There is no open preprocessing interface, so modules such as beauty filters and stickers cannot be plugged in, whether third-party or self-developed.

2) No media server back end is provided. Developers have to implement the media server themselves and connect to it through an open source WebRTC gateway (such as Janus).

3) The codecs, jitter buffer and voice preprocessing 3A are whatever WebRTC provides and cannot be customized.

4) Some mainstream browsers do not support WebRTC, Apple's in particular. Although Apple announced WebRTC support in 2017, the latest version of iOS Safari does not support it well, the iOS Safari versions in mainstream use do not support it at all, and the WeChat browser on iOS does not support it either.

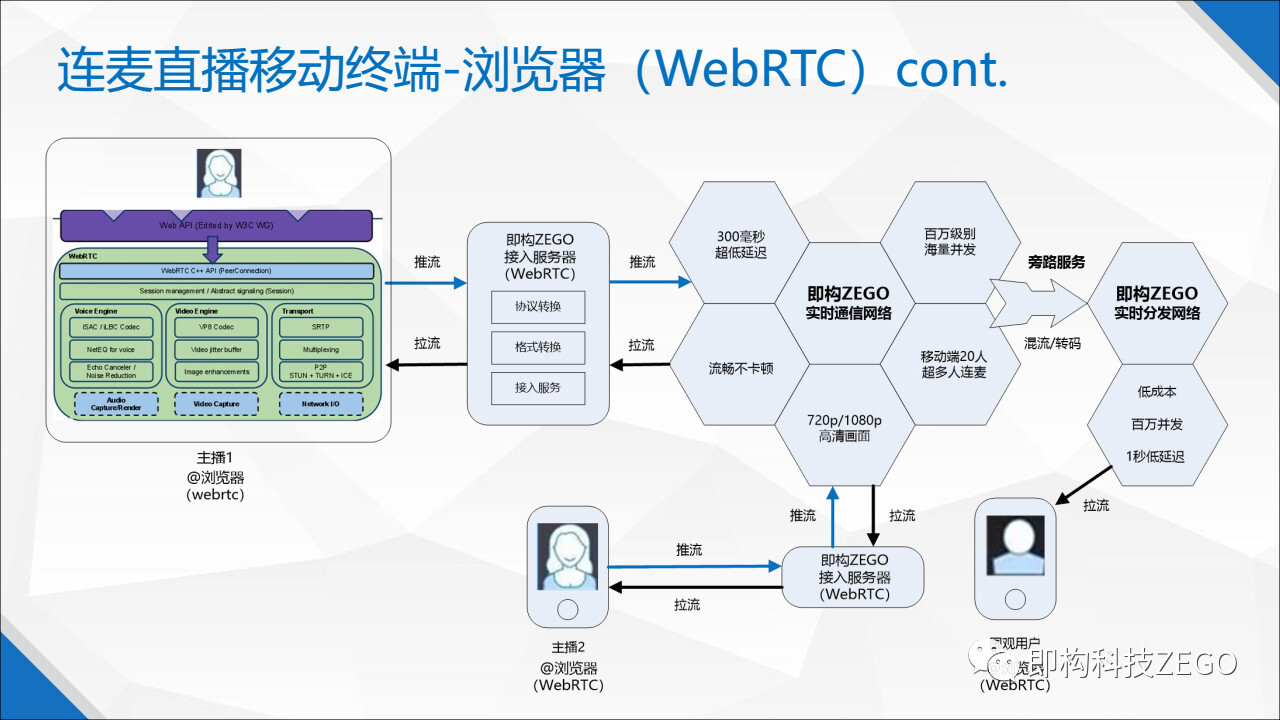

As shown in the figure above, since WebRTC does not provide a media server implementation, browser WebRTC has to be connected to a media server back end, which can be self-developed or a third-party service. The protocol and media format on the browser WebRTC side differ from those of the media server back end, so both must be converted. The UDP-based SRTP used by WebRTC has to be converted into the UDP-based private protocol of the media server. The media format has to be converted as well, because WebRTC uses VP8 or VP9 for video by default. Some adjustments to signaling and scheduling in the real-time transmission network are also needed. The access layer between browser WebRTC and the media server back end can also be implemented with an open source WebRTC gateway (such as Janus).

The browser is a super app that resembles an operating system. It is an important traffic entry point, but it is also a "middleman" between the developer and the operating system. Through WebRTC, developers obtain the real-time audio and video capabilities that browsers open up, but they also have to live with the limitations WebRTC brings.

Lianmai Live Mobile Terminal-WeChat Mini Program

The title of this talk is "Lianmai Interactive Live X WeChat Mini Program", so why have Mini Programs not come up until now? Allow me to explain. What is a WeChat Mini Program? It is a lightweight application that runs inside WeChat. And what is WeChat? It is a super app that resembles an operating system. Doesn't that sound a lot like browsers and H5? H5 is a lightweight application supported by the browser, and the browser is likewise a super app that resembles an operating system. The difference is that behind browsers stand several major international technology giants, while behind WeChat there is only one Internet giant. From this point of view, the WeChat Mini Program, browser WebRTC and H5 have a lot in common.

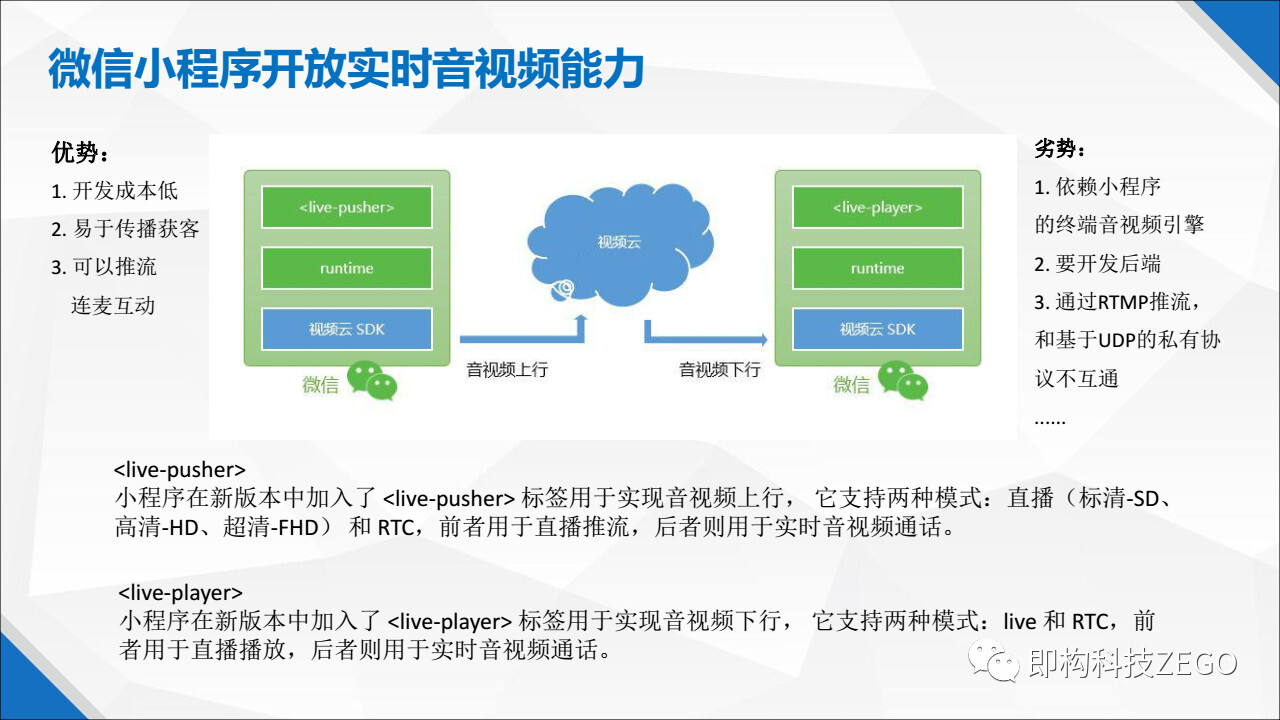

Like browser H5, a WeChat Mini Program follows a client and server structure. HTML corresponds to WXML in the Mini Program, CSS corresponds to WXSS, and the scripting language is JS as well, although the framework is different. The WeChat Mini Program provides two tags, `<live-pusher>` and `<live-player>`. The first pushes a stream and the second pulls a stream, and together they make one-way live broadcast or Lianmai live broadcast possible. The Mini Program offers two modes, LIVE and RTC: LIVE supports one-way live broadcast, and RTC supports low-latency Lianmai live broadcast. At present, stream pushing in the WeChat Mini Program uses the RTMP protocol. To interoperate with a private protocol, protocol conversion is required.

The opening of real-time audio and video capabilities in the WeChat Mini Program is a major boon for the industry. However, based on the information and reasoning above, using WeChat Mini Programs for Lianmai interactive live broadcast has both advantages and disadvantages.

There are three benefits:

1) The development cost is low, the development cycle is short, and the development difficulty is roughly the same as for H5;

2) It is easy to distribute and to acquire users, making full use of WeChat's high-quality traffic;

3) It can push and pull streams, which makes Lianmai live broadcast and real-time voice and video calls possible.

There are four shortcomings:

1) You are limited by the real-time audio and video capabilities of the WeChat Mini Program. For example, if its echo cancellation has problems, you can only wait for the WeChat team to improve it at its own pace; you cannot optimize it yourself.

2) The Mini Program has no open preprocessing interface. You can only use the beauty filter or voice changing features that come with it (if any) and cannot plug in self-developed or third-party modules.

3) Streams are pushed and pulled over RTMP, which cannot interoperate directly with a UDP-based private protocol. To interoperate, you must add an access layer that converts the protocol and possibly the media format.

4) No back-end media server is provided. Developers must implement the media server themselves or connect the WeChat Mini Program to a third-party real-time communication network.

Browsers open up their real-time audio and video capabilities through WebRTC, and WeChat opens up its own through Mini Programs, allowing developers to implement Lianmai live broadcast and real-time audio and video calls on these two operating-system-like platforms. However, both WebRTC and the Mini Program only get you started on the terminal side; developers still have a lot of work to do to build the whole system.

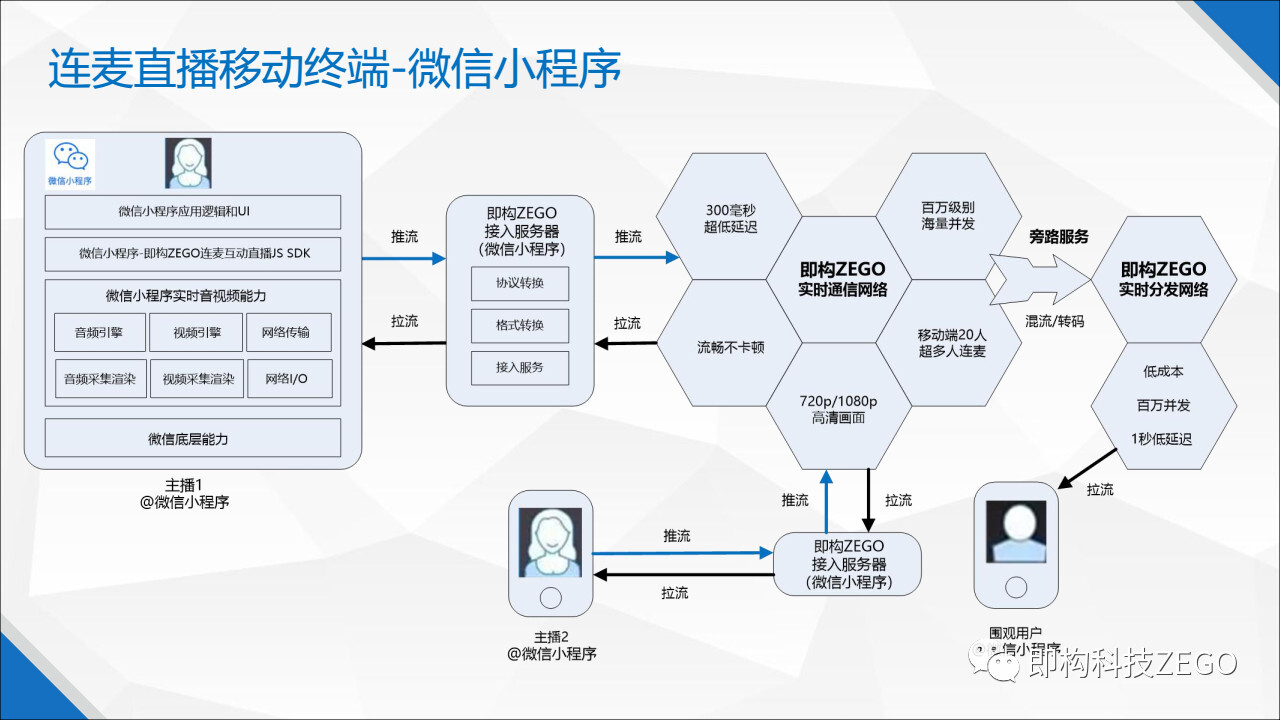

The figure below shows how the WeChat Mini Program connects to the real-time audio and video transmission network. The audio and video terminal engine of the WeChat Mini Program also consists of an audio engine, a video engine and a transmission engine. The audio engine handles capture and rendering, audio jitter buffering, voice preprocessing, and encoding and decoding. The video engine handles capture and rendering, video jitter buffering, video preprocessing, and encoding and decoding. As for the transmission engine, the WeChat Mini Program pushes and pulls streams over RTMP. It is not clear whether RTMP here runs over TCP underneath, or over a UDP-based protocol such as QUIC or a private protocol. If the layer beneath RTMP is a UDP-based private protocol, robustness on weak networks will be relatively good. TCP, by contrast, is a fairness-oriented protocol that offers little control over each stage of transmission, so the experience on weak networks is relatively poor.

To connect the WeChat Mini Program to the real-time audio and video transmission network, there must be an access server in between, which we call the access layer. The access layer converts the protocol: for example, if the real-time transmission network uses a UDP-based private protocol, RTMP has to be converted to that protocol. It also converts the media format whenever the Mini Program's format differs from that of the real-time transmission network.

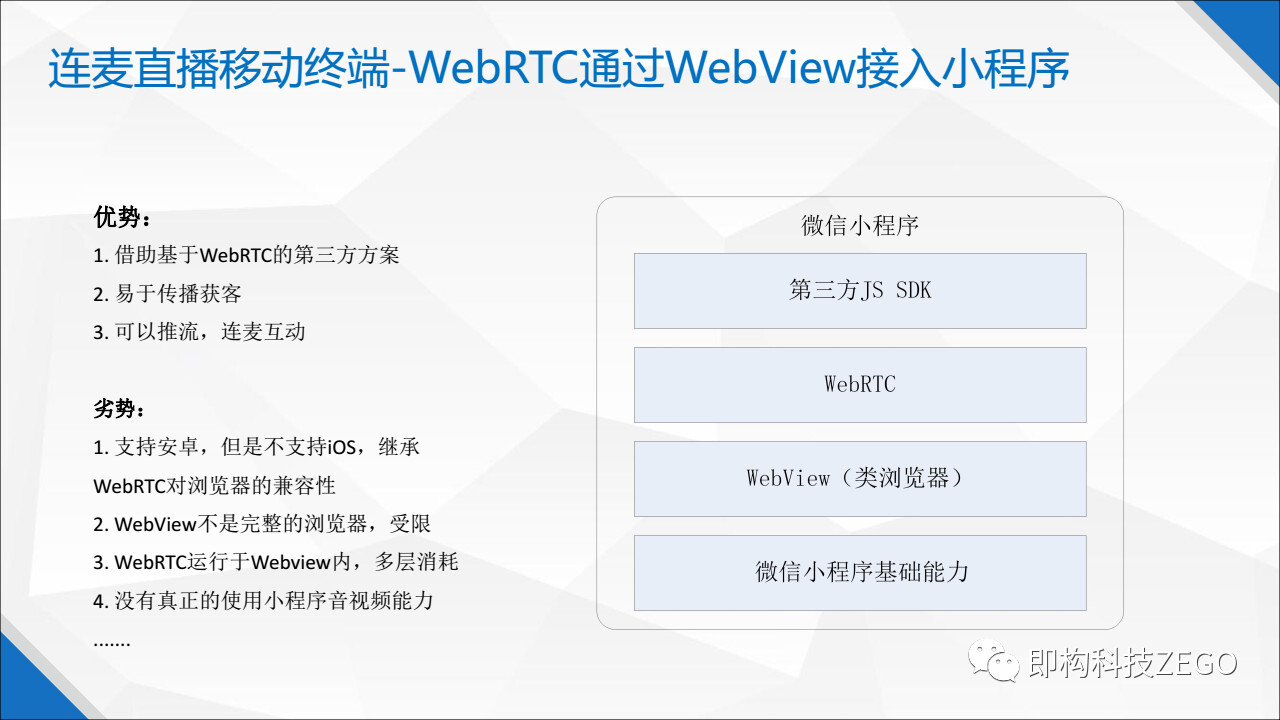

Lianmai live mobile terminal - WebRTC accesses the applet through WebView

Is there any other way to do Lianmai live broadcast in a Mini Program? Do you have to use the voice and video capabilities opened up by the WeChat Mini Program? Not necessarily. The figure below shows a technical solution I have seen on the market. It bypasses the real-time voice and video capabilities of the WeChat Mini Program and implements Lianmai live broadcast through the Mini Program's WebView component. I share it here for reference.

The basic idea of this solution is to take advantage of the browser nature of WebView and call WebRTC's Web API inside the WebView to obtain real-time audio and video capabilities within the Mini Program. The figure above is a topology diagram of the scheme. The bottom layer is the basic capabilities of the WeChat Mini Program, and the layer above is WebView. WebView is a Mini Program component that can be thought of as browser-like: it provides some browser features but is not a complete browser. Because it resembles a browser, it may support WebRTC. Note, however, that the Mini Program WebView supports WebRTC on Android but not on iOS. Although this solution can in theory deliver Lianmai live broadcast in a WeChat Mini Program, it has the following limitations:

1) On iOS, the WeChat Mini Program does not support this scheme, as mentioned above.

2) The Mini Program WebView is not a complete browser; it performs worse than an ordinary browser and has many restrictions.

3) Several layers sit between the developer and the operating system: the WeChat base layer, the Mini Program, WebView, WebRTC, and finally the developer's own Mini Program application. Each layer of abstraction costs performance and affects the final experience.

This solution is essentially a WebRTC-based solution. It does not use the real-time audio and video capabilities opened up by the WeChat Mini Program; instead it takes a shortcut through the WebView component to use WebRTC inside the Mini Program.

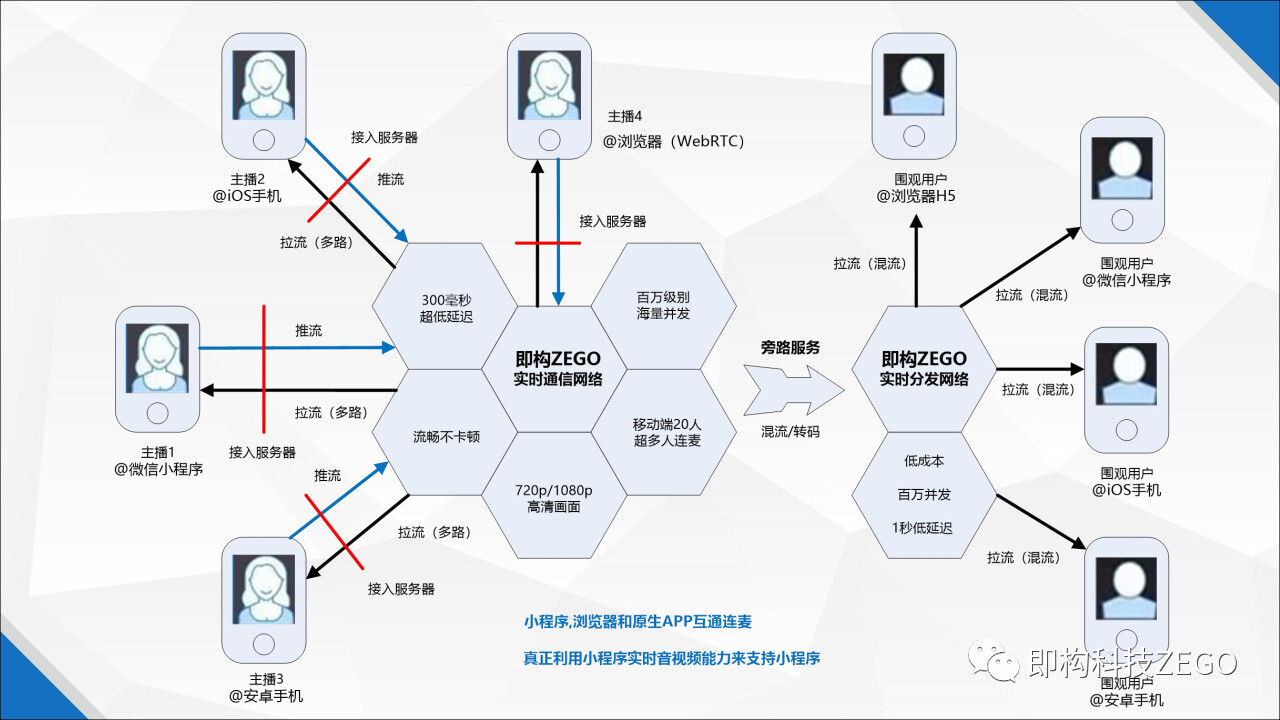

Intercommunication of Lianmai live broadcast on various terminals

As Lianmai interactive live broadcast technology becomes available on more and more terminals, a question arises: can Lianmai interoperate across terminals? For example, can user A in a WeChat Mini Program do Lianmai with user B in a native app?

Let's start with the scenario just mentioned. User A pushes and pulls streams over RTMP in the WeChat Mini Program. If user B also pushes and pulls streams over RTMP in the native app, the two can naturally interoperate. If the native app uses a UDP-based private protocol instead, the two cannot connect directly, and the protocol and format must be converted at the access layer before they can do Lianmai. The scenario can be extended: can user A in the WeChat Mini Program do Lianmai with user C on browser WebRTC? The logic is the same.

Taking ZEGO's solution as an example, ZEGO's native app SDK comes in two versions: one that supports the RTMP protocol and one that supports a UDP-based private protocol. With the RTMP version of the native app SDK, Lianmai with the WeChat Mini Program works directly. With the version based on the UDP private protocol, the protocol and format must be converted by the access server.
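The interoperability rule described above can be written down as a small check: an endpoint needs the access layer whenever its transport protocol or media format differs from that of the real-time transmission network. The sketch below is illustrative only. The `Endpoint` type, the string labels and the assumption that the Mini Program and the network both carry H.264 are hypothetical; the WebSocket/mpeg1 and SRTP/VP8 pairs follow the examples given earlier in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    name: str
    transport: str      # e.g. "rtmp", "srtp", "websocket", "private-udp"
    video_codec: str    # e.g. "h264", "vp8", "mpeg1"


def conversions_needed(endpoint, network_transport="private-udp", network_codec="h264"):
    """List the conversions the access layer must perform for this endpoint."""
    steps = []
    if endpoint.transport != network_transport:
        steps.append(f"protocol: {endpoint.transport} -> {network_transport}")
    if endpoint.video_codec != network_codec:
        steps.append(f"media: {endpoint.video_codec} -> {network_codec}")
    return steps


endpoints = [
    Endpoint("native app (UDP SDK)", "private-udp", "h264"),
    Endpoint("WeChat Mini Program", "rtmp", "h264"),      # codec is an assumption
    Endpoint("browser WebRTC", "srtp", "vp8"),
    Endpoint("browser H5 (jsmpeg)", "websocket", "mpeg1"),
]
for endpoint in endpoints:
    print(endpoint.name, conversions_needed(endpoint))
```

An empty list means the endpoint can join the network directly. Two endpoints that share a transport and a codec, such as two RTMP clients, can interoperate without any conversion in between.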

The UDP-based private protocol performs better on weak networks, while RTMP performs quite well on networks that are not weak and is well suited to CDN content distribution. For example, the Lianmai live broadcast solution of Huajiao Live has always used the RTMP version provided by Instant Technology. It has been running in production for two years and has maintained a good user experience throughout.

Epilogue

Lianmai live broadcast technology is gradually extending to the native app, browser H5, browser WebRTC and the WeChat Mini Program, creating a richer ecosystem and a more convenient, better user experience, which is good news for live video platforms and users alike. However, whoever wants to wear the crown must bear its weight. With browser WebRTC and the WeChat Mini Program in particular, developers must fully understand the characteristics and limitations of these terminals in order to make good use of Lianmai live broadcast technology, innovate and serve users.

About ZEGO

Instant Technology (ZEGO) was founded in 2015 by Lin Youyao, former general manager of QQ, and received Series A investment from IDG. The core team comes from Tencent QQ and brings together top voice and video talent from companies such as YY and Huawei. ZEGO is committed to providing the world's clearest and most stable real-time voice and video cloud services, helping enterprises innovate in their business and changing the way users communicate online. ZEGO has worked intensively in live video, video social networking, game voice, online claw machines and online education, and has won the trust of top companies such as Inke, Huajiao Live, Yizhuo, Himalaya FM, Momo Games, Freedom Fight 2 and Good Future.