Reprinted from: Heart of the Machine

Professor Lu Cewu's team at Shanghai Jiao Tong University has studied behavior understanding for many years, and its latest results have been published in "Nature".

When a subject performs a given behavior, does its brain produce a corresponding, stable pattern of neural activity? If such a stable mapping exists, can machine learning be used to discover previously unknown behavioral neural circuits?

To answer these fundamental questions about behavior understanding, a recent paper in the leading journal Nature investigates the mechanisms behind it. The paper's two co-corresponding authors are Professor Lu Cewu of Shanghai Jiao Tong University and Professor Kay M. Tye of the Salk Institute.

Complex sequential understanding through the awareness of spatial and temporal concepts

Paper link: https://www.nature.com/articles/s41586-022-04507-5

Based on computer vision technology, this work quantitatively characterizes the relationship between machine-vision behavior understanding and brain neural activity, and establishes a stable mapping model between the two for the first time. It forms a new research paradigm in which computer vision behavior analysis is used to discover behavioral neural circuits, applying artificial intelligence to basic problems in neuroscience. Specifically, the work identifies the neural circuit of "social hierarchy", answering how mammals judge their own status and that of other individuals in a social group and make behavioral decisions accordingly. The new paradigm also advances the frontier field where artificial intelligence meets basic science (AI for Science).

The specific research contents are as follows:

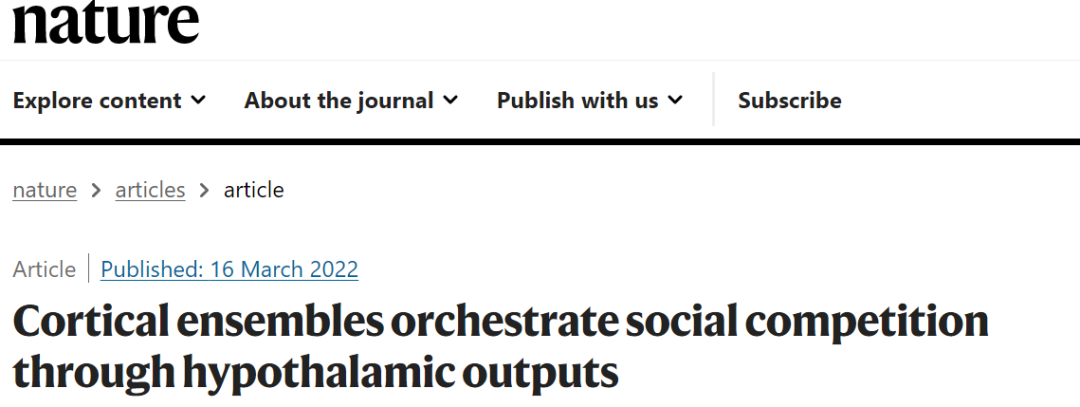

Figure 1. Visual behavior detection-brain neural signal correlation model: (a) mouse visual behavior understanding (b) system framework and model learning.

Visual behavior detection-brain neural signal correlation model: The experimental subjects were a group of mice. Each mouse wore a wireless electrophysiological recording device that recorded time-series neural signals from a specific brain region, the medial prefrontal cortex (mPFC), during social activity. At the same time, each mouse was tracked and localized by multiple cameras, and behavioral semantic labels were extracted using the pose estimation (e.g., AlphaPose) and behavior classification methods developed by Professor Lu Cewu's team, which achieve mouse pose estimation accuracy higher than that of the human eye. Using the large amount of data collected automatically by this system, a hidden Markov model was trained to map "neural activity signals in the mouse mPFC" to "behavioral labels". The mapping remained stable on the held-out test set, revealing a stable relationship between the visually observed behavior type and the pattern of neural signals in the subject's brain.
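To make the decoding idea concrete, here is a minimal sketch in Python. It is not the authors' pipeline: the data are synthetic, the "neural signal" is a single made-up scalar feature per time step, and the model is a plain supervised hidden Markov model with Gaussian emissions decoded by the Viterbi algorithm. All function names (`simulate`, `fit_supervised_hmm`, `viterbi`) and parameters are hypothetical. The point is only to show what "train on labeled recordings, then check that the mapping holds on held-out data" can look like.

```python
import math
import random

def simulate(n_steps, means, stay_prob, rng):
    """Synthetic session: hidden behavior labels plus one noisy 'neural' feature per step."""
    n_states = len(means)
    labels, signals = [], []
    state = rng.randrange(n_states)
    for _ in range(n_steps):
        if rng.random() > stay_prob:  # occasionally switch to a different behavior
            state = rng.choice([s for s in range(n_states) if s != state])
        labels.append(state)
        signals.append(rng.gauss(means[state], 1.0))
    return labels, signals

def fit_supervised_hmm(labels, signals, n_states):
    """Estimate transition probabilities and Gaussian emissions from labeled data."""
    counts = [[1.0] * n_states for _ in range(n_states)]  # add-one smoothing
    for a, b in zip(labels, labels[1:]):
        counts[a][b] += 1.0
    trans = [[c / sum(row) for c in row] for row in counts]
    mu, var = [], []
    for s in range(n_states):
        xs = [x for lab, x in zip(labels, signals) if lab == s]
        if not xs:  # behavior never seen in training: fall back to a neutral emission
            mu.append(0.0)
            var.append(1.0)
            continue
        m = sum(xs) / len(xs)
        mu.append(m)
        var.append(max(sum((x - m) ** 2 for x in xs) / len(xs), 1e-6))
    return trans, mu, var

def log_gauss(x, m, v):
    """Log density of a 1-D Gaussian with mean m and variance v."""
    return -0.5 * (math.log(2 * math.pi * v) + (x - m) ** 2 / v)

def viterbi(signals, trans, mu, var):
    """Most likely behavior sequence given the signals (uniform initial distribution)."""
    n = len(mu)
    score = [math.log(1.0 / n) + log_gauss(signals[0], mu[s], var[s]) for s in range(n)]
    back = []
    for x in signals[1:]:
        prev, score, ptr = score, [], []
        for s in range(n):
            best = max(range(n), key=lambda p: prev[p] + math.log(trans[p][s]))
            ptr.append(best)
            score.append(prev[best] + math.log(trans[best][s]) + log_gauss(x, mu[s], var[s]))
        back.append(ptr)
    state = max(range(n), key=lambda s: score[s])
    path = [state]
    for ptr in reversed(back):  # follow back-pointers from the last step to the first
        state = ptr[state]
        path.append(state)
    return path[::-1]

rng = random.Random(0)
means = [-2.0, 0.0, 2.0]  # assumed mean signal level for three made-up behaviors
train_labels, train_x = simulate(2000, means, 0.95, rng)
test_labels, test_x = simulate(500, means, 0.95, rng)

trans, mu, var = fit_supervised_hmm(train_labels, train_x, n_states=3)
pred = viterbi(test_x, trans, mu, var)
accuracy = sum(p == t for p, t in zip(pred, test_labels)) / len(test_labels)
print(f"held-out accuracy: {accuracy:.2f} (chance is about {1 / 3:.2f})")
```

If the learned mapping is stable, held-out accuracy stays well above chance; in a real study the scalar feature would be replaced by multi-channel recordings and the labels by the output of the vision system.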

Model application: discovering the neural circuit that controls animal social hierarchy. Based on the visual behavior detection-brain neural signal correlation model, new behavioral neural circuits can be discovered. The neural control mechanism of "animal social hierarchy" behavior (for example, low-ranking mice let high-ranking mice eat first and show submissive behavior) has long been an important question: how do mammals judge their own status and that of other individuals in a social group, and what neural mechanism lies behind it? Because social-hierarchy behavior is a complex behavioral concept, the problem had long resisted solution. In large-scale videos of mouse group competition, we used the above system and model to locate "animal social hierarchy" behaviors while simultaneously recording brain activity, and analyzed in depth how these behaviors are formed in the brain. The result is the discovery that the medial prefrontal cortex-lateral hypothalamus (mPFC-LH) circuit controls social-hierarchy behavior in animals, which was confirmed by rigorous biological experiments. This research establishes a new paradigm for discovering the neural circuits of unknown behavioral functions based on machine vision learning, and further advances the use of artificial intelligence to solve basic scientific problems (AI for Science).

Behavior Understanding Research by Lu Cewu's Team

The above work is part of the team's many years of accumulated research on behavior understanding. Explaining how machines can understand behavior requires answering three questions:

1. Machine cognition perspective: how can machines be made to understand behavior?

2. Neurocognitive perspective: what is the intrinsic relationship between machine cognitive semantics and neurocognition?

3. Embodied cognition perspective: how can behavior understanding knowledge be transferred to robotic systems?

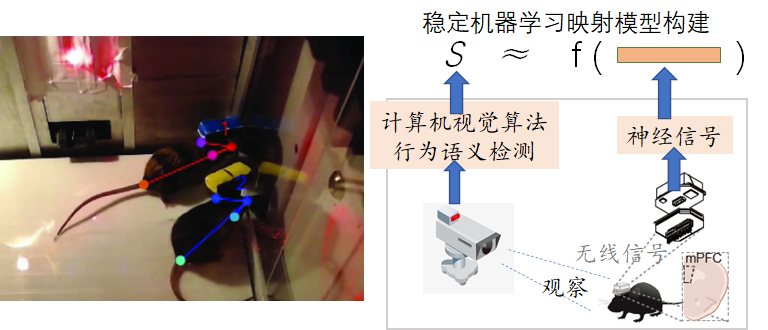

Figure 2. The main work of Lu Cewu's team around behavior understanding

The work just published in "Nature" answers the second question. For the other two questions, the team's main work is as follows:

1. How can machines be made to understand behavior?

The main work includes:

HAKE (Human Activity Knowledge Engine)

To obtain behavior recognition methods that are generalizable, interpretable, and scalable, two obstacles must be overcome: the ambiguous link between behavior patterns and semantics, and the long-tailed distribution of data. Unlike the usual "black box" approach of end-to-end deep learning, the team built HAKE, a knowledge-guided and data-driven behavior reasoning engine (open source website: http://hake-mvig.cn/home/):

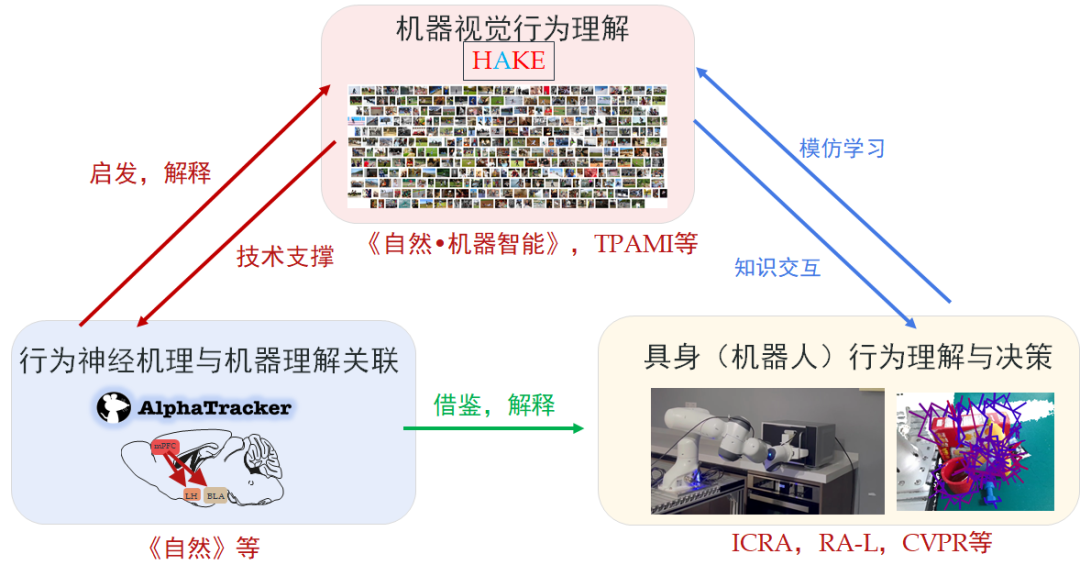

Figure 3. HAKE system framework

HAKE divides behavior understanding into two stages. First, visual patterns are mapped to a space of local human body-part state primitives, so that diverse behavior patterns are expressed with a limited, nearly complete set of atomic primitives. Then the primitives are combined according to logical rules to infer behavioral semantics. HAKE provides a large knowledge base of behavioral primitives to support efficient primitive decomposition, and completes behavior understanding through compositional generalization and differentiable neural-symbolic reasoning. HAKE has the following characteristics:

(1) Rules can be learned: Starting from a small amount of prior knowledge about human behavior-primitive relations, HAKE can automatically mine and verify logical rules. It induces rules for combining primitives and verifies them deductively on real data, finding rules that are effective and generalizable and thereby discovering previously unknown behavior rules, as shown in Figure 4.

Figure 4. Learning Unseen Behavior Rules

(2) Human performance upper bound: On an instance-level behavior detection test set covering 87 classes of complex behaviors (10,000 images), HAKE given complete primitive detection can even approach human behavior perception performance, demonstrating its great potential.

(3) Behavioral understanding "Turing test":

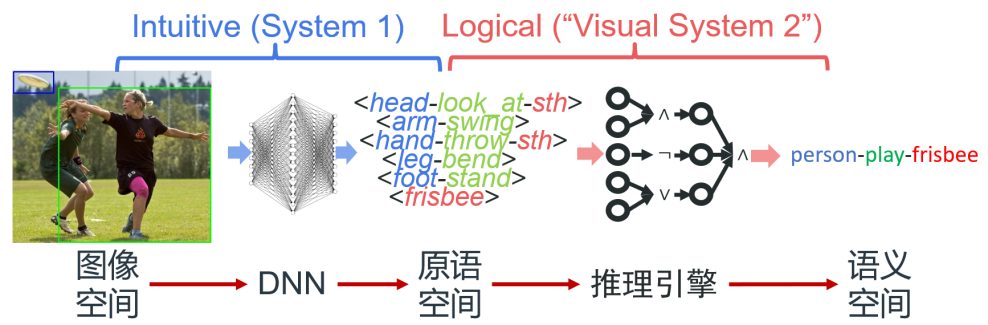

Figure 5. A machine (HAKE) and humans each erase some pixels so that the behavior in the picture can no longer be recognized. The Turing test shows that HAKE's "erasing technique" is very similar to that of humans.

We also propose a special "Turing test": if a machine can erase the key pixels of an image so that human subjects can no longer recognize the behavior, it is considered to understand the behavior well. HAKE and human participants each performed this erasing operation, and another group of volunteers was asked to judge whether each erasure was made by a human or by HAKE. The volunteers' accuracy was about 59.55% (random guessing gives 50%), indicating that HAKE's "erasing technique" is very similar to that of humans and suggesting that its understanding of behavior is interpretable in a way similar to human understanding.
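The two-stage design described for HAKE above (detect body-part primitives first, then reason over them with rules) can be illustrated with a small sketch. This is not HAKE's actual code or rule base: the primitive names, the `RULES` table, and the `score_behaviors` function are all hypothetical, stage one is replaced by hand-written confidence scores, and the reasoning uses a simple fuzzy logic (minimum for AND, maximum for OR) as a stand-in for the differentiable neural-symbolic reasoning mentioned in the text.

```python
from typing import Dict, List

# Hypothetical rule base: a behavior holds if ALL primitives of ANY one rule hold.
RULES: Dict[str, List[List[str]]] = {
    "drink_from_cup": [["hand:hold_cup", "head:drink_from_cup"]],
    "ride_bicycle": [
        ["hip:sit_on_bicycle", "foot:step_on_pedal"],
        ["hip:sit_on_bicycle", "hand:hold_handlebar"],
    ],
}

def score_behaviors(primitives: Dict[str, float]) -> Dict[str, float]:
    """Stage 2: combine primitive confidences with soft AND (min) and soft OR (max)."""
    scores = {}
    for behavior, rules in RULES.items():
        # Missing primitives count as confidence 0.0.
        rule_scores = [min(primitives.get(p, 0.0) for p in rule) for rule in rules]
        scores[behavior] = max(rule_scores)
    return scores

# Stage 1 stand-in: confidences a visual primitive detector might output for one person.
detected = {
    "hip:sit_on_bicycle": 0.9,
    "hand:hold_handlebar": 0.8,
    "foot:step_on_pedal": 0.3,
    "hand:hold_cup": 0.1,
}

scores = score_behaviors(detected)
for behavior, score in scores.items():
    print(f"{behavior}: {score:.1f}")
```

Because behaviors are expressed through shared primitives, adding a new behavior in this sketch only requires a new rule, not a new detector, which is the intuition behind the compositional generalization the article describes.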

Generalizable Brain-Inspired Computational Models for Behavioral Objects (Nature Machine Intelligence)

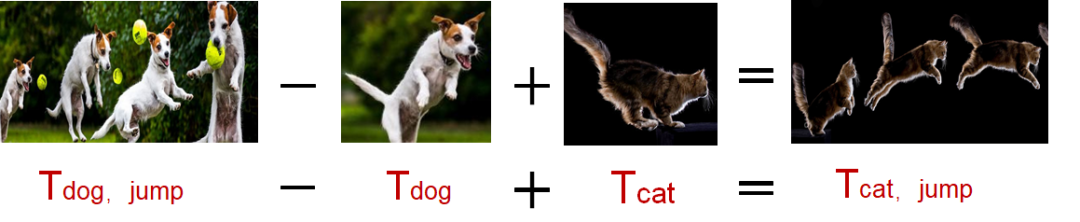

For a specific behavior (such as "washing"), the human brain can abstract a generalized dynamic concept of the behavior that applies to different visual objects (such as clothes, tea sets, and shoes), and use it to recognize the behavior. Neuroscience research has found that, for continuous visual input, spatiotemporal dynamic information and object information reach the hippocampus through two relatively independent pathways during memory formation and are combined there into a complete memory. This makes generalization across behavioral objects possible.

Figure 6. Decoupling of behavioral object concepts and behavioral dynamics concepts, resulting in generalization.

Inspired by brain science, Lu Cewu's team proposed a semi-coupled structural model (SCS) suited to high-dimensional information. It imitates the way concepts of behavioral objects and concepts of behavioral dynamics are processed relatively independently in different brain regions during human cognition: the concept of the behavior's visual object and the concept of the behavior's dynamics are stored in two relatively independent groups of neurons. Within a deep coupled model framework, a decoupled back-propagation mechanism constrains each group of neurons to attend only to its own concept, which achieves an initial generalization of behavior understanding across behavioral objects. This work was published in "Nature Machine Intelligence" and won the Outstanding Young Paper Award at the 2020 World Artificial Intelligence Conference.

Figure 7. Visualization of neurons representing "visual objects" and "behavioral dynamic concepts" (panels: video sequence, object neuron, dynamic neuron). Source: "Nature Machine Intelligence".

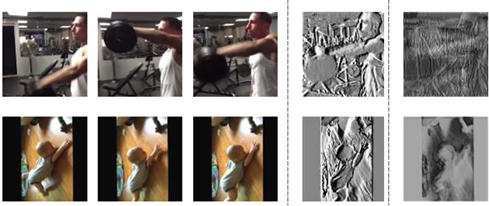

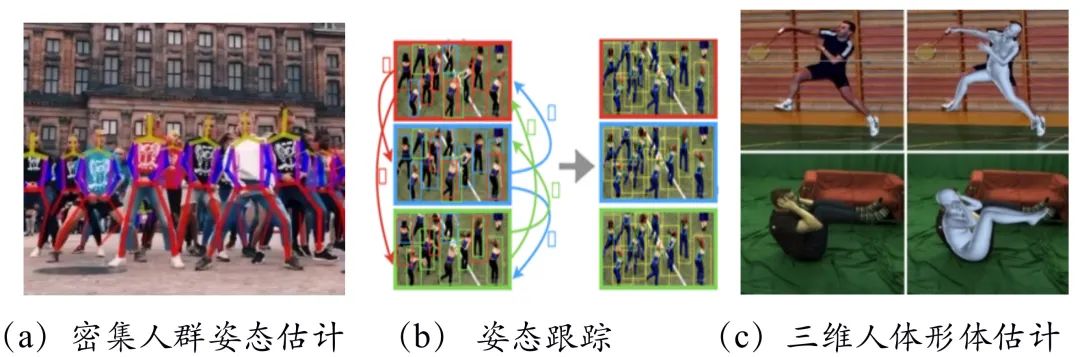

Human Pose Estimation

Human pose estimation is an important foundation for behavior understanding. It is a problem of obtaining accurate perception under structural constraints. To address it, the team proposed algorithms including graph competition matching, global optimization of pose flow, and neural-analytical hybrid inverse kinematics optimization. These systematically address interference in dense crowds, unstable pose tracking, and serious common-sense errors in 3D human body estimation with respect to human motion structure. The team has published more than 20 papers on this topic at CVPR, ICCV, and other top computer vision conferences.

Figure 8. Structure-aware work.

These research results have been consolidated into the open source system AlphaPose (https://github.com/MVIG-SJTU/AlphaPose), which has received 5954 stars (1656 forks) on GitHub, placing it in the top 1.6 per 100,000 repositories. It is widely used in sensors, robotics, medicine, and urban construction. Building on pose estimation, the team further developed AlphAction, an open source framework for video behavior understanding (https://github.com/MVIG-SJTU/AlphAction).

2. How to transfer behavioral understanding knowledge to robotic systems?

This line of work explores the nature of human behavior from a first-person perspective, moving from considering only "what she/he is doing" to jointly considering "what I am doing". This paradigm is also the research idea behind "Embodied AI". The goal is to transfer this understanding ability and the learned behavioral knowledge to an embodied agent (a humanoid robot), so that the robot gains basic "human behavioral ability" and can eventually complete tasks in the real world, laying the foundation for general service robots. Solving these scientific problems will: (1) greatly improve the performance of behavioral semantic detection and broaden the scope of semantic understanding; (2) effectively improve the ability of agents (especially humanoid robots) to understand the real world. Feedback from the real world in turn tests the machine's understanding of the nature of behavioral concepts, laying an important foundation for general-purpose intelligent robots.

In recent years, Lu Cewu's team has worked with Flexiv (Feixi Technology) in the field of embodied intelligence to build GraspNet (https://graspnet.net/anygrasp.html), a universal object grasping framework that can grasp previously unseen rigid, deformable, and transparent objects in arbitrary scenes. Its PPH (picks per hour) surpassed the human level for the first time and was three times that of Dex-Net, the previous best-performing algorithm. The related papers were cited 70 times within one year of publication. Object grasping is the first step in robotic manipulation and lays a good foundation for this line of work.

Robot Behavior-Object Model Interaction Perception

This work jointly learns and iteratively improves a robot's ability to execute behaviors and its understanding of object knowledge. Robot interaction reduces the estimation error of the perceived object model, and the improved understanding of the object in turn improves the robot's behavior execution. Compared with earlier purely visual object recognition, interaction brings a new source of information and a fundamental improvement in perception performance, as shown in Figures 9 and 10 and in the video linked below.

Figure 9. Object knowledge model - iterative improvement of robot behavior decision-making

Figure 10. Interaction perception: joint learning of robot behavior (top) and model comprehension (bottom) (performing behavior while improving corrective perception)
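The act-observe-update loop behind interaction perception can be shown with a toy example. This is an illustrative sketch only, not the SAGCI system: the task (estimating the hinge-to-handle radius of a door), the simulated sensor `observe_arc`, the noise level, and the running-average update are all assumptions chosen to keep the code short.

```python
import random

def observe_arc(true_radius, angle, noise_std, rng):
    """Simulated sensor: arc length travelled by the handle after rotating the door by `angle`."""
    return true_radius * angle + rng.gauss(0.0, noise_std)

def interactive_refinement(initial_estimate, true_radius, n_interactions, rng):
    """Refine a radius estimate by acting, observing, and averaging in each new measurement."""
    estimate = initial_estimate
    history = [estimate]
    for k in range(1, n_interactions + 1):
        # Act: push the door by a fixed small angle (a real system would plan this
        # action from its current object model).
        angle = 0.2  # radians
        # Observe: measure how far the handle actually moved.
        arc = observe_arc(true_radius, angle, noise_std=0.01, rng=rng)
        measured_radius = arc / angle
        # Update: running average that treats the visual prior as one sample.
        estimate += (measured_radius - estimate) / (k + 1)
        history.append(estimate)
    return history

rng = random.Random(0)
true_radius = 0.80      # metres, unknown to the robot
visual_estimate = 0.50  # deliberately poor vision-only guess

history = interactive_refinement(visual_estimate, true_radius, 20, rng)
initial_error = abs(history[0] - true_radius)
final_error = abs(history[-1] - true_radius)
print(f"error before interaction: {initial_error:.3f}, after: {final_error:.3f}")
```

Each interaction supplies a measurement that vision alone could not, so the model error shrinks as the robot acts; a better model would in turn allow better-planned actions, which this sketch leaves out.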

The related paper, "SAGCI-System: Towards Sample-Efficient, Generalizable, Compositional, and Incremental Robot Learning", was published at ICRA 2022.

Website: https://mvig.sjtu.edu.cn/research/sagci/index.html

Video: https://www.bilibili.com/video/BV1H3411H7be/

Lu Cewu is a professor and doctoral supervisor at Shanghai Jiao Tong University whose research focuses on artificial intelligence. In 2018, he was named by MIT Technology Review as one of China's 35 Innovators Under 35 (MIT TR35). In 2019, he received the Qiushi Outstanding Young Scholar Award.
