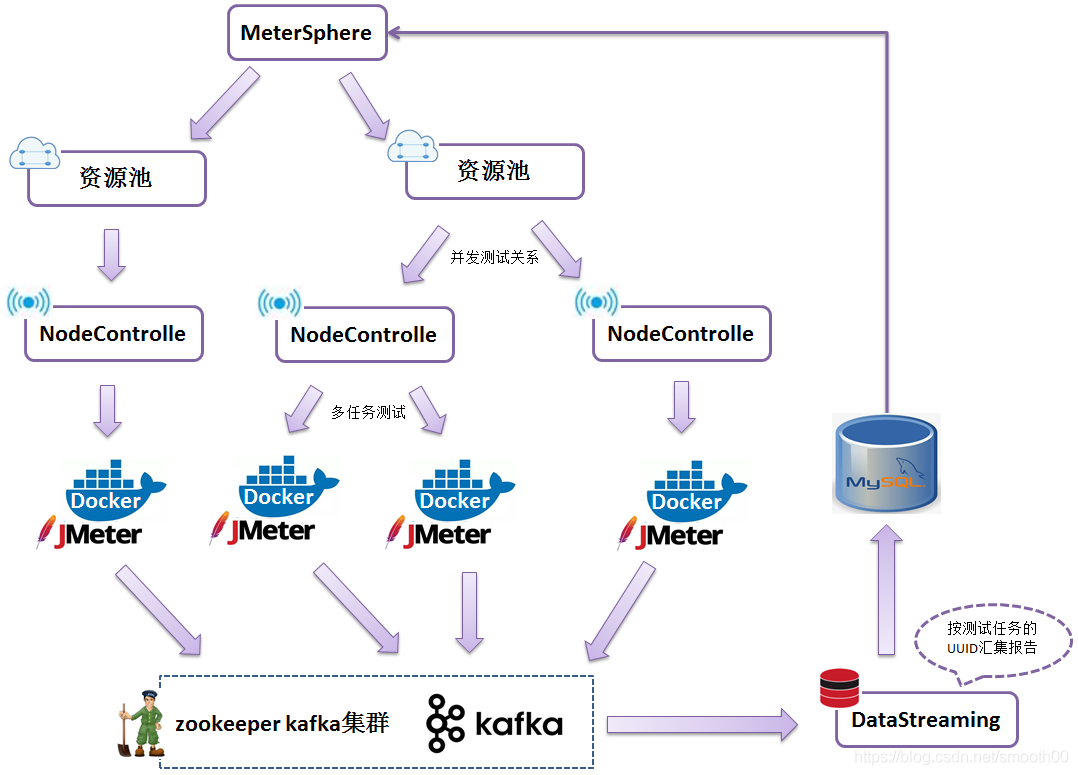

MeterSphere is positioned as a "one-stop open source continuous testing platform". It covers test tracking, interface (API) testing, performance testing, and team collaboration, and it is compatible with mainstream open source standards such as JMeter, helping development and testing teams make full use of the elasticity of the cloud for highly scalable automated testing. Since I do performance testing myself, I pay the most attention to how its performance testing is implemented. Here is the officially described architecture:

As the diagram shows, the platform is built on a Docker-based cluster deployment architecture, and distributed stress testing is implemented through NodeController. A performance test task is sent to a NodeController, and a NodeController corresponds to a resource pool (this concept is visible in the UI).

After a NodeController receives a test task, it creates a Docker JMeter engine. This JMeter instance is not the slave or jmeter-server process we usually think of, but an independent JMeter (master), so there is no conflict over port 1099 between instances. As a result, you can create any number of Docker engines, for example by deploying NodeControllers on multiple hosts, as follows:

Under one NodeController, multiple jmeter-docker instances can be created (one instance is created per task and is automatically reclaimed after the stress test), as follows:

Each jmeter-docker instance (one per task) keeps its own independent test report after the stress test, which is equivalent to running multiple independent JMeter tests in parallel:

This platform is therefore well suited to multi-task parallel testing, and because it is based on Docker, it is easy to deploy and scale in the cloud. Most importantly, it is open source, so the platform has many highlights.

Although the platform supports multiple JMeter nodes running tests independently (multitasking), at first glance I did not find single-task, multi-node distributed stress testing with an aggregated test report. (The documentation link provided by VincentWzhen, https://metersphere.io/docs/faq/#q, shows that this function does exist.) After all, the traditional JMeter approach is to distribute one test task to distributed nodes (jmeter-slave) and then gather the results into a single report. This platform instead associates multiple nodes through the concept of a resource pool (each NodeController starts an independent JMeter Docker instance per task) and introduces Kafka as the buffering component for test results. The official documentation describes how distributed stress testing is supported:

MeterSphere supports distributed performance testing by adding multiple test execution nodes to a test resource pool. When adding a node to a test resource pool, in addition to the node's IP and port, we also configure the maximum concurrency the node can support, based on its machine specifications. When a test resource pool is selected for a performance test, MeterSphere splits the number of concurrent users defined in the test proportionally, according to the maximum concurrency supported by each node in the selected pool. After the test starts, each test execution node sends test results, test logs, and other information to the designated Kafka queue; the data-streaming component in MeterSphere collects this information from Kafka and aggregates it into a single report, keyed by the UUID of the test task.

(I have found one bug so far: when the NodeControllers start concurrently, each one names its jmeter-docker container after the task ID, testId, so startup may fail in a container cluster because of the duplicate name. I hope later versions fix this.)
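To make the proportional split concrete, here is a minimal sketch in Python. It is not MeterSphere's code: the function name, the node names, and the largest-remainder rounding rule are my own assumptions, since the official description only says the split is proportional to each node's maximum concurrency.

```python
def split_concurrency(total_users, node_capacities):
    """Split total_users across nodes in proportion to each node's max concurrency.

    node_capacities maps a (hypothetical) node name to its configured maximum
    concurrency. Rounding uses the largest-remainder method, which is an
    assumption; the real platform may round differently.
    """
    total_capacity = sum(node_capacities.values())
    if total_capacity <= 0:
        raise ValueError("resource pool has no capacity")

    # Integer (floor) share for every node.
    shares = {
        node: total_users * cap // total_capacity
        for node, cap in node_capacities.items()
    }

    # Hand out the users lost to rounding, largest fractional part first.
    remainder = total_users - sum(shares.values())
    by_fraction = sorted(
        node_capacities,
        key=lambda n: (total_users * node_capacities[n]) % total_capacity,
        reverse=True,
    )
    for node in by_fraction[:remainder]:
        shares[node] += 1
    return shares


# Hypothetical resource pool with three nodes of different sizes.
pool = {"node-a": 50, "node-b": 30, "node-c": 20}
print(split_concurrency(100, pool))
```

With this hypothetical pool, a test defined with 100 concurrent users would send 50, 30, and 20 users to the three nodes. Whatever rounding rule is used, the shares should always add up to the number of users defined in the test.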

For the performance testing part of the platform, I drew the following diagram of how the components relate, based on my own understanding:

After understanding the concept of resource pools better, I added another layer to the diagram above, and it became the following:

The new relationship diagram shows that the platform supports not only multi-task parallel testing (a NodeController starts multiple JMeter Docker instances, and those instances do not depend on fixed ports) but also distributed concurrent testing (a resource pool schedules multiple NodeControllers to apply load together). This makes the overall structure of the platform fairly clear. It avoids the jmeter-slave contention that traditional JMeter distributed stress testing platforms suffer when running multiple tasks (as everyone knows, a jmeter-slave occupies port 1099 exclusively and cannot be invoked by several tasks in parallel), and it meets the need for distributed concurrent testing on a per-task basis.
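In the distributed case, several NodeControllers each start a container for the same task, which is where the duplicate-name problem I mentioned earlier comes from. The sketch below only illustrates the collision and one possible way around it. The function names and the node-index suffix are hypothetical and are not taken from the MeterSphere source.

```python
def names_by_test_id(test_id, node_count):
    """Every node names its container after the task ID alone."""
    return [test_id for _ in range(node_count)]


def names_with_node_suffix(test_id, node_count):
    """Hypothetical fix: append the node's index so each name is unique."""
    return [f"{test_id}-{index}" for index in range(node_count)]


def has_collision(names):
    """True if at least two containers would share a name."""
    return len(set(names)) < len(names)


test_id = "abc123"  # made-up task ID
print(has_collision(names_by_test_id(test_id, 3)))
print(has_collision(names_with_node_suffix(test_id, 3)))
```

Any suffix that differs per node (an index, the node's IP, a random string) would serve the same purpose, as long as the task ID stays in the name so the containers can still be traced back to their task.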

Note: The way JMeter sends test results to Kafka is also simple. It uses the third-party backend listener jmeter-backend-listener-kafka to send JMeter's real-time monitoring data to Kafka, and Kafka's ability to handle high-throughput, distributed streaming data gives the JMeter cluster highly concurrent aggregation of test results.
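The aggregation step can be pictured with a small sketch. It uses plain Python dictionaries in place of real Kafka messages, and the field names (`test_id`, `node`, `elapsed_ms`, `success`) are my own assumptions rather than the actual message format produced by the listener or consumed by data-streaming.

```python
from collections import defaultdict


def aggregate_by_test(messages):
    """Group sample results from all nodes by test ID and summarize them."""
    totals = defaultdict(lambda: {"samples": 0, "errors": 0, "elapsed_sum": 0})
    for message in messages:
        entry = totals[message["test_id"]]
        entry["samples"] += 1
        entry["errors"] += 0 if message["success"] else 1
        entry["elapsed_sum"] += message["elapsed_ms"]

    # One summary per test task, no matter which node produced each sample.
    return {
        test_id: {
            "samples": entry["samples"],
            "error_rate": entry["errors"] / entry["samples"],
            "avg_elapsed_ms": entry["elapsed_sum"] / entry["samples"],
        }
        for test_id, entry in totals.items()
    }


# Made-up samples from two nodes running the same task, plus a second task.
messages = [
    {"test_id": "test-1", "node": "node-a", "elapsed_ms": 100, "success": True},
    {"test_id": "test-1", "node": "node-b", "elapsed_ms": 300, "success": False},
    {"test_id": "test-2", "node": "node-a", "elapsed_ms": 50, "success": True},
]
report = aggregate_by_test(messages)
print(report["test-1"])
```

The point is that the key, not the node, decides which report a sample belongs to. That is why independent JMeter instances on different nodes can still produce one combined report per task.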

MeterSphere official website address: MeterSphere - Open Source Continuous Testing Platform - Official Website

GitHub source address: https://github.com/metersphere

Release address: https://github.com/metersphere/metersphere/releases

My open source project recommendation: Jmeter-based performance stress testing platform implementation

Good tools alone are not enough for performance testing. You also need solid theory and hands-on skills. If you are interested, take a look at my recorded course "Understanding the Core Knowledge of Performance Testing".