A summary of concurrency knowledge points

- Concurrency

- One: Atomicity, visibility, and ordering

- Two: The three states of a process

- Three: Thread states in the JVM

- Four: CAS operation analysis (an optimistic lock with extremely fine lock granularity)

- Five: The heavyweight lock introduced by synchronized, plus biased locks and lightweight locks

- Six: ThreadPoolExecutor thread pool concepts and parameter analysis

- Seven: Concurrent collections in Java

Concurrency

One: Atomicity, visibility, and ordering

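1) Atomicity: the Java memory model guarantees that reads and writes of a single variable are atomic (non-volatile long and double are the exception). Compound operations such as i++ are not atomic and need synchronized, a lock, or an atomic class.

2) Visibility: during execution each thread works on its own working memory, a private copy of the variables it uses. Only with volatile, synchronized, or final is a new value guaranteed to be written back to main memory promptly so that other threads can see it.

3) Ordering: operations look ordered when observed from inside the thread that performs them and unordered when observed from another thread. Ordering is guaranteed by the happens-before principle, volatile, and synchronized.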
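
As a minimal sketch of the visibility point above, the following example uses a volatile flag to stop a worker thread. The class name `VisibilityDemo` and the method `stopWorker` are hypothetical names chosen for illustration. Without volatile on the flag, the worker would not be guaranteed to observe the write and might keep spinning.

```java
import java.util.concurrent.TimeUnit;

class VisibilityDemo {
    // volatile: a write by one thread is visible to later reads by other threads
    private static volatile boolean running = true;

    /** Starts a spinning worker, clears the flag, and reports whether the worker stopped. */
    static boolean stopWorker() {
        running = true;
        Thread worker = new Thread(() -> {
            while (running) {
                // busy-wait until another thread clears the flag
            }
        });
        worker.setDaemon(true); // never keep the JVM alive because of this demo thread
        worker.start();
        try {
            TimeUnit.MILLISECONDS.sleep(50);
            running = false;      // volatile write, published to the worker
            worker.join(2000);    // wait up to two seconds for the worker to exit
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + stopWorker());
    }
}
```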

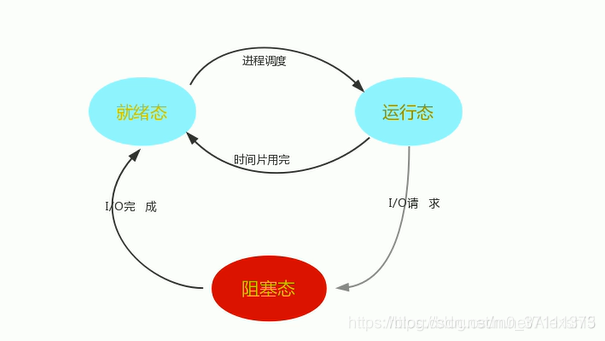

Two: The three states of a process

Running state -> Blocked state: the running process has to wait for some event (for example, I/O completion) and cannot continue, so it enters the blocked state.

Running state -> Ready state: the time slice allocated to each process is limited. When the running process uses up its time slice, or a higher-priority process appears, it returns to the ready state.

Ready state -> Running state: the CPU scheduler selects a ready process and allocates it a time slice for execution.

Blocked state -> Ready state: the event the process was waiting for has occurred, so the process becomes ready and waits to be scheduled again.

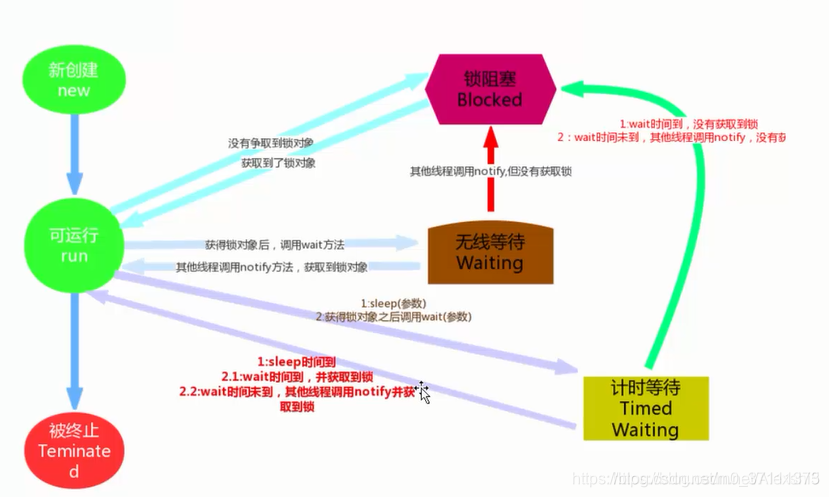

Three: Thread states in the JVM

- New: the thread has been created but not yet started.

- Runnable: after start() is called, the thread is runnable (running or ready to run).

- Blocked: when the thread fails to obtain the lock object, it enters the blocked state, waits to acquire the lock, and returns to the runnable state once it gets it.

- Waiting: the thread has acquired the lock object but calls wait(), so it waits indefinitely until another thread calls notify(). It must reacquire the lock object before it can return to the runnable state.

- Timed waiting: calling sleep(time) or wait(time) puts the thread into timed waiting. When the time is up, a thread that called wait(time) must reacquire the lock object before it can become runnable; if it cannot get the lock, it enters the blocked state.

- Terminated: the thread has finished running.
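
The states listed above can be observed with `Thread.getState()`. The sketch below, with the hypothetical class name `ThreadStateDemo`, records three of them: the main thread holds a lock so that the second thread is forced into the blocked state before it is allowed to finish.

```java
import java.util.ArrayList;
import java.util.List;

class ThreadStateDemo {
    /** Returns the states observed for one thread: before start, while blocked, after finishing. */
    static List<Thread.State> observeStates() {
        List<Thread.State> seen = new ArrayList<>();
        Object lock = new Object();
        Thread t = new Thread(() -> {
            synchronized (lock) {
                // nothing to do; the thread only needs to compete for the lock
            }
        });
        seen.add(t.getState()); // NEW: created but not started
        try {
            synchronized (lock) {
                t.start();
                // t cannot enter its synchronized block while this thread holds the lock
                while (t.getState() != Thread.State.BLOCKED) {
                    Thread.sleep(1);
                }
                seen.add(t.getState()); // BLOCKED: waiting for the lock
            }
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        seen.add(t.getState()); // TERMINATED: run() has returned
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(observeStates()); // prints [NEW, BLOCKED, TERMINATED]
    }
}
```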

Four: CAS operation analysis (an optimistic lock with extremely fine lock granularity)

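Source of AtomicInteger.incrementAndGet() (JDK 8):

    // java.util.concurrent.atomic.AtomicInteger
    public final int incrementAndGet() {
        return unsafe.getAndAddInt(this, valueOffset, 1) + 1;
    }

    // sun.misc.Unsafe
    public final int getAndAddInt(Object var1, long var2, int var4) {
        int var5;
        do {
            // read the current value of the field at offset var2
            var5 = this.getIntVolatile(var1, var2);
            // retry until no other thread changed the value in between
        } while (!this.compareAndSwapInt(var1, var2, var5, var5 + var4));
        return var5;
    }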

1. CAS takes three parameters, CAS(V, E, N): V is the variable to be updated, E is the expected value, and N is the new value. V is set to N only when the value of V equals E. If V and E differ, another thread has already updated V, and the current thread does nothing. In this general description, CAS returns the actual current value of V; Java's compareAndSwapInt instead returns a boolean that says whether the swap succeeded.

2. compareAndSwapInt is a native method.

3. The underlying implementation principle (HotSpot on x86): mov instructions load exchange_value and the other operands into registers, the comparison is made against the accumulator register, and a lock-prefixed cmpxchg instruction writes the result back so that the update is atomic and visible in main memory.
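
The retry loop described above can also be written against the public `AtomicInteger.compareAndSet` method, without touching `Unsafe`. This is a minimal sketch rather than the JDK's own code; `CasCounter`, `increment`, and `runDemo` are hypothetical names used only for illustration.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CasCounter {
    private final AtomicInteger value = new AtomicInteger();

    /** Increments with an explicit CAS retry loop and returns the new value. */
    int increment() {
        int expected;
        int updated;
        do {
            expected = value.get();   // E: the value we believe is current
            updated = expected + 1;   // N: the value we want to install
        } while (!value.compareAndSet(expected, updated)); // retry if V no longer equals E
        return updated;
    }

    int get() {
        return value.get();
    }

    /** Runs the given number of threads, each incrementing perThread times; returns the final count. */
    static int runDemo(int threads, int perThread) {
        CasCounter counter = new CasCounter();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.increment();
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runDemo(4, 1000)); // prints 4000
    }
}
```

No lock is taken at any point: a thread that loses the race simply rereads the value and tries again, which is why CAS is described as an optimistic lock.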

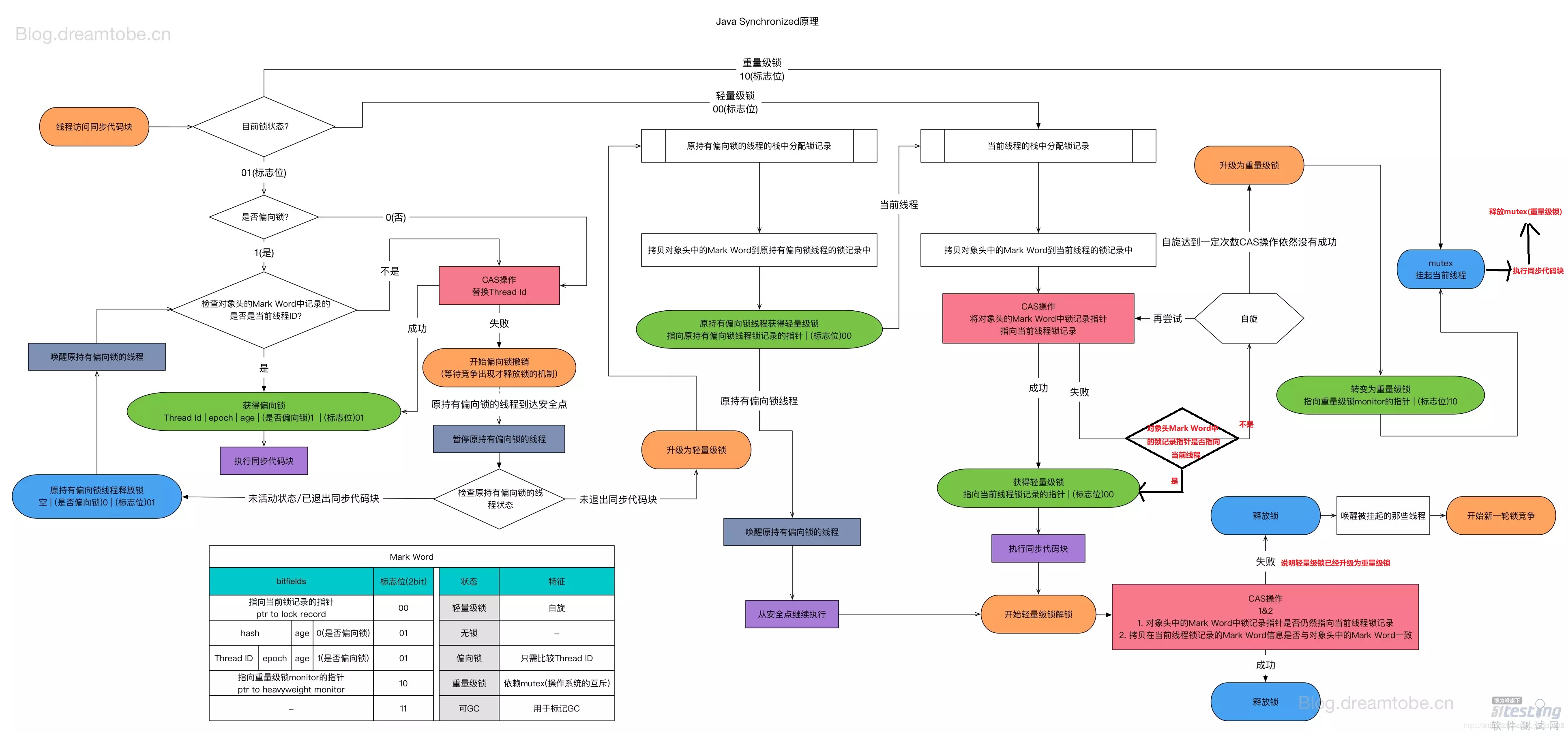

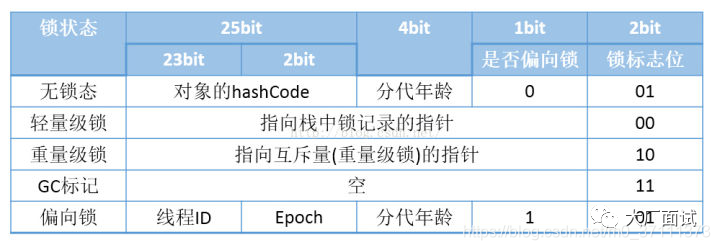

Five: The heavyweight lock introduced by synchronized, plus biased locks and lightweight locks

5.1 Java objects

- Object layout: object header + instance data + alignment padding

- Object header: Mark Word + pointer to the class the object belongs to (an array object also stores its length)

5.2 How to upgrade the lock

- Biased lock -> Lightweight lock -> Heavyweight lock

- Biased lock upgrade: once a thread holds a biased lock, if another thread tries to acquire the lock while the holder is still executing inside the synchronized block, the biased lock is upgraded to a lightweight lock.

- Lightweight lock upgrade: a thread uses a CAS operation to replace the lock object's Mark Word with a pointer to the lock record in its own stack, which holds a copy of the Mark Word. If the CAS succeeds, the current thread acquires the lock. If it fails, the Mark Word already points to another thread's lock record, which means the thread is competing with other threads for the lock, so it spins and retries. When the number of spins exceeds a threshold, the lock is upgraded to a heavyweight lock.

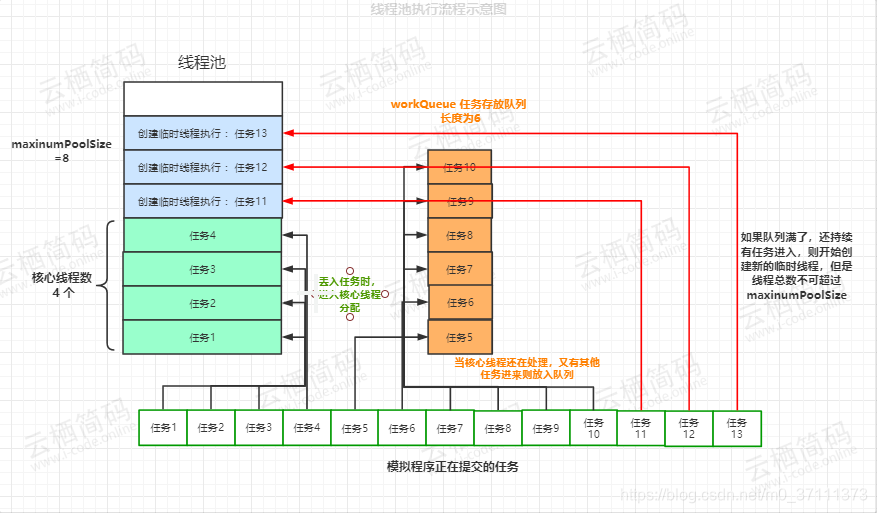

Six: ThreadPoolExecutor thread pool concepts and parameter analysis

6.1 Main parameters

| Parameter name | Meaning |
|---|---|
| corePoolSize | Number of core threads |
| maximumPoolSize | Maximum number of threads |
| keepAliveTime | How long an idle non-core thread survives |
| unit | Time unit of keepAliveTime |
| workQueue | Queue that holds tasks waiting to be executed |
| threadFactory | Thread factory, used to create new threads |
| handler | Rejection handler, invoked when the pool refuses a task |

corePoolSize: the number of core threads. By default these threads are not reclaimed after creation; they stay resident in the pool.

maximumPoolSize: the maximum number of threads the pool is allowed to create. It comes into play once all core threads are busy and the queue is full.

workQueue: holds the tasks waiting to be executed. When all core threads are busy, incoming tasks are placed in the queue. Only when the queue is full and tasks keep arriving does the pool start creating new threads.

keepAliveTime, unit: the idle survival time of threads beyond the core count. Core threads are not reclaimed, but threads beyond the core count are reclaimed once they have been idle for this long.

threadFactory: the thread factory, used to create the threads that run tasks.

handler: two situations trigger the rejection policy. 1. shutdown has been called, so tasks submitted afterwards are rejected. 2. The pool has reached its maximum number of threads and the queue is full, so new tasks are rejected.
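
To make the order "core threads, then queue, then extra threads" concrete, here is a small sketch that builds a pool with all seven parameters and records the pool size as blocked tasks are submitted. The class name `PoolSizingDemo` and the chosen sizes (2 core threads, 4 maximum, queue capacity 2) are assumptions made for the example.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class PoolSizingDemo {
    /** Returns the pool size after 2, 4, and 6 blocked tasks have been submitted. */
    static int[] run() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2,                                   // corePoolSize
                4,                                   // maximumPoolSize
                30, TimeUnit.SECONDS,                // keepAliveTime, unit
                new ArrayBlockingQueue<>(2),         // workQueue with capacity 2
                Executors.defaultThreadFactory(),    // threadFactory
                new ThreadPoolExecutor.AbortPolicy() // handler
        );
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try {
                release.await(); // keep the thread busy until the demo is done
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        int[] sizes = new int[3];
        for (int i = 0; i < 2; i++) pool.execute(blocker);
        sizes[0] = pool.getPoolSize(); // two core threads were created
        for (int i = 0; i < 2; i++) pool.execute(blocker);
        sizes[1] = pool.getPoolSize(); // tasks went into the queue, no new threads
        for (int i = 0; i < 2; i++) pool.execute(blocker);
        sizes[2] = pool.getPoolSize(); // queue was full, so two extra threads were created
        release.countDown();
        pool.shutdown();
        return sizes;
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(run())); // prints [2, 2, 4]
    }
}
```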

6.2 Rejection policy

- ThreadPoolExecutor.AbortPolicy: Discard the task and throw RejectedExecutionException.

- ThreadPoolExecutor.DiscardPolicy: also discards tasks, but does not throw exceptions.

- ThreadPoolExecutor.DiscardOldestPolicy: discards the oldest task in the queue (the one at the head), then tries to execute the new task again (repeating this process).

- ThreadPoolExecutor.CallerRunsPolicy: the task is run by the calling thread, that is, the thread that submitted it.
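
The following sketch shows two of these policies on a deliberately saturated pool (one thread, one queue slot). `RejectionDemo`, `saturatedPool`, `abortRejects`, and `callerRunsOnSubmitter` are hypothetical names for illustration only.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class RejectionDemo {
    /** Builds a pool with one thread and one queue slot, then fills both with blocked tasks. */
    static ThreadPoolExecutor saturatedPool(RejectedExecutionHandler handler, CountDownLatch release) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.SECONDS, new ArrayBlockingQueue<>(1), handler);
        Runnable blocker = () -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        pool.execute(blocker); // occupies the only worker thread
        pool.execute(blocker); // fills the only queue slot
        return pool;
    }

    /** AbortPolicy: the third task is rejected with RejectedExecutionException. */
    static boolean abortRejects() {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = saturatedPool(new ThreadPoolExecutor.AbortPolicy(), release);
        try {
            pool.execute(() -> { });
            return false;
        } catch (RejectedExecutionException e) {
            return true;
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    /** CallerRunsPolicy: the third task runs on the submitting thread instead. */
    static boolean callerRunsOnSubmitter() {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = saturatedPool(new ThreadPoolExecutor.CallerRunsPolicy(), release);
        Thread[] ranOn = new Thread[1];
        try {
            pool.execute(() -> ranOn[0] = Thread.currentThread());
            return ranOn[0] == Thread.currentThread();
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(abortRejects());          // prints true
        System.out.println(callerRunsOnSubmitter()); // prints true
    }
}
```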

6.3 Two submission methods of thread pool: submit and execute

- execute can only submit tasks of type Runnable and has no return value. submit can submit tasks of type Runnable or Callable and returns a Future. When the task is a Runnable, the Future's get method returns null.

- When a task run through execute throws an exception, the exception propagates directly out of the worker thread. With submit it is not thrown directly: it is captured and only rethrown, wrapped in an ExecutionException, when the result is fetched with the Future's get method.
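
A short sketch of the submit behaviour described above: a Callable yields a value, a Runnable yields null, and a failing task only surfaces its exception through `Future.get()`. The class name `SubmitDemo` is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class SubmitDemo {
    /** Returns what Future.get() yields for a Callable, a Runnable, and a failing Callable. */
    static List<Object> run() {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        List<Object> results = new ArrayList<>();
        try {
            Callable<Integer> sum = () -> 1 + 2;
            Runnable noop = () -> { };
            Callable<Integer> failing = () -> {
                throw new IllegalStateException("boom");
            };
            results.add(pool.submit(sum).get());  // 3: the Callable's return value
            results.add(pool.submit(noop).get()); // null: a Runnable has no result
            try {
                pool.submit(failing).get();       // the exception surfaces only here
            } catch (ExecutionException e) {
                results.add(e.getCause().getMessage()); // "boom"
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
        return results;
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints [3, null, boom]
    }
}
```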

6.4 workQueue

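- SynchronousQueue: a blocking queue with no capacity; each insert must wait for a matching remove by another thread (direct handoff)

- LinkedBlockingQueue: a linked blocking queue that is effectively unbounded (capacity Integer.MAX_VALUE) unless a capacity is specified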

- ArrayBlockingQueue: a bounded blocking queue supported by an array, FIFO
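
The difference between the three queues shows up in how the non-blocking `offer` method behaves. The sketch below uses the hypothetical class name `QueueCapacityDemo`; the capacity of 1 for the array queue is an arbitrary choice for the example.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.SynchronousQueue;

class QueueCapacityDemo {
    /** Returns the results of four capacity checks, in the order written below. */
    static boolean[] offers() {
        BlockingQueue<String> handoff = new SynchronousQueue<>();
        BlockingQueue<String> bounded = new ArrayBlockingQueue<>(1);
        BlockingQueue<String> linked = new LinkedBlockingQueue<>();
        return new boolean[] {
            handoff.offer("a"),                              // false: no consumer is waiting
            bounded.offer("a"),                              // true: one free slot
            bounded.offer("b"),                              // false: the queue is full
            linked.remainingCapacity() == Integer.MAX_VALUE  // true: default capacity
        };
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(offers())); // prints [false, true, false, true]
    }
}
```

In a thread pool this is what decides when extra threads are created: a SynchronousQueue refuses the task immediately unless a worker is waiting, an ArrayBlockingQueue refuses it once full, and a default LinkedBlockingQueue practically never refuses.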

Seven: Concurrent collections in Java

- CopyOnWriteArrayList is equivalent to a thread-safe ArrayList

- CopyOnWriteArraySet is equivalent to a thread-safe HashSet

- ConcurrentHashMap is equivalent to a thread-safe HashMap

- ConcurrentSkipListMap is equivalent to a thread-safe TreeMap

- ConcurrentSkipListSet is equivalent to a thread-safe TreeSet

- ArrayBlockingQueue is a thread-safe bounded blocking queue implemented by an array

- LinkedBlockingQueue is a blocking queue implemented by a singly linked list (size can be specified)

- ConcurrentLinkedQueue is an unbounded queue implemented by a singly linked list
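
Two of these collections in use, as a minimal sketch with hypothetical names (`ConcurrentCollectionsDemo`, `snapshotIteration`, `concurrentCount`): iterating a CopyOnWriteArrayList while adding to it, which a plain ArrayList would reject with ConcurrentModificationException, and counting from several threads with `ConcurrentHashMap.merge`.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;

class ConcurrentCollectionsDemo {
    /** Adds to the list while iterating it; returns {elements visited, final size}. */
    static int[] snapshotIteration() {
        List<Integer> list = new CopyOnWriteArrayList<>(Arrays.asList(1, 2, 3));
        int visited = 0;
        for (Integer n : list) {   // the iterator works on a snapshot of the array
            list.add(n + 10);      // each write copies the underlying array
            visited++;
        }
        return new int[] {visited, list.size()};
    }

    /** Counts the same key from several threads; returns the final count. */
    static int concurrentCount(int threads, int perThread) {
        ConcurrentHashMap<String, Integer> counts = new ConcurrentHashMap<>();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counts.merge("hits", 1, Integer::sum); // atomic read-modify-write per key
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counts.get("hits");
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(snapshotIteration())); // prints [3, 6]
        System.out.println(concurrentCount(4, 1000));             // prints 4000
    }
}
```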