Overview

1) Ceph cluster: Nautilus release, monitors at 192.168.39.19-21

2) Kubernetes cluster: v1.14.2

3) Integration method: a StorageClass provisions storage dynamically

4) All k8s cluster nodes have the ceph-common package installed.

1) Step 1: Create a pool for Ceph RBD block devices and a regular user in the Ceph cluster

ceph osd pool create ceph-demo 16 16

ceph osd pool application enable ceph-demo rbd

ceph auth get-or-create client.kubernetes mon 'profile rbd' osd 'profile rbd pool=ceph-demo' mgr 'profile rbd pool=ceph-demo'

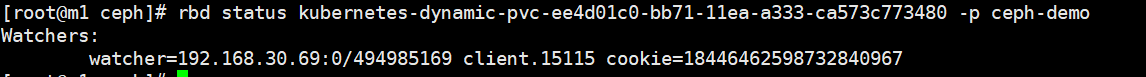

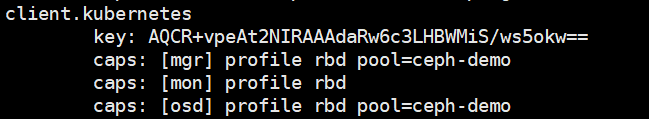

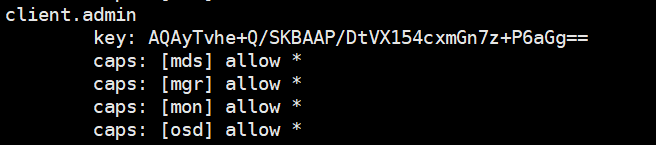

The Ceph cluster now has both the admin user and the kubernetes user, as shown in the figure.
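
The same check can be made from the command line. The following is a minimal sketch: the function name `verify_ceph_setup` is hypothetical, and it assumes a node with the `ceph` CLI and an admin keyring.

```shell
# Hypothetical helper: print the pool's application tag and the user's caps.
# Assumes the ceph CLI and an admin keyring are available on this host.
verify_ceph_setup() {
  pool="$1"   # e.g. ceph-demo
  user="$2"   # e.g. client.kubernetes
  # Shows which application (here: rbd) is enabled on the pool.
  ceph osd pool application get "$pool" || return 1
  # Shows the user's key and its mon/osd/mgr capabilities.
  ceph auth get "$user" || return 1
}

# Usage (on a Ceph admin node):
#   verify_ceph_setup ceph-demo client.kubernetes
```

If the second command fails, the user was probably not created; rerun the `ceph auth get-or-create` command from this step.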

2) Step 2: Create secrets in k8s to store the Ceph users' keys

# Create a secret object that stores the key of the Ceph admin user

kubectl create secret generic ceph-admin --type="kubernetes.io/rbd" --from-literal=key=AQAyTvhe+Q/SKBAAP/DtVX154cxmGn7z+P6aGg== --namespace=kube-system

# Create a secret object that stores the key of the Ceph kubernetes user

kubectl create secret generic ceph-kubernetes --type="kubernetes.io/rbd" --from-literal=key=AQCR+vpeAt2NIRAAAdaRw6c3LHBWMiS/ws5okw== --namespace=kube-system
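
Pasting keys by hand is error-prone, so the key can instead be read straight from Ceph with `ceph auth get-key`. This is a sketch only: the function name `create_rbd_secret` is hypothetical, and it assumes one host has both the `ceph` CLI (with an admin keyring) and a working `kubectl`.

```shell
# Hypothetical helper: read a Ceph user's key and store it in a k8s secret.
# Assumes both ceph (with admin keyring) and kubectl work on this host.
create_rbd_secret() {
  secret_name="$1"              # e.g. ceph-kubernetes
  ceph_user="$2"                # e.g. client.kubernetes
  namespace="${3:-kube-system}" # defaults to the namespace used in this guide
  # get-key prints only the base64 key, without the keyring wrapper.
  key="$(ceph auth get-key "$ceph_user")" || return 1
  kubectl create secret generic "$secret_name" \
    --type="kubernetes.io/rbd" \
    --from-literal=key="$key" \
    --namespace="$namespace"
}

# Usage:
#   create_rbd_secret ceph-admin client.admin
#   create_rbd_secret ceph-kubernetes client.kubernetes
```

The secret type `kubernetes.io/rbd` and the `key` field match the two commands above.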

3) Step 3: Deploy the RBD provisioner (rbd-provisioner from external-storage) in k8s

git clone https://github.com/kubernetes-incubator/external-storage.git

cd external-storage/ceph/rbd/deploy

export NAMESPACE=kube-system

sed -r -i "s/namespace: [^ ]+/namespace: $NAMESPACE/g" ./rbac/clusterrolebinding.yaml ./rbac/rolebinding.yaml

kubectl -n $NAMESPACE apply -f ./rbac

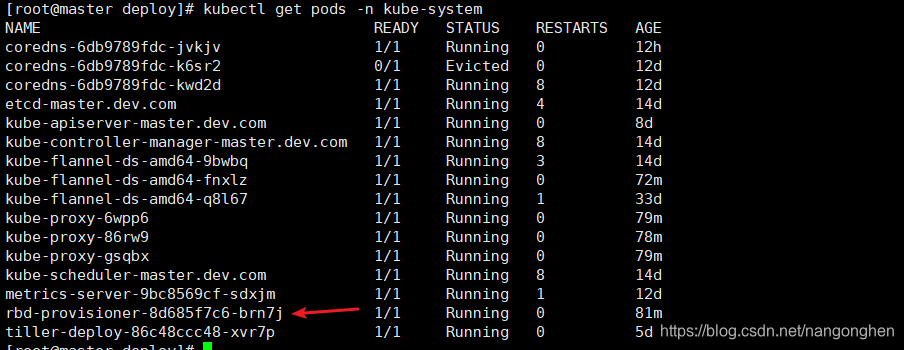

The provisioner is deployed successfully, as shown in the figure.
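
The deployment can also be checked without a screenshot. The sketch below assumes the manifests in `./rbac` create a Deployment named `rbd-provisioner` with the label `app=rbd-provisioner`; confirm both against your checkout with `kubectl -n kube-system get deploy --show-labels`. The function name `check_provisioner` is hypothetical.

```shell
# Hypothetical helper: wait for the provisioner rollout, then list its pods.
# ASSUMPTION: Deployment name and label are "rbd-provisioner"; verify locally.
check_provisioner() {
  ns="${1:-kube-system}"
  # Blocks until the Deployment is rolled out or the timeout expires.
  kubectl -n "$ns" rollout status deployment/rbd-provisioner --timeout=120s &&
    kubectl -n "$ns" get pods -l app=rbd-provisioner -o wide
}

# Usage:
#   check_provisioner kube-system
```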

4) Step 4: Create a StorageClass in k8s

[root@master deploy]# cat storageclass-ceph-rbd.yaml

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: ceph-rbd
    provisioner: ceph.com/rbd
    parameters:
      monitors: 192.168.39.19:6789,192.168.39.20:6789,192.168.39.21:6789
      adminId: admin
      adminSecretName: ceph-admin
      adminSecretNamespace: kube-system
      # The userId field becomes the spec.rbd.user field of the PVs the plugin creates
      userId: kubernetes
      userSecretName: ceph-kubernetes
      userSecretNamespace: kube-system
      pool: ceph-demo
      fsType: xfs
      imageFormat: "2"
      imageFeatures: "layering"
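
The `monitors` parameter is a comma-separated list of `host:port` pairs. As a small sketch, the string can be built from the monitor addresses instead of typed by hand. The names `mon_list` and `MON_PORT` are hypothetical, and 6789 is simply the port used in the manifest above.

```shell
# Hypothetical helper: join monitor addresses into "host:port,host:port,...".
# MON_PORT defaults to 6789, the port used in the StorageClass above.
mon_list() {
  port="${MON_PORT:-6789}"
  out=""
  for host in "$@"; do
    # Prepend a comma only when out already holds an entry.
    out="${out:+$out,}$host:$port"
  done
  printf '%s\n' "$out"
}

# Usage:
#   mon_list 192.168.39.19 192.168.39.20 192.168.39.21
```

After the manifest is written, `kubectl apply -f storageclass-ceph-rbd.yaml` creates the StorageClass and `kubectl get storageclass ceph-rbd` confirms it exists.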

5) Step 5: Create a PVC that specifies the StorageClass in k8s

[root@master deploy]# cat ceph-rbd-pvc-test.yaml

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: ceph-rbd-claim
    spec:
      accessModes:
        - ReadWriteOnce
      storageClassName: ceph-rbd
      resources:
        requests:
          storage: 32Mi

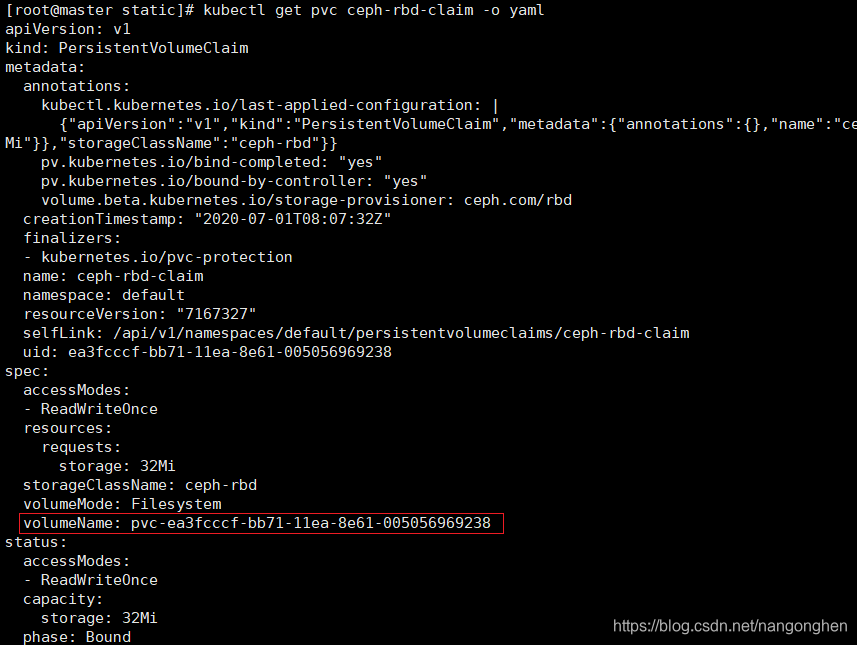

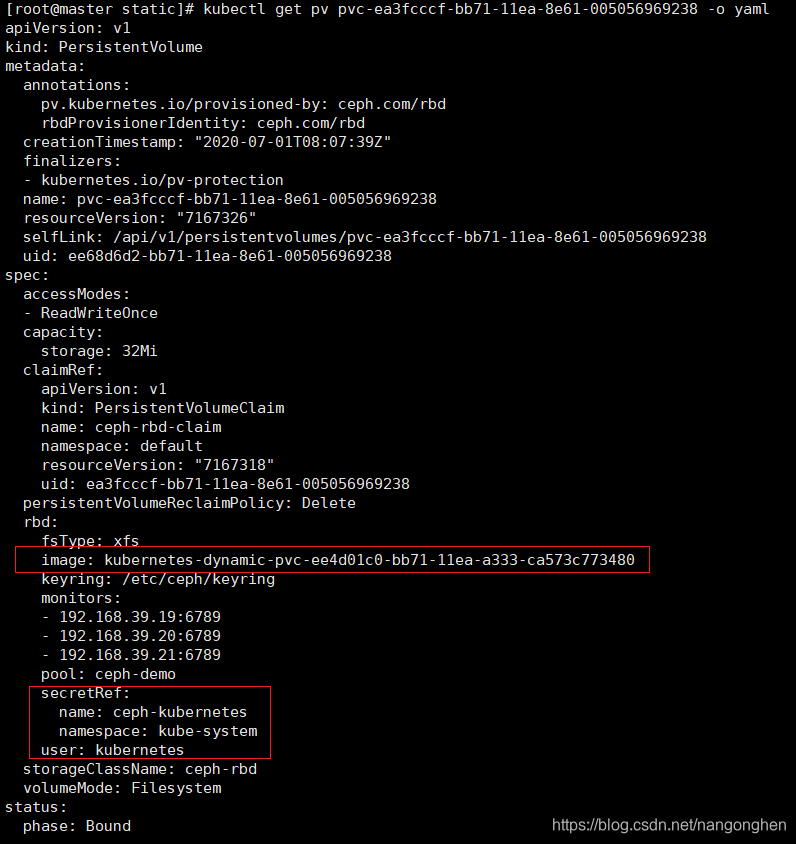

The details of the PVC and PV at this point are shown in the figure.
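
Dynamic provisioning takes a moment, so the claim may sit in `Pending` before it becomes `Bound`. The sketch below polls the claim's phase; the function name `wait_pvc_bound` and the retry count are hypothetical choices. It polls rather than using `kubectl wait --for=jsonpath`, which older kubectl releases such as v1.14 do not offer.

```shell
# Hypothetical helper: poll a PVC until its phase is Bound, or give up.
wait_pvc_bound() {
  pvc="$1"            # e.g. ceph-rbd-claim
  tries="${2:-30}"    # number of polls, 2 seconds apart
  while [ "$tries" -gt 0 ]; do
    phase="$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}' 2>/dev/null)"
    if [ "$phase" = "Bound" ]; then
      echo "PVC $pvc is Bound"
      return 0
    fi
    tries=$((tries - 1))
    sleep 2
  done
  echo "PVC $pvc not Bound (last phase: ${phase:-unknown})" >&2
  return 1
}

# Usage:
#   kubectl apply -f ceph-rbd-pvc-test.yaml
#   wait_pvc_bound ceph-rbd-claim
```

If the claim stays `Pending`, `kubectl describe pvc ceph-rbd-claim` and the provisioner pod's logs usually show the reason.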

6) Step 6: Create a pod that uses the PVC in k8s

[root@master deploy]# cat ceph-busybox-pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: ceph-rbd-busybox
    spec:
      containers:
        - name: ceph-busybox
          image: busybox:1.27
          command: ["sleep", "60000"]
          volumeMounts:
            - name: ceph-vol1
              mountPath: /usr/share/busybox
              readOnly: false
      volumes:
        - name: ceph-vol1
          persistentVolumeClaim:
            claimName: ceph-rbd-claim
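
Creating the pod and waiting for it to become ready can be scripted in one step. This is a minimal sketch; the function name `start_pod_and_wait` and the 120-second timeout are hypothetical choices.

```shell
# Hypothetical helper: create the pod and block until it reports Ready.
start_pod_and_wait() {
  manifest="$1"   # e.g. ceph-busybox-pod.yaml
  pod="$2"        # e.g. ceph-rbd-busybox
  kubectl apply -f "$manifest" || return 1
  # kubectl wait exits non-zero if the condition is not met before the timeout.
  kubectl wait --for=condition=Ready "pod/$pod" --timeout=120s
}

# Usage:
#   start_pod_and_wait ceph-busybox-pod.yaml ceph-rbd-busybox
```

If the wait times out, `kubectl describe pod ceph-rbd-busybox` lists the events, including any failure to map or mount the RBD image.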

7) Step 7: Check the pod's mount and the Ceph RBD information.

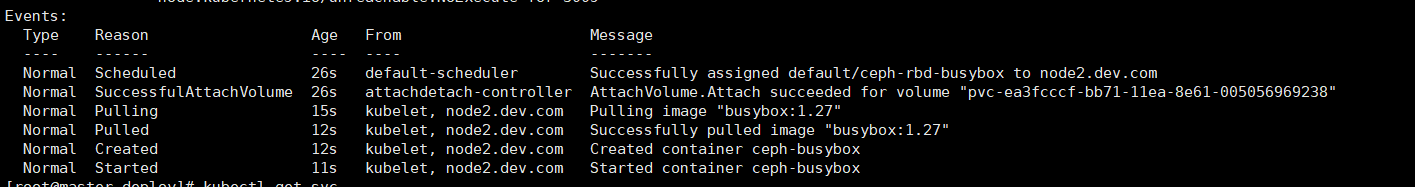

The pod starts correctly; its events are shown in the figure.

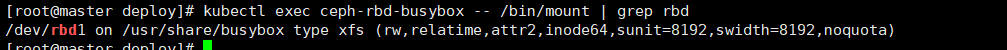

The RBD image is mounted correctly in the pod. To check, enter the pod and run the mount command, as shown in the figure.

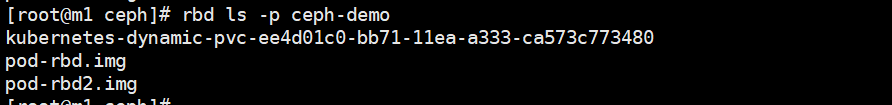

The ceph-demo pool in the Ceph cluster now contains an RBD image created by the provisioner, as shown in the figure.
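
To tie the claim to its image, the PV bound to the PVC can be looked up and its `spec.rbd` fields printed. This is a sketch under the assumption that the provisioner creates PVs with an in-tree `rbd` volume source, as the `spec.rbd.user` comment in Step 4 suggests. The function name `pvc_rbd_image` is hypothetical.

```shell
# Hypothetical helper: print "pool/image" for the PV bound to a PVC.
# ASSUMPTION: the PV uses the in-tree rbd volume source (spec.rbd.*).
pvc_rbd_image() {
  pvc="$1"   # e.g. ceph-rbd-claim
  pv="$(kubectl get pvc "$pvc" -o jsonpath='{.spec.volumeName}')" || return 1
  [ -n "$pv" ] || { echo "PVC $pvc has no bound PV" >&2; return 1; }
  kubectl get pv "$pv" -o jsonpath='{.spec.rbd.pool}/{.spec.rbd.image}'
}

# Usage (kubectl host, then a Ceph node):
#   pvc_rbd_image ceph-rbd-claim
#   rbd info <pool/image printed above>
#   kubectl exec ceph-rbd-busybox -- df -h /usr/share/busybox
```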