I. Introduction

This article focuses on three IO models on Linux: BIO (blocking IO), NIO (non-blocking IO), and IO multiplexing.

II. Basic use of Netcat

Netcat (abbreviated nc) is a powerful networking command-line tool that can perform TCP- and UDP-related operations on Linux, such as port scanning, port redirection, port listening, and even remote connections.

Here, we use nc to simulate a server that receives messages and a client that sends them.

1. Install nc software

sudo yum install -y nc

2. Use nc to create a server that listens on port 9999

nc -l -p 9999 # -l means listen

After it starts successfully, nc blocks, waiting for a connection.

3. Open a new bash session and use nc to create a client that sends messages

nc localhost 9999

Type a message in the client console and check whether the server receives it.

4. Check the file descriptors of the nc process above

ps -ef | grep nc # find the PID of nc; assume it is 2603 here

ls /proc/2603/fd # list the file descriptors of process 2603

You can see a socket under this process: it is the socket nc created for the connection between its client and server.

After these steps, we have a basic understanding of Netcat. Next, we use it to look at BIO.

III. Tracing system calls with strace

About strace: it is a tool that traces the system calls and signals of a process, and we can use it to observe BIO.

Environment: the demonstration below was done on an older Linux version. On newer versions nc no longer uses BIO in this way, so the behavior shown here cannot be reproduced.

1. Use strace to track system calls

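sudo yum install -y strace # install strace

mkdir ~/strace # create a directory to hold the trace output

cd ~/strace # enter this directory

strace -ff -o out nc -l -p 8080 # use strace to trace the system calls made by the command that follows

# -ff: trace all system calls of the process created by the command and of its child processes,

# writing each process's trace to a separate file named by its process ID

# -o: write the trace to files with the given name prefix, here out (giving out.<pid>)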

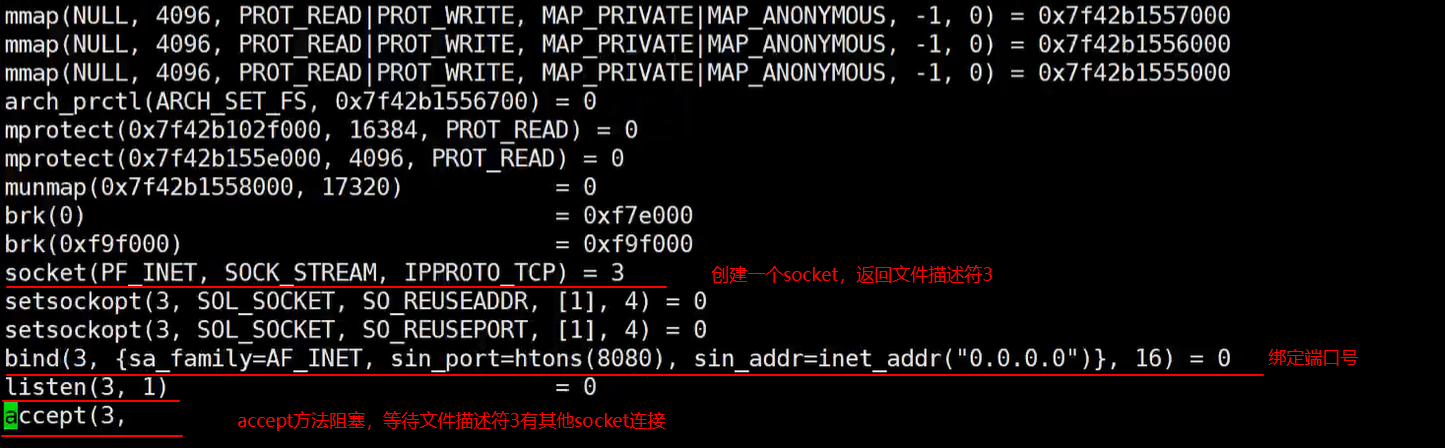

2. View the system calls made by the server

In the directory from the previous step, a file named out.<pid> appears. It contains every system call made after running nc -l -p 8080. Open it with vim:

vim out.92459 # the nc process ID is 92459

Here the server is blocked in accept(), waiting for a client socket to connect.

3. Connect a client and check the system calls

Exit vim and use tail to watch the file instead:

tail -f out.92459

The -f option prints new content as it is appended to the file, which makes it easy to watch the system calls made after the client connects. Then, in another terminal, connect a client:

nc localhost 8080

View system calls

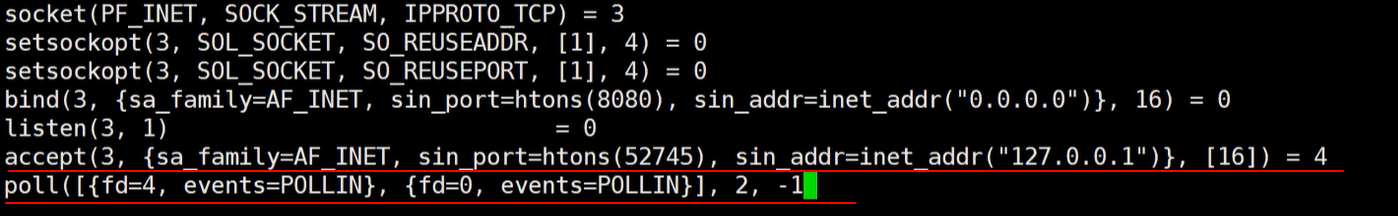

- After the client connects, accept() receives the connection and returns file descriptor 4, the new socket the server uses to communicate with this client.

- The server then uses the multiplexer poll to monitor file descriptors 4 and 0 (0 is standard input). When an event occurs on either descriptor, the server reads from that descriptor; if no event occurs, poll blocks.

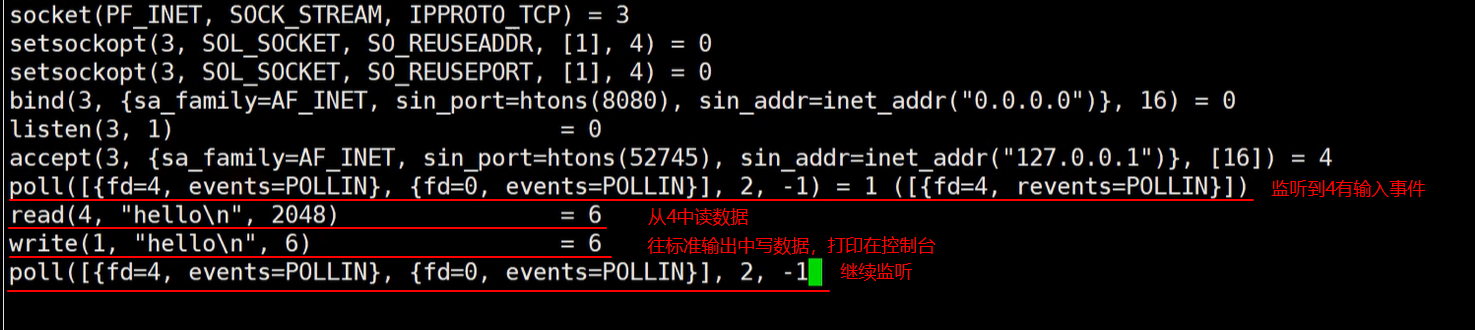

4. Send a message from the client and check the system calls

When the client sends data to the server, poll reports an event on the socket, the server handles it, and then blocks again until the next event occurs.

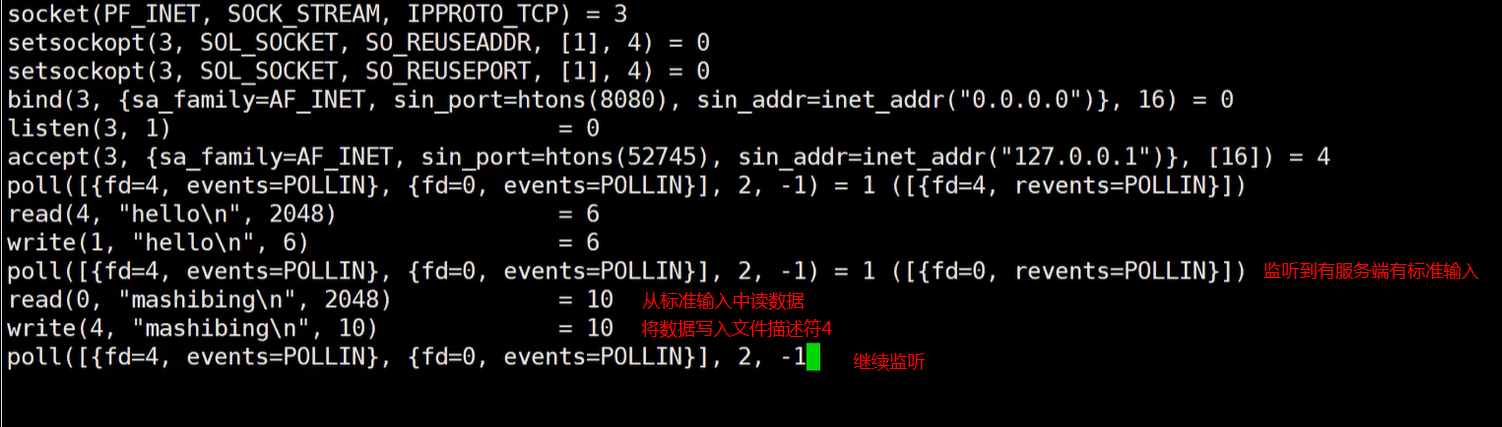

5. Send data from the server to the client and check the system calls

Data sent by the server has to be typed on the keyboard, that is, on standard input (fd 0). The server reads it from fd 0 and writes it to socket 4.

Share More on C / C ++ Linux back-end development of the underlying principles of knowledge and learning network to enhance the learning materials , complete technology stack, content knowledge, including Linux, Nginx, ZeroMQ, MySQL, Redis, fastdfs, MongoDB, ZK, streaming media, audio and video development , Linux kernel, CDN, P2P, K8S, Docker, TCP/IP, coroutine, DPDK, etc.

Click on the video learning materials: C/C++Linux server development/Linux background architect-learning video

IV. BIO (blocking IO)

In Section III, we used strace to watch the system calls made by nc. That section in fact demonstrates BIO. Below we summarize those system calls to build an intuitive understanding of BIO.

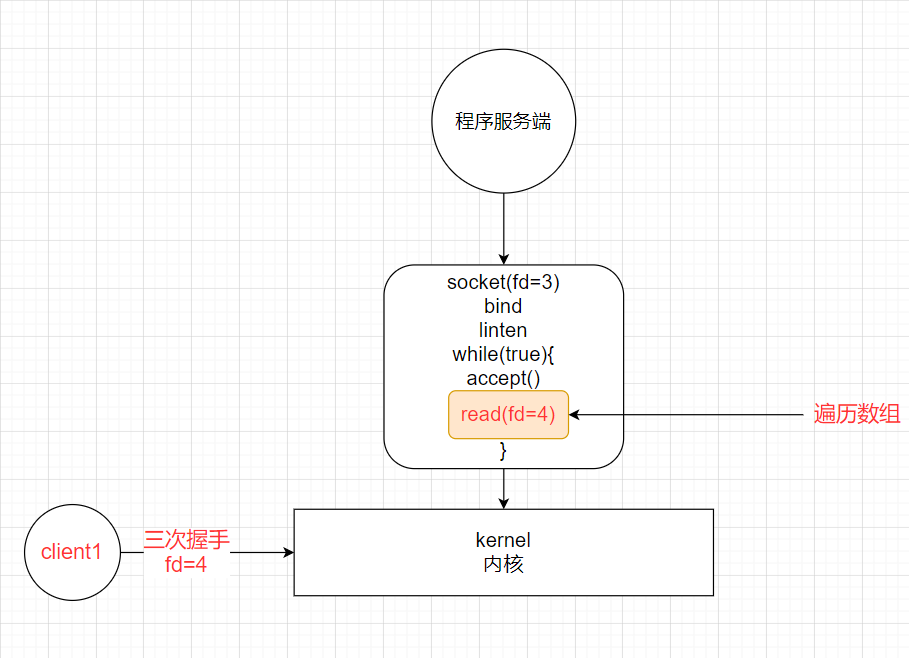

1. Single-threaded mode

1.1 Process demonstration

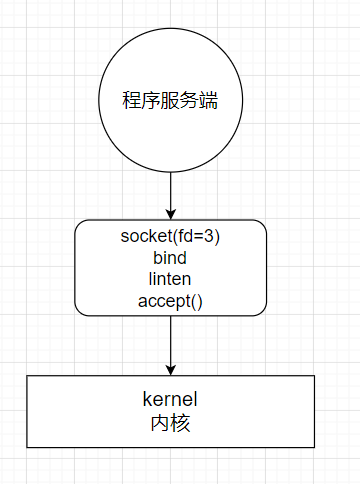

1. Server start

The server starts and waits for a socket connection; accept() blocks.

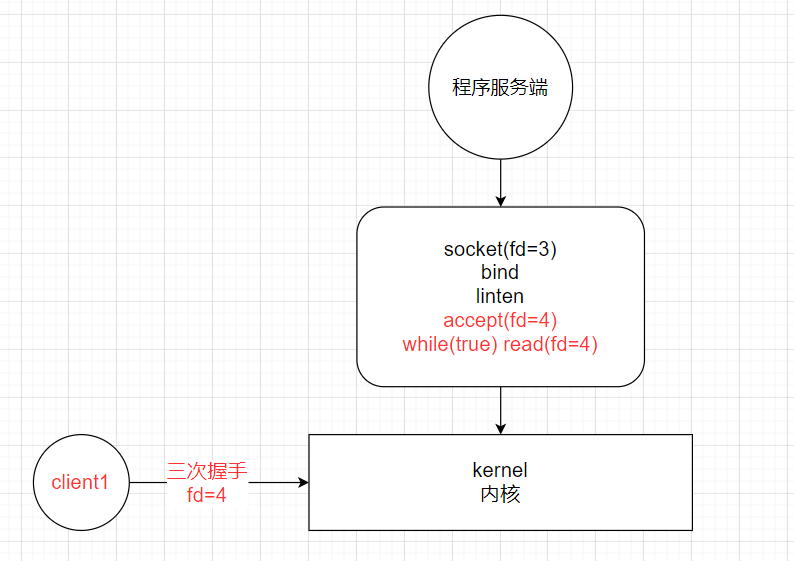

2. A client connects but sends no data

When the client connects, accept() returns. Because client1 has not sent any data yet, read() blocks.

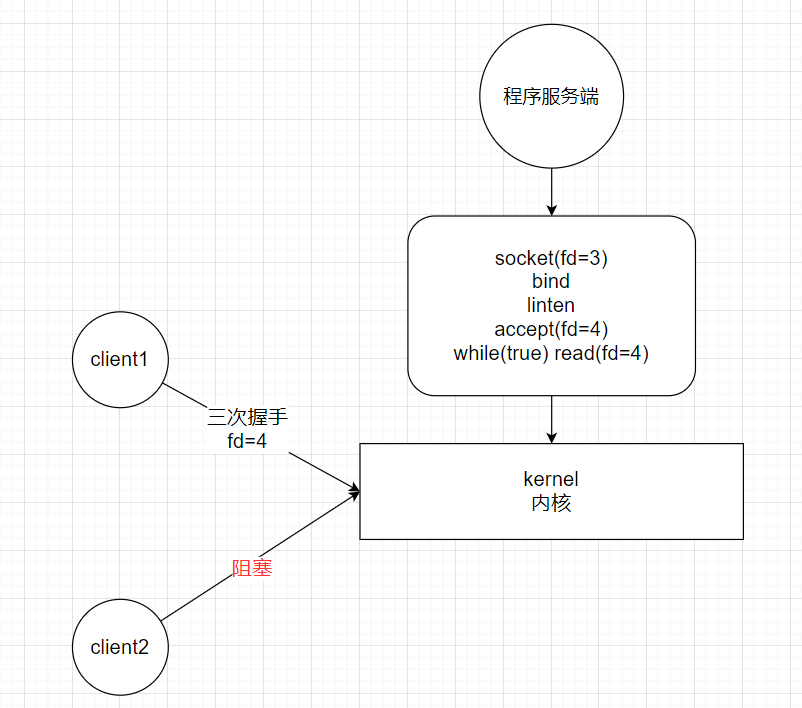

3. Another client connects

Because the process is blocked in read(), accept() cannot run, so the server can handle only one socket at a time.
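The single-threaded flow above can be sketched in C as follows. This is a minimal sketch, not the article's original code: the helper names `make_listen_socket`, `serve_client` and `bio_single_thread_loop` are hypothetical, and echoing data back to the client is an assumption chosen to keep the example small.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Create a blocking TCP socket listening on `port` on all interfaces. */
int make_listen_socket(unsigned short port) {
    int lfd = socket(AF_INET, SOCK_STREAM, 0);
    if (lfd < 0) return -1;
    int on = 1;
    setsockopt(lfd, SOL_SOCKET, SO_REUSEADDR, &on, sizeof on);
    struct sockaddr_in addr;
    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_ANY);
    addr.sin_port = htons(port);
    if (bind(lfd, (struct sockaddr *)&addr, sizeof addr) < 0 || listen(lfd, 128) < 0) {
        close(lfd);
        return -1;
    }
    return lfd;
}

/* Echo everything one client sends until it disconnects.
 * read() blocks while the client is silent, and the whole process waits with it.
 * Returns the number of bytes echoed. */
long serve_client(int cfd) {
    char buf[1024];
    long total = 0;
    ssize_t n;
    while ((n = read(cfd, buf, sizeof buf)) > 0) {   /* blocks here */
        if (write(cfd, buf, (size_t)n) < 0) break;   /* partial writes ignored for brevity */
        total += n;
    }
    close(cfd);
    return total;
}

/* Single-threaded BIO: the next accept() runs only after the current
 * client has disconnected. */
void bio_single_thread_loop(int lfd) {
    for (;;) {
        int cfd = accept(lfd, NULL, NULL);           /* blocks here */
        if (cfd < 0) continue;
        serve_client(cfd);
    }
}
```

A server would call `bio_single_thread_loop(make_listen_socket(8080))`. While one client stays silent, `serve_client()` sits in `read()` and the next `accept()` never runs, which is the behavior described in step 3.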

1.2 Existing problems

The model above has a serious problem. If a client connects to the server but delays sending data, the process stays blocked in read(), so no other client can connect. Only one client can be served at a time, which is very unfriendly to users.

1.3 How to solve

The fix is simple: use multiple threads. Whenever a socket connects, the server creates a new thread to handle it, so each read() blocks only its own thread and never the main thread. Multiple sockets can then be served at once: whichever socket has data is read by its own thread.

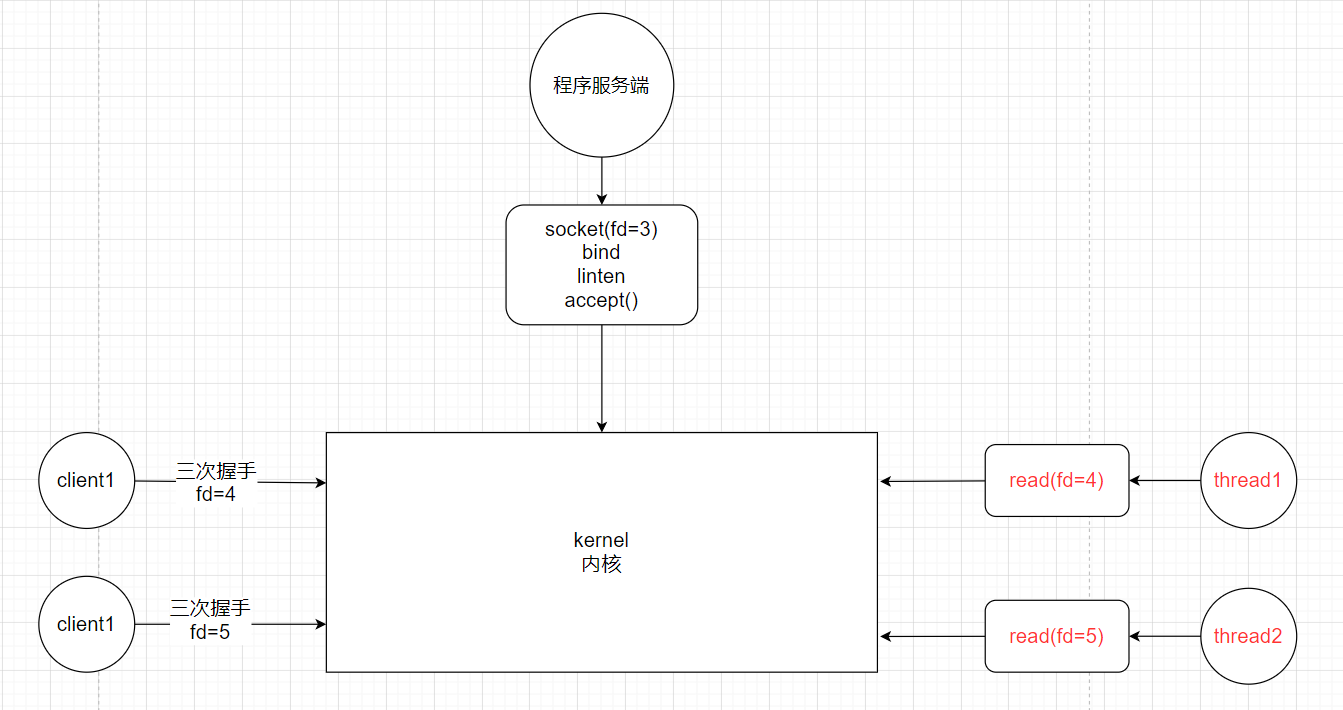

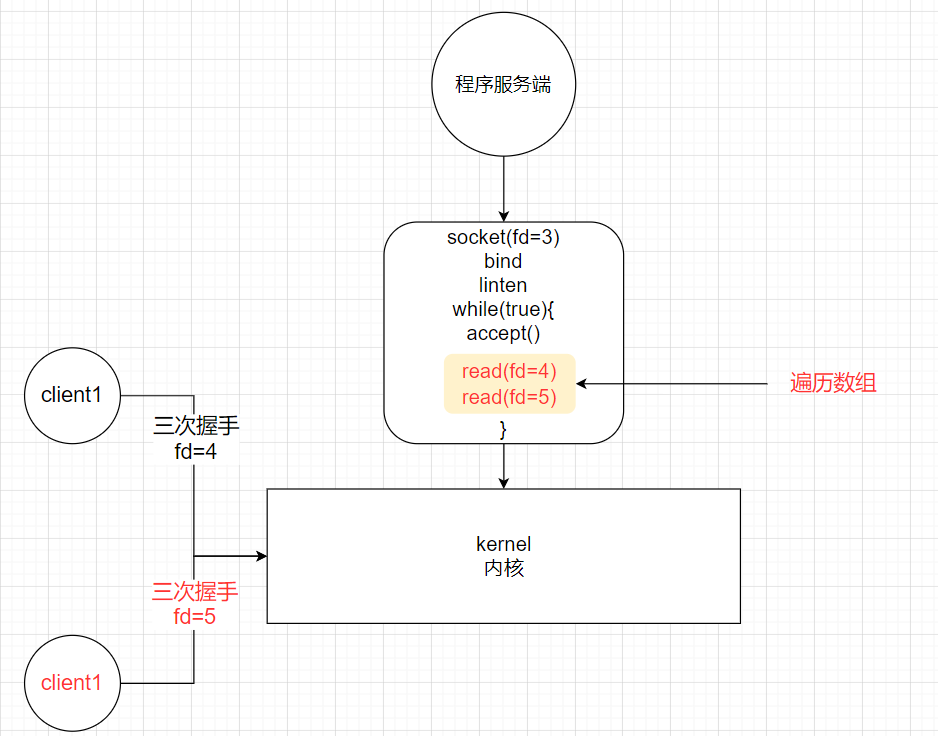

2. Multi-threaded mode

2.1 Process demonstration

- The server's main thread is only responsible for waiting for client connections; it blocks in accept().

- Client 1 connects; the server starts a thread (thread1) that calls read(), and the main thread goes back to waiting.

- Client 2 connects; the server starts another thread that calls read().

- Whenever a socket on any thread receives data, its read() returns immediately and the data is processed.
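A minimal sketch of this thread-per-client model, assuming POSIX threads (`pthread_create`); `spawn_client_thread` and `bio_thread_per_client_loop` are hypothetical names, and the per-client work is again a simple echo chosen for illustration.

```c
#include <pthread.h>
#include <stdint.h>
#include <sys/socket.h>
#include <unistd.h>

/* Per-client thread: blocks in read() on its own socket only. */
static void *client_thread(void *arg) {
    int cfd = (int)(intptr_t)arg;
    char buf[1024];
    ssize_t n;
    while ((n = read(cfd, buf, sizeof buf)) > 0) {   /* blocks this thread only */
        if (write(cfd, buf, (size_t)n) < 0) break;   /* echo back */
    }
    close(cfd);
    return NULL;
}

/* Hand a connected socket to a new detached thread; 0 on success. */
int spawn_client_thread(int cfd) {
    pthread_t tid;
    if (pthread_create(&tid, NULL, client_thread, (void *)(intptr_t)cfd) != 0)
        return -1;
    pthread_detach(tid);
    return 0;
}

/* Main thread: only waits for new connections.
 * One thread per client, so 10,000 clients mean 10,000 threads. */
void bio_thread_per_client_loop(int lfd) {
    for (;;) {
        int cfd = accept(lfd, NULL, NULL);           /* blocks the main thread */
        if (cfd < 0) continue;
        if (spawn_client_thread(cfd) != 0) close(cfd);
    }
}
```

An idle client now blocks only its own thread's `read()`, so other clients are served normally. The price is one thread per connection, which is the problem discussed next.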

2.2 Existing problems

The multi-threaded model above looks perfect, but it also has serious problems. Every new client requires a new thread: 10,000 clients mean 10,000 threads. User mode cannot create a thread on its own; it must make a system call (historically through the int 0x80 software interrupt) so that the kernel creates the thread. Each creation therefore involves a switch between user mode and kernel mode, every thread needs its own stack memory, and switching among thousands of threads (context switching) is very resource-intensive.

2.3 How to solve

The first solution is a thread pool. This works when there are few client connections, but with a large number of users it is hard to size the pool: if it is too large, memory may run out, so it is not feasible.

The second solution: multiple threads are needed only because read() blocks. If read() could be made non-blocking, there would be no need for multiple threads. This leads to another IO model, NIO (non-blocking IO).

V. NIO (non-blocking IO)

1. Process demonstration

1. The server has just been created and there is no client connection

In NIO, accept() is also non-blocking. The server calls it repeatedly inside a while loop; when no client is connecting, it returns immediately instead of waiting.

2. When a client connects

3. When there is a second client to connect

2. Summary

In NIO mode, everything is non-blocking:

- accept() is non-blocking: if no client is connecting, it returns -1 immediately with errno set to EAGAIN or EWOULDBLOCK.

- read() is non-blocking: if there is no data to read, it returns -1 with the same errors; if there is data, the call takes only as long as copying the data.

In NIO mode, there is only one thread:

- When a client connects, its socket is added to an array. On every pass of the loop, the thread walks the array and calls read() on each socket to see whether it has data.

- This way, a single thread can handle the connections and reads of many clients.
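The NIO loop summarized above might look like this in C. It is a sketch under assumptions: the listening socket has already been switched to non-blocking mode, and `set_nonblocking`, `nio_poll_once` and `MAX_CLIENTS` are hypothetical names. Setting `O_NONBLOCK` through `fcntl()` is the standard way to make `accept()` and `read()` return `EAGAIN`/`EWOULDBLOCK` instead of blocking.

```c
#include <errno.h>
#include <fcntl.h>
#include <sys/socket.h>
#include <unistd.h>

#define MAX_CLIENTS 1024

/* Put a descriptor into non-blocking mode. */
int set_nonblocking(int fd) {
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags < 0) return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK);
}

/* One pass of the NIO loop over a non-blocking listening socket `lfd` and
 * the user-space array `clients` (length *nclients).
 * Returns how many sockets actually had data during this pass. */
int nio_poll_once(int lfd, int *clients, int *nclients) {
    /* Non-blocking accept(): returns -1 (EAGAIN/EWOULDBLOCK) if nobody is connecting. */
    int cfd = accept(lfd, NULL, NULL);
    if (cfd >= 0) {
        /* On Linux the accepted socket does not inherit O_NONBLOCK. */
        if (*nclients < MAX_CLIENTS && set_nonblocking(cfd) == 0)
            clients[(*nclients)++] = cfd;
        else
            close(cfd);
    }
    int ready = 0;
    char buf[1024];
    /* One read() system call per socket per pass, even for idle sockets. */
    for (int i = 0; i < *nclients; i++) {
        ssize_t n = read(clients[i], buf, sizeof buf);
        if (n > 0) {
            ready++;                          /* process buf[0..n) here */
        } else if (n == 0 || (errno != EAGAIN && errno != EWOULDBLOCK)) {
            close(clients[i]);                /* peer closed or real error */
            clients[i] = clients[*nclients - 1];
            (*nclients)--;
            i--;                              /* re-check the entry moved here */
        }
        /* n == -1 with EAGAIN: no data yet, move on */
    }
    return ready;
}
```

A server would call `nio_poll_once()` in an endless loop. Every pass issues one `read()` system call per connected socket, whether or not it has data.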

3. Existing problems

NIO solves BIO's need for multiple threads: one NIO thread can serve many sockets. That seems perfect, but problems remain.

This model works well with few clients, but with many clients, say 10,000 connections, every pass of the loop traverses all 10,000 sockets. If only 10 of them have data, the other 9,990 checks are wasted work. Moreover, the traversal happens in user mode: to find out whether a socket has data, user space has to call the kernel's read(), which switches between user mode and kernel mode. Every socket on every pass costs one such switch, which adds up to a lot of overhead.

Because of these problems, IO multiplexing came into being.


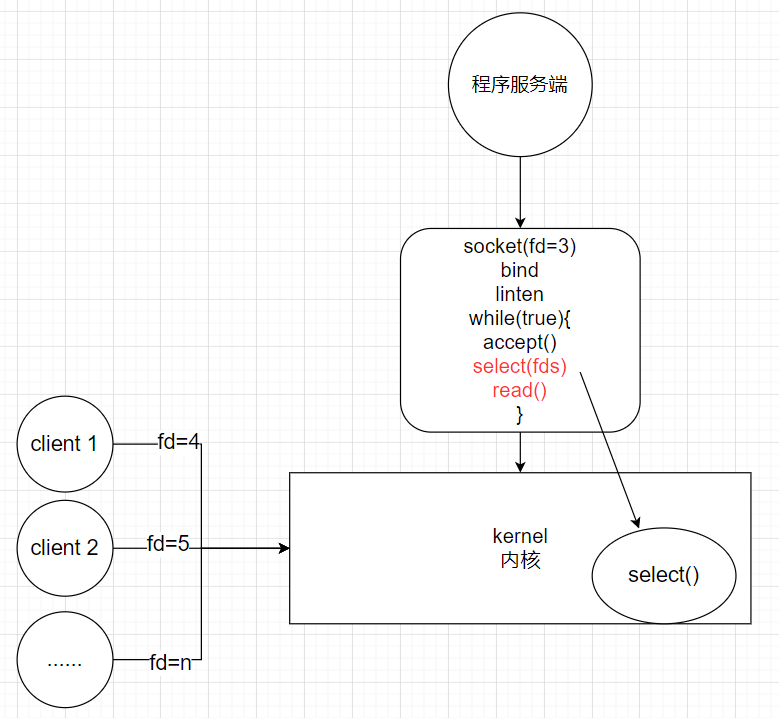

VI. IO multiplexing

There are three implementations of IO multiplexing on Linux: select, poll, and epoll. Let's look at each of them in turn.

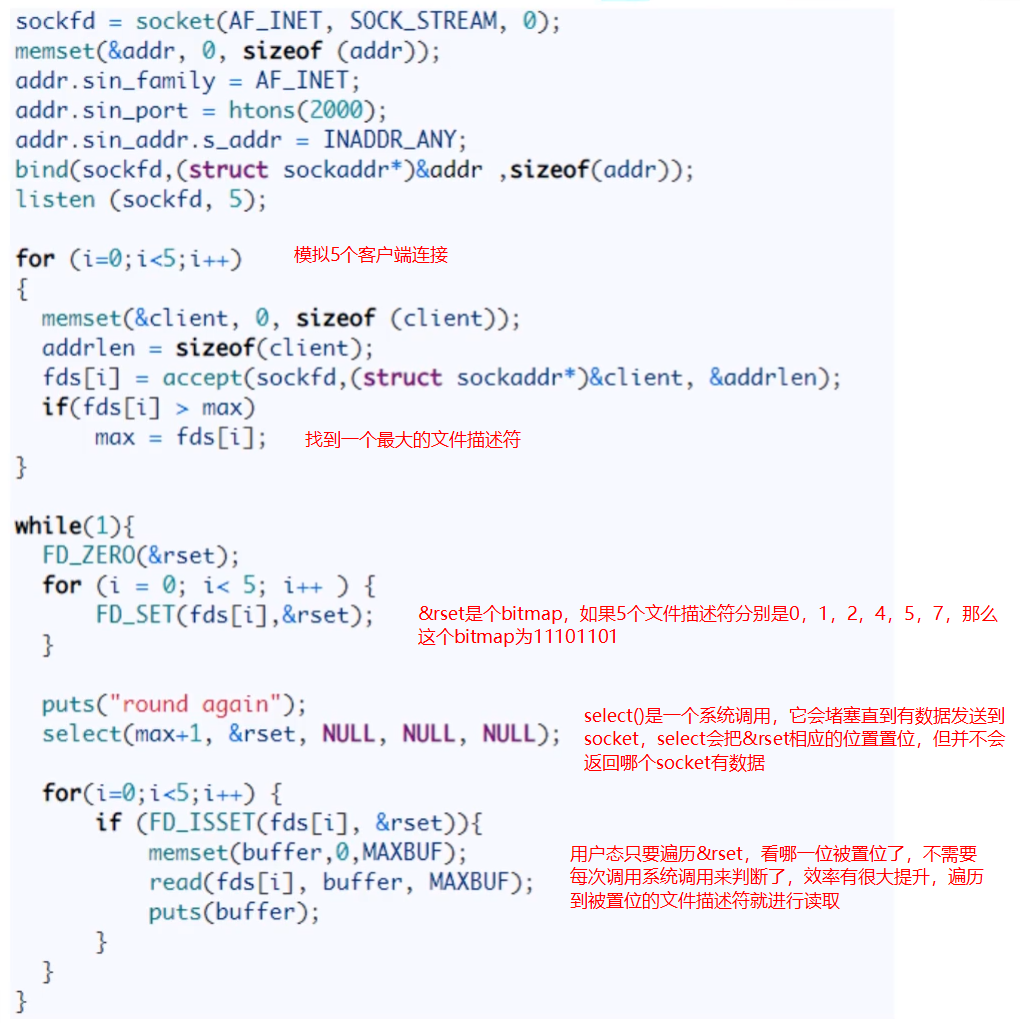

1. select

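A select-based server puts the file descriptors it wants to watch into a set (rset), passes the set to select(), and after select() returns checks which descriptors are marked ready.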

1.1 Advantages

select essentially moves the traversal of the fd array, which NIO performed in user mode, into kernel mode. In NIO, checking whether each socket had data meant a separate call into the kernel; with select, the whole set is copied into the kernel and traversed there, so there is no need to switch frequently between user mode and kernel mode.

After the select system call returns, the set rset (passed as &rset) has been modified in place so that only the bits of ready descriptors remain set. User mode then only needs simple bit tests to find which sockets have data to read, which effectively improves efficiency.

1.2 Existing problems

1. The fd_set is a bitmap of FD_SETSIZE bits (1024 on Linux), so select can only watch descriptors numbered below 1024; in practice one process can handle only about 1024 clients.

2. rset is not reusable: select() overwrites it in place to mark the ready descriptors, so it has to be rebuilt before every call.

3. The file descriptor set is copied into the kernel on every call, which still has overhead.

4. select() does not tell the user directly which sockets have data; user mode still has to scan the whole set, an O(n) traversal.
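All four problems show up in a typical select loop. The sketch below is an illustration, not the article's code: `select_round` is a hypothetical helper, and handling new connections on the listening socket `lfd` is omitted.

```c
#include <sys/select.h>
#include <unistd.h>

/* One select() round over a listening socket `lfd` and `nclients` client sockets.
 * Readable client fds are stored in `out`; returns their count, or -1 on error. */
int select_round(int lfd, const int *clients, int nclients, int *out) {
    if (lfd >= FD_SETSIZE) return -1;
    fd_set rset;
    FD_ZERO(&rset);                           /* problem 2: rebuilt before every call */
    FD_SET(lfd, &rset);
    int maxfd = lfd;
    for (int i = 0; i < nclients; i++) {
        if (clients[i] >= FD_SETSIZE) continue;   /* problem 1: fd limit (1024 on Linux) */
        FD_SET(clients[i], &rset);
        if (clients[i] > maxfd) maxfd = clients[i];
    }
    /* problem 3: the bitmap is copied into the kernel on each call.
     * NULL timeout: block until at least one fd is ready. */
    if (select(maxfd + 1, &rset, NULL, NULL, NULL) < 0) return -1;
    int nready = 0;
    /* problem 4: O(n) scan in user space to find the ready fds. */
    for (int i = 0; i < nclients; i++) {
        if (clients[i] < FD_SETSIZE && FD_ISSET(clients[i], &rset))
            out[nready++] = clients[i];
    }
    /* If FD_ISSET(lfd, &rset), accept() would return immediately (not shown). */
    return nready;
}
```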

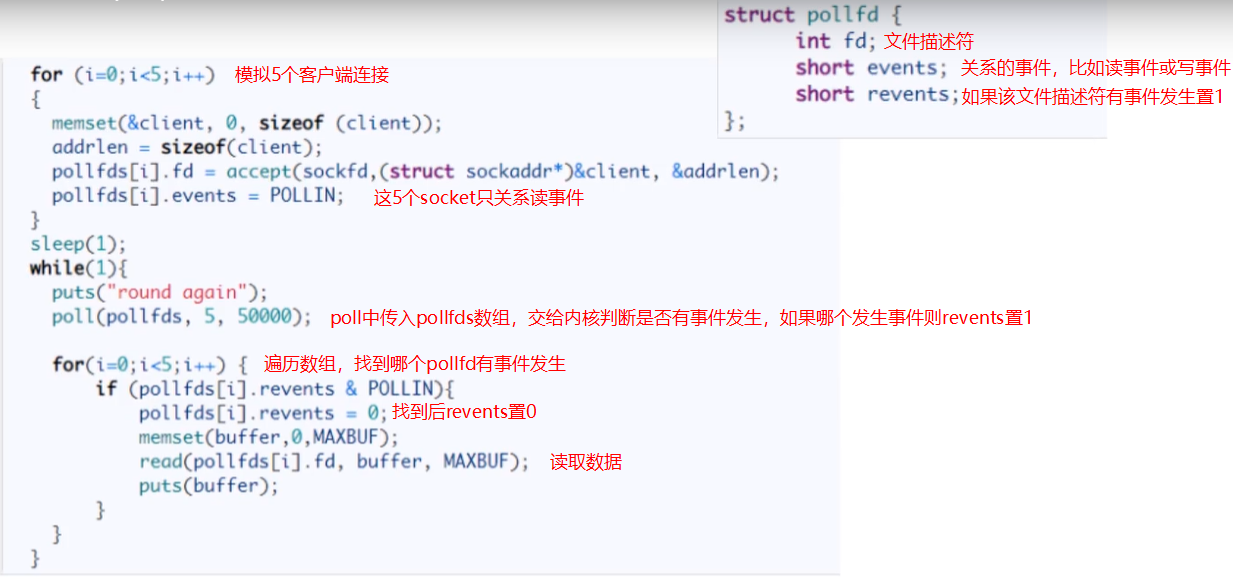

2. poll

2.1 Code example

In poll, each file descriptor is described by its own structure, struct pollfd, and an array of pollfd is passed to poll(). The rest of the logic is the same as with select.
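A minimal poll sketch, assuming each `pollfd` entry was filled in once with `events = POLLIN`; `poll_round` is a hypothetical helper. Compared with select there is no `FD_SETSIZE` limit and no set to rebuild, but the whole array is still passed in and scanned in O(n).

```c
#include <poll.h>
#include <unistd.h>

/* One poll() round. The caller fills fds[i].fd and fds[i].events = POLLIN
 * once; the kernel writes results only into revents, so the same array
 * is reused on every call. Returns how many fds delivered data. */
int poll_round(struct pollfd *fds, nfds_t nfds, int timeout_ms) {
    /* The whole array is still copied into the kernel on every call. */
    int n = poll(fds, nfds, timeout_ms);
    if (n <= 0) return n;                      /* 0: timeout, -1: error */
    int handled = 0;
    for (nfds_t i = 0; i < nfds; i++) {        /* still an O(n) scan */
        if (fds[i].revents & POLLIN) {
            char buf[1024];
            if (read(fds[i].fd, buf, sizeof buf) > 0)
                handled++;                     /* process buf here */
        }
        fds[i].revents = 0;   /* reset while traversing; poll() also
                                 overwrites revents on every call */
    }
    return handled;
}
```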

2.2 Advantages

1. poll uses an array of pollfd instead of select's bitmap. The array has no 1024 limit, so more clients can be managed at a time.

2. When an event occurs, the kernel sets the corresponding revents field, and the program sets it back to 0 while traversing. The requested events field is left untouched, so the pollfd array can be reused across calls.

2.3 Disadvantages

poll solves the first two of select's shortcomings, but it works on the same principle as select, so the remaining problems are still there:

1. The pollfd array is copied into the kernel on every call, which still has overhead.

2. poll does not tell the user which sockets have data, so an O(n) traversal is still needed.

3. epoll

3.1 Code example
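A minimal epoll sketch: the instance would be created with `epoll_create1(0)`, descriptors are registered once with `epoll_ctl(..., EPOLL_CTL_ADD, ...)`, and `epoll_wait()` returns only the ready ones. `epoll_add_fd`, `epoll_round` and `MAX_EVENTS` are hypothetical names; the default mode shown here is level-triggered.

```c
#include <sys/epoll.h>
#include <unistd.h>

#define MAX_EVENTS 64

/* Register `fd` for readable events (level-triggered by default).
 * The fd is handed to the kernel once, here, not on every wait. */
int epoll_add_fd(int epfd, int fd) {
    struct epoll_event ev = {0};
    ev.events = EPOLLIN;
    ev.data.fd = fd;
    return epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev);
}

/* One epoll_wait() round: the kernel returns only the ready fds,
 * so there is no scan over every registered fd. */
int epoll_round(int epfd, int timeout_ms) {
    struct epoll_event events[MAX_EVENTS];
    int n = epoll_wait(epfd, events, MAX_EVENTS, timeout_ms);
    int handled = 0;
    for (int i = 0; i < n; i++) {              /* n <= 0 skips the loop */
        char buf[1024];
        if (read(events[i].data.fd, buf, sizeof buf) > 0)
            handled++;                         /* process buf here */
    }
    return handled;
}
```

Registering the same descriptor a second time makes `epoll_ctl` fail with `EEXIST`, which matches the red-black tree lookup described in 3.3.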

3.2 Event notification mechanism

1. When data arrives at the network card, it is first copied by DMA into a buffer in memory (the network card can access this memory area directly, without involving the CPU).

2. The network card raises an interrupt so that the CPU handles the network card first.

3. The interrupt number is bound to a handler in memory. When the data reaches a socket, the callback registered for that socket puts the socket into the ready list.

3.3 Detailed process

First, epoll_create creates an epoll instance: the kernel allocates a memory area holding a red-black tree and a ready list, and returns a file descriptor that represents the instance. Every socket registered with the instance is stored as a node in the red-black tree, which holds the monitored file descriptors, while the ready list holds the nodes of the descriptors that are ready.

When epoll_ctl adds a new file descriptor, it first checks whether the red-black tree already has a node for it; if so, it returns immediately (epoll_ctl fails with EEXIST). Otherwise it inserts a new node into the tree and registers a callback with the kernel, so that when data arrives on that descriptor, the kernel inserts its node into the ready list.

epoll_wait waits for nodes to appear on the ready list, copies the ready events to user space, and removes them from the list (in level-triggered mode, descriptors that still have data are put back).

3.4 Level-triggered and edge-triggered modes

Level-triggered (LT): when a read or write event occurs on a monitored file descriptor, epoll_wait() notifies the handler to read or write. If the handler does not read or write all the data this time (for example, because its buffer is too small), the next call to epoll_wait() notifies it again about that descriptor, and it keeps notifying until the data is consumed. If the system has many ready file descriptors that the handler does not need to read or write, they are returned every time, which greatly reduces the efficiency of retrieving the ready descriptors it actually cares about.

Edge-triggered (ET): when a read or write event occurs on a monitored file descriptor, epoll_wait() notifies the handler. If the handler does not read or write all the data this time (for example, because its buffer is too small), the next call to epoll_wait() does not notify it again: it is notified only once, and not again until a new read or write event occurs on that descriptor. This mode is more efficient than level-triggered mode, and the system is not flooded with ready file descriptors you do not care about. In exchange, the handler must drain the descriptor each time it is notified, typically with non-blocking reads until EAGAIN.
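The difference between the two modes can be checked with a small experiment. This sketch uses pipes instead of sockets only so that it runs without a network; `set_nonblocking`, `watch_fd`, `ready_now`, `drain` and `second_report` are hypothetical helpers. `second_report()` writes 10 bytes, reads only 4, and then asks epoll again.

```c
#include <errno.h>
#include <fcntl.h>
#include <sys/epoll.h>
#include <unistd.h>

int set_nonblocking(int fd) {
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags < 0) return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK);
}

/* Watch `fd` for input, edge-triggered if `edge` is non-zero. */
int watch_fd(int epfd, int fd, int edge) {
    struct epoll_event ev = {0};
    ev.events = EPOLLIN;
    if (edge) ev.events |= EPOLLET;
    ev.data.fd = fd;
    return epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev);
}

/* How many fds epoll reports right now (timeout 0, no data consumed). */
int ready_now(int epfd) {
    struct epoll_event evs[8];
    return epoll_wait(epfd, evs, 8, 0);
}

/* ET handling: read until the non-blocking fd has nothing left, because
 * leftover data will not trigger another notification. */
long drain(int fd) {
    char buf[4];                               /* deliberately tiny buffer */
    long total = 0;
    for (;;) {
        ssize_t n = read(fd, buf, sizeof buf);
        if (n > 0) { total += n; continue; }
        if (n < 0 && errno == EINTR) continue; /* interrupted: retry */
        break;  /* -1/EAGAIN: drained; 0: writer closed; otherwise: error */
    }
    return total;
}

/* Write 10 bytes, read only 4, then ask epoll again.
 * Returns what the second check reports, or -1 on setup failure. */
int second_report(int edge) {
    int p[2];
    if (pipe(p) < 0) return -1;
    int epfd = epoll_create1(0);
    char tmp[4];
    int result = -1;
    if (epfd >= 0 && set_nonblocking(p[0]) == 0 && watch_fd(epfd, p[0], edge) == 0 &&
        write(p[1], "0123456789", 10) == 10 && ready_now(epfd) == 1 &&
        read(p[0], tmp, sizeof tmp) == 4)
        result = ready_now(epfd);              /* 6 bytes are still unread */
    if (epfd >= 0) close(epfd);
    close(p[0]);
    close(p[1]);
    return result;
}
```

With 6 bytes still unread, `second_report(0)` (level-triggered) is expected to return 1 and `second_report(1)` (edge-triggered) to return 0. In edge-triggered mode, a handler would therefore call something like `drain()` on every notification.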

3.5 Advantages

epoll is currently the most capable IO multiplexer on Linux; Redis, Nginx, and Java NIO on Linux all use it.

1. A socket is passed from user mode to kernel mode only once in its lifetime (when it is registered with epoll_ctl), so the overhead is small.

2. With the event notification mechanism, whenever a socket receives data, the kernel callback adds it to the ready list, so there is no need to traverse all sockets.