I had some free time over the past few days, and while slacking off at work I came across PlumeLog, a fairly lightweight distributed logging system, on Gitee (Code Cloud). I looked into it, wrote a demo, and am recording the process here.

1. Introduction to PlumeLog

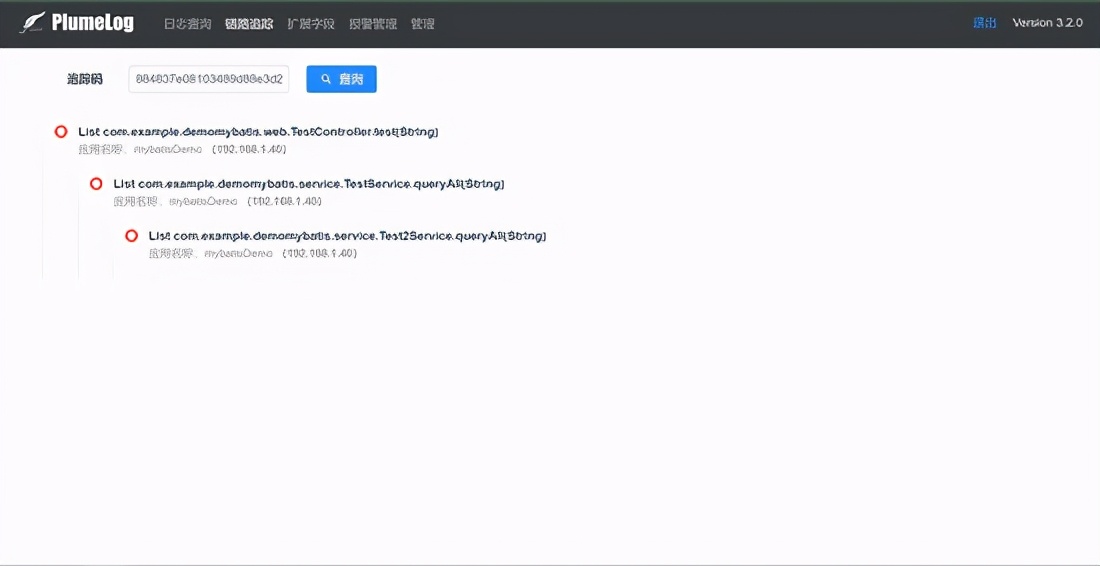

- A non-intrusive distributed logging system that collects logs through log4j, log4j2, or logback and sets a link (trace) ID so that related logs are easy to query

- Uses elasticsearch as the query engine

- High throughput and high query efficiency

- The whole process uses no local disk space on the application side and is maintenance-free; it is transparent to the project and does not affect the project's own operation

- No need to modify old projects: import it and use it directly; supports dubbo and springcloud

2. Preparation

Server installation

- First, the message queue. PlumeLog supports redis or kafka. For most projects redis is enough, and I use redis directly here. Redis official website: https://redis.io

- Next, install elasticsearch. Official download page: https://www.elastic.co/cn/downloads/past-releases

- Finally, download the PlumeLog server package, plumelog-server. Download address: https://gitee.com/frankchenlong/plumelog/releases

Service start

- Start redis and make sure it can be reached from your local machine (open the port in the server's security group and configure which IPs redis accepts connections from)

- Start elasticsearch. The default port is 9200; if opening that port directly returns the elasticsearch node information, the startup was successful
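Before moving on, it can help to confirm that both services actually accept connections. The following is a minimal sketch, assuming redis and elasticsearch run on 127.0.0.1 with their default ports (6379 and 9200); the class name `PortCheck` and the method `isPortOpen` are made up for this illustration.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isPortOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host unreachable.
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed defaults: redis on 6379, elasticsearch on 9200.
        System.out.println("redis 6379 reachable: " + isPortOpen("127.0.0.1", 6379, 1000));
        System.out.println("elasticsearch 9200 reachable: " + isPortOpen("127.0.0.1", 9200, 1000));
    }
}
```

A successful TCP connection only shows that something is listening on the port; it does not check the redis password or the elasticsearch version.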

3. Modify the configuration

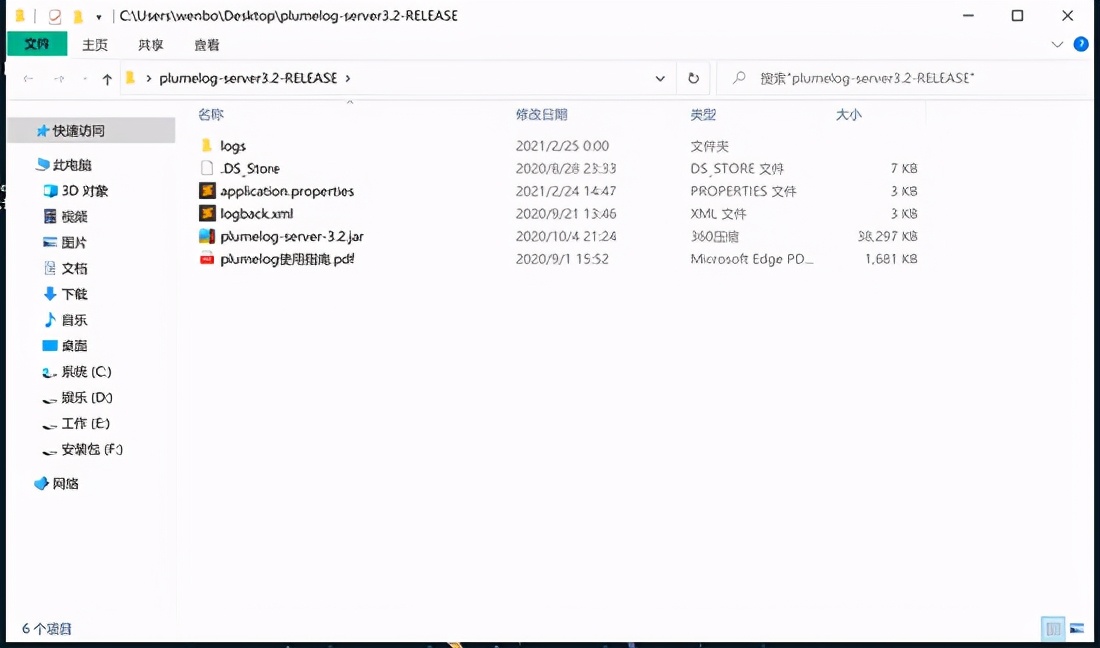

These are the files you get after decompressing the PlumeLog package.

Modify application.properties. My configuration is pasted below; the main things to change are the redis and es settings.

spring.application.name=plumelog_server

server.port=8891

spring.thymeleaf.mode=LEGACYHTML5

spring.mvc.view.prefix=classpath:/templates/

spring.mvc.view.suffix=.html

spring.mvc.static-path-pattern=/plumelog/**

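# Four values: redis, kafka, rest, restServer

# redis: use redis as the queue

# kafka: use kafka as the queue

# rest: fetch logs from a REST interface

# restServer: start as the REST interface server

# ui: start standalone as the UI only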

plumelog.model=redis

# If you use kafka, enable the configuration below

#plumelog.kafka.kafkaHosts=172.16.247.143:9092,172.16.247.60:9092,172.16.247.64:9092

#plumelog.kafka.kafkaGroupName=logConsumer

# Redis configuration. From version 3.0 the redis address is mandatory, because it is needed for monitoring and alerting

plumelog.redis.redisHost=127.0.0.1:6379

# If redis has a password, enable the configuration below

plumelog.redis.redisPassWord=123456

plumelog.redis.redisDb=0

# If you use rest, enable the configuration below

#plumelog.rest.restUrl=http://127.0.0.1:8891/getlog

#plumelog.rest.restUserName=plumelog

#plumelog.rest.restPassWord=123456

# Elasticsearch configuration

plumelog.es.esHosts=127.0.0.1:9200

# ES 7.* removed the index type field, so do not set this on ES 7; on versions below 7.*, leaving it unset causes an error

#plumelog.es.indexType=plumelog

# Number of index shards. Suggested value: daily log size / JVM memory size of an ES node. A reasonable shard size keeps ES writes and queries efficient

plumelog.es.shards=5

plumelog.es.replicas=0

plumelog.es.refresh.interval=30s

# How log indices are created: day means one per day, hour means one per hour

plumelog.es.indexType.model=day

# If ES has a password set, enable the configuration below

#plumelog.es.userName=elastic

#plumelog.es.passWord=FLMOaqUGamMNkZ2mkJiY

# Number of log entries fetched per pull

plumelog.maxSendSize=5000

# Pull interval; has no effect with kafka

plumelog.interval=1000

# Address of plumelog-ui. If not configured, the links in alert messages cannot be clicked

plumelog.ui.url=http://127.0.0.1:8891

# Admin password, required when deleting logs manually

admin.password=123456

# Number of days to keep logs; 0 or unset means keep them forever

admin.log.keepDays=30

# Login configuration

#login.username=admin

#login.password=admin

Do not start plumelog-server yet; it is started last.

Recommended reference configurations for better performance

Daily log volume under 50 GB, using SSDs

plumelog.es.shards=5

plumelog.es.replicas=0

plumelog.es.refresh.interval=30s

plumelog.es.indexType.model=day

Daily log volume above 50 GB, using mechanical hard disks

plumelog.es.shards=5

plumelog.es.replicas=0

plumelog.es.refresh.interval=30s

plumelog.es.indexType.model=hour

Daily log volume above 100 GB, using mechanical hard disks

plumelog.es.shards=10

plumelog.es.replicas=0

plumelog.es.refresh.interval=30s

plumelog.es.indexType.model=hour

Daily log volume above 1000 GB, using SSDs; this configuration can handle more than 10 TB a day without problems

plumelog.es.shards=10

plumelog.es.replicas=1

plumelog.es.refresh.interval=30s

plumelog.es.indexType.model=hour
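To get a feel for how these settings add up, here is a rough back-of-the-envelope sketch. It assumes one index per day (day mode) or per hour (hour mode) and multiplies by the retention period from `admin.log.keepDays`. PlumeLog may create more than one index per period, so treat the result as a lower bound. The class and method names are made up for this illustration.

```java
public class ShardEstimate {

    /**
     * Estimates how many shards (primaries plus replicas) the log indices hold
     * once the retention window is full.
     *
     * @param shards        value of plumelog.es.shards
     * @param replicas      value of plumelog.es.replicas
     * @param indicesPerDay 1 for day mode, 24 for hour mode (assumption: one index per period)
     * @param keepDays      value of admin.log.keepDays
     */
    static long totalShards(int shards, int replicas, int indicesPerDay, int keepDays) {
        long shardsPerIndex = (long) shards * (1 + replicas);
        return shardsPerIndex * indicesPerDay * keepDays;
    }

    public static void main(String[] args) {
        // Day mode, 5 shards, no replicas, 30 days of retention.
        System.out.println(totalShards(5, 0, 1, 30));   // 150
        // Hour mode, 10 shards, 1 replica, 30 days of retention.
        System.out.println(totalShards(10, 1, 24, 30)); // 14400
    }
}
```

Elasticsearch 7 limits each data node to 1000 shards by default (`cluster.max_shards_per_node`), so the hour-mode figure would far exceed what a small cluster accepts out of the box.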

If you increase plumelog.es.shards or use hour mode, you also need to raise the maximum number of shards per node in the ES cluster:

PUT /_cluster/settings

{

"persistent": {

"cluster": {

"max_shards_per_node":100000

}

}

}

4. Create a Spring Boot project

Project configuration

pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.4.3</version>

<relativePath/> <!-- lookup parent from repository -->

</parent>

<groupId>com.example</groupId>

<artifactId>demo-mybatis</artifactId>

<version>0.0.1-SNAPSHOT</version>

<name>demo-mybatis</name>

<description>Demo project for Spring Boot</description>

<properties>

<java.version>1.8</java.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>2.1.4</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<!-- PlumeLog distributed log collection -->

<dependency>

<groupId>com.plumelog</groupId>

<artifactId>plumelog-logback</artifactId>

<version>3.3</version>

</dependency>

<dependency>

<groupId>com.plumelog</groupId>

<artifactId>plumelog-trace</artifactId>

<version>3.3</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-aop</artifactId>

<version>2.1.11.RELEASE</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>cn.hutool</groupId>

<artifactId>hutool-core</artifactId>

<version>5.5.8</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<configuration>

<excludes>

<exclude>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

</exclude>

</excludes>

</configuration>

</plugin>

</plugins>

</build>

</project>

Two configuration files under resources

application.properties

server.port=8888

spring.datasource.url=jdbc:mysql://127.0.0.1:3306/test?useUnicode=true&characterEncoding=utf-8&allowMultiQueries=true&useSSL=false

spring.datasource.driver-class-name=com.mysql.cj.jdbc.Driver

spring.datasource.username=root

spring.datasource.password=123456

spring.datasource.hikari.maximum-pool-size=10

# MyBatis configuration

mybatis.type-aliases-package=com.example.demomybatis.model

mybatis.configuration.map-underscore-to-camel-case=true

mybatis.configuration.default-fetch-size=100

mybatis.configuration.default-statement-timeout=30

# Jackson date-time formatting (lowercase yyyy is the calendar year; uppercase YYYY is the week-based year)

spring.jackson.date-format=yyyy-MM-dd HH:mm:ss

spring.jackson.time-zone=GMT+8

spring.jackson.serialization.write-dates-as-timestamps=false

# Print SQL logs

logging.level.com.example.demomybatis.dao=debug

## Output log file

logging.file.path=/log

logback-spring.xml

<?xml version="1.0" encoding="UTF-8" ?>

<configuration>

<appender name="consoleApp" class="ch.qos.logback.core.ConsoleAppender">

<layout class="ch.qos.logback.classic.PatternLayout">

<pattern>

%date{yyyy-MM-dd HH:mm:ss.SSS} %-5level[%thread]%logger{56}.%method:%L -%msg%n

</pattern>

</layout>

</appender>

<appender name="fileInfoApp" class="ch.qos.logback.core.rolling.RollingFileAppender">

<filter class="ch.qos.logback.classic.filter.LevelFilter">

<level>ERROR</level>

<onMatch>DENY</onMatch>

<onMismatch>ACCEPT</onMismatch>

</filter>

<encoder>

<pattern>

%date{yyyy-MM-dd HH:mm:ss.SSS} %-5level[%thread]%logger{56}.%method:%L -%msg%n

</pattern>

</encoder>

<!-- Rolling policy -->

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<!-- Path -->

<fileNamePattern>log/info.%d.log</fileNamePattern>

</rollingPolicy>

</appender>

<appender name="fileErrorApp" class="ch.qos.logback.core.rolling.RollingFileAppender">

<filter class="ch.qos.logback.classic.filter.ThresholdFilter">

<level>ERROR</level>

</filter>

<encoder>

<pattern>

%date{yyyy-MM-dd HH:mm:ss.SSS} %-5level[%thread]%logger{56}.%method:%L -%msg%n

</pattern>

</encoder>

<!-- Rolling policy -->

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<!-- Path -->

<fileNamePattern>log/error.%d.log</fileNamePattern>

<!-- Maximum number of archived files to keep; older files are deleted once the limit is exceeded.

For example, with monthly rollover and maxHistory set to 1, only the most recent month of files is kept -->

<maxHistory>1</maxHistory>

</rollingPolicy>

</appender>

<appender name="plumelog" class="com.plumelog.logback.appender.RedisAppender">

<appName>mybatisDemo</appName>

<redisHost>127.0.0.1</redisHost>

<redisAuth>123456</redisAuth>

<redisPort>6379</redisPort>

<runModel>2</runModel>

</appender>

<!-- root must come last because of loading order -->

<root level="INFO">

<appender-ref ref="consoleApp"/>

<appender-ref ref="fileInfoApp"/>

<appender-ref ref="fileErrorApp"/>

<appender-ref ref="plumelog"/>

</root>

</configuration>

Note the plumelog appender in the logback configuration file, and that root then references this appender. This is the configuration that pushes logs to the redis queue.
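The flow behind this configuration is that the application appends log entries to a queue in redis, and plumelog-server pulls them in batches (up to `plumelog.maxSendSize` entries per pull in the server configuration) before writing them to elasticsearch. The sketch below illustrates only that batching idea with an in-memory `BlockingQueue` standing in for redis. It is not PlumeLog's actual implementation, and the class and method names are made up.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

public class QueueBatchSketch {

    /** Drains the queue in batches of at most maxSendSize and returns the batches. */
    static List<List<String>> pullAll(BlockingQueue<String> queue, int maxSendSize) {
        List<List<String>> batches = new ArrayList<>();
        while (!queue.isEmpty()) {
            List<String> batch = new ArrayList<>();
            queue.drainTo(batch, maxSendSize); // take up to maxSendSize entries
            batches.add(batch);
        }
        return batches;
    }

    public static void main(String[] args) {
        // In-memory stand-in for the redis list that the appender pushes to.
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        for (int i = 1; i <= 12; i++) {
            queue.add("log line " + i); // what the application side does
        }
        // What the server side does on each pull; 5 is a small stand-in for maxSendSize.
        for (List<String> batch : pullAll(queue, 5)) {
            System.out.println("indexing batch of " + batch.size());
        }
    }
}
```

With 12 entries and a batch size of 5, the pulls come out as 5, 5, and 2 entries. Because the queue sits between the two sides, the application never waits on elasticsearch.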

Trace ID setup and global link-tracing configuration

Trace ID interceptor configuration

package com.example.demomybatis.common.interceptor;

import com.plumelog.core.TraceId;

import org.springframework.stereotype.Component;

import org.springframework.web.servlet.HandlerInterceptor;

import org.springframework.web.servlet.ModelAndView;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import java.util.UUID;

/**

* Created by wenbo on 2021/2/25.

*/

@Component

public class Interceptor implements HandlerInterceptor {

@Override

public boolean preHandle(HttpServletRequest request, HttpServletResponse response, Object handler) {

// Set the TraceID value; without this hook there is no link (trace) ID

TraceId.logTraceID.set(UUID.randomUUID().toString().replaceAll("-", ""));

return true;

}

@Override

public void postHandle(HttpServletRequest request, HttpServletResponse response, Object handler, ModelAndView modelAndView) {

}

@Override

public void afterCompletion(HttpServletRequest request, HttpServletResponse response, Object handler, Exception ex) {

}

}

package com.example.demomybatis.common.interceptor;

import org.springframework.context.annotation.Configuration;

import org.springframework.web.servlet.config.annotation.InterceptorRegistry;

import org.springframework.web.servlet.config.annotation.WebMvcConfigurer;

/**

* Created by wenbo on 2021/2/25.

*/

@Configuration

public class InterceptorConfig implements WebMvcConfigurer {

@Override

public void addInterceptors(InterceptorRegistry registry) {

// Custom interceptor: add the path patterns to intercept (and any to exclude)

registry.addInterceptor(new Interceptor()).addPathPatterns("/**");

}

}

Global link-tracing configuration

package com.example.demomybatis.common.config;

import com.plumelog.trace.aspect.AbstractAspect;

import org.aspectj.lang.JoinPoint;

import org.aspectj.lang.annotation.Around;

import org.aspectj.lang.annotation.Aspect;

import org.springframework.stereotype.Component;

/**

* Created by wenbo on 2021/2/25.

*/

@Aspect

@Component

public class AspectConfig extends AbstractAspect {

@Around("within(com.example..*)")

public Object around(JoinPoint joinPoint) throws Throwable {

return aroundExecute(joinPoint);

}

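}

Here you need to replace the pointcut path with your own package path, and add the PlumeLog package to component scanning alongside your own (for example, on the startup class):

@ComponentScan({"com.plumelog","com.example.demomybatis"})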
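To make the idea of an around advice concrete: every call to a matched method is wrapped, so something can be recorded before and after the real method runs. The following is a minimal sketch of that wrapping using a plain JDK dynamic proxy. It is not how Spring AOP or PlumeLog's `AbstractAspect` is implemented, and `AroundSketch`, `UserService`, and `traced` are made-up names.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

public class AroundSketch {

    /** Hypothetical service interface used only for this illustration. */
    interface UserService {
        String findUser(int id);
    }

    /** Collected call records, standing in for trace entries sent to the log system. */
    static final List<String> TRACE = new ArrayList<>();

    /** Wraps target so every interface method call is recorded before and after it runs. */
    static <T> T traced(T target, Class<T> type) {
        InvocationHandler handler = (proxy, method, args) -> {
            TRACE.add("enter " + method.getName());
            try {
                return method.invoke(target, args); // proceed with the real call
            } finally {
                TRACE.add("exit " + method.getName());
            }
        };
        Object proxy = Proxy.newProxyInstance(type.getClassLoader(), new Class<?>[] {type}, handler);
        return type.cast(proxy);
    }

    public static void main(String[] args) {
        UserService service = traced(id -> "user-" + id, UserService.class);
        System.out.println(service.findUser(7)); // user-7
        System.out.println(TRACE);               // [enter findUser, exit findUser]
    }
}
```

The pointcut expression plays the role of deciding which objects get wrapped; here that choice is made by hand when calling `traced`.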

Finally, here is the demo link: https://gitee.com/wen_bo/demo-plumelog
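The trace ID set in the interceptor is what ties all log lines of one request together. As a minimal sketch of the pattern, the code below uses a plain `ThreadLocal` as a hypothetical stand-in for PlumeLog's `TraceId.logTraceID` and generates the ID the same way the interceptor does. It also clears the value after the request, which is a cautious addition of this sketch and not something the demo interceptor does.

```java
import java.util.UUID;

public class TraceIdSketch {

    // Hypothetical stand-in for PlumeLog's TraceId.logTraceID holder.
    static final ThreadLocal<String> TRACE_ID = new ThreadLocal<>();

    /** Generates a 32-character hex ID from a random UUID with the dashes removed. */
    static String newTraceId() {
        return UUID.randomUUID().toString().replace("-", "");
    }

    /** Runs one "request" with a fresh trace ID and clears it afterwards. */
    static String handleRequest(String name) {
        TRACE_ID.set(newTraceId());
        try {
            // Every log line written while handling this request can carry the same ID.
            return name + " traceId=" + TRACE_ID.get();
        } finally {
            TRACE_ID.remove(); // pooled threads are reused, so avoid leaking the ID
        }
    }

    public static void main(String[] args) {
        System.out.println(handleRequest("GET /users"));
        System.out.println(handleRequest("GET /orders"));
    }
}
```

Each call prints a different ID, which is what lets the query UI filter the logs of a single request.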

5. Start the project

Start the Spring Boot application first, then start PlumeLog with java -jar plumelog-server-3.2.jar

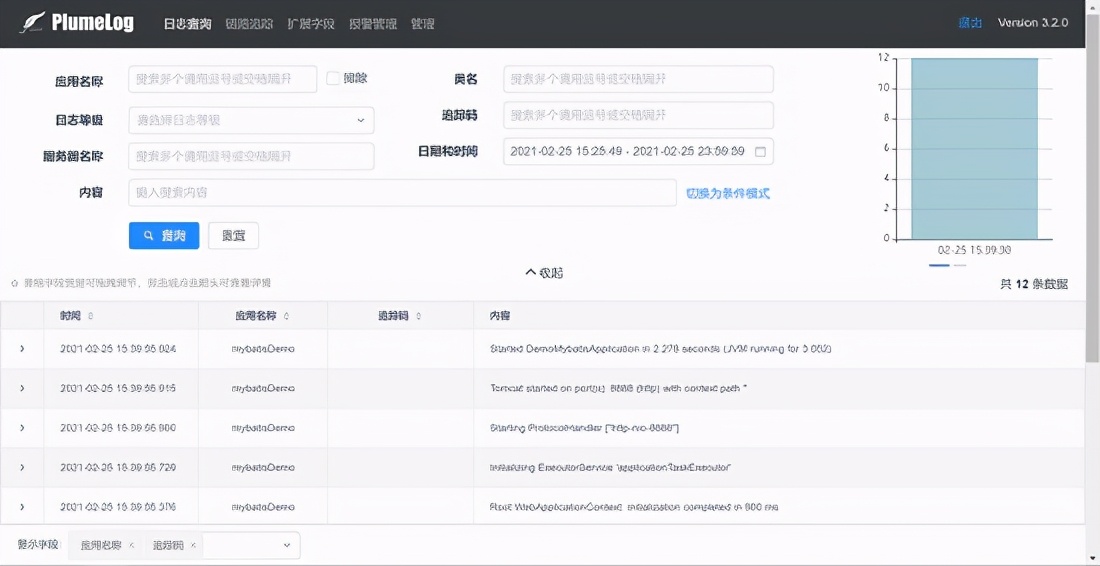

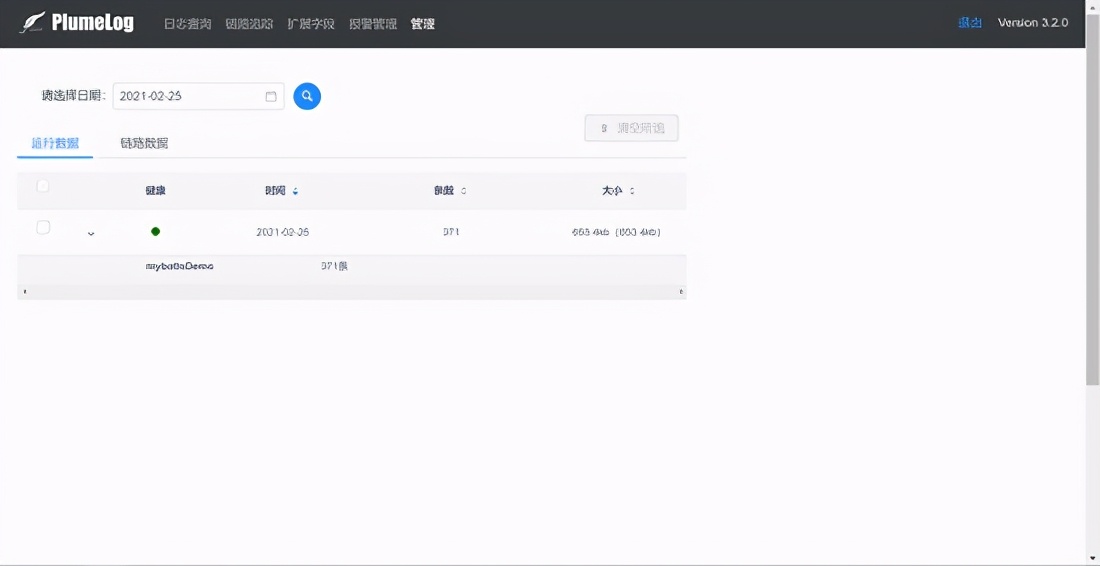

Visit http://127.0.0.1:8891

The default username and password are both admin

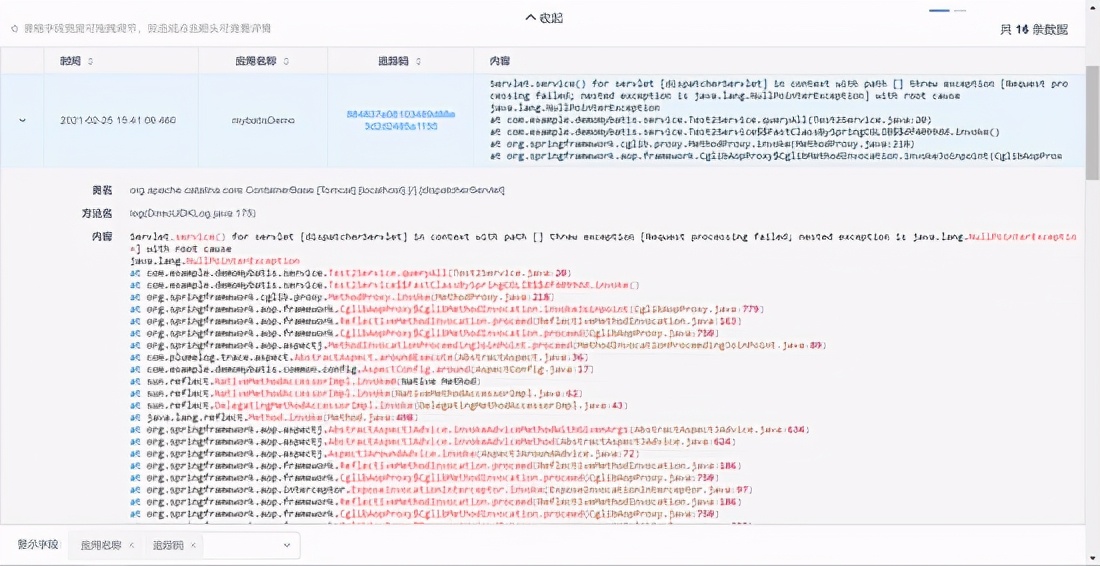

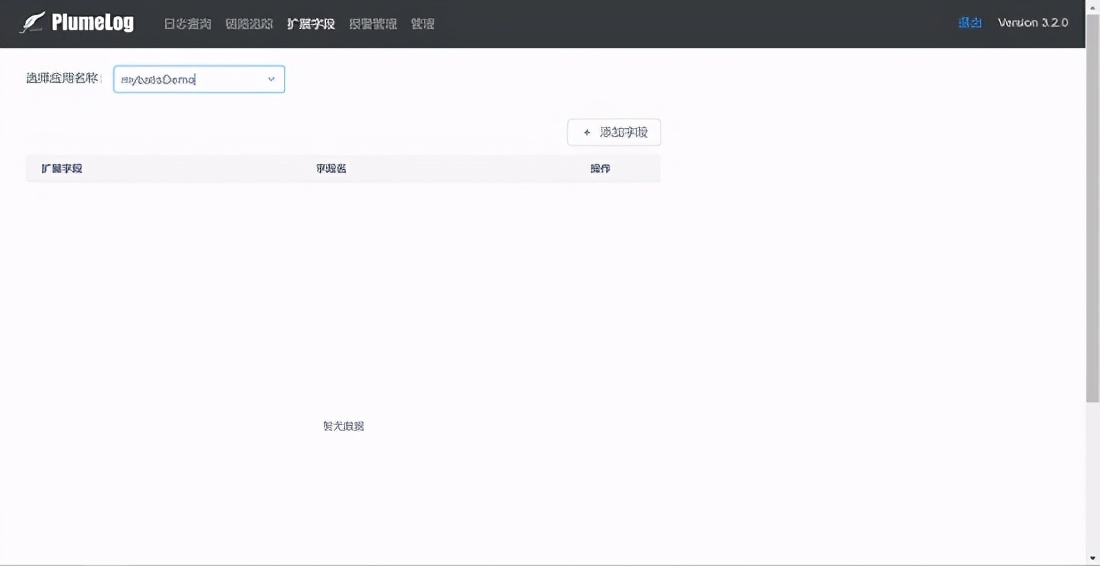

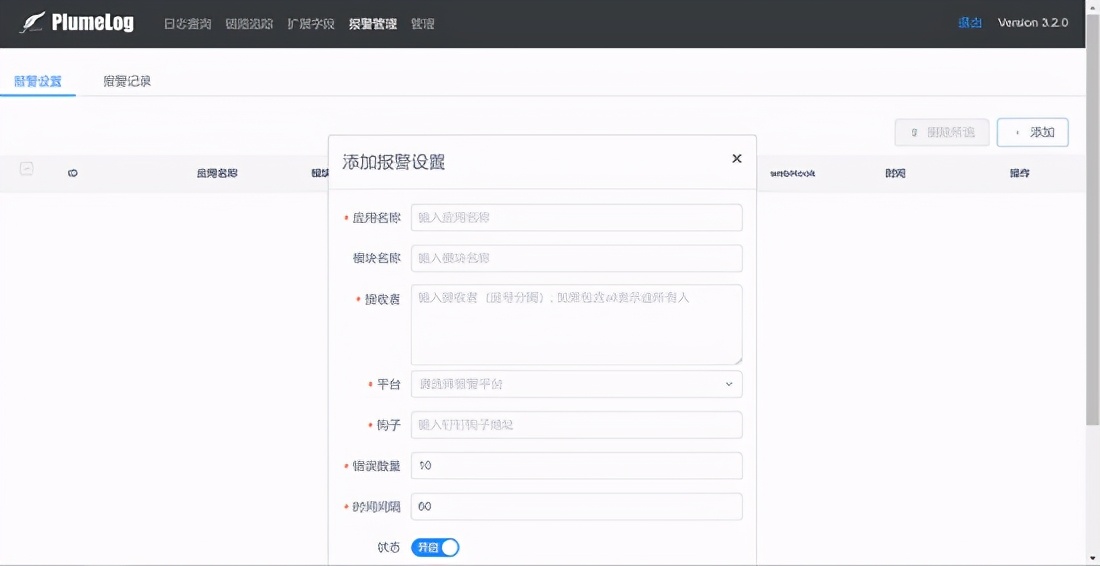

Finally, the screenshots above show the result.

Author: wenbo

Original link: https://www.haowenbo.com/articles/2021/02/25/1614235359857.html
