Core concepts

(1) Pod

The smallest unit in K8s is the Pod, not the container. Every workload type is built on Pods. K8s does not deal with containers directly; it deals with Pods, and each Pod consists of one or more containers.

A Pod is a group of Docker containers. One Pod can hold several containers, so a Pod is not the same thing as a single container.

All containers in a Pod share the network. If a Pod contains three containers A, B, and C, and container A listens on port 80, then containers B and C can reach port 80 as well.

A Pod's life cycle is short; it does not exist forever. For example, if my server restarts, the old Pod dies and a new Pod takes its place.

1. Why is the smallest unit a Pod instead of a container?

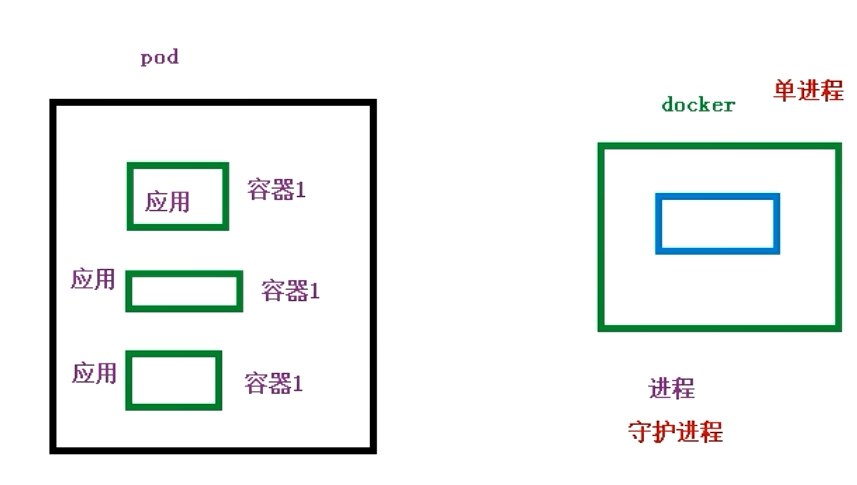

Docker is designed around a single process: one container is one process.

A Docker container normally runs one application. It can run several, but that is hard to manage.

The Pod exists to solve this problem of managing multiple programs inside one Docker container.

A Pod is a multi-process design and can run multiple applications.

Pod design

A Pod holds multiple Docker containers, and each container runs one application.

Pods exist for tightly coupled applications

What is a tightly coupled application?

1. Two or more applications that must interact with each other. For example, a Pod holds container A and container B, and A calls B, or A reads data while B writes it.

2. Applications that call each other over the network. For example, a Pod holds container A running Nginx and container B running a Java project, and A calls B over the network. If the two containers were not in the same Pod, A would need an IP address outside its own Pod to reach B. If they are in the same Pod, A can call B directly through 127.0.0.1 or through a socket, which is more convenient.

Tightly coupled applications perform better and are easier to work with when they share a Pod
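To make the Nginx plus Java example concrete, here is a minimal sketch that builds a two-container Pod manifest in Python and prints it as JSON (kubectl apply -f accepts JSON as well as YAML). The Pod name, container names, images, and port 8080 are assumptions made up for illustration; they do not come from the original example.

```python
import json

# Hypothetical names, images, and ports, chosen only for illustration.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-backend"},
    "spec": {
        "containers": [
            # Container A: Nginx listening on port 80.
            {
                "name": "nginx",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
            },
            # Container B: the Java project. Because both containers share
            # the Pod's network, Nginx can reach it at 127.0.0.1:8080.
            {
                "name": "java-app",
                "image": "example.com/java-app:1.0",
                "ports": [{"containerPort": 8080}],
            },
        ]
    },
}

manifest = json.dumps(pod, indent=2)
print(manifest)
```

Saving the printed JSON to a file and applying it would create one Pod with both containers scheduled together on the same node.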

2. Pod implementation mechanism

A Pod relies on two mechanisms:

1. Shared network

2. Shared storage

a) Shared network

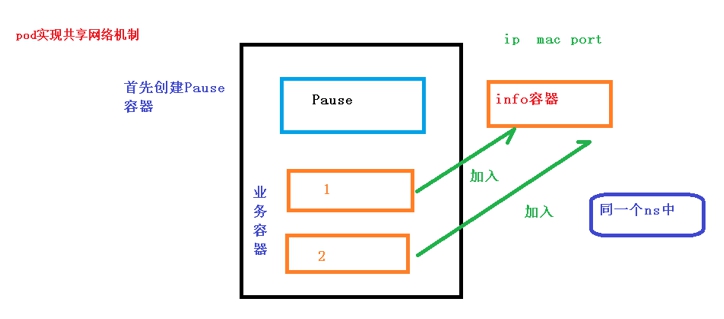

Inside a Pod are Docker containers, and Docker containers are isolated from one another by default. The isolation is done with Linux namespaces and cgroups.

But the Docker containers within a Pod share the network. How is this achieved?

By putting the containers in the same Linux network namespace. That is all network sharing requires.

How is this implemented in the Pod?

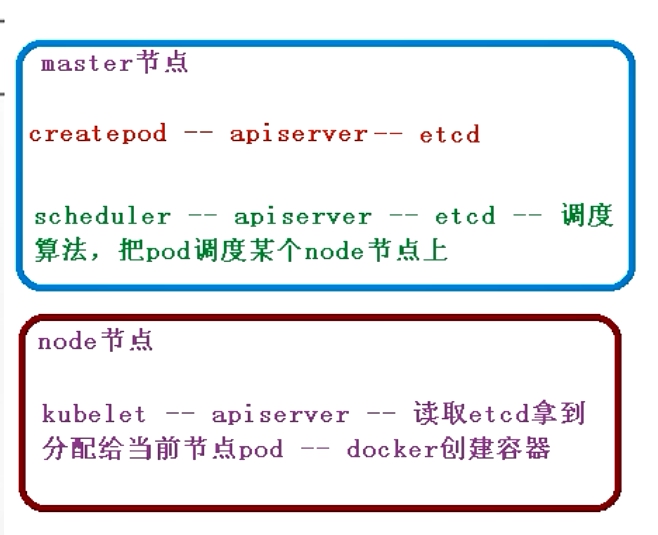

Suppose a Pod is to run container 1 and container 2. K8s first creates a container called Pause. The Pause container can be thought of as the info container; it is the root container. The business containers, container 1 and container 2, are created after it.

The business containers (container 1 and container 2) then join the info container and are managed by it, so they all sit in the same namespace. This is what makes network sharing possible.

The info container gets its own IP address, MAC address, and port space, and the business containers share them. This is the network sharing mechanism.

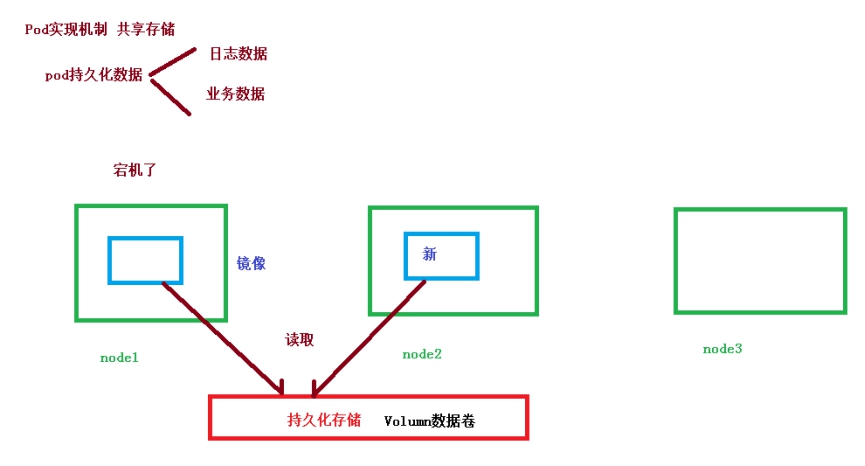

b) Shared storage

The second mechanism is shared storage. The containers in a Pod generate a lot of data while they run. If that data is needed later, it must be persisted; without persistence, it is gone the next time the container starts.

The data a Pod persists may include log data and business data (data produced by create, delete, and update operations). If you do not persist this data, you cannot use it later.

Suppose K8s has three nodes and node1 fails during operation. The workload of node1 is moved to node2 (what actually moves is the image: a new container is started from it). If the log data and business data were not persisted, the new container that fails over to node2 cannot obtain the logs of the container that was running on node1.

So K8s provides persistent storage through the Volume (data volume). A Volume is storage that lives outside the container, similar to a database in that respect. When the Volume is backed by storage that both nodes can reach, node2 can read the data that was persisted from node1.
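As an illustration of how a container is wired to a data volume, the sketch below builds a Pod manifest that mounts a volume at the path where the application writes its logs. The volume is backed by a PersistentVolumeClaim, which is one of several volume types; the claim name, Pod name, image, and mount path are all hypothetical, and the claim itself would have to exist in the cluster. The small helper only checks that every mount refers to a declared volume.

```python
import json

# Hypothetical names and paths, chosen only for illustration.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "app-with-volume"},
    "spec": {
        # The volume is declared once at the Pod level ...
        "volumes": [
            {
                "name": "app-data",
                "persistentVolumeClaim": {"claimName": "app-data-claim"},
            }
        ],
        "containers": [
            {
                "name": "app",
                "image": "example.com/app:1.0",
                # ... and mounted into the container by name.
                "volumeMounts": [
                    {"name": "app-data", "mountPath": "/var/log/app"}
                ],
            }
        ],
    },
}


def undeclared_mounts(pod_manifest):
    """Return the names of mounted volumes that the Pod does not declare."""
    declared = {v["name"] for v in pod_manifest["spec"].get("volumes", [])}
    missing = []
    for container in pod_manifest["spec"]["containers"]:
        for mount in container.get("volumeMounts", []):
            if mount["name"] not in declared:
                missing.append(mount["name"])
    return missing


print(json.dumps(pod, indent=2))
print(undeclared_mounts(pod))
```

Several containers in the same Pod can mount the same named volume, which is how they share files with each other.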

3. Image pull policy

When Kubernetes runs a container, it needs an image for that container. The image for a container in a Pod can come from three sources: the public Docker image registry, a private image registry, or a local image. When Kubernetes is used on an intranet, you need to build and use a private image registry. A Pod supports three image pull policies, set through the imagePullPolicy field in the configuration file:

IfNotPresent (default policy): pull the image only when it does not exist locally

Always: pull the image whether or not it exists locally

Never: never pull the image, whether or not it exists locally; someone has to load it onto the node manually

Points to note: the default image pull policy is IfNotPresent, but for an image with the :latest tag the default is Always. Therefore, avoid the :latest tag in a production environment. Also, Docker checks the image when pulling: if the image digest has not changed, it does not download the image data again.
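The rules above can be written down as a small decision function. This is a toy model for reading purposes, not the kubelet's code; the function names are made up, and the image reference parsing is deliberately simple (it treats a missing tag as latest and an image pinned by digest as IfNotPresent, which matches the documented defaults).

```python
def default_pull_policy(image):
    """Policy used when imagePullPolicy is omitted (simplified model)."""
    # Look only at the part after the last "/", so a registry port such as
    # "localhost:5000/nginx" is not mistaken for a tag.
    last = image.rsplit("/", 1)[-1]
    if "@" in last:
        return "IfNotPresent"  # image pinned by digest
    tag = last.split(":", 1)[1] if ":" in last else "latest"
    return "Always" if tag == "latest" else "IfNotPresent"


def should_pull(policy, present_locally):
    """Whether the node contacts the registry for this image."""
    if policy == "Always":
        return True
    if policy == "IfNotPresent":
        return not present_locally
    if policy == "Never":
        return False
    raise ValueError("unknown imagePullPolicy: " + policy)


for image in ["nginx", "nginx:latest", "nginx:1.25", "localhost:5000/nginx"]:
    print(image, default_pull_policy(image))
```

With the Never policy and no local image, the container simply cannot start, which is why the image has to be loaded onto the node by hand.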

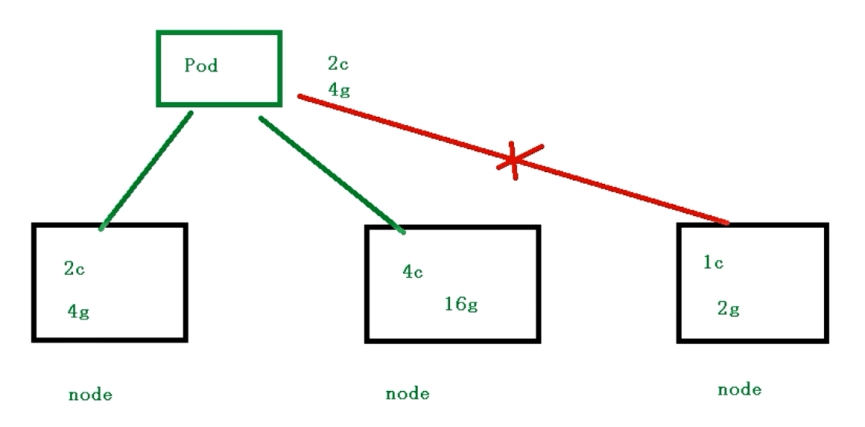

4. Resource limit

Kubernetes uses cgroups to limit the computing resources of a container, such as CPU and memory. When creating a Pod, you can set a resource request and a resource limit for each container in the Pod. The resource request is the minimum amount of resources the container needs. The resource limit is the maximum amount the container may use. CPU is measured in cores and memory in bytes.

Suppose I have three Node machines and I create a Pod. The Pod will be scheduled onto one of the nodes, and its resource settings affect which one.

For example, node1 has 2 cores and 4G of memory remaining

node2 has 4 cores and 16G of memory remaining

node3 has 1 core and 2G of memory remaining

If my Pod needs at least 2 cores and 4G of memory to run, the scheduler finds that node3 cannot satisfy it, so the Pod is not scheduled onto node3.

In other words, Pods are scheduled according to their resource requests. If a node cannot satisfy a Pod's request, the Pod is not scheduled onto that node. This ensures a Pod only lands on a node that has enough resources for it.
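The node1/node2/node3 example can be checked with a few lines of code. This is only a sketch of the filtering step under the numbers given above; the real scheduler considers many more factors, and the Node class and feasible_nodes function are names invented here.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu: float      # remaining CPU, in cores
    free_mem_gib: float  # remaining memory, in G


def feasible_nodes(nodes, cpu_request, mem_request_gib):
    """Names of the nodes whose remaining resources cover the request."""
    return [
        n.name
        for n in nodes
        if n.free_cpu >= cpu_request and n.free_mem_gib >= mem_request_gib
    ]


nodes = [
    Node("node1", free_cpu=2, free_mem_gib=4),
    Node("node2", free_cpu=4, free_mem_gib=16),
    Node("node3", free_cpu=1, free_mem_gib=2),
]

# The Pod needs at least 2 cores and 4G of memory.
print(feasible_nodes(nodes, cpu_request=2, mem_request_gib=4))
# prints ['node1', 'node2']
```

node1 qualifies because its remaining resources exactly match the request; node3 is filtered out on both CPU and memory.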

5. How Pod works

Generally, you do not create a standalone Pod directly in Kubernetes, because a Pod is a temporary entity. If you create a standalone Pod and the Node it is scheduled on fails, the scheduling operation itself fails, or resources run short, the Pod fails or is deleted and nothing recreates it. Note that restarting a Pod and restarting a container in a Pod are not the same concept: the Pod itself does not run, it is only the environment in which the containers run. The Pod has no self-healing ability. Kubernetes therefore uses a higher-level abstraction, the controller, to manage these temporary Pods. A controller can create and manage multiple Pods, handle replicas and deployment, and provide self-healing within the cluster.
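As one example of a controller, a Deployment keeps a requested number of Pod replicas running and replaces Pods that disappear. The sketch below builds a minimal Deployment manifest and prints it as JSON; the name, label, image, and replica count are assumptions chosen for illustration. The selector must match the labels in the Pod template, which the code makes explicit by reusing one dictionary.

```python
import json

# Hypothetical label, name, and image, chosen only for illustration.
labels = {"app": "nginx"}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "nginx"},
    "spec": {
        # The controller keeps three Pods alive and replaces lost ones.
        "replicas": 3,
        # The selector tells the controller which Pods belong to it ...
        "selector": {"matchLabels": labels},
        "template": {
            # ... so the Pod template must carry the same labels.
            "metadata": {"labels": labels},
            "spec": {
                "containers": [{"name": "nginx", "image": "nginx:1.25"}]
            },
        },
    },
}

print(json.dumps(deployment, indent=2))
```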

6. Restart policy

A Pod can contain many containers. The restart policy decides what to do when one of them terminates or exits.

A Pod supports three restart policies, set in the configuration file through the restartPolicy field:

Always (default): restart the container whenever it exits. Use it for services that must stay available, such as nginx (it can only serve while it is running).

OnFailure: restart the container only when it exits abnormally (that is, with an exit code other than 0). Use it for work such as a batch task that should run once and not run again after it succeeds.

Never: never restart the container after it terminates or exits
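The three policies reduce to a simple decision on the container's exit code. The function below is a toy model of that decision, not Kubernetes code, and its name is made up for this sketch.

```python
def should_restart(restart_policy, exit_code):
    """Decide whether an exited container is restarted (simplified model)."""
    if restart_policy == "Always":
        return True
    if restart_policy == "OnFailure":
        return exit_code != 0  # restart only after an abnormal exit
    if restart_policy == "Never":
        return False
    raise ValueError("unknown restartPolicy: " + restart_policy)


for policy in ["Always", "OnFailure", "Never"]:
    print(policy, should_restart(policy, 0), should_restart(policy, 1))
```

A batch task that finishes with exit code 0 under OnFailure is therefore left alone, while the same task crashing with exit code 1 is started again.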

7. Health check

[root@zjj101 config]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-f89759699-rh5lk 1/1 Running 0 17h

The Pod above is in the Running state, but the application may still be unhealthy. For example, a Java project may hit an out-of-memory error and stop serving requests, yet kubectl get pods can still show Running because the process still exists.

So kubectl get pods cannot tell you whether the container is able to serve normally.

An application-level health check can show whether the container is really working, for example by accessing the application in the container through its IP and port.

K8s has two health check mechanisms: readiness and liveness

Liveness is a survival check. If the check fails, the container is killed and then handled according to the Pod's restartPolicy (the restart policy configured by the programmer)

Readiness is a readiness check. If the check fails, K8s stops sending Service traffic to the Pod and lets other Pods provide the service.

A probe supports the following three check methods

1. httpGet: send an HTTP request; a status code of at least 200 and below 400 counts as success

2. exec: run a shell command in the container; an exit code of 0 counts as success

3. tcpSocket: try to open a TCP connection; if the connection is established, the check succeeds
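Here is a minimal sketch of how the two checks might be declared on a container, together with the success rule for HTTP probes. The container name, image, path /healthz, port 8080, and timing values are assumptions for illustration only; the helper function is a toy model of the status-code rule, not Kubernetes code.

```python
import json

# Hypothetical container with both kinds of check configured.
container = {
    "name": "java-app",
    "image": "example.com/java-app:1.0",
    # Liveness: on failure the container is killed, then restartPolicy applies.
    "livenessProbe": {
        "httpGet": {"path": "/healthz", "port": 8080},
        "initialDelaySeconds": 10,
        "periodSeconds": 5,
    },
    # Readiness: on failure the Pod stops receiving traffic.
    "readinessProbe": {
        "tcpSocket": {"port": 8080},
        "periodSeconds": 5,
    },
}


def http_probe_succeeded(status_code):
    """An httpGet probe passes for status codes from 200 up to, not including, 400."""
    return 200 <= status_code < 400


print(json.dumps(container, indent=2))
print(http_probe_succeeded(200), http_probe_succeeded(302), http_probe_succeeded(400))
```

An exec probe would be declared the same way, with an "exec" entry holding the command to run instead of "httpGet" or "tcpSocket".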

8. Terminate Pod

In a cluster, a Pod represents running processes. When these processes are no longer needed, terminating them gracefully is very important. When a user requests to delete a Pod, Kubernetes sends a termination signal (SIGTERM) to each container. If processes are still running when the grace period ends, it sends a kill signal (SIGKILL), and the Pod is then deleted through the API server. The default grace period is 30s. A Pod that shuts down slowly can keep serving external traffic until the load balancer removes it. When the grace period expires, any processes still running in the Pod are killed.
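The timing rule can be summarized in a toy model: a container that finishes shutting down within the grace period only ever sees the termination signal, while a slower one is also killed. The function name and parameters are invented for this sketch; in a Pod spec the grace period corresponds to the terminationGracePeriodSeconds field.

```python
from typing import List


def termination_signals(shutdown_seconds: float,
                        grace_period_seconds: float = 30.0) -> List[str]:
    """Signals a container receives while its Pod is deleted (simplified)."""
    signals = ["SIGTERM"]  # always sent first
    if shutdown_seconds > grace_period_seconds:
        signals.append("SIGKILL")  # still running when the grace period ends
    return signals


print(termination_signals(5))    # shuts down in time
print(termination_signals(45))   # too slow for the default 30s
```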

9. Pod life cycle

1. Pending

The Pod has been accepted by the Kubernetes system, but one or more of its containers have not been created yet. This includes the time spent scheduling the Pod and downloading images over the network.

2. Running

The Pod has been bound to a Node, all containers have been created, and at least one container is running

3. Succeeded

All containers in the Pod have terminated successfully and will not be restarted

4. Failed

All containers in the Pod have terminated, and at least one container terminated abnormally, that is, it exited with a non-zero status or was forcibly terminated by the system.

5. Unknown

The state of the Pod cannot be obtained for some reason, usually a network problem.

10. Pod scheduling strategy

I use kubectl apply -f .yml to create a Pod,

and I can view Pods with kubectl get pods

This Pod may be scheduled onto the node1 machine, or it may be scheduled onto the node2 machine.