Chapter Three: FFmpeg Video Decoder

Recommended reading before this chapter: FFmpeg+SDL-----Syllabus

Table of Contents

• Knowledge of video decoding

• Construction of FFmpeg development environment under VC

• Sample program operation

• FFmpeg decoding function

• FFmpeg decoding data structure

• Practice

Video decoding knowledge

1. Pure video decoding process

▫ Compressed (encoded) data -> pixel data.

▫ For example, decoding H.264 means "H.264 stream -> YUV".

2. General video decoding process

▫ A video stream is usually stored in a container (encapsulation) format such as MP4 or AVI. The container usually also holds other content, such as an audio stream.

▫ For video stored in a container, you first have to extract the video stream from the container and then decode it.

▫ For example, decoding a video file in MKV format means "MKV -> H.264 stream -> YUV".

PS: This course deals directly with the second process.
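To get a feel for what "pixel data" means in practice, the sketch below computes the size of one decoded frame. It assumes the common 8-bit YUV420P layout (a full-resolution Y plane plus U and V planes subsampled by two in each direction); the function name yuv420p_frame_size is hypothetical, not an FFmpeg API.

```c
#include <stddef.h>

/* Hypothetical helper: size in bytes of one uncompressed 8-bit YUV420P frame.
 * Y plane: width * height bytes.
 * U and V planes: each ceil(width/2) * ceil(height/2) bytes. */
size_t yuv420p_frame_size(int width, int height)
{
    size_t luma   = (size_t)width * (size_t)height;
    size_t chroma = (size_t)((width + 1) / 2) * (size_t)((height + 1) / 2);
    return luma + 2 * chroma;
}
```

For 1920x1080 this gives 3,110,400 bytes per frame, so 25 frames per second amount to roughly 78 MB of raw YUV per second. That is why video is stored compressed and must be decoded before it can be displayed.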

Construction of FFmpeg development environment under VC

1. Create a new console project

▫ Open VC++.

▫ File -> New -> Project -> Win32 Console Application

2. Copy the FFmpeg development files

▫ Copy the header files (*.h) to the include subfolder of the project folder.

▫ Copy the import library files (*.lib) to the lib subfolder of the project folder.

▫ Copy the dynamic library files (*.dll) to the project folder.

PS: If you use the FFmpeg development files downloaded directly from the official website, you may also need to copy inttypes.h, stdint.h and _mingw.h from the MinGW installation directory to the include subfolder of the project folder.

3. Configure the development files

- Open the properties panel

- Solution Explorer -> right-click the project -> Properties

- Header file configuration

- Configuration Properties -> C/C++ -> General -> Additional Include Directories: enter "include" (the folder you just copied the header files into)

- Import library configuration

- Configuration Properties -> Linker -> General -> Additional Library Directories: enter "lib" (the folder you just copied the library files into)

- Configuration Properties -> Linker -> Input -> Additional Dependencies: enter "avcodec.lib; avformat.lib; avutil.lib; avdevice.lib; avfilter.lib; postproc.lib; swresample.lib; swscale.lib" (the import library file names)

- Dynamic libraries (*.dll) do not need to be configured.

4. Test

- Create a source code file

- Create a C/C++ file containing a main() function in the project (skip this step if you already have one).

- Include the header files

- If FFmpeg is used from C, use the following code directly:

#include "libavcodec/avcodec.h"

- If FFmpeg is used from C++, use the following code instead:

#define __STDC_CONSTANT_MACROS
extern "C"
{
#include "libavcodec/avcodec.h"
}

- Call an FFmpeg interface function in main()

- For example, the following code prints FFmpeg's configuration information. If it runs without errors, FFmpeg has been configured correctly.

#include <stdio.h>

int main(int argc, char* argv[])
{
    printf("%s", avcodec_configuration());
    return 0;
}

Introduction to the FFmpeg libraries

FFmpeg contains 8 libraries in total:

▫ avcodec: encoding and decoding (the most important library).

▫ avformat: container (encapsulation) format handling.

▫ avfilter: filters (special-effects processing).

▫ avdevice: input and output for various devices.

▫ avutil: utility library (needed by most of the other libraries).

▫ postproc: post-processing.

▫ swresample: audio sample data format conversion.

▫ swscale: video pixel data format conversion.

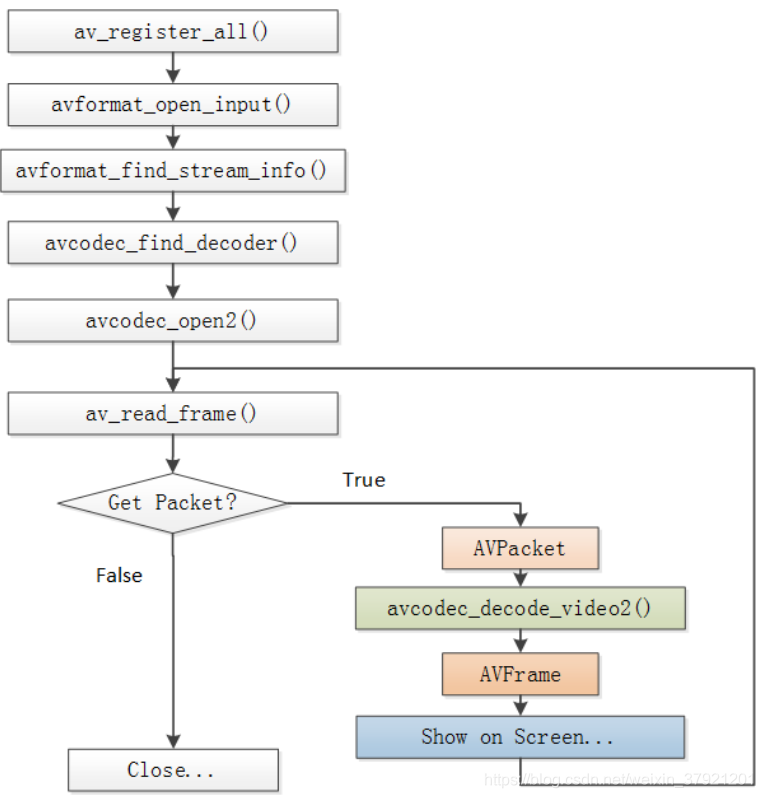

The flow chart of FFmpeg decoding is shown below

Introduction to the FFmpeg decoding functions

av_register_all(): called at the start of every FFmpeg program to register the required components (muxers, demuxers, codecs). It is deprecated since FFmpeg 4.0 and removed in 5.0, where no registration is needed.

avformat_open_input(): opens the input video file or stream. When debugging in VC++, put the file named by the second parameter in the same folder as the .cpp file (the project folder, which is the default working directory), since relative paths are resolved against the working directory.

avformat_find_stream_info(): reads the stream information, such as decoder type, width and height.

avcodec_find_decoder(): finds the matching decoder.

avcodec_open2(): opens the decoder.

av_read_frame(): reads one packet of compressed data; for a video stream this is usually one frame (e.g. H.264 data).

AVPacket: av_read_frame() fills this structure with the compressed frame it has read (e.g. H.264 data).

avcodec_decode_video2(): the decoding function. It is deprecated since FFmpeg 3.1 in favor of avcodec_send_packet() / avcodec_receive_frame().

AVFrame: the decoder fills this structure with the decoded data (e.g. YUV).

avcodec_close(): closes the decoder.

avformat_close_input(): closes the input video file.

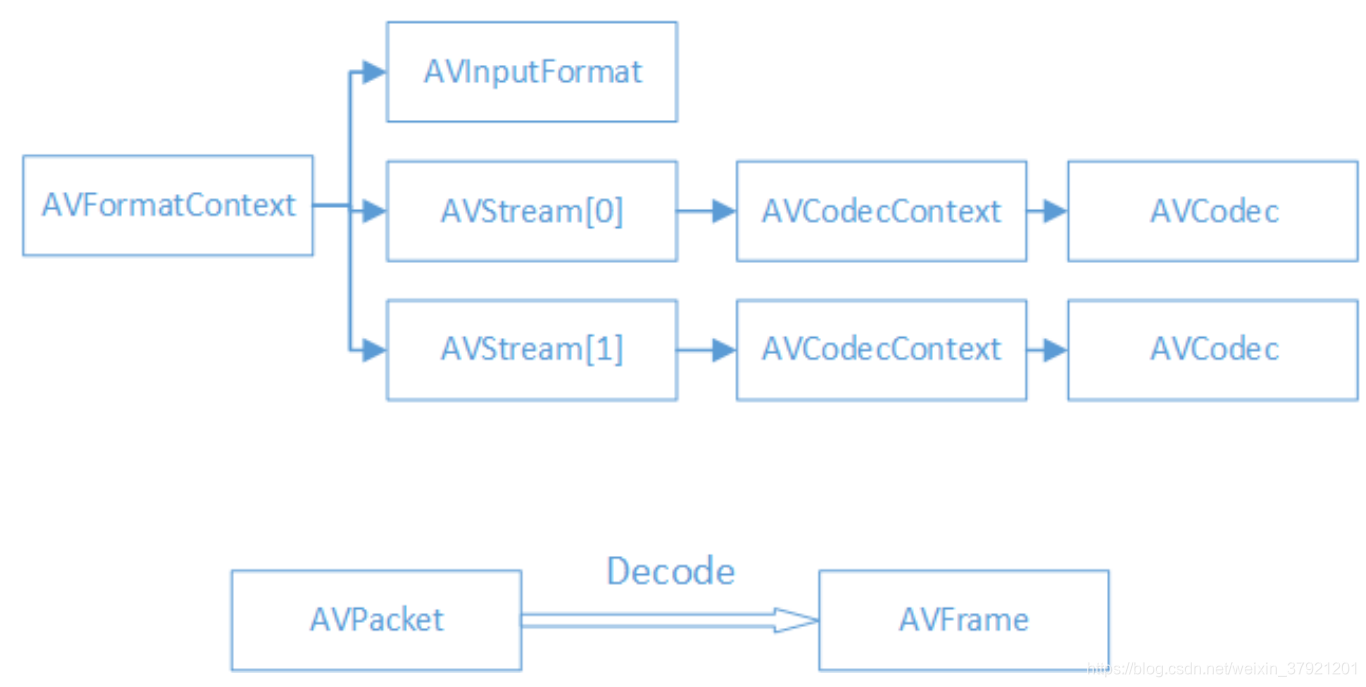

FFmpeg decoding data structures

Overview of the FFmpeg decoding data structures

▫ AVFormatContext: the container format context. It is the top-level structure that ties all the others together and holds the information about the video file's container format.

▫ AVInputFormat: one such structure exists for each container format (e.g. FLV, MKV, MP4, AVI).

▫ AVStream: one such structure exists for each video (audio) stream in the file.

▫ AVCodecContext: the codec context structure, which holds the video (audio) codec parameters.

▫ AVCodec: one such structure exists for each video (audio) codec (e.g. the H.264 decoder).

▫ AVPacket: stores one frame of compressed (encoded) data.

▫ AVFrame: stores one frame of decoded pixel (sample) data.
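The relationships above (AVFormatContext holds the array of AVStreams, each AVStream points to its AVCodecContext) can be modelled with a few plain C structs. The structs and find_first_video_stream() below are hypothetical, heavily simplified stand-ins for illustration only; the real FFmpeg structures have many more fields. The lookup follows the same idea as the videoindex loop in the sample program: scan the streams and stop at the first video stream.

```c
/* Hypothetical, simplified stand-ins for the FFmpeg structures (not the real API). */
enum media_type { MEDIA_VIDEO, MEDIA_AUDIO, MEDIA_SUBTITLE };

struct codec_ctx {            /* plays the role of AVCodecContext */
    enum media_type type;
    int width, height;        /* video only */
    int sample_rate;          /* audio only */
};

struct stream {               /* plays the role of AVStream */
    int index;
    struct codec_ctx *codec;
};

struct format_ctx {           /* plays the role of AVFormatContext */
    unsigned nb_streams;
    struct stream **streams;
};

/* Return the index of the first video stream, or -1 if there is none. */
int find_first_video_stream(const struct format_ctx *fmt)
{
    for (unsigned i = 0; i < fmt->nb_streams; i++)
        if (fmt->streams[i]->codec->type == MEDIA_VIDEO)
            return (int)i;
    return -1;
}
```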

FFmpeg data structure analysis

- AVFormatContext

- iformat: AVInputFormat of input video

- nb_streams: the number of AVStreams of the input video

- streams: the AVStream[] array of the input video

- duration: the duration of the input video (in microseconds)

- bit_rate: the bit rate of the input video

- AVInputFormat

- name: container format name

- long_name: long name of the container format

- extensions: file extensions of the container format

- id: container format ID

- Some interface functions for container format handling

- AVStream

- id: stream identifier (format-specific)

- codec: AVCodecContext corresponding to the stream

- time_base: the time base of the stream

- r_frame_rate: the frame rate of the stream

- AVCodecContext

- codec: AVCodec of the codec

- width, height: the width and height of the image (only for video)

- pix_fmt: pixel format (only for video)

- sample_rate: sample rate (for audio only)

- channels: number of channels (only for audio)

- sample_fmt: sample format (for audio only)

- AVCodec

- name: Codec name

- long_name: long name of the codec

- type: Codec type

- id: Codec ID

- Some codec interface functions

- AVPacket

- pts: presentation (display) timestamp, counted in units of the stream's time_base described above (pts × time_base gives seconds)

- dts: decoding timestamp

- data: compressed coded data

- size: the size of the compressed coded data

- stream_index: index of the AVStream the packet belongs to (its subscript in the streams[] array above)

- AVFrame

- data: decoded image pixel data (audio sampling data).

- linesize: for video, the size in bytes of one row of each image plane (including any padding); for audio, the size in bytes of the audio data in each plane

- width, height: the width and height of the image (only for video).

- key_frame: Whether it is a key frame (only for video).

- pict_type: frame type (video only), e.g. I, P or B.
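The timestamp fields above are plain integers whose unit depends on context: pts and dts count ticks of the stream's time_base, while AVFormatContext's duration counts microseconds. The sketch below shows the arithmetic without FFmpeg. struct rational mirrors FFmpeg's AVRational and ts_to_seconds() does the same job as multiplying by av_q2d(time_base), but all names here are hypothetical. A time base of 1/90000 is what MPEG-TS input such as a .ts file typically uses.

```c
#include <stdint.h>

/* Hypothetical mirror of FFmpeg's AVRational: the fraction num/den. */
struct rational { int num, den; };

/* Timestamp in time_base ticks -> seconds (same idea as pts * av_q2d(time_base)). */
double ts_to_seconds(int64_t ts, struct rational tb)
{
    return (double)ts * tb.num / tb.den;
}

/* Length of one frame in time_base ticks: (1 / fps) / time_base. */
int64_t frame_duration_ticks(struct rational fps, struct rational tb)
{
    return (int64_t)tb.den * fps.den / ((int64_t)tb.num * fps.num);
}

/* AVFormatContext.duration is in microseconds (AV_TIME_BASE = 1000000). */
#define MICROS_PER_SECOND 1000000LL

/* Split a duration in microseconds into hours, minutes and whole seconds. */
void split_duration(int64_t duration_us, int *h, int *m, int *s)
{
    int64_t total = duration_us / MICROS_PER_SECOND;
    *h = (int)(total / 3600);
    *m = (int)(total % 3600 / 60);
    *s = (int)(total % 60);
}
```

With a 1/90000 time base, a pts of 180000 is 2.0 seconds, and at 25 fps each frame advances the pts by 3600 ticks.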

Supplementary knowledge

▫ Why does the decoded data need to be processed by sws_scale()?

The decoded YUV pixel data is stored in data[0], data[1] and data[2] of AVFrame. However, these pixels are not stored contiguously: each row of valid pixels may be followed by some padding (invalid) bytes. Taking the luminance (Y) plane as an example, data[0] holds linesize[0]*height bytes in total, and for optimization reasons (such as memory alignment) linesize[0] is often larger than width rather than equal to it. sws_scale() is therefore used for the conversion; afterwards the padding has been removed and width equals linesize[0]. (sws_scale() also converts the decoder's pixel format to YUV420P if the two differ.)

PS: You can also do this without sws_scale(). Think about how.
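One possible answer to the PS above, sketched under the assumption that the decoder already outputs YUV420P (if it outputs another pixel format, sws_scale() is still needed): copy each plane row by row, taking width bytes per row and skipping the linesize - width padding bytes. copy_plane() and pack_yuv420p() are hypothetical helpers; their data and linesize parameters follow the AVFrame layout described above.

```c
#include <stdint.h>
#include <string.h>

/* Copy one plane row by row, dropping the padding at the end of each source row.
 * Requires src_linesize >= width; the destination is tightly packed. */
void copy_plane(uint8_t *dst, const uint8_t *src, int src_linesize,
                int width, int height)
{
    for (int y = 0; y < height; y++)
        memcpy(dst + (size_t)y * width, src + (size_t)y * src_linesize, (size_t)width);
}

/* Pack a YUV420P frame (three planes, each with its own linesize) into one
 * contiguous buffer of width*height*3/2 bytes: Y, then U, then V.
 * Assumes even width and height to keep the sketch short. */
void pack_yuv420p(uint8_t *dst, uint8_t *const data[3], const int linesize[3],
                  int width, int height)
{
    copy_plane(dst, data[0], linesize[0], width, height);
    dst += (size_t)width * height;
    copy_plane(dst, data[1], linesize[1], width / 2, height / 2);
    dst += (size_t)(width / 2) * (height / 2);
    copy_plane(dst, data[2], linesize[2], width / 2, height / 2);
}
```

When the goal is a .yuv file, calling fwrite() once per row with the same offsets works the same way and avoids the intermediate buffer.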

Source code:

#include <stdio.h>

#define __STDC_CONSTANT_MACROS

extern "C"
{
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
}

int main(int argc, char* argv[])
{
    AVFormatContext *pFormatCtx;
    int i, videoindex;
    AVCodecContext *pCodecCtx;
    AVCodec *pCodec;
    AVFrame *pFrame, *pFrameYUV;
    uint8_t *out_buffer;
    AVPacket *packet;
    int y_size;
    int ret, got_picture;
    struct SwsContext *img_convert_ctx;
    // Input file path
    char filepath[] = "Titanic.ts";  // in the current directory
    int frame_cnt;

    av_register_all();
    avformat_network_init();
    pFormatCtx = avformat_alloc_context();

    if (avformat_open_input(&pFormatCtx, filepath, NULL, NULL) != 0) {
        printf("Couldn't open input stream.\n");
        return -1;
    }
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        printf("Couldn't find stream information.\n");
        return -1;
    }

    // Find the first video stream
    videoindex = -1;
    for (i = 0; i < (int)pFormatCtx->nb_streams; i++)
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = i;
            break;
        }
    if (videoindex == -1) {
        printf("Didn't find a video stream.\n");
        return -1;
    }

    // Find and open the decoder for that stream
    pCodecCtx = pFormatCtx->streams[videoindex]->codec;
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL) {
        printf("Codec not found.\n");
        return -1;
    }
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
        printf("Could not open codec.\n");
        return -1;
    }

    /*
     * Add code here to output the video information,
     * taken from pFormatCtx, using fprintf()
     */

    pFrame = av_frame_alloc();
    pFrameYUV = av_frame_alloc();
    // One contiguous YUV420P buffer, attached to pFrameYUV
    out_buffer = (uint8_t *)av_malloc(avpicture_get_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height));
    avpicture_fill((AVPicture *)pFrameYUV, out_buffer, AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height);
    packet = (AVPacket *)av_malloc(sizeof(AVPacket));

    // Output Info-----------------------------
    printf("--------------- File Information ----------------\n");
    av_dump_format(pFormatCtx, 0, filepath, 0);
    printf("-------------------------------------------------\n");

    // Converts decoded frames (any pixel format, padded rows) to packed YUV420P
    img_convert_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
        pCodecCtx->width, pCodecCtx->height, AV_PIX_FMT_YUV420P, SWS_BICUBIC, NULL, NULL, NULL);

    frame_cnt = 0;
    while (av_read_frame(pFormatCtx, packet) >= 0) {
        if (packet->stream_index == videoindex) {
            /*
             * Add code here to output the H.264 stream,
             * taken from packet, using fwrite()
             */
            ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
            if (ret < 0) {
                printf("Decode Error.\n");
                return -1;
            }
            if (got_picture) {
                sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0, pCodecCtx->height,
                    pFrameYUV->data, pFrameYUV->linesize);
                printf("Decoded frame index: %d\n", frame_cnt);
                /*
                 * Add code here to output the YUV data,
                 * taken from pFrameYUV, using fwrite()
                 */
                frame_cnt++;
            }
        }
        // Release the packet's data before reading the next packet
        av_free_packet(packet);
    }

    // Flush the decoder: frames still buffered inside it are returned by
    // feeding it empty packets until it stops producing pictures
    av_init_packet(packet);
    packet->data = NULL;
    packet->size = 0;
    while (avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet) >= 0 && got_picture) {
        sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0, pCodecCtx->height,
            pFrameYUV->data, pFrameYUV->linesize);
        printf("Flush Decoder: Decoded frame index: %d\n", frame_cnt);
        frame_cnt++;
    }

    sws_freeContext(img_convert_ctx);
    av_free(out_buffer);
    av_free(packet);
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    avcodec_close(pCodecCtx);
    avformat_close_input(&pFormatCtx);

    return 0;
}