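Feature point detection is the first step of image registration. Below we introduce two algorithms: the Scale-Invariant Feature Transform (SIFT) and Speeded-Up Robust Features (SURF).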

One, SIFT algorithm

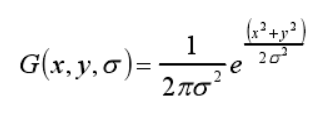

The SIFT algorithm is a high-precision feature point detection algorithm. The feature points detected by this algorithm contain scale, gray level and direction information, which is highly robust. The feature point detection of the SIFT algorithm needs to be performed on the scale space of the image, and the scale space is generated by convolution of the image and the Gaussian function. The Gaussian function is defined as: The

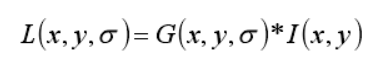

image scale space L (x, y) is calculated as:
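The two formulas above can be tried out with a small pure-Python sketch. It samples G on a grid truncated at 3σ (a common choice, assumed here rather than taken from the text), normalizes the weights to sum to 1, and convolves the kernel with an image stored as a list of rows, replicating border pixels. The names `gaussian_kernel` and `convolve` are illustrative.

```python
import math


def gaussian_kernel(sigma, radius=None):
    """Sample G(x, y, sigma) on a (2r+1) x (2r+1) grid and normalise it to sum 1."""
    if radius is None:
        radius = max(1, int(math.ceil(3 * sigma)))  # assumption: truncate at 3 sigma
    kernel = []
    for y in range(-radius, radius + 1):
        row = []
        for x in range(-radius, radius + 1):
            g = math.exp(-(x * x + y * y) / (2 * sigma * sigma)) / (2 * math.pi * sigma * sigma)
            row.append(g)
        kernel.append(row)
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]


def convolve(image, kernel):
    """Compute L = G * I; the kernel is symmetric, and border pixels are replicated."""
    h, w = len(image), len(image[0])
    r = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(-r, r + 1):
                for kx in range(-r, r + 1):
                    yy = min(max(y + ky, 0), h - 1)
                    xx = min(max(x + kx, 0), w - 1)
                    acc += kernel[ky + r][kx + r] * image[yy][xx]
            out[y][x] = acc
    return out
```

For example, `convolve(I, gaussian_kernel(1.6))` gives one scale-space layer L(x, y, 1.6); a practical implementation would use an image library instead of nested loops.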

1. Constructing the scale space

The image at the bottom of the scale-space pyramid is the sharpest, and its width and height are twice those of the original image. This bottom image is labeled the first layer of the first group, and the images become blurrier toward the top. Construction begins by Gaussian-filtering the bottom image to obtain the second layer of the group; repeating this operation on each newly generated image produces a set of images that forms one group. The most blurred image of the group (in Lowe's original implementation, more precisely the layer whose σ is twice the group's initial σ, third from the top) is then downsampled by a factor of 2, i.e. its width and height are halved, so its area becomes a quarter of the previous size. The downsampled image becomes the initial image of the next group, and repeating the steps above completes the Gaussian pyramid, as shown in the figure. All images within a group have the same size. If the Gaussian pyramid has m groups of n layers each, then (m, n) describes the scale space it represents.
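The group-and-layer construction can be sketched as follows. This is a minimal sketch under several assumptions: σ0 = 1.6 and s = n - 3 intervals per group (so adjacent layers differ by k = 2^(1/(n-3))) follow Lowe's paper rather than the text above, upsampling uses simple pixel replication, and the layer downsampled for the next group is the one with blur 2σ0. Each new layer is produced by blurring the previous one just enough to reach the next σ.

```python
import math


def blur(image, sigma):
    """Separable Gaussian blur; kernel truncated at 3 sigma, border pixels replicated."""
    r = max(1, int(math.ceil(3 * sigma)))
    k = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-r, r + 1)]
    total = sum(k)
    k = [v / total for v in k]
    h, w = len(image), len(image[0])
    # horizontal pass
    tmp = [[sum(k[j + r] * image[y][min(max(x + j, 0), w - 1)] for j in range(-r, r + 1))
            for x in range(w)] for y in range(h)]
    # vertical pass
    return [[sum(k[j + r] * tmp[min(max(y + j, 0), h - 1)][x] for j in range(-r, r + 1))
             for x in range(w)] for y in range(h)]


def upsample2(image):
    """Double width and height by pixel replication (bottom layer is 2x the original)."""
    return [[v for v in row for _ in range(2)] for row in image for _ in range(2)]


def downsample2(image):
    """Keep every second pixel: width and height halve, area becomes a quarter."""
    return [row[::2] for row in image[::2]]


def gaussian_pyramid(image, m, n, sigma0=1.6):
    """Build m groups of n layers; layer i of each group has blur sigma0 * k**i."""
    if n < 4:
        raise ValueError("need at least 4 layers per group")
    k = 2 ** (1.0 / (n - 3))  # assumption: s = n - 3 intervals per group, as in Lowe (2004)
    base = blur(upsample2(image), sigma0)
    pyramid = []
    for _ in range(m):
        group = [base]
        for i in range(1, n):
            prev, target = sigma0 * k ** (i - 1), sigma0 * k ** i
            # blurring by sqrt(target^2 - prev^2) takes blur prev to blur target
            group.append(blur(group[-1], math.sqrt(target ** 2 - prev ** 2)))
        pyramid.append(group)
        # layer n - 3 has blur 2 * sigma0; halved, it becomes the next group's first layer
        base = downsample2(group[n - 3])
    return pyramid
```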

The difference-of-Gaussian (DoG) pyramid is then built from the Gaussian pyramid [38]. The difference between the second and first layers of the first group of the Gaussian pyramid forms the first layer of the first group of the DoG pyramid; the difference between the third and second layers forms the second layer of that DoG group, and so on. Because each DoG layer comes from a pair of adjacent Gaussian layers, every DoG group has one layer fewer than the corresponding Gaussian group. The calculation formula is:

$$D(x,y,\sigma)=\big(G(x,y,k\sigma)-G(x,y,\sigma)\big)*I(x,y)=L(x,y,k\sigma)-L(x,y,\sigma)$$

where k is the constant scale factor between adjacent layers.
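Given a Gaussian pyramid stored as a list of groups, each a list of 2-D layers (lists of rows), the DoG pyramid is the element-wise difference of adjacent layers. A minimal sketch; the name `dog_pyramid` is illustrative:

```python
def dog_pyramid(gaussian_pyramid):
    """D = L(k*sigma) - L(sigma): subtract each layer from the next one in its group."""
    dog = []
    for group in gaussian_pyramid:
        layers = []
        for lower, upper in zip(group, group[1:]):
            layers.append([[u - l for u, l in zip(urow, lrow)]
                           for urow, lrow in zip(upper, lower)])
        dog.append(layers)
    return dog
```

Each output group has one layer fewer than its input group, matching the description above.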

2. Extremum point detection

Once the DoG pyramid has been built, its extremum points are stable, so they are searched for and used as feature point candidates. Every point in the DoG pyramid is tested against its neighbors in the three-dimensional (x, y, scale) space: the 8 surrounding pixels in the same layer plus the 9 pixels in each of the layers directly above and below, 26 in total. If the point is larger than all 26 neighbors or smaller than all of them, it is a maximum or minimum in scale space and is kept as a detected extremum point.
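The 26-neighbor test can be sketched as below, assuming one DoG group stored as a list of 2-D layers indexed `[layer][y][x]`. Only interior pixels of the middle layers are checked, since the others lack a full neighborhood; treating ties as non-extrema is an assumption of this sketch, and the function names are illustrative.

```python
def is_extremum(dog_group, s, y, x):
    """True if dog_group[s][y][x] is strictly above or strictly below all 26 neighbours."""
    value = dog_group[s][y][x]
    neighbours = [
        dog_group[s + ds][y + dy][x + dx]
        for ds in (-1, 0, 1)
        for dy in (-1, 0, 1)
        for dx in (-1, 0, 1)
        if (ds, dy, dx) != (0, 0, 0)  # 3 * 3 * 3 - 1 = 26 neighbours
    ]
    return all(value > v for v in neighbours) or all(value < v for v in neighbours)


def find_extrema(dog_group):
    """Scan every interior pixel of every layer that has a layer above and below."""
    points = []
    for s in range(1, len(dog_group) - 1):
        layer = dog_group[s]
        for y in range(1, len(layer) - 1):
            for x in range(1, len(layer[0]) - 1):
                if is_extremum(dog_group, s, y, x):
                    points.append((s, y, x))
    return points
```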

3. Feature point localization

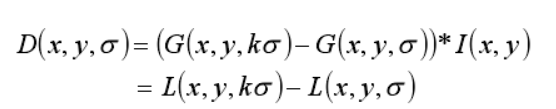

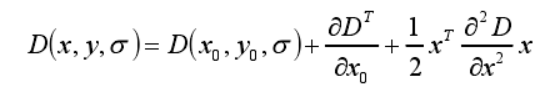

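Feature point localization removes inaccurately located points on edges and points that are easily disturbed by noise. A three-dimensional quadratic function is fitted to each detected extremum to determine the precise location and scale of the key point. Because interpolation requires expressing the discrete image as a continuous function, the DoG function is expanded as a Taylor series around the sample point, where $\mathbf{x}=(x,y,\sigma)^{T}$ is the offset from that point:

$$D(\mathbf{x}) = D + \frac{\partial D}{\partial \mathbf{x}}^{T}\mathbf{x} + \frac{1}{2}\mathbf{x}^{T}\frac{\partial^{2} D}{\partial \mathbf{x}^{2}}\mathbf{x}$$

Taking the derivative of D with respect to $\mathbf{x}$ and setting it to zero gives the exact position of the extremum:

$$\hat{\mathbf{x}} = -\left(\frac{\partial^{2} D}{\partial \mathbf{x}^{2}}\right)^{-1}\frac{\partial D}{\partial \mathbf{x}}$$

Substituting $\hat{\mathbf{x}}$ back into the expansion gives $D(\hat{\mathbf{x}}) = D + \frac{1}{2}\frac{\partial D}{\partial \mathbf{x}}^{T}\hat{\mathbf{x}}$. If $|D(\hat{\mathbf{x}})| \le 0.03$ (with pixel values normalized to [0, 1]), the point has low contrast and is easily disturbed by noise, so it is removed; otherwise it is kept.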
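A single refinement step of this quadratic fit can be sketched in pure Python. Derivatives are approximated with central finite differences on the 3×3×3 neighborhood and the 3×3 system is solved with Cramer's rule; both are choices of this sketch, not taken from the text. Lowe's implementation repeats the step at a neighboring sample when any offset component exceeds 0.5, which is omitted here, and the 0.03 threshold assumes pixel values in [0, 1]. The function names are illustrative.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve a v = b by Cramer's rule; returns None if a is (near) singular."""
    d = det3(a)
    if abs(d) < 1e-12:
        return None
    result = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        result.append(det3(m) / d)
    return result


def localize(dog_group, s, y, x, contrast_threshold=0.03):
    """One quadratic-fit step at sample (s, y, x); returns (offset, value) or None.

    Variables are ordered (x, y, sigma). offset = -H^-1 g and
    value = D + 0.5 * g . offset, i.e. D evaluated at the interpolated extremum.
    """
    def D(ds, dy, dx):
        return dog_group[s + ds][y + dy][x + dx]

    c = D(0, 0, 0)
    # gradient dD/dx by central differences
    g = [(D(0, 0, 1) - D(0, 0, -1)) / 2,
         (D(0, 1, 0) - D(0, -1, 0)) / 2,
         (D(1, 0, 0) - D(-1, 0, 0)) / 2]
    # Hessian d2D/dx2 by central differences
    dxx = D(0, 0, 1) - 2 * c + D(0, 0, -1)
    dyy = D(0, 1, 0) - 2 * c + D(0, -1, 0)
    dss = D(1, 0, 0) - 2 * c + D(-1, 0, 0)
    dxy = (D(0, 1, 1) - D(0, 1, -1) - D(0, -1, 1) + D(0, -1, -1)) / 4
    dxs = (D(1, 0, 1) - D(1, 0, -1) - D(-1, 0, 1) + D(-1, 0, -1)) / 4
    dys = (D(1, 1, 0) - D(1, -1, 0) - D(-1, 1, 0) + D(-1, -1, 0)) / 4
    h = [[dxx, dxy, dxs], [dxy, dyy, dys], [dxs, dys, dss]]
    sol = solve3(h, g)
    if sol is None:
        return None
    offset = [-v for v in sol]
    value = c + 0.5 * sum(gi * oi for gi, oi in zip(g, offset))
    if abs(value) <= contrast_threshold:  # low contrast: discard
        return None
    return offset, value
```

On an exactly quadratic D the finite differences are exact, so the returned offset points straight at the true peak.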

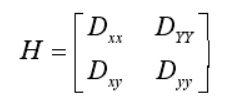

In order to eliminate the edge response points of the image, a 2*2 Hessian matrix is required to calculate the principal curvature.

Because the principal curvature of D is proportional to the eigenvalue of H, it can be calculated according to the ratio of the rank T® of the H matrix and the determinant Det(H) Determine whether it is an edge response point. Let α and β be the two eigenvalues of the Hessian matrix H respectively, then:

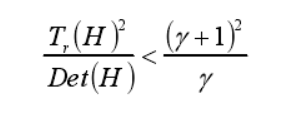

Determine the relationship between the principal curvature and the threshold by checking whether the following formula 2-15 is true.
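The edge test needs only second differences in a single DoG layer. The sketch below (illustrative name `passes_edge_test`, layer indexed `[y][x]`) also discards points with Det(H) ≤ 0, because the two curvatures then have opposite signs and the point is not a peak.

```python
def passes_edge_test(layer, y, x, r=10.0):
    """Keep the point only if Tr(H)^2 / Det(H) < (r + 1)^2 / r (formula 2-15)."""
    c = layer[y][x]
    dxx = layer[y][x + 1] - 2 * c + layer[y][x - 1]
    dyy = layer[y + 1][x] - 2 * c + layer[y - 1][x]
    dxy = (layer[y + 1][x + 1] - layer[y + 1][x - 1]
           - layer[y - 1][x + 1] + layer[y - 1][x - 1]) / 4
    tr = dxx + dyy
    det = dxx * dyy - dxy * dxy
    if det <= 0:  # curvatures of opposite sign (or zero): reject
        return False
    return tr * tr / det < (r + 1) ** 2 / r
```

A round blob has equal curvatures (ratio 4, well under 12.1 for r = 10), while a ridge that curves strongly in one direction only fails the test.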

4. Determining the gradient direction of the extreme point

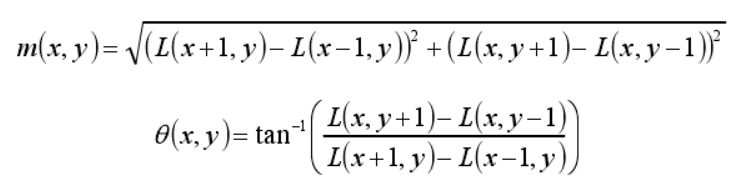

To make the detected feature points rotation invariant, each feature point is assigned an orientation derived from the gradients of the local image structure around it. The gradient magnitude m(x, y) and orientation θ(x, y) at point (x, y) are obtained by the following formulas:

$$m(x,y)=\sqrt{\big(L(x+1,y)-L(x-1,y)\big)^{2}+\big(L(x,y+1)-L(x,y-1)\big)^{2}}$$

$$\theta(x,y)=\arctan\frac{L(x,y+1)-L(x,y-1)}{L(x+1,y)-L(x-1,y)}$$

where L is the Gaussian-smoothed image at the scale of the feature point.
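The two gradient formulas translate directly into code. A minimal sketch, assuming L is stored as a list of rows indexed `L[y][x]` (so y grows downward); `math.atan2` is used instead of a plain arctangent so that θ covers the full 0-360° range. The name `gradient` is illustrative.

```python
import math


def gradient(L, y, x):
    """Return (m, theta) at (x, y); theta in degrees, in [0, 360)."""
    dx = L[y][x + 1] - L[y][x - 1]
    dy = L[y + 1][x] - L[y - 1][x]
    m = math.hypot(dx, dy)  # sqrt(dx^2 + dy^2)
    theta = math.degrees(math.atan2(dy, dx)) % 360.0
    return m, theta
```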

The gradient magnitudes m(x, y) and orientations θ(x, y) of the pixels in the feature point's neighborhood, computed with the formulas above, are collected into a histogram. As shown in Figure 2-5, the horizontal axis is the orientation bin (e.g. 0-45 degrees, 45-90 degrees) and the vertical axis is the sum of the gradient magnitudes that fall into that bin. Here the 360° circle is divided into 8 bins of 45° each (Lowe's original implementation uses 36 bins of 10°). The highest bar gives the main orientation of the feature point. Any other bar whose value reaches at least 80% of the highest one is also assigned to the feature point, as an auxiliary orientation.
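The histogram step can be sketched as follows, taking a list of (magnitude, angle) pairs such as those produced by the gradient formulas. The defaults (8 bins, 80% ratio) follow the text; bars are reported by their bin-centre angle, and Lowe's extra steps (Gaussian weighting of the magnitudes, interpolating the peak position, requiring auxiliary bars to be local peaks) are omitted. The name `orientation_histogram` is illustrative.

```python
def orientation_histogram(samples, num_bins=8, aux_ratio=0.8):
    """Return (main, auxiliaries) as bin-centre angles in degrees.

    samples: iterable of (magnitude, angle_deg) pairs from the feature point's
    neighbourhood. Each magnitude is added to the bin containing its angle.
    """
    width = 360.0 / num_bins
    hist = [0.0] * num_bins
    for magnitude, angle in samples:
        hist[int((angle % 360.0) // width) % num_bins] += magnitude
    peak = max(range(num_bins), key=lambda i: hist[i])
    main = (peak + 0.5) * width
    # every other bar reaching aux_ratio of the highest one is an auxiliary orientation
    aux = [(i + 0.5) * width for i in range(num_bins)
           if i != peak and hist[i] >= aux_ratio * hist[peak]]
    return main, aux
```

Passing `num_bins=36` gives the 10° bins of the original paper.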

5. Generating the feature point descriptor

The coordinate axes of the feature point's neighborhood are rotated to align with the feature point's main orientation, which makes the resulting feature vector rotation invariant. The process is as follows:

The pixels in the 16×16 neighborhood of the feature point are divided into 4×4 = 16 blocks of 4×4 pixels each. A gradient histogram with 8 orientations is computed in each block separately, giving a 4×4×8 = 128-dimensional feature descriptor.
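A minimal sketch of the 4×4×8 layout, assuming the 16×16 gradient samples have already been rotated so that each angle is measured relative to the main orientation. Lowe's Gaussian weighting, interpolation between neighboring bins and clipping of large values are deliberately left out; only unit-length normalization is kept. The name `sift_descriptor` is illustrative.

```python
import math


def sift_descriptor(samples):
    """128-dim vector from a 16x16 grid of (magnitude, angle_deg) samples.

    samples[r][c]: angle already relative to the main orientation.
    Each 4x4-pixel block contributes an 8-bin (45-degree) histogram.
    """
    desc = []
    for by in range(4):
        for bx in range(4):
            hist = [0.0] * 8
            for r in range(by * 4, by * 4 + 4):
                for c in range(bx * 4, bx * 4 + 4):
                    magnitude, angle = samples[r][c]
                    hist[int((angle % 360.0) // 45.0) % 8] += magnitude
            desc.extend(hist)
    norm = math.sqrt(sum(v * v for v in desc)) or 1.0  # avoid dividing by zero
    return [v / norm for v in desc]
```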

Two, SURF feature detection

SURF is a feature detection algorithm that improves on SIFT. It retains SIFT's high accuracy and robustness while running faster, and its overall flow is similar to SIFT's:

1. Locating feature points

Because the Hessian detector is more stable and repeatable, SURF first uses a Hessian-matrix detector to find feature points. The filtering is performed by convolving the second-order derivatives of the Gaussian function with the discrete image f(x, y), which gives the Hessian matrix at point (x, y) and scale σ:

$$H(x,y,\sigma)=\begin{bmatrix}L_{xx}(x,y,\sigma) & L_{xy}(x,y,\sigma)\\ L_{xy}(x,y,\sigma) & L_{yy}(x,y,\sigma)\end{bmatrix}$$

Similar to keypoint localization in SIFT, SURF compares the Hessian determinant of each pixel with those of its 26 neighbors in the three-dimensional (x, y, scale) space. If all 26 neighboring determinant values are smaller, the point is a feature point.
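The determinant-of-Hessian response can be sketched on an already-smoothed image using central finite differences. SURF itself approximates the Gaussian second derivatives with box filters and weights the mixed term by 0.9 to compensate for that approximation; the `w` parameter below mimics that weight and defaults to 1.0 (the plain determinant). Candidate points would then be the 26-neighbor maxima of this response across scales. The function names are illustrative.

```python
def hessian_determinant(f, y, x, w=1.0):
    """det(H) = Lxx * Lyy - (w * Lxy)^2 at (x, y) of a smoothed image f[y][x]."""
    c = f[y][x]
    lxx = f[y][x + 1] - 2 * c + f[y][x - 1]
    lyy = f[y + 1][x] - 2 * c + f[y - 1][x]
    lxy = (f[y + 1][x + 1] - f[y + 1][x - 1] - f[y - 1][x + 1] + f[y - 1][x - 1]) / 4
    return lxx * lyy - (w * lxy) ** 2


def hessian_response_map(f, w=1.0):
    """Response for every interior pixel; border pixels are left at 0."""
    rows, cols = len(f), len(f[0])
    out = [[0.0] * cols for _ in range(rows)]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            out[y][x] = hessian_determinant(f, y, x, w)
    return out
```

A blob gives a large positive determinant, whereas a saddle gives a negative one and is never selected as a maximum.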

2. Determination of the direction of the feature point

To achieve good rotation invariance, a region around the feature point is defined and the Haar wavelet responses within it are computed to obtain the main orientation. The region is a circle centered on the feature point with a radius of 6s, where s is the scale of the feature point. The Haar wavelet templates are shown in the figure; they measure the response in the x and y directions, with black and white representing -1 and 1 respectively. Applying these templates to the points in the region gives each point a response in both directions.

The responses are then Gaussian-weighted. Finally, a 60-degree sector is placed in the circular region and the Haar wavelet responses in the x and y directions inside it are summed. The sector is rotated at a fixed interval and the summation repeated; the direction of the sector whose summed response is largest is the main orientation of the feature point.
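The sliding-sector search can be sketched as below. It assumes the Haar responses `(dx, dy)` of the sample points inside the 6s circle have already been computed and Gaussian-weighted; the 5° rotation step is a hypothetical choice, since the text only says the sector is rotated at a fixed interval. Each response is placed in the sector by the angle of its (dx, dy) vector, and the sector whose summed vector is longest defines the orientation. The name `surf_orientation` is illustrative.

```python
import math


def surf_orientation(responses, step_deg=5.0, window_deg=60.0):
    """Dominant orientation (degrees) from weighted Haar responses [(dx, dy), ...]."""
    angles = [math.degrees(math.atan2(dy, dx)) % 360.0 for dx, dy in responses]
    best_len, best_angle = -1.0, 0.0
    start = 0.0
    while start < 360.0:
        sx = sy = 0.0
        for (dx, dy), a in zip(responses, angles):
            # modulo handles sectors that wrap past 360 degrees
            if (a - start) % 360.0 < window_deg:
                sx += dx
                sy += dy
        length = math.hypot(sx, sy)
        if length > best_len:
            best_len = length
            best_angle = math.degrees(math.atan2(sy, sx)) % 360.0
        start += step_deg
    return best_angle
```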

3. Generating feature point descriptors

The final SURF descriptor is generated as follows. A square region centered on the feature point and oriented along its main orientation is selected and divided into 4×4 = 16 sub-squares, each with a side length of 5s. In each sub-square, the Haar wavelet responses of 5×5 = 25 sample points are accumulated into four statistics: Σdx, Σ|dx|, Σdy and Σ|dy|. With 4 values per sub-square, the whole 20s × 20s square yields a 4×4×4 = 64-dimensional descriptor, as shown in the figure.
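The 64-dimensional layout can be sketched as follows, assuming the Haar responses have been sampled on a 20×20 grid (one sample every s over the 20s square) that is already aligned with the main orientation. The Gaussian weighting of the responses used in the original SURF paper is omitted; the vector is normalized to unit length. The name `surf_descriptor` is illustrative.

```python
import math


def surf_descriptor(dx, dy):
    """64-dim vector from 20x20 grids of Haar responses dx[r][c] and dy[r][c]."""
    desc = []
    for by in range(4):
        for bx in range(4):
            sdx = sadx = sdy = sady = 0.0
            # 5 x 5 = 25 samples per sub-square
            for r in range(by * 5, by * 5 + 5):
                for c in range(bx * 5, bx * 5 + 5):
                    sdx += dx[r][c]
                    sadx += abs(dx[r][c])
                    sdy += dy[r][c]
                    sady += abs(dy[r][c])
            desc.extend([sdx, sadx, sdy, sady])
    norm = math.sqrt(sum(v * v for v in desc)) or 1.0  # avoid dividing by zero
    return [v / norm for v in desc]
```

Keeping both Σd and Σ|d| lets the descriptor distinguish regions whose responses cancel out (alternating signs) from regions with uniformly weak responses.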

Copyright: Dong Shuai, please indicate the source for reprinting.