Preface

Docker's default logging driver is json-file: each container gets a local log file at /var/lib/docker/containers/containerID/containerID-json.log, and other logging drivers can be plugged in. This article explains how to collect Docker logs with the fluentd logging driver.
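To make the json-file format concrete, here is a minimal Python sketch that locates and parses a container's json-file log. It assumes the usual json-file line layout, where each line is a JSON object with a "log" field (the output line, normally ending in a newline), a "stream" field ("stdout" or "stderr") and a "time" timestamp string. The helper names are hypothetical, chosen only for this illustration.

```python
import json
from typing import Dict, Iterator


def json_file_log_path(container_id: str) -> str:
    """Path of a container's log file under the default json-file driver."""
    return f"/var/lib/docker/containers/{container_id}/{container_id}-json.log"


def parse_json_file_line(line: str) -> Dict[str, str]:
    """Parse one json-file line into stream, time and log text.

    The trailing newline that json-file keeps in "log" is stripped.
    """
    entry = json.loads(line)
    return {
        "stream": entry["stream"],
        "time": entry["time"],
        "log": entry["log"].rstrip("\n"),
    }


def read_container_log(container_id: str) -> Iterator[Dict[str, str]]:
    """Yield parsed entries; reading /var/lib/docker usually requires root."""
    with open(json_file_log_path(container_id), encoding="utf-8") as f:
        for line in f:
            if line.strip():
                yield parse_json_file_line(line)
```

For a running container, `docker inspect --format '{{.LogPath}}' <container>` prints the path of this file, so you do not have to build it by hand.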

Fluentd is an open source data collector for a unified logging layer. It is the sixth CNCF graduated project, after Kubernetes, Prometheus, Envoy, CoreDNS and containerd. It is often compared with Elastic's Logstash; by comparison, fluentd is relatively lightweight and flexible. It is developing quickly and its community is very active. At the time of writing, the project had 8.8k stars and 1k forks on GitHub.

Prerequisites

- docker

- A basic understanding of fluentd configuration

- docker-compose

Prepare the configuration files

docker-compose.yml

version: '3.7'

x-logging:
  &default-logging
  driver: fluentd
  options:
    fluentd-address: localhost:24224
    fluentd-async-connect: 'true'
    mode: non-blocking
    max-buffer-size: 4m
    tag: "kafeidou.{{.Name}}" # container tag: "kafeidou." as the prefix, the container name as the suffix; docker-compose adds a replica suffix to container names, e.g. fluentd_1

services:
  fluentd:
    image: fluent/fluentd:v1.3.2
    ports:
      - 24224:24224
    volumes:
      - ./:/fluentd/etc
      - /var/log/fluentd:/var/log/fluentd
    environment:
      - FLUENTD_CONF=fluentd.conf

  fluentd-worker:
    image: fluent/fluentd:v1.3.2
    depends_on:
      - fluentd
    logging: *default-logging

fluentd.conf

<source>
  @type forward
  port 24224
  bind 0.0.0.0
</source>

<match kafeidou.*>
  @type file
  path /var/log/fluentd/kafeidou/${tag[1]}
  append true
  <format>
    @type single_value
    message_key log
  </format>
  <buffer tag,time>
    @type file
    timekey 1d
    timekey_wait 10m
    flush_mode interval
    flush_interval 5s
  </buffer>
</match>

<match **>
  @type file
  path /var/log/fluentd/${tag}
  append true
  <format>
    @type single_value
    message_key log
  </format>
  <buffer tag,time>
    @type file
    timekey 1d
    timekey_wait 10m
    flush_mode interval
    flush_interval 5s
  </buffer>
</match>

Because fluentd needs write permission on the directories in its configuration, create the log directory in advance and grant permissions on it.

Create the directory:

mkdir /var/log/fluentd

Grant permissions. For this demonstration, simply use 777:

chmod -R 777 /var/log/fluentd

Run the following command in the directory that contains docker-compose.yml and fluentd.conf:

docker-compose up -d

[root@master log]# docker-compose up -d
WARNING: The Docker Engine you're using is running in swarm mode.
Compose does not use swarm mode to deploy services to multiple nodes in a swarm. All containers will be scheduled on the current node.
To deploy your application across the swarm, use `docker stack deploy`.
Creating network "log_default" with the default driver
Creating fluentd ... done
Creating fluentd-worker ... done

Check the log directory; there should be a log file for the corresponding container:

[root@master log]# ls /var/log/fluentd/kafeidou/
fluentd-worker.20200215.log  ${tag[1]}

This is the result from my last run. Strangely, a directory literally named ${tag[1]} is also created, and it contains two files:

[root@master log]# ls /var/log/fluentd/kafeidou/\$\{tag\[1\]\}/
buffer.b59ea0804f0c1f8b6206cf76aacf52fb0.log  buffer.b59ea0804f0c1f8b6206cf76aacf52fb0.log.meta

If anyone understands why this happens, feel free to get in touch!
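The file name fluentd-worker.20200215.log in the listing above can be traced back to the configuration. The container tag is kafeidou.fluentd-worker, so ${tag[1]} (the second dot-separated part) is fluentd-worker; with timekey 1d and append true, the file output adds a daily %Y%m%d slice and the default .log suffix; and the single_value format writes only the record's log field plus a newline. The Python below is a simplified model of that naming under these assumptions (local time, only the ${tag} and ${tag[N]} placeholders), not fluentd's own code, and the function names are hypothetical.

```python
import re
from datetime import datetime


def render_path(path: str, tag: str) -> str:
    """Expand the ${tag[N]} and ${tag} placeholders used in this article's fluentd.conf."""
    parts = tag.split(".")
    path = re.sub(r"\$\{tag\[(\d+)\]\}", lambda m: parts[int(m.group(1))], path)
    return path.replace("${tag}", tag)


def output_file(path: str, tag: str, event_time: datetime) -> str:
    """Approximate out_file name for timekey 1d with append true."""
    # timekey 1d -> daily slice "%Y%m%d"; append true -> no chunk index; default suffix ".log"
    return f"{render_path(path, tag)}.{event_time.strftime('%Y%m%d')}.log"


def single_value_line(record: dict, message_key: str = "log") -> str:
    """single_value format: only the value of message_key, followed by a newline."""
    return f"{record[message_key]}\n"
```

The docker fluentd driver sends records with container_id, container_name, source and log fields, so with message_key log each file line contains just the container's original output line.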

Architecture summary

Why not just use Docker's original logs?

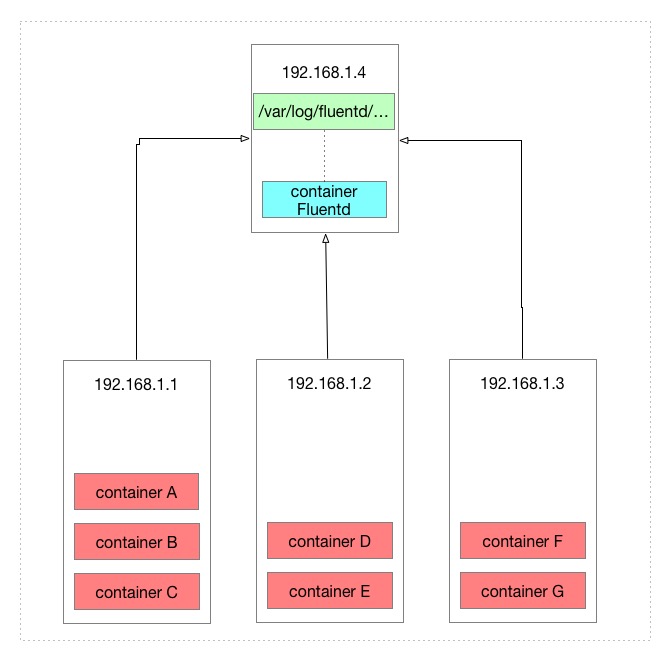

Let's first take a look at the architecture of the original docker log:

Docker writes each container's logs to a local file at /var/lib/docker/containers/containerID/containerID-json.log.

The figure above shows 7 containers in total. If you think of them as 7 microservices, viewing their logs is already very inconvenient: in the worst case, you have to check each container's logs across three machines.

What changes after using fluentd?

With fluentd collecting the Docker logs, the logs of all containers can be aggregated in one place. Here is the architecture after applying the fluentd configuration from this article:

Because fluentd is configured to store logs in a local directory on the machine where it runs, the effect is that container logs from other machines are collected into that machine's local directory.

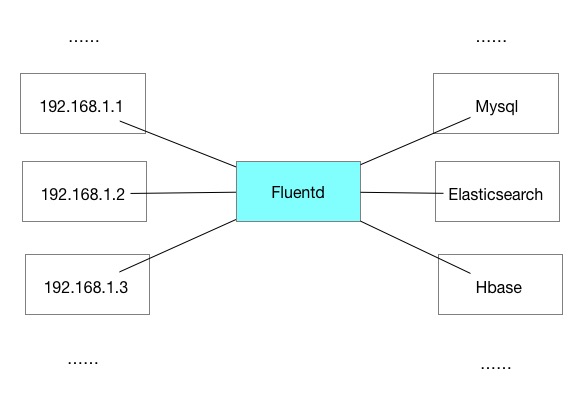
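The containers on other machines reach the central fluentd through the forward input configured on port 24224, which speaks the Fluentd Forward protocol (MessagePack-encoded events). The stdlib-only Python sketch below builds one event in the protocol's Message mode, [tag, time, record], to show what travels over that connection. It is an illustration under stated assumptions: pack() encodes only a small subset of MessagePack, and pack, forward_message and send_event are hypothetical names; in practice you would use the msgpack package or an official fluent-logger library.

```python
import socket
import struct
import time
from typing import Optional


def pack(obj) -> bytes:
    """Encode a small MessagePack subset: None, bool, non-negative int, str, list/tuple, dict."""
    if obj is None:
        return b"\xc0"
    if isinstance(obj, bool):  # must precede the int check: bool is a subclass of int
        return b"\xc3" if obj else b"\xc2"
    if isinstance(obj, int):
        if 0 <= obj < 0x80:
            return struct.pack("B", obj)  # positive fixint
        if 0 <= obj <= 0xFFFFFFFF:
            return b"\xce" + struct.pack(">I", obj)  # uint32
        raise ValueError("integer outside the supported range")
    if isinstance(obj, str):
        data = obj.encode("utf-8")
        n = len(data)
        if n < 32:
            return struct.pack("B", 0xA0 | n) + data  # fixstr
        if n < 0x100:
            return b"\xd9" + struct.pack("B", n) + data  # str8
        if n < 0x10000:
            return b"\xda" + struct.pack(">H", n) + data  # str16
        return b"\xdb" + struct.pack(">I", n) + data  # str32
    if isinstance(obj, (list, tuple)):
        n = len(obj)
        head = struct.pack("B", 0x90 | n) if n < 16 else b"\xdc" + struct.pack(">H", n)
        return head + b"".join(pack(item) for item in obj)
    if isinstance(obj, dict):
        n = len(obj)
        head = struct.pack("B", 0x80 | n) if n < 16 else b"\xde" + struct.pack(">H", n)
        return head + b"".join(pack(k) + pack(v) for k, v in obj.items())
    raise TypeError(f"unsupported type: {type(obj).__name__}")


def forward_message(tag: str, record: dict, event_time: Optional[int] = None) -> bytes:
    """Forward protocol Message mode: [tag, time, record], time as integer seconds."""
    if event_time is None:
        event_time = int(time.time())
    return pack([tag, event_time, record])


def send_event(host: str, port: int, tag: str, record: dict) -> None:
    """Open a TCP connection to a forward input and send a single event."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(forward_message(tag, record))
```

With the stack from this article running, `send_event("localhost", 24224, "kafeidou.manual-test", {"log": "hello from python"})` should be routed by the `kafeidou.*` match and end up in a manual-test file under /var/log/fluentd/kafeidou/.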

Can fluentd only collect container logs locally?

Fluentd can actually forward the collected logs again, for example to storage software such as Elasticsearch.

Fluentd's flexibility

Fluentd can do much more than this. A fluentd instance can act as a forwarding node or as a receiving node. It can also filter specific logs, reformat logs with specific content, and forward the matching logs on again. This article only demonstrates the simple case of collecting Docker container logs.
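Much of this flexibility comes from tag-based routing: fluentd compares each event's tag with the `<match>` patterns in the order they appear in the configuration, and the first matching section handles the event. In fluentd's pattern syntax, `*` matches exactly one dot-separated tag part and `**` matches zero or more parts, which is why this article's config sends kafeidou.fluentd-worker to the first `<match>` and everything else to the `**` catch-all. The Python below is a simplified model of that routing, not fluentd's implementation: it ignores `{a,b}` alternation and `*` used inside a part, and the helper names are hypothetical.

```python
from typing import List, Optional


def _match_parts(pattern: List[str], tag: List[str]) -> bool:
    """Match dot-separated pattern parts against tag parts."""
    if not pattern:
        return not tag
    head, rest = pattern[0], pattern[1:]
    if head == "**":
        # "**" may consume zero or more tag parts
        return any(_match_parts(rest, tag[i:]) for i in range(len(tag) + 1))
    if not tag:
        return False
    if head == "*" or head == tag[0]:
        # "*" consumes exactly one part; a literal must equal the part
        return _match_parts(rest, tag[1:])
    return False


def tag_matches(pattern: str, tag: str) -> bool:
    return _match_parts(pattern.split("."), tag.split("."))


def route(tag: str, match_patterns: List[str]) -> Optional[str]:
    """Return the first pattern (in config order) that matches, or None."""
    for pattern in match_patterns:
        if tag_matches(pattern, tag):
            return pattern
    return None


# The two <match> sections of this article's fluentd.conf, in order.
ARTICLE_MATCHES = ["kafeidou.*", "**"]
```

Note that a tag such as kafeidou.web.1 has three parts, so it would skip `kafeidou.*` and fall through to the `**` section, landing under /var/log/fluentd/ instead of /var/log/fluentd/kafeidou/.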

Originally published by Four Coffee Beans. Please credit the source when reposting.

Follow the official account [Four Coffee Beans] to get the latest content.