This article continues the idea from the previous one. In the previous article, we showed how to download a storage account's access logs with a PowerShell script and upload them to Log Analytics automatically, so the logs can be analyzed and queried directly in Log Analytics. Although that method is simple and convenient, it still has to be run by hand. Taking the idea one step further, this time we combine Event Grid with Azure Functions to turn it into an automated solution.

First, a quick introduction to Event Grid and Azure Functions.

EventGrid

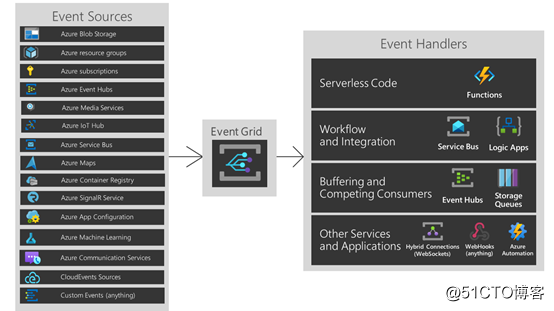

With Azure Event Grid, you can easily build applications on an event-based architecture. First, select the Azure resource you want to subscribe to, then provide an event handler or WebHook endpoint to send the events to. Event Grid has built-in support for events from Azure services such as storage blobs and resource groups, and it also supports your own events through custom topics.

Simply put, it works like a trigger: something happens, an event is raised, and a handler responds to it. This may sound similar to the triggers in Logic Apps, but Event Grid is only an event-routing service, and the downstream processing is done by other products. As the figure above shows, Event Grid sits in the middle, much like a message queue that carries events from their sources to their handlers.

https://docs.microsoft.com/en-us/azure/event-grid/?WT.mc_id=AZ-MVP-5001235

Function

Azure Functions is a serverless solution that lets you write less code, maintain less infrastructure, and save costs. Instead of deploying and maintaining servers yourself, you rely on the cloud infrastructure to provide the up-to-date resources needed to keep your applications running.

Azure Functions is easy to understand, and nearly every cloud now offers something similar. Its closest counterpart is AWS Lambda: a fully managed platform for running code.

https://docs.microsoft.com/zh-cn/azure/azure-functions/functions-overview?WT.mc_id=AZ-MVP-5001235

Putting the pieces above together, the approach becomes clear. Event Grid has built-in support for Blob storage events, so whenever a new blob appears it raises an event automatically, and the downstream processing can be handled by a function. The processing code already exists from the previous article and only needs small changes, so the overall workload is very small.
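For reference, the event that reaches the function is a document in the Event Grid schema. The sketch below builds an abridged `Microsoft.Storage.BlobCreated` event as a PowerShell hashtable; the account, container and blob names are made-up placeholders, and most `data` fields are omitted. It also shows the check a handler can make on `eventType` before touching `subject`. The helper name `Test-IsBlobCreated` is hypothetical.

```powershell
# Abridged Microsoft.Storage.BlobCreated event. All names are placeholders and
# most of the 'data' fields a real event carries are left out.
$sampleEvent = @{
    id              = '00000000-0000-0000-0000-000000000000'
    topic           = '/subscriptions/<sub-id>/resourceGroups/<rg>/providers/Microsoft.Storage/storageAccounts/mystorage'
    subject         = '/blobServices/default/containers/logs-copy/blobs/blob/2024/01/01/0000/000000.log'
    eventType       = 'Microsoft.Storage.BlobCreated'
    eventTime       = '2024-01-01T00:05:00Z'
    dataVersion     = ''
    metadataVersion = '1'
    data            = @{
        api      = 'PutBlob'
        blobType = 'BlockBlob'
        url      = 'https://mystorage.blob.core.windows.net/logs-copy/blob/2024/01/01/0000/000000.log'
    }
}

# Hypothetical guard: only react to newly created blobs and ignore other event types.
function Test-IsBlobCreated($evt) {
    return $evt.eventType -eq 'Microsoft.Storage.BlobCreated'
}

if (Test-IsBlobCreated $sampleEvent) {
    Write-Output ("New blob event: {0}" -f $sampleEvent.subject)
}
```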

There is a catch, however. Storage account logs are written to the $logs container, and on the Azure side this container does not raise Event Grid events, which makes things a bit awkward. Our workaround is to create another function that periodically uses azcopy to sync the logs from $logs into another container. It takes only one line of code, so we won't cover it in detail here.
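Since the sync step is not shown, here is one way it could look, as a sketch under stated assumptions: azcopy v10 is on the PATH of the function host, SAS tokens for the source and destination containers are stored in app settings, and the names `STORAGE_ACCOUNT_NAME`, `LOG_SAS_SOURCE`, `LOG_SAS_DEST` and `logs-copy` are all hypothetical. One PowerShell pitfall is worth calling out. Inside double quotes, `$logs` is expanded as a variable, so the container name has to be kept literal, for example in single quotes.

```powershell
# Sketch: copy Storage Analytics logs from $logs into a container that does raise
# Event Grid events. Assumptions: azcopy v10 is on the PATH; STORAGE_ACCOUNT_NAME,
# LOG_SAS_SOURCE and LOG_SAS_DEST are hypothetical app settings; 'logs-copy' is a
# hypothetical destination container. A timer-triggered run.ps1 would start with param($Timer).

function Get-ContainerUrl([string]$Account, [string]$Container, [string]$Sas) {
    # The single-quoted -f format string keeps '$logs' literal; inside double quotes
    # PowerShell would expand $logs as a (usually empty) variable.
    return 'https://{0}.blob.core.windows.net/{1}?{2}' -f $Account, $Container, $Sas.TrimStart('?')
}

function Get-LogSyncArguments([string]$Account, [string]$SourceSas, [string]$DestSas, [string]$DestContainer = 'logs-copy') {
    $source = Get-ContainerUrl -Account $Account -Container '$logs' -Sas $SourceSas
    $dest   = Get-ContainerUrl -Account $Account -Container $DestContainer -Sas $DestSas
    # azcopy sync only transfers blobs that are missing from the destination or newer at the source.
    return @('sync', $source, $dest, '--recursive')
}

# Run only when azcopy and the (hypothetical) settings are actually present.
if ((Get-Command azcopy -ErrorAction SilentlyContinue) -and $env:STORAGE_ACCOUNT_NAME -and $env:LOG_SAS_SOURCE -and $env:LOG_SAS_DEST) {
    $azcopyArgs = Get-LogSyncArguments -Account $env:STORAGE_ACCOUNT_NAME -SourceSas $env:LOG_SAS_SOURCE -DestSas $env:LOG_SAS_DEST
    & azcopy @azcopyArgs
}
```

Splitting URL construction out of the invocation keeps the quoting logic testable without azcopy installed.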

Implementation steps

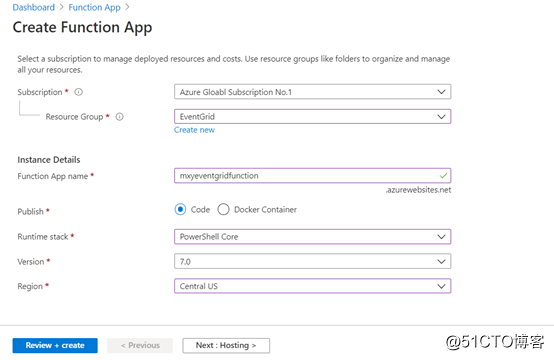

Now let's go through the implementation steps. First, create a function app. The relationship between a function app and a function is simple: the function app is the platform the functions run on, your code runs on that platform, and one function app can contain many functions.

Create Function App

Creating the function app is simple. Choose PowerShell Core as the runtime.

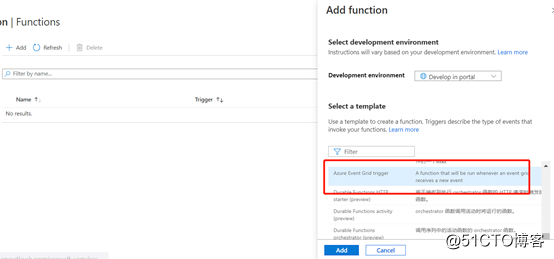

Create Function

Once the function app is created, you can create functions in it. Azure provides a built-in Event Grid trigger template, so just select it when creating the function.
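Behind the scenes, the template's trigger is declared in the function's `function.json`. For the PowerShell Event Grid trigger template, this is a binding of type `eventGridTrigger` named `eventGridEvent`. That name is why the script receives the event through `param($eventGridEvent, $TriggerMetadata)`. The sketch below writes an equivalent file. The folder name `BlobLogToLA` and the temp location are hypothetical, and the portal template already creates this file for you.

```powershell
# Write function.json for an Event Grid-triggered PowerShell function.
# 'BlobLogToLA' is a hypothetical function folder; in a real project it would sit
# inside the function app folder rather than under the temp directory.
$functionDir = Join-Path ([System.IO.Path]::GetTempPath()) 'BlobLogToLA'
New-Item -ItemType Directory -Path $functionDir -Force | Out-Null

$binding = [ordered]@{
    bindings = @(
        [ordered]@{
            type      = 'eventGridTrigger'
            name      = 'eventGridEvent'   # must match the parameter name in run.ps1
            direction = 'in'
        }
    )
}

$functionJsonPath = Join-Path $functionDir 'function.json'
$binding | ConvertTo-Json -Depth 5 | Set-Content -Path $functionJsonPath -Encoding utf8
```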

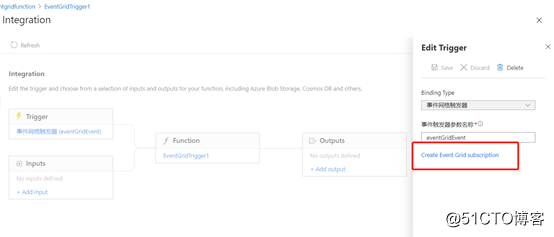

Create Event Grid Subscription

With the function ready, the next step is Event Grid. You can create the Event Grid subscription directly from the function.

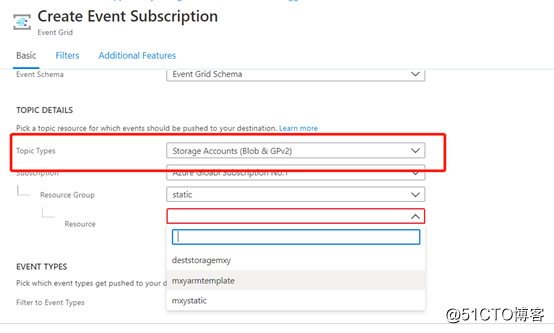

When creating the Event Grid subscription, many topic types are available. Here, choose the storage account type.

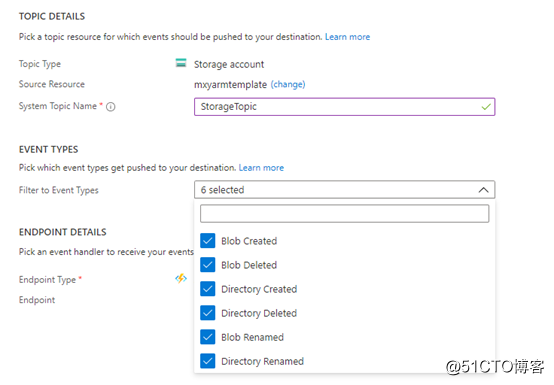

Configure the event types for the storage account

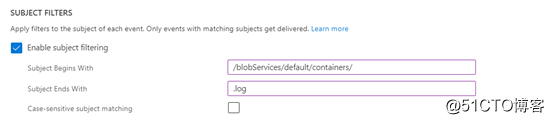

Next, pay attention to the filter. We need to limit the subscription to a specific path so that not every blob in the account triggers an event.
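Event Grid filters storage events on the event `subject`, which for blobs has the form `/blobServices/default/containers/<container>/blobs/<path>`. Setting "Subject begins with" to the copy container's prefix, and optionally "Subject ends with" to `.log`, limits the subscription to the copied log files. The function below only mimics that prefix and suffix matching locally to show which subjects would pass. The real filtering happens inside Event Grid, and the container name `logs-copy` and the helper `Test-SubjectFilter` are hypothetical.

```powershell
# Local illustration of Event Grid's "subject begins with" / "subject ends with" filter.
# 'logs-copy' is a hypothetical destination container name.
$subjectBeginsWith = '/blobServices/default/containers/logs-copy/blobs/'
$subjectEndsWith   = '.log'

function Test-SubjectFilter([string]$Subject, [string]$BeginsWith, [string]$EndsWith) {
    # Event Grid subject filters are case-insensitive unless configured otherwise.
    $cmp = [System.StringComparison]::OrdinalIgnoreCase
    return $Subject.StartsWith($BeginsWith, $cmp) -and $Subject.EndsWith($EndsWith, $cmp)
}

$subjects = @(
    '/blobServices/default/containers/logs-copy/blobs/blob/2024/01/01/0000/000000.log',
    '/blobServices/default/containers/images/blobs/photo.jpg'
)
foreach ($s in $subjects) {
    '{0} -> {1}' -f $s, (Test-SubjectFilter $s $subjectBeginsWith $subjectEndsWith)
}
```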

With the trigger in place, you can write the code that the function runs.

Write Code

The previous code processed the log files in a loop, while Event Grid pushes events one at a time, so the logic needs a small adjustment: each invocation now handles the single blob named in the event.
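Because each invocation handles one event, the handler's first job is to turn the event's subject into a container name and a blob path. The hypothetical helper `Get-BlobLocation` below is a standalone sketch of that step. It locates the fixed `/containers/` and `/blobs/` markers, which keeps blob paths that contain slashes intact and also copes with a container that happens to be named `blobs`. Keeping it separate makes it easy to test before wiring it into the function.

```powershell
# Hypothetical helper: split an Event Grid blob subject into container and blob path.
# Subject format: /blobServices/default/containers/<container>/blobs/<blob path>
function Get-BlobLocation([string]$Subject) {
    $ordinal         = [System.StringComparison]::Ordinal
    $containerMarker = '/containers/'
    $blobMarker      = '/blobs/'

    $c = $Subject.IndexOf($containerMarker, $ordinal)
    if ($c -lt 0) { throw "Unexpected blob event subject: $Subject" }
    $containerStart = $c + $containerMarker.Length

    # Container names cannot contain '/', so the first '/blobs/' after the name ends it.
    $b = $Subject.IndexOf($blobMarker, $containerStart, $ordinal)
    if ($b -lt 0) { throw "Unexpected blob event subject: $Subject" }

    [pscustomobject]@{
        Container = $Subject.Substring($containerStart, $b - $containerStart)
        Blob      = $Subject.Substring($b + $blobMarker.Length)
    }
}

Get-BlobLocation '/blobServices/default/containers/logs-copy/blobs/blob/2024/01/01/0000/000000.log'
```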

param($eventGridEvent, $TriggerMetadata)

# Values the previous article's script took as parameters. Here they are read from
# Function App application settings; the setting names below are hypothetical.
$ResourceGroup      = $env:LOG_RESOURCE_GROUP
$StorageAccountName = $env:LOG_STORAGE_ACCOUNT
$customerId         = $env:LA_WORKSPACE_ID   # Log Analytics workspace ID
$sharedKey          = $env:LA_SHARED_KEY     # Log Analytics workspace key
$logType            = $env:LA_LOG_TYPE       # custom log name; Log Analytics appends _CL
$TimeStampField     = ""                     # empty: TimeGenerated is the ingestion time

# Build the SharedKey authorization header for the HTTP Data Collector API.
Function Build-Signature ($customerId, $sharedKey, $date, $contentLength, $method, $contentType, $resource)
{
    $xHeaders = "x-ms-date:" + $date
    $stringToHash = $method + "`n" + $contentLength + "`n" + $contentType + "`n" + $xHeaders + "`n" + $resource

    $bytesToHash = [Text.Encoding]::UTF8.GetBytes($stringToHash)
    $keyBytes = [Convert]::FromBase64String($sharedKey)

    $sha256 = New-Object System.Security.Cryptography.HMACSHA256
    $sha256.Key = $keyBytes
    $calculatedHash = $sha256.ComputeHash($bytesToHash)
    $encodedHash = [Convert]::ToBase64String($calculatedHash)

    $authorization = 'SharedKey {0}:{1}' -f $customerId, $encodedHash
    return $authorization
}

# POST one JSON payload to the Log Analytics workspace and return the HTTP status code.
Function Post-LogAnalyticsData($customerId, $sharedKey, $body, $logType)
{
    $method = "POST"
    $contentType = "application/json"
    $resource = "/api/logs"
    $rfc1123date = [DateTime]::UtcNow.ToString("r")
    $contentLength = $body.Length   # $body is a byte array, so this is the byte count

    # No blank lines between continued lines: a backtick followed by an empty line ends the command.
    $signature = Build-Signature `
        -customerId $customerId `
        -sharedKey $sharedKey `
        -date $rfc1123date `
        -contentLength $contentLength `
        -method $method `
        -contentType $contentType `
        -resource $resource

    $uri = "https://" + $customerId + ".ods.opinsights.azure.com" + $resource + "?api-version=2016-04-01"

    $headers = @{
        "Authorization"        = $signature
        "Log-Type"             = $logType
        "x-ms-date"            = $rfc1123date
        "time-generated-field" = $TimeStampField
    }

    $response = Invoke-WebRequest -Uri $uri -Method $method -ContentType $contentType -Headers $headers -Body $body -UseBasicParsing
    return $response.StatusCode
}

# Replace ';' inside double-quoted fields with %3B so the line can be split on ';'.
Function ConvertSemicolonToURLEncoding([String] $InputText)
{
    $ReturnText = ""
    $chars = $InputText.ToCharArray()
    $StartConvert = $false
    foreach ($c in $chars)
    {
        if ($c -eq '"') {
            $StartConvert = ! $StartConvert
        }
        if ($StartConvert -eq $true -and $c -eq ';') {
            $ReturnText += "%3B"
        } else {
            $ReturnText += $c
        }
    }
    return $ReturnText
}

# Quote a field value for JSON (unless it is already quoted) and restore encoded semicolons.
Function FormalizeJsonValue($Text)
{
    $Text1 = ""
    if ($Text.IndexOf("`"") -eq 0) { $Text1 = $Text } else { $Text1 = "`"" + $Text + "`"" }
    if ($Text1.IndexOf("%3B") -ge 0) {
        $ReturnText = $Text1.Replace("%3B", ";")
    } else {
        $ReturnText = $Text1
    }
    return $ReturnText
}

# Turn one Storage Analytics log line into a one-element JSON array.
Function ConvertLogLineToJson([String] $logLine)
{
    $logLineEncoded = ConvertSemicolonToURLEncoding($logLine)
    $elements = $logLineEncoded.Split(';')

    $FormattedElements = New-Object System.Collections.ArrayList
    foreach ($element in $elements)
    {
        $NewText = FormalizeJsonValue($element)
        [void]$FormattedElements.Add($NewText)   # discard the index that Add returns
    }

    $Columns = @(
        "version-number", "request-start-time", "operation-type", "request-status",
        "http-status-code", "end-to-end-latency-in-ms", "server-latency-in-ms",
        "authentication-type", "requester-account-name", "owner-account-name",
        "service-type", "request-url", "requested-object-key", "request-id-header",
        "operation-count", "requester-ip-address", "request-version-header",
        "request-header-size", "request-packet-size", "response-header-size",
        "response-packet-size", "request-content-length", "request-md5", "server-md5",
        "etag-identifier", "last-modified-time", "conditions-used", "user-agent-header",
        "referrer-header", "client-request-id"
    )

    $logJson = "[{"
    For ($i = 0; $i -lt $Columns.Length; $i++)
    {
        $logJson += "`"" + $Columns[$i] + "`":" + $FormattedElements[$i]
        if ($i -lt $Columns.Length - 1) {
            $logJson += ","
        }
    }
    $logJson += "}]"
    return $logJson
}

$storageAccount = Get-AzStorageAccount -ResourceGroupName $ResourceGroup -Name $StorageAccountName -ErrorAction SilentlyContinue
if ($null -eq $storageAccount)
{
    throw "The storage account specified does not exist in this subscription."
}
$storageContext = $storageAccount.Context

$successPost = 0
$failedPost = 0

# Event Grid delivers one event per blob; the subject looks like
# /blobServices/default/containers/<container>/blobs/<blob path>
$subject = $eventGridEvent.subject.ToString()
$BlobArray = $subject.Split('/')
$container = $BlobArray[$BlobArray.IndexOf('containers') + 1]
$blobPrefix = "/containers/$container/blobs/"
$BlobIndex = $subject.IndexOf($blobPrefix) + $blobPrefix.Length
$Blob = $subject.Substring($BlobIndex)

Write-Output ("> Downloading blob: {0}" -f $Blob)

# Use a unique file in the temp folder so concurrent invocations do not overwrite each other.
$filename = Join-Path ([System.IO.Path]::GetTempPath()) ("{0}.log" -f [guid]::NewGuid())
Get-AzStorageBlobContent -Context $storageContext -Container $container -Blob $Blob -Destination $filename -Force | Out-Null

Write-Output ("> Posting logs to Log Analytics workspace: {0}" -f $Blob)

$lines = Get-Content $filename
foreach ($line in $lines)
{
    if ([string]::IsNullOrWhiteSpace($line)) { continue }   # skip empty lines
    $json = ConvertLogLineToJson($line)
    $response = Post-LogAnalyticsData -customerId $customerId -sharedKey $sharedKey -body ([System.Text.Encoding]::UTF8.GetBytes($json)) -logType $logType
    if ($response -eq 200) {
        $successPost++
    } else {
        $failedPost++
        Write-Output "> Failed to post one log to Log Analytics workspace"
    }
}

Remove-Item $filename -Force

Write-Output "> Log lines posted to Log Analytics workspace: success = $successPost, failure = $failedPost"
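One possible refinement, not part of the original script: `ConvertLogLineToJson` builds the JSON by string concatenation, so a value containing a backslash or a control character would produce invalid JSON. An alternative is to split the line while respecting quoted fields, collect the values in an ordered hashtable, and let `ConvertTo-Json` handle escaping. The sketch below follows that approach. `Split-StorageLogLine` and `ConvertTo-LogJson` are hypothetical names, and for brevity the usage line passes only the first five column names. In the function you would pass the full `$Columns` list.

```powershell
# Hypothetical alternative: parse a Storage Analytics log line and let ConvertTo-Json escape values.
function Split-StorageLogLine([string]$Line) {
    $fields  = [System.Collections.Generic.List[string]]::new()
    $current = [System.Text.StringBuilder]::new()
    $inQuote = $false
    foreach ($ch in $Line.ToCharArray()) {
        if ($ch -eq '"') {
            $inQuote = -not $inQuote          # quotes delimit a field and are not kept
        } elseif ($ch -eq ';' -and -not $inQuote) {
            $fields.Add($current.ToString())  # a semicolon outside quotes ends a field
            [void]$current.Clear()
        } else {
            [void]$current.Append($ch)
        }
    }
    $fields.Add($current.ToString())
    return ,($fields.ToArray())               # leading comma keeps the array from being unrolled
}

function ConvertTo-LogJson([string]$Line, [string[]]$Columns) {
    $values = Split-StorageLogLine $Line
    $record = [ordered]@{}
    for ($i = 0; $i -lt $Columns.Length -and $i -lt $values.Length; $i++) {
        $record[$Columns[$i]] = $values[$i]
    }
    # -InputObject with an array keeps the [ ... ] wrapper the original payload uses.
    return ConvertTo-Json -InputObject @($record) -Compress
}

$sampleColumns = 'version-number', 'request-start-time', 'operation-type', 'request-status', 'http-status-code'
ConvertTo-LogJson '1.0;2024-01-01T00:00:00.0000000Z;GetBlob;Success;200' $sampleColumns
```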

The last step is to authorize the function app to access the storage account. We won't go into detail on this step. You could also use the storage account key for this, although that approach is not really recommended.

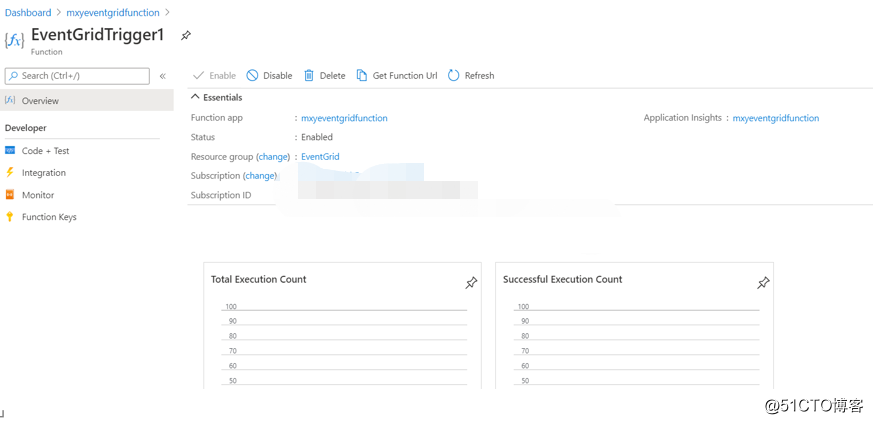

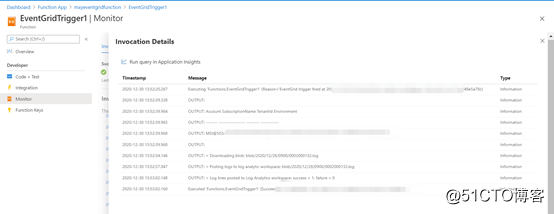

Finally, you can check the result in the function's Monitor page.

To sum up

Overall, the approach has not changed much compared with simply running a PowerShell script, but adding Event Grid and Functions makes the whole solution more flexible. The same idea can be extended to many other tasks, so that problems are viewed and solved in a more cloud-native way.