We have previously discussed which indicators, beyond the number of stars, can be used to evaluate the quality of an open source project. "Quality" here covers not only how good the software itself is, but also the health and sustainability of the project's community. Recently, an industry expert proposed two new indicators for evaluating how well an open source project's community is being built.

Today there are many indicators people can consult for an open source project, including stars, forks, pull requests (PRs), merge requests (MRs), number of contributors, and so on. Kevin Xu, former director of global strategy and operations of PingCAP, believes that these eye-catching indicators are easily skewed by marketing tactics such as inflating stars and forks. Some projects even deliberately split a larger component into smaller parts to increase the number of PRs. Almost any indicator can be abused, which leads to inaccurate, and sometimes seriously distorted, assessments of a project's health and sustainability.

Therefore, Kevin proposed two new indicators, namely:

- The breakdown of PR or MR reviewers

- Leaderboard for community interaction

By continuously tracking, measuring, and optimizing these two indicators, the health of an open source community can be evaluated more objectively over time, helping project managers build a strong, self-sustaining open source community.

Why these two indicators?

Kevin believes that the long-term goal of community building for any open source project is to reach a critical point at which the project can keep developing after its original creator or maintainer steps back. This goal is especially important for commercial open source software (COSS) companies that build a business around open source technology. If an open source project stagnates because its founder leaves, the company commercializing that project could be ruined.

This is a lofty goal, but few projects can achieve it.

Maintainer burnout is a very common problem. A recent example is Salvatore Sanfilippo, the creator of Redis, who shared his struggles as an open source maintainer last year and stepped down as the leader and maintainer of the Redis project earlier this year. In reality, maintainers of projects big and small struggle with similar burnout every day.

Paying attention to these two indicators, especially early in a project's open source journey, can therefore increase its chances of becoming sustainable, because they capture two important factors behind sustainability: ownership and incentives.

Breakdown of PR/MR reviewers = ownership

This indicator tracks who is actively reviewing contributions in the community and is a good proxy for ownership. At the beginning of a project, the creator does most of the review work, but this becomes hard to sustain as the project grows. Community managers can act deliberately in the early stage: recruit promising maintainers and delegate authority appropriately, so that more people have the background knowledge, the confidence, and the welcoming attitude needed to review incoming PRs/MRs. As with the core maintainers Linus Torvalds recruited for Linux, this is essential to the long-term sustainability of the project.
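As a rough illustration of this indicator, the sketch below computes each reviewer's share of all reviews. The record format and the names in `reviews` are made up for the example; in practice the records would come from whatever export of review data the project has available.

```python
from collections import Counter

# Hypothetical review records: (pr_number, reviewer_login). Not real project data.
reviews = [
    (101, "alice"),
    (101, "bob"),
    (102, "alice"),
    (103, "carol"),
    (104, "alice"),
    (105, "bob"),
]


def reviewer_breakdown(records):
    """Return {reviewer: share of all reviews}, largest share first."""
    counts = Counter(reviewer for _, reviewer in records)
    total = sum(counts.values())
    # most_common() yields reviewers ordered by review count, descending.
    return {name: n / total for name, n in counts.most_common()}


breakdown = reviewer_breakdown(reviews)
for name, share in breakdown.items():
    print(f"{name}: {share:.0%}")
```

Tracked over time, a table like this shows whether review work is spreading beyond the project's creators.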

Reviewing contributions also has an element of customer service. If too few maintainers take part in reviews, a PR or MR can sit unattended for two weeks or more. That is a heavy blow to a newcomer who arrived full of enthusiasm, and it hurts the growth of newcomers in the community.

Community interaction leaderboard = incentives
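One simple way to watch for this is to list open PRs that have had no review for two weeks. The sketch below assumes a hypothetical list of PR records with an opening date and a first-review date; the field names are illustrative, not any platform's actual schema.

```python
from datetime import datetime, timedelta

# Hypothetical open PRs; "first_review" is None when nobody has reviewed yet.
open_prs = [
    {"number": 201, "opened": datetime(2020, 5, 1), "first_review": datetime(2020, 5, 2)},
    {"number": 202, "opened": datetime(2020, 5, 3), "first_review": None},
    {"number": 203, "opened": datetime(2020, 5, 20), "first_review": None},
]


def unattended(prs, now, limit=timedelta(days=14)):
    """Return numbers of PRs with no review that have waited at least `limit`."""
    return [
        pr["number"]
        for pr in prs
        if pr["first_review"] is None and now - pr["opened"] >= limit
    ]


stale = unattended(open_prs, now=datetime(2020, 5, 25))
print(stale)  # [202]
```

The two-week threshold is taken from the text above; a project may prefer a shorter one.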

Tracking community-facing interactions, and possibly "gamifying" them with rewards, helps promote positive interactions among community members of all experience levels. Interactions that community managers can track include the number of PRs/MRs submitted, comments, suggestions, and so on. These interactions differ in value and quality, but the bigger goal is to understand who is doing what and who is good at what, and then to deliberately encourage more of that behavior based on people's strengths and interests.

For example, some people may be good at providing useful comments but lack the technical background to review PRs/MRs. It is worth identifying them and giving them more information so that they can grow into maintainers one day. Others may follow the project closely, as their frequent reactions show, but be reluctant to join in with comments and suggestions. If community managers know who these people are and help them gain more background on the inner workings of the project, they can add more value to a community they obviously care about.
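A leaderboard of this kind can be sketched as a per-member count of each interaction type, ranked by total activity. The event list, member names, and interaction kinds below are hypothetical, and ranking by raw totals is only one possible choice; a project could weight kinds differently.

```python
from collections import Counter, defaultdict

# Hypothetical interaction events: (member, kind). Not real project data.
events = [
    ("alice", "pr"), ("alice", "review"), ("alice", "review"),
    ("bob", "comment"), ("bob", "comment"), ("bob", "comment"), ("bob", "pr"),
    ("carol", "reaction"), ("carol", "reaction"),
]


def leaderboard(records):
    """Return [(member, Counter of kinds)] sorted by total interactions, descending."""
    per_member = defaultdict(Counter)
    for member, kind in records:
        per_member[member][kind] += 1
    return sorted(
        per_member.items(),
        key=lambda item: sum(item[1].values()),
        reverse=True,
    )


board = leaderboard(events)
for member, kinds in board:
    print(member, sum(kinds.values()), dict(kinds))
```

Keeping the per-kind counts, rather than only the totals, is what lets a community manager see that one member mostly comments while another mostly reviews.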

How to use these indicators

So how can these indicators be tracked and interpreted? Kevin illustrates with two open source projects:

- Kong, an API gateway

- Apache Pulsar, a pub-sub messaging system

Kevin used Apache Superset, an open source data visualization tool, to chart data from the Kong and Apache Pulsar projects:

PR reviewer indicators

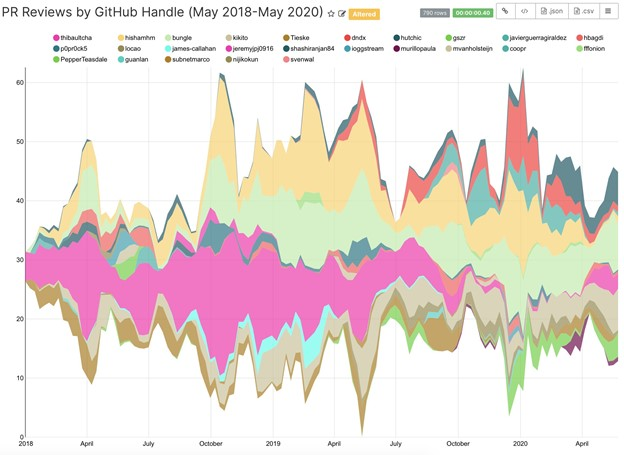

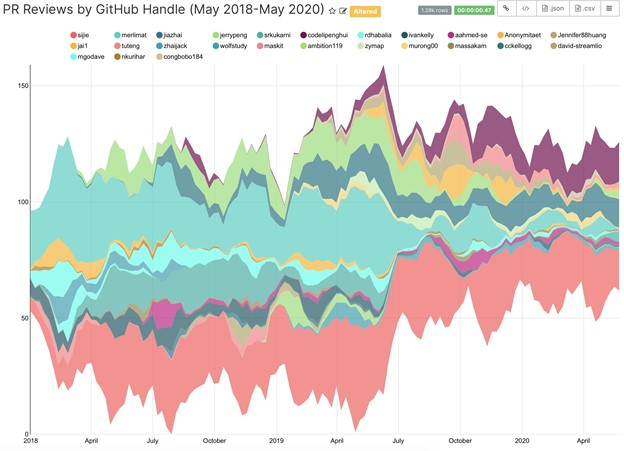

The following two pictures respectively show the PR responses of Kong and Pulsar on GitHub in the past two years. Different colors represent different PR reviewers:

Kong's PR response on GitHub

Pulsar's PR response on GitHub

Based on this data, Kong's reviewer breakdown is more "balanced" than Pulsar's. This balance emerged as the Kong project matured. Most importantly, neither Aghi nor Marco, the creators of Kong, is the maintainer with the most PR reviews, which is a good thing. In other words, as the project matured, enough maintainers emerged in the Kong community to take over part of the founders' code review work, a good sign for the project's sustainability.
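The article judges "balance" by eye from the charts. One possible way to put a number on it, offered here only as an illustration and not as Kevin's method, is to count how few reviewers account for half of all reviews. The review counts below are invented and do not describe Kong or Pulsar.

```python
def reviewers_covering(counts, fraction=0.5):
    """Smallest number of reviewers whose combined reviews reach `fraction` of the total."""
    total = sum(counts.values())
    running = 0
    # Walk reviewers from most to least active, accumulating their reviews.
    for rank, n in enumerate(sorted(counts.values(), reverse=True), start=1):
        running += n
        if running >= fraction * total:
            return rank
    return 0  # no reviews recorded


# Hypothetical review counts per reviewer.
concentrated = {"a": 80, "b": 10, "c": 5, "d": 5}
balanced = {"a": 30, "b": 25, "c": 25, "d": 20}

print(reviewers_covering(concentrated))  # 1
print(reviewers_covering(balanced))      # 2
```

A higher number suggests review work is spread across more people; a value of 1 means a single reviewer carries at least half the load.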

Pulsar, a relatively young project, has not yet reached the same level, but it is on its way to this balance. Sijie, Jia, and Penghui do most of the review work. All three are members of the project management committee and lead StreamNative, Pulsar's COSS company. Other major players, including Splunk (especially after it acquired Streamlio), also contribute to the project, which is a good leading indicator of eventual balance.

Note: Kevin deliberately set aside the differences in governance between Apache Software Foundation projects and other projects, which affect how quickly, and under what conditions, a contributor can become a reviewer or maintainer. The comparison therefore does not fully reflect the actual situation, but it does show how a community's governance process shapes its pool of maintainers.

Community interaction indicators

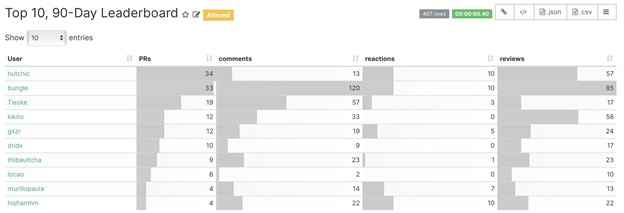

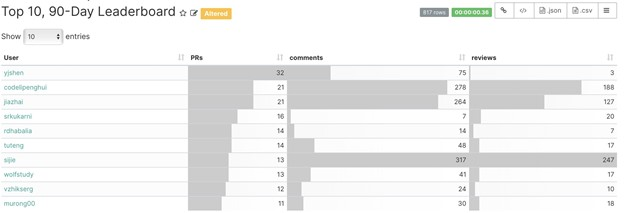

The following are two interactive indicator rankings, showing the community interactions of Kong and Pulsar on GitHub in the 90 days (from March to May 2020) since the most recent data collection, including interactions such as PR, comments, reviews, etc. data.

Kong Interactive Leaderboard Chart

Pulsar Interactive Leaderboard Chart

The interaction leaderboards reflect the same "level of balance" as the previous indicator. At a glance, it is clear who is doing which kind of interaction, how many interactions have occurred, and who seems to be doing it all.

For building the right incentive structure, this leaderboard-style view helps community managers see where interactions come from, so that they can design a reward or incentive system that encourages more of these positive interactions.

This data is useful for both internal management and external community building. Careful observers will notice that many of the leaders on these charts are employees of the projects' COSS companies, Kong Inc. and StreamNative. In practice, it is very common for active community contributors to become employees of the company commercializing the project. Regardless of where contributors are employed, cultivating a sustainable project that outlasts its original creator (which in turn affects the sustainability of the COSS company) requires measuring, tracking, and incentivizing positive interactions.

Finally, no matter how attractive the charts are, data does not tell the whole story. And however successful a project is, its experience should not be turned into a template and applied directly to other projects. Project managers should use these two indicators to see who is contributing, actively maintain project documentation, set clear quality guidelines for contributions, and choose a contribution threshold that suits their project. Striking a good balance between the number and the quality of newcomers is what makes an excellent open source community truly sustainable.

Reference link:

https://opensource.com/article/20/11/open-source-community-sustainability

Further reading

Now that stars can be gamed, how do we judge the quality of an open source project?

Redis author steps down as leader and maintainer of the Redis project