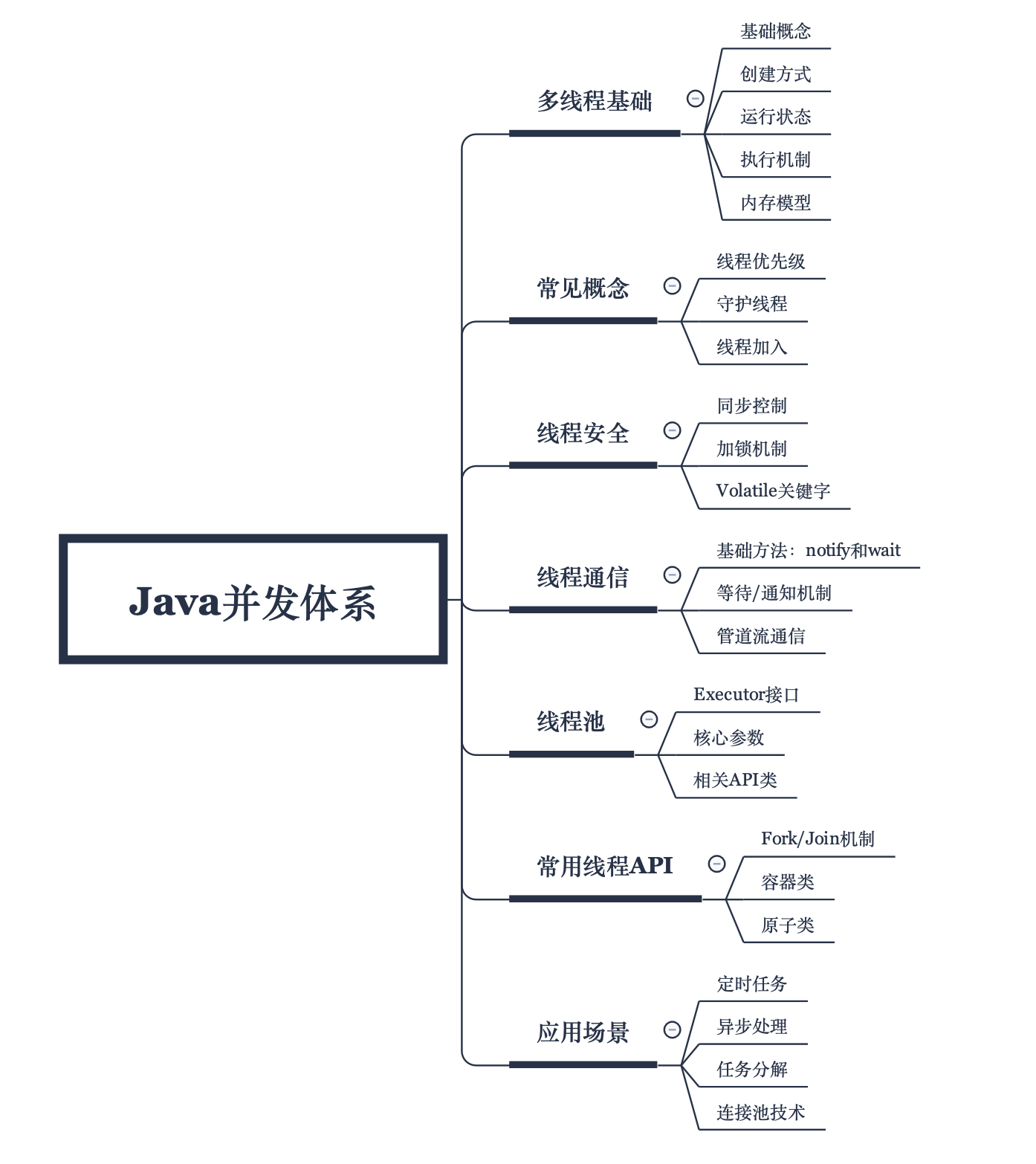

One, multithreading mind map

Two, multithreading basics

1. Basic concepts

A thread is the smallest unit of execution that the operating system can schedule. It is contained within a process and is the actual unit of work in that process. A thread is a single sequential flow of control within a process; a process can run multiple threads concurrently, each executing a different task.

2. Creation methods

Threads can be created by extending the Thread class, implementing the Runnable interface, using the Callable and Future interfaces, using a Timer (which runs its tasks on a background thread), or using a thread pool.
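A minimal sketch of four of the creation styles listed above. The class and method names (CreationDemo, runAll) are illustrative, not from the original article, and the Timer option is left out for brevity:

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.FutureTask;

public class CreationDemo {

    // Way 1: extend Thread and override run()
    static class SubclassThread extends Thread {
        @Override
        public void run() {
            System.out.println("extends Thread: " + getName());
        }
    }

    // Runs the four styles and returns the two computed values so they can be checked
    static int[] runAll() throws InterruptedException, ExecutionException {
        Thread t1 = new SubclassThread();

        // Way 2: implement Runnable (here as a lambda) and pass it to a Thread
        Thread t2 = new Thread(() -> System.out.println("Runnable: " + Thread.currentThread().getName()));

        // Way 3: a Callable returns a value; FutureTask wraps it as both Runnable and Future
        Callable<Integer> sum = () -> 1 + 2;
        FutureTask<Integer> task = new FutureTask<>(sum);
        Thread t3 = new Thread(task);

        t1.start();
        t2.start();
        t3.start();
        t1.join();
        t2.join();
        t3.join();
        int fromFutureTask = task.get(); // result of the Callable

        // Way 4: submit the work to a thread pool instead of creating threads by hand
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            Future<Integer> f = pool.submit(() -> 20 + 22);
            return new int[] {fromFutureTask, f.get()};
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        int[] results = runAll();
        System.out.println(results[0] + " " + results[1]);
    }
}
```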

3. Thread status

State description: initial (NEW), running (RUNNABLE), blocked (BLOCKED), waiting (WAITING), timed waiting (TIMED_WAITING), and terminated (TERMINATED).
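The sketch below drives one thread through three of these states and records what Thread.getState() reports. Using a CountDownLatch to park the thread in WAITING is an assumption chosen for illustration; ThreadStatesDemo and observeStates are hypothetical names:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;

public class ThreadStatesDemo {

    // Records the Thread.State values a worker passes through in a controlled run
    static List<Thread.State> observeStates() throws InterruptedException {
        List<Thread.State> seen = new ArrayList<>();
        CountDownLatch go = new CountDownLatch(1);

        Thread worker = new Thread(() -> {
            try {
                go.await(); // parks the thread (WAITING) until the latch opens
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        seen.add(worker.getState()); // NEW: created but not yet started
        worker.start();

        // Spin until the worker is parked inside await()
        Thread.State s;
        while ((s = worker.getState()) != Thread.State.WAITING) {
            Thread.yield();
        }
        seen.add(s); // WAITING

        go.countDown();
        worker.join();
        seen.add(worker.getState()); // TERMINATED: run() has returned
        return seen;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(observeStates()); // [NEW, WAITING, TERMINATED]
    }
}
```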

4. Execution mechanism

A Java application running in the JVM can be executed by multiple threads in parallel. In the HotSpot JVM, Java threads are mapped one-to-one to threads of the operating system the service runs on, and the operating system schedules them onto the available CPUs. The operating system thread is created when the Java thread is started and is reclaimed when the Java thread terminates.

5. Memory model

When the virtual machine starts and runs, it creates multiple threads. Some areas of the runtime data area are shared by all threads, and some are private to each thread:

Shared by threads: metaspace, heap;

Private to each thread: JVM stack, native method stack, program counter;

A single CPU core can execute only one thread at any given instant, so multiple threads each occupy their own private memory areas alongside the shared ones and continually compete for CPU time slices.

Three, common concepts

1. Thread priority

The thread scheduler tends to favor threads with higher priority. A higher priority means a thread is more likely to obtain CPU time, or to receive more time slices, and therefore more likely to run; it does not mean that lower-priority threads are always executed last.
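A minimal sketch of setting priorities with the standard Thread.setPriority API. The workload and names (PriorityDemo, runWithPriorities) are illustrative; the order in which the two threads finish is not guaranteed, which is exactly the point made above:

```java
public class PriorityDemo {

    // Starts two CPU-bound threads with the lowest and highest priority and returns the
    // priorities that were configured; the finishing order is NOT guaranteed
    static int[] runWithPriorities() throws InterruptedException {
        Runnable work = () -> {
            long sum = 0;
            for (int i = 0; i < 5_000_000; i++) {
                sum += i;
            }
            System.out.println(Thread.currentThread().getName() + " finished (sum=" + sum + ")");
        };

        Thread low = new Thread(work, "low");
        Thread high = new Thread(work, "high");
        low.setPriority(Thread.MIN_PRIORITY);  // 1
        high.setPriority(Thread.MAX_PRIORITY); // 10: a hint to the scheduler, not a guarantee
        int[] configured = {low.getPriority(), high.getPriority()};

        low.start();
        high.start();
        low.join();
        high.join();
        return configured;
    }

    public static void main(String[] args) throws InterruptedException {
        int[] p = runWithPriorities();
        System.out.println("low=" + p[0] + ", high=" + p[1]);
    }
}
```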

2. Daemon thread

A daemon thread is an auxiliary thread that mainly plays a scheduling and supporting role in the program. When all non-daemon threads in the JVM have ended, the JVM exits and the daemon threads end with it.
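A small sketch of marking a thread as daemon. The "heartbeat" loop is a hypothetical background job; the key detail is that setDaemon(true) must be called before start():

```java
public class DaemonDemo {

    // Starts an endless background thread marked as daemon and reports whether the flag took effect
    static boolean startHeartbeat() {
        Thread heartbeat = new Thread(() -> {
            while (true) {
                try {
                    Thread.sleep(100); // pretend to do periodic background work
                } catch (InterruptedException e) {
                    return;
                }
            }
        }, "heartbeat");
        heartbeat.setDaemon(true); // must precede start(), otherwise IllegalThreadStateException
        heartbeat.start();
        return heartbeat.isDaemon();
    }

    public static void main(String[] args) {
        System.out.println("daemon = " + startHeartbeat());
        // main is the last non-daemon thread: when it returns, the JVM exits
        // even though the heartbeat loop never finishes on its own
    }
}
```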

3. Thread join

If thread A calls thread B's join() method, thread A waits until thread B has finished executing and only then continues.
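A sketch of the join behavior just described, where the calling thread plays the role of thread A. JoinDemo and runInOrder are illustrative names, and the sleep only simulates work in B:

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class JoinDemo {

    // The caller (thread A) joins thread B, so B's log entry is always recorded first
    static List<String> runInOrder() throws InterruptedException {
        List<String> log = Collections.synchronizedList(new ArrayList<>());

        Thread b = new Thread(() -> {
            try {
                Thread.sleep(50); // simulate work in B
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            log.add("B finished");
        });

        b.start();
        b.join();             // thread A blocks here until B terminates
        log.add("A resumed");
        return log;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runInOrder()); // [B finished, A resumed]
    }
}
```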

4. Thread-local variables

ThreadLocal, also called a thread-local variable, creates a separate copy of a variable for each thread. Each thread accesses only its own copy, so threads do not affect each other.
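A minimal sketch of per-thread copies with ThreadLocal.withInitial. The counter and thread names are illustrative; each worker increments its own copy a different number of times, and the calling thread's copy stays at its initial value:

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class ThreadLocalDemo {

    // Every thread sees its own copy, lazily initialised to 0
    private static final ThreadLocal<Integer> COUNTER = ThreadLocal.withInitial(() -> 0);

    // Worker i increments its copy (i + 1) times; the copies never interfere
    static Map<String, Integer> run() throws InterruptedException {
        Map<String, Integer> results = new ConcurrentHashMap<>();
        Thread[] workers = new Thread[3];
        for (int i = 0; i < workers.length; i++) {
            final int times = i + 1;
            workers[i] = new Thread(() -> {
                try {
                    for (int k = 0; k < times; k++) {
                        COUNTER.set(COUNTER.get() + 1); // touches only this thread's copy
                    }
                    results.put(Thread.currentThread().getName(), COUNTER.get());
                } finally {
                    COUNTER.remove(); // good habit, especially for pooled threads that get reused
                }
            }, "worker-" + i);
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        results.put("main", COUNTER.get()); // the calling thread's copy is still 0
        COUNTER.remove();
        return results;
    }

    public static void main(String[] args) throws InterruptedException {
        // contains worker-0=1, worker-1=2, worker-2=3, main=0 (print order may vary)
        System.out.println(run());
    }
}
```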


Four, thread safety

Given how threads occupy memory space, as shown in the figure above, thread safety must be ensured whenever threads access a shared memory area.

1. Synchronization control

The synchronized keyword provides synchronization control. It can modify instance methods, static methods, and code blocks. Keeping the synchronized region small, so that only the resources that really need it are locked, improves multithreaded efficiency.
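A sketch showing the three forms of synchronized on a hypothetical counter class (SyncCounter and its methods are illustrative names). The block form locks only the shared write, in line with the advice to keep synchronized regions small:

```java
public class SyncCounter {

    private static int created = 0; // shared by all SyncCounter objects
    private int count = 0;          // shared by all threads using one SyncCounter

    public SyncCounter() {
        recordCreation();
    }

    // Synchronized static method: the lock is the SyncCounter.class object
    private static synchronized void recordCreation() {
        created++;
    }

    public static synchronized int createdCount() {
        return created;
    }

    // Synchronized instance method: the lock is 'this'
    public synchronized void increment() {
        count++;
    }

    // Synchronized block: the local computation runs unlocked, only the shared write is guarded
    public void addSquare(int n) {
        int square = n * n;
        synchronized (this) {
            count += square;
        }
    }

    public synchronized int get() {
        return count;
    }

    // Two threads each increment 10,000 times; with synchronization no update is lost
    static int runTwoThreads() throws InterruptedException {
        SyncCounter c = new SyncCounter();
        Runnable task = () -> {
            for (int i = 0; i < 10_000; i++) {
                c.increment();
            }
        };
        Thread t1 = new Thread(task);
        Thread t2 = new Thread(task);
        t1.start();
        t2.start();
        t1.join();
        t2.join();
        return c.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runTwoThreads()); // 20000
    }
}
```

Removing the synchronized modifier from increment() would make lost updates possible, so the total could then fall below 20000.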

2. Locking mechanism

Lock interface: one of the root interfaces for resource locking in Java concurrent programming; it defines the basic locking methods such as lock(), unlock(), and tryLock().

ReentrantLock class: a reentrant lock that implements the Lock interface. Reentrant means that a thread that already holds the lock of the current instance, for example inside a task method, can acquire it again (such as by entering the task method again) without releasing it first. Characteristic: mutual exclusion, meaning only one thread can enter the guarded task at a time.
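A minimal sketch of reentrancy: outer() takes the lock and calls inner(), which takes the same lock again without blocking. ReentrantDemo and its methods are illustrative names; getHoldCount() and isLocked() are standard ReentrantLock methods:

```java
import java.util.concurrent.locks.ReentrantLock;

public class ReentrantDemo {

    private final ReentrantLock lock = new ReentrantLock();
    private int value = 0;

    // outer() acquires the lock, then calls inner(), which acquires it a second time
    public int outer() {
        lock.lock();
        try {
            value++;
            return inner();
        } finally {
            lock.unlock(); // release in finally so an exception cannot leak the lock
        }
    }

    private int inner() {
        lock.lock(); // does not block: this thread already owns the lock
        try {
            value++;
            return lock.getHoldCount(); // 2: acquired once in outer(), once here
        } finally {
            lock.unlock();
        }
    }

    public boolean isLocked() {
        return lock.isLocked();
    }

    public int value() {
        lock.lock();
        try {
            return value;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) {
        ReentrantDemo d = new ReentrantDemo();
        System.out.println("hold count inside inner(): " + d.outer()); // 2
        System.out.println("still locked afterwards? " + d.isLocked()); // false
    }
}
```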

Condition interface: describes a condition variable associated with a lock and provides more powerful functionality than the Object monitor methods. For example, Condition can implement multiple notifications and selective notification in the wait/notify mechanism, because one lock can create several Condition objects, each with its own set of waiting threads.
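A sketch of selective notification using two Conditions on one lock, in the style of a bounded buffer (BoundedBuffer and sumThrough are illustrative names, and capacity 2 is an arbitrary choice that forces the producer to wait). Producers wait on notFull and consumers on notEmpty, so each signal wakes only the kind of thread that can make progress:

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

public class BoundedBuffer<T> {

    private final ReentrantLock lock = new ReentrantLock();
    // Two condition variables on the same lock
    private final Condition notFull = lock.newCondition();
    private final Condition notEmpty = lock.newCondition();
    private final Deque<T> items = new ArrayDeque<>();
    private final int capacity;

    public BoundedBuffer(int capacity) {
        this.capacity = capacity;
    }

    public void put(T item) throws InterruptedException {
        lock.lock();
        try {
            while (items.size() == capacity) {
                notFull.await();   // releases the lock while waiting
            }
            items.addLast(item);
            notEmpty.signal();     // wake a consumer, not another producer
        } finally {
            lock.unlock();
        }
    }

    public T take() throws InterruptedException {
        lock.lock();
        try {
            while (items.isEmpty()) {
                notEmpty.await();
            }
            T item = items.removeFirst();
            notFull.signal();      // wake a producer
            return item;
        } finally {
            lock.unlock();
        }
    }

    // A producer thread puts 1..n while the calling thread takes and sums them
    static int sumThrough(int n) throws InterruptedException {
        BoundedBuffer<Integer> buf = new BoundedBuffer<>(2);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) {
                    buf.put(i);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        int sum = 0;
        for (int i = 0; i < n; i++) {
            sum += buf.take();
        }
        producer.join();
        return sum;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(sumThrough(10)); // 55
    }
}
```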

3. volatile keyword

volatile can modify member variables but not methods. When a thread reads a volatile variable, it must obtain the value from shared (main) memory, and writes to it must be flushed back to shared memory immediately, which guarantees the variable's visibility to all threads.
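A common use of volatile is a stop flag written by one thread and polled by another. This is a minimal sketch; VolatileFlag, runThenStop, and the 20 ms delay are illustrative assumptions:

```java
public class VolatileFlag {

    // volatile: the worker re-reads the flag from main memory, so it sees main's update.
    // Note that volatile guarantees visibility, not atomicity: count++ on a volatile
    // field from several threads can still lose updates.
    private volatile boolean running = true;
    private long iterations = 0; // written only by the worker thread

    static long runThenStop() throws InterruptedException {
        VolatileFlag f = new VolatileFlag();
        Thread worker = new Thread(() -> {
            while (f.running) {
                f.iterations++;
            }
        });
        worker.start();
        Thread.sleep(20);
        f.running = false; // this write becomes visible to the worker
        worker.join(1000); // bounded wait, just in case
        // join() makes the worker's final iterations value visible here
        return worker.isAlive() ? -1 : f.iterations;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("worker stopped after " + runThenStop() + " iterations");
    }
}
```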

Five, thread communication

A thread is an independent entity, but when several threads process the same business logic, they may contend for resources, which can cause concurrency problems and even deadlock. Coordinating threads therefore requires a communication mechanism.

1. Basic methods

These are basic methods defined at the Object level in Java, so every object has them: notify() wakes up one arbitrarily chosen thread waiting on the object, which ends its wait and returns once it reacquires the lock; wait() puts the current thread into the waiting state and releases the object's lock, so the thread no longer competes for it; a timeout for the wait can also be set.

2. Waiting/notification mechanism

Waiting/notification mechanism: when its execution condition is not yet satisfied, thread A calls an object's wait() method and enters the waiting state. Thread B changes the execution condition of thread A and then calls the same object's notify() or notifyAll() method. After receiving the notification, thread A returns from the wait state and performs its subsequent operations. Both threads must hold the object's lock (be inside a synchronized block on that object) when calling these methods. Through the wait()/notify()/notifyAll() methods provided by the object, the two threads complete the interaction between waiting and notification, which improves the scalability of the program.
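The interaction described above can be sketched as follows. The monitor object, the ready flag, and the class and method names are illustrative; the while loop around wait() guards against spurious wakeups:

```java
public class WaitNotifyDemo {

    private final Object monitor = new Object();
    private boolean ready = false; // the "execution condition" for thread A
    private String message;

    // Thread A: waits until the condition holds
    String awaitMessage() throws InterruptedException {
        synchronized (monitor) {
            while (!ready) {        // re-check after every wakeup
                monitor.wait();     // releases the monitor and enters the waiting state
            }
            return message;
        }
    }

    // Thread B: changes the condition, then notifies
    void publish(String msg) {
        synchronized (monitor) {
            message = msg;
            ready = true;
            monitor.notifyAll();    // waiters must re-acquire the monitor before wait() returns
        }
    }

    static String demo() throws InterruptedException {
        WaitNotifyDemo d = new WaitNotifyDemo();
        String[] received = new String[1];
        Thread a = new Thread(() -> {
            try {
                received[0] = d.awaitMessage();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread b = new Thread(() -> d.publish("condition met"));
        a.start();
        b.start();
        a.join();
        b.join();
        return received[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo()); // condition met
    }
}
```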

3. Piped stream communication

Piped streams are mainly used to transfer data directly between threads. One thread writes data into the output pipe (for example PipedOutputStream), and another thread reads it from the connected input pipe (PipedInputStream), thereby realizing communication between the threads.
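A sketch using the JDK's PipedOutputStream/PipedInputStream pair: a writer thread sends bytes and the calling thread reads them. PipeDemo and transfer are illustrative names, and the 64-byte read buffer is an arbitrary choice:

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.PipedInputStream;
import java.io.PipedOutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

public class PipeDemo {

    // Sends 'text' from a writer thread to the calling thread through a connected pipe pair
    static String transfer(String text) throws IOException, InterruptedException {
        PipedOutputStream out = new PipedOutputStream();
        PipedInputStream in = new PipedInputStream(out); // connects the two ends

        // Writer thread: writes into the output end, then closes it to signal end-of-stream
        Thread writer = new Thread(() -> {
            try (PipedOutputStream o = out) {
                o.write(text.getBytes(StandardCharsets.UTF_8));
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        });
        writer.start();

        // Reader (calling thread): read() blocks until data arrives and returns -1 after close
        ByteArrayOutputStream received = new ByteArrayOutputStream();
        try (PipedInputStream i = in) {
            byte[] buf = new byte[64];
            int n;
            while ((n = i.read(buf)) != -1) {
                received.write(buf, 0, n);
            }
        }
        writer.join();
        return new String(received.toByteArray(), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws Exception {
        System.out.println(transfer("hello from the writer thread"));
    }
}
```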

Six, thread pool

1. Executor interface

In the Executor framework, task submission and task execution are decoupled. Executor has a variety of powerful implementation classes that provide convenient ways to submit tasks and obtain their results. They encapsulate the task execution process, so there is no longer any need to create threads explicitly with new Thread(...).start() and run the associated tasks on them.
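A minimal sketch of the submit/retrieve split using ExecutorService (ExecutorDemo, squares, and the pool size of 4 are illustrative). No thread is created explicitly; the pool decides which worker runs each task:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ExecutorDemo {

    // Submits one Callable per number and collects the results in submission order
    static List<Integer> squares(int n) throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (int i = 1; i <= n; i++) {
                final int x = i;
                futures.add(pool.submit(() -> x * x)); // submission: no new Thread().start()
            }
            List<Integer> results = new ArrayList<>();
            for (Future<Integer> f : futures) {
                results.add(f.get()); // retrieval: blocks until that task finishes
            }
            return results;
        } finally {
            pool.shutdown(); // stop accepting new tasks; submitted ones still complete
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(squares(4)); // [1, 4, 9, 16]
    }
}
```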

2. Core parameters
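The core parameters of a thread pool correspond to the arguments of the full ThreadPoolExecutor constructor. The sketch below annotates each one; the concrete values (2, 4, 30 seconds, a queue of 100) are illustrative assumptions, not recommendations:

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class PoolConfig {

    // Illustrative values only; size a real pool for its own workload
    static ThreadPoolExecutor build() {
        return new ThreadPoolExecutor(
                2,                                  // corePoolSize: threads kept even when idle
                4,                                  // maximumPoolSize: extra threads are added only when the queue is full
                30, TimeUnit.SECONDS,               // keepAliveTime + unit: how long extra idle threads survive
                new ArrayBlockingQueue<>(100),      // workQueue: holds tasks waiting for a free thread
                Executors.defaultThreadFactory(),   // threadFactory: creates the worker threads
                new ThreadPoolExecutor.CallerRunsPolicy()); // handler: used when threads and queue are exhausted
    }

    public static void main(String[] args) throws InterruptedException {
        ThreadPoolExecutor pool = build();
        pool.execute(() -> System.out.println("running on " + Thread.currentThread().getName()));
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
    }
}
```

CallerRunsPolicy is one of several built-in rejection handlers; it runs a rejected task on the submitting thread, which naturally slows down submission when the pool is saturated.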

3. Related API classes

Thread pool tasks: the core interfaces Runnable and Callable, and their implementation classes;

Task results: the Future interface and its implementation class FutureTask;

Task execution: the core interfaces Executor and ExecutorService. In the Executor framework, two core classes implement the ExecutorService interface: ThreadPoolExecutor and ScheduledThreadPoolExecutor.

Seven, common thread API

1. Fork/Join mechanism

The Fork/Join framework is used to execute tasks in parallel. The core idea is to divide a large task into multiple small tasks, then combine the results of the small tasks to obtain the final result of the large task. Core process: split the task, execute the subtasks asynchronously, and merge the individual results.
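A sketch of the split / execute / merge process with RecursiveTask, summing an array. SumTask, parallelSum, and the threshold of 1,000 elements are illustrative assumptions:

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

public class SumTask extends RecursiveTask<Long> {

    private static final int THRESHOLD = 1_000; // below this size, compute directly (assumed value)
    private final long[] data;
    private final int from; // inclusive
    private final int to;   // exclusive

    SumTask(long[] data, int from, int to) {
        this.data = data;
        this.from = from;
        this.to = to;
    }

    @Override
    protected Long compute() {
        if (to - from <= THRESHOLD) {
            long sum = 0;
            for (int i = from; i < to; i++) {
                sum += data[i];
            }
            return sum;
        }
        int mid = (from + to) >>> 1;
        SumTask left = new SumTask(data, from, mid);   // split
        SumTask right = new SumTask(data, mid, to);
        left.fork();                                   // run the left half asynchronously
        long rightSum = right.compute();               // work on the right half in this thread
        return left.join() + rightSum;                 // merge the partial results
    }

    static long parallelSum(long[] data) {
        return ForkJoinPool.commonPool().invoke(new SumTask(data, 0, data.length));
    }

    public static void main(String[] args) {
        long[] data = new long[10_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        System.out.println(parallelSum(data)); // 50005000
    }
}
```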

2. Concurrent containers

ConcurrentHashMap: in JDK 7 and earlier, it uses a segment lock mechanism: the data in the container is divided into segments, and each segment has its own lock. While one thread holds the lock to access one segment, other threads can still access the data in the other segments, which balances thread safety with execution efficiency. Since JDK 8, segment locks have been replaced by CAS operations plus locking of individual hash buckets, which applies the same idea at a finer granularity.
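A sketch of several threads updating one ConcurrentHashMap without external locking. merge() performs the read-modify-write for a key atomically; WordCount and count are illustrative names:

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class WordCount {

    // Each word is counted by a pool task; merge() makes each per-key update atomic
    static Map<String, Integer> count(String[] words, int threads) throws InterruptedException {
        ConcurrentHashMap<String, Integer> counts = new ConcurrentHashMap<>();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (String w : words) {
            pool.execute(() -> counts.merge(w, 1, Integer::sum));
        }
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
        return counts;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(count(new String[] {"a", "b", "a", "c", "a"}, 3)); // a=3, b=1, c=1
    }
}
```

A plain HashMap with counts.put(w, counts.get(w) + 1) from several threads could lose updates or corrupt its internal structure.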

ConcurrentLinkedQueue: an unbounded, thread-safe queue based on linked nodes. Elements are ordered FIFO: the head of the queue is the element that has been in the queue the longest, and the tail is the element that has been in it the shortest time. New elements are added at the tail, and retrieval operations take elements from the head.
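A sketch of ConcurrentLinkedQueue's FIFO order and of concurrent producers sharing it without locks. QueueDemo and its method names are illustrative:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ConcurrentLinkedQueue;

public class QueueDemo {

    // offer() appends at the tail, poll() removes from the head (null when empty, never blocks)
    static List<String> fifoOrder() {
        ConcurrentLinkedQueue<String> queue = new ConcurrentLinkedQueue<>();
        queue.offer("first");
        queue.offer("second");
        queue.offer("third");

        List<String> out = new ArrayList<>();
        String head;
        while ((head = queue.poll()) != null) {
            out.add(head);
        }
        return out;
    }

    // Several threads offer concurrently; no external locking is needed and nothing is lost
    static int concurrentOffers(int threads, int perThread) throws InterruptedException {
        ConcurrentLinkedQueue<Integer> queue = new ConcurrentLinkedQueue<>();
        Thread[] ts = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            ts[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    queue.offer(i);
                }
            });
            ts[t].start();
        }
        for (Thread t : ts) {
            t.join();
        }
        return queue.size(); // size() walks the whole queue (O(n)); fine once writers are done
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(fifoOrder());             // [first, second, third]
        System.out.println(concurrentOffers(4, 1000)); // 4000
    }
}
```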

3. Atomic classes

The JDK ships atomic operation classes (in java.util.concurrent.atomic) that handle simultaneous updates of a variable by multiple threads without locking. They cover basic types, array types, reference types, and field updaters.
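A sketch covering three of the categories: a basic type (AtomicInteger), an array type (AtomicIntegerArray), and a reference type (AtomicReference). Field updaters are left out for brevity, and AtomicDemo and its methods are illustrative names:

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicIntegerArray;
import java.util.concurrent.atomic.AtomicReference;

public class AtomicDemo {

    // Basic type: two threads increment one counter without any lock
    static int concurrentIncrements() throws InterruptedException {
        AtomicInteger counter = new AtomicInteger();
        Runnable task = () -> {
            for (int i = 0; i < 10_000; i++) {
                counter.incrementAndGet(); // atomic read-modify-write
            }
        };
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        a.join();
        b.join();
        return counter.get(); // always 20000
    }

    // Array and reference types
    static String otherKinds() {
        AtomicIntegerArray slots = new AtomicIntegerArray(3);
        slots.addAndGet(1, 5);                                   // atomically add 5 to slot 1
        AtomicReference<String> ref = new AtomicReference<>("v1");
        boolean swapped = ref.compareAndSet("v1", "v2");         // succeeds only if still "v1"
        return slots.get(1) + " " + swapped + " " + ref.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(concurrentIncrements()); // 20000
        System.out.println(otherKinds());           // 5 true v2
    }
}
```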

Eight, application scenarios

1. Timed tasks

Through configuration, some programs are executed at a specified point in time or at a fixed interval. Executing these tasks relies on multithreading.
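A minimal sketch of a periodic task using ScheduledExecutorService, the JDK's pool-based scheduler. The 50 ms period and the stop-after-N-runs logic are illustrative assumptions; production schedulers usually add cron-style configuration on top:

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class ScheduledDemo {

    // Fires a task every 50 ms until it has run at least 'times' times, then stops the scheduler
    static int runPeriodic(int times) throws InterruptedException {
        ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(1);
        AtomicInteger runs = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(times);
        scheduler.scheduleAtFixedRate(() -> {
            runs.incrementAndGet();
            done.countDown();
            System.out.println("tick " + runs.get());
        }, 0, 50, TimeUnit.MILLISECONDS); // initial delay 0, then every 50 ms
        done.await();
        scheduler.shutdownNow();
        return runs.get(); // may exceed 'times' if one more tick slipped in before shutdown
    }

    public static void main(String[] args) throws InterruptedException {
        runPeriodic(3);
    }
}
```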

2. Asynchronous processing

Asynchronous processing means the program does not wait for each operation to finish in the current synchronous flow before moving on; it is the opposite of synchronous processing. Asynchrony is also implemented with multiple threads or multiple processes, and it improves program efficiency.
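A sketch of asynchronous processing with CompletableFuture. slowLookup is a hypothetical stand-in for a slow call (for example a remote request), and AsyncDemo and handle are illustrative names:

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class AsyncDemo {

    // Hypothetical slow operation standing in for a remote call
    static String slowLookup(String key) {
        try {
            Thread.sleep(100);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return key.toUpperCase();
    }

    static String handle(String key) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            // Start the slow work on a pool thread; this call returns immediately
            CompletableFuture<String> future = CompletableFuture
                    .supplyAsync(() -> slowLookup(key), pool)
                    .thenApply(v -> "result:" + v); // continuation runs once the value is ready

            // ... the calling thread is free to do other work here ...

            return future.join(); // wait only where the result is actually needed
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(handle("abc")); // result:ABC
    }
}
```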

3. Task decomposition

This is a common operation in distributed databases. Data is distributed across copies in different databases, so when a query is performed, each service runs its own query task, and the data is finally merged on one service or aggregated by an intermediate engine layer. Similarly, in large-scale scheduled jobs, the tasks to be processed are often split into shards according to a specific strategy and processed by multiple threads at the same time.
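A single-process sketch of the shard / query / merge pattern. The in-memory list stands in for data spread over several databases, and queryShard, countAbove, and ShardedQuery are hypothetical names; ExecutorService.invokeAll runs all shard tasks and waits for every one:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ShardedQuery {

    // Hypothetical per-shard query: counts values above a threshold in one slice
    static int queryShard(List<Integer> shard, int threshold) {
        int hits = 0;
        for (int v : shard) {
            if (v > threshold) {
                hits++;
            }
        }
        return hits;
    }

    // Split the data into slices, query each slice on its own thread, then merge the counts
    static int countAbove(List<Integer> data, int threshold, int shards)
            throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(shards);
        try {
            List<Callable<Integer>> tasks = new ArrayList<>();
            int size = (data.size() + shards - 1) / shards; // ceiling division
            for (int start = 0; start < data.size(); start += size) {
                List<Integer> slice = data.subList(start, Math.min(start + size, data.size()));
                tasks.add(() -> queryShard(slice, threshold));
            }
            int total = 0;
            for (Future<Integer> f : pool.invokeAll(tasks)) {
                total += f.get(); // merge step
            }
            return total;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        List<Integer> data = new ArrayList<>();
        for (int i = 1; i <= 100; i++) {
            data.add(i);
        }
        System.out.println(countAbove(data, 50, 4)); // 50
    }
}
```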

4. Connection pool technology

Connection pooling is the technique of creating and managing a buffer pool of connections that are ready for any thread that needs one. It avoids continuously creating and releasing connections and thus improves program efficiency.
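A deliberately minimal pool built on a BlockingQueue, to show the borrow/release idea. SimplePool is a hypothetical class and Strings stand in for real connection objects; production pools also validate, time out, and replace broken connections:

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

public class SimplePool<C> {

    private final BlockingQueue<C> idle;

    // Creates all connections up front so callers never pay the creation cost
    public SimplePool(int size, Supplier<C> factory) {
        idle = new ArrayBlockingQueue<>(size);
        for (int i = 0; i < size; i++) {
            idle.add(factory.get());
        }
    }

    // Borrow: waits up to the timeout for a free connection; returns null if none frees up
    public C borrow(long timeoutMs) throws InterruptedException {
        return idle.poll(timeoutMs, TimeUnit.MILLISECONDS);
    }

    // Release: hands the connection back for the next thread instead of closing it
    public void release(C conn) {
        idle.offer(conn);
    }

    public int available() {
        return idle.size();
    }

    public static void main(String[] args) throws InterruptedException {
        AtomicInteger ids = new AtomicInteger();
        SimplePool<String> pool = new SimplePool<>(2, () -> "conn-" + ids.incrementAndGet());
        String c = pool.borrow(100);
        try {
            System.out.println("using " + c);
        } finally {
            pool.release(c); // always return the connection
        }
        System.out.println("available: " + pool.available()); // 2
    }
}
```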

Original link: https://www.cnblogs.com/cicada-smile/p/13763564.html
