Locks and the CAS mechanism

(1) The cost of locks and the advantages of lock-free programming

Locking is the simplest way to handle concurrency, but it is also the most expensive. Kernel-mode locks require the operating system to perform context switches: acquiring and releasing a contended lock causes additional context switches and scheduling delays, and threads waiting for the lock are suspended until it is released. A context switch also invalidates the instructions and data the CPU had cached, which costs a great deal of performance. Having the operating system arbitrate multi-threaded locking is like two sisters arguing over a toy: the operating system is the parent who decides who gets the toy, and that is very slow. User-mode locks avoid these problems, but they are only effective when there is no real contention.

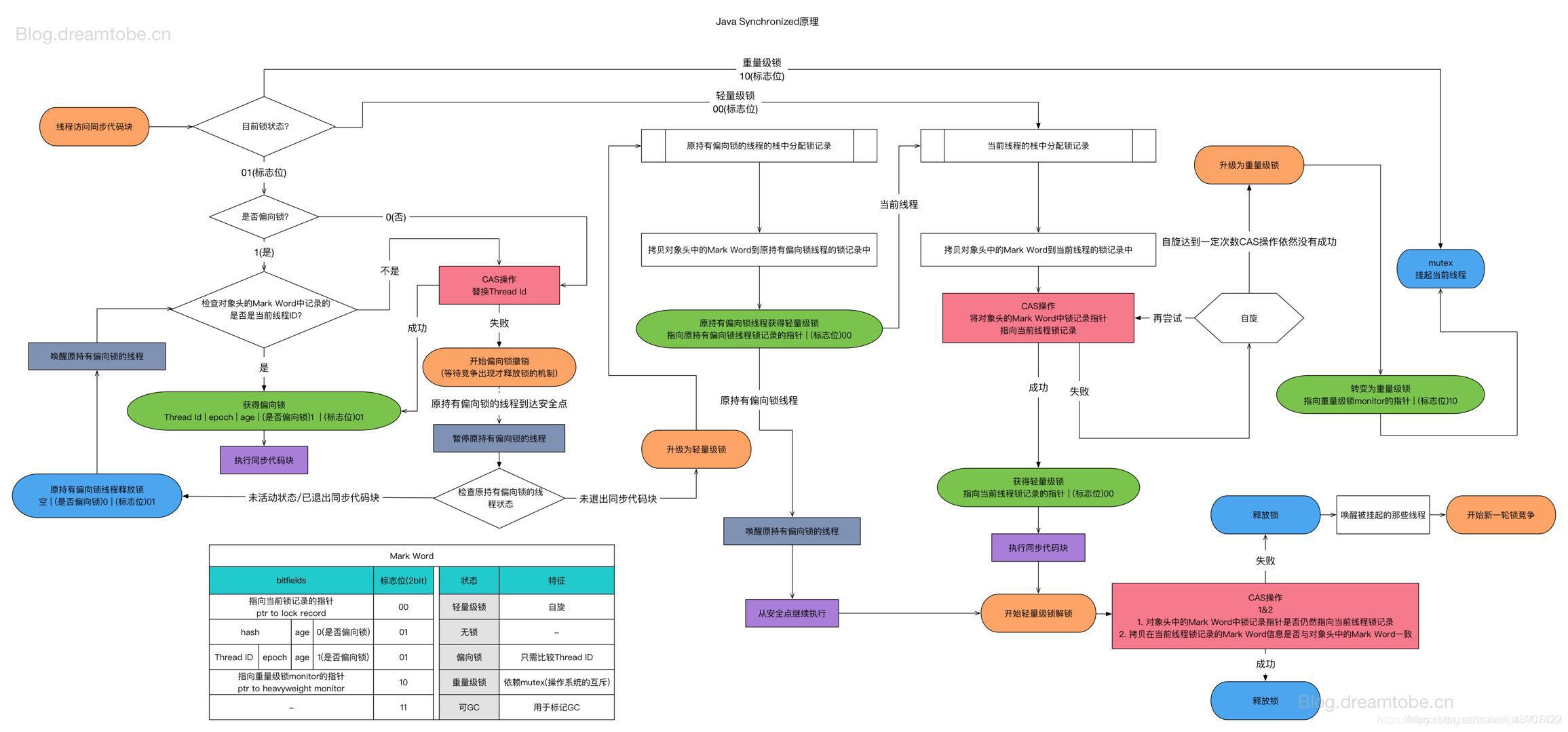

Prior to JDK 1.5, synchronization was achieved with the synchronized keyword. By using a consistent locking protocol to coordinate access to shared state, it ensures that whichever thread holds the lock guarding a set of variables accesses those variables exclusively. If multiple threads contend for the lock at the same time, some of them are suspended; when a suspended thread resumes, it must wait for other threads to finish their time slices before it can be scheduled again. Suspending and resuming threads carries a lot of overhead. Locks have other drawbacks as well: a thread waiting for a lock cannot do anything else; if the thread holding a lock is delayed, every thread that needs that lock is stalled; and if a high-priority thread is blocked on a lock held by a low-priority thread, priority inversion occurs. The following figure shows the complex process of synchronized:

CAS can address these drawbacks, so what is CAS? In concurrency control, locking is a pessimistic strategy that blocks thread execution, whereas lock-free is an optimistic strategy. It assumes that accessing a resource will not cause a conflict, so there is no need to wait and threads do not need to block. How, then, can multiple threads access a critical section together? The lock-free strategy uses a technique called compare and swap (CAS) to detect conflicts between threads. Once a conflict is detected, the current operation is retried until it succeeds without conflict. Compared with locks, CAS makes program design more complicated, but it is inherently immune to deadlock (there are no locks at all, so no thread can be blocked forever). More importantly, lock-free code incurs neither the overhead of lock contention nor the overhead of frequent scheduling between threads. It therefore often outperforms the lock-based approach, which is why it is now widely used.
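The following is a minimal Java sketch of the same counter written both ways. One version is guarded by synchronized (pessimistic), and the other is built on AtomicInteger (lock-free, CAS-based). The class and method names are hypothetical and chosen only for illustration. The sketch shows that both approaches produce a correct count; it is not a benchmark.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical comparison: the same counter, lock-based and CAS-based.
class LockVsCasCounter {

    // Pessimistic: every increment acquires the object's monitor.
    static final class SyncCounter {
        private int value;
        synchronized void increment() { value++; }
        synchronized int get() { return value; }
    }

    // Optimistic: incrementAndGet relies on CAS internally; no monitor is taken.
    static final class CasCounter {
        private final AtomicInteger value = new AtomicInteger();
        void increment() { value.incrementAndGet(); }
        int get() { return value.get(); }
    }

    // Starts `threads` threads, each running `task` `perThread` times, and waits for all of them.
    static void runConcurrently(int threads, int perThread, Runnable task) {
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) task.run();
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    static int countWithLock(int threads, int perThread) {
        SyncCounter c = new SyncCounter();
        runConcurrently(threads, perThread, c::increment);
        return c.get();
    }

    static int countWithCas(int threads, int perThread) {
        CasCounter c = new CasCounter();
        runConcurrently(threads, perThread, c::increment);
        return c.get();
    }
}
```

Which version is faster depends on the hardware and on how heavily the threads contend. The point here is that the CAS version never suspends a thread while it waits.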

(2) Optimistic lock and pessimistic lock

Having mentioned pessimistic and optimistic strategies, let's look at what optimistic locking and pessimistic locking are:

Optimistic locking (also called lock-free; it is not actually a lock but an idea): optimistically assume that other threads will not modify the value, and if the value turns out to have been modified, retry until the update succeeds. The CAS (Compare And Swap) mechanism discussed here is a form of optimistic locking.

Pessimistic locking: pessimistically assume that other threads will modify the value. An exclusive lock is a kind of pessimistic lock. Once the lock is taken, the program is guaranteed not to be interfered with by other threads while it runs, so it produces the correct result.
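The two strategies can be compared on a hypothetical bank-account withdrawal. The Accounts class and its methods are illustrative assumptions.

- The pessimistic version takes a ReentrantLock before it reads and writes the balance.
- The optimistic version reads without locking and publishes the result with AtomicInteger.compareAndSet. If another thread changed the balance in between, it retries.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical account balance, updated with a pessimistic and an optimistic strategy.
class Accounts {

    // Pessimistic: take the exclusive lock first; read-check-write cannot be interleaved.
    static final class LockedAccount {
        private final ReentrantLock lock = new ReentrantLock();
        private int balance;

        LockedAccount(int initial) { balance = initial; }

        boolean withdraw(int amount) {
            lock.lock();
            try {
                if (balance < amount) return false; // insufficient funds
                balance -= amount;
                return true;
            } finally {
                lock.unlock();
            }
        }

        int balance() {
            lock.lock();
            try { return balance; } finally { lock.unlock(); }
        }
    }

    // Optimistic: no lock; publish with CAS and retry if someone else got there first.
    static final class CasAccount {
        private final AtomicInteger balance;

        CasAccount(int initial) { balance = new AtomicInteger(initial); }

        boolean withdraw(int amount) {
            while (true) {
                int current = balance.get();
                if (current < amount) return false; // insufficient funds
                if (balance.compareAndSet(current, current - amount)) return true;
                // CAS failed: the balance changed after we read it, so re-read and retry
            }
        }

        int balance() { return balance.get(); }
    }
}
```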

(3) CAS mechanism

CAS stands for compare and swap, and it is a well-known lock-free algorithm. It is also a CPU instruction-level operation that is widely supported by modern CPUs. Because it is a single atomic operation, it is very fast. Moreover, CAS avoids asking the operating system to arbitrate a lock; the whole operation is done directly inside the CPU.

CAS takes three operands:

1. Memory location M (its value is what we want to update)

2. Expected original value E (the value read from memory last time)

3. New value V (the new value that should be written)

The CAS operation proceeds as follows:

1. Read the current value of memory location M and record it as E.
2. Compute the new value V.
3. Compare the current value of M with E.
   - If they are equal, no other thread has modified the value in the meantime, so M is updated to V (the swap). Strictly speaking, this conclusion holds only in the absence of the ABA problem, which is discussed later.
   - If they are not equal, another thread has modified the value of M. No update is made, and the operation starts over from the beginning (spinning).
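These steps map directly onto a retry loop. The sketch below is a hypothetical updateMax helper written against AtomicInteger.compareAndSet. It keeps the names used above: M is the AtomicInteger, E is the value read from it, and V is the newly computed value.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper: the CAS loop from the text with M, E and V made explicit.
class CasSteps {

    // Raises M to `candidate` if candidate is larger.
    // Returns the value this call observed (if no change was needed) or installed.
    static int updateMax(AtomicInteger m, int candidate) {
        while (true) {
            int e = m.get();                   // read the current value of M, call it E
            int v = Math.max(e, candidate);    // compute the new value V from E
            if (v == e) return e;              // nothing to change
            if (m.compareAndSet(e, v)) {       // if M still equals E, write V (swap)
                return v;
            }
            // M was changed by another thread since we read it: spin and start over
        }
    }
}
```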

Here is the CAS algorithm expressed in C:

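    /* Shows the logic of CAS only: written as plain C, these steps are NOT atomic.
       The hardware instruction performs the compare and the write as one atomic step. */
    int cas(long *addr, long old, long new) {
        if (*addr != old)   /* memory no longer holds the expected value */
            return 0;       /* fail: leave memory unchanged */
        *addr = new;        /* memory still holds old: write the new value */
        return 1;           /* success */
    }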

Therefore, when multiple threads use CAS to update the same variable at the same time, only one of them succeeds and the rest fail. A failed thread can keep retrying until it succeeds. Put simply, CAS means: "I think the original value should be this. If it is, update it to the new value; otherwise leave it unchanged and tell me what the value is now."
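A small experiment can illustrate the "one succeeds, the rest fail" behavior:

- Several threads are released at the same moment.
- Each thread attempts compareAndSet(0, id) on a shared AtomicInteger.

OneWinner is a hypothetical test harness. Whatever the scheduling, only one CAS can observe the expected value 0.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical harness: many threads race to CAS the same variable from 0 to their own id.
class OneWinner {

    static int countWinners(int threads) {
        AtomicInteger shared = new AtomicInteger(0);
        AtomicInteger winners = new AtomicInteger(0);
        CountDownLatch start = new CountDownLatch(1);
        Thread[] ts = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            final int id = i + 1;                 // non-zero, so the winner's write is visible
            ts[i] = new Thread(() -> {
                try {
                    start.await();                // release all threads together
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                if (shared.compareAndSet(0, id)) {
                    winners.incrementAndGet();    // this thread's CAS succeeded
                }
                // a losing thread could re-read shared.get() and retry with a new expected value
            });
            ts[i].start();
        }
        start.countDown();
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return winners.get();
    }
}
```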

One might wonder: a CAS operation reads, compares, and then sets the value. With so many steps, could another thread interfere between them? In fact it cannot. In the underlying assembly, CAS is implemented not as three instructions but as a single one: lock cmpxchg on x86. The lock prefix makes the compare and the write atomic even with respect to other processors, so no other thread can slip in during the CAS. This raises another issue, though: CAS depends heavily on the CPU design, because it is essentially a CPU instruction. If the CPU provides no such atomic primitive, CAS cannot be implemented directly in hardware.

Since CAS involves repeated attempts, can it waste a lot of resources? It can, and this is one of the shortcomings of CAS. However, CAS is an optimistic strategy, and its design assumes optimism too: it expects the current operation to succeed with very high probability. From CAS's point of view, failing and retrying (spinning) is a low-probability event.
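To observe the retries, a hand-written CAS increment can count its own failed attempts. This is a minimal sketch, and SpinCounter is a hypothetical name. Treat the failure count only as a rough indicator:

- It varies from run to run and with the number of threads.
- The extra counter itself adds some contention.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical counter whose increment is a hand-written CAS loop that records its spins.
class SpinCounter {
    final AtomicInteger value = new AtomicInteger();
    final AtomicLong failedAttempts = new AtomicLong();

    void increment() {
        while (true) {
            int current = value.get();
            if (value.compareAndSet(current, current + 1)) return;
            failedAttempts.incrementAndGet(); // another thread won this round; spin again
        }
    }

    // Runs `threads` threads doing `perThread` increments each and returns the counter.
    static SpinCounter run(int threads, int perThread) {
        SpinCounter c = new SpinCounter();
        Thread[] ts = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            ts[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) c.increment();
            });
            ts[i].start();
        }
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return c;
    }
}
```

With a single thread there is no contention, so failedAttempts stays at 0. With several threads on many cores it usually grows, which is the spinning cost described above.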

(4) Problems with CAS

- ABA problem: CAS checks whether the old value has changed, which leads to an interesting problem. Suppose a value changes from A to B and then back to A. When CAS checks it, the value appears unchanged (it is still A), but it has in fact been modified. The solution follows the optimistic-locking approach commonly used in databases: add a version number, so that the change path A->B->A becomes 1A->2B->3A. Since Java 1.5, the atomic package has provided AtomicStampedReference, which solves the ABA problem in exactly this way.

- Long spin times: CAS is non-blocking synchronization. The thread is not suspended; instead it spins (which is nothing more than a loop) and tries again. If it spins for a long time, it consumes a lot of CPU. If the JVM can use the pause instruction provided by the processor, efficiency improves somewhat.

- Atomicity for a single shared variable only: CAS can guarantee the atomicity of an operation on one shared variable. When an operation spans several shared variables, CAS cannot. One solution is to combine the shared variables into one object, making them the member variables of a class, and then apply CAS to that object. The atomic package provides AtomicReference to guarantee atomicity when updating an object reference.
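The ABA scenario and the version-number fix can be replayed deterministically in a single thread, with the "other thread" steps written inline. AbaDemo is a hypothetical name, and the sketch contrasts two cases:

- A plain AtomicInteger CAS misses the A->B->A change.
- AtomicStampedReference detects it, because its stamp plays the role of the version number.

AtomicStampedReference compares references with ==, so the sketch reuses the same String object for A.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicStampedReference;

// Hypothetical single-threaded replay of the ABA problem.
class AbaDemo {

    // Plain CAS: A -> B -> A happens between our read and our CAS, yet the CAS succeeds.
    static boolean plainCasSucceedsAfterAba() {
        AtomicInteger ref = new AtomicInteger(1);   // "A" is 1
        int expected = ref.get();                   // we read A
        ref.set(2);                                 // "another thread": A -> B
        ref.set(1);                                 // ... and B -> A
        return ref.compareAndSet(expected, 3);      // true: the change went unnoticed
    }

    // Stamped CAS: every update bumps the stamp (version), so A with a new stamp is detected.
    static boolean stampedCasSucceedsAfterAba() {
        String a = "A", b = "B";
        AtomicStampedReference<String> ref = new AtomicStampedReference<>(a, 1);
        int[] stampHolder = new int[1];
        String expected = ref.get(stampHolder);     // we read (A, stamp 1)
        int expectedStamp = stampHolder[0];
        ref.compareAndSet(a, b, 1, 2);              // "another thread": 1A -> 2B
        ref.compareAndSet(b, a, 2, 3);              // ... and 2B -> 3A
        // same reference, but stamp is now 3, not 1, so this CAS fails
        return ref.compareAndSet(expected, "C", expectedStamp, expectedStamp + 1);
    }
}
```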

As you can see, although CAS has some problems, they continue to be mitigated and solved.
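The remedy for the multi-variable problem is to combine several variables into one object and swap the reference with AtomicReference. The following sketch shows one way this might look; RangeHolder and Range are hypothetical names. The key assumption is that Range is immutable: each update builds a new object, and a single compareAndSet changes both fields together.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical holder for two values (a low/high range) that must change together.
class RangeHolder {

    // Immutable snapshot: a new instance is created for every update.
    static final class Range {
        final int low, high;
        Range(int low, int high) { this.low = low; this.high = high; }
    }

    private final AtomicReference<Range> range = new AtomicReference<>(new Range(0, 0));

    // Widens the range to include x; low and high change in one atomic reference swap.
    void include(int x) {
        while (true) {
            Range current = range.get();
            Range next = new Range(Math.min(current.low, x), Math.max(current.high, x));
            if (range.compareAndSet(current, next)) return;
            // another thread replaced the snapshot; retry against the fresh one
        }
    }

    Range get() { return range.get(); }
}
```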

(5) Some applications of CAS

The JDK contains the java.util.concurrent.atomic package. Its classes are all lock-free operations implemented on top of CAS, and they are all thread-safe. Before JDK 8 the package provided a dozen or so classes; JDK 8 added more, such as LongAdder. They fall into four kinds of atomic update:

- basic types
- arrays
- references
- fields (attributes)

The classes in the atomic package are basically wrappers implemented with Unsafe. Let's pick a classic class to examine, AtomicInteger; many people first encounter CAS through it.
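The following sketch exercises one representative class from each of the four categories. AtomicCategories and Node are hypothetical names; the atomic classes and methods used are the standard java.util.concurrent.atomic API.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicIntegerArray;
import java.util.concurrent.atomic.AtomicIntegerFieldUpdater;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical demo: one class from each category of the atomic package.
class AtomicCategories {

    // A field updater needs a volatile int instance field accessible from the creating class.
    static final class Node {
        volatile int visits;
    }

    private static final AtomicIntegerFieldUpdater<Node> VISITS =
            AtomicIntegerFieldUpdater.newUpdater(Node.class, "visits");

    static String demo() {
        AtomicInteger basic = new AtomicInteger(10);                 // 1. basic type
        basic.addAndGet(5);                                          //    now 15

        AtomicIntegerArray array = new AtomicIntegerArray(3);        // 2. array elements
        array.incrementAndGet(1);                                    //    now [0, 1, 0]

        AtomicReference<String> ref = new AtomicReference<>("old");  // 3. reference
        ref.compareAndSet("old", "new");                             //    same literal, so CAS succeeds

        Node node = new Node();                                      // 4. field of an ordinary object
        VISITS.incrementAndGet(node);                                //    visits is now 1

        return basic.get() + " " + array + " " + ref.get() + " " + node.visits;
    }
}
```

Calling AtomicCategories.demo() returns "15 [0, 1, 0] new 1".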

Let's first look at how AtomicInteger is declared and initialized (JDK 8 source):

    public class AtomicInteger extends Number implements java.io.Serializable {
        private static final long serialVersionUID = 6214790243416807050L;

        // AtomicInteger is clearly built on top of the Unsafe class
        private static final Unsafe unsafe = Unsafe.getUnsafe();

        // Memory offset of the value field within an AtomicInteger object
        private static final long valueOffset;

        static {
            try {
                valueOffset = unsafe.objectFieldOffset
                    (AtomicInteger.class.getDeclaredField("value"));
            } catch (Exception ex) { throw new Error(ex); }
        }

        // AtomicInteger wraps an integer, so the field is an int;
        // volatile guarantees its visibility across threads
        private volatile int value;

        /**
         * Creates a new AtomicInteger with the given initial value.
         *
         * @param initialValue the initial value
         */
        public AtomicInteger(int initialValue) {
            value = initialValue;
        }

        /**
         * Creates a new AtomicInteger with initial value {@code 0}.
         */
        public AtomicInteger() {
        }

        // ... remaining methods omitted
    }

Several points stand out:

- The Unsafe class, which provides pointer-like direct memory access similar to C, is the core of CAS and therefore the core of AtomicInteger.
- valueOffset is the offset of the value field in memory, and Unsafe provides methods that operate on the field at that offset.
- value is declared volatile to guarantee visibility across threads; it is the variable that actually stores the value.

Let's take a look at how the most commonly used getAndIncrement method in AtomicInteger is implemented:

    public final int getAndIncrement() {
        return unsafe.getAndAddInt(this, valueOffset, 1);
    }

It simply calls the getAndAddInt method of Unsafe, so let's jump to that method:

    /**
     * Atomically adds the given value to the current value of a field
     * or array element within the given object <code>o</code>
     * at the given <code>offset</code>.
     *
     * @param o object/array to update the field/element in
     * @param offset field/element offset
     * @param delta the value to add
     * @return the previous value
     * @since 1.8
     */
    public final int getAndAddInt(Object o, long offset, int delta) {
        int v;
        do {
            v = getIntVolatile(o, offset);
        } while (!compareAndSwapInt(o, offset, v, v + delta));
        return v;
    }

    /**
     * Atomically update Java variable to <tt>x</tt> if it is currently
     * holding <tt>expected</tt>.
     * @return <tt>true</tt> if successful
     */
    public final native boolean compareAndSwapInt(Object o, long offset, int expected, int x);

The do-while loop in getAndAddInt is the spin part of CAS: if the swap fails, it keeps trying until it succeeds. The actual compare-and-swap is performed by the native method compareAndSwapInt, which is implemented in C++ inside the HotSpot JVM:

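    UNSAFE_ENTRY(jboolean, Unsafe_CompareAndSwapInt(JNIEnv *env, jobject unsafe, jobject obj, jlong offset, jint e, jint x))
      UnsafeWrapper("Unsafe_CompareAndSwapInt");
      oop p = JNIHandles::resolve(obj);
      // Get the address of the field inside the object
      jint* addr = (jint *) index_oop_from_field_offset_long(p, offset);
      // Invoke the Atomic operation.
      // cmpxchg returns the value it found at addr. If that differs from the expected
      // value e, another thread has modified it and the call fails (returns false);
      // otherwise x has been written. The retry loop lives in the Java caller.
      return (jint)(Atomic::cmpxchg(x, addr, e)) == e;
    UNSAFE_END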

Here we finally see the familiar instruction: cmpxchg. Everything discussed so far now ties together. CAS is essentially a CPU instruction, and every atomic class ultimately calls down to this instruction, layer by layer, to achieve the lock-free operation we need.

Suppose we have new AtomicInteger(0), and thread 1 and thread 2 perform getAndAddInt on it at the same time.

1) Thread 1 reads the value 0 first, and then a thread switch occurs;

2) Thread 2 reads the value 0 and calls Unsafe to compare it with the value in memory, which is also 0. The comparison succeeds, so the +1 update is performed and the current value becomes 1. Another thread switch occurs;

3) Thread 1 resumes and performs its CAS. It expects 0, but memory now holds 1, so it concludes that another thread has modified the value and it cannot update it;

4) Thread 1's CAS fails, so it loops, reads the value again, and retries. Because value is volatile, it reads the latest value, 1. When it performs the CAS again, the expected value equals the value in memory, the update succeeds, and the value becomes 2;

5) During step 4, even if a thread 3 tries to preempt in the middle of the CAS, it cannot succeed, because compareAndSwapInt is an atomic operation.
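The interleaving above can be replayed step by step in a single thread, which makes it deterministic. In this sketch, InterleavingReplay is a hypothetical name. The two "threads" are just local variables holding each thread's expected value, and the public AtomicInteger.compareAndSet stands in for Unsafe.compareAndSwapInt.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical single-threaded replay of steps 1) to 4) above.
class InterleavingReplay {

    static String replay() {
        AtomicInteger value = new AtomicInteger(0);
        StringBuilder log = new StringBuilder();

        int t1Expected = value.get();                                        // 1) thread 1 reads 0, then is switched out
        int t2Expected = value.get();                                        // 2) thread 2 reads 0 ...
        boolean t2 = value.compareAndSet(t2Expected, t2Expected + 1);        //    ... and its CAS 0 -> 1 succeeds
        log.append("t2:").append(t2).append(' ');

        boolean t1First = value.compareAndSet(t1Expected, t1Expected + 1);   // 3) thread 1 expects 0, memory holds 1
        log.append("t1:").append(t1First).append(' ');

        t1Expected = value.get();                                            // 4) thread 1 re-reads the volatile value: 1
        boolean t1Retry = value.compareAndSet(t1Expected, t1Expected + 1);   //    CAS 1 -> 2 succeeds
        log.append("t1-retry:").append(t1Retry).append(" final:").append(value.get());
        return log.toString();
    }
}
```

InterleavingReplay.replay() returns "t2:true t1:false t1-retry:true final:2": thread 2 wins the first round, thread 1 fails once, then succeeds on its retry.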

May 30, 2020