Source code of this article: GitHub || GitEE (addresses listed at the end of the article)

I. Introduction to Data Synchronization

1. Scenario description

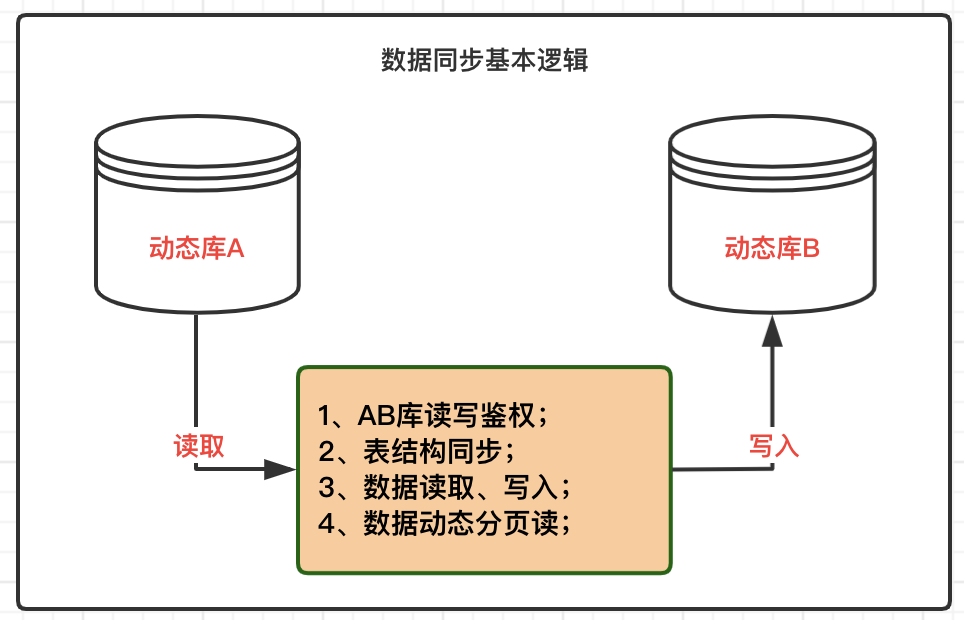

If you work on data development, you will often meet this scenario: service A provides a data source, called dynamic data source A, from which you need to read data; service B provides another data source, called dynamic data source B, to which you need to write data. This scenario is usually called data synchronization, or data transfer.

2. Basic process

Based on the above flowchart, the overall steps are as follows:

- Test whether the connections to the data sources succeed, and manage them dynamically;

- Check whether the account provided for each data source has the required permissions, such as read and write;

- Read the table structure of data source A and create the corresponding table in data source B;

- Read the data, either all at once or page by page, and write it to data source B;

- When the table structure is not known in advance, read it at runtime and generate the SQL from it.
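The last step can be made concrete: once the column names of a table have been read at runtime (for example from information_schema), a parameterized INSERT can be generated from them. The sketch below assumes the column list is already known and trusted; the class `DynamicInsertSql` is a hypothetical helper, not part of the article's project.

```java
import java.util.Collections;
import java.util.List;

// Hypothetical helper: builds a parameterized INSERT from column names read at runtime.
final class DynamicInsertSql {
    private DynamicInsertSql() {}

    static String build(String table, List<String> columns) {
        if (columns.isEmpty()) {
            throw new IllegalArgumentException("at least one column is required");
        }
        // One "?" placeholder per column; the values are bound later through a PreparedStatement
        String placeholders = String.join(", ", Collections.nCopies(columns.size(), "?"));
        return "INSERT INTO " + table + " (" + String.join(", ", columns) + ") VALUES (" + placeholders + ")";
    }
}
```

For example, `DynamicInsertSql.build("rw_read", List.of("id", "sign"))` returns `INSERT INTO rw_read (id, sign) VALUES (?, ?)`.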

3. Basic JDBC APIs

- Statement

The core JDBC interface in Java for executing database operations: over an established database connection, it sends the SQL statement to be executed to the database.

- PreparedStatement

Extends the Statement interface and supports SQL precompilation, which improves efficiency when the same statement is executed many times, as in batch processing. It is commonly used for bulk data writes.

- ResultSet

The object that holds the result set of a JDBC query. The ResultSet interface provides getter methods for retrieving column values from the current row.
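The three interfaces usually work together: a PreparedStatement binds the parameters and runs the query, and the ResultSet it returns is walked row by row, with ResultSetMetaData supplying the column names when the structure is not known in advance. A minimal sketch under those assumptions; `ResultSetRows` is a hypothetical helper name.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.ResultSetMetaData;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper showing PreparedStatement, ResultSet and ResultSetMetaData together.
final class ResultSetRows {
    private ResultSetRows() {}

    /** Runs a parameterized query and returns every row; statement and result set are closed. */
    static List<Map<String, Object>> query(Connection conn, String sql, Object... params)
            throws SQLException {
        try (PreparedStatement ps = conn.prepareStatement(sql)) {
            for (int i = 0; i < params.length; i++) {
                ps.setObject(i + 1, params[i]); // JDBC parameter indexes start at 1
            }
            try (ResultSet rs = ps.executeQuery()) {
                return toMaps(rs);
            }
        }
    }

    /** Copies the remaining rows of rs into column-label -> value maps, in column order. */
    static List<Map<String, Object>> toMaps(ResultSet rs) throws SQLException {
        ResultSetMetaData meta = rs.getMetaData();
        int columnCount = meta.getColumnCount();
        List<Map<String, Object>> rows = new ArrayList<>();
        while (rs.next()) {
            Map<String, Object> row = new LinkedHashMap<>();
            for (int i = 1; i <= columnCount; i++) { // column indexes also start at 1
                row.put(meta.getColumnLabel(i), rs.getObject(i));
            }
            rows.add(row);
        }
        return rows;
    }
}
```

A call such as `ResultSetRows.query(conn, "SELECT id, sign FROM rw_read WHERE id > ?", 10)` would return each row as a map keyed by column label.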

II. Basic Utility Wrappers

1. Data source management

A factory manages the data sources. In the current scenario it manages two of them: the read library (data source A) and the write library (data source B). Each connection is verified and then placed in a container.

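```java
import java.sql.Connection;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import javax.annotation.PostConstruct;
import javax.annotation.Resource;
import org.springframework.stereotype.Component;

@Component
public class ConnectionFactory {

    // Connections keyed by role: JdbcConstant.READ or JdbcConstant.WRITE
    private final Map<String, Connection> connectionMap = new ConcurrentHashMap<>();

    @Resource
    private JdbcConfig jdbcConfig;

    @PostConstruct
    public void init() {
        // Read library: data source A
        ConnectionEntity read = new ConnectionEntity(
                "MySql", "jdbc:mysql://localhost:3306/data_read", "user01", "123");
        register(JdbcConstant.READ, read);
        // Write library: data source B
        ConnectionEntity write = new ConnectionEntity(
                "MySql", "jdbc:mysql://localhost:3306/data_write", "user01", "123");
        register(JdbcConstant.WRITE, write);
    }

    private void register(String key, ConnectionEntity entity) {
        // Open the connection only once and keep it if it succeeded
        Connection connection = jdbcConfig.getConnection(entity);
        if (connection != null) {
            connectionMap.put(key, connection);
        }
    }

    public Connection getByKey(final String key) {
        return connectionMap.get(key);
    }
}
```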
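JdbcConfig.getConnection() is not shown in the article. With plain JDBC, the "verify, then put in the container" step could be done by opening the connection through DriverManager and checking it with Connection.isValid(). A minimal sketch, assuming the JDBC driver is on the classpath; `ConnectionProbe` is a hypothetical name and is not the project's JdbcConfig.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.util.Optional;

// Hypothetical helper: opens a connection and keeps it only if the database answers.
final class ConnectionProbe {
    private ConnectionProbe() {}

    static Optional<Connection> open(String url, String user, String password, int timeoutSeconds) {
        try {
            Connection connection = DriverManager.getConnection(url, user, password);
            // isValid() asks the driver to check that the connection is usable within the timeout
            if (connection.isValid(timeoutSeconds)) {
                return Optional.of(connection);
            }
            connection.close();
        } catch (SQLException e) {
            // No suitable driver, wrong credentials, unreachable host, ...
            System.err.println("Cannot connect to " + url + ": " + e.getMessage());
        }
        return Optional.empty();
    }
}
```

In init(), a call such as `ConnectionProbe.open("jdbc:mysql://localhost:3306/data_read", "user01", "123", 3)` could then decide whether a connection is put into connectionMap.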

2. Dynamic SQL assembly

Basic SQL templates

This class provides the basic SQL templates: read and write permission probes, table creation, full-table queries, row counts, paging queries, and table-structure queries.

```java
public class BaseSql {

    // Read probe: fetches at most one row
    public static final String READ_SQL = "SELECT * FROM %s LIMIT 1";
    // Write probe: inserts zero rows, but still requires INSERT permission
    public static final String WRITE_SQL = "INSERT INTO %s (SELECT * FROM %s WHERE 1=0)";
    // DDL of an existing table
    public static final String CREATE_SQL = "SHOW CREATE TABLE %s";
    // Full-table query
    public static final String SELECT_SQL = "SELECT * FROM %s";
    // Row count
    public static final String COUNT_SQL = "SELECT COUNT(1) countNum FROM %s";
    // Paging query: LIMIT offset,size
    public static final String PAGE_SQL = "SELECT * FROM %s LIMIT %s,%s";

    // Column metadata of a table; placeholders: schema, table
    public static String STRUCT_SQL() {
        return " SELECT COLUMN_NAME, IS_NULLABLE, COLUMN_TYPE, COLUMN_KEY, COLUMN_COMMENT "
                + " FROM information_schema.COLUMNS "
                + " WHERE table_schema = '%s' AND table_name = '%s' ";
    }
}
```
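STRUCT_SQL() places the schema and table names inside string literals via %s. Because information_schema compares them as values rather than identifiers, they can instead be bound as PreparedStatement parameters, which removes the quoting problem for this query. A minimal sketch, assuming a MySQL connection; `ColumnInfoQuery` and `describe()` are hypothetical names.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: the same column-metadata query with bound parameters.
final class ColumnInfoQuery {
    private ColumnInfoQuery() {}

    // Same columns as BaseSql.STRUCT_SQL(), but schema and table are "?" parameters
    static final String STRUCT_SQL =
            "SELECT COLUMN_NAME, IS_NULLABLE, COLUMN_TYPE, COLUMN_KEY, COLUMN_COMMENT"
            + " FROM information_schema.COLUMNS"
            + " WHERE table_schema = ? AND table_name = ?"
            + " ORDER BY ORDINAL_POSITION";

    /** Returns "name type" entries for every column of schema.table, in table order. */
    static List<String> describe(Connection conn, String schema, String table) throws SQLException {
        List<String> columns = new ArrayList<>();
        try (PreparedStatement ps = conn.prepareStatement(STRUCT_SQL)) {
            ps.setString(1, schema);
            ps.setString(2, table);
            try (ResultSet rs = ps.executeQuery()) {
                while (rs.next()) {
                    columns.add(rs.getString("COLUMN_NAME") + " " + rs.getString("COLUMN_TYPE"));
                }
            }
        }
        return columns;
    }
}
```

Each entry pairs a column name with its COLUMN_TYPE, which is the information needed to generate SQL when the table structure is not known in advance.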

SQL parameter filling

These methods fill the placeholders of each SQL template with the actual parameters to produce a complete, executable SQL statement.

```java
import org.apache.commons.lang3.StringUtils; // assumed: Apache Commons Lang 3

public class BuildSql {

    /** Read-permission SQL */
    public static String buildReadSql(String table) {
        String readSql = null;
        if (StringUtils.isNotEmpty(table)) {
            readSql = String.format(BaseSql.READ_SQL, table);
        }
        return readSql;
    }

    /** Write-permission SQL */
    public static String buildWriteSql(String table) {
        String writeSql = null;
        if (StringUtils.isNotEmpty(table)) {
            // WRITE_SQL uses the table name twice: INSERT target and SELECT source
            writeSql = String.format(BaseSql.WRITE_SQL, table, table);
        }
        return writeSql;
    }

    /** Table-creation SQL (SHOW CREATE TABLE) */
    public static String buildStructSql(String table) {
        String structSql = null;
        if (StringUtils.isNotEmpty(table)) {
            structSql = String.format(BaseSql.CREATE_SQL, table);
        }
        return structSql;
    }

    /** Table-structure SQL (column metadata from information_schema) */
    public static String buildTableSql(String schema, String table) {
        String structSql = null;
        if (StringUtils.isNotEmpty(schema) && StringUtils.isNotEmpty(table)) {
            structSql = String.format(BaseSql.STRUCT_SQL(), schema, table);
        }
        return structSql;
    }

    /** Full-table query SQL */
    public static String buildSelectSql(String table) {
        String selectSql = null;
        if (StringUtils.isNotEmpty(table)) {
            selectSql = String.format(BaseSql.SELECT_SQL, table);
        }
        return selectSql;
    }

    /** Row-count SQL */
    public static String buildCountSql(String table) {
        String countSql = null;
        if (StringUtils.isNotEmpty(table)) {
            countSql = String.format(BaseSql.COUNT_SQL, table);
        }
        return countSql;
    }

    /** Paging query SQL */
    public static String buildPageSql(String table, int offset, int size) {
        String pageSql = null;
        if (StringUtils.isNotEmpty(table)) {
            pageSql = String.format(BaseSql.PAGE_SQL, table, offset, size);
        }
        return pageSql;
    }
}
```
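Because String.format splices the table name directly into the SQL text, a table name taken from user input could inject arbitrary SQL. One common guard is to accept only plain identifiers and quote them. A minimal sketch, assuming MySQL backtick quoting and a deliberately conservative pattern (MySQL itself allows more characters); `SafeIdentifier` is a hypothetical name.

```java
import java.util.regex.Pattern;

// Hypothetical guard: validates a table name before it is spliced into SQL with String.format.
final class SafeIdentifier {
    private SafeIdentifier() {}

    // Letters, digits, '_' and '$', not starting with a digit, at most 64 characters (MySQL's limit)
    private static final Pattern IDENTIFIER = Pattern.compile("[A-Za-z_$][A-Za-z0-9_$]{0,63}");

    /** Returns the name wrapped in MySQL backticks, or throws if it is not a plain identifier. */
    static String quote(String name) {
        if (name == null || !IDENTIFIER.matcher(name).matches()) {
            throw new IllegalArgumentException("Illegal table name: " + name);
        }
        return "`" + name + "`";
    }
}
```

Used as `String.format(BaseSql.SELECT_SQL, SafeIdentifier.quote(table))`, a name like `rw_read` becomes `` `rw_read` ``, while `rw_read; DROP TABLE rw_read` is rejected before any SQL is built.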

III. Business Process

1. Basic permission check

For the read library, try to read a single row; for the write library, try an INSERT ... SELECT whose condition matches no rows, so nothing is actually written. If the account lacks the permission, the database throws the corresponding exception.

```java
import java.sql.SQLException;
import javax.annotation.Resource;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class CheckController {

    @Resource
    private ConnectionFactory connectionFactory;

    // Without permission: MySQLSyntaxErrorException: SELECT command denied to user
    @GetMapping("/checkRead")
    public String checkRead() {
        try {
            String sql = BuildSql.buildReadSql("rw_read");
            ExecuteSqlUtil.query(connectionFactory.getByKey(JdbcConstant.READ), sql);
            return "success";
        } catch (SQLException e) {
            e.printStackTrace();
        }
        return "fail";
    }

    // Without permission: MySQLSyntaxErrorException: INSERT command denied to user
    @GetMapping("/checkWrite")
    public String checkWrite() {
        try {
            String sql = BuildSql.buildWriteSql("rw_read");
            ExecuteSqlUtil.update(connectionFactory.getByKey(JdbcConstant.WRITE), sql);
            return "success";
        } catch (SQLException e) {
            e.printStackTrace();
        }
        return "fail";
    }
}
```
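Both endpoints turn every SQLException into "fail", so a missing privilege cannot be told apart from, say, a missing table. With MySQL, the vendor error code distinguishes them: the "command denied" messages above correspond to server error 1142 (table level) and 1143 (column level). A minimal sketch, assuming MySQL error codes; `PermissionErrors` is a hypothetical name.

```java
import java.sql.SQLException;

// Hypothetical helper: tells a missing privilege apart from other SQL failures (MySQL codes).
final class PermissionErrors {
    private PermissionErrors() {}

    private static final int TABLE_ACCESS_DENIED = 1142;  // ER_TABLEACCESS_DENIED_ERROR
    private static final int COLUMN_ACCESS_DENIED = 1143; // ER_COLUMNACCESS_DENIED_ERROR

    static boolean isPermissionDenied(SQLException e) {
        // Walk the cause chain in case the original exception was wrapped
        for (Throwable t = e; t != null; t = t.getCause()) {
            if (t instanceof SQLException) {
                int code = ((SQLException) t).getErrorCode();
                if (code == TABLE_ACCESS_DENIED || code == COLUMN_ACCESS_DENIED) {
                    return true;
                }
            }
        }
        return false;
    }
}
```

In checkRead() and checkWrite(), the catch block could then return a distinct result such as "no permission" when isPermissionDenied(e) is true, and "fail" otherwise.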

2. Synchronize the table structure

The simplest approach is used here: query the CREATE TABLE statement of the table from the read library, then execute it on the write library.

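```java
import java.sql.ResultSet;
import java.sql.SQLException;
import javax.annotation.Resource;
import org.apache.commons.lang3.StringUtils; // assumed: Apache Commons Lang 3
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class StructController {

    @Resource
    private ConnectionFactory connectionFactory;

    @GetMapping("/syncStruct")
    public String syncStruct() {
        try {
            // 1. Fetch the DDL of rw_read from the read library
            String sql = BuildSql.buildStructSql("rw_read");
            ResultSet resultSet = ExecuteSqlUtil.query(connectionFactory.getByKey(JdbcConstant.READ), sql);
            String createTableSql = null;
            while (resultSet.next()) {
                // SHOW CREATE TABLE returns the columns "Table" and "Create Table"
                createTableSql = resultSet.getString("Create Table");
            }
            // 2. Replay the DDL on the write library
            if (StringUtils.isNotEmpty(createTableSql)) {
                ExecuteSqlUtil.update(connectionFactory.getByKey(JdbcConstant.WRITE), createTableSql);
            }
            return "success";
        } catch (SQLException e) {
            e.printStackTrace();
        }
        return "fail";
    }
}
```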
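Calling /syncStruct a second time fails, because the table already exists in the write library. Since SHOW CREATE TABLE returns a statement that begins with CREATE TABLE, one simple option is to rewrite it to CREATE TABLE IF NOT EXISTS before executing it. The DDL is MySQL-specific, so this only applies when both data sources are MySQL. A minimal sketch; `IdempotentDdl` is a hypothetical helper.

```java
import java.util.regex.Pattern;

// Hypothetical helper: makes the DDL from SHOW CREATE TABLE safe to run more than once.
final class IdempotentDdl {
    private IdempotentDdl() {}

    // Leading "CREATE TABLE" not already followed by "IF NOT EXISTS"; \s++ is possessive (no backtracking)
    private static final Pattern CREATE_TABLE =
            Pattern.compile("^\\s*CREATE\\s+TABLE\\s++(?!IF\\s+NOT\\s+EXISTS)", Pattern.CASE_INSENSITIVE);

    static String ifNotExists(String createTableSql) {
        // Only the statement prefix is rewritten; column definitions are untouched.
        // replaceFirst returns the input unchanged when there is no match.
        return CREATE_TABLE.matcher(createTableSql).replaceFirst("CREATE TABLE IF NOT EXISTS ");
    }
}
```

In syncStruct(), the write step would then execute `IdempotentDdl.ifNotExists(createTableSql)` instead of the raw DDL.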

3. Synchronize table data

Read the table data from the read library and write it to the write library in batches. Note the method statement.setObject(): when the number and types of the parameters are not known in advance, it lets the driver adapt to each value's data type automatically.

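```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;
import javax.annotation.Resource;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class DataSyncController {

    // Rows sent to the write library per batch (a small value for the demo)
    private static final int BATCH_SIZE = 2;

    @Resource
    private ConnectionFactory connectionFactory;

    @GetMapping("/dataSync")
    public List<RwReadEntity> dataSync() {
        List<RwReadEntity> rwReadEntities = new ArrayList<>();
        try {
            // 1. Read all rows of rw_read from the read library
            Connection readConnection = connectionFactory.getByKey(JdbcConstant.READ);
            String sql = BuildSql.buildSelectSql("rw_read");
            ResultSet resultSet = ExecuteSqlUtil.query(readConnection, sql);
            while (resultSet.next()) {
                RwReadEntity rwReadEntity = new RwReadEntity();
                rwReadEntity.setId(resultSet.getInt("id"));
                rwReadEntity.setSign(resultSet.getString("sign"));
                rwReadEntities.add(rwReadEntity);
            }
            // 2. Write them to the write library in batches, inside one transaction
            if (!rwReadEntities.isEmpty()) {
                Connection writeConnection = connectionFactory.getByKey(JdbcConstant.WRITE);
                writeConnection.setAutoCommit(false);
                try (PreparedStatement statement =
                             writeConnection.prepareStatement("INSERT INTO rw_read VALUES(?,?)")) {
                    // With columns obtained dynamically, statement.setObject() adapts the data type automatically
                    for (int i = 0; i < rwReadEntities.size(); i++) {
                        RwReadEntity rwReadEntity = rwReadEntities.get(i);
                        statement.setInt(1, rwReadEntity.getId());
                        statement.setString(2, rwReadEntity.getSign());
                        statement.addBatch();
                        // Flush every BATCH_SIZE rows
                        if ((i + 1) % BATCH_SIZE == 0) {
                            statement.executeBatch();
                        }
                    }
                    // Flush the last, possibly partial, batch
                    statement.executeBatch();
                    writeConnection.commit();
                } catch (SQLException e) {
                    writeConnection.rollback();
                    throw e;
                } finally {
                    // The connection is shared, so restore the default mode
                    writeConnection.setAutoCommit(true);
                }
            }
            return rwReadEntities;
        } catch (SQLException e) {
            e.printStackTrace();
        }
        return null;
    }
}
```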
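The controller above still maps the id and sign columns by hand. Following the note about setObject(), the columns can instead be discovered from ResultSetMetaData, so the same code copies any table. A minimal sketch, assuming the target table has the same column order as the source; `GenericTableCopier` is a hypothetical name, not part of the project.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.ResultSetMetaData;
import java.sql.SQLException;
import java.util.Collections;

// Hypothetical helper: copies any result set into a table with the same column order.
final class GenericTableCopier {
    private GenericTableCopier() {}

    /** "INSERT INTO table VALUES (?, ?, ...)" with one placeholder per column. */
    static String insertSql(String table, int columnCount) {
        return "INSERT INTO " + table + " VALUES ("
                + String.join(", ", Collections.nCopies(columnCount, "?")) + ")";
    }

    /** Streams all rows into table, flushing every batchSize rows, in one transaction. Returns rows copied. */
    static int copy(ResultSet source, Connection target, String table, int batchSize)
            throws SQLException {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        ResultSetMetaData meta = source.getMetaData();
        int columnCount = meta.getColumnCount();
        boolean previousAutoCommit = target.getAutoCommit();
        target.setAutoCommit(false);
        int rows = 0;
        try (PreparedStatement ps = target.prepareStatement(insertSql(table, columnCount))) {
            while (source.next()) {
                for (int i = 1; i <= columnCount; i++) {
                    Object value = source.getObject(i);
                    if (value == null) {
                        ps.setNull(i, meta.getColumnType(i)); // NULL bound with the source column's JDBC type
                    } else {
                        ps.setObject(i, value); // driver maps the Java value to the SQL type
                    }
                }
                ps.addBatch();
                if (++rows % batchSize == 0) {
                    ps.executeBatch();
                }
            }
            ps.executeBatch(); // flush the last, possibly partial, batch
            target.commit();
        } catch (SQLException e) {
            target.rollback();
            throw e;
        } finally {
            target.setAutoCommit(previousAutoCommit);
        }
        return rows;
    }
}
```

A call like `GenericTableCopier.copy(resultSet, writeConnection, "rw_read", 500)` would replace both loops in dataSync() and avoids holding the whole table in a list. NULL values go through setNull() so the code does not depend on how a driver treats setObject(i, null).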

4. Paging query

A paging query tool is provided so that, when the data volume is large, the data is read page by page instead of all at once, avoiding excessive resource consumption.

```java
import java.util.List;

public class PageUtilEntity {

    /**
     * Builds the paging result
     */
    public static PageHelperEntity<Object> pageResult(int total, int pageSize, int currentPage, List dataList) {
        PageHelperEntity<Object> pageBean = new PageHelperEntity<Object>();
        // Total number of pages
        int totalPage = PageHelperEntity.countTotalPage(pageSize, total);
        // Page number list
        List<Integer> pageList = PageHelperEntity.pageList(currentPage, pageSize, total);
        // Previous page
        int prevPage = 0;
        if (currentPage == 1) {
            prevPage = currentPage;
        } else if (currentPage > 1 && currentPage <= totalPage) {
            prevPage = currentPage - 1;
        }
        // Next page
        int nextPage = 0;
        if (totalPage == 1) {
            nextPage = currentPage;
        } else if (currentPage <= totalPage - 1) {
            nextPage = currentPage + 1;
        }
        pageBean.setDataList(dataList);
        pageBean.setTotal(total);
        pageBean.setPageSize(pageSize);
        pageBean.setCurrentPage(currentPage);
        pageBean.setTotalPage(totalPage);
        pageBean.setPageList(pageList);
        pageBean.setPrevPage(prevPage);
        pageBean.setNextPage(nextPage);
        pageBean.initjudge();
        return pageBean;
    }
}
```
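pageResult() relies on PageHelperEntity.countTotalPage(), which the article does not show. Combined with the COUNT_SQL and PAGE_SQL templates, the arithmetic for reading a table page by page can be sketched as follows. `PageMath` is a hypothetical name, and the formulas are the usual ceiling division and offset, not necessarily what PageHelperEntity does internally.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: page arithmetic for the LIMIT offset,size template.
final class PageMath {
    private PageMath() {}

    // Same template as BaseSql.PAGE_SQL
    private static final String PAGE_SQL = "SELECT * FROM %s LIMIT %s,%s";

    /** Number of pages needed for total rows, rounding up. */
    static int totalPages(int total, int pageSize) {
        if (pageSize <= 0) {
            throw new IllegalArgumentException("pageSize must be positive");
        }
        return (total + pageSize - 1) / pageSize;
    }

    /** Zero-based row offset of a one-based page number. */
    static int offset(int currentPage, int pageSize) {
        return (currentPage - 1) * pageSize;
    }

    /** One paging query per page, given the row count returned by COUNT_SQL. */
    static List<String> pageSqls(String table, int total, int pageSize) {
        List<String> sqls = new ArrayList<>();
        int pages = totalPages(total, pageSize);
        for (int page = 1; page <= pages; page++) {
            sqls.add(String.format(PAGE_SQL, table, offset(page, pageSize), pageSize));
        }
        return sqls;
    }
}
```

For 5 rows and a page size of 2 this yields three queries with offsets 0, 2 and 4, so only one page needs to be held in memory while it is written to the target. Without an ORDER BY on a unique key, MySQL does not guarantee a stable row order between these queries, so a production version would add one.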

IV. Summary

Many highly complex business scenarios have to be solved with the basic APIs, and that complexity makes them hard to abstract and encapsulate into a unified package. If data synchronization is treated as a business in its own right and adapted to multiple databases, it can be encapsulated independently as middleware. Open-source projects also offer plenty of middleware for multi-party data synchronization or computation, which are worth exploring to broaden your horizons.

V. Source Code Address

GitHub address

https://github.com/cicadasmile/data-manage-parent

GitEE address

https://gitee.com/cicadasmile/data-manage-parent