1. A simple recurrent neural network

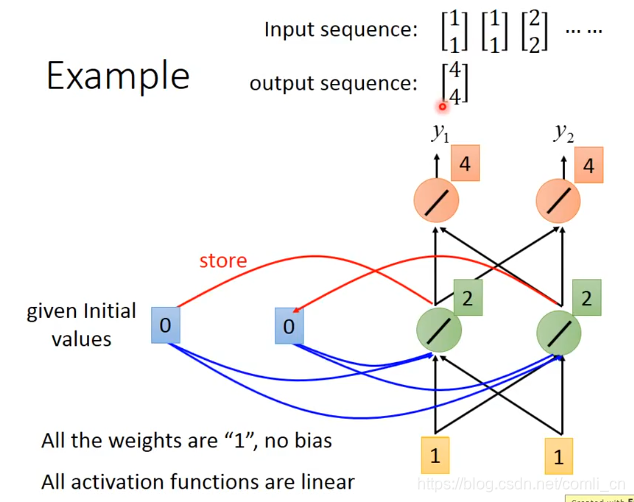

As shown in the figure above, the first element of the input sequence is fed into the simple memory network inside the orange box. The blue boxes are the memory units, which store the values computed at the previous time step. Because this is the first time step, the memory is first given an initial value. All weights are assumed to be 1 and all biases 0, so each green node computes 1 + 1 + 0 + 0 = 2. That value is stored in the blue boxes, and the light red nodes then compute the output. This completes the first time step.
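The arithmetic of this first step can be sketched in a few lines of Python. This is a minimal sketch rather than the figure's exact network: it assumes two inputs, two hidden (green) nodes, two memory (blue) cells, two output (light red) nodes, identity activations, and a memory initialized to zero, which is consistent with the sum 1 + 1 + 0 + 0 = 2. The name `rnn_step` is made up for illustration.

```python
def rnn_step(x, memory):
    """One time step of the toy network.

    Assumptions for this sketch: all weights are 1, all biases are 0,
    and the activation is the identity function.
    """
    # Every hidden node sums all inputs and all memory values.
    h = sum(x) + sum(memory)
    hidden = [h] * len(memory)
    # Every output node sums all hidden values (assumed: as many outputs as inputs).
    output = [sum(hidden)] * len(x)
    # The hidden values become the memory for the next time step.
    return output, hidden


output, memory = rnn_step([1, 1], [0, 0])
print(memory)  # [2, 2]
print(output)  # [4, 4]
```

The returned `hidden` list is what would be written back into the blue boxes before the next element of the sequence is processed.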

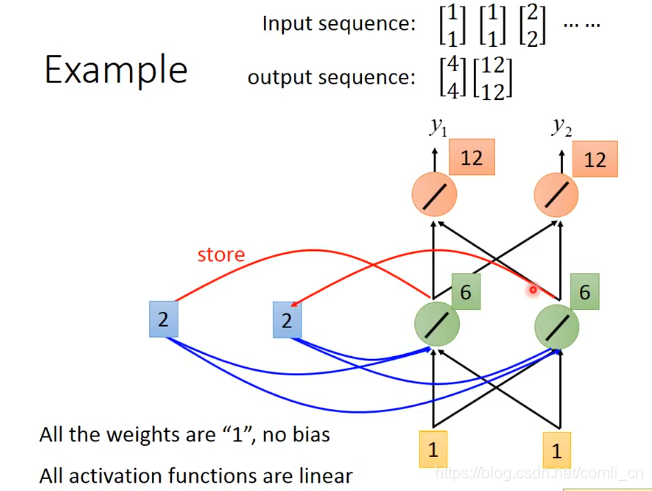

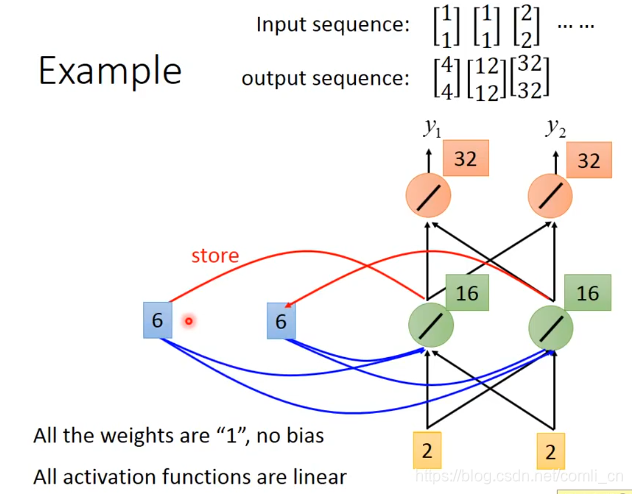

Then repeat the same procedure for the remaining time steps. In this way, you get the output sequence. Note that the order of the input sequence cannot be changed arbitrarily: the memory carries state from one time step to the next, so reordering the input changes the output sequence.
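The order dependence can be checked with a small loop. The sketch below makes the same assumptions as the walkthrough (all weights 1, all biases 0, identity activations, memory starting at zero). The input sequence and the helper name `run_rnn` are hypothetical and chosen only for illustration.

```python
def run_rnn(sequence, n_hidden=2):
    """Run the toy network over a whole sequence and return one output value per step."""
    memory = [0] * n_hidden  # assumed initial memory
    outputs = []
    for x in sequence:
        # Hidden nodes see the current input plus the stored memory.
        h = sum(x) + sum(memory)
        memory = [h] * n_hidden
        # Each output node sums the hidden values; they are all equal here,
        # so a single number per step is recorded.
        outputs.append(sum(memory))
    return outputs


original = run_rnn([[1, 1], [1, 1], [2, 2]])
reordered = run_rnn([[2, 2], [1, 1], [1, 1]])
print(original)   # [4, 12, 32]
print(reordered)  # [8, 20, 44]
```

Both runs contain exactly the same input vectors, yet the outputs differ at every step because the memory accumulates a different history.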

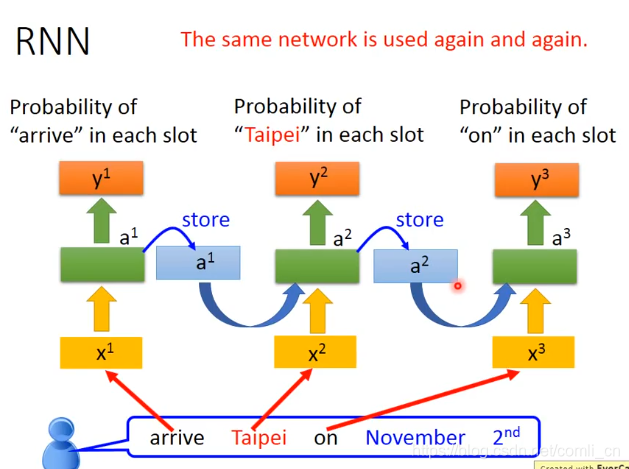

2. Application examples of RNN (Elman Network)

As shown in the figure above, suppose we want to extract the place and the time from the sentence "arrive Taipei on November 2nd". The sentence is fed into the RNN one word at a time. After enough training, the RNN learns from the training data that the word following "arrive" is likely to be a place, so it assigns a high probability to "Taipei" being the place. Similarly, based on the preceding words "arrive Taipei on", it assigns a high probability to "November 2nd" being the time.

The green box in the figure above is the hidden layer, and there can be several more hidden layers.
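To make the data flow of this slot-filling example concrete, here is a rough pure-Python sketch of an Elman-style tagger with a single hidden layer. Everything in it is an assumption for illustration: the vocabulary, the three slot labels, the hidden size, the `tanh` activation, and the random, untrained weights. The printed probabilities are therefore meaningless; the point is only that each word's slot distribution depends on the hidden state left by the words before it.

```python
import math
import random

VOCAB = ["arrive", "Taipei", "on", "November", "2nd"]  # hypothetical vocabulary
SLOTS = ["other", "place", "time"]                     # hypothetical slot labels
HIDDEN = 4                                             # arbitrary hidden size

rng = random.Random(0)


def rand_matrix(rows, cols):
    """Random weights standing in for trained parameters."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


W_in = rand_matrix(HIDDEN, len(VOCAB))   # input -> hidden
W_mem = rand_matrix(HIDDEN, HIDDEN)      # previous hidden -> hidden
W_out = rand_matrix(len(SLOTS), HIDDEN)  # hidden -> slot scores


def matvec(matrix, vector):
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def tag(sentence):
    """Return one probability distribution over SLOTS per word."""
    hidden = [0.0] * HIDDEN  # memory starts empty
    result = []
    for word in sentence:
        one_hot = [1.0 if w == word else 0.0 for w in VOCAB]
        pre = [a + b for a, b in zip(matvec(W_in, one_hot), matvec(W_mem, hidden))]
        hidden = [math.tanh(p) for p in pre]  # stored for the next word
        result.append(softmax(matvec(W_out, hidden)))
    return result


probs = tag(["arrive", "Taipei", "on", "November", "2nd"])
for word, p in zip(VOCAB, probs):
    print(word, [round(v, 3) for v in p])
```

Adding more hidden layers would mean stacking further `hidden` computations between the input and the output scores, each with its own memory.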

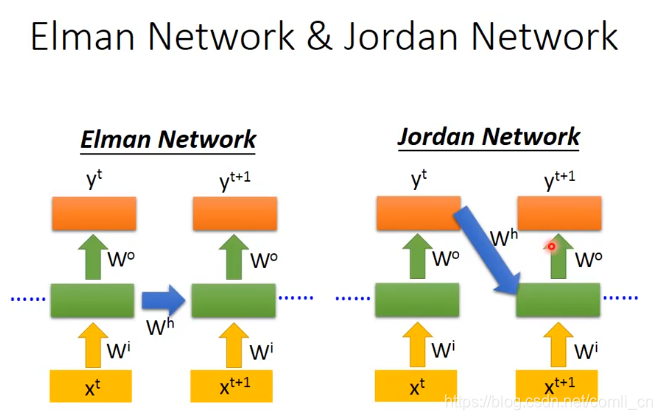

3. Other forms of RNN

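Jordan Network: the difference between a Jordan Network and an Elman Network is shown in the following figure. What differs is the data stored in memory: an Elman Network feeds the hidden-layer values back into the network at the next time step, while a Jordan Network feeds back the output values.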

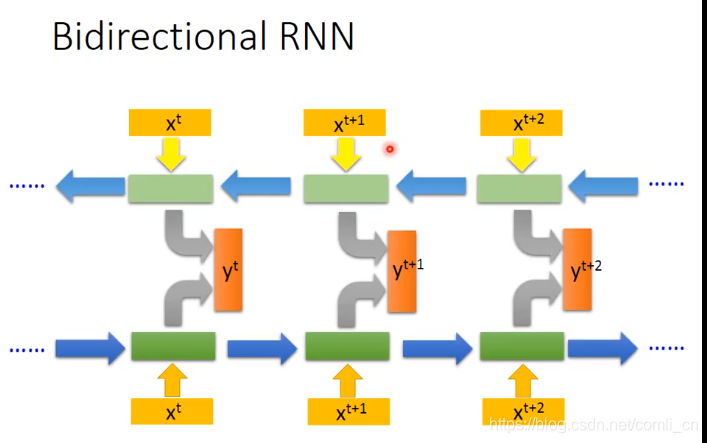
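The two feedback schemes can be compared on a toy network. The sketch below is illustrative only: it assumes all weights are 1, all biases are 0, identity activations, a zero initial memory, and a hypothetical input sequence. The function name `run` and its `feedback` parameter are made up for this example.

```python
def run(sequence, feedback, width=2):
    """Toy recurrent network; `feedback` selects what is stored in memory."""
    memory = [0] * width
    outputs = []
    for x in sequence:
        h = sum(x) + sum(memory)  # hidden nodes: input plus memory
        hidden = [h] * width
        y = sum(hidden)           # output nodes: sum of hidden values
        output = [y] * width
        # Elman stores the hidden layer, Jordan stores the output layer.
        memory = hidden if feedback == "elman" else output
        outputs.append(y)
    return outputs


seq = [[1, 1], [1, 1], [2, 2]]
print(run(seq, "elman"))   # [4, 12, 32]
print(run(seq, "jordan"))  # [4, 20, 88]
```

The first outputs agree because the memory is still empty; from the second step onward the two networks diverge because they remember different things.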

Bidirectional RNN
