Time

More code examples are available at: https://github.com/perkinls/flink-local-train

Time types supported by Flink

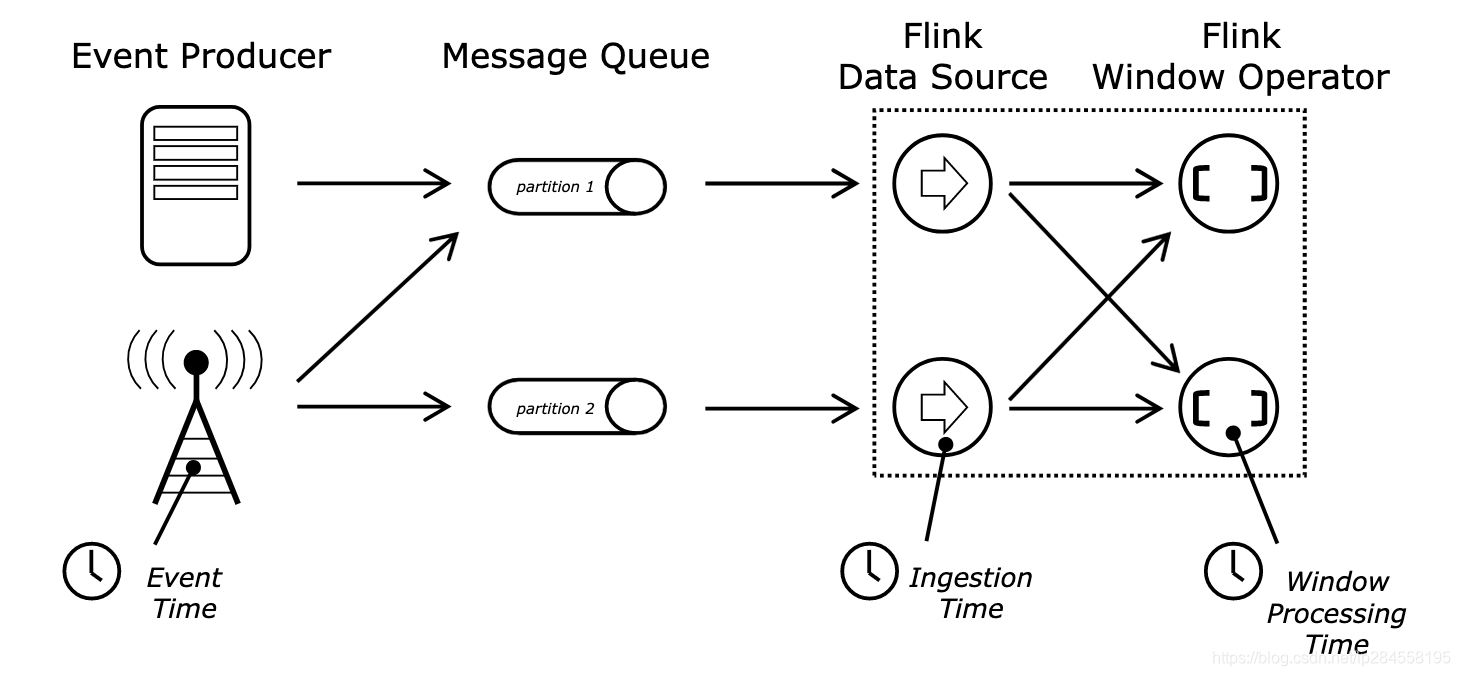

Flink supports different notions of time in streaming programs.

Processing Time

Processing time refers to the system time of the machine that is executing the respective operation. When a streaming program runs on processing time, all time-based operations (such as time windows) use the system clock of the machine that runs the respective operator. An hourly processing-time window includes all records that arrive at a specific operator between the moments when the system clock indicates a full hour.

For example, suppose an application begins running at 9:15 am. The first hourly processing-time window includes events processed between 9:15 am and 10:00 am. The next window includes events processed between 10:00 am and 11:00 am, and so on.

Event Time

Event time is the time at which each individual event occurred on its producing device. This time is usually embedded in the record before it enters Flink. With event time, the progress of time depends on the data, not on the wall clocks of the machines where the operators run. Event-time programs must specify how to generate event-time watermarks. The watermark is the mechanism that signals progress in event time.

Ideally, event-time processing yields completely consistent and deterministic results, regardless of when events arrive or in what order. However, events may not be known to arrive in timestamp order. In that case, event-time processing incurs some latency while it waits for out-of-order events. Since it is only possible to wait for a finite period of time, this limits how deterministic event-time applications can be.

Assuming all of the data has arrived, event-time operations behave as expected. They produce correct and consistent results even with late or out-of-order events, or when reprocessing historical data. For example, an hourly event-time window contains all records whose event timestamp falls into that hour. This holds regardless of the order in which the records arrive or when they are processed.

Event-time processing usually incurs some latency, because it has to wait a certain amount of time for late and out-of-order events.
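The determinism argument above can be made concrete with a minimal plain-Java sketch. It has no Flink dependency, and the class `EventTimeDeterminism` and its method are hypothetical names used for illustration. Each event is assigned to an hourly window using only its own timestamp. As a result, shuffling the arrival order cannot change the per-window counts, assuming (as above) that all events have arrived.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Random;
import java.util.TreeMap;

/** Hypothetical sketch: counts events per hourly event-time window. */
final class EventTimeDeterminism {
    static final long HOUR_MS = 60L * 60 * 1000;

    /** Counts events per hourly window, keyed by window start (ms since epoch). */
    static Map<Long, Integer> countPerHour(List<Long> eventTimestamps) {
        Map<Long, Integer> counts = new TreeMap<>();
        for (long ts : eventTimestamps) {
            long windowStart = ts - Math.floorMod(ts, HOUR_MS); // align to the full hour
            counts.merge(windowStart, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<Long> inOrder = List.of(0L, 10L, HOUR_MS + 5, HOUR_MS + 7, 2 * HOUR_MS);
        List<Long> shuffled = new ArrayList<>(inOrder);
        Collections.shuffle(shuffled, new Random(42)); // simulate out-of-order arrival
        // The window assignment depends only on timestamps, so the counts are identical.
        System.out.println(countPerHour(inOrder).equals(countPerHour(shuffled))); // true
    }
}
```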

Ingestion Time

Ingestion time is the time at which an event enters Flink. At the source operator, each record gets the source's current time as its timestamp. Time-based operations (such as time windows) then refer to that timestamp.

Conceptually, ingestion time sits between event time and processing time. Compared to processing time, it is slightly more expensive but gives more predictable results. Ingestion time uses stable timestamps, assigned once at the source, so different window operations over the records all refer to the same timestamp. With processing time, each window operator may instead assign a record to a different window, based on its local system clock.

Compared to event time, ingestion-time programs cannot handle out-of-order events or late data. In exchange, the program does not have to specify how to generate watermarks.

Internally, ingestion time is treated much like event time, but with automatic timestamp assignment and automatic watermark generation.

Setting time characteristics

The first part of a Flink DataStream program usually sets the base time characteristic. This setting defines how data stream sources behave, for example whether they will assign timestamps. It also defines which notion of time window operations such as KeyedStream.timeWindow(Time.seconds(30)) should use.

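```java
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// If this is not set, the default is ProcessingTime (in Flink versions before 1.12).
env.setStreamTimeCharacteristic(TimeCharacteristic.ProcessingTime);
```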

Note that to run this example with event time, the program needs either:

- sources that directly define event time for the data and emit watermarks themselves, or

- a Timestamp Assigner & Watermark Generator injected after the sources.

These functions describe how to access the event timestamps, and how out-of-order the event stream is.

Event time and watermarks

A stream processor that supports event time needs a way to measure the progress of event time. For example, consider a window operator that builds hourly windows. It needs to be notified when event time has passed the end of an hour, so that it can close the window in progress.

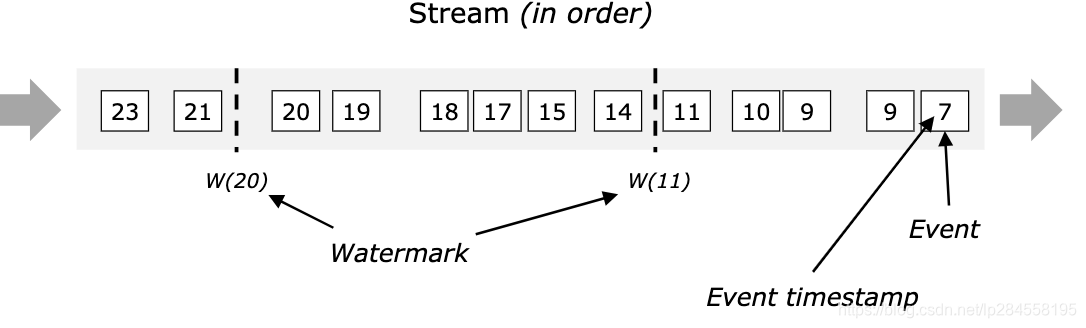

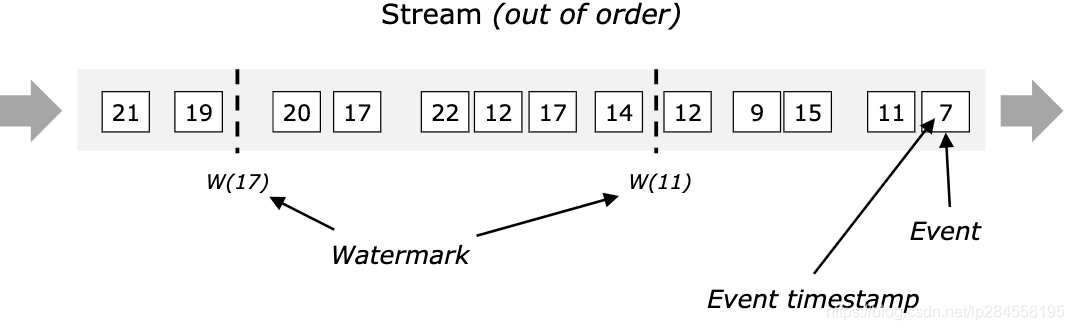

Event time can progress independently of processing time. For example, in one program the current event time of an operator may trail slightly behind the processing time, reflecting a delay in receiving the events. Flink measures the progress of event time with watermarks. Watermarks flow as part of the data stream and carry a timestamp t. A Watermark(t) declares that event time has reached time t in that stream. This means there should be no more elements with a timestamp t' <= t.

Watermarks are crucial for out-of-order streams, where events are not ordered by their timestamps, as illustrated above. In general, a watermark declares that by this point in the stream, all events up to a certain timestamp should have arrived. Once a watermark reaches an operator, the operator can advance its internal event-time clock to the value of the watermark.
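A common way to produce watermarks for an out-of-order stream is to trail the highest timestamp seen so far by a fixed bound. The plain-Java sketch below illustrates that idea. `BoundedOutOfOrdernessSketch` is a hypothetical class, not Flink's API, and the 3 ms bound in the example is an arbitrary assumption.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical watermark generator: watermark = highest timestamp seen so far
 * minus the maximum expected out-of-orderness. Not Flink code.
 */
final class BoundedOutOfOrdernessSketch {
    private final long maxOutOfOrdernessMs;
    private long maxTimestampSeen = Long.MIN_VALUE;

    BoundedOutOfOrdernessSketch(long maxOutOfOrdernessMs) {
        this.maxOutOfOrdernessMs = maxOutOfOrdernessMs;
    }

    /** Called for every event; only the largest timestamp matters. */
    void onEvent(long eventTimestamp) {
        maxTimestampSeen = Math.max(maxTimestampSeen, eventTimestamp);
    }

    /** Current watermark; Long.MIN_VALUE until the first event has been seen. */
    long currentWatermark() {
        if (maxTimestampSeen == Long.MIN_VALUE) {
            return Long.MIN_VALUE;
        }
        return maxTimestampSeen - maxOutOfOrdernessMs;
    }

    public static void main(String[] args) {
        BoundedOutOfOrdernessSketch gen = new BoundedOutOfOrdernessSketch(3);
        List<Long> watermarks = new ArrayList<>();
        for (long ts : new long[] {10, 7, 15, 12, 20}) { // out-of-order timestamps
            gen.onEvent(ts);
            watermarks.add(gen.currentWatermark());
        }
        System.out.println(watermarks); // [7, 7, 12, 12, 17]
    }
}
```

Because the watermark is derived from a running maximum, it never moves backwards. With these inputs, the event with timestamp 7 arrives when the watermark is already 7. By the Watermark(t) definition above, it therefore already counts as late. Choosing the bound is a trade-off between latency and completeness.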

Note that a newly created stream element (or elements) inherits its event time from one of two sources. It comes either from the event that produced the element, or from the watermark that triggered its creation.

Watermarks in parallel streams

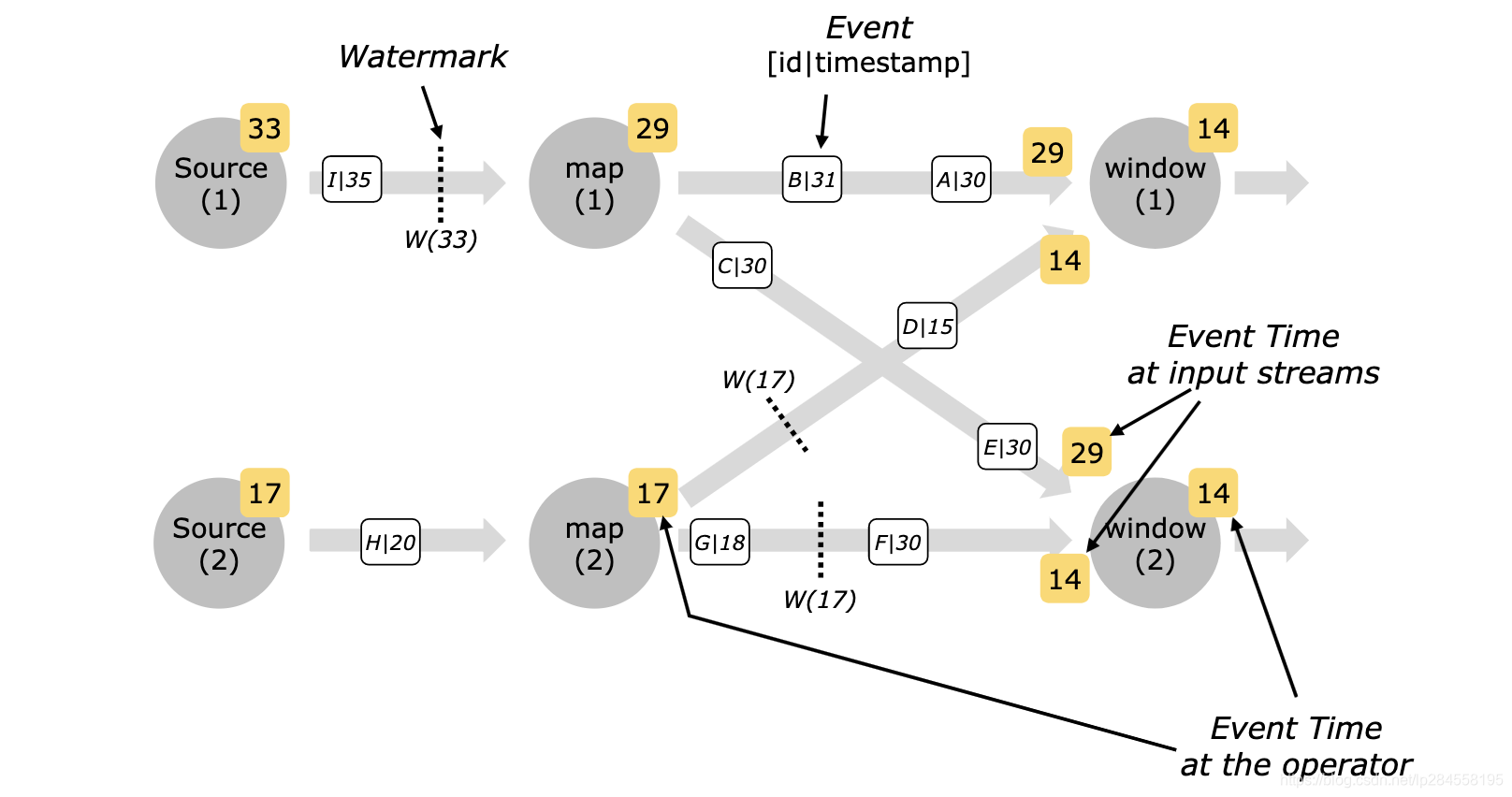

Watermarks are generated at, or directly after, source functions. Each parallel subtask of a source function usually generates its watermarks independently. These watermarks define the event time at that particular parallel source. As the watermarks flow through the streaming program, they advance the event time at the operators where they arrive. Whenever an operator advances its event time, it generates a new watermark downstream for its successor operators.

Some operators consume multiple input streams. Examples are a union, or an operator following a keyBy(...) or partition(...) function. Such an operator's current event time is the minimum of its input streams' event times. As its input streams update their event times, so does the operator.

The figure above shows an example of events and watermarks flowing through parallel streams, and of operators tracking event time.

- Typically, watermarks are generated in the source function, but they can also be generated at any stage after the source. If watermarks are specified more than once, the later specification overrides the earlier one. Each subtask of the source generates its watermarks independently.

- A watermark advances the event time of each operator it passes through, and that operator in turn emits a new watermark downstream.

- For operators with multiple inputs (union, keyBy, partition), the current event time is the minimum event time across the input streams.
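The minimum rule for multi-input operators can be sketched in a few lines. `MultiInputWatermarkTracker` is a hypothetical plain-Java class, not part of Flink. It keeps the latest watermark per input and takes the minimum as the operator's event time. It reports a new watermark to forward downstream only when that minimum advances.

```java
import java.util.Arrays;

/** Hypothetical sketch of event-time tracking in an operator with several inputs. */
final class MultiInputWatermarkTracker {
    private final long[] inputWatermarks;
    private long currentEventTime = Long.MIN_VALUE;

    MultiInputWatermarkTracker(int numInputs) {
        inputWatermarks = new long[numInputs];
        Arrays.fill(inputWatermarks, Long.MIN_VALUE); // no input has reported yet
    }

    /**
     * Records a watermark from one input. Returns the watermark to forward
     * downstream if the operator's event time advanced, otherwise null.
     */
    Long onWatermark(int input, long watermark) {
        inputWatermarks[input] = Math.max(inputWatermarks[input], watermark);
        long min = Arrays.stream(inputWatermarks).min().getAsLong();
        if (min > currentEventTime) {
            currentEventTime = min;
            return min;
        }
        return null;
    }

    long currentEventTime() {
        return currentEventTime;
    }

    public static void main(String[] args) {
        MultiInputWatermarkTracker op = new MultiInputWatermarkTracker(2);
        System.out.println(op.onWatermark(0, 10)); // null: input 1 has not reported yet
        System.out.println(op.onWatermark(1, 7));  // 7
        System.out.println(op.onWatermark(0, 12)); // null: still held back by input 1
        System.out.println(op.onWatermark(1, 14)); // 12
    }
}
```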

Late Elements

Certain elements may violate the watermark condition. Even after Watermark(t) has occurred, more elements with timestamp t' <= t may still arrive. In many real-world setups, certain elements can be arbitrarily delayed. That makes it impossible to specify a time by which all elements with a given event timestamp will have arrived. And even if the lateness can be bounded, delaying the watermarks by too much is usually undesirable. It would delay the evaluation of event-time windows too much.

For this reason, a streaming program may explicitly drop late elements, that is, elements that arrive after the watermark has already passed their timestamp.

Window

The two most common patterns in real-time processing are event-driven processing and window processing, and Flink supports both. Common window types include sliding windows and session windows.

- Flink treats batch as a special case of streaming. Flink's underlying engine is a streaming engine, and both stream and batch processing are implemented on top of it. Windows are the bridge from streaming to batch, and Flink provides a well-developed window mechanism.

- A window cuts an unbounded data stream into finite blocks of data. It is the unit on which computation is performed.

- In streaming applications, data arrives continuously, so we cannot wait for all of it before starting processing. We can process each message as it arrives, but sometimes we need aggregations. An example is: how many users clicked on our website in the last minute? In that case we must define a window that collects the data from the last minute, and then compute over the data in that window.

Window Assigners

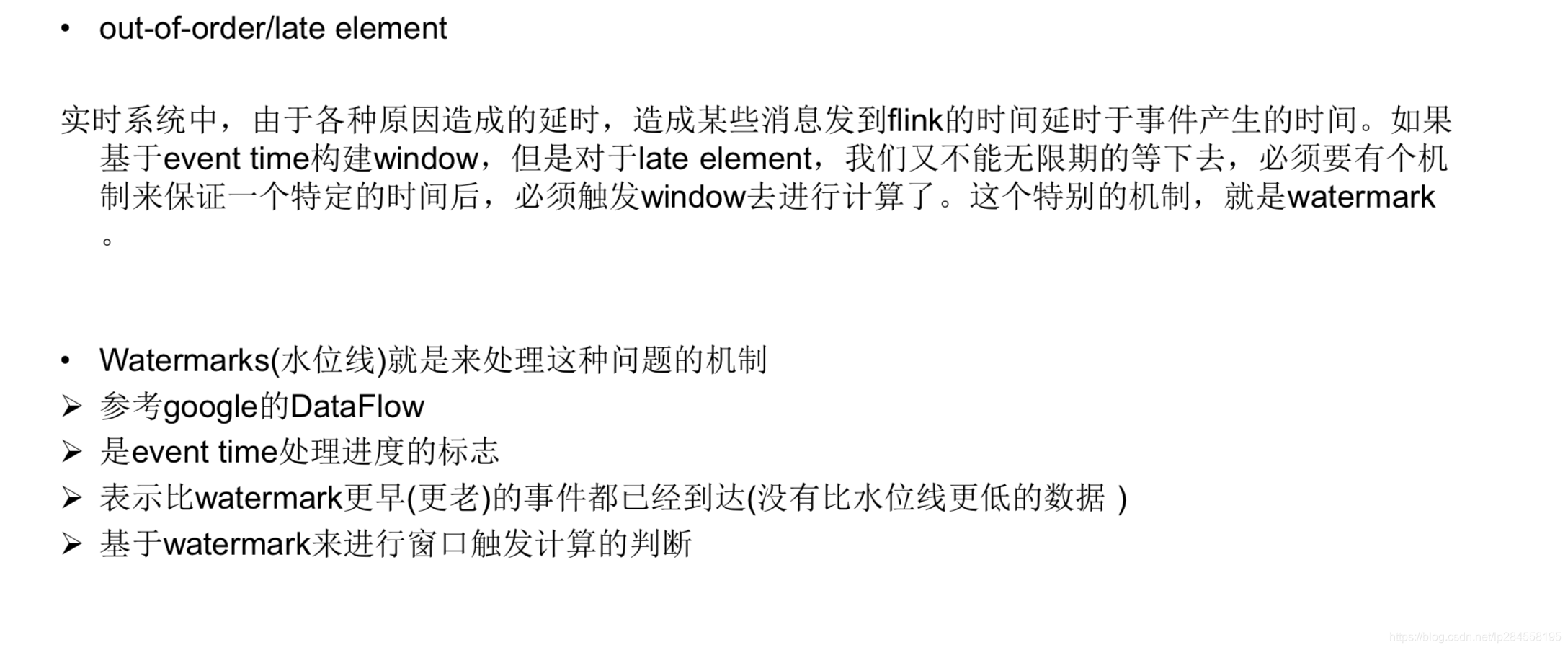

The window API differs slightly between keyed and non-keyed streams, that is, depending on whether keyBy is applied to the stream. As you can see, keyed streams call keyBy(...) first. For non-keyed streams, window(...) becomes windowAll(...). That is the only difference.

The WindowAssigner determines which window(s) each element entering Flink is assigned to. Flink comes with predefined window assigners for the most common use cases: tumbling windows, sliding windows, session windows and global windows. You can also implement a custom window assigner by extending the WindowAssigner class. All built-in window assigners except global windows assign elements to windows based on time. This can be either event time or processing time.

A time-based window has a start timestamp (inclusive) and an end timestamp (exclusive), which together describe the size of the window. In code, Flink uses TimeWindow for time-based windows. It has methods for querying the start and end timestamps. An additional method, maxTimestamp(), returns the largest timestamp allowed in the given window.

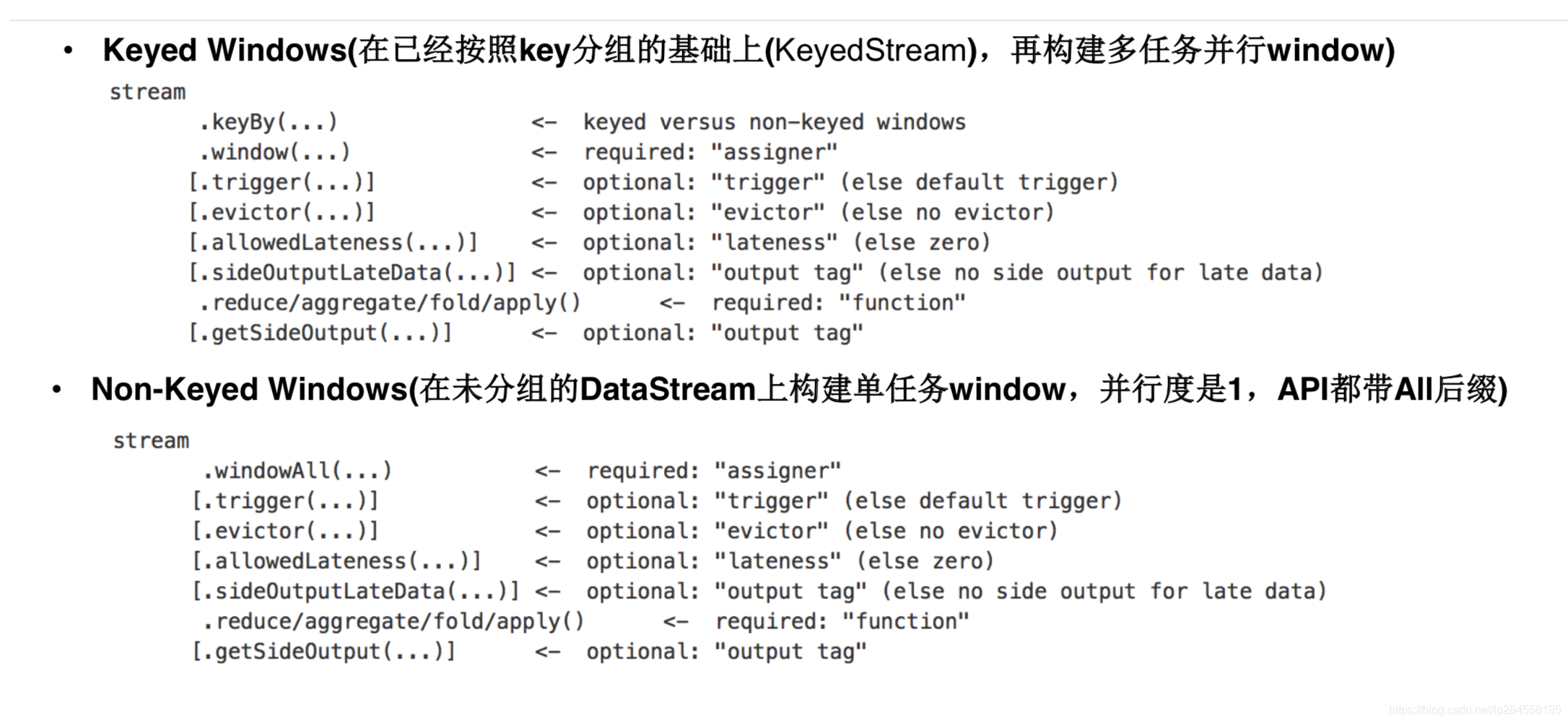
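The following sketch relies only on the semantics just described: inclusive start, exclusive end, and maxTimestamp() = end - 1. It shows a minimal stand-in for a time window. `SimpleTimeWindow` is a hypothetical plain-Java class, not Flink's TimeWindow.

```java
/** Hypothetical stand-in for a time window: start inclusive, end exclusive. */
final class SimpleTimeWindow {
    final long start; // inclusive
    final long end;   // exclusive

    SimpleTimeWindow(long start, long end) {
        this.start = start;
        this.end = end;
    }

    /** Largest timestamp that still belongs to this window. */
    long maxTimestamp() {
        return end - 1;
    }

    /** True if the timestamp lies in [start, end). */
    boolean contains(long timestamp) {
        return timestamp >= start && timestamp < end;
    }

    public static void main(String[] args) {
        SimpleTimeWindow w = new SimpleTimeWindow(0, 5_000);
        System.out.println(w.maxTimestamp());                        // 4999
        System.out.println(w.contains(0) + " " + w.contains(5_000)); // true false
    }
}
```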

Tumbling Windows

A tumbling window assigner assigns each element to a window of a specified size. Tumbling windows have a fixed size and do not overlap. For example, with a tumbling window size of 5 minutes, the current window is evaluated and a new window is started every five minutes, as shown in the figure below.

The following code snippet shows how to use tumbling windows.

```scala
val input: DataStream[T] = ...

// tumbling event-time windows
input
    .keyBy(<key selector>)
    .window(TumblingEventTimeWindows.of(Time.seconds(5)))
    .<windowed transformation>(<window function>)

// tumbling processing-time windows
input
    .keyBy(<key selector>)
    .window(TumblingProcessingTimeWindows.of(Time.seconds(5)))
    .<windowed transformation>(<window function>)

// daily tumbling event-time windows offset by -8 hours.
input
    .keyBy(<key selector>)
    .window(TumblingEventTimeWindows.of(Time.days(1), Time.hours(-8)))
    .<windowed transformation>(<window function>)
```

**Features:** aligned in time, fixed window length, windows do not overlap.

**Use cases:** BI statistics (computing metrics for each time period).

**Alignment:** aligned with the epoch by default (on whole hours, minutes, seconds, and so on). The alignment can be changed with the offset parameter.

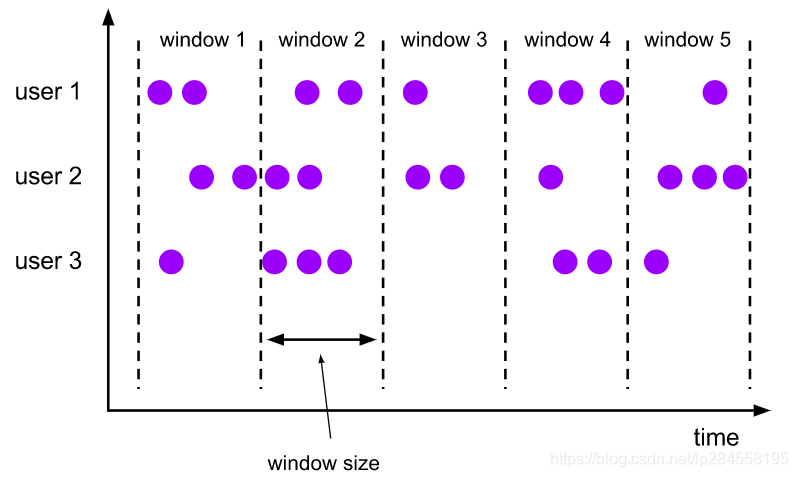
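Epoch alignment and the offset parameter come down to remainder arithmetic. The sketch below is a hypothetical helper, similar in spirit to the window-start calculation Flink performs but not copied from it. It uses Math.floorMod so that negative offsets such as -8 hours behave as expected.

```java
/** Hypothetical sketch of epoch-aligned tumbling window assignment with an offset. */
final class TumblingAssignment {
    /** Start of the window of the given size (and offset) that contains the timestamp. */
    static long windowStart(long timestamp, long size, long offset) {
        // floorMod keeps the result non-negative for negative offsets or timestamps.
        return timestamp - Math.floorMod(timestamp - offset, size);
    }

    public static void main(String[] args) {
        long minute = 60_000L, hour = 60 * minute, day = 24 * hour;
        // 5-minute windows: 00:07:30 falls into [00:05, 00:10)
        System.out.println(windowStart(7 * minute + 30_000, 5 * minute, 0)); // 300000
        // Daily windows with offset -8h start at 16:00 UTC, i.e. midnight in UTC+8
        System.out.println(windowStart(0, day, -8 * hour)); // -28800000
    }
}
```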

Sliding Windows

The sliding window assigner assigns elements to windows of fixed length. As with the tumbling window assigner, the window size parameter configures the size of the windows. An additional window slide parameter controls how often a new sliding window is started. Sliding windows can therefore overlap if the slide is smaller than the window size. In that case, elements are assigned to multiple windows.

For example, you could have windows of size 10 minutes that slide by 5 minutes. Every 5 minutes you then get a window that contains the events that arrived during the last 10 minutes, as shown in the figure below.

The following code snippet shows how to use sliding windows.

```scala
val input: DataStream[T] = ...

// sliding event-time windows
input
    .keyBy(<key selector>)
    .window(SlidingEventTimeWindows.of(Time.seconds(10), Time.seconds(5)))
    .<windowed transformation>(<window function>)

// sliding processing-time windows
input
    .keyBy(<key selector>)
    .window(SlidingProcessingTimeWindows.of(Time.seconds(10), Time.seconds(5)))
    .<windowed transformation>(<window function>)

// sliding processing-time windows offset by -8 hours
input
    .keyBy(<key selector>)
    .window(SlidingProcessingTimeWindows.of(Time.hours(12), Time.hours(1), Time.hours(-8)))
    .<windowed transformation>(<window function>)
```

Time intervals can be specified with Time.milliseconds(x), Time.seconds(x), Time.minutes(x), and so on. As the last example shows, sliding window assigners also take an optional offset parameter that changes the alignment of windows. Without an offset, hourly windows sliding by 30 minutes are aligned with the epoch. You get windows such as 1:00:00.000 - 1:59:59.999, 1:30:00.000 - 2:29:59.999, and so on. To change that, you can provide an offset.

For example, with an offset of 15 minutes you get 1:15:00.000 - 2:14:59.999, 1:45:00.000 - 2:44:59.999, and so on. An important use case for offsets is adjusting windows to time zones other than UTC-0. For example, in China you would specify an offset of Time.hours(-8).

- Window size equal to the slide: this is a tumbling window. Data does not overlap and is not lost.

- Window size greater than the slide: windows overlap, so data is repeated across windows.

- Window size smaller than the slide: some data inevitably falls between windows and is lost, which is usually undesirable.

**Features:** aligned in time, fixed window length, windows overlap.

**Use cases:** monitoring scenarios that compute statistics over a recent period of time. An example is computing an interface's failure rate over the last 5 minutes to decide whether to raise an alarm.

**Alignment:** aligned with the epoch by default (on whole hours, minutes, seconds, and so on). The alignment can be changed with the offset parameter.

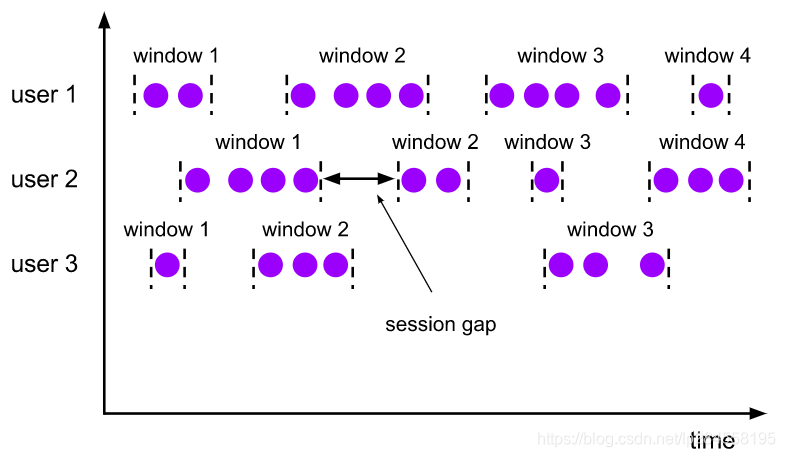
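Assigning an element to sliding windows can be sketched as a loop. It starts from the latest slide-aligned window start at or before the timestamp, then walks backwards while the window still covers the timestamp. `SlidingAssignment` is a hypothetical plain-Java helper, not Flink code. It reproduces the 1-hour/30-minute windows and the 15-minute offset from the example above. It also shows an element that falls into no window when the size is smaller than the slide.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of sliding window assignment; all times in ms. */
final class SlidingAssignment {
    /** Returns {start, end} pairs of every sliding window that contains the timestamp. */
    static List<long[]> assign(long timestamp, long size, long slide, long offset) {
        List<long[]> windows = new ArrayList<>();
        // Latest window start at or before the timestamp, aligned to the slide (and offset).
        long lastStart = timestamp - Math.floorMod(timestamp - offset, slide);
        // Walk back one slide at a time while the window [start, start + size) covers it.
        for (long start = lastStart; start > timestamp - size; start -= slide) {
            windows.add(new long[] {start, start + size});
        }
        return windows;
    }

    /** Formats windows as "[startMin,endMin)" in minutes since the epoch, for readability. */
    static String inMinutes(List<long[]> windows) {
        StringBuilder sb = new StringBuilder();
        for (long[] w : windows) {
            sb.append('[').append(w[0] / 60_000).append(',').append(w[1] / 60_000).append(") ");
        }
        return sb.toString().trim();
    }

    public static void main(String[] args) {
        long min = 60_000L;
        long ts = 105 * min; // 01:45
        System.out.println(inMinutes(assign(ts, 60 * min, 30 * min, 0)));        // [90,150) [60,120)
        System.out.println(inMinutes(assign(ts, 60 * min, 30 * min, 15 * min))); // [105,165) [75,135)
        System.out.println(assign(12 * min, 10 * min, 15 * min, 0).size());      // 0: size < slide
    }
}
```

The number of windows per element is size / slide (2 in the first case). This is also why state grows with the ratio of size to slide.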

Session Windows

The session window assigner groups elements by sessions of activity. Unlike tumbling and sliding windows, session windows do not overlap and have no fixed start and end time. Instead, a session window closes when it receives no elements for a certain period of time, that is, when a gap of inactivity occurs. A session window assigner can be configured with a static session gap. Alternatively, it can use a session gap extractor function that defines how long the period of inactivity is. When this period expires, the current session closes, and subsequent elements are assigned to a new session window.

The following code snippet shows how to use session windows.

```scala
val input: DataStream[T] = ...

// event-time session windows with static gap
input
    .keyBy(<key selector>)
    .window(EventTimeSessionWindows.withGap(Time.minutes(10)))
    .<windowed transformation>(<window function>)

// event-time session windows with dynamic gap
input
    .keyBy(<key selector>)
    .window(EventTimeSessionWindows.withDynamicGap(new SessionWindowTimeGapExtractor[String] {
      override def extract(element: String): Long = {
        // determine and return session gap
      }
    }))
    .<windowed transformation>(<window function>)

// processing-time session windows with static gap
input
    .keyBy(<key selector>)
    .window(ProcessingTimeSessionWindows.withGap(Time.minutes(10)))
    .<windowed transformation>(<window function>)

// processing-time session windows with dynamic gap
input
    .keyBy(<key selector>)
    .window(DynamicProcessingTimeSessionWindows.withDynamicGap(new SessionWindowTimeGapExtractor[String] {
      override def extract(element: String): Long = {
        // determine and return session gap
      }
    }))
    .<windowed transformation>(<window function>)
```

Static gaps can be specified with Time.milliseconds(x), Time.seconds(x), Time.minutes(x), and so on. Dynamic gaps are specified by implementing the SessionWindowTimeGapExtractor interface.

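Note:

Session windows have no fixed start and end, so they are evaluated differently from tumbling and sliding windows. Internally, a session window operator creates a new window for each arriving record. It merges windows together if they are closer to each other than the defined gap. To be mergeable, a session window operator needs a merging Trigger and a merging window function. Examples are ReduceFunction, AggregateFunction, or ProcessWindowFunction (FoldFunction cannot merge).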

**Features:** not aligned, windows do not overlap, no fixed start or end time.

**Use cases:** online user behavior analysis.
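The merging behavior described in the note can be approximated offline. Every element opens a window [ts, ts + gap), and windows that overlap are merged. The hypothetical `SessionMerging` helper below sorts the timestamps first for simplicity. A real session window operator instead merges incrementally as records arrive. This sketch also treats windows that merely touch as separate sessions. That boundary case is a choice of the sketch, not a statement about Flink.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical offline sketch of session windows with a static gap. */
final class SessionMerging {
    /** Each element opens [ts, ts + gap); overlapping windows are merged into one session. */
    static List<long[]> sessions(long[] timestamps, long gap) {
        long[] sorted = timestamps.clone();
        Arrays.sort(sorted);
        List<long[]> merged = new ArrayList<>();
        for (long ts : sorted) {
            long[] window = {ts, ts + gap};
            long[] last = merged.isEmpty() ? null : merged.get(merged.size() - 1);
            if (last != null && window[0] < last[1]) {
                last[1] = Math.max(last[1], window[1]); // overlap: extend the current session
            } else {
                merged.add(window); // gap of inactivity: start a new session
            }
        }
        return merged;
    }

    public static void main(String[] args) {
        for (long[] s : sessions(new long[] {1, 3, 20, 4, 40}, 5)) {
            System.out.println("[" + s[0] + ", " + s[1] + ")"); // [1, 9) then [20, 25) then [40, 45)
        }
    }
}
```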

Global Windows

A global window assigner assigns all elements with the same key to the same single global window. This windowing scheme is only useful if you also specify a custom trigger. Otherwise no computation is ever performed, because the global window has no natural end at which the aggregated elements could be processed.

The following code snippet shows how to use a global window.

```scala
val input: DataStream[T] = ...

input
    .keyBy(<key selector>)
    .window(GlobalWindows.create())
    .<windowed transformation>(<window function>)
```

Note:

A custom trigger must be specified; otherwise a global window is pointless.
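To see why a trigger is required, the sketch below models a single global window for one key together with a count-based trigger. Every N elements, the trigger emits the sum of the buffered elements and clears them. `GlobalWindowCountTrigger` is a hypothetical plain-Java class, not Flink code. Flink's count windows are built on GlobalWindows with a count trigger in a similar way.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical sketch: one global window (for a single key) never ends by itself,
 * so a count-based trigger decides when to emit a result and purge the contents.
 */
final class GlobalWindowCountTrigger {
    private final int maxCount;
    private final List<Integer> buffer = new ArrayList<>(); // contents of the global window
    final List<Integer> emitted = new ArrayList<>();        // results produced by firings

    GlobalWindowCountTrigger(int maxCount) {
        this.maxCount = maxCount;
    }

    void onElement(int value) {
        buffer.add(value);
        if (buffer.size() >= maxCount) {
            // The trigger fires: apply the window function (here a sum) ...
            emitted.add(buffer.stream().mapToInt(Integer::intValue).sum());
            // ... and purge the window so the next firing starts fresh.
            buffer.clear();
        }
    }

    public static void main(String[] args) {
        GlobalWindowCountTrigger w = new GlobalWindowCountTrigger(3);
        for (int v = 1; v <= 7; v++) {
            w.onElement(v);
        }
        System.out.println(w.emitted); // [6, 15]; element 7 is still buffered
    }
}
```

Without the `if` block, nothing would ever be emitted. That is exactly the situation of a global window with no custom trigger.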

Window Life Cycle

In short, a window is created as soon as the first element that belongs to it arrives. It is completely removed when time (event or processing time) passes the window's end timestamp plus the user-specified allowed lateness.

As a simple example, consider a tumbling window of 5 minutes with an allowed lateness of 1 minute. When the first element with a timestamp between 12:00 and 12:05 arrives, Flink creates a window for the interval from 12:00 to 12:05. It removes that window when the watermark passes 12:06.
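The life-cycle rule above can be written as a small calculation. It assumes the window is removed once the watermark reaches its maximum timestamp (end - 1) plus the allowed lateness. `WindowCleanup` is a hypothetical helper, not Flink code. Times are milliseconds since midnight only to keep the 12:05 example readable.

```java
/** Hypothetical sketch of when an event-time window's state can be removed. */
final class WindowCleanup {
    /** Cleanup time = the window's max timestamp (end - 1) + allowed lateness. */
    static long cleanupTime(long windowEnd, long allowedLateness) {
        return (windowEnd - 1) + allowedLateness;
    }

    /** The window and its state are removed once the watermark reaches the cleanup time. */
    static boolean isRemoved(long windowEnd, long allowedLateness, long watermark) {
        return watermark >= cleanupTime(windowEnd, allowedLateness);
    }

    public static void main(String[] args) {
        long minute = 60_000L;
        long end = 12 * 60 * minute + 5 * minute; // 12:05, exclusive end of [12:00, 12:05)
        long lateness = minute;                   // 1 minute allowed lateness
        System.out.println(isRemoved(end, lateness, end));              // false
        System.out.println(isRemoved(end, lateness, end + minute - 1)); // true (12:05:59.999)
    }
}
```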

In addition, each window has a trigger and one or more processing functions attached to it. The trigger determines when, between the window's creation and its removal, the window's computation is fired.

Triggers

Evictors

In addition to the WindowAssigner and the Trigger, Flink's windowing model also lets you specify an optional Evictor, using the evictor(...) method. After the trigger fires, the evictor can remove elements from the window before and/or after the window function is applied. To do so, the Evictor interface has two methods:

```java
/**
 * Optionally evicts elements. Called before windowing function.
 *
 * @param elements The elements currently in the pane.
 * @param size The current number of elements in the pane.
 * @param window The {@link Window}
 * @param evictorContext The context for the Evictor
 */
void evictBefore(Iterable<TimestampedValue<T>> elements, int size, W window, EvictorContext evictorContext);

/**
 * Optionally evicts elements. Called after windowing function.
 *
 * @param elements The elements currently in the pane.
 * @param size The current number of elements in the pane.
 * @param window The {@link Window}
 * @param evictorContext The context for the Evictor
 */
void evictAfter(Iterable<TimestampedValue<T>> elements, int size, W window, EvictorContext evictorContext);
```

evictBefore() contains the eviction logic applied before the window function, and evictAfter() contains the logic applied after it. Elements evicted before the window function is applied are not processed by it.

Flink comes with three pre-implemented evictors:

- CountEvictor: keeps up to a user-specified number of elements in the window and discards the rest from the beginning of the window buffer.

- DeltaEvictor: takes a DeltaFunction and a threshold. It computes the delta between the last element in the window buffer and each of the remaining elements, and removes those whose delta is greater than or equal to the threshold.

- TimeEvictor: takes an interval in milliseconds as an argument. For a given window, it finds the maximum timestamp max_ts among its elements. It then removes all elements with timestamps smaller than max_ts - interval.

By default, all pre-implemented evictors apply their logic before the window function.
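The three eviction policies can be sketched over a plain list of (timestamp, value) elements. `EvictorSketches` and its methods are hypothetical, not Flink's Evictor implementations. The DeltaEvictor variant assumes an absolute-difference delta function. The TimeEvictor variant follows the description above: it removes timestamps smaller than max_ts - interval.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketches of the three evictor policies. */
final class EvictorSketches {
    static final class Element {
        final long timestamp;
        final double value;

        Element(long timestamp, double value) {
            this.timestamp = timestamp;
            this.value = value;
        }
    }

    /** CountEvictor-like: keep at most maxCount elements, dropping from the buffer start. */
    static List<Element> countEvict(List<Element> buffer, int maxCount) {
        int drop = Math.max(0, buffer.size() - maxCount);
        return new ArrayList<>(buffer.subList(drop, buffer.size()));
    }

    /** DeltaEvictor-like (assumed delta = |value - last.value|): drop if delta >= threshold. */
    static List<Element> deltaEvict(List<Element> buffer, double threshold) {
        List<Element> kept = new ArrayList<>();
        if (buffer.isEmpty()) return kept;
        double last = buffer.get(buffer.size() - 1).value;
        for (Element e : buffer) {
            if (Math.abs(e.value - last) < threshold) kept.add(e);
        }
        return kept;
    }

    /** TimeEvictor-like: drop elements with timestamp < (max timestamp - interval). */
    static List<Element> timeEvict(List<Element> buffer, long interval) {
        long maxTs = Long.MIN_VALUE;
        for (Element e : buffer) maxTs = Math.max(maxTs, e.timestamp);
        List<Element> kept = new ArrayList<>();
        for (Element e : buffer) {
            if (e.timestamp >= maxTs - interval) kept.add(e);
        }
        return kept;
    }

    /** Extracts the timestamps, for printing and checking. */
    static List<Long> timestamps(List<Element> elements) {
        List<Long> out = new ArrayList<>();
        for (Element e : elements) out.add(e.timestamp);
        return out;
    }

    public static void main(String[] args) {
        List<Element> buffer = List.of(new Element(1, 1.0), new Element(5, 2.0),
                new Element(12, 10.0), new Element(20, 11.0));
        System.out.println(timestamps(countEvict(buffer, 2)));   // [12, 20]
        System.out.println(timestamps(deltaEvict(buffer, 9.5))); // [5, 12, 20]
        System.out.println(timestamps(timeEvict(buffer, 10)));   // [12, 20]
    }
}
```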

Note:

- Specifying an evictor prevents any pre-aggregation, because all elements of a window have to be passed to the evictor before the computation is applied.

- Flink provides no guarantees about the order of elements within a window. Although an evictor may remove elements from the beginning of the window, these are not necessarily the ones that arrived first.

Allowed Lateness

With event-time windowing, elements may arrive late. This means the watermark Flink uses to track event-time progress has already passed the end timestamp of the window the element belongs to. By default, late elements are dropped once the watermark is past the end of the window.

Setting the allowed lateness

Flink lets you specify a maximum allowed lateness for window operators. Allowed lateness is how late elements can be before they are dropped; its default value is 0. Some elements arrive after the watermark has passed the end of the window, but before it passes the end of the window plus the allowed lateness. These elements are still added to the window. Depending on the trigger used, such a late but not dropped element may cause the window to fire again. This is the case for the EventTimeTrigger.

To make this work, Flink keeps the state of a window until its allowed lateness expires. At that point, Flink removes the window and deletes its state, as described in the Window Life Cycle section.

By default, the allowed lateness is 0. In other words, elements that arrive behind the watermark are dropped.
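Taken together, these rules mean an element's fate depends on where the watermark is relative to the element's window. The sketch below is a hypothetical classifier, not Flink API. It assumes the window fires when the watermark reaches end - 1, and is removed when the watermark reaches end - 1 plus the allowed lateness.

```java
/** Hypothetical sketch of how an element is treated relative to its event-time window. */
final class LatenessClassifier {
    enum Outcome { ON_TIME, LATE_ACCEPTED, DROPPED }

    /**
     * windowEnd is exclusive. The window fires once the watermark reaches end - 1,
     * and its state is kept until the watermark reaches end - 1 + allowedLateness.
     */
    static Outcome classify(long windowEnd, long allowedLateness, long currentWatermark) {
        long maxTimestamp = windowEnd - 1;
        if (currentWatermark < maxTimestamp) {
            return Outcome.ON_TIME;        // window has not fired yet
        }
        if (currentWatermark < maxTimestamp + allowedLateness) {
            return Outcome.LATE_ACCEPTED;  // added to the window, may cause a late firing
        }
        return Outcome.DROPPED;            // discarded (or sent to a late-data side output)
    }

    public static void main(String[] args) {
        System.out.println(classify(5_000, 1_000, 4_000)); // ON_TIME
        System.out.println(classify(5_000, 1_000, 5_500)); // LATE_ACCEPTED
        System.out.println(classify(5_000, 0, 4_999));     // DROPPED
    }
}
```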

You can specify an allowed lateness like this:

```scala
val input: DataStream[T] = ...

input
    .keyBy(<key selector>)
    .window(<window assigner>)
    .allowedLateness(<time>)
    .<windowed transformation>(<window function>)
```

When using the GlobalWindows window assigner, no data is ever considered late, because the end timestamp of the global window is Long.MAX_VALUE.

Getting late data as a side output

With Flink's side output feature, you can get a stream of the data that was discarded as late. First, call sideOutputLateData(OutputTag) on the windowed stream to request the late data. Then get the side-output stream from the result of the windowed operation:

```scala
val lateOutputTag = OutputTag[T]("late-data")

val input: DataStream[T] = ...

val result = input
    .keyBy(<key selector>)
    .window(<window assigner>)
    .allowedLateness(<time>)
    .sideOutputLateData(lateOutputTag)
    .<windowed transformation>(<window function>)

val lateStream = result.getSideOutput(lateOutputTag)
```

With an allowed lateness greater than 0, the window and its contents are kept after the watermark passes the end of the window. A late but not dropped element that arrives in this period can trigger another firing of the window. These firings are called late firings, because late events trigger them. The main firing, by contrast, is the first firing of the window. For session windows, late firings can also cause windows to merge, because a late element may "bridge" the gap between two existing, unmerged windows.

Treat the elements emitted by a late firing as updated results of an earlier computation. Your data stream will therefore contain multiple results for the same computation. Depending on your application, you need to take these duplicate results into account or deduplicate them.
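One simple way to handle late firings downstream is to treat every window result as an upsert keyed by (key, window). A later firing then overwrites the earlier result. `LatestResultPerWindow` is a hypothetical sink written in plain Java. Putting the window end into the key assumes the window function included that information in its results, because window results do not carry it by themselves.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical downstream sink that keeps only the latest result per (key, window). */
final class LatestResultPerWindow {
    private final Map<String, Long> latest = new LinkedHashMap<>();

    /** A late firing for the same key and window simply overwrites the earlier result. */
    void onResult(String key, long windowEnd, long value) {
        latest.put(key + "@" + windowEnd, value);
    }

    Map<String, Long> snapshot() {
        return latest;
    }

    public static void main(String[] args) {
        LatestResultPerWindow sink = new LatestResultPerWindow();
        sink.onResult("user-1", 5_000, 3); // main firing
        sink.onResult("user-1", 5_000, 4); // late firing updates the same window
        sink.onResult("user-2", 5_000, 1);
        System.out.println(sink.snapshot()); // {user-1@5000=4, user-2@5000=1}
    }
}
```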

Working with window results

The result of a windowed operation is again a DataStream. The result elements retain no information about the windowed operation. To keep meta-information about the window, you must encode it manually in the result elements in your ProcessWindowFunction. The only relevant information set on the result elements is the element timestamp. It is set to the maximum allowed timestamp of the processed window, which is end timestamp - 1, because the window end timestamp is exclusive. This holds for both event-time and processing-time windows. After a windowed operation, elements always have a timestamp, which can be either an event-time or a processing-time timestamp. For processing-time windows this has no special implications. For event-time windows, however, it enables consecutive windowed operations with the same window sizes, together with the way watermarks interact with windows. We cover this after looking at how watermarks interact with windows.

Interaction of watermarks and windows

When a watermark arrives at a window operator, it triggers two things:

- the watermark triggers computation of all windows whose maximum timestamp (end timestamp - 1) is less than or equal to the new watermark

- the watermark is forwarded (as is) to downstream operations

Intuitively, a watermark "flushes out" any windows that downstream operations would consider late once they receive that watermark.
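The first effect can be sketched as a window operator that keeps pending windows ordered by their maximum timestamp. On each watermark, it fires every window whose maximum timestamp is at or below the watermark. `WatermarkFiring` is a hypothetical class for a single key, not Flink's window operator.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

/** Hypothetical sketch of a single-key window operator reacting to a watermark. */
final class WatermarkFiring {
    // Pending windows keyed by their max timestamp (end - 1); the value is the window end.
    private final TreeMap<Long, Long> pending = new TreeMap<>();

    void addWindow(long windowEnd) {
        pending.put(windowEnd - 1, windowEnd);
    }

    /** Fires and removes all windows whose max timestamp is <= watermark; returns their ends. */
    List<Long> onWatermark(long watermark) {
        List<Long> fired = new ArrayList<>(pending.headMap(watermark, true).values());
        pending.headMap(watermark, true).clear(); // clearing the view removes them from the map
        // The watermark itself would then be forwarded downstream unchanged.
        return fired;
    }

    public static void main(String[] args) {
        WatermarkFiring op = new WatermarkFiring();
        op.addWindow(5_000);
        op.addWindow(10_000);
        op.addWindow(15_000);
        System.out.println(op.onWatermark(4_998)); // []
        System.out.println(op.onWatermark(9_999)); // [5000, 10000]
    }
}
```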

Consecutive windowed operations

As mentioned before, two things make it possible to chain consecutive windowed operations: the way the timestamp of a windowed result is computed, and the way watermarks interact with windows. This is useful when two consecutive windowed operations use different keys. Elements from the same upstream window will still end up in the same downstream window. Consider this example:

```scala
val input: DataStream[Int] = ...

val resultsPerKey = input
    .keyBy(<key selector>)
    .window(TumblingEventTimeWindows.of(Time.seconds(5)))
    .reduce(new Summer())

val globalResults = resultsPerKey
    .windowAll(TumblingEventTimeWindows.of(Time.seconds(5)))
    .process(new TopKWindowFunction())
```

In this example, the results for time window [0, 5) from the first operation also end up in time window [0, 5) in the subsequent windowed operation. This makes it possible to compute a sum per key in the first operation, and then the top-k elements within the same window in the second.
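The two operations line up because of the end - 1 result timestamp. The hypothetical helper below reuses epoch-aligned tumbling arithmetic, with times in ms. It shows that a result stamped 4999 falls back into [0, 5000). A result stamped with the exclusive end would spill into the next window.

```java
/** Hypothetical sketch: window results keep landing in the same window downstream. */
final class ConsecutiveWindows {
    /** Epoch-aligned tumbling window start for a timestamp. */
    static long tumblingStart(long timestamp, long size) {
        return timestamp - Math.floorMod(timestamp, size);
    }

    public static void main(String[] args) {
        long size = 5_000;
        long upstreamEnd = 5_000;               // upstream window [0, 5000)
        long resultTimestamp = upstreamEnd - 1; // results are stamped end - 1 = 4999
        System.out.println(tumblingStart(resultTimestamp, size)); // 0: same window downstream
        // A result stamped with the exclusive end would fall into the next window instead.
        System.out.println(tumblingStart(upstreamEnd, size));     // 5000
    }
}
```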

Useful state size considerations

Windows can be defined over long periods of time (such as days, weeks, or months) and can therefore accumulate very large state. Keep a few rules in mind when estimating the storage requirements of a windowing computation:

- Flink creates one copy of each element per window it belongs to. Tumbling windows therefore keep one copy of each element, since an element belongs to exactly one window unless it is dropped as late. Sliding windows, in contrast, create several copies of each element. A sliding window with a size of 1 day and a slide of 1 second is therefore probably not a good idea.

- ReduceFunction, AggregateFunction, and FoldFunction can significantly reduce storage requirements, because they eagerly aggregate elements and store only one value per window. Using only a ProcessWindowFunction, in contrast, requires accumulating all elements.

- Using an Evictor prevents any pre-aggregation, because all elements of a window have to pass through the evictor before the computation is applied.
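A quick estimate shows why a 1-day window sliding by 1 second is costly when elements are buffered. `WindowStateEstimate` is a hypothetical helper. It assumes the window size is a multiple of the slide, and that every element is stored once per window it belongs to.

```java
/** Hypothetical back-of-the-envelope estimate of buffered sliding-window state. */
final class WindowStateEstimate {
    /** Windows (and therefore stored copies) per element; assumes size is a multiple of slide. */
    static long copiesPerElement(long sizeMs, long slideMs) {
        return sizeMs / slideMs;
    }

    /** Total stored copies if every element is buffered (e.g. only a ProcessWindowFunction). */
    static long bufferedCopies(long elements, long sizeMs, long slideMs) {
        return elements * copiesPerElement(sizeMs, slideMs);
    }

    public static void main(String[] args) {
        long second = 1_000L, day = 86_400_000L;
        System.out.println(copiesPerElement(day, second));           // 86400
        System.out.println(copiesPerElement(day, day));              // 1 (tumbling)
        System.out.println(bufferedCopies(1_000_000L, day, second)); // 86400000000
    }
}
```

With eager aggregation (ReduceFunction or AggregateFunction), the state per key is instead roughly one accumulator per open window. That is about 86,400 accumulators here, independent of how many elements arrive.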