introduction

Step, iteration, epoch, batchsize, and learning rate are all terms used in model training. They are hyperparameters that are set when a model is trained.

sample

In machine learning, a sample is a single data item within a complete data set. Examples are one middle school student's table of results for all subjects, or one picture of a digit in a handwritten digit data set.

What is a positive sample?

A positive sample is a sample that belongs to the category you want the model to identify correctly. For example, suppose the task is to decide whether an avatar photo shows a man. In the training data, photos of men are then the positive samples and photos of women are the negative samples.
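As a minimal sketch of the labelling described above, positive samples can be encoded as 1 and negative samples as 0. The file names and the `gender` field are hypothetical and exist only for illustration.

```python
# Hypothetical training data: (file name, gender shown in the photo).
photos = [
    ("avatar_01.jpg", "male"),
    ("avatar_02.jpg", "female"),
    ("avatar_03.jpg", "male"),
]

# The target category is "male", so male photos are positive samples (1)
# and female photos are negative samples (0).
labels = [1 if gender == "male" else 0 for _, gender in photos]
print(labels)
```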

step, also known as iteration

A step, or iteration, is one update of the model: in each iteration the parameters of the model are updated once.
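To make "one iteration updates the parameters once" concrete, here is a small sketch of a single gradient descent step. It assumes a one-variable linear model y ≈ w*x + b with a squared-error loss; the function name `sgd_step` and the learning rate are illustrative choices, not something taken from the text.

```python
def sgd_step(w, b, x, y, lr):
    """Perform one step (iteration): a single update of w and b."""
    error = (w * x + b) - y
    # Gradients of the loss 0.5 * error**2 with respect to w and b.
    grad_w = error * x
    grad_b = error
    return w - lr * grad_w, b - lr * grad_b

w, b = 0.0, 0.0
w, b = sgd_step(w, b, x=2.0, y=4.0, lr=0.1)
print(w, b)  # the parameters have moved away from their initial values
```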

epoch

An epoch is one "round" of training, i.e. one pass in which all samples in the training data set are fed into the model. The number of epochs is the number of such rounds.

Why do we need more than one epoch?

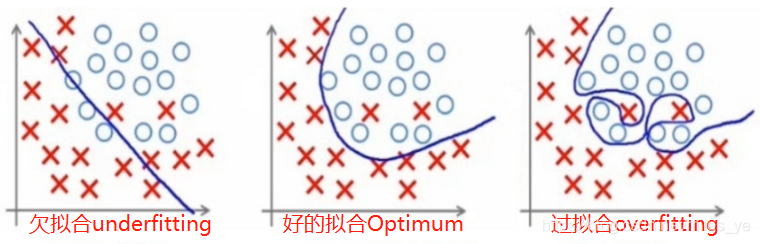

A model needs to be trained on the training set multiple times, so that gradient descent can move the model parameters toward the optimum. Too few epochs lead to underfitting; too many epochs lead to overfitting.

How many epochs are appropriate?

There is no single right answer to this question. The appropriate number of epochs depends on the application and on the training set. Choosing it is a matter of hyperparameter tuning.

What is the relationship between two consecutive epochs?

The model parameters at the end of one epoch (e.g. the weights w and biases b of a neural network) are passed on to the next epoch.

Note: After each epoch, the training data set should be shuffled before the next round of training begins.
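The epoch loop can be sketched as follows. This is an illustrative toy, assuming a one-variable linear model trained one sample at a time on noise-free data; the names `train` and `mse`, the learning rate, and the data are all assumptions made for the example. The parameters `w` and `b` are created once and carried from epoch to epoch, and the data is reshuffled at the start of every epoch.

```python
import random

def train(data, epochs, lr=0.05, seed=0):
    rng = random.Random(seed)
    data = list(data)
    w, b = 0.0, 0.0  # created once, then carried across all epochs
    for _ in range(epochs):
        rng.shuffle(data)  # new sample order for every epoch
        for x, y in data:  # one epoch = one pass over all samples
            error = (w * x + b) - y
            w -= lr * error * x
            b -= lr * error
    return w, b

def mse(data, w, b):
    """Mean squared error of the model on the data set."""
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)

# Toy data generated from y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in [0.0, 1.0, 2.0, 3.0]]
w1, b1 = train(data, epochs=1)
w200, b200 = train(data, epochs=200)
print(mse(data, w1, b1), mse(data, w200, b200))
```

On this noise-free toy problem, more epochs simply bring the fit closer to the generating line. It does not demonstrate overfitting, which requires noisy data and a held-out validation set to observe.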

batchsize

batch

A batch is a subset of samples drawn from the training data set and used for one training step of the model, i.e. for one evaluation of the loss function.

Why batch?

Batches are used to find the best balance between memory capacity and memory efficiency.

batchsize

The batch size is the number of samples used for one training step of the model, that is, the number of samples in one batch.
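As a rough illustration of how batchsize relates to the number of steps, the sketch below splits a data set into batches. The helper `make_batches` is a hypothetical name; the last batch is kept even when it is smaller than batchsize, which is one common convention but not the only one.

```python
import math

def make_batches(samples, batchsize):
    """Split samples into consecutive batches of at most batchsize items."""
    return [samples[i:i + batchsize] for i in range(0, len(samples), batchsize)]

samples = list(range(10))  # 10 samples in the training set
batchsize = 4
batches = make_batches(samples, batchsize)

# With the last partial batch kept, one epoch takes ceil(N / batchsize) steps.
steps_per_epoch = math.ceil(len(samples) / batchsize)
print(batches)
print(steps_per_epoch)
```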

How should batchsize be set?

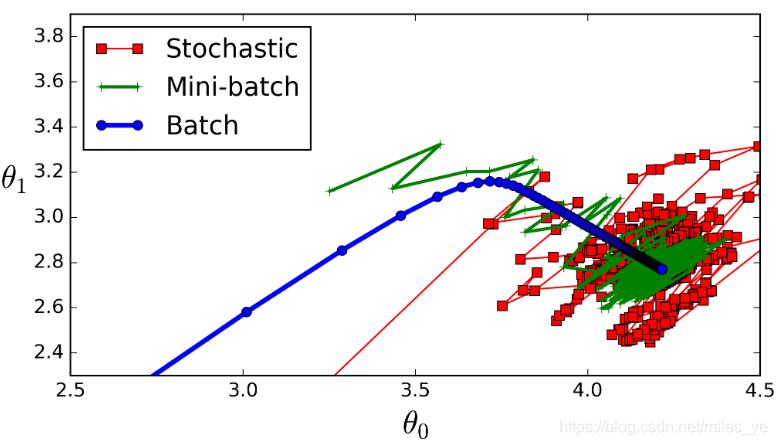

- Stochastic (red in the figure) means batchsize = 1: the model is trained on one sample at a time, and each sample produces one gradient descent update. Training a model this way is called stochastic gradient descent (SGD). Each update is fast, but convergence is poor: as the figure shows, the path converges slowly and often has difficulty converging at all.

- Mini-batch (green in the figure) is what batchsize usually refers to. Its value lies between Stochastic (red in the figure) and Batch (blue in the figure). batchsize samples are fed into the model at a time, and the loss is averaged over all samples in the batch. Training a model this way is called mini-batch gradient descent.

- Batch (blue in the figure), i.e. full batch, means batchsize equals the number of samples in the training set, so every update uses the entire training data set. Training a model this way is called batch gradient descent (BGD). When the data set is small, the full batch represents the overall sample population well, so gradient descent moves more accurately toward the extremum. However, the computation is heavy and slow, and online learning is not supported. When the data set is large, this approach becomes ineffective or cannot be used at all.
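The three modes above differ only in the value of batchsize, which the following sketch tries to show. It assumes a one-variable linear model with a squared-error loss averaged over each batch; the function name `gradient_descent`, the learning rate, and the toy data are illustrative assumptions.

```python
import random

def gradient_descent(data, batchsize, epochs, lr=0.05, seed=0):
    rng = random.Random(seed)
    data = list(data)
    w, b, updates = 0.0, 0.0, 0
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batchsize):
            batch = data[start:start + batchsize]
            # Average the gradient over all samples in the batch.
            grad_w = sum(((w * x + b) - y) * x for x, y in batch) / len(batch)
            grad_b = sum((w * x + b) - y for x, y in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
            updates += 1  # one batch = one parameter update
    return w, b, updates

# Toy data generated from y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in [0.0, 1.0, 2.0, 3.0]]
modes = [("stochastic", 1), ("mini-batch", 2), ("full batch", len(data))]
for name, bs in modes:
    w, b, updates = gradient_descent(data, batchsize=bs, epochs=10)
    print(name, "batchsize =", bs, "updates =", updates)
```

For the same number of epochs, a smaller batchsize yields more parameter updates, each computed from fewer samples, while the full batch yields exactly one update per epoch.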