Problem Description:

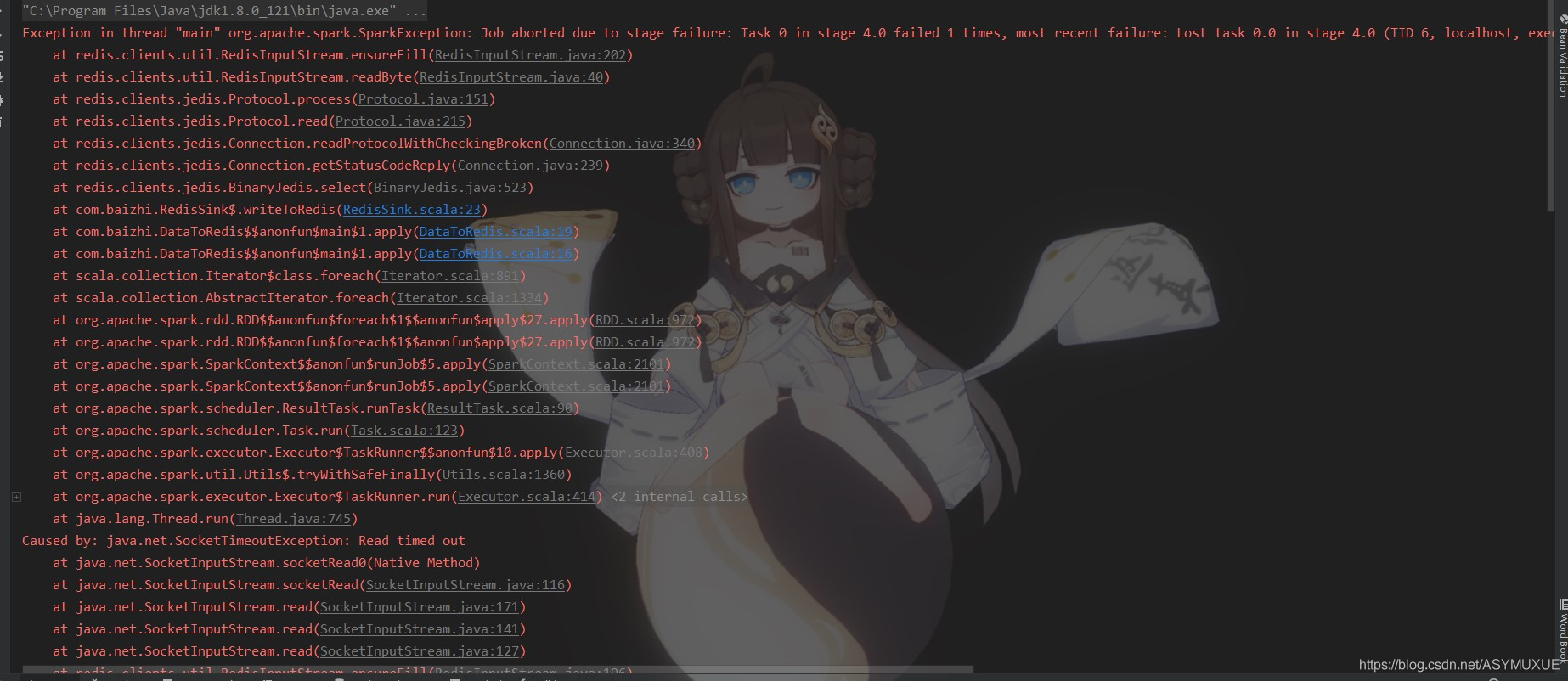

When writing the results processed by Spark to Redis, the following exception is thrown:

```
Exception in thread "main" org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 4.0 failed 1 times, most recent failure: Lost task 0.0 in stage 4.0 (TID 6, localhost, executor driver): redis.clients.jedis.exceptions.JedisConnectionException: java.net.SocketTimeoutException: Read timed out:
```

Cause of the problem:

After further testing, we found the cause. The Redis connection was created directly as a field of the singleton object `RedisSink`. A Scala `object` is a singleton, so every task thread running in the same JVM shared that one connection. A single Jedis connection is not thread-safe and does not support concurrent use, so having multiple threads use it at the same time cannot work.

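Solution:

Give each thread its own connection: create a connection pool once, and borrow a connection from it on every write instead of sharing one connection.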
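The following sketch shows why borrowing a connection per call is safe. It uses only the standard library and runs without Spark or Redis. `FakeConnection`, `SimplePool`, `PooledSink` and `PoolDemo` are hypothetical stand-ins, not how Jedis or `JedisPool` are implemented:

- the fake connection counts any overlapping use by two threads;
- the pool hands each connection to one thread at a time;
- a fixed thread pool plays the role of Spark's task threads.

```scala
import java.util.concurrent.{ArrayBlockingQueue, Executors, TimeUnit}
import java.util.concurrent.atomic.{AtomicBoolean, AtomicInteger}
import scala.collection.concurrent.TrieMap

// Hypothetical stand-in for a Jedis connection. Like Jedis, it must not be
// used by two threads at once; any overlapping call is counted as a violation.
final class FakeConnection(violations: AtomicInteger) {
  private val inUse = new AtomicBoolean(false)

  def set(server: TrieMap[String, String], key: String, value: String): Unit = {
    if (!inUse.compareAndSet(false, true)) violations.incrementAndGet()
    try {
      Thread.sleep(1) // widen the window in which an overlapping caller would be caught
      server.put(key, value)
    } finally inUse.set(false)
  }
}

// Hypothetical stand-in for JedisPool: a fixed set of connections, each lent to one caller at a time.
final class SimplePool(size: Int, violations: AtomicInteger) {
  private val idle = new ArrayBlockingQueue[FakeConnection](size)
  (1 to size).foreach(_ => idle.put(new FakeConnection(violations)))

  def borrow(): FakeConnection = idle.take()          // blocks while every connection is busy
  def giveBack(conn: FakeConnection): Unit = idle.put(conn)
}

// Same shape as RedisSink.writeToRedis: borrow per call, always give the connection back.
object PooledSink {
  val violations = new AtomicInteger(0)
  val server = TrieMap.empty[String, String]           // plays the role of the Redis server
  private val pool = new SimplePool(6, violations)      // 6 connections, like setMaxTotal(6)

  def write(key: String, value: String): Unit = {
    val conn = pool.borrow()
    try conn.set(server, key, value)
    finally pool.giveBack(conn)
  }
}

object PoolDemo {
  def main(args: Array[String]): Unit = {
    val executor = Executors.newFixedThreadPool(16)    // more threads than connections
    (1 to 200).foreach { i =>
      executor.submit(new Runnable {
        def run(): Unit = PooledSink.write(s"k$i", i.toString)
      })
    }
    executor.shutdown()
    executor.awaitTermination(30, TimeUnit.SECONDS)
    println(s"keys written: ${PooledSink.server.size}, concurrent-use violations: ${PooledSink.violations.get}")
  }
}
```

Running `PoolDemo` should report 200 keys and zero violations, even though 16 threads compete for 6 connections. If `write` instead reused a single connection stored as a field of the object, as in the cause described above, overlapping calls would show up as violations.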

//定义redis写入的单例类。

package com.baizhi

import redis.clients.jedis.{Jedis, JedisPool, JedisPoolConfig}

object RedisSink {

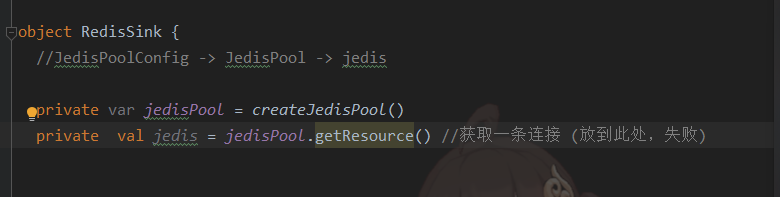

//JedisPoolConfig -> JedisPool -> jedis

private var jedisPool = createJedisPool()

/* private val jedis = jedisPool.getResource() *///获取的是同一条连接 (放到此处,失败)

def createJedisPool():JedisPool={

//连接池相关参数的配置

val poolConf = new JedisPoolConfig

poolConf.setMaxTotal(6) //设置最大连接数

//创建连接池对象

val pool =new JedisPool(poolConf,"hbase")

return pool

}

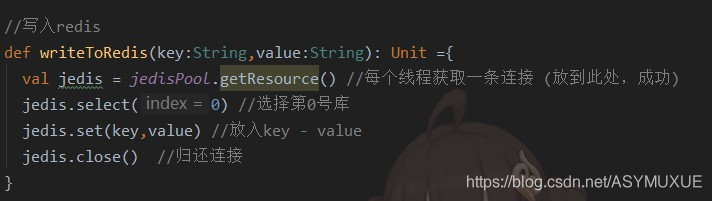

//写入redis

def writeToRedis(key:String,value:String): Unit ={

val jedis = jedisPool.getResource() //获取一条连接 (放到此处,成功)

jedis.select(0) //选择第0号库

jedis.set(key,value) //放入key - value

jedis.close() //归还连接

}

//监控JVM退出,如果JVM退出系统回调该⽅法

Runtime.getRuntime.addShutdownHook(new Thread(new Runnable {

override def run(): Unit = {

println("连接池资源回收启动")

jedisPool.close()

}

}))

}

Test:

```scala
package com.baizhi

import org.apache.spark.{SparkConf, SparkContext}

object DataToRedis {

  def main(args: Array[String]): Unit = {
    // Create the SparkContext
    val conf = new SparkConf()
      .setMaster("local[*]")
      .setAppName("DataToRedis")
    val sc = new SparkContext(conf)

    // Create the RDD and apply the transformations (word count)
    sc.textFile("hdfs://hbase:9000/zyl.log")
      .flatMap(_.split("\\s+"))
      .map((_, 1))
      .reduceByKey(_ + _)
      .sortBy(_._2, true)
      .foreach(vs => {
        val key = vs._1                    // the element's key (the word)
        val value = vs._2.toString         // the element's value (its count)
        RedisSink.writeToRedis(key, value) // write to Redis
      })

    // Stop the SparkContext
    sc.stop()
  }
}
```