Spark和Hive集成(MySql)

一、 编辑hive安装目录下conf目录下的hive-site.xml

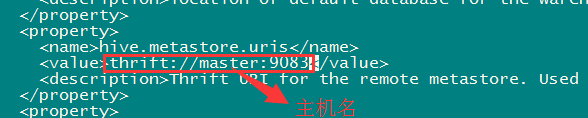

更改:hive.metastore.uris

<property>

<name>hive.metastore.uris</name>

<value>thrift://master:9083</value>

<description>Thrift uri for the remote metastore. Used bymetastore client to connect to remote metastore.</description>

</property>

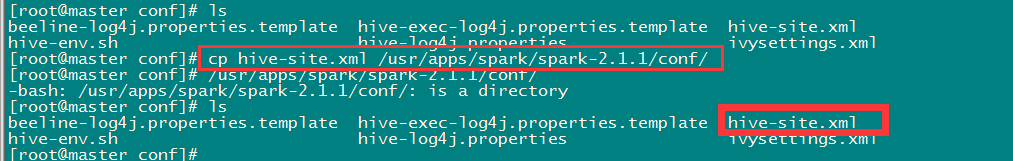

二、 将hive-site.xml拷贝到spark安装目录的conf目录下

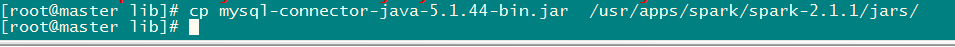

三、 将hive安装目录lib目录下的mysql驱动包拷贝到spark安装目录的jars目录下

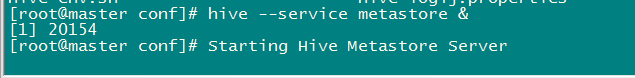

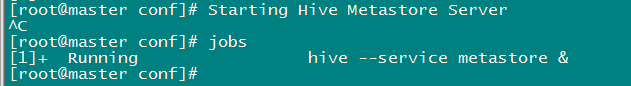

四、 启动元数据metastore

命令:hive --service metastore &

五、 查看元数据metastore

命令:jobs

六、 关闭元数据

命令:kill %1

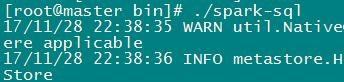

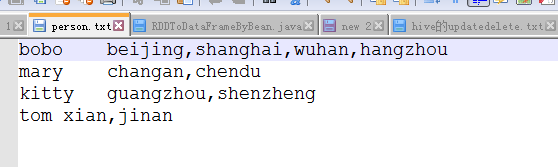

七、 启动spark-sql

启动成功。

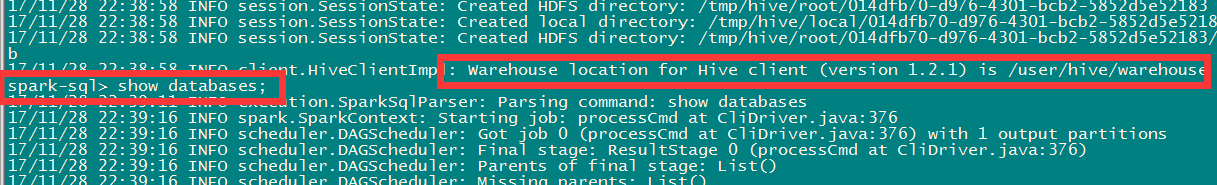

查询所有数据库:

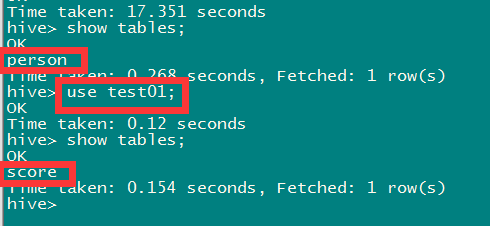

八、 测试spark和hive集成(default库)

1. 在hive命令新建表

CREATE TABLE PERSON(NAME STRING,WORK_LOCATIONSARRAY<STRING>) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' COLLECTIONITEMS TERMINATED BY ',';

2. 新建person.txt

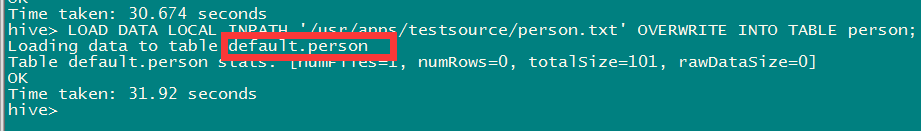

3. 将数据load到新建的person表中

LOAD DATA LOCAL INPATH '/usr/apps/testsource/person.txt'OVERWRITE INTO TABLE person;

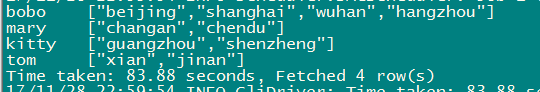

4. 在spark-sql中查询

九、 测试spark和hive集成(自定义库)

1. 在hive命令新建一张表

CREATE TABLETEST01.SCORE(NAME STRING, SCORE MAP<STRING,INT>) ROW FORMAT DELIMITEDFIELDS TERMINATED BY '\t' COLLECTION ITEMS TERMINATED BY ',' MAP KEYS TERMINATEDBY ':';

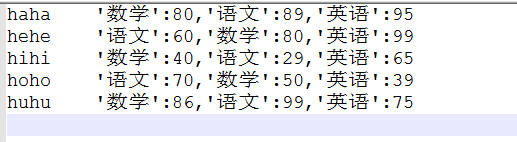

2. 新建score.txt

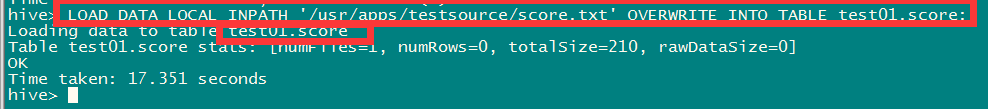

3. 将数据load到新建的score表中

LOAD DATA LOCALINPATH '/usr/apps/testsource/score.txt' OVERWRITE INTO TABLE test01.score;

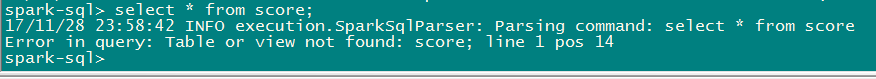

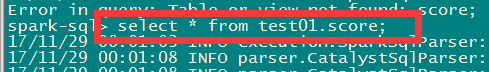

4. 在spark-sql中查询

查询:select * from score;

原因:没有指定库名。

select * from test01.score;

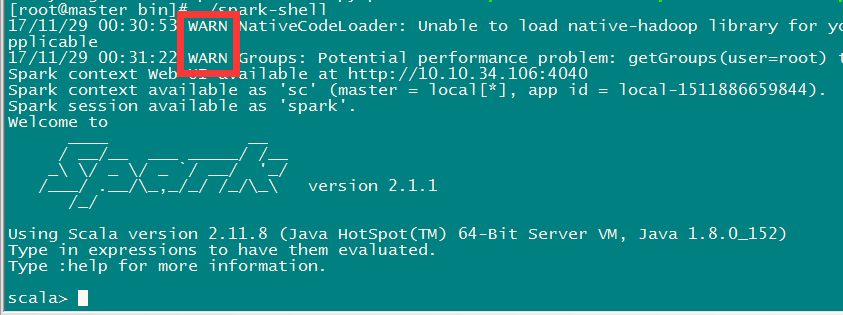

十、 更改日志启动级别

在spark安装目录下的conf目录下log4j.properties中

将INFO改为WARN

十一、 重新启动