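This error belongs to Fluent's parallel (multi-process MPI) mode. Once it occurs, the entire Fluent session dies and none of the data can be saved, so it is a serious problem.

In most cases, however, the parallel mechanism itself is not at fault; the computation running inside one of the compute processes has failed.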

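1. MPI_Finalize() with status 2, cause one: negative cell volume

As soon as a negative cell volume appears, the compute process cannot continue, so it raises an error and terminates: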

Error at Node 3: Update-Dynamic-Mesh failed. Negative cell volume detected.

WARNING: 2 cells with non-positive volume detected.

MPI Application rank 0 exited before MPI_Finalize() with status 2

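2. MPI_Finalize() with status 2, cause two: divergence for any reason, or a flow velocity or mesh-motion advance that is too fast for the time step, for example an extremely large Courant number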

112 more time steps

Updating solution at time level N...

Global Courant Number [Explicit VOF Criteria] : 471.06

Error at Node 0: Global courant number is greater than 250.00 The

velocity field is probably diverging. Please check the solution

and reduce the time-step if necessary.

Error at Node 1: Global courant number is greater than 250.00 The

velocity field is probably diverging. Please check the solution

and reduce the time-step if necessary.

Error at Node 2: Global courant number is greater than 250.00 The

velocity field is probably diverging. Please check the solution

and reduce the time-step if necessary.

Error at Node 3: Global courant number is greater than 250.00 The

velocity field is probably diverging. Please check the solution

and reduce the time-step if necessary.

MPI Application rank 0 exited before MPI_Finalize() with status 2

===============Message from the Cortex Process================================

Fatal error in one of the compute processes.

==============================================================================

Error: Cortex received a fatal signal (unrecognized signal).

Error Object: ()

Error: There is no active application.

Error Object: (case-modified?)

Error: No journal response to dialog box message:'There is no active application.'

. Internally, cancelled the dialog.

Error: There is no active application.

Error Object: (rp-var-value 'physical-time-step)

Error: There is no active application.

Error Object: (rp-var-value 'delta-time-sampled)
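
The log's advice to reduce the time step can be given a rough number. For a fixed velocity field and mesh, the Courant number C = u Δt / Δx scales linearly with the time step, so the ratio between a target Courant number and the reported one gives an upper bound on the time-step multiplier. The sketch below rests on that proportionality assumption; the function name is hypothetical, and 250 is only the abort threshold printed in the log, not a recommended operating value.

```python
def time_step_scale(reported_courant: float, target_courant: float) -> float:
    """Factor to multiply the current time step by so that the Courant
    number drops to target_courant, assuming C is proportional to dt."""
    if reported_courant <= 0 or target_courant <= 0:
        raise ValueError("Courant numbers must be positive")
    # Never suggest increasing the time step; cap the factor at 1.
    return min(1.0, target_courant / reported_courant)


# Value taken from the log above: 471.06 against the abort threshold of 250.
scale = time_step_scale(471.06, 250.0)
print(f"multiply the time step by at most {scale:.3f}")
```

Since the velocity field is probably already diverging at this point, a much smaller target than the abort threshold is usually the safer choice.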

3. MPI_Finalize() with status 2, cause three: severe memory pressure. If any single compute process in the parallel run cannot allocate enough memory, the run terminates

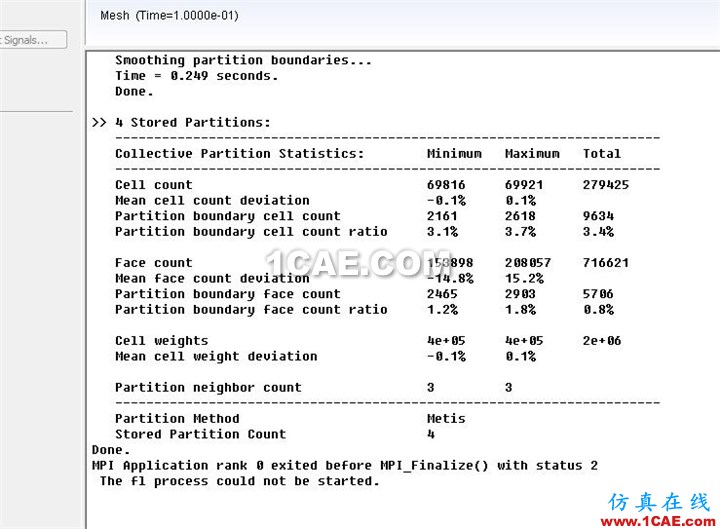

>> Key point: normally, error messages appear before MPI_Finalize() with status, as in the log text above, but in some cases there are none.

As in the accompanying figure:

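The run above had just completed a normal dynamic-mesh remeshing; the failure occurred right as it entered the next solver iteration.

I checked the machine and found that memory usage had reached 92% at that point. To test this hypothesis further, I immediately took a case that normally runs without problems and ran it while memory usage was above 90%.

It computed the first step, and on the second step went straight to MPI_Finalize() with status 2.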
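
A check like the one described above can be scripted before launching a long run. This is a minimal sketch that assumes a Linux machine exposing `/proc/meminfo`; the function names are hypothetical, and the 90% threshold only mirrors the observation above and is not a documented Fluent limit.

```python
def memory_used_percent(meminfo_text: str) -> float:
    """Compute used memory in percent from /proc/meminfo-style text."""
    fields = {}
    for line in meminfo_text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts:
            fields[name.strip()] = int(parts[0])  # values are in kB
    total = fields["MemTotal"]
    available = fields["MemAvailable"]
    return 100.0 * (total - available) / total


def read_memory_used_percent(path: str = "/proc/meminfo") -> float:
    """Read the live value on a Linux host (assumption: the file exists)."""
    with open(path) as f:
        return memory_used_percent(f.read())


# Illustrative sample, not measured data: 16 GB total, 1.28 GB available.
sample = "MemTotal:       16000000 kB\nMemAvailable:    1280000 kB\n"
used = memory_used_percent(sample)
if used > 90.0:
    print(f"memory usage is {used:.0f}%, free some memory before starting")
```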

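Abnormal MPI exits in parallel Fluent runs come with several other status values as well, -1 being the most common. In practice there are only two types of error: script errors and physical-model or data errors.

The former covers journal-file scripts and UDF code; these errors generally produce -1 or another negative value. The latter covers runs terminated by abnormal physical quantities, such as divergence or a value exceeding its limit.

There are many possible situations, so each case needs its own analysis. First look at the log just before the failure: if it contains a message, that message points to the root cause; if there is no message at all, the problem is very likely memory.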
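
The triage order described above can be sketched as a small transcript scanner. It only knows the message fragments quoted in this article; the function name, the 40-line context window, and the returned labels are assumptions made for illustration.

```python
import re

# Message fragments taken from the logs quoted in this article.
KNOWN_CAUSES = [
    ("negative cell volume", "negative cell volume (dynamic mesh)"),
    ("global courant number is greater than",
     "divergence / Courant number too large"),
]


def diagnose(log_text: str, context_lines: int = 40) -> str:
    """Classify an MPI_Finalize crash by what was logged just before it."""
    lines = log_text.splitlines()
    for i, line in enumerate(lines):
        if "MPI_Finalize() with status" in line:
            # Include the crash line itself: messages are sometimes fused onto it.
            start = max(0, i - context_lines)
            window = " ".join(lines[start:i + 1]).lower()
            for needle, cause in KNOWN_CAUSES:
                if needle in window:
                    return cause
            if re.search(r"\berror\b", window):
                return "other error reported before the crash; read the log"
            return "no error before the crash; memory pressure is a likely suspect"
    return "no MPI_Finalize crash found"


example = (
    "Updating solution at time level N...\n"
    "MPI Application rank 0 exited before MPI_Finalize() with status 2\n"
)
print(diagnose(example))
```

A silent crash is reported only as a suspicion of memory pressure, matching the hedged conclusion in the text; it should be confirmed by checking actual memory usage.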

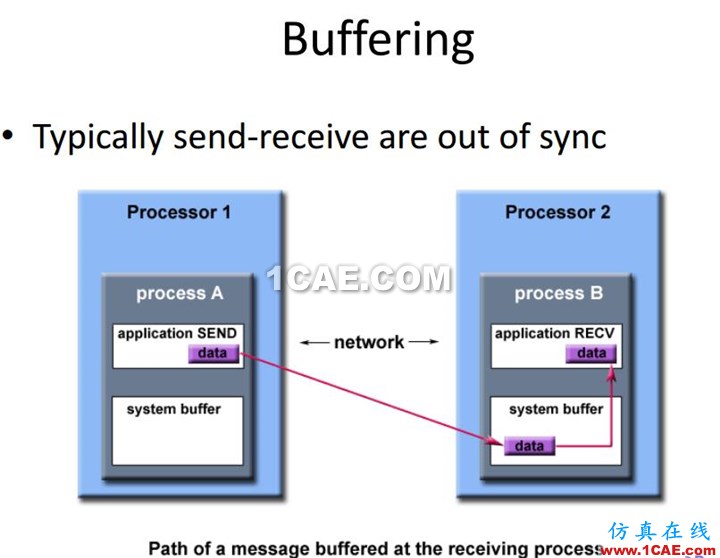

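One more case: during a parallel run the compute processes communicate with each other continuously, so any change in the network environment during the run causes an immediate failure. The accompanying figure shows the communication during a run. Network changes, such as a different IP address or modified network-adapter properties, are not permitted while the computation is in progress.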